Plain Language Summary

Sentiment analysis is a method used to determine whether a piece of text expresses a positive, negative, or neutral opinion. Traditionally, researchers have used sentiment dictionaries – lists of words with assigned sentiment values – to analyse text. More recently, machine learning models have been developed to improve sentiment classification by learning patterns from large datasets. However, most research in this area has focused on English, while many languages – including German – are under-represented. This study examines different sentiment analysis techniques to determine which methods work best for analysing German-language texts, including historical documents. We compare three main approaches:

1. Dictionaries: A simple and transparent way to check words against predefined sentiment lists.

2. Fine-tuned transformer models: These models, such as BERT, are trained on sentiment data to improve classification accuracy.

3. Large Language Models (LLMs) with zero-shot capabilities: Newer deep learning models, such as ChatGPT, can classify sentiment without being explicitly trained for the task by using their broad general knowledge.

To test these methods, we used a variety of datasets, including social media posts, product reviews and historical texts. Our results show that dictionaries are easy to use but often inaccurate, especially for complex texts. We also show that fine-tuned models perform well but require a lot of labelled training data and computing power. Zero-shot models, especially dialogue-based models like ChatGPT, are very effective, even without training on sentiment data. However, they are sensitive to small changes in input instructions and require significant computational resources. Our findings indicate that LLM-based zero-shot classification is a promising tool for sentiment analysis in the computational humanities. It allows researchers to analyse texts in different languages and historical contexts without requiring large amounts of labelled data. However, choosing the right model still requires careful consideration of accuracy, accessibility and transparency, especially when dealing with complex datasets.

Introduction

Sentiment analysis is a key area of research within natural language processing that focuses on understanding the emotional tone, attitudes and evaluations expressed in text. A widely used approach within this field is sentiment classification (Pang, Lee, and Vaithyanathan 2002), which is often considered synonymous with sentiment analysis itself. One popular and rather simplistic approach is polarity-based sentiment classification, where sentences or documents are assigned to predefined categories such as positive and negative. Sentiment analysis initially gained popularity in the study of user-generated content on the social web, such as social media posts and review texts, and for commercial purposes (Liang et al. n.d.). However, it is now also used in the computational humanities for a wide range of research applications, including computational linguistics (Taboada 2016), computational literary studies (Dennerlein, Schmidt, and Wolff 2023; Kim and Klinger 2019; McGillivray 2021) and digital history (Borst et al. 2023; Sprugnoli et al. 2016).

In this article, we provide a comprehensive evaluation of different sentiment analysis techniques for the case of German language corpora. This choice of language is motivated by a previous research project on media sentiment,[Footnote 1] which focused on sentiment analysis in historical German newspapers. Given that most reference datasets used for sentiment analysis evaluations are based on contemporary English (Jim et al. 2024), we believe that our evaluation study of methods with a focus on their performance on historical German texts provides a valuable contribution to the existing evaluation landscape. In particular, it enhances the field of computational humanities, where non-English historical languages are often the subject of research. For our evaluation, we focus on off-the-shelf methods and models, which means they can be acquired and applied to new target data without any kind of adaptation or, in the case of neural networks, fine-tuning.

A popular branch of off-the-shelf techniques are dictionary-based methods. These are essentially pre-defined lists of words and their specific sentiment values or scores, making them easy to interpret and implement. Dictionaries are computationally efficient, versatile and compatible with most hardware. However, their static nature limits their adaptability to evolving language trends and they often struggle with domain-specific terminology and more complex linguistic phenomena such as sarcasm or negation. Although specialised dictionaries can be developed without advanced technical skills, the process is very labour intensive. As an alternative to dictionaries, there are several machine learning approaches to sentiment analysis. The traditional supervised learning paradigm, which involves training models on labelled data, has advanced considerably with the emergence of transformer architectures and large language models (LLMs).[Footnote 2] Notably, models such as BERT (Bidirectional Encoder Representations from Transformers) (Devlin et al. 2019) have contributed significantly to the rise of deep learning methods.

While dictionary-based approaches are highly dependent on language-specific features and often struggle with issues like orthographic errors or unknown words, the tokenisation procedures used by recent LLMs address these challenges more effectively. For instance, rare or out-of-vocabulary words are often broken into smaller subword tokens that the model can still interpret based on its training. Additionally, the contextualisation capabilities of LLMs enable them to automatically recognise negations and capture more nuanced semantic relationships. However, despite substantial improvements in processing speed and accuracy, generating training data for supervised learning remains a time-intensive task, especially in humanities projects that involve complex language data.
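To illustrate how an out-of-vocabulary word can be broken into known subword units, the following is a minimal sketch of a greedy longest-match split in the style of WordPiece, the tokeniser family used by BERT. The toy vocabulary and the function name are assumptions made for illustration only; real tokenisers learn vocabularies of tens of thousands of units from data and apply additional normalisation.

```python
def subword_tokenize(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split of a word into known subword units."""
    tokens, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first and shrink it step by step.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a word-internal piece
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]  # no known piece covers this position
        tokens.append(piece)
        start = end
    return tokens


# Hypothetical toy vocabulary: "unglücklich" itself is not listed.
TOY_VOCAB = {"un", "##glück", "##lich", "glück", "zeitung"}

print(subword_tokenize("unglücklich", TOY_VOCAB))  # ['un', '##glück', '##lich']
```

A dictionary lookup would simply miss the unlisted word, whereas the split above still yields pieces that a model has seen during training.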

A promising development in this regard can be found in a branch of techniques known as zero-shot learning (Yin et al. 2019), a form of transfer learning that eliminates the need for task-specific annotated data. Zero-shot models use general knowledge gained from pre-training, allowing them to adapt to new domains with minimal to no customisation. Although these approaches offer flexibility and scalability, they remain computationally intensive and can pose challenges in terms of interpretability. The emergence of powerful LLMs such as GPT-3 (Brown et al. 2020) and its chatbot interface, ChatGPT, has fundamentally changed the way we interact with these models. Dialogue-based LLMs allow tasks to be expressed through natural language prompts, greatly extending their applicability to different domains (Kocoń et al. 2023). Beyond the accessibility of corporate solutions such as ChatGPT, concerns have emerged about security, resource consumption and cost. In response, a growing number of locally deployable, smaller and open dialogue LLMs have been developed, providing an alternative for individual use in different configurations.

Given the variety of off-the-shelf approaches available for sentiment classification, ranging from dictionary-based methods to dialogue-based LLMs, it remains unclear how effectively these techniques perform and compare when applied to German historical text corpora. This study addresses this gap by evaluating a number of sentiment dictionaries, fine-tuned language models and different zero-shot approaches to sentiment analysis. We test the different approaches on a wide range of German-language datasets containing contemporary social media and review data as well as language samples from the humanities domain. These humanities texts are examples of more specific text collections from different disciplines, including history and literary studies. Compared to the contemporary datasets, the humanities datasets are characterised by a much higher degree of heterogeneity in terms of text length, language used and context of creation. With this evaluation study, we extend our previous research on sentiment analysis for German language corpora (Borst et al. 2023) by adding a wide range of LLM-based zero-shot classification techniques.[Footnote 3] We provide an in-depth evaluation of their performance and explore the broader implications of their use in computational humanities, considering factors such as accuracy, efficiency, interpretability and practical applicability. The results of this evaluation are meant to guide researchers in selecting appropriate sentiment analysis methods for their research projects and to better understand the trade-offs of different approaches.

Related work

For a long time, dictionary-based sentiment analysis has been a lightweight and therefore popular approach (Kolb et al. 2022; Lee et al. 2022; Mengelkamp et al. 2022; Müller et al. 2022; Pöferlein 2021; Puschmann et al. 2022; Schmidt et al. 2021).[Footnote 4] However, a major criticism of this method is that its classification performance depends strongly on the domain (Borst et al. 2023; van Atteveldt et al. 2021), which requires extensive revalidation to achieve satisfactory results (Chan et al. 2021). In addition, sentiment dictionaries are inherently language dependent and cannot be directly translated without verification due to lexical ambiguity. Hybrid methods that integrate machine learning with semi-automatic word list generation or dictionary expansion have been proposed as promising alternatives. However, these approaches are often cumbersome due to the multiple validation steps required (Dobbrick et al. 2022; Palmer et al. 2022; Stoll et al. 2023). While dictionaries offer a low-barrier and resource-efficient solution that does not require training data (Schmidt et al. 2021), they consistently underperform compared to supervised learning methods. This is true for both off-the-shelf and custom dictionaries, including self-implemented and commercial options (Barberá et al. 2021; Boukes et al. 2020; Dobbrick et al. 2022; van Atteveldt et al. 2021; Widmann and Wich 2022).

In supervised learning, the fine-tuning of transformer-based language models, such as BERT (Devlin et al. 2019), has become the de facto standard for text classification tasks (Liu et al. 2019; Yang et al. 2019). Traditionally, the technical development of new methods for sentiment classification is often centred around the English language. This disparity is reflected in the research literature. In the context of German sentiment classification, supervised training approaches using language models achieve significant improvements in classification performance and generally provide a reference benchmark for other approaches (Barbieri et al. 2022; Guhr et al. 2020; Idrissi-Yaghir et al. 2023; Manias et al. 2023). The ability to fine-tune language models to a specific grammatical or morphological context proves particularly successful for sentiment classification in historical German language (Borst et al. 2023; Schmidt et al. 2021).

A key challenge in applying language models to domain-specific tasks is the need for annotated training data as well as for substantial computational resources (Schwartz et al. 2019). Domain adaptation through fine-tuning typically requires updating millions of parameters for each dataset, which can be computationally expensive. To address these issues, recent research has focused on reducing the reliance on large labelled datasets, leading to the rise of few-shot (Bao et al. 2020; Bragg et al. 2021; Brown et al. 2020; Wang et al. 2020) and even zero-shot models (Schönfeld et al. 2019; Xian et al. 2017; Yin et al. 2019). These approaches enable text classification without the need for extensive task-specific fine-tuning or manual data annotation, significantly lowering the barrier to entry.

An important milestone in this area was the introduction of GPT-3 (Brown et al. 2020). Research into instruction-following models (Ouyang et al. 2022; Wei et al. 2021) and the publication of dialogue-based systems, such as ChatGPT or Llama (Touvron et al. 2023), have further increased accessibility. Initial research indicates strong zero-shot classification performance of ChatGPT (Gilardi et al. 2023; Törnberg 2023), although challenges such as non-determinism remain (Reiss 2023). Recent studies have increasingly explored the application of such models to sentiment classification, particularly using OpenAI models as a comparison (Campregher and Diecke 2024; Jim et al. 2024; Kheiri and Karimi 2024; Rauchegger et al. 2024; Wu et al. 2024; Zhang et al. 2024; Zhu et al. 2024). However, these benchmarks focus on English language datasets.

One way of dealing with non-English languages is to rely on machine translation (Feldkamp et al. 2024; Koto et al. 2024; Miah et al. 2024) to make use of available tools for English. While manually checking translation quality remains a viable option for smaller datasets (Campregher and Diecke 2024), translation quality may become a limiting factor when moving to historical or literary texts, given the unique and more complex language setting (Etxaniz et al. 2024; Huang et al. 2023; Liu et al. 2025). Recently, a growing number of LLMs have been optimised for multiple languages simultaneously. However, as English often continues to dominate the training data of these models, research shows that switching to non-English languages reduces the level of performance within these models (Etxaniz et al. 2024; Zhang et al. 2023). Notably, there are some examples of cross-lingual zero-shot approaches, which seem to offer more consistent sentiment classification performance in non-English languages (Koto et al. 2024; Manias et al. 2023; Přibáň and Steinberger 2022; Sarkar et al. 2019; Wu et al. 2024).

Off-the-shelf methods and models for the German language are still scarce, and even more so in the field of computational humanities data. Among the few are Guhr et al. (2020) and Barbieri et al. (2022), offering ready-to-use fine-tuned models for German sentiment analysis. However, these models are trained on contemporary Twitter or review datasets, raising the question of how well their performance transfers to out-of-domain data. So far, sentiment analysis studies on historical German texts have been performed using dictionaries (Du and Mellmann 2019; Schmidt and Burghardt 2018a; Schmidt et al. 2021) or machine learning methods, such as SVMs (Zehe et al. 2017) or fine-tuning (Borst et al. 2023; Dennerlein, Schmidt, and Wolff 2023; Schmidt et al. 2021). Yet, preliminary results from our previous work show that even a rather small German BERT-based zero-shot model can potentially deliver performance comparable to the aforementioned fine-tuned models on contemporary datasets and even outperform them and dictionary approaches on humanities datasets (Borst et al. 2023).

Methods and experiments

Our previous research (Borst et al. 2023) demonstrated that a natural language inference (NLI)-based zero-shot classifier consistently outperformed dictionary-based approaches across all datasets for polarity-based sentiment classification, although it did not achieve state-of-the-art (SotA) performance. Two key observations emerged from this study: (1) the zero-shot model demonstrated consistent performance patterns across both contemporary and humanities datasets, whereas fine-tuned and dictionary methods experienced significant performance declines on the humanities datasets; and (2) the performance of dictionary-based approaches was notably inconsistent. These findings position the NLI-based zero-shot model as a promising middle ground between the efficiency of dictionary-based approaches and the high performance of fine-tuned models, which come at a significant computational cost.

In this section, we extend the evaluation of the methods on the benchmark datasets of Borst et al. (2023) by including a broader range of sentiment classification techniques (Table 1). We then provide an overview of the main classes of methods identified in the literature and justify our choice of models for evaluation. Finally, we give a brief description, including critical remarks, of the benchmark datasets used in this evaluation study (Table 2).

Table 1. Aggregated list of all evaluated LLMs, including their respective names as they appear on Hugging Face or version name

Note: The table includes the parameter count of each model. The number of parameters of the GPT models is not publicly known.

Table 2. Statistics of all datasets used, including sentiment label distribution, average text length and temporal coverage

Dictionaries

We adopt the dictionary selection from Borst et al. (2023), which includes three widely used and universally applicable German sentiment dictionaries: BAWL-R (Võ et al. 2009), SentiWS (Remus et al. 2010) and GermanPolarityClues (GPC) (Waltinger 2010). To account for domain-specific variations, we also included the finance-specific dictionary BPW (Bannier et al. 2019) and, to ensure coverage of humanities datasets, the literary studies dictionary SentiLitKrit (SLK) (Du and Mellmann 2019). As the ‘death of the dictionary’ has already been claimed in Borst et al. (2023), it was decided not to include additional dictionary resources in the evaluation. Instead, we focus on extending our experiments by incorporating additional fine-tuned language models and, in particular, exploring a wide range of LLMs with zero-shot capabilities.
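For readers unfamiliar with the mechanics, the following is a minimal sketch of polarity classification with a sentiment dictionary. The lexicon entries and their scores are hypothetical placeholders and are not taken from BAWL-R, SentiWS, GPC, BPW or SLK. The summation and thresholding rule is likewise an assumption for illustration, not the scoring procedure used in this study.

```python
import re

# Hypothetical toy lexicon: word -> polarity score in [-1, 1].
TOY_LEXICON = {"gut": 0.5, "wunderbar": 0.8, "schlecht": -0.6, "furchtbar": -0.9}


def dictionary_polarity(text, lexicon, threshold=0.0):
    """Sum the scores of all known words and map the total to a polarity label."""
    tokens = re.findall(r"\w+", text.lower())
    score = sum(lexicon.get(token, 0.0) for token in tokens)
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


print(dictionary_polarity("Das Essen war wunderbar", TOY_LEXICON))       # positive
print(dictionary_polarity("Die Zeitung erscheint morgen", TOY_LEXICON))  # neutral
# Negation is ignored by a plain lookup, so this is (wrongly) labelled positive:
print(dictionary_polarity("Der Film war nicht gut", TOY_LEXICON))        # positive
```

The last call shows the kind of error that a static word list produces on negated statements, which is one reason for the inconsistent dictionary performance discussed above.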

Fine-tuned language models

Task-specific, fine-tuned language models – often regarded as the precursors of modern LLMs – have proven to be a viable approach for sentiment analysis (Wang et al. 2023). The fine-tuning of a BERT-based language model to the target data still achieves the highest performance for various application areas, including almost all SotA results for the datasets we tested, and has long served as a benchmark.[Footnote 5] Off-the-shelf models with task- or domain-specific training offer a wide range of applications even without the availability of annotated data.

We extend the model selection of our previous study (Borst et al. 2023), where the German multi-domain model germanSentiment (Guhr et al. 2020) was tested as a representative of the fine-tuned models, by adding the multilingual RoBERTa-based model XLM-T (Barbieri et al. 2022). This model, fine-tuned on millions of tweets for various tasks in different languages, provides an additional perspective on domain transfer of sentiment classification models. It is noteworthy that the training data of the fine-tuned models used in this evaluation overlaps with our benchmark Twitter and review datasets. However, the comparison still serves to answer two important questions: How well do the zero-shot methods perform on these datasets even though the fine-tuned models have seen them during training? How well does the performance of these models trained on contemporary data transfer to texts from the humanities domain? This is of particular interest, as these models may yet provide a standard method for performing sentiment analysis in the computational humanities.

When comparing our results with germanSentiment, we must include a disclaimer, as we were unable to replicate the exact test sets used in their reported results. In fact, competing versions of the models, each using different pre-processing methods, result in slight variations in performance values. Although we attempted to follow the authors’ instructions for applying the models via Hugging Face, we were unable to reproduce the reported SotA results for any of the models. Therefore, for this particular model, our evaluation may differ from the values originally reported by the authors. Currently, the only sentiment classification models in German that have been fine-tuned for broader applicability to humanities texts are the two that were reported in this section, with no other ready-to-use models available. However, the models we tested provide insight into their performance transfer across different datasets and domains.

LLM-based zero-shot text classification

To systematise our evaluation study, we identified three popular categories of methods for LLM-based zero-shot text classification in the existing literature. Each category is represented in the experiments by a specific selection of pre-trained models.

Sentence pair classification

Sentence pair classification models are one possible approach to zero-shot text classification. The goal of sentence pair classification is to determine the relationship between two input sentences. Popular sentence pair tasks include NLI, also called entailment, or next sentence prediction (NSP). NSP assesses whether one sentence is likely to follow another in a text. In the case of NLI, the aim is to decide whether the first sentence logically entails or contradicts the second.

In our evaluation study, we use NLI as the reference method, as originally proposed in Yin et al. (2019). In this approach, sentence pairs, each consisting of a premise and a hypothesis, are classified as ‘entailment,’ ‘contradiction’ or ‘neutral,’ based on whether the premise logically entails the hypothesis. For zero-shot classification, we formulate hypotheses using the target labels. These hypotheses are created using the hypothesis template: ‘The sentiment is [blank]’.[Footnote 6] The blank is then filled with the sentiment categories negative, neutral and positive. For application purposes, the hypothesis template can have a substantial impact on the quality of classification, and is part of the optimisation process, similar to prompt engineering (Liu et al. 2023). Since we aim at comparing these models with zero knowledge about domain-specific assumptions or vocabulary, we use the same template for all datasets and models. Each model generates probability scores for each premise and hypothesis pair, corresponding to the different entailment classes. From these scores, we identify the hypothesis with the highest probability of ‘entailment’ as the classification outcome and assign the corresponding category. Although there is some criticism about the performance of these models, particularly their reliance on spurious correlations in superficial text elements (Ma et al. 2021), these models (and their variants) still perform very well, especially in sentiment classification (Shu et al. 2022; Zhang et al. 2023). In Borst et al. (2023) a pre-trained BERT-based model was used. For our evaluation study, we extend the model selection of our previous study with a multi-lingual mDeBERTa-based model.
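The selection rule described above can be sketched in a few lines. The function `toy_entailment_prob` is a hypothetical stand-in for an NLI model's entailment probability and uses simple keyword cues purely so that the example runs without downloading a model. In practice, this callable would wrap a pre-trained NLI model, for example through the zero-shot classification pipeline of the Hugging Face Transformers library, which accepts a hypothesis template and a list of candidate labels.

```python
LABELS = ["negative", "neutral", "positive"]
TEMPLATE = "The sentiment is {}"


def nli_zero_shot(premise, entailment_prob, labels=LABELS, template=TEMPLATE):
    """Fill the template with each label and pick the most strongly entailed one."""
    scores = {
        label: entailment_prob(premise, template.format(label)) for label in labels
    }
    best = max(scores, key=scores.get)
    return best, scores


def toy_entailment_prob(premise, hypothesis):
    """Hypothetical stand-in for P(entailment | premise, hypothesis) of an NLI model."""
    cues = {"positive": ("wunderbar", "herrlich"), "negative": ("furchtbar", "elend")}
    for label, words in cues.items():
        if label in hypothesis:
            return 0.9 if any(w in premise.lower() for w in words) else 0.1
    return 0.3  # constant mid-range score for the neutral hypothesis


label, scores = nli_zero_shot("Das Konzert war wunderbar", toy_entailment_prob)
print(label)  # positive
```

Because the template is a free parameter, changing its wording changes the hypotheses that are scored, which is why the same template is held fixed across all datasets and models in the comparison.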

Similarity-based and Siamese networks

Methods in this category use embeddings to jointly embed text and labels into the same semantic space. By applying a similarity function (e.g., cosine similarity), the embeddings of labels and text are compared, and the label with the highest similarity score is assigned. Originally proposed by Socher et al. (2013) and Veeranna et al. (2016), this approach has also been adopted in more recent studies (Mueller and Dredze 2021; Molnar 2022). The key advantage of similarity-based methods is that they do not require explicit training on labelled sentiment datasets. Instead, they rely on pre-trained sentence encoders that capture general semantic relationships, making them applicable in a zero-shot setting. The model does not need to be fine-tuned on task-specific data. Rather, it assigns labels by measuring the similarity between input text and predefined class labels embedded in the same vector space.
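The comparison step can be sketched as follows. The three-dimensional vectors are hypothetical placeholders chosen by hand. In a real setting, both the text and the label embeddings would be produced by the same pre-trained sentence encoder and would have several hundred dimensions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equally long vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def classify_by_similarity(text_vec, label_vecs):
    """Return the label whose embedding is most similar to the text embedding."""
    scores = {label: cosine(text_vec, vec) for label, vec in label_vecs.items()}
    return max(scores, key=scores.get), scores


# Hypothetical label embeddings in a shared toy vector space.
LABEL_VECS = {
    "negative": [-1.0, 0.1, 0.0],
    "neutral": [0.0, 1.0, 0.0],
    "positive": [1.0, 0.1, 0.0],
}

# Hypothetical embedding of an input text.
text_vec = [0.8, 0.3, 0.1]
label, scores = classify_by_similarity(text_vec, LABEL_VECS)
print(label)  # positive
```

Since the label embeddings are computed once and reused, classification reduces to one encoder pass per text plus a handful of vector comparisons.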

Recently, pre-trained LLMs have been chosen as the backbone for the Siamese approach, where two identical neural networks process text and labels separately but share parameters to ensure a unified embedding space. Sentence-BERT (Reimers and Gurevych 2019) models present a viable option for this approach. These are a class of embedding models specifically fine-tuned to embed sentences and have significantly improved performance on the semantic textual similarity (STS) benchmarks (Cer et al. 2017). The final selection includes the cross-lingual MPNET-XNLI and two RoBERTa-based models fine-tuned on STS, namely RoBERTaX and RoBERTa-Sentence.

Instruction-following models or dialogue-based systems

In addition to using the generative capabilities of LLMs to predict individual tokens, instruction-following models (Wei et al. 2021; Ouyang et al. 2022) and dialogue-based systems (Peng et al. 2022; Zhang et al. 2020), such as ChatGPT, have made powerful models accessible to a wider audience. When instructed to generate a label from a set of pre-defined classes based on a task description and text input, these models can effectively act as zero-shot text classifiers. Initial evaluations of zero-shot classification performance show promising results (Gilardi et al. 2023; Törnberg 2023), although they also highlight the caveat of non-determinism, such as the influence of the temperature hyperparameter (Reiss 2023). A critical factor influencing performance in this context is the formulation of the task description (White et al. 2023). Additionally, models of this size typically cannot be run on conventional local hardware. This limitation has spurred a trend towards smaller instruction-following models, such as Llama (Touvron et al. 2023) and Mistral (Jiang et al. 2023), making dialogue-based models a viable alternative for locally executed classification tasks.

In selecting the models to test as a local alternative to industry leader GPT, particular attention was paid to technical compatibility. Specifically, the models had to run on an enterprise-grade NVIDIA A30 graphics card without quantisation. Quantisation is a technique for significantly reducing the hardware requirements of LLMs, but its impact on different model families is not yet sufficiently understood. Although recent studies suggest that moderate quantisation can be applied without significant performance impact (Jin et al. 2024; Liu et al. 2024), the choice of a quantisation approach and the desired level of precision introduce additional experimental variables. Furthermore, the availability of an executable model variant on the Hugging Face platform (Wolf et al. 2020) was considered essential to ensure potential applicability in humanities research. Although there are alternative solutions for running LLMs locally, such as Ollama Footnote 7 or llama.cpp,Footnote 8 Hugging Face offers a wide range of models that can be easily integrated and used in a straightforward manner. We place particular emphasis on models within the 7B to 9B parameter range, as this strikes a balance between memory consumption, inference time and performance. These models can also be used on conventional GPUs, allowing them to be run locally without the need for a dedicated high performance computing cluster.

The final selection includes gpt-3.5-turbo-0125 and gpt-4o-2024-08-06, as well as Llama-3.1 (Touvron et al. 2023) in its 8B and 70B configurations and Ministral 8B. These models are trained on multilingual data that also includes German. In addition, recent developments in specialised instruction models were included to examine whether language specificity significantly affects the results, including SauerkrautLM (a German Gemma variant) and its English-only base model Gemma 2 9B, as well as Teuken 7B, a project aimed at an optimised model for all EU languages, co-funded by the German government.

All local LLMs were tested in the same test setup with the same prompt. The text was not pre-processed because, in German, removing punctuation marks, for example, can significantly alter the semantic context. By using the Hugging Face pipeline, the results could be written directly as text output to separate columns in the datasets, which reduces possible formatting problems but may affect speed or performance. The quality of the output was highly sensitive to the choice of the prompt and the chosen parameters, a factor that should not be underestimated and is briefly discussed here.

The question of which prompt produces the best results is subject to constant change, as research into the use of dialogue-based LLMs progresses and the models themselves are continually improved. While the initial research on sentiment analysis with LLMs was based on simple, reduced prompts, which were thought to have the greatest potential (Kheiri and Karimi 2024; Miah et al. 2024; Wu et al. 2024; Zhang et al. 2024), there are now numerous prompting strategies with sometimes very contradictory results. Enriching prompts with semantic context, exploiting the reasoning capacities of the models via a so-called chain-of-thought (CoT), or combining these with few-shot approaches did not necessarily lead to better results (Rauchegger et al. 2024; Wang and Luo 2023; Wu et al. 2024; Zhang et al. 2024). Furthermore, it is beyond the scope of this work to include multiple parameters in our broad model evaluation.

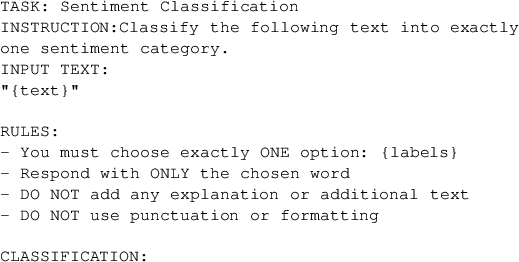

Preliminary tests with a prompt as suggested by Kheiri and Karimi (2024) and a deterministic temperature setting produced some significant deviations in the output. A more heavily formatted prompt based on current OpenAI recommendations was tested,Footnote 9 as well as various settings for the temperature. The combination of a temperature of 0.1 and the following prompt gave the highest consistency in the results over the entire test setup and was therefore adopted for all dialogue-based models:

Although this setup performed well in our tests, we refrain from making a generalised judgement due to the many additional influencing factors. In a non-deterministic setup, model behaviour may vary between different runs. This was investigated in preliminary tests, but no significant variations were observed. Despite the large context size of LLMs, the concatenation of multiple prompts per call introduced another source of error, leading us to adopt a slower, sequential inference approach. Nevertheless, incorrect outputs were observed in some cases, although they occurred least frequently in the chosen configuration. In this configuration, the only deviation from the expected labels was an empty output, which was considered an error and taken into account in the evaluation.
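The sequential inference and the handling of invalid outputs described above can be sketched as follows. This is an illustration under stated assumptions, not the code used in the study: the prompt template is hypothetical (the original prompt wording is not reproduced here), `generate` is a placeholder for whatever function calls the model, and `fake_generate` is a stand-in so that the example runs without a model.

```python
LABELS = ("positive", "neutral", "negative")

# Hypothetical prompt template (assumption, not the prompt used in the study).
PROMPT_TEMPLATE = (
    "Classify the sentiment of the following German text as one of: "
    "positive, neutral, negative.\nText: {text}\nLabel:"
)

def parse_label(raw_output, labels=LABELS):
    """Map raw model output to a label, or None if it is empty or unexpected."""
    cleaned = raw_output.strip().strip(".").lower()
    return cleaned if cleaned in labels else None

def classify_sequentially(texts, generate):
    """Send exactly one text per model call and collect (label, is_error) pairs."""
    results = []
    for text in texts:
        raw = generate(PROMPT_TEMPLATE.format(text=text))
        label = parse_label(raw)
        results.append((label, label is None))
    return results

def fake_generate(prompt):
    """Stand-in for a real model call (assumption, for illustration only)."""
    if "großartig" in prompt:
        return " Positive\n"
    return ""  # simulates an empty output

out = classify_sequentially(["Das Buch ist großartig.", "Hm."], fake_generate)
print(out)
```

Keeping invalid outputs as explicit errors, rather than silently dropping them, means they still count against the model when scores are computed.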

German language datasets

All the datasets used in this evaluation study were selected on the basis of open availability and mention of SotA results in recent research publications. Nevertheless, the availability of non-English datasets remains a major limiting factor and also poses a significant problem for the subsequent training of specialised language models. Following the evaluation design in Borst et al. (2023), we used datasets from three different domains.

Humanities datasets

In addition to a total of seven contemporary German-language datasets that are based on social media posts and reviews, we also selected four domain-specific datasets from the humanities domain with a focus on historical German, which we henceforth refer to as Humanities Datasets. Based on previous research (Borst et al. 2023), the BBZ dataset (Wehrheim et al. 2023) was created from articles published between 1872 and 1930 in the Berliner Börsenzeitung, a stock exchange newspaper. The dataset was annotated by a domain expert and contains polarity-based sentiment annotations for a total of 772 sentences.

The Lessing dataset (Schmidt et al. 2018) is another example of historical text analysis, comprising 200 speeches from Gotthold Ephraim Lessing’s theatre plays. These texts were annotated by five individual annotators and a domain expert, using binary sentiment labels. A similar approach was applied in the SentiLit–Krit (SLK) dataset (Du and Mellmann 2019), which consists of German literary criticism extracted from historical newspapers. This dataset includes 1,010 binary annotated sentences and originates from the same time period as the BBZ corpus. A broader time span is covered in the German Novel Dataset (GND) (Zehe et al. 2017), which was derived from the German Novel Corpus through crowdsourced annotation. It contains 270 sentences from various literary works, offering insights into sentiment analysis in a wider historical context.

Twitter datasets

The GermEval 2017 dataset (Wojatzki et al. 2017) is based on tweets and other social media posts related to Deutsche Bahn and was compiled between 2015 and 2016 as a benchmark dataset for various sentiment-related tasks. The dataset was cleaned, manually annotated and sampled for evaluation. We use the predefined synchronic test set with 2,566 examples labelled with positive, neutral or negative values. The PotTs (Sidarenka 2016) dataset contains 7,504 items from tweets during the 2013 German federal election and other political topics. The SB10k (Cieliebak et al. 2017) dataset contains tweets from 2013 and was created to serve as a German reference dataset. In a first step, the tweets were clustered to achieve a broad coverage of topics. In a second step, the contained polarity words were compared with the German Polarity Clues Lexicon (Waltinger 2010) to ensure that actual sentiments were included. These clusters were then balance-sampled and manually annotated. However, as the published dataset only includes the Twitter link and annotation, it was decided to use the version created by Guhr et al. (2020), which includes all tweets as text and the three labels positive, neutral and negative. SB10k is also often used as a German Twitter benchmark dataset in multilingual sentiment analysis experiments (Barbieri et al. 2022).

Review datasets

Datasets consisting of reviews are often used for sentiment analysis evaluation, because they are widely available and easily accessible. Many studies follow the approach of Pang, Lee, and Vaithyanathan (2002), who categorise reviews as positive or negative based on their star ratings (Guhr et al. 2020; Manias et al. 2023). For the sake of consistency, we used the same labelling strategy for our evaluation study as was used for the SotA results. This means that in most cases, 3-star reviews were excluded due to the difficulty in assigning a clear positive or negative label.Footnote 10
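A star-based labelling rule of this kind can be sketched as follows. The exact thresholds are an assumption made for illustration (1 to 2 stars as negative, 4 to 5 stars as positive); individual datasets may define the mapping differently. The function name and the example reviews are hypothetical.

```python
def stars_to_label(stars):
    """Map a 1-5 star rating to a binary label; 3 stars yields None (excluded)."""
    if stars not in (1, 2, 3, 4, 5):
        raise ValueError(f"unexpected star rating: {stars}")
    if stars == 3:
        return None  # no clear polarity, so the review is dropped
    return "positive" if stars > 3 else "negative"

# Made-up example reviews as (text, stars) pairs.
reviews = [("Sehr gut", 5), ("Geht so", 3), ("Schlecht", 1), ("Ganz ok", 4)]

labelled = [
    (text, stars_to_label(stars))
    for text, stars in reviews
    if stars_to_label(stars) is not None
]
print(labelled)
```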

The Amazon Review (Keung et al. 2020) dataset is based on product reviews from 2015 to 2019 and includes multiple languages with equal proportions of entries. The pre-defined test set of 5,000 items was selected for the German language. The Filmstarts (FS) and Holidaycheck (HC) datasets were both created by Guhr et al. (2020) for general sentiment classification in the German language and contain film and hotel reviews. The resulting datasets contain 55,620 entries for Filmstarts and almost 3.3 million entries for Holidaycheck. Furthermore, the SCARE (Sänger et al. 2016) dataset was selected, as it contains around 735,000 German-language reviews of almost 150 selected apps from the Google Play Store. The largest datasets, Holidaycheck and SCARE, were each sampled at 100,000 entries to reduce the significant inference time. Table 2 gives an overview of the datasets: their size, the breakdown by label, the average number of words per text with its standard deviation, and the time span covered in years.
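Reducing a large dataset to a fixed number of entries can be done reproducibly with a seeded random sample. The sketch below assumes simple random sampling without replacement; the text does not specify the sampling procedure that was actually used, and the function name and seed are hypothetical.

```python
import random

def sample_entries(entries, k, seed=42):
    """Draw k entries without replacement; return all entries if there are at most k."""
    if len(entries) <= k:
        return list(entries)
    rng = random.Random(seed)  # fixed seed makes the sample reproducible
    return rng.sample(entries, k)

# Integers stand in for review records here.
dataset = list(range(1000))
subset = sample_entries(dataset, 100)
print(len(subset))
```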

Concluding remarks on datasets

Regardless of domain and scope, the datasets vary in quality, which has a direct impact on the difficulty of classification. In addition, different annotation methods introduce a certain bias, as they shape the expectations about the nature of the data and its sentiment distribution. An important issue that often leads to problems with polarity-based sentiment detection is the ambiguity of the neutral label. This class can represent both mixed and non-sentiment statements and is not uniformly defined in its use. Especially in the case of reviews, the assumption that a mediocre rating is equivalent to neutral is problematic, so such ratings are often excluded (Guhr et al. 2020; Pang, Lee, and Vaithyanathan 2002). This decision is made primarily in the interest of optimising the classification metrics. In the context of humanities data, however, texts are often ambiguous and fall between binary labels. Consequently, while the task is simplified by reducing the range of labels to binary options, the semantic quality of the classification itself and the applicability in more specific scenarios may be compromised. The resulting binary labels can usually be assigned more easily because they are diametrically opposed, which explains the extremely high classification results of all methods. Outside of this simple task, the SotA scores are significantly lower, indicating a higher level of difficulty.

However, a fundamental problem with user-generated content such as tweets is the large variation in text quality. While the GermEval dataset contains a large proportion of longer, official tweets with a clearer structure, the PotTs dataset contains a large number of semantically unclear texts in various samples. The assignment of these texts to a sentiment label seemed questionable. In contrast, the high SotA values on the BBZ data showed that even temporally and contingently extremely specific language contexts can be learned and used in the sentiment analysis task (Borst et al. 2023). Notably, the texts and annotations of the GND dataset proved to be significantly less accurate, as reflected in the poor performance of all the models tested so far on this dataset. This raises the question of whether a model that performs optimally on such mediocre data, or on a simplified task, will also perform well on other datasets or domains. As social media and review data are widely available, model development and optimisation are skewed in favour of these domains. This has to be taken into account for the specific questions in the humanities, which also lead to other data qualities and requirements.

Results

In presenting the results, we would like to emphasise that the aim of this evaluation study was primarily to assess how well different models can adapt to different application contexts, rather than to identify the optimal model for a particular dataset. The evaluation was carried out by comparing the results of the different approaches with the SotA values for the different datasets as they are reported in the literature. In addition, we were interested in analysing performance across different domains and model classes in order to obtain a balanced perspective on the trade-offs and capabilities involved in domain adaptation. Table 3 shows the micro-F1 evaluation scores for all datasets and approaches. Overall, the zero-shot models perform reasonably well, with some notable exceptions. Most of the zero-shot approaches outperform the dictionary baseline, and some even reach performance levels of previous SotA scores. However, the exact performance on a given dataset seems to depend not only on the method and task, but also significantly on the choice of the specific model.

Table 3. This table presents the evaluation of all models and methods in this study based on micro F1 scores

Note: The columns represent the datasets, categorized into three parts: humanities datasets, contemporary Twitter datasets and contemporary review datasets. The rows list the tested models, grouped by their associated methods. Comparative SotA values taken from BBZ (Borst et al. 2023), GND (Zehe et al. 2017), Lessing (Schmidt and Burghardt 2018b), SLK (Du and Mellmann 2019), GermEval (Idrissi-Yaghir et al. 2023), SB10k (Barbieri et al. 2022), Amazon (Manias et al. 2023) and PotTs, Filmstarts, Holidaycheck and SCARE (Guhr et al. 2020). The best value for each dataset is shown in bold.
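For readers less familiar with the metric, the following sketch shows how a micro-averaged F1 score pools true positives, false positives and false negatives over all labels. It is an illustration rather than the evaluation code of the study. One assumption is made explicit: an invalid model output is represented by the extra label "error", so that it counts as a wrong prediction. The gold and predicted labels are made up.

```python
def micro_f1(gold, predicted):
    """Micro-averaged F1: pool TP, FP and FN over all labels, then compute F1."""
    labels = set(gold) | set(predicted)
    tp = fp = fn = 0
    for label in labels:
        for g, p in zip(gold, predicted):
            if p == label and g == label:
                tp += 1
            elif p == label and g != label:
                fp += 1
            elif g == label and p != label:
                fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Made-up labels; "error" marks an invalid (e.g. empty) model output.
gold = ["positive", "negative", "neutral", "negative", "positive"]
pred = ["positive", "negative", "negative", "error", "positive"]

score = micro_f1(gold, pred)
print(score)
```

When every text has exactly one gold label and exactly one prediction, each wrong prediction adds one false positive and one false negative, so micro-F1 coincides with accuracy (three of five correct in this toy example).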

Another notable observation is that all zero-shot models and dictionary-based approaches show slightly lower performance on Twitter datasets. This underperformance is likely due to the aforementioned problems associated with the low quality of language in Twitter data. In domains where large amounts of training data are available, such as the 200 million tweets used to train XLM-T (Barbieri et al. 2022), or in cases where the data has a high degree of specificity, such as the qualitative BBZ dataset, the classification performance achieved by fine-tuned SotA language models remains superior to that of any zero-shot approach. A similar trend is observed in the mixed-domain GermEval dataset, given the performance gap between the SotA of 85.1%, achieved using a fine-tuned language model, and our best result with Llama 70B at 63.7%, as well as the performance of XLM-T. This further supports the notion that specifically fine-tuned models are likely to continue to outperform LLM-based zero-shot approaches on highly specialised tasks (Kheiri and Karimi 2024; Wang et al. 2023; Zhang et al. 2024). Building on previous findings (Borst et al. 2023), it is evident that dictionaries are no longer competitive in terms of classification performance, especially when modern dialogue-based language models are used. Moreover, the addition of XLM-T, which is primarily trained on Twitter data, reinforces the observation that the generalisation capability of earlier language models remains limited, even when task-specific refinements are applied to divergent domains.

Many models exhibit performance outliers, and even those that generally perform well tend to have weaknesses on at least one dataset. For example, while NLI-based models typically perform well, they show weaknesses on the GermEval and SB10k datasets. It is worth highlighting once again the strong performance of NLI models on humanities datasets. In particular, compared to other underperforming zero-shot approaches, such as the Siamese models, the relatively small NLI models are able to compete with dialogue-based models. Within the family of dialogue-based models, gemma-2-9b stands out as a significant performance outlier. Of all the variants tested, it has by far the weakest performance, with the model failing to produce valid responses in the majority of cases. The main reason for this poor performance is probably that, in our evaluation setup, gemma-2-9b is the only monolingual English-language LLM that did not receive any specific pre-training on German content. In contrast, SauerkrautLM-9b, which originates from the same model family but has been explicitly fine-tuned for the German language, achieved strong results. Although this requires further analysis, it suggests that even in the era of LLMs, language barriers remain a significant factor and cannot be completely ignored. This aspect should not be underestimated, especially with regard to less widely used languages. On the other hand, the Teuken model, despite its extensive training in German, cannot compete with privately developed models. All the other LLMs show strong performance, albeit with some fluctuations. For this reason, we have adopted a broader perspective to facilitate the comparison of performance. In order to assess broad applicability, performance in different domains was evaluated relative to the previously established best results (SotA).

When analysing the aggregated performance, as shown in Table 4, certain models stand out as being particularly well suited. While the two largest models achieve the highest classification performance, as expected, the performance gap between them and language models in the range of 8–9 billion parameters is not significant on a global scale. The best overall performer is Llama-3.1-70B, which achieves an average of approximately 97% of SotA performance, making it a reliable default choice for many sentiment classification tasks. It is closely followed by the proprietary OpenAI models gpt-4o and gpt-3.5-turbo, as well as the smaller LLMs Llama-3.1-8B, SauerkrautLM-9b and Ministral-8B. Although the OpenAI models perform very well in comparison, they do not outperform the Llama-3.1-70B model, nor do they show a substantial advantage over smaller models, despite being by far the largest, with at least 175 billion parameters. NLI models follow closely, with BERT achieving about 84% of SotA performance. These models, particularly on humanities datasets, perform comparably to results reported in the literature, whereas dictionaries as well as Siamese approaches fall significantly short.

Table 4. This table aggregates Table 3, presenting the F1-score averaged across all datasets within each domain

Note: Additionally, the values in brackets represent the averages obtained after normalising each score by dividing it by the corresponding SotA value for the specific dataset. This normalisation enables a more comprehensive evaluation of the models’ performance relative to the established benchmark. The best value for each domain is shown in bold.
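The normalisation described in the note can be written out in a few lines. In this sketch, the GermEval figures are the ones quoted in the text (63.7 for Llama 70B against a SotA of 85.1), whereas "DatasetB" and its values are invented purely to show the averaging step; the function name is hypothetical.

```python
def sota_normalised_average(scores, sota):
    """Average of per-dataset scores, each divided by that dataset's SotA value."""
    ratios = [scores[name] / sota[name] for name in scores]
    return sum(ratios) / len(ratios)

# GermEval values are quoted in the text; "DatasetB" is made up for illustration.
scores = {"GermEval": 63.7, "DatasetB": 90.0}
sota = {"GermEval": 85.1, "DatasetB": 100.0}

relative = sota_normalised_average(scores, sota)
print(round(relative, 3))
```

A value of 1.0 would mean that a model matches the reported SotA on average, which makes scores from datasets of very different difficulty comparable.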

From a purely performance-oriented perspective, dialogue-based and NLI methods prove to be robust options for sentiment classification in the German language domain. Humanities research often faces the challenge of working with small but highly domain- and task-specific datasets. In such cases, zero-shot approaches, which do not require fine-tuning or retraining, can offer significant advantages. Beyond performance considerations, however, there are important limitations to consider when evaluating these models and methods. As one moves from efficient and interpretable dictionary-based approaches to large, closed-source language models such as gpt-4o, a clear trade-off emerges: increased resource requirements and reduced transparency and interpretability. These trade-offs and their wider implications are discussed in more detail in the following section.

Discussion

In this section, we critically examine the findings of our evaluation from several perspectives, considering both methodological and practical implications. We first explore the trade-offs between transparency, interpretability and accessibility in different sentiment classification approaches, highlighting the limitations of dictionary-based methods and the challenges posed by the increasing reliance on closed-source LLMs (see Section Transparency, interpretability and accessibility). This is followed by an examination of scalability and resource considerations, where we discuss the computational requirements of different model sizes, the feasibility of running LLMs on consumer-grade hardware, and the implications of quantisation strategies for practical deployment (see Section Scalability and resource considerations). We then consider the impact of non-determinism on inference errors, addressing the variability in LLM outputs, the role of hyperparameter tuning (e.g., temperature settings) and the challenges of ensuring consistency and reliability in zero-shot classification tasks (see Section The impact of non-determinism on model errors). Finally, we discuss the complexities of model selection and performance prediction, assessing how differences in training data, multilingual capabilities and benchmark design affect sentiment classification results, particularly in the context of computational humanities (see Section Model selection and predicting performance). By taking these different perspectives, this discussion aims to provide a nuanced understanding of the strengths, limitations and broader implications of using LLMs for sentiment classification on German-language data.

Transparency, interpretability and accessibility

Dictionaries provide a straightforward, explainable and interpretable approach to sentiment classification while also being highly efficient. Their transparent decision-making processes make them particularly valuable in scenarios where interpretability and low computational cost are required. However, their performance often lags behind more advanced alternatives, especially in nuanced or ambiguous language contexts. While NLI- and dialogue-based approaches provide viable alternatives with superior performance, they also introduce important considerations regarding model interpretability, transparency and accessibility.
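The transparency of the dictionary approach is easy to see in code: every decision can be traced back to the individual words that matched. The sketch below uses a toy lexicon with made-up polarity values, not one of the published German sentiment dictionaries, and the simple sum-and-threshold rule is an assumption chosen for brevity.

```python
import re

# Toy lexicon with made-up polarity values (assumption, for illustration only).
LEXICON = {"gut": 1.0, "großartig": 1.0, "schlecht": -1.0, "langweilig": -0.5}

def dictionary_sentiment(text, lexicon=LEXICON):
    """Return a label plus the matched words and values that explain it."""
    tokens = re.findall(r"\w+", text.lower())
    hits = [(tok, lexicon[tok]) for tok in tokens if tok in lexicon]
    score = sum(value for _, value in hits)
    if score > 0:
        label = "positive"
    elif score < 0:
        label = "negative"
    else:
        label = "neutral"
    return label, hits

label, hits = dictionary_sentiment("Der Film war gut, aber etwas langweilig.")
print(label, hits)
```

The returned list of matched words serves as a direct explanation of the label. The same example also hints at the limitation noted above: the contrast introduced by "aber" is invisible to a pure word-matching procedure.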

Unlike dictionary methods, language model-based classifiers do not provide direct insight into their decision-making processes. Efforts have been made to explain how these classifiers arrive at specific decisions under the umbrella term interpretable AI (Lundberg and Lee 2017; Molnar 2022; Shrikumar et al. 2017; Simonyan et al. 2014), although these explanations are largely based on indirect mathematical approximations. These methods face additional challenges when applied to text generation models (Amara et al. 2024). At the user level, the ability of generative models to produce not only a classification but also a proposed explanation offers a possibility of tracing the assumed reasoning process. However, this so-called CoT does not accurately reflect the underlying technical processes (Wei et al. 2022). Although this still may be a viable approach for a small number of examples, scaling to larger datasets is likely to result in an overwhelming volume of generated explanations, significantly increasing the effort required for validation and analysis. Beyond performance and interpretability, another layer of consideration is transparency and accessibility. NLI models and non-proprietary LLMs can be used entirely offline, as they are open weight and, in many cases, open-source.Footnote 11 In contrast, model transparency is diminished in closed-source systems like GPT-3.5-turbo and GPT-4o, which are accessible only through ChatGPT or the OpenAI API. These proprietary models are primarily commercial products that can only be used according to the manufacturer’s specifications and fee structures. While this simplifies accessibility and reduces the need for extensive hardware resources and technical expertise, it comes at the cost of direct control and reproducibility, and may even raise concerns about privacy or other ethical issues.

In addition, due to the immense costs associated with training and developing language models, there has been a shift away from the original open-source licensing seen in earlier models such as BERT (Devlin et al. 2019) and DeBERTa (He et al. 2023), which remain openly accessible, shareable and reusable, thereby improving reproducibility for researchers and practitioners. Although some smaller LLMs can be fine-tuned and have publicly available code, many are no longer fully open-source, but only open weights, due to the specific terms of their individual licences.Footnote 12 In general, decreasing openness can raise regulatory, legal and ethical concerns (Liesenfeld and Dingemanse 2024; Liesenfeld et al. 2023). In research practice, it remains important to consider this trade-off as part of the selection process: although proprietary applications such as ChatGPT facilitate access to these technologies, the ability to download, view, reuse, share and reproduce a model may favour the choice of an LLM with a higher degree of openness.

Scalability and resource considerations

The size of dialogue-based LLMs varies considerably, ranging from about 1 billion to 405 billion parameters, with Llama-3.1-70B being the largest model tested as part of this evaluation study. Running Llama-3.1-70B at FP16 precision requires approximately 148 GB of VRAM, whereas models with 7–9 billion parameters can be accommodated within approximately 24 GB of VRAM. This implies that smaller models can be run locally for inference on consumer-grade hardware, such as a gaming GPU. In contrast, running the 70B model requires the use of eight enterprise-grade NVIDIA A30 GPUs. Given these hardware constraints, the impact of different quantisation settings in this context warrants further investigation. While 70B models are not easily executable on a single enterprise-grade GPU, even with a reduction in precision to 4-bit quantisation, an 8B model with a modest quantisation to 8 bits can run on a wide range of high-performance consumer and professional devices. Given the performance observed in this study, 8B models such as Llama-3.1-8B may represent an optimal balance between hardware requirements, performance and open-source accessibility.
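The relationship between parameter count, numerical precision and memory can be approximated with simple arithmetic. The following Python sketch is illustrative only and is not taken from the study's code: it estimates the memory needed for the model weights alone, assuming 2 bytes per parameter at FP16, 1 byte at 8-bit and 0.5 bytes at 4-bit quantisation. The function name and the precision labels are hypothetical. Actual VRAM use is higher, because the key-value cache, activations and framework overhead come on top of the weights, which is consistent with the roughly 148 GB reported above for the 70B model at FP16.

```python
# Rough lower bound on memory for model weights only (assumption: no KV cache,
# activations or framework overhead are included in this estimate).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, precision: str) -> float:
    """Return the memory needed to hold the weights, in GB (10^9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, n_params in [("70B", 70e9), ("8B", 8e9)]:
        for precision in ("fp16", "int8", "int4"):
            gb = weight_memory_gb(n_params, precision)
            print(f"{name} @ {precision}: {gb:.0f} GB")
```

Under these assumptions, the weights of a 70B model still occupy about 35 GB at 4-bit precision, which exceeds the 24 GB of a single A30, whereas an 8B model at 8 bits needs about 8 GB for its weights.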

NLI-based methods, on the other hand, continue to offer a low-resource alternative. Most NLI models are fine-tuned variants of BERT, typically containing fewer than a billion parameters. These models require minimal hardware resources and can even run efficiently on modern laptops. For example, the BERT model used in this study consists of only 337 million parameters and fits comfortably within 8 GB of VRAM, which is considered the minimum for today’s GPU hardware. Given their strong performance, particularly on humanities datasets, NLI models represent a compelling alternative to larger dialogue-based models. This highlights an important trade-off: the choice between paying directly for access to proprietary systems, such as OpenAI’s APIs (footnote 13), and investing in hardware resources to run models locally.

Another important consideration is that inference and, if necessary, training times are highly dependent on the hardware used. While dictionary-based approaches are highly efficient and do not require specialised hardware, neural network-based classifiers benefit greatly from GPU acceleration, often leading to substantial speed improvements. In addition, runtime is affected by the length of the input text, making absolute comparisons difficult. Nevertheless, whereas dictionary-based evaluations could be performed in seconds on a standard laptop CPU, the use of language models resulted in considerably longer run times. As shown in Table 5, using the Amazon dataset as an example, an increase in the number of parameters corresponds to a noticeable decrease in processing speed. This effect is particularly pronounced for the largest model, Llama-3.1-70B, making computational efficiency a crucial factor to consider when using such models.
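A throughput figure of the kind reported as items processed per second can be obtained by timing a classifier over a list of texts. The sketch below is a minimal illustration under stated assumptions: `items_per_second` and the dummy classifier are hypothetical names, and the study's own measurement procedure may differ (for example in batching or warm-up handling).

```python
import time
from typing import Callable, Sequence


def items_per_second(classify: Callable[[str], str], texts: Sequence[str]) -> float:
    """Time one pass of `classify` over `texts` and return items per second."""
    start = time.perf_counter()
    for text in texts:
        classify(text)
    elapsed = time.perf_counter() - start
    # Guard against a zero interval on extremely fast runs.
    return len(texts) / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Placeholder classifier (assumption): a real run would call a model here.
    def dummy_classifier(text: str) -> str:
        return "neutral"

    rate = items_per_second(dummy_classifier, ["Das Produkt ist gut."] * 1000)
    print(f"{rate:.1f} items/s")
```

Because runtime also depends on input length and hardware, such figures are only comparable when the same texts and the same device are used for every model.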

Table 5. Model runtime and items processed per second on the Amazon dataset

The impact of non-determinism on model errors

The application of dialogue models to zero-shot text classification has required practical decisions about prompt design and hyperparameter selection. Language models generate text in a non-deterministic way, by sampling the next token from a probability distribution over the vocabulary based on the given input sequence. Consequently, there is an inherent degree of randomness in the generated text, meaning that the same input may produce different outputs upon each execution. This sampling process is controlled by several hyperparameters, of which temperature is the most important. Higher temperature values increase randomness, potentially enhancing creativity, while lower values reduce variability, resulting in more deterministic outputs and improved reproducibility.
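The effect of temperature can be illustrated with a toy example. The Python sketch below is not the study's implementation: it divides a set of invented logits for three candidate tokens by the temperature before applying a softmax, and then samples from the resulting distribution. Treating a temperature of exactly zero as greedy selection of the most likely token is a common convention and is assumed here. All function names are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after scaling by 1 / temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive for sampling")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature, rng):
    """Sample a token index; temperature 0 is treated as greedy (assumption)."""
    if temperature == 0:
        return max(range(len(logits)), key=logits.__getitem__)
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    labels = ["positive", "neutral", "negative"]
    logits = [2.0, 1.0, 0.5]  # invented values for illustration only
    rng = random.Random(0)
    for temperature in (1.0, 0.1):
        probs = softmax_with_temperature(logits, temperature)
        print(temperature, [round(p, 4) for p in probs])
    print(labels[sample_token(logits, 0.1, rng)])
```

At a temperature of 1.0 the less likely tokens keep a substantial share of the probability mass, whereas at 0.1 almost all of it concentrates on the most likely token, so repeated runs rarely diverge.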

However, for any given input prompt and text example, the model may produce incorrect outputs that do not match the required structure – in this case, one of the predefined labels. In our study, we found that setting the temperature to zero slightly improved response accuracy, but resulted in a higher error rate. To achieve a balance, we set the temperature to 0.1, aiming to reduce error rates while ensuring that the model remained focused on the task without introducing excessive creativity. An analytical examination of the errors did not reveal any systematic patterns in their occurrence, either across the datasets or across the models, with the notable exception of the gemma base model, whose poor performance was primarily due to its high error rate as seen in Table 6.
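Counting incorrect outputs requires a rule for deciding whether a generated response matches one of the predefined labels. The sketch below shows one possible rule, strict matching after light normalisation. It is an illustration under assumptions, not the study's procedure: the three-way label set, the function names and the normalisation steps are hypothetical, and the actual parsing logic is documented in the authors' repository.

```python
# Assumed label set; individual datasets may use a different subset.
LABELS = ("positive", "negative", "neutral")


def parse_label(raw_output: str):
    """Return the matched label, or None if the output is not a valid label."""
    cleaned = raw_output.strip().strip(".!\"'").lower()
    return cleaned if cleaned in LABELS else None


def count_errors(outputs_by_model):
    """Count outputs per model that do not match any predefined label."""
    return {
        model: sum(parse_label(output) is None for output in outputs)
        for model, outputs in outputs_by_model.items()
    }


if __name__ == "__main__":
    # Hypothetical model names and outputs for illustration only.
    outputs = {
        "model_a": ["positive", " Neutral.\n", "Der Text ist eher positiv."],
        "model_b": ["negative", "neutral"],
    }
    print(count_errors(outputs))
```

A stricter or more lenient rule (for example, accepting a label that appears anywhere in a longer response) would change the reported error counts, so the rule should be fixed before models are compared.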

Table 6. Total number of incorrect outputs across all 280,418 data records, by model

The absence of German in the training data contributes greatly to the exceptionally high error sensitivity of gemma. Subsequent fine-tuning, as tested with SauerkrautLM-9b, leads to significant performance improvements but fails to reduce the error rate to the level of models that were originally trained on multilingual data. Furthermore, the error rate does not always correspond to the classification performance. For example, the Teuken-7B model has the lowest error rate yet delivers the weakest overall results. At the dataset level, the error distribution varies significantly between the models. For example, the best performing model in our test, Llama-3.1-70B, produces errors in only 4 out of the 11 datasets tested, but accounts for 99% of the errors in the Holidaycheck dataset. Although a disproportionate number of errors come from datasets with the neutral label, there is minimal overlap between the specific datasets that lead to incorrect outputs, suggesting that error patterns are dataset dependent rather than model specific.

Another critical factor influencing both classification performance and error rates is the precise formulation of the prompt. Identifying the optimal prompt is not only a task-specific challenge but also a model-specific optimisation process. As we used a standardised template across all datasets and models in this study, the evaluation revealed considerable variability in the performance of different models. Rather than optimising prompts for specific settings, improvements in prompt design may be better achieved by following established best practices and general guidelines, which remain an active area of research. For sentiment classification, previous experiments with optimised prompts have not produced consistently reliable improvements (Wang and Luo 2023; Wu et al. 2024; Zhang et al. 2024). In addition, techniques that rely on multiple sub-prompts rather than a single prompt – such as some CoT approaches – not only significantly increase runtime but also lead to substantially higher costs when using fee-based services like GPT-4. Although certain trends can be inferred from the growing body of research, the sheer number of possible parameter variations and the model-specific performance fluctuations make systematic testing almost unmanageable. This challenge is further compounded by the rapid emergence of new model variants, which are introduced on an almost weekly basis.

Model selection and predicting performance

While some models perform well, others underperform significantly, raising the question: what determines model performance? Although larger models tend to perform better in general, there is variability even among models of comparable size. Each model has weaknesses for specific datasets, making it difficult to identify generalisable patterns. The broad applicability of LLMs has led to the development of increasingly complex and combined benchmarks. While these benchmarks provide a useful aggregated comparison of models, they often lack meaningful insights for specific use cases, limiting their practical applicability in individual scenarios. This highlights the ongoing challenge of predicting a model’s overall ability to perform specific tasks based on its advertised performance or its pre-training factors.

As with the first transformer models, the initial training of LLMs requires vast amounts of data, yet the details of this data are often not reported transparently. This lack of transparency is particularly problematic for non-English languages. Even in relatively open multilingual models such as Teuken, English content remains dominant. For the Llama family, the exact composition of training languages is completely unknown. Furthermore, benchmark datasets are often included in the training, making it difficult to ascertain whether predictions on publicly available datasets truly represent zero-shot classification. While this may not be a critical issue for practical applications, it highlights a broader dilemma regarding the transparency and the integrity of model evaluation.

When the generic performance metrics used to promote models do not provide clear insight into their suitability for a specific application, annotated data is required to evaluate classification performance. The mixed results for dialogue-based LLMs – where neither the industry leader GPT nor any other model showed clear dominance over its competitors, including NLI models – emphasise the limitations of standard evaluation metrics. These results suggest that commonly used benchmarks do not necessarily indicate the best performing model for sentiment analysis in specific humanities datasets. It also implies that the results obtained for German-language texts may not be directly transferable to other languages.

Conclusion

In this work, we have conducted a comprehensive evaluation of sentiment analysis techniques for German-language data, comparing dictionary-based methods, fine-tuned models and various zero-shot approaches, with a particular focus on dialogue-based LLMs. The results confirm that while fine-tuned models still provide the best performance in data-rich environments, their applicability is limited by the need for extensive training data and computational resources, and their adaptability to other domains is severely restricted. Although newly developed LLMs demonstrate remarkable performance in various contexts, their systematic application introduces a variety of new factors that need to be considered.

While proprietary models such as GPT-4o perform well, they do not consistently outperform open-weight alternatives such as Llama-3.1-70B, which achieved the highest overall classification performance. This suggests that local, open-weight LLMs can serve as a viable alternative to proprietary models, provided that sufficient computational resources are available. The high performance of the models is accompanied by high resource consumption. While the smaller LLMs can still be operated with powerful consumer hardware, the best model we tested can only be used in a high performance cluster environment. The computational cost of running large models therefore remains a critical factor, with smaller models such as Llama-3.1-8B or SauerkrautLM-9b offering a more practical balance between efficiency and performance. In some cases the differences in performance compared to the much less resource-intensive NLI approaches were marginal, suggesting that a higher number of parameters does not necessarily lead to better results. While the low-threshold nature of text input lowers barriers to entry, it also means that the generalisability of the results is limited by new parameters such as temperature and individual prompts. LLMs present challenges in terms of non-determinism, prompt sensitivity and resource requirements, making their systematic evaluation and deployment difficult. The unpredictability of model performance across different datasets emphasises the limitations of commonly used benchmarks for LLMs in assessing real-world applicability. The high complexity of the models also reduces transparency on several levels. First, the high cost of developing LLMs is increasingly pushing language models into the role of a commercial product whose components are restrictively hidden. Individual fine-tuning or even in-house development for specific research purposes is also almost impossible for individual academic institutions to finance, resulting in a considerable dependence on the published models. Second, the combined benchmarks used in LLM rankings are limited in their ability to indicate aptitude for a particular task or domain. This is even more apparent when the language is not English. Although the multilingual models for sentiment analysis had no problems with German, the English-only model showed that language specificity does exist. Conclusions about other languages are therefore not possible due to the unclear proportions of language composition in the training.

Future research should include further systematic investigation of prompt optimisation, quantisation, or the potential of these models under the few-shot paradigm. Nevertheless, this study shows that, with the necessary precautions and appropriate hardware, the use of LLMs for sentiment analysis in German is a promising alternative to both dictionary-based approaches and fine-tuned models.

Data availability statement

The code needed to replicate the experiments and information on where and how to get the data, as well as detailed classification reports, can be found here: https://github.com/JK67FARE/llm_sentiment_german.

Disclosure of use of AI tools

This manuscript was prepared solely by the authors. ChatGPT4o and DeeplWrite were used to improve language and style, and to enhance clarity and readability. However, all original ideas, analyses and original chapter drafts are the genuine work of the authors. Occasionally, ChatGPT4o was used to draft low-novelty text (see the ACL 2023 Policy on AI Writing Assistance https://2023.aclweb.org/blog/ACL-2023-policy/), for instance for the abstract. No AI-generated content was included without human verification and revision. The authors take full responsibility for the final text.

Author contributions

J.K.: Conceptualization, data curation, formal analysis, investigation, software, validation, writing – original draft. J.B.-G.: Conceptualization, data curation, formal analysis, investigation, software, validation, writing – original draft. M.B.: Conceptualization, funding acquisition, supervision, writing – review and editing. All authors approved the final submitted draft.

Funding statement

This research was enabled through previous funding by the German Research Foundation (DFG) under project number BU 3502/1-1.

Competing interests

The authors declare none.
