1. Introduction

One of the well-known applications for expansions of the Brownian bridge is the strong or $L^2(\mathbb{P})$ approximation of stochastic integrals. Most notably, the second iterated integrals of Brownian motion are required by high order strong numerical methods for general stochastic differential equations (SDEs), as discussed in [4, 22, 33]. Due to integration by parts, such integrals can be expressed in terms of the increment and Lévy area of Brownian motion. The approximation of multidimensional Lévy area is well studied, see [5, 8, 11, 13, 14, 23, 25, 32, 35], with the majority of the proposed algorithms being based on a Fourier series expansion or the standard piecewise linear approximation of Brownian motion. Some alternatives include [5, 11, 25], which consider methods associated with a polynomial expansion of the Brownian bridge.

Since the advent of Multilevel Monte Carlo (MLMC), introduced by Giles in [16] and subsequently developed in [2, 6, 7, 15, 17], Lévy area approximation has become less prominent in the literature. In particular, the antithetic MLMC method introduced by Giles and Szpruch in [17] achieves the optimal complexity for the weak approximation of multidimensional SDEs without the need to generate Brownian Lévy area. That said, there are concrete applications where the simulation of Lévy area is beneficial, such as sampling from non-log-concave distributions using Itô diffusions. For these sampling problems, high order strong convergence properties of the SDE solver lead to faster mixing of the resulting Markov chain Monte Carlo (MCMC) algorithm, see [26].

In this paper, we compare the approximations of Lévy area based on the Fourier series expansion and on a polynomial expansion of the Brownian bridge. We particularly observe their convergence rates and link those to the fluctuation processes associated with the different expansions of the Brownian bridge. The fluctuation process for the polynomial expansion is studied in [19], and our study of the fluctuation process for the Fourier series expansion allows us, at the same time, to determine the fluctuation process for the Karhunen–Loève expansion of the Brownian bridge. As an attractive side result, we extend the required analysis to obtain a stand-alone derivation of the values of the Riemann zeta function at even positive integers. Throughout, we denote the positive integers by $\mathbb{N}$ and the nonnegative integers by ${\mathbb{N}}_0$.

Let us start by considering a Brownian bridge $(B_t)_{t\in [0,1]}$ in $\mathbb{R}$ with $B_0=B_1=0$. This is the unique continuous-time Gaussian process with mean zero and whose covariance function $K_B$ is given by, for $s,t\in [0,1]$,

\begin{equation} K_B(s,t)=\min\!(s,t)-st. \end{equation}

We are concerned with the following three expansions of the Brownian bridge. The Karhunen–Loève expansion of the Brownian bridge, see Loève [27, p. 144], is of the form, for $t\in [0,1]$,

\begin{equation} B_t=\sum _{k=1}^\infty \frac{2\sin\!(k\pi t)}{k\pi } \int _0^1\cos\!(k\pi r){\mathrm{d}} B_r. \end{equation}

The Fourier series expansion of the Brownian bridge, see Kloeden–Platen [22, p. 198] or Kahane [21, Sect. 16.3], yields, for $t\in [0,1]$,

\begin{equation} B_t=\frac{1}{2}a_0+\sum _{k=1}^\infty \left ( a_k\cos\!(2k\pi t)+b_k\sin\!(2k\pi t) \right ), \end{equation}

where, for $k\in{\mathbb{N}}_0$,

\begin{equation} a_k=2\int _0^1\cos\!(2k\pi r)B_r{\mathrm{d}} r \quad \text{and}\quad b_k=2\int _0^1\sin\!(2k\pi r)B_r{\mathrm{d}} r. \end{equation}

A polynomial expansion of the Brownian bridge in terms of the shifted Legendre polynomials $Q_k$ on the interval $[0,1]$ of degree $k$, see [12, 19], is given by, for $t\in [0,1]$,

\begin{equation} B_t=\sum _{k=1}^\infty (2k+1) c_k \int _0^t Q_k(r){\mathrm{d}} r, \end{equation}

where, for $k\in{\mathbb{N}}$,

\begin{equation} c_k=\int _0^1 Q_k(r){\mathrm{d}} B_r. \end{equation}

These expansions are summarised in Table A1 in Appendix A and they are discussed in more detail in Section 2. For an implementation of the corresponding approximations for Brownian motion as Chebfun examples in MATLAB, see Filip, Javeed and Trefethen [9] as well as Trefethen [34].
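To make the use of such truncated expansions concrete, the following Python sketch samples the Karhunen–Loève truncation of (1.2) on a time grid. It relies on an assumption not spelled out above, namely that the coefficients $\int_0^1\cos(k\pi r)\,{\mathrm{d}} B_r$ are independent centred Gaussian random variables with variance $\frac{1}{2}$ (which follows from a short covariance computation). The function names and the use of NumPy are illustrative choices, not part of the cited implementations.

```python
import numpy as np

def kl_bridge_sample(times, n_terms, rng):
    """Sample the n_terms-term Karhunen-Loeve truncation of a Brownian bridge."""
    times = np.asarray(times, dtype=float)
    k = np.arange(1, n_terms + 1)
    # Assumption: the coefficients int_0^1 cos(k pi r) dB_r are i.i.d. N(0, 1/2).
    coeffs = rng.normal(0.0, np.sqrt(0.5), size=n_terms)
    # Column k of `basis` holds 2 sin(k pi t) / (k pi) evaluated on the grid.
    basis = 2.0 * np.sin(np.pi * np.outer(times, k)) / (np.pi * k)
    return basis @ coeffs

def kl_truncated_variance(t, n_terms):
    """Variance at time t of the truncated expansion under the same assumption."""
    k = np.arange(1, n_terms + 1)
    return float(np.sum(2.0 * np.sin(k * np.pi * t) ** 2 / (k * np.pi) ** 2))

rng = np.random.default_rng(0)
grid = np.linspace(0.0, 1.0, 11)
path = kl_bridge_sample(grid, n_terms=200, rng=rng)
print(path)
print(kl_truncated_variance(0.5, 1000))  # close to t(1 - t) = 0.25
```

The truncated variance approaches $t(1-t)$ as the number of terms grows, in line with the bridge covariance on the diagonal.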

We remark that the polynomial expansion (1.5) can be viewed as a Karhunen–Loève expansion of the Brownian bridge with respect to the weight function $w$ on $(0,1)$ given by $w(t) = \frac{1}{t(1-t)}$. This approach is employed in [12] to derive the expansion along with the standard optimality property of Karhunen–Loève expansions. In this setting, the polynomial approximation of $(B_t)_{t\in [0,1]}$ is optimal among truncated series expansions in a weighted $L^2(\mathbb{P})$ sense corresponding to the nonconstant weight function $w$. To avoid confusion, we still adopt the convention throughout to reserve the term Karhunen–Loève expansion for (1.2), whereas (1.5) will be referred to as the polynomial expansion.

Before we investigate the approximations of Lévy area based on the different expansions of the Brownian bridge, we first analyse the fluctuations associated with the expansions. The fluctuation process for the polynomial expansion is studied and characterised in [19], and these results are recalled in Section 2.3. The fluctuation processes $(F_t^{N,1})_{t\in [0,1]}$ for the Karhunen–Loève expansion and the fluctuation processes $(F_t^{N,2})_{t\in [0,1]}$ for the Fourier series expansion are defined as, for $N\in{\mathbb{N}}$,

\begin{equation} F_t^{N,1}=\sqrt{N}\left (B_t-\sum _{k=1}^N\frac{2\sin\!(k\pi t)}{k\pi }\int _0^1\cos\!(k\pi r){\mathrm{d}} B_r\right ), \end{equation}

and

\begin{equation} F_t^{N,2}=\sqrt{2N}\left (B_t-\frac{1}{2}a_0-\sum _{k=1}^N\left (a_k\cos\!(2k\pi t)+b_k\sin\!(2k\pi t)\right )\right ). \end{equation}

The scaling by $\sqrt{2N}$ in the process $(F_t^{N,2})_{t\in [0,1]}$ is the natural scaling to use because increasing $N$ by one results in the subtraction of two additional Gaussian random variables. We use $\mathbb{E}$ to denote the expectation with respect to Wiener measure $\mathbb{P}$.

Theorem 1.1.

The fluctuation processes $(F_t^{N,1})_{t\in [0,1]}$ for the Karhunen–Loève expansion converge in finite dimensional distributions as $N\to \infty$ to the collection $(F_t^1)_{t\in [0,1]}$ of independent Gaussian random variables with mean zero and variance

\begin{equation*} {\mathbb {E}}\left [\left (F_t^1\right )^2 \right ]= \begin {cases} \dfrac{1}{\pi ^2} & \text {if }\;t\in (0,1)\\[12pt] 0 & \text {if }\; t=0\;\text { or }\;t=1 \end {cases}\!. \end{equation*}

The fluctuation processes $(F_t^{N,2})_{t\in [0,1]}$ for the Fourier expansion converge in finite dimensional distributions as $N\to \infty$ to the collection $(F_t^2)_{t\in [0,1]}$ of zero-mean Gaussian random variables whose covariance structure is given by, for $s,t\in [0,1]$,

\begin{equation*} {\mathbb {E}}\left [F_s^2 F_t^2 \right ]= \begin {cases} \dfrac{1}{\pi ^2} & \text {if }\;s=t\text { or } s,t\in \{0,1\}\\[12pt] 0 & \text {otherwise} \end {cases}. \end{equation*}

The difference between the fluctuation result for the Karhunen–Loève expansion and the fluctuation result for the polynomial expansion, see [19, Theorem 1.6] or Section 2.3, is that there the variances of the independent Gaussian random variables follow the semicircle $\frac{1}{\pi }\sqrt{t(1-t)}$ whereas here they are constant on $(0,1)$, see Figure 1. The limit fluctuations for the Fourier series expansion further exhibit endpoints which are correlated.

Figure 1. Table showing basis functions and fluctuations for the Brownian bridge expansions.
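The semicircle profile for the polynomial expansion can be observed numerically. The sketch below is only a sanity check under two assumptions that we state explicitly: the coefficients $c_k$ are independent centred Gaussians with variance $\frac{1}{2k+1}$, and $\int_0^t Q_k(r)\,{\mathrm{d}} r=\frac{Q_{k+1}(t)-Q_{k-1}(t)}{2(2k+1)}$, which is the classical antiderivative identity for Legendre polynomials after the substitution $x=2t-1$. The function names are hypothetical.

```python
import math

def shifted_legendre_values(t, max_degree):
    """Return [Q_0(t), ..., Q_max_degree(t)], where Q_k(t) = P_k(2t - 1)."""
    x = 2.0 * t - 1.0
    values = [1.0, x]
    # Three-term recurrence (k + 1) P_{k+1} = (2k + 1) x P_k - k P_{k-1}.
    for k in range(1, max_degree):
        values.append(((2 * k + 1) * x * values[k] - k * values[k - 1]) / (k + 1))
    return values[: max_degree + 1]

def polynomial_residual_variance(t, n_terms):
    """t(1 - t) minus the variance of the n_terms-term polynomial truncation."""
    q = shifted_legendre_values(t, n_terms + 1)
    partial = 0.0
    for k in range(1, n_terms + 1):
        integral = (q[k + 1] - q[k - 1]) / (2.0 * (2 * k + 1))  # int_0^t Q_k(r) dr
        # Assumption: Var(c_k) = 1 / (2k + 1), so the k-th term has this variance.
        partial += (2 * k + 1) * integral ** 2
    return t * (1.0 - t) - partial

t = 0.5
scaled = 400 * polynomial_residual_variance(t, 400)
semicircle = math.sqrt(t * (1.0 - t)) / math.pi
print(scaled, semicircle)
```

Multiplying the residual variance by the number of terms gives a value close to $\frac{1}{\pi}\sqrt{t(1-t)}$, whereas the analogous quantity for the Karhunen–Loève expansion is close to $\frac{1}{\pi^2}$ throughout $(0,1)$.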

As pointed out in [19], the reason for considering convergence in finite dimensional distributions for the fluctuation processes is that the limit fluctuations neither have a realisation as processes in $C([0,1],{\mathbb{R}})$, nor are they equivalent to measurable processes.

We prove Theorem 1.1 by studying the covariance functions of the Gaussian processes $(F_t^{N,1})_{t\in [0,1]}$ and $(F_t^{N,2})_{t\in [0,1]}$ given in Lemma 2.2 and Lemma 2.3 in the limit $N\to \infty$. The key ingredient is the following limit theorem for sine functions, which we see concerns the pointwise convergence for the covariance function of $(F_t^{N,1})_{t\in [0,1]}$.

Theorem 1.2.

For all $s,t\in [0,1]$, we have

\begin{equation*} \lim _{N\to \infty }N\left (\min\!(s,t)-st-\sum _{k=1}^N\frac {2\sin\!(k\pi s)\sin\!(k\pi t)}{k^2\pi ^2}\right )= \begin {cases} \dfrac{1}{\pi ^2} & \text {if } s=t\text { and } t\in (0,1)\\[10pt] 0 & \text {otherwise} \end {cases}. \end{equation*}

The above result serves as one of four base cases in the analysis performed in [18] of the asymptotic error arising when approximating the Green’s function of a Sturm–Liouville problem through a truncation of its eigenfunction expansion. The work [18] offers a unifying view for Theorem 1.2 and [19, Theorem 1.5].
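The limit in Theorem 1.2 is easy to probe numerically. The following sketch evaluates the scaled truncation error for one on-diagonal and one off-diagonal pair. The sample points $0.3$ and $0.6$ and the function name are arbitrary choices made for illustration.

```python
import numpy as np

def scaled_kl_covariance_error(s, t, n_terms):
    """N * (min(s, t) - s t - sum_{k <= N} 2 sin(k pi s) sin(k pi t) / (k pi)^2)."""
    k = np.arange(1, n_terms + 1)
    partial = np.sum(2.0 * np.sin(k * np.pi * s) * np.sin(k * np.pi * t) / (k * np.pi) ** 2)
    return float(n_terms * (min(s, t) - s * t - partial))

for n_terms in (10, 100, 1000, 10000):
    on_diagonal = scaled_kl_covariance_error(0.3, 0.3, n_terms)
    off_diagonal = scaled_kl_covariance_error(0.3, 0.6, n_terms)
    print(n_terms, on_diagonal, off_diagonal)

print(1.0 / np.pi ** 2)  # on-diagonal limit stated in Theorem 1.2
```

The on-diagonal column settles near $\frac{1}{\pi^2}$ while the off-diagonal column tends to zero, as the theorem predicts.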

The proof of Theorem 1.2 is split into an on-diagonal and an off-diagonal argument. We start by proving the convergence on the diagonal away from its endpoints by establishing locally uniform convergence, which ensures continuity of the limit function, and by using a moment argument to identify the limit. As a consequence of the on-diagonal convergence, we obtain the next corollary which then implies the off-diagonal convergence in Theorem 1.2.

Corollary 1.3.

For all $t\in (0,1)$, we have

\begin{equation*} \lim _{N\to \infty } N\sum _{k=N+1}^\infty \frac {\cos\!(2k\pi t)}{k^2\pi ^2}=0. \end{equation*}

Moreover, and of interest in its own right, the moment analysis we use to prove the on-diagonal convergence in Theorem 1.2 leads to a stand-alone derivation of the result that the values of the Riemann zeta function $\zeta \colon{\mathbb{C}}\setminus \{1\}\to{\mathbb{C}}$ at even positive integers can be expressed in terms of the Bernoulli numbers $B_{2n}$ as, for $n\in{\mathbb{N}}$,

\begin{equation*} \zeta (2n)=\sum _{k=1}^\infty \frac{1}{k^{2n}}=(\!-1)^{n+1}\frac{(2\pi )^{2n}B_{2n}}{2(2n)!}, \end{equation*}

see Borevich and Shafarevich [3]. In particular, the identity

\begin{equation} \sum _{k=1}^\infty \frac{1}{k^2} =\frac{\pi ^2}{6}, \end{equation}

that is, the resolution to the Basel problem posed by Mengoli [28], is a consequence of our analysis and not a prerequisite for it.
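As a small check of the classical closed form $\zeta(2n)=(-1)^{n+1}\frac{(2\pi)^{2n}B_{2n}}{2(2n)!}$, the sketch below computes Bernoulli numbers exactly from the standard recurrence $\sum_{j=0}^{m}\binom{m+1}{j}B_j=0$ for $m\geq 1$ (with the convention $B_1=-\frac{1}{2}$) and compares the resulting values with partial sums of the defining series. The helper names are ours, and this is an independent numerical illustration rather than the derivation carried out in Section 3.

```python
import math
from fractions import Fraction

def bernoulli_numbers(max_index):
    """Return [B_0, ..., B_max_index] as exact fractions (convention B_1 = -1/2)."""
    numbers = [Fraction(1)]
    for m in range(1, max_index + 1):
        acc = sum(math.comb(m + 1, j) * numbers[j] for j in range(m))
        numbers.append(-acc / (m + 1))
    return numbers

def zeta_even_closed_form(n):
    """Evaluate (-1)^(n+1) (2 pi)^(2n) B_{2n} / (2 (2n)!) in floating point."""
    b_2n = bernoulli_numbers(2 * n)[2 * n]
    sign = (-1) ** (n + 1)
    return sign * float(b_2n) * (2.0 * math.pi) ** (2 * n) / (2.0 * math.factorial(2 * n))

def zeta_partial_sum(s, n_terms):
    """Partial sum of the series sum_{k >= 1} k^(-s)."""
    return sum(1.0 / k ** s for k in range(1, n_terms + 1))

for n in (1, 2, 3):
    print(2 * n, zeta_even_closed_form(n), zeta_partial_sum(2 * n, 100000))
```

For $n=1$ the closed form reduces to $\frac{\pi^2}{6}$, the value in the Basel problem.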

We turn our attention to studying approximations of second iterated integrals of Brownian motion. For $d\geq 2$, let $(W_t)_{t\in [0,1]}$ denote a $d$-dimensional Brownian motion and let $(B_t)_{t\in [0,1]}$ given by $B_t=W_t-tW_1$ be its associated Brownian bridge in ${\mathbb{R}}^d$. We denote the independent components of $(W_t)_{t\in [0,1]}$ by $(W_t^{(i)})_{t\in [0,1]}$, for $i\in \{1,\ldots,d\}$, and the components of $(B_t)_{t\in [0,1]}$ by $(B_t^{(i)})_{t\in [0,1]}$, which are also independent by construction. We now focus on approximations of Lévy area.

Definition 1.4. The Lévy area of the $d$-dimensional Brownian motion $W$ over the interval $[s,t]$ is the antisymmetric $d\times d$ matrix $A_{s,t}$ with the following entries, for $i,j\in \{1,\ldots,d\}$,

\begin{equation*} A_{s,t}^{(i,j)}=\frac{1}{2}\left (\int _s^t \left (W_r^{(i)}-W_s^{(i)}\right ){\mathrm{d}} W_r^{(j)}-\int _s^t \left (W_r^{(j)}-W_s^{(j)}\right ){\mathrm{d}} W_r^{(i)}\right ). \end{equation*}

For an illustration of Lévy area for a two-dimensional Brownian motion, see Figure 2.

Figure 2. Lévy area is the chordal area between independent Brownian motions.

Remark 1.5. Given the increment $W_t - W_s$ and the Lévy area $A_{s,t}$, we can recover the second iterated integrals of Brownian motion using integration by parts as, for $i,j\in \{1,\ldots,d\}$ with $i\neq j$,

\begin{equation*} \int _s^t \left (W_r^{(i)}-W_s^{(i)}\right ){\mathrm{d}} W_r^{(j)}=\frac{1}{2}\left (W_t^{(i)}-W_s^{(i)}\right )\left (W_t^{(j)}-W_s^{(j)}\right )+A_{s,t}^{(i,j)}. \end{equation*}
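The relation between iterated integrals, increments and Lévy area can be illustrated with the standard piecewise linear discretisation of Brownian motion. The sketch below is a minimal example: it takes Lévy area to be half the difference of the two iterated integrals (the usual definition), approximates the Itô integrals by left-point sums, and checks the discrete form of the integration by parts identity. The function name, step count and seed are illustrative assumptions.

```python
import numpy as np

def discretised_levy_area(increments):
    """Left-point approximation of the Levy area matrix and the iterated integrals.

    `increments` has shape (n_steps, d); row k holds W_{t_{k+1}} - W_{t_k}.
    """
    increments = np.asarray(increments, dtype=float)
    # Path values at the left endpoints t_0, ..., t_{n-1}, relative to W_{t_0}.
    left_values = np.cumsum(increments, axis=0) - increments
    # iterated[i, j] approximates the integral of (W^i_r - W^i_s) dW^j_r.
    iterated = left_values.T @ increments
    return 0.5 * (iterated - iterated.T), iterated

rng = np.random.default_rng(1)
n_steps, d = 1000, 3
dW = rng.normal(0.0, np.sqrt(1.0 / n_steps), size=(n_steps, d))
area, iterated = discretised_levy_area(dW)

W1 = dW.sum(axis=0)
# Discrete integration by parts: the symmetric part of `iterated` equals
# half the product of increments minus half the discrete cross-variation.
reconstructed = 0.5 * np.outer(W1, W1) - 0.5 * dW.T @ dW + area
print(np.max(np.abs(reconstructed - iterated)))
```

For $i\neq j$ the discrete cross-variation term is small for fine grids, so the off-diagonal entries of `iterated` are close to half the product of the increments plus the corresponding Lévy area entry.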

We consider the sequences

![]() $\{a_k\}_{k\in{\mathbb{N}}_0}$

,

$\{a_k\}_{k\in{\mathbb{N}}_0}$

,

![]() $\{b_k\}_{k\in{\mathbb{N}}}$

and

$\{b_k\}_{k\in{\mathbb{N}}}$

and

![]() $\{c_k\}_{k\in{\mathbb{N}}}$

of Gaussian random vectors, where the coordinate random variables

$\{c_k\}_{k\in{\mathbb{N}}}$

of Gaussian random vectors, where the coordinate random variables

![]() $a_k^{(i)}$

,

$a_k^{(i)}$

,

![]() $b_k^{(i)}$

and

$b_k^{(i)}$

and

![]() $c_k^{(i)}$

are defined for

$c_k^{(i)}$

are defined for

![]() $i\in \{1,\ldots,d\}$

by (1.4) and (1.6), respectively, in terms of the Brownian bridge

$i\in \{1,\ldots,d\}$

by (1.4) and (1.6), respectively, in terms of the Brownian bridge

![]() $(B_t^{(i)})_{t\in [0,1]}$

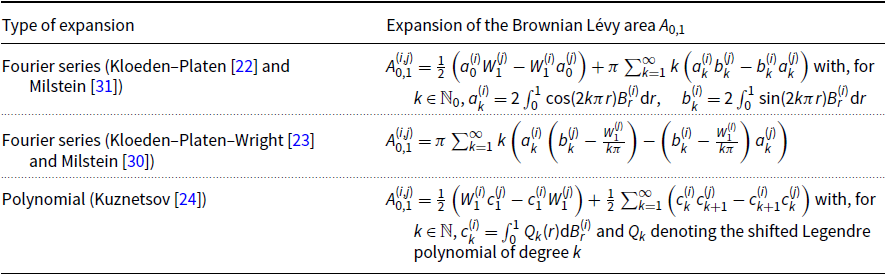

. Using the random coefficients arising from the Fourier series expansion(1.3), we obtain the approximation of Brownian Lévy area proposed by Kloeden and Platen [Reference Kloeden and Platen22] and Milstein [Reference Milstein31]. Further approximating terms so that only independent random coefficients are used yields the Kloeden–Platen–Wright approximation in [Reference Kloeden, Platen and Wright23, Reference Milstein30, Reference Wiktorsson35]. Similarly, using the random coefficients from the polynomial expansion (1.5), we obtain the Lévy area approximation first proposed by Kuznetsov in [Reference Kuznetsov24]. These Lévy area approximations are summarised in Table A2 in Appendix A and have the following asymptotic convergence rates.

$(B_t^{(i)})_{t\in [0,1]}$

. Using the random coefficients arising from the Fourier series expansion(1.3), we obtain the approximation of Brownian Lévy area proposed by Kloeden and Platen [Reference Kloeden and Platen22] and Milstein [Reference Milstein31]. Further approximating terms so that only independent random coefficients are used yields the Kloeden–Platen–Wright approximation in [Reference Kloeden, Platen and Wright23, Reference Milstein30, Reference Wiktorsson35]. Similarly, using the random coefficients from the polynomial expansion (1.5), we obtain the Lévy area approximation first proposed by Kuznetsov in [Reference Kuznetsov24]. These Lévy area approximations are summarised in Table A2 in Appendix A and have the following asymptotic convergence rates.

Theorem 1.6 (Asymptotic convergence rates of Lévy area approximations). For $n\in{\mathbb{N}}$, we set $N=2n$ and define approximations $\widehat{A}_{n}$, $\widetilde{A}_{n}$ and $\overline{A}_{2n}$ of the Lévy area $A_{0,1}$ by, for $i,j\in \{1,\ldots,d\}$,

\begin{align} \widehat{A}_{n}^{ (i,j)} \;:\!=\; \frac{1}{2}\left (a_0^{(i)}W_1^{(j)} - W_1^{(i)}a_0^{(j)}\right ) + \pi \sum _{k=1}^{n-1} k\left (a_{k}^{(i)}b_k^{(j)} - b_k^{(i)}a_{k}^{(j)}\right ), \end{align}

\begin{align} \widetilde{A}_{n}^{ (i,j)} \;:\!=\; \pi \sum _{k=1}^{n-1} k\left (a_{k}^{(i)}\left (b_k^{(j)} - \frac{1}{k\pi }W_1^{(j)}\right ) - \left (b_k^{(i)} - \frac{1}{k\pi }W_1^{(i)}\right )a_{k}^{(j)}\right ), \end{align}

\begin{align} \overline{A}_{2n}^{ (i,j)} \;:\!=\; \frac{1}{2}\left (W_1^{(i)}c_1^{(j)} - c_1^{(i)}W_1^{(j)}\right ) + \frac{1}{2}\sum _{k=1}^{2n-1}\left (c_k^{(i)}c_{k+1}^{(j)} - c_{k+1}^{(i)}c_k^{(j)}\right ). \end{align}

Then $\widehat{A}_{n}$, $\widetilde{A}_{n}$ and $\overline{A}_{2n}$ are antisymmetric $d \times d$ matrices and, for $i\neq j$ and as $N\to \infty$, we have

\begin{align*}{\mathbb{E}}\bigg [\Big (A_{0,1}^{(i,j)} - \widehat{A}_{n}^{ (i,j)}\Big )^2 \bigg ] & \sim \frac{1}{\pi ^2}\bigg (\frac{1}{N}\bigg ),\\[3pt]{\mathbb{E}}\bigg [\Big (A_{0,1}^{(i,j)} - \widetilde{A}_{n}^{ (i,j)}\Big )^2 \bigg ] & \sim \frac{3}{\pi ^2}\bigg (\frac{1}{N}\bigg ),\\[3pt]{\mathbb{E}}\bigg [\Big (A_{0,1}^{(i,j)} - \overline{A}_{2n}^{ (i,j)}\Big )^2 \bigg ] & \sim \frac{1}{8}\bigg (\frac{1}{N}\bigg ). \end{align*}

The asymptotic convergence rates in Theorem 1.6 are phrased in terms of $N$ since the number of Gaussian random vectors required to define the above Lévy area approximations is $N$ or $N-1$, respectively. Of course, it is straightforward to define the polynomial approximation $\overline{A}_{n}$ for $n\in{\mathbb{N}}$, see Theorem 5.4.

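Intriguingly, the convergence rates for the approximations resulting from the Fourier series and the polynomial expansion correspond exactly with the areas under the limit variance function for each fluctuation process, which are

\begin{equation*} \int _0^1\frac{1}{\pi ^2}{\mathrm{d}} t=\frac{1}{\pi ^2}\quad \text{and}\quad \int _0^1\frac{1}{\pi }\sqrt{t(1-t)}{\mathrm{d}} t=\frac{1}{8}. \end{equation*}

We provide heuristics demonstrating how this correspondence arises at the end of Section 5.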
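The two areas can be confirmed with a simple quadrature. The sketch below integrates the constant limit variance $\frac{1}{\pi^2}$ from Theorem 1.1 and the semicircle $\frac{1}{\pi}\sqrt{t(1-t)}$ over $[0,1]$ using a midpoint rule, for comparison with the constants $\frac{1}{\pi^2}$ and $\frac{1}{8}$ in Theorem 1.6. The helper name and the number of cells are arbitrary choices.

```python
import math

def midpoint_integral(f, n_cells=100_000):
    """Midpoint-rule approximation of the integral of f over [0, 1]."""
    h = 1.0 / n_cells
    return h * sum(f((m + 0.5) * h) for m in range(n_cells))

fourier_area = midpoint_integral(lambda t: 1.0 / math.pi ** 2)
polynomial_area = midpoint_integral(lambda t: math.sqrt(t * (1.0 - t)) / math.pi)

print(fourier_area, 1.0 / math.pi ** 2)
print(polynomial_area, 1.0 / 8.0)
```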

By adding a further Gaussian random matrix that matches the covariance of the tail sum, it is possible to derive high order Lévy area approximations with $O(N^{-1})$ convergence in $L^2(\mathbb{P})$. Wiktorsson [35] proposed this approach using the Kloeden–Platen–Wright approximation (1.11), and this was recently improved by Mrongowius and Rößler in [32], who use the approximation (1.10) obtained from the Fourier series expansion (1.3).

We expect that an $O(N^{-1})$ polynomial-based approximation is possible using the same techniques. While this approximation should be slightly less accurate than the Fourier approach, we expect it to be easier to implement due to both the independence of the coefficients $\{c_k\}_{k\in{\mathbb{N}}}$ and the covariance of the tail sum having a closed-form expression, see Theorem 5.4. Moreover, this type of method has already been studied in [5, 10, 11] with Brownian Lévy area being approximated by

where the antisymmetric $d\times d$ matrix $\lambda _{0,1}$ is normally distributed and designed so that $\widehat{A}_{0,1}$ has the same covariance structure as the Brownian Lévy area $A_{0,1}$. Davie [5] as well as Flint and Lyons [10] generate each $(i,j)$-entry of $\lambda _{0,1}$ independently as $\lambda _{0,1}^{(i,j)} \sim \mathcal{N}\big (0, \frac{1}{12}\big )$ for $i < j$. In [11], it is shown that the covariance structure of $A_{0,1}$ can be explicitly computed conditional on both $W_1$ and $c_1$. By matching the conditional covariance structure of $A_{0,1}$, the work [11] obtains the approximation

where the entries $\{\lambda _{0,1}^{(i,j)} \}_{i < j}$ are still generated independently, but only after $c_1$ has been generated.

By rescaling (1.13) to approximate Lévy area on $\big [\frac{k}{N}, \frac{k+1}{N}\big ]$ and summing over $k\in \{0,\ldots, N-1\}$, we obtain a fine discretisation of $A_{0,1}$ involving $2N$ Gaussian random vectors and $N$ random matrices. In [5, 10, 11], the Lévy area of Brownian motion and this approximation are probabilistically coupled in such a way that $L^{2}(\mathbb{P})$ convergence rates of $O(N^{-1})$ can be established. Furthermore, the efficient Lévy area approximation (1.13) can be used directly in numerical methods for SDEs, which then achieve $L^{2}(\mathbb{P})$ convergence of $O(N^{-1})$ under certain conditions on the SDE vector fields, see [5, 10]. We leave such high order polynomial-based approximations of Lévy area as a topic for future work.

The paper is organised as follows. In Section 2, we provide an overview of the three expansions we consider for the Brownian bridge, and we characterise the associated fluctuation processes $(F_t^{N,1})_{t\in [0,1]}$ and $(F_t^{N,2})_{t\in [0,1]}$. Before discussing their behaviour in the limit $N\to \infty$, we initiate the moment analysis used to prove the on-diagonal part of Theorem 1.2 and we extend the analysis to determine the values of the Riemann zeta function at even positive integers in Section 3. The proof of Theorem 1.2 follows in Section 4, where we complete the moment analysis and establish a locally uniform convergence to identify the limit on the diagonal, before we deduce Corollary 1.3, which then allows us to obtain the off-diagonal convergence in Theorem 1.2. We close Section 4 by proving Theorem 1.1. In Section 5, we compare the asymptotic convergence rates of the different approximations of Lévy area, which results in a proof of Theorem 1.6.

2. Series expansions for the Brownian bridge

We discuss the Karhunen–Loève expansion as well as the Fourier expansion of the Brownian bridge more closely, and we derive expressions for the covariance functions of their Gaussian fluctuation processes.

In our analysis, we frequently use a type of Itô isometry for Itô integrals with respect to a Brownian bridge, and we include its statement and proof for completeness.

Lemma 2.1.

Let $(B_t)_{t\in [0,1]}$ be a Brownian bridge in $\mathbb{R}$ with $B_0=B_1=0$, and let $f,g\colon [0,1]\to{\mathbb{R}}$ be integrable functions. Setting $F(1)=\int _0^1 f(t){\mathrm{d}} t$ and $G(1)=\int _0^1 g(t){\mathrm{d}} t$, we have

\begin{equation*} {\mathbb{E}}\left [\left (\int _0^1 f(t){\mathrm{d}} B_t\right )\left (\int _0^1 g(t){\mathrm{d}} B_t\right )\right ] =\int _0^1 f(t)g(t){\mathrm{d}} t-F(1)G(1). \end{equation*}

Proof. For a standard one-dimensional Brownian motion $(W_t)_{t\in [0,1]}$, the process $(W_t-t W_1)_{t\in [0,1]}$ has the same law as the Brownian bridge $(B_t)_{t\in [0,1]}$. In particular, the random variable $\int _0^1 f(t){\mathrm{d}} B_t$ is equal in law to the random variable

\begin{equation*} \int _0^1 f(t){\mathrm{d}} W_t-W_1\int _0^1 f(t){\mathrm{d}} t =\int _0^1\left (f(t)-F(1)\right ){\mathrm{d}} W_t. \end{equation*}

Using a similar expression for $\int _0^1 g(t){\mathrm{d}} B_t$ and applying the usual Itô isometry, we deduce that

\begin{align*} &{\mathbb{E}}\left [\left (\int _0^1 f(t){\mathrm{d}} B_t\right )\left (\int _0^1 g(t){\mathrm{d}} B_t\right )\right ]\\[5pt] &\qquad =\int _0^1 f(t)g(t){\mathrm{d}} t-F(1)\int _0^1 g(t){\mathrm{d}} t -G(1)\int _0^1 f(t){\mathrm{d}} t+F(1)G(1)\\[5pt] &\qquad =\int _0^1 f(t)g(t){\mathrm{d}} t - F(1)G(1), \end{align*}

as claimed.
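
As a numerical sanity check on Lemma 2.1, the following Python sketch splits $[0,1]$ into $n$ intervals of width $h=1/n$ and uses that the bridge increments satisfy $\mathrm{Cov}(\Delta B_i,\Delta B_j)=h\delta _{ij}-h^2$. The function names, the midpoint sampling and the test pair $f(t)=t$, $g(t)=t^2$ are illustrative assumptions and not part of the argument above.

```python
def bridge_covariance_sum(f, g, n=2000):
    """Approximate E[(int f dB)(int g dB)] by summing f_i g_j Cov(dB_i, dB_j).

    Uses Cov(dB_i, dB_j) = h * delta_ij - h**2 for bridge increments of width
    h = 1/n, with f and g sampled at interval midpoints (illustrative choice).
    """
    h = 1.0 / n
    fs = [f((i + 0.5) * h) for i in range(n)]
    gs = [g((i + 0.5) * h) for i in range(n)]
    diagonal = h * sum(a * b for a, b in zip(fs, gs))  # the h * delta_ij part
    rank_one = (h * sum(fs)) * (h * sum(gs))           # the h**2 part
    return diagonal - rank_one


def lemma_2_1_closed_form():
    """Right-hand side of Lemma 2.1 for f(t) = t and g(t) = t**2."""
    # int f g dt = 1/4, F(1) = 1/2, G(1) = 1/3
    return 0.25 - 0.5 * (1.0 / 3.0)


approx = bridge_covariance_sum(lambda t: t, lambda t: t * t)
exact = lemma_2_1_closed_form()
print(approx, exact)
```

The diagonal part of the sum approximates $\int _0^1 f(t)g(t){\mathrm{d}} t$ and the rank-one part approximates $F(1)G(1)$, which mirrors the two terms in the statement of the lemma.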

2.1 The Karhunen–Loève expansion

Mercer’s theorem, see [Reference Mercer29], states that for a continuous symmetric nonnegative definite kernel $K\colon [0,1]\times [0,1]\to{\mathbb{R}}$ there exists an orthonormal basis $\{e_k\}_{k\in{\mathbb{N}}}$ of $L^2([0,1])$ which consists of eigenfunctions of the Hilbert–Schmidt integral operator associated with $K$ and whose eigenvalues $\{\lambda _k\}_{k\in{\mathbb{N}}}$ are nonnegative and such that, for $s,t\in [0,1]$, we have the representation

\begin{equation*} K(s,t)=\sum _{k=1}^\infty \lambda _k e_k(s) e_k(t), \end{equation*}

which converges absolutely and uniformly on $[0,1]\times [0,1]$. For the covariance function $K_B$ defined by (1.1) of the Brownian bridge $(B_t)_{t\in [0,1]}$, we obtain, for $k\in{\mathbb{N}}$ and $t\in [0,1]$,

\begin{equation*} e_k(t)=\sqrt{2}\sin\!(k\pi t)\quad \text{and}\quad \lambda _k=\frac{1}{k^2\pi ^2}. \end{equation*}

The Karhunen–Loève expansion of the Brownian bridge is then given by

\begin{equation*} B_t=\sum _{k=1}^\infty \sqrt {2}\sin\!(k\pi t) Z_k \quad \text {where}\quad Z_k=\int _0^1 \sqrt {2}\sin\!(k\pi r) B_r {\mathrm {d}} r, \end{equation*}

which after integration by parts yields the expression (1.2). Applying Lemma 2.1, we can compute the covariance functions of the associated fluctuation processes $(F_t^{N,1})_{t\in [0,1]}$.

Lemma 2.2.

The fluctuation process $(F_t^{N,1})_{t\in [0,1]}$ for $N\in{\mathbb{N}}$ is a zero-mean Gaussian process with covariance function $NC_1^N$ where $C_1^N\colon [0,1]\times [0,1]\to{\mathbb{R}}$ is given by

\begin{equation*} C_1^N(s,t)=\min\!(s,t)-st- \sum _{k=1}^N\frac {2\sin\!(k\pi s)\sin\!(k\pi t)}{k^2\pi ^2}. \end{equation*}

Proof. From the definition (1.7), we see that $(F_t^{N,1})_{t\in [0,1]}$ is a zero-mean Gaussian process. Hence, it suffices to determine its covariance function. By Lemma 2.1, we have, for $k,l\in{\mathbb{N}}$,

\begin{equation*} {\mathbb {E}}\left [\left (\int _0^1\cos\!(k\pi r){\mathrm {d}} B_r\right )\left (\int _0^1\cos\!(l\pi r){\mathrm {d}} B_r\right )\right ] =\int _0^1\cos\!(k\pi r)\cos\!(l\pi r){\mathrm {d}} r= \begin {cases} \frac {1}{2} & \text {if }k=l\\[5pt] 0 & \text {otherwise} \end {cases} \end{equation*}

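and, for $t\in [0,1]$,

\begin{equation*} {\mathbb{E}}\left [B_t\int _0^1\cos\!(k\pi r){\mathrm{d}} B_r\right ] =\int _0^t\cos\!(k\pi r){\mathrm{d}} r=\frac{\sin\!(k\pi t)}{k\pi }. \end{equation*}

Therefore, from (1.1) and (1.7), we obtain that, for all $s,t\in [0,1]$,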

\begin{equation*} {\mathbb {E}}\left [F_s^{N,1}F_t^{N,1}\right ] =N\left (\min\!(s,t)-st -\sum _{k=1}^N\frac {2\sin\!(k\pi s)\sin\!(k\pi t)}{k^2\pi ^2}\right ), \end{equation*}

as claimed.

Consequently, Theorem 1.2 is a statement about the pointwise convergence of the function $NC_1^N$ in the limit $N\to \infty$.
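
To make the scaled covariance $NC_1^N$ concrete, the following Python sketch evaluates the formula of Lemma 2.2 directly and prints one diagonal and one off-diagonal value for increasing $N$. The function names and the sample points $0.3$ and $0.7$ are assumptions made for illustration only, and the printed numbers are meant for inspection rather than as a proof of any limit.

```python
import math


def c1(n_terms, s, t):
    """C_1^N(s, t) from Lemma 2.2: bridge covariance minus the first N terms."""
    partial = sum(
        2.0 * math.sin(k * math.pi * s) * math.sin(k * math.pi * t) / (k * math.pi) ** 2
        for k in range(1, n_terms + 1)
    )
    return min(s, t) - s * t - partial


def scaled_diagonal(n_terms, t):
    """N * C_1^N(t, t), the variance of the fluctuation F_t^{N,1}."""
    return n_terms * c1(n_terms, t, t)


for n_terms in (10, 100, 1000):
    # diagonal value at t = 0.3 and off-diagonal value at (s, t) = (0.3, 0.7)
    print(n_terms, scaled_diagonal(n_terms, 0.3), n_terms * c1(n_terms, 0.3, 0.7))
```

On the diagonal, the values remain between $0$ and $2/\pi ^2$, in line with the telescoping bound used for Lemma 4.1.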

For our stand-alone derivation of the values of the Riemann zeta function at even positive integers in Section 3, it is further important to note that since, by Mercer’s theorem, the representation

\begin{equation} K_B(s,t)=\min\!(s,t)-st=\sum _{k=1}^\infty \frac{2\sin\!(k\pi s)\sin\!(k\pi t)}{k^2\pi ^2} \end{equation}

converges uniformly for $s,t\in [0,1]$, the sequence $\{C_1^N\}_{N\in{\mathbb{N}}}$ converges uniformly on $[0,1]\times [0,1]$ to the zero function. It follows that, for all $n\in{\mathbb{N}}_0$,

\begin{equation} \lim _{N\to \infty }\int _0^1 C_1^N(t,t)t^{n}{\mathrm{d}} t=0. \end{equation}

2.2 The Fourier expansion

Whereas for the Karhunen–Loève expansion the sequence of random coefficients is formed by independent Gaussian random variables, it is crucial to observe that the random coefficients appearing in the Fourier expansion are not independent. Integrating by parts, we can rewrite the coefficients defined in (1.4) as

as well as, for $k\in{\mathbb{N}}$,

Applying Lemma 2.1, we see that

and, for $k,l\in{\mathbb{N}}$,

\begin{equation} {\mathbb{E}}\left [a_k a_l\right ]={\mathbb{E}}\left [b_k b_l\right ]= \begin{cases} \dfrac{1}{2k^2\pi ^2} & \text{if }k=l\\[10pt] 0 & \text{otherwise} \end{cases}. \end{equation}

Since the random coefficients are Gaussian random variables with mean zero, by (2.3) and (2.4), this implies that, for $k\in{\mathbb{N}}$,

For the remaining covariances of these random coefficients, we obtain that, for $k,l\in{\mathbb{N}}$,

Using the covariance structure of the random coefficients, we determine the covariance functions of the fluctuation processes $(F_t^{N,2})_{t\in [0,1]}$ defined in (1.8) for the Fourier series expansion.

Lemma 2.3.

The fluctuation process $(F_t^{N,2})_{t\in [0,1]}$ for $N\in{\mathbb{N}}$ is a zero-mean Gaussian process with covariance function $2NC_2^N$ where $C_2^N\colon [0,1]\times [0,1]\to{\mathbb{R}}$ is given by

\begin{equation*} C_2^N(s,t)=\min\!(s,t)-st+\frac {s^2-s}{2}+\frac {t^2-t}{2}+\frac {1}{12}- \sum _{k=1}^N\frac {\cos\!(2k\pi (t-s))}{2k^2\pi ^2}. \end{equation*}

Proof. Repeatedly applying Lemma 2.1, we compute that, for $t\in [0,1]$,

as well as, for $k\in{\mathbb{N}}$,

From (2.5) and (2.8), it follows that, for $s,t\in [0,1]$,

whereas (2.7) and (2.9) imply that

\begin{equation*} {\mathbb {E}}\left [\frac {1}{2}a_0\sum _{k=1}^N a_k \cos\!(2k\pi t) -B_s\sum _{k=1}^N a_k \cos\!(2k\pi t)\right ] =-\sum _{k=1}^N\frac {\cos\!(2k\pi s)\cos\!(2k\pi t)}{2k^2\pi ^2} \end{equation*}

as well as

\begin{equation*} {\mathbb {E}}\left [B_s\sum _{k=1}^N b_k \sin\!(2k\pi t)\right ] =\sum _{k=1}^N\frac {\sin\!(2k\pi s)\sin\!(2k\pi t)}{2k^2\pi ^2}. \end{equation*}

It remains to observe that, by (2.6) and (2.7),

\begin{align*} &{\mathbb{E}}\left [\left (\sum _{k=1}^N\left (a_k\cos\!(2k\pi s)+b_k\sin\!(2k\pi s)\right )\right ) \left (\sum _{k=1}^N\left (a_k\cos\!(2k\pi t)+b_k\sin\!(2k\pi t)\right )\right )\right ]\\[5pt] &\qquad = \sum _{k=1}^N\frac{\cos\!(2k\pi s)\cos\!(2k\pi t)+\sin\!(2k\pi s)\sin\!(2k\pi t)}{2k^2\pi ^2}. \end{align*}

Using the identity

\begin{equation*} \cos\!(2k\pi s)\cos\!(2k\pi t)+\sin\!(2k\pi s)\sin\!(2k\pi t)=\cos\!(2k\pi (t-s)) \end{equation*}

and recalling the definition (1.8) of the fluctuation process $(F_t^{N,2})_{t\in [0,1]}$ for the Fourier expansion, we obtain the desired result.
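
The formula for $C_2^N$ in Lemma 2.3 can be checked numerically with the Python sketch below. It assumes the classical Fourier series $\sum _{k=1}^\infty \frac{\cos (2k\pi x)}{k^2\pi ^2}=x^2-x+\frac{1}{6}$ for $x\in [0,1]$, which is not derived in this section, so that $C_2^N(s,t)$ is the tail of that series and is bounded in absolute value by $\frac{1}{2\pi ^2N}$. The function names are hypothetical.

```python
import math


def c2(n_terms, s, t):
    """C_2^N(s, t) as stated in Lemma 2.3."""
    partial = sum(
        math.cos(2.0 * k * math.pi * (t - s)) / (2.0 * (k * math.pi) ** 2)
        for k in range(1, n_terms + 1)
    )
    return (min(s, t) - s * t + (s * s - s) / 2.0 + (t * t - t) / 2.0
            + 1.0 / 12.0 - partial)


def tail_bound(n_terms):
    """Upper bound 1 / (2 pi^2 N) for the sum over k > N of 1 / (2 k^2 pi^2)."""
    return 1.0 / (2.0 * math.pi ** 2 * n_terms)


for n_terms in (5, 50, 500):
    print(n_terms, c2(n_terms, 0.2, 0.7), tail_bound(n_terms))
```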

By combining Corollary 1.3, the resolution (1.9) to the Basel problem and the representation (2.1), we can determine the pointwise limit of $2N C_2^N$ as $N\to \infty$. We leave further considerations until Section 4.2 to demonstrate that the identity (1.9) is really a consequence of our analysis.

2.3 The polynomial expansion

As pointed out in the introduction and as discussed in detail in [Reference Foster, Lyons and Oberhauser12], the polynomial expansion of the Brownian bridge is a type of Karhunen–Loève expansion in the weighted $L^2({\mathbb{P}})$ space with weight function $w$ on $(0,1)$ defined by $w(t)=\frac{1}{t(1-t)}$.

An alternative derivation of the polynomial expansion is given in [Reference Habermann19] by considering iterated Kolmogorov diffusions. The iterated Kolmogorov diffusion of step $N\in{\mathbb{N}}$ pairs a one-dimensional Brownian motion $(W_t)_{t\in [0,1]}$ with its first $N-1$ iterated time integrals, that is, it is the stochastic process in ${\mathbb{R}}^N$ of the form

The shifted Legendre polynomial $Q_k$ of degree $k\in{\mathbb{N}}$ on the interval $[0,1]$ is defined in terms of the standard Legendre polynomial $P_k$ of degree $k$ on $[-1,1]$ by, for $t\in [0,1]$,

\begin{equation*} Q_k(t)=P_k(2t-1). \end{equation*}

It is then shown that the first component of an iterated Kolmogorov diffusion of step $N\in{\mathbb{N}}$ conditioned to return to $0\in{\mathbb{R}}^N$ in time $1$ has the same law as the stochastic process

\begin{equation*} \left (B_t-\sum _{k=1}^{N-1}(2k+1)\int _0^tQ_k(r){\mathrm {d}} r \int _0^1Q_k(r){\mathrm {d}} B_r\right )_{t\in [0,1]}. \end{equation*}

The polynomial expansion (1.5) is an immediate consequence of the result [Reference Habermann19, Theorem 1.4] which states that these first components of the conditioned iterated Kolmogorov diffusions converge weakly as $N\to \infty$ to the zero process.

As for the Karhunen–Loève expansion discussed above, the sequence $\{c_k\}_{k\in{\mathbb{N}}}$ of random coefficients defined by (1.6) is again formed by independent Gaussian random variables. To see this, we first recall the following identities for Legendre polynomials [Reference Arfken and Weber1, (12.23), (12.31), (12.32)] which in terms of the shifted Legendre polynomials read as, for $k\in{\mathbb{N}}$,

In particular, it follows that, for all $k\in{\mathbb{N}}$,

which, by Lemma 2.1, implies that, for $k,l\in{\mathbb{N}}$,

\begin{equation*} {\mathbb {E}}\left [c_k c_l\right ] ={\mathbb {E}}\left [\left (\int _0^1 Q_k(r){\mathrm {d}} B_r\right )\left (\int _0^1 Q_l(r){\mathrm {d}} B_r\right )\right ] =\int _0^1 Q_k(r) Q_l(r){\mathrm {d}} r= \begin {cases} \dfrac {1}{2k+1} & \text {if } k=l\\[6pt] 0 & \text {otherwise} \end {cases}. \end{equation*}

Since the random coefficients are Gaussian with mean zero, this establishes their independence.
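
The orthogonality relation behind this covariance computation can be checked numerically. The Python sketch below builds the shifted Legendre polynomials from Bonnet's recurrence for the standard Legendre polynomials, assuming the usual convention $Q_k(t)=P_k(2t-1)$, and approximates the integrals with a composite Simpson rule. All function names and the number of quadrature intervals are illustrative choices.

```python
def legendre(k, x):
    """Standard Legendre polynomial P_k(x) via Bonnet's recurrence."""
    previous, current = 1.0, x
    if k == 0:
        return previous
    for n in range(1, k):
        previous, current = current, ((2 * n + 1) * x * current - n * previous) / (n + 1)
    return current


def shifted_legendre(k, t):
    """Q_k(t) = P_k(2t - 1) on [0, 1]."""
    return legendre(k, 2.0 * t - 1.0)


def simpson(func, intervals=2000):
    """Composite Simpson rule on [0, 1]; `intervals` must be even."""
    h = 1.0 / intervals
    total = func(0.0) + func(1.0)
    for i in range(1, intervals):
        total += (4 if i % 2 else 2) * func(i * h)
    return total * h / 3.0


def inner_product(k, l):
    """Approximate the integral of Q_k(r) Q_l(r) over [0, 1]."""
    return simpson(lambda t: shifted_legendre(k, t) * shifted_legendre(l, t))


for k in range(1, 4):
    print(k, inner_product(k, k), 1.0 / (2 * k + 1))
```

Since each $Q_k$ with $k\geq 1$ is orthogonal to the constant polynomial and hence integrates to zero over $[0,1]$, the correction term $F(1)G(1)$ from Lemma 2.1 vanishes in this computation.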

The fluctuation processes $(F_t^{N,3})_{t\in [0,1]}$ for the polynomial expansion defined by

\begin{equation} F_t^{N,3}=\sqrt{N}\left (B_t-\sum _{k=1}^{N-1}(2k+1) \int _0^t Q_k(r){\mathrm{d}} r \int _0^1 Q_k(r){\mathrm{d}} B_r\right ) \end{equation}

are studied in [Reference Habermann19]. According to [Reference Habermann19, Theorem 1.6], they converge in finite dimensional distributions as $N\to \infty$ to the collection $(F_t^3)_{t\in [0,1]}$ of independent Gaussian random variables with mean zero and variance

that is, the variance function of the limit fluctuations is given by a scaled semicircle.

3. Particular values of the Riemann zeta function

We demonstrate how to use the Karhunen–Loève expansion of the Brownian bridge or, more precisely, the series representation arising from Mercer’s theorem for the covariance function of the Brownian bridge to determine the values of the Riemann zeta function at even positive integers. The analysis further feeds directly into Section 4.1 where we characterise the limit fluctuations for the Karhunen–Loève expansion.

The crucial ingredient is the observation (2.2) from Section 2, which implies that, for all $n\in{\mathbb{N}}_0$,

\begin{equation} \sum _{k=1}^\infty \int _0^1 \frac{2\left (\sin\!(k\pi t)\right )^2}{k^2\pi ^2}t^{n}{\mathrm{d}} t =\int _0^1\left (t-t^2\right )t^{n}{\mathrm{d}} t =\frac{1}{(n+2)(n+3)}. \end{equation}

For completeness, we recall that the Riemann zeta function $\zeta \colon{\mathbb{C}}\setminus \{1\}\to{\mathbb{C}}$ analytically continues the sum of the Dirichlet series

\begin{equation*} \zeta (s)=\sum _{k=1}^\infty \frac {1}{k^s}. \end{equation*}

When discussing its values at even positive integers, we encounter the Bernoulli numbers. The Bernoulli numbers $B_n$, for $n\in{\mathbb{N}}$, are signed rational numbers defined by an exponential generating function via, for $t\in (\!-\!2\pi,2\pi )$,

\begin{equation*} \frac{t}{{\mathrm{e}}^t-1}=1+\sum _{n=1}^\infty \frac{B_n t^n}{n!}, \end{equation*}

see Borevich and Shafarevich [Reference Borevich and Shafarevich3, Chapter 5.8]. These numbers play an important role in number theory and analysis. For instance, they feature in the series expansion of the (hyperbolic) tangent and the (hyperbolic) cotangent, and they appear in formulae by Bernoulli and by Faulhaber for the sum of positive integer powers of the first $k$ positive integers. The characterisation of the Bernoulli numbers which is essential to our analysis is that, according to [Reference Borevich and Shafarevich3, Theorem 5.8.1], they satisfy and are uniquely given by the recurrence relations

\begin{equation} 1+\sum _{n=1}^{m}\binom{m+1}{n}B_n=0\quad \text{for }m\in{\mathbb{N}}. \end{equation}

In particular, choosing $m=1$ yields $1+2B_1=0$, which shows that

\begin{equation*} B_1=-\frac{1}{2}. \end{equation*}

Moreover, since the function defined by, for $t\in (\!-\!2\pi,2\pi )$,

\begin{equation*} t\mapsto \frac{t}{{\mathrm{e}}^t-1}+\frac{t}{2} \end{equation*}

is an even function, we obtain $B_{2n+1}=0$ for all $n\in{\mathbb{N}}$, see [Reference Borevich and Shafarevich3, Theorem 5.8.2]. It follows from (3.2) that the Bernoulli numbers $B_{2n}$ indexed by even positive integers are uniquely characterised by the recurrence relations

\begin{equation} \sum _{n=1}^{m+1}\binom{2m+3}{2n}B_{2n}=\frac{2m+1}{2}\quad \text{for }m\in{\mathbb{N}}_0. \end{equation}

These recurrence relations are our tool for identifying the Bernoulli numbers when determining the values of the Riemann zeta function at even positive integers.

The starting point for our analysis is (3.1), and we first illustrate how it allows us to compute $\zeta (2)$. Taking $n=0$ in (3.1), multiplying through by $\pi ^2$, and using that $\int _0^1\left (\sin\!(k\pi t)\right )^2{\mathrm{d}} t=\frac{1}{2}$ for $k\in{\mathbb{N}}$, we deduce that

\begin{equation*} \zeta (2)=\sum _{k=1}^\infty \frac {1}{k^2} =\sum _{k=1}^\infty \int _0^1 \frac {2\left (\sin\!(k\pi t)\right )^2}{k^2} {\mathrm {d}} t =\frac {\pi ^2}{6}. \end{equation*}

We observe that this is exactly the identity obtained by applying the general result

\begin{equation*} \int _0^1 K(t,t){\mathrm {d}} t=\sum _{k=1}^\infty \lambda _k \end{equation*}

for a representation arising from Mercer’s theorem to the representation for the covariance function $K_B$ of the Brownian bridge.

For working out the values for the remaining even positive integers, we iterate over the degree of the moment in (3.1). While for the remainder of this section it suffices to only consider the even moments, we derive the following recurrence relation and the explicit expression both for the even and for the odd moments as these are needed in Section 4.1. For $k\in{\mathbb{N}}$ and $n\in{\mathbb{N}}_0$, we set

\begin{equation*} e_{k,n}=\int _0^1 2\left (\sin\!(k\pi t)\right )^2t^{n}{\mathrm{d}} t. \end{equation*}

Lemma 3.1.

For all $k\in{\mathbb{N}}$ and all $n\in{\mathbb{N}}$ with $n\geq 2$, we have

\begin{equation*} e_{k,n}=\frac{1}{n+1}-\frac{n(n-1)}{4k^2\pi ^2}e_{k,n-2} \end{equation*}

subject to the initial conditions

\begin{equation*} e_{k,0}=1\quad \text{and}\quad e_{k,1}=\frac{1}{2}. \end{equation*}

Proof. For $k\in{\mathbb{N}}$, the values for $e_{k,0}$ and $e_{k,1}$ can be verified directly. For $n\in{\mathbb{N}}$ with $n\geq 2$, we integrate by parts twice to obtain

\begin{align*} e_{k,n} &=\int _0^1 2\left (\sin\!(k\pi t)\right )^2t^{n}{\mathrm{d}} t\\[5pt] &=1-\int _0^1\left (t-\frac{\sin\!(2k\pi t)}{2k\pi }\right ) nt^{n-1}{\mathrm{d}} t\\[5pt] &=1-\frac{n}{2}+\frac{n(n-1)}{2} \int _0^1\left (t^2- \frac{\left (\sin\!(k\pi t)\right )^2}{k^2\pi ^2}\right )t^{n-2}{\mathrm{d}} t \\[5pt] &=\frac{2-n}{2}+\frac{n(n-1)}{2} \left (\frac{1}{n+1}-\frac{1}{2k^2\pi ^2}e_{k,n-2}\right )\\[5pt] &=\frac{1}{n+1}-\frac{n(n-1)}{4k^2\pi ^2}e_{k,n-2}, \end{align*}

as claimed.

Iteratively applying the recurrence relation, we find the following explicit expression, which despite its involvedness is exactly what we need.

Lemma 3.2.

For all $k\in{\mathbb{N}}$ and $m\in{\mathbb{N}}_0$, we have

\begin{align*} e_{k,2m}&=\frac{1}{2m+1}+ \sum _{n=1}^m\frac{(\!-\!1)^n(2m)!}{(2(m-n)+1)!2^{2n}}\frac{1}{k^{2n}\pi ^{2n}} \quad \text{and}\\[5pt] e_{k,2m+1}&=\frac{1}{2m+2}+ \sum _{n=1}^m\frac{(\!-\!1)^n(2m+1)!}{(2(m-n)+2)!2^{2n}}\frac{1}{k^{2n}\pi ^{2n}}. \end{align*}

Proof. We proceed by induction over $m$. Since $e_{k,0}=1$ and $e_{k,1}=\frac{1}{2}$ for all $k\in{\mathbb{N}}$, the expressions are true for $m=0$ with the sums being understood as empty sums in this case. Assuming that the result is true for some fixed $m\in{\mathbb{N}}_0$, we use Lemma 3.1 to deduce that

\begin{align*} e_{k,2m+2}&=\frac{1}{2m+3}-\frac{(2m+2)(2m+1)}{4k^2\pi ^2}e_{k,2m}\\[5pt] &=\frac{1}{2m+3}-\frac{2m+2}{4k^2\pi ^2}- \sum _{n=1}^m\frac{(\!-\!1)^n(2m+2)!}{(2(m-n)+1)!2^{2n+2}}\frac{1}{k^{2n+2}\pi ^{2n+2}}\\[5pt] &=\frac{1}{2m+3}+ \sum _{n=1}^{m+1}\frac{(\!-\!1)^n(2m+2)!}{(2(m-n)+3)!2^{2n}}\frac{1}{k^{2n}\pi ^{2n}} \end{align*}

as well as

\begin{align*} e_{k,2m+3}&=\frac{1}{2m+4}-\frac{(2m+3)(2m+2)}{4k^2\pi ^2}e_{k,2m+1}\\[5pt] &=\frac{1}{2m+4}-\frac{2m+3}{4k^2\pi ^2}- \sum _{n=1}^m\frac{(\!-\!1)^n(2m+3)!}{(2(m-n)+2)!2^{2n+2}}\frac{1}{k^{2n+2}\pi ^{2n+2}}\\[5pt] &=\frac{1}{2m+4}+ \sum _{n=1}^{m+1}\frac{(\!-\!1)^n(2m+3)!}{(2(m-n)+4)!2^{2n}}\frac{1}{k^{2n}\pi ^{2n}}, \end{align*}

which settles the induction step.
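
The recurrence of Lemma 3.1 and the closed form of Lemma 3.2 can be compared against a direct quadrature of $\int _0^1 2\left (\sin\!(k\pi t)\right )^2t^{n}{\mathrm{d}} t$. The Python sketch below does this for small $k$ and $n$. The function names, the Simpson rule and the chosen ranges are assumptions made for illustration, and the integrand is taken from the first line of the proof of Lemma 3.1.

```python
import math


def e_recurrence(k, n):
    """e_{k,n} from the recurrence of Lemma 3.1 with e_{k,0} = 1, e_{k,1} = 1/2."""
    value = 1.0 if n % 2 == 0 else 0.5
    start = 2 if n % 2 == 0 else 3
    for j in range(start, n + 1, 2):
        value = 1.0 / (j + 1) - j * (j - 1) / (4.0 * (k * math.pi) ** 2) * value
    return value


def e_explicit(k, n):
    """e_{k,n} from the closed form of Lemma 3.2, where n = 2m or n = 2m + 1."""
    m, odd = divmod(n, 2)
    total = 1.0 / (n + 1)
    for j in range(1, m + 1):
        coefficient = math.factorial(n) / (math.factorial(2 * (m - j) + 1 + odd) * 4.0 ** j)
        total += (-1) ** j * coefficient / (k * math.pi) ** (2 * j)
    return total


def e_quadrature(k, n, intervals=4000):
    """Composite Simpson approximation of the defining integral on [0, 1]."""
    h = 1.0 / intervals

    def integrand(t):
        return 2.0 * math.sin(k * math.pi * t) ** 2 * t ** n

    total = integrand(0.0) + integrand(1.0)
    for i in range(1, intervals):
        total += (4 if i % 2 else 2) * integrand(i * h)
    return total * h / 3.0


for n in range(0, 6):
    print(n, e_recurrence(1, n), e_explicit(1, n), e_quadrature(1, n))
```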

Focusing on the even moments for the remainder of this section, we see that by (3.1), for all $m\in{\mathbb{N}}_0$,

\begin{equation*} \sum _{k=1}^\infty \frac {e_{k,2m}}{k^2\pi ^2} =\frac {1}{(2m+2)(2m+3)}. \end{equation*}

From Lemma 3.2, it follows that

\begin{equation*} \sum _{k=1}^\infty \frac {1}{k^2\pi ^2} \left (\sum _{n=0}^m\frac {(\!-\!1)^n(2m)!}{(2(m-n)+1)!2^{2n}}\frac {1}{k^{2n}\pi ^{2n}}\right ) =\frac {1}{(2m+2)(2m+3)}. \end{equation*}

Since $\sum _{k=1}^\infty k^{-2n}$ converges for all $n\in{\mathbb{N}}$, we can rearrange sums to obtain

\begin{equation*} \sum _{n=0}^m\frac {(\!-\!1)^n(2m)!}{(2(m-n)+1)!2^{2n}} \left (\sum _{k=1}^\infty \frac {1}{k^{2n+2}\pi ^{2n+2}}\right ) =\frac {1}{(2m+2)(2m+3)}, \end{equation*}

which in terms of the Riemann zeta function and after reindexing the sum rewrites as

\begin{equation*} \sum _{n=1}^{m+1}\frac {(\!-\!1)^{n+1}(2m)!}{(2(m-n)+3)!2^{2n-2}} \frac {\zeta (2n)}{\pi ^{2n}} =\frac {1}{(2m+2)(2m+3)}. \end{equation*}

Multiplying through by $(2m+1)(2m+2)(2m+3)$ shows that, for all $m\in{\mathbb{N}}_0$,

\begin{equation*} \sum _{n=1}^{m+1}\binom {2m+3}{2n} \left (\frac {(\!-\!1)^{n+1}2(2n)!}{\left (2\pi \right )^{2n}}\zeta (2n)\right ) =\frac {2m+1}{2}. \end{equation*}

Comparing the last expression with the characterisation (3.3) of the Bernoulli numbers $B_{2n}$ indexed by even positive integers implies that

\begin{equation*} B_{2n}=\frac{(\!-\!1)^{n+1}2(2n)!}{\left (2\pi \right )^{2n}}\zeta (2n), \end{equation*}

that is, we have established that, for all $n\in{\mathbb{N}}$,

\begin{equation*} \zeta (2n)=\frac{(\!-\!1)^{n+1}\left (2\pi \right )^{2n}B_{2n}}{2(2n)!}. \end{equation*}
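
The comparison with the Bernoulli recurrence can be reproduced numerically. The Python sketch below solves the even-index recurrence $\sum _{n=1}^{m+1}\binom{2m+3}{2n}B_{2n}=\frac{2m+1}{2}$ in exact rational arithmetic and then evaluates the classical closed form $\zeta (2n)=\frac{(-1)^{n+1}(2\pi )^{2n}B_{2n}}{2(2n)!}$ next to a truncated Dirichlet series. The function names and the truncation at $10^5$ terms are illustrative assumptions.

```python
from fractions import Fraction
from math import comb, factorial, pi


def even_bernoulli(count):
    """Return [B_2, B_4, ..., B_{2*count}] from the even-index recurrence
    sum_{n=1}^{m+1} C(2m+3, 2n) B_{2n} = (2m+1)/2 for m = 0, 1, ..."""
    values = []
    for m in range(count):
        known = sum(comb(2 * m + 3, 2 * (i + 1)) * b for i, b in enumerate(values))
        # The n = m + 1 term carries the coefficient C(2m+3, 2m+2) = 2m + 3.
        values.append((Fraction(2 * m + 1, 2) - known) / (2 * m + 3))
    return values


def zeta_even(n, bernoulli_2n):
    """zeta(2n) = (-1)^(n+1) (2 pi)^(2n) B_{2n} / (2 (2n)!)."""
    return (-1) ** (n + 1) * (2 * pi) ** (2 * n) * float(bernoulli_2n) / (2 * factorial(2 * n))


bernoulli = even_bernoulli(4)
for n, b in enumerate(bernoulli, start=1):
    partial = sum(1.0 / k ** (2 * n) for k in range(1, 100001))
    print(n, b, zeta_even(n, b), partial)
```

Exact fractions are used for the recurrence so that the Bernoulli numbers are not affected by rounding; only the final evaluation involving $\pi$ is carried out in floating point.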

4. Fluctuations for the trigonometric expansions of the Brownian bridge

We first prove Theorem 1.2 and Corollary 1.3 which we use to determine the pointwise limits for the covariance functions of the fluctuation processes for the Karhunen–Loève expansion and of the fluctuation processes for the Fourier series expansion, and then we deduce Theorem 1.1.

4.1 Fluctuations for the Karhunen–Loève expansion

For the moment analysis initiated in the previous section to allow us to identify the limit of $NC_1^N$ as $N\to \infty$ on the diagonal away from its endpoints, we apply the Arzelà–Ascoli theorem to guarantee continuity of the limit away from the endpoints. To this end, we first need to establish the uniform boundedness of two families of functions. Recall that the functions $C_1^N\colon [0,1]\times [0,1]\to{\mathbb{R}}$ are defined in Lemma 2.2.

Lemma 4.1.

The family $\{NC_1^N(t,t)\colon N\in{\mathbb{N}}\text{ and }t\in [0,1]\}$ is uniformly bounded.

Proof. Combining the expression for $C_1^N(t,t)$ from Lemma 2.2 and the representation (2.1) for $K_B$ arising from Mercer’s theorem, we see that

\begin{equation*} NC_1^N(t,t)=N\sum _{k=N+1}^\infty \frac {2\left (\sin\!(k\pi t)\right )^2}{k^2\pi ^2}. \end{equation*}

In particular, for all $N\in{\mathbb{N}}$ and all $t\in [0,1]$, we have

\begin{equation*} \left |NC_1^N(t,t)\right |\leq N\sum _{k=N+1}^\infty \frac {2}{k^2\pi ^2}. \end{equation*}

We further observe that

\begin{equation} \lim _{M\to \infty }N\sum _{k=N+1}^M\frac{1}{k^2}\leq \lim _{M\to \infty } N\sum _{k=N+1}^M\left (\frac{1}{k-1}-\frac{1}{k}\right ) =\lim _{M\to \infty }\left (1-\frac{N}{M}\right )=1. \end{equation}

It follows that, for all $N\in{\mathbb{N}}$ and all $t\in [0,1]$,

\begin{equation*} \left |NC_1^N(t,t)\right |\leq \frac {2}{\pi ^2}, \end{equation*}

which is illustrated in Figure 3 and which establishes the claimed uniform boundedness.

Figure 3. Profiles of $t\mapsto NC_1^N(t,t)$ plotted for $N\in \{5, 25, 100\}$ along with $t\mapsto \frac{2}{\pi ^2}$.
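The bound $\frac{2}{\pi ^2}$ can also be checked numerically. The following minimal sketch evaluates $NC_1^N(t,t)$ through the finite-sum expression $C_1^N(t,t)=t-t^2-\sum _{k=1}^N\frac{2(\sin (k\pi t))^2}{k^2\pi ^2}$ from Lemma 2.2 for the values of $N$ shown in Figure 3; the evaluation grid and the function name are assumptions made for illustration.

```python
import math


def scaled_diagonal(N, t):
    """N * C_1^N(t, t) with C_1^N(t, t) = t - t^2 - sum_{k<=N} 2 sin^2(k pi t) / (k pi)^2."""
    partial = sum(
        2.0 * math.sin(k * math.pi * t) ** 2 / (k * math.pi) ** 2
        for k in range(1, N + 1)
    )
    return N * (t - t * t - partial)


BOUND = 2.0 / math.pi ** 2
grid = [i / 400 for i in range(401)]  # hypothetical evaluation grid on [0, 1]

maxima = {N: max(scaled_diagonal(N, t) for t in grid) for N in (5, 25, 100)}
minima = {N: min(scaled_diagonal(N, t) for t in grid) for N in (5, 25, 100)}

for N in maxima:
    # Each maximum over the grid should stay below 2 / pi^2.
    print(N, maxima[N], BOUND)
```

A grid evaluation of this kind only samples the family at finitely many points, so it illustrates the lemma rather than proving it.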

Lemma 4.2.

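Fix $\varepsilon > 0$. The family

\begin{equation*} \left \{N\frac {{\mathrm {d}}}{{\mathrm {d}} t}C_1^N(t,t)\colon N\in {\mathbb {N}}\text { and }t\in [\varepsilon,1-\varepsilon ]\right \} \end{equation*}

is uniformly bounded.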

Proof. According to Lemma 2.2, we have, for all $t\in [0,1]$,

\begin{equation*} C_1^N(t,t)=t-t^2-\sum _{k=1}^N \frac {2\left (\sin\!(k\pi t)\right )^2}{k^2\pi ^2}, \end{equation*}

which implies that

\begin{equation*} N\frac {{\mathrm {d}}}{{\mathrm {d}} t}C_1^N(t,t)=N\left (1-2t-\sum _{k=1}^N\frac {2\sin\!(2k\pi t)}{k\pi }\right ). \end{equation*}

Figure 4. Profiles of $t\mapsto N(\frac{\pi -t}{2}-\sum \limits _{k=1}^N \frac{\sin\!(kt)}{k})$ plotted for $N\in \{5, 25, 100, 1000\}$ on $[\varepsilon, 2\pi - \varepsilon ]$ with $\varepsilon = 0.1$.
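The derivative formula above, and its link to the function plotted in Figure 4, can be checked numerically. Under the substitution $s=2\pi t$ one has $1-2t-\sum _{k=1}^N\frac{2\sin (2k\pi t)}{k\pi }=\frac{2}{\pi }\left (\frac{\pi -s}{2}-\sum _{k=1}^N\frac{\sin (ks)}{k}\right )$. The sketch below compares the closed-form derivative with a central finite difference and with the rescaled expression; the helper names and the step size are illustrative assumptions.

```python
import math


def diag(N, t):
    """C_1^N(t, t) = t - t^2 - sum_{k<=N} 2 sin^2(k pi t) / (k pi)^2."""
    return t - t * t - sum(
        2.0 * math.sin(k * math.pi * t) ** 2 / (k * math.pi) ** 2
        for k in range(1, N + 1)
    )


def diag_derivative(N, t):
    """Closed form 1 - 2t - sum_{k<=N} 2 sin(2 k pi t) / (k pi)."""
    return 1.0 - 2.0 * t - sum(
        2.0 * math.sin(2 * k * math.pi * t) / (k * math.pi)
        for k in range(1, N + 1)
    )


def sawtooth_remainder(N, s):
    """(pi - s) / 2 - sum_{k<=N} sin(k s) / k."""
    return (math.pi - s) / 2.0 - sum(math.sin(k * s) / k for k in range(1, N + 1))


def central_difference(f, t, h=1e-5):
    """Second-order finite-difference approximation of f'(t)."""
    return (f(t + h) - f(t - h)) / (2.0 * h)


N = 7
for t in (0.1, 0.3, 0.5, 0.8):
    numeric = central_difference(lambda u: diag(N, u), t)
    rescaled = 2.0 / math.pi * sawtooth_remainder(N, 2.0 * math.pi * t)
    print(t, diag_derivative(N, t), numeric, rescaled)
```

The three printed values in each row should agree up to the finite-difference error.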

The desired result then follows by showing that, for $\varepsilon > 0$ fixed, the family

\begin{equation*} \left \{N\left (\frac {\pi -t}{2}-\sum _{k=1}^N\frac {\sin\!(kt)}{k}\right )\colon N\in {\mathbb {N}}\text { and }t\in [\varepsilon,2\pi -\varepsilon ]\right \} \end{equation*}

is uniformly bounded, as illustrated in Figure 4. Following the usual approach, we use the Dirichlet kernel, for $N\in{\mathbb{N}}$,

\begin{equation*} \sum _{k=-N}^N\operatorname {e}^{\operatorname {i} kt}=1+\sum _{k=1}^N2\cos\!(kt)= \frac {\sin\!\left (\left (N+\frac {1}{2}\right )t\right )}{\sin\!\left (\frac {t}{2}\right )} \end{equation*}

to write, for $t\in (0,2\pi )$,

\begin{equation*} \frac {\pi -t}{2}-\sum _{k=1}^N\frac {\sin\!(kt)}{k} =-\frac {1}{2}\int _\pi ^t\left (1+\sum _{k=1}^N2\cos\!(ks)\right ){\mathrm {d}} s =-\frac {1}{2}\int _\pi ^t\frac {\sin\!\left (\left (N+\frac {1}{2}\right )s\right )} {\sin\!\left (\frac {s}{2}\right )}{\mathrm {d}} s. \end{equation*}

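Both the closed form of the Dirichlet kernel and the integral representation above can be checked numerically. The sketch below is an illustration only: it uses a hand-written composite Simpson rule, and the function names, the number of subintervals and the sample points are assumptions.

```python
import math


def dirichlet_sum(N, t):
    """1 + sum_{k<=N} 2 cos(k t)."""
    return 1.0 + 2.0 * sum(math.cos(k * t) for k in range(1, N + 1))


def dirichlet_closed(N, t):
    """sin((N + 1/2) t) / sin(t / 2), valid for t in (0, 2 pi)."""
    return math.sin((N + 0.5) * t) / math.sin(t / 2.0)


def remainder(N, t):
    """(pi - t) / 2 - sum_{k<=N} sin(k t) / k."""
    return (math.pi - t) / 2.0 - sum(math.sin(k * t) / k for k in range(1, N + 1))


def simpson(f, a, b, n=2000):
    """Composite Simpson rule with n (even) subintervals; signed if b < a."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0


N = 10
for t in (1.0, 2.5, 5.0):
    # Right-hand side: -1/2 times the integral of the kernel from pi to t.
    integral = -0.5 * simpson(lambda s: dirichlet_closed(N, s), math.pi, t)
    print(t, remainder(N, t), integral)
```

Since the kernel is smooth on any closed subinterval of $(0,2\pi )$, the quadrature is accurate as long as $t$ stays away from $0$ and $2\pi$.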

Integration by parts yields

\begin{equation*} -\frac {1}{2}\int _\pi ^t\frac {\sin\!\left (\left (N+\frac {1}{2}\right )s\right )} {\sin\!\left (\frac {s}{2}\right )}{\mathrm {d}} s =\frac {\cos\!\left (\left (N+\frac {1}{2}\right )t\right )}{(2N+1)\sin\!\left (\frac {t}{2}\right )} -\frac {1}{2N+1}\int _\pi ^t\cos\!\left (\left (N+\frac {1}{2}\right )s\right )\frac {{\mathrm {d}}}{{\mathrm {d}} s} \left (\frac {1}{\sin\!\left (\frac {s}{2}\right )}\right ){\mathrm {d}} s. \end{equation*}

By the first mean value theorem for definite integrals, it follows that for $t\in (0,\pi ]$ fixed, there exists $\xi \in [t,\pi ]$, whereas for $t\in [\pi,2\pi )$ fixed, there exists $\xi \in [\pi,t]$, such that

\begin{equation*} -\frac {1}{2}\int _\pi ^t\frac {\sin\!\left (\left (N+\frac {1}{2}\right )s\right )} {\sin\!\left (\frac {s}{2}\right )}{\mathrm {d}} s =\frac {\cos\!\left (\left (N+\frac {1}{2}\right )t\right )}{(2N+1)\sin\!\left (\frac {t}{2}\right )} -\frac {\cos\!\left (\left (N+\frac {1}{2}\right )\xi \right )}{2N+1} \left (\frac {1}{\sin\!\left (\frac {t}{2}\right )}-1\right ). \end{equation*}

Since $\left |\cos\!\left (\left (N+\frac{1}{2}\right )\xi \right )\right |$ is bounded above by one independently of $\xi$ and as $\frac{t}{2}\in (0,\pi )$ for $t\in (0,2\pi )$ implies that $0<\sin\!\left (\frac{t}{2}\right )\leq 1$, we conclude that, for all $N\in{\mathbb{N}}$ and for all $t\in (0,2\pi )$,

\begin{equation*} N\left |\frac {\pi -t}{2}-\sum _{k=1}^N\frac {\sin\!(kt)}{k}\right | \leq \frac {2N}{(2N+1)\sin\!\left (\frac {t}{2}\right )}, \end{equation*}