Introduction

Implementation science benefits from theories, models, or frameworks (referred to as TMFs), which are used to (a) guide decisions, hypothesis generation, measure selection, and analyses; (b) enhance generalizability; and (c) improve outcomes or at least understanding [Reference Tabak, Khoong, Chambers and Brownson1,Reference Esmail, Hanson and Holroyd-Leduc2]. Use of a TMF has become a criterion for funding implementation science proposals. Given their critical role, TMFs should also evolve over time to reflect research findings and newer applications [Reference Kislov, Pope, Martin and Wilson3]. Keeping pace with TMFs, as well as operationalizing and applying them, can be challenging [Reference Birken, Rohweder and Powell4].

Because there are so many TMFs in implementation science – over 150 in one recent review [Reference Strifler, Cardoso and McGowan5] – and because they differ in type, purpose, and uses, it is helpful to have ways to organize, understand, and help researchers select the most appropriate TMFs for a given purpose [Reference Tabak, Khoong, Chambers and Brownson1]. Equally important, when researchers select or describe a TMF, they should review the most current writings and resources, rather than rely solely on the seminal paper or a review, since TMFs evolve as they are applied to different contexts and behaviors. For example, the PARIHS framework was modified, updated, and reintroduced as iPARIHS [Reference Harvey and Kitson6]. This issue is important because as Kislov et al. have articulated, TMFs need to evolve in response to data, new challenges, and changing context, rather than becoming ossified [Reference Kislov, Pope, Martin and Wilson3].

Given the complexity of the issues involved and magnitude of the literature on TMFs, researchers frequently – and understandably – refer to foundational papers or reviewer characterizations without searching for more recent articles on a TMF. Early categorizations then get perpetuated through implementation science courses, training programs, textbooks, later studies, review sections, and reviews of reviews. Consequently, researchers may not recognize the evolution, refinement, or expansion of TMFs over time as they are adapted, expanded, empirically tested, or applied in new ways, settings, or populations.

This has occurred with the RE-AIM framework [Reference Glasgow, Harden and Gaglio7,Reference Glasgow, Estabrooks and Ory8]. Often reviews of TMFs put firm boundaries around types of TMFs (e.g., as either explanatory, process, or outcome; can be used only for evaluation vs planning vs improvement). In reality, these boundaries are often blurry, and some TMFs may fit in more than one category. RE-AIM concepts and measures of key constructs have evolved since the initial RE-AIM publication, in line with emerging data and advances in research methods and implementation science more broadly. These issues and resulting misconceptions are discussed below.

It is important to make efforts to prevent and reduce confusion concerning the application of TMFs. Explicitly clarifying misconceptions, either because of misunderstanding or evolution of a TMF over time can accelerate the advancement of implementation science. Advancing understanding will reduce unintended consequences such as pigeonholing the application of TMFs, stifling innovation, and negatively impacting investigators.

The purposes of this paper are to (1) summarize key ways that RE-AIM has evolved over time and how this relates to misinterpretations; (2) identify specific misconceptions about RE-AIM and provide corrections; (3) identify resources to clear up confusion around use of RE-AIM; and (4) offer recommendations for TMF developers, reviewers, authors, and users of scientific literature to reduce the frequency of or mitigate the impact of TMF misinterpretations.

The Evolution of RE-AIM

Many TMFs evolve over time both as a result of their expanded use across settings, populations, and topic areas and also to accommodate scientific developments. For example, the Exploration, Preparation, Implementation, Sustainment (EPIS) framework was initially conceptualized in a more linear form [Reference Aarons, Hurlburt and Horwitz9], but as a result of its application in the international context, it evolved into a more circular model.

The RE-AIM framework was conceptualized over 20 years ago to address the well-documented failures and delays in the translation of scientific evidence into practice and policy [Reference Glasgow, Vogt and Boles10]. RE-AIM has been one of the key TMFs that highlights the importance of external validity (e.g., generalizability) in addition to internal validity, with an emphasis on transparency in reporting across all RE-AIM dimensions [Reference Glasgow, Harden and Gaglio7]. It has been one of the most commonly used planning and evaluation frameworks across the fields of public health, behavioral science, and implementation science [Reference Glasgow, Harden and Gaglio7]. There have been over 700 publications that explicitly use RE-AIM for planning, evaluation (most often), or both. RE-AIM has been applied in a wide range of settings, populations, and health issues across diverse clinical, community, and corporate contexts [Reference Glasgow, Harden and Gaglio7,Reference Kwan, McGinnes, Ory, Estabrooks, Waxmonsky and Glasgow11,Reference Harden, Smith, Ory, SMith-Ray, Estabrooks and Glasgow12], including policy and environmental change.

RE-AIM dimensions operate and are measured at both the individual level and multiple ecologic levels (most frequently at staff and setting levels in health systems, although it has also often been applied at community and national levels). Its key dimensions are reach and effectiveness (individual level), adoption and implementation (staff, setting, system, or policy/other levels), and maintenance (both individual and staff/setting/system/policy levels) [Reference Glasgow, Harden and Gaglio7]. Table 1 outlines each RE-AIM dimension with a definition, reporting recommendations, and an example. Reviews indicate that all RE-AIM indicators are not always addressed (e.g., adoption and maintenance are less commonly reported) [Reference Gaglio, Shoup and Glasgow13,Reference Harden, Gaglio and Shoup14], and in recent years, there has been greater emphasis on pragmatic application of the framework a priori with stakeholders to determine which dimensions should be prioritized for improvement, which can be excluded, which measured, and how they should be operationalized [Reference Glasgow, Harden and Gaglio7,Reference Glasgow and Estabrooks15,Reference Glasgow, Battaglia, McCreight, Ayele and Rabin16]. This evolution is discussed in detail in Glasgow et al. [Reference Glasgow, Harden and Gaglio7] and briefly summarized below.

Table 1. Clarifications of and reporting on RE-AIM dimension

RE-AIM, reach, effectiveness, adoption, implementation, and maintenance.

A soon to be published research topic in the journal Frontiers in Public Health, “Use of the RE-AIM Framework: Translating Research to Practice with Novel Applications and Emerging Directions” provides excellent examples and guidance on the wide range of rapidly expanding applications of RE-AIM for planning, adaptation, and evaluation purposes across diverse settings and populations.

The source RE-AIM publication by Glasgow et al. [Reference Glasgow, Vogt and Boles10] introduced the “RE-AIM evaluation model, which emphasizes the reach and representativeness of both participants and settings” (p. 1322). This is likely the basis for some misconceptions, and especially the repeated classification of RE-AIM as only an evaluation model [Reference Nilsen17]. Subsequent RE-AIM publications clarified the value of the model for planning, designing for dissemination, and addressing research across the translational science spectrum. As RE-AIM has evolved, it has been integrated into the assessment of the generalizability of findings in the Evidence-Based Cancer Control Programs repository [18] of evidence-based cancer prevention and control interventions and identified as a recommended implementation science model in NIH and foundation funding announcements.

As RE-AIM continues to evolve, it is well positioned to address the realist evaluation question of what intervention components are effective, with which implementation strategies, for whom, in what settings, how and why, and for how long [Reference Pawson19]. This contextualized evidence makes RE-AIM practical for replicating or adapting effective interventions in a way that will fit and be feasible for one’s local delivery setting. RE-AIM is less concerned with generating evidence on the question “In general, is a given intervention effective?” and more with impact on different dimensions and outcomes related to population health and the context in which they are and are not effective.

Understanding and Using RE-AIM: Common Misconceptions and Clarifications

The section below describes and provides guidance regarding common misconceptions of RE-AIM. These misconceptions were identified by polling members of the National RE-AIM Working Group [Reference Harden, Strayer and Smith20], which consists of individuals very active in research using RE-AIM. It includes the authors of this paper, the primary developer of RE-AIM, researchers with the highest number of RE-AIM publications, and those who host the RE-AIM.org website. These individuals are frequently asked to serve on review panels and to review manuscripts related to RE-AIM, as well as to present on RE-AIM at conferences, training programs, and invited lectures. Many of the misconceptions were identified through these processes as well as inquiries to the RE-AIM website. Although a new extensive quantitative review of the frequency of these misconceptions may have added justification, it is unlikely that conclusions of relatively recent systematic reviews of RE-AIM applications [Reference Harden, Smith, Ory, SMith-Ray, Estabrooks and Glasgow12–Reference Harden, Gaglio and Shoup14] would have changed. More importantly, a review of the published literature could not include primary sources of many of these misconceptions that have occurred in presentations, manuscript, and grant proposal reviews, training programs, and never-published papers.

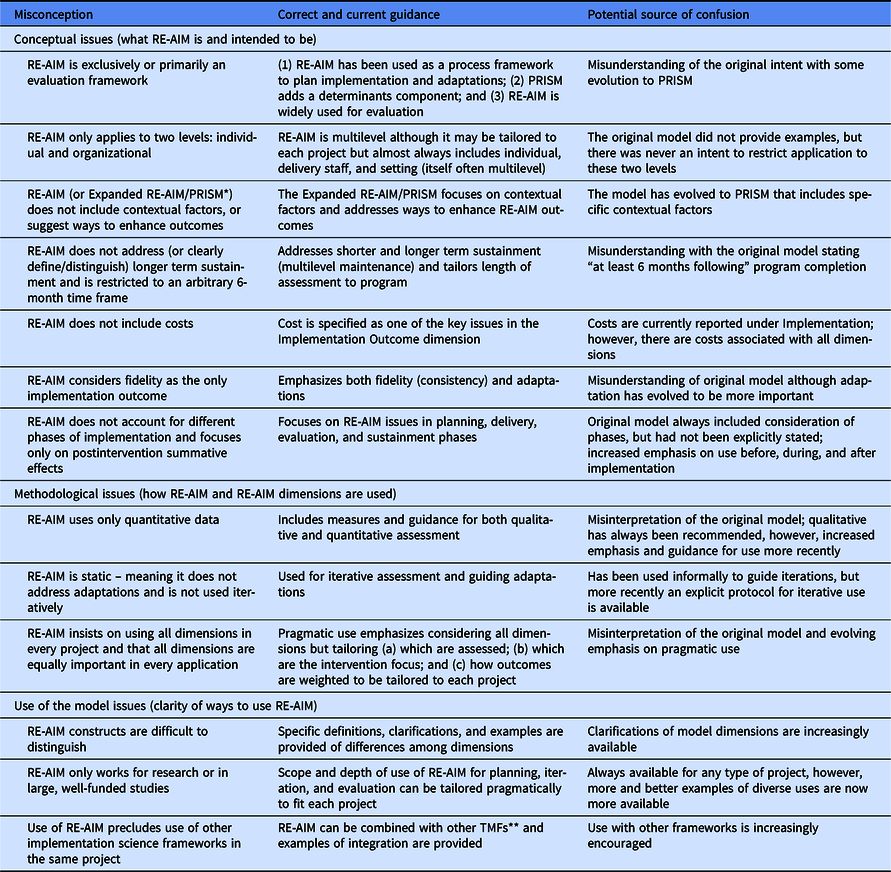

Thirteen misconceptions are summarized in Table 2 to illustrate the evolution of the model and clarify the current guidance. Misconceptions identified through the process above were listed, redundancies removed, and very similar issues combined and then organized into broader categorizes. The order of the 13 misconceptions is not prioritized or listed in terms of frequency, but presented under the categories of conceptual, methodological, and implementation issues for ease of understanding. A narrative explanation of each misconception is provided below.

Table 2. RE-AIM misconceptions including misunderstanding of the original model, evolution of the model, and the current guidance

RE-AIM is reach, effectiveness, adoption, implementation, and maintenance.

* PRISM is the practical, robust, implementation, and sustainability model.

** TMF refers to theories, models, and frameworks.

Conceptual Issues

RE-AIM is exclusively or primarily an evaluation framework

RE-AIM has always been designed to help translate research into practice [Reference Glasgow, Harden and Gaglio7,Reference Glasgow, Vogt and Boles10,Reference Glasgow and Estabrooks15,Reference Dzewaltowski, Glasgow, Klesges, Estabrooks and Brock21]. Early conceptualization of RE-AIM focused on the application of a comprehensive evaluation framework targeting multilevel interventions [Reference Glasgow, Vogt and Boles10]. While evaluation continues to be a focus, as early as 2005, Klesges et al. [Reference Klesges, Estabrooks, Dzewaltowski, Bull and Glasgow22] explicitly focused on RE-AIM as a tool to design programs, and Kessler et al. (2013) [Reference Kessler, Purcell, Glasgow, Klesges, Benkeser and Peek23] explicated full use of RE-AIM, synthesizing program planning, implementation, evaluation, and reporting with a focus on external validity. Thus, while evaluation was an early focus, RE-AIM emphasizes translation of research to practice, designing for dissemination, and the importance of considering context in planning and design, in addition to evaluation.

RE-AIM only applies at two levels: Individual and organizational

RE-AIM applies to many socioecologic levels, and understanding different levels is a common source of confusion. This may have resulted from early applications only providing examples at the individual (e.g., patient, employee, student) and organizational (e.g., clinic, workplace, school) levels and the explicit use of the R and E domains at the patient level and the A, I, and M domains at the organization level. However, there was never an intent to restrict application to these two levels. More recently, RE-AIM domains are applied at three or more levels [Reference Shaw, Sweet, McBride, Adair and Martin Ginis24].

The dimension within RE-AIM where multilevel application is most evident is the expansion of “adoption,” i.e., the settings where interventions are delivered, to include multiple levels of delivery agents and contexts, in addition to organizations. Given that human behavior takes place in, and is influenced by, multilevel contexts [Reference McLeroy, Bibeau, Steckler and Glanz25], and that exposure to an intervention across multi-level “settings” (e.g., policy, neighborhood, and organizations) may be necessary to maximize reach, collective impact, and sustained effectiveness [Reference Shaw, Sweet, McBride, Adair and Martin Ginis24], potential adopters could be policy makers, agencies, councils, urban planners, municipal departments, or community groups.

This broadened definition admittedly makes measurement of adoption more challenging as there are often multiple levels of nesting (e.g., clinics within health systems within regions). If the intervention is a policy change (e.g., national policy requiring nutrition labeling of packaged food), or an environmental change (e.g., open space improvement project to encourage physical activity), implementation strategies and their relevant metrics may include participation and engagement of decision-makers, supportive agencies, and enforcers. Specifying which key stakeholders are relevant during planning, approval, implementation, and sustainment phases, and which agency or individuals will be responsible for maintaining (or enforcing) the program or policy is key. Strategies for defining and measuring adoption should be tailored to one’s specific application.

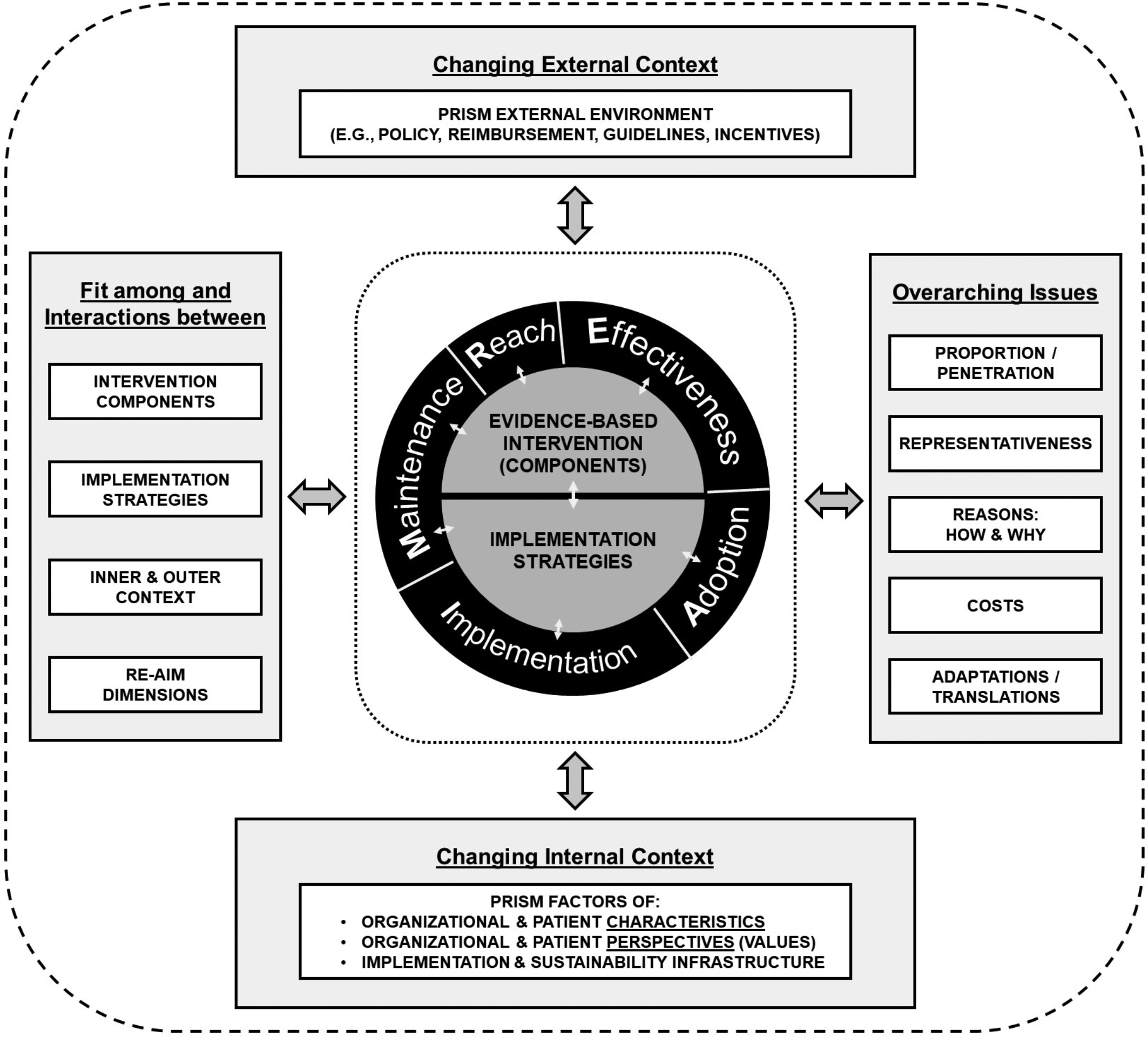

RE-AIM does not include “determinants,” contextual factors, or suggest ways to enhance outcomes

The original publication on and early research using RE-AIM did not include determinants. However, RE-AIM was expanded several years ago into PRISM [Reference Glasgow, Harden and Gaglio7,Reference Feldstein and Glasgow26]. The Practical Robust Implementation and Sustainability Model or PRISM includes RE-AIM outcomes (center section of Fig. 1) [Reference Feldstein and Glasgow26] and explicitly identifies key contextual factors related to these outcomes (see outer sections of Fig. 1). PRISM was developed in 2008 to address context and to provide a practical and actionable guide to researchers and practitioners for program planning, development, evaluation, and sustainment. It includes RE-AIM outcomes and also draws upon and integrates key concepts from Diffusion of Innovations [Reference Rogers27], the Chronic Care Model [Reference Wagner, Austin and Von Korff28], and the Institute for Healthcare Improvement (IHI) change model [Reference Berwick29].

Fig. 1. Pragmatic Robust Implementation and Sustainability Model (PRISM).

As shown in Fig. 1, PRISM (seewww.re-aim.org) identifies key “determinants” factors such as policies, “implementation and sustainability infrastructure,” and multilevel organizational perspectives [Reference McCreight, Rabin and Glasgow30]. It has been successfully used to guide planning, mid-course adjustments to implementation, and evaluation of interventions across a wide range of settings and topic areas [Reference Glasgow, Battaglia, McCreight, Ayele and Rabin16], with the original PRISM publication being cited by 160 different papers. The broad applicability of PRISM makes it a useful tool for conceptualizing context [Reference Glasgow, Harden and Gaglio7,Reference Feldstein and Glasgow26,Reference McCreight, Rabin and Glasgow30]. Feldstein and Glasgow and the RE-AIM website provide reflection questions for each factor for planning [Reference Feldstein and Glasgow26].

Depending on the study and/or needs, we recommend that researchers and practitioners who plan to use RE-AIM consider the use of PRISM. While not all studies or programs will decide to comprehensively evaluate or address context, PRISM can provide a pragmatic, feasible, and robust way to consider important contextual factors. There are new sections of the re-aim.org website that clarify these issues as well as how PRISM relates to RE-AIM.

RE-AIM does not address (or clearly define/distinguish) longer term sustainment and is restricted to an arbitrary 6-month time frame

This misconception likely came from the statement in the original RE-AIM publication that maintenance was defined as “at least 6 months following program completion” (emphasis added). This was never intended to mean that maintenance should only be assessed at or evaluation stopped at 6 months; there are numerous publications assessing maintenance at much longer intervals [Reference Glasgow, Gaglio and Estabrooks31]. RE-AIM can and should include assessment of long-term sustainment intentions and plans, even if funding limitations preclude assessment at distal time points [Reference Glasgow, Harden and Gaglio7]. Historically, RE-AIM is one of the first frameworks that explicitly called attention to issues of sustainability or maintenance at setting levels. Although systematic reviews of RE-AIM application have found that “maintenance” data are often not reported [Reference Harden, Gaglio and Shoup14], there has been growing recognition of the importance of understanding of longer term sustainment in a dynamic context [Reference Shelton, Cooper and Stirman32,Reference Chambers, Glasgow and Stange33]. A recent extension of RE-AIM addresses this gap and advances research by explicitly conceptualizing and measuring maintenance/sustainability as longer term (i.e., ideally at least 1–2 years post initial implementation and over time) and dynamic in nature [Reference Shelton, Chambers and Glasgow34].

The conceptualization of dynamic sustainability [Reference Chambers, Glasgow and Stange33] associated with RE-AIM includes consideration of (1) iterative application of RE-AIM assessments to address sustainability throughout the life cycle of an intervention; (2) guidance for operationalizing dynamic sustainability and the “evolvability” of interventions, including continued delivery of the original intervention functions and implementation strategies, adaptations, and potential de-implementation to produce sustained and equitable health outcomes; and (3) continued and explicit consideration of costs and equity as driving forces impacting sustainability across RE-AIM dimensions [Reference Shelton, Chambers and Glasgow34].

RE-AIM does not include costs

Cost and economic analyses are central issues in dissemination and implementation in any setting. Although cost has been explicitly included in RE-AIM since at least 2013, it is one of the least frequently reported elements in research reports, while often one of the primary concerns of potential adopters and decision makers [Reference Gaglio, Shoup and Glasgow13]. When costs are reported, this is often done inconsistently, making comparisons difficult [Reference Eisman, Kilbourne, Dopp, Saldana and Eisenberg35]. Understanding cost is challenging because it varies depending on the complexity of the intervention(s), the implementation strategies used, and the settings for delivery.

Currently, in RE-AIM cost is most explicitly considered and reported under Implementation, and has been for many years [Reference Kessler, Purcell, Glasgow, Klesges, Benkeser and Peek23]. More recently, some RE-AIM applications have included a focus on cost to obtain desired outcomes across all five framework dimensions [Reference Kessler, Purcell, Glasgow, Klesges, Benkeser and Peek23]. Rhodes et al. [Reference Rhodes, Ritzwoller and Glasgow36] identified and illustrated pragmatic processes and measures that capture cost and resource requirements from the perspectives of individual participants, intervention agents, and settings. Such evaluations from a variety of multilevel stakeholder perspectives are needed to enhance research translation into practice [Reference Eisman, Kilbourne, Dopp, Saldana and Eisenberg35].

RE-AIM considers fidelity as the only implementation outcome measure

In the original RE-AIM paper, implementation referred to the extent to which a program was delivered as intended (fidelity) [Reference Glasgow, Vogt and Boles10]. The implementation dimension has evolved dramatically over the last 20 years and now has the most components of any RE-AIM dimension. Currently, implementation evaluation criteria include fidelity to delivering the intervention, consistency of implementation across sites/setting, adaptations made to the intervention or implementation strategies, and, as discussed above, costs [Reference Glasgow, Harden and Gaglio7,Reference Kessler, Purcell, Glasgow, Klesges, Benkeser and Peek23]. Implementation seeks to understand how an intervention is integrated into diverse settings and populations, as well as how it is delivered and modified over time and across settings. The importance of identifying, tracking, and understanding the need for adaptation is central to implementation. Moreover, this dimension has benefitted greatly from expansion and incorporation of qualitative methods, which allow for a deeper exploration and understanding of not only what the adaptations were, but who made them, why they were made, and what the results were [Reference Pawson19,Reference McCreight, Rabin and Glasgow30,Reference Holtrop, Rabin and Glasgow37,Reference Rabin, McCreight and Battaglia38].

RE-AIM does not account for different phases of implementation

Some frameworks [Reference Aarons, Hurlburt and Horwitz9] explicitly define phases of implementation, and a criticism of RE-AIM is that it does not. Actually, PRISM has been used to address progress on and priority across different RE-AIM dimensions at different points in the implementation process (planning, early and mid-implementation, sustainment) [Reference Glasgow, Battaglia, McCreight, Ayele and Rabin16]. There is an inherent temporal order in RE-AIM such that a program has to be adopted before it can be implemented, it needs to be adopted, reach participants, and be implemented to be effective, etc. Pairing RE-AIM dimensions, including important questions implementation teams can ask themselves before, during, and after the implementation process, with a contextual determinants framework, such as PRISM [Reference Feldstein and Glasgow26] or the Consolidated Framework for Implementation Research (CFIR) [Reference King, Shoup and Raebel39,Reference Damschroder, Aron, Keith, Kirsh, Alexander and Lowery40], allows selection of the most relevant constructs at different points in time. Implementation teams can then use these constructs to help guide targeted implementation strategies that will ensure maximum reach, effectiveness, implementation fidelity, and maintenance.

Methods Issues

RE-AIM uses only quantitative data

Definitions of RE-AIM constructs have always had a quantitative component; for instance, reach is defined as the number and percent of eligible recipients who actually participate in an intervention and the representativeness of those participants. Yet, reach also includes the type of eligible recipients who participate (including equity in participation and access) and the reasons for participation or nonparticipation. While “type” and “reasons” can be operationalized as quantifiable measures (e.g., participant demographics, frequencies of people citing transportation or cost barriers to participation), there is important nuance and depth to the who, what, why, and how of reach that numbers may not convey. The same is true for the other RE-AIM dimensions. Qualitative and mixed methods are valuable tools for gaining deep insight into questions of who, what, how, and why people participate in and gain benefit from an intervention [Reference Holtrop, Rabin and Glasgow37] and have been explicitly recommended in multiple RE-AIM reviews [Reference Harden, Gaglio and Shoup14,Reference Kessler, Purcell, Glasgow, Klesges, Benkeser and Peek23]. Holtrop et al. (2018) recently provided a thorough synthesis and guide to qualitative applications of RE-AIM [Reference Holtrop, Rabin and Glasgow37].

RE-AIM is static (meaning not used iteratively)

In addition to RE-AIM’s use during planning, implementation, and sustainment phases of a program as mentioned earlier, RE-AIM can structure the assessment of progress and provide guidance for mid-course adjustments and adaptations by assessing the impact on all dimensions at a given point in time [Reference Harden, Smith, Ory, SMith-Ray, Estabrooks and Glasgow12]. Although RE-AIM has been used informally to guide iterations for many years [Reference Balis, Strayer, Ramalingam and Harden41,Reference Hill, Zoellner and You42], more recently, Glasgow et al. (2020) developed a specific protocol for the iterative use of RE-AIM and described key steps and application of this methodology across five health system studies in the VA [Reference Glasgow, Battaglia, McCreight, Ayele and Rabin16]. This methodology was found to be feasible and led to reflective conversations and adaptation planning within project teams.

RE-AIM requires the use of all dimensions, with all dimensions considered equally important

This misconception is likely based on an over reliance on the original RE-AIM source article that highlighted the importance of all five RE-AIM dimensions and proposed that the potential public health impact was the product of results on all dimensions [Reference Glasgow, Vogt and Boles10]. Reviews of the RE-AIM literature have consistently found that usually not all RE-AIM dimensions are reported within a single study [Reference Harden, Gaglio and Shoup14]. This and practical considerations precipitated a more explicit pragmatic approach to applying RE-AIM that relies on stakeholder priorities regarding the relative importance of and need to gather information across the RE-AIM dimensions [Reference Glasgow and Estabrooks15].

Not all RE-AIM dimensions need to be included in every application, but (a) consideration should be given to all dimensions; (b) a priori decisions made about priorities across dimensions and reasons provided for these decisions; and (c) transparent reporting conducted on the dimensions that are prioritized for evaluation or enhancement [Reference Glasgow and Estabrooks15]. This pragmatic approach encourages the use of all dimensions during planning, conducting evaluation based on dimensions important for decision-making, and providing contextual information on dimensions that are not directly targeted.

Implementation of RE-AIM

RE-AIM constructs are difficult to distinguish

New users of RE-AIM frequently conclude that reach and adoption are the same thing; that adoption and implementation overlap; or that it is unimportant or redundant to consider both. Concerns that RE-AIM constructs are not distinct or have what has been described as “fuzzy boundaries” [Reference Kwan, McGinnes, Ory, Estabrooks, Waxmonsky and Glasgow11] have been addressed through education and guidance on RE-AIM measures and application. While RE-AIM has face validity and is intuitive for many people, it does have nuances and requires study to learn and apply appropriately.

Reach is measured at the level of the individual – the intended beneficiary of a program (e.g., a patient or employee). Adoption is measured at one or more levels of the organizational or community setting that delivers the program and the “staff” or delivery agents within those settings (e.g., a healthcare provider or educator decides to offer or be trained in a program) [Reference Kwan, McGinnes, Ory, Estabrooks, Waxmonsky and Glasgow11]. Similarly, effectiveness refers to outcomes at the recipient level (e.g., increased physical activity, weight loss), while implementation refers to program delivery outcomes at the service provider level (e.g., the interventionist consistently follows the intervention protocol, a healthcare provider consistently screens patients).

RE-AIM only works for large-scale research or in large, well-funded evaluations

The value of RE-AIM for nonresearch application was summarized in a recent report on use of RE-AIM in the “real world” – i.e., use in clinical and community settings for planning, evaluating, and improving programs, products, or services intended for improving health in a particular population or setting (not to create generalizable knowledge) [Reference Kwan, McGinnes, Ory, Estabrooks, Waxmonsky and Glasgow11]. Many successful projects described use of RE-AIM with low budgets or even no budget. Data source, timeline, and scope and scale of a project all influence feasibility, timeline, and resource requirements. Picking one or two high-value metrics or qualitative questions for a RE-AIM-based evaluation and focusing on readily available data sources can help to keep an evaluation within scope. A special issue in Frontiers of Public Health in 2015 featured reports from a large number of organizations that—with very little funding—successfully applied RE-AIM to assess physical activity and achieve falls reduction among older adults [Reference Ory and Smith43].

Use of RE-AIM precludes use of other implementation science frameworks

Use of RE-AIM in combination with other frameworks is common [Reference King, Shoup and Raebel39] and encouraged [Reference Glasgow, Harden and Gaglio7], assuming that the models are compatible. Combining frameworks that yield complementary information (e.g., process, explanatory frameworks, TMFs from other disciplines) enhances our ability to understand why and how implementation succeeded or failed [Reference King, Shoup and Raebel39]. When multiple frameworks are used, it is important to provide a theoretical rationale for how the selected frameworks supplement each other [Reference Nilsen17]. An example of the use of multiple frameworks is Rosen et al. [Reference Rosen, Matthieu and Wiltsey Stirman44] who used the EPIS framework, along with CFIR to assess what emerged as a key adoption determinant (client needs and resources), and RE-AIM to assess relevant implementation outcomes (reach and effectiveness). Multiple examples of integrating RE-AIM with other TMFs are provided at www.re-aim.org.

Resources and Current Directions for RE-AIM

We have taken several steps and developed the resources summarized below to address the above misconceptions. We also briefly note ongoing activities that we hope will also help to provide additional clarification and guidance.

RE-AIM Website Revisions, Expansion, and Other Resources

Guidance on appropriate use of the RE-AIM (and other TMFs), beyond peer-reviewed publications, is needed to address these misconceptions. In recognition of this need, members of the National RE-AIM Working Group [Reference Harden, Strayer and Smith20] developed a website in 2004 (www.re-aim.org) to assist with the application of RE-AIM. The website provides definitions, calculators, checklists, interview guides, and a continuously updated searchable bibliography. The website has expanded to include blog posts, slide decks (one specifically on misconceptions), and a webinar series. Answers to questions about how to apply the framework, FAQs (http://www.re-aim.org/about/frequently-asked-questions/), and evidence for RE-AIM have been added or expanded.

Of particular relevance for readers of this article, we have recently (a) added new summary slides on RE-AIM that address many of the issues above (http://www.re-aim.org/recommended-re-aim-slides/) and (b) created a new summary figure that illustrates the PRISM/RE-AIM integration and highlights several crosscutting issues that should provide clarification on some of the misconceptions above (Fig. 1) [Reference Glasgow, Harden and Gaglio7]. The website is updated monthly, and a new section provides more examples and guidance for applying RE-AIM, including a checklist for users to self-evaluate their use of RE-AIM. Finally, members of the current National Working Group on the RE-AIM Planning and Evaluation Framework published a recent paper describing the use of this website platform to disseminate a number of resources and tools [Reference Harden, Strayer and Smith20].

Non-Researcher Use of RE-AIM

We have addressed the misconception that RE-AIM only works for controlled research or large, well-funded studies. However, applying RE-AIM in nonresearch settings often requires guidance and direction for optimal utilization by those not familiar with RE-AIM [Reference Kwan, McGinnes, Ory, Estabrooks, Waxmonsky and Glasgow11]. A basic understanding of RE-AIM concepts and methodologies can be obtained from pragmatic tools on the website and key publications demonstrating successful application of RE-AIM model in community or clinical settings [Reference Kwan, McGinnes, Ory, Estabrooks, Waxmonsky and Glasgow11,Reference Harden, Smith, Ory, SMith-Ray, Estabrooks and Glasgow12]. For more complex issues, we also recommend more interactive consultations with an experienced RE-AIM user or the National Working Group.

Current Directions

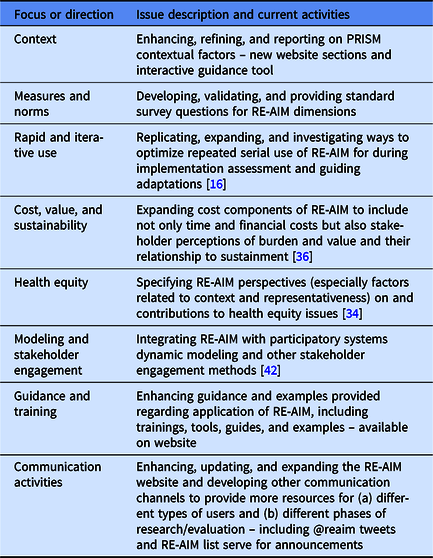

Over time, several core aspects and features of RE-AIM have remained the same, but others have substantially evolved [Reference Glasgow, Harden and Gaglio7]. The key principles and dimensions remain, and there is still an emphasis on actual or estimated public health impact and generalizability of findings. The changes to RE-AIM have been in response to growing knowledge in implementation science and practice and include (a) refinement of the operationalization and some expansion of the key dimensions; (b) incorporation of context, adaptations, health equity, and costs; and (c) guidance on applying qualitative approaches.

We expect that as our methodological approaches and measurement practices advance, RE-AIM/PRISM will continue evolving as recommended by Kislov et al. [Reference Kislov, Pope, Martin and Wilson3] Our key current emphases are summarized in Table 3 and include more in-depth assessment of context as part of PRISM. We are developing and testing standardized survey measures of RE-AIM dimensions, as well as guidance for iterative application of RE-AIM to inform adaptations [Reference Glasgow, Battaglia, McCreight, Ayele and Rabin16]. Other current directions include application of expanded RE-AIM/PRISM to address the interrelated issues of cost, value, and sustainability [Reference Shelton, Chambers and Glasgow34], and explication of how representativeness components of RE-AIM helps address health equity. We are also exploring the integration of RE-AIM with participatory systems dynamic modeling and other stakeholder engagement methods.

Table 3. Current directions and resources under development for the expanded RE-AIM/PRISM framework

PRISM, Pragmatic Robust Implementation and Sustainability Model; RE-AIM, reach, effectiveness, adoption, implementation, and maintenance.

Conclusions

TMFs have contributed substantially to implementation science [Reference Tabak, Khoong, Chambers and Brownson1,Reference Esmail, Hanson and Holroyd-Leduc2]. They evolve over time to address limitations and applications to new contexts and to improve their usefulness [Reference Kislov, Pope, Martin and Wilson3]. This evolution can create confusion about the possible, correct, and best uses of the TMF. In light of these issues, we make the following recommendations to reduce confusion and misunderstanding about the use of a TMF while also acknowledging that acting on such recommendations might not always be feasible.

1. With each major modification or update of a TMF, developers could produce a scholarly publication and an archived webinar that explicitly describes the modification, the problem it is addressing, and current guidance. It may help to include explicit language such as “An adaptation to TMF XX” to make it clear this is a revision, or an expanded version of the TMF. If the revision is not produced by the originators of the TMF, the developers should be consulted, and the publication should state whether they agree with the change.

2. Although sometimes challenging, reviewers who are summarizing or categorizing a TMF should check the recent literature and major users of that TMF to make sure descriptions of it are accurate.

3. Potential users of a TMF should complete a review, especially looking for recent updates, and explicitly search for adaptations to the TMF. In particular, they should check the website on the TMF, if one exists. Users should cite more recent uses of the TMF; not only the original publication or an earlier review. Finally, it is important for TMF users to carefully articulate why and how they are using a TMF, which may help alleviate some issues of mischaracterization.

4. Hosts of TMF websites should summarize improvements or revisions to the TMF in a prominent location and explicitly identify common misconceptions and what to do about them.

As Kislov and colleagues [Reference Kislov, Pope, Martin and Wilson3] have articulated, TMFs should change and adapt over time so that they do not become ossified. Table 3 outlines ways in which RE-AIM is currently evolving and resources that are being developed. When TMFs do change and adapt, it is important that researchers and users keep up with such changes. Although it is impossible to completely prevent such occurrences, we hope this discussion and our recommended actions will minimize TMF misapplication. We welcome dialogue about actions that can be taken by TMF developers, reviewers, and users.

Acknowledgments

We thank Elizabeth Staton, Lauren Quintana, and Bryan Ford for their assistance with editing and preparation of the manuscript.

Drs. Glasgow and Holtrop’s contributions are supported in part by grant #P50CA244688 from the National Cancer Institute. Dr. Estabrooks’ contributions supported by U54 GM115458-01 Great Plains IDEA CTR.

Disclosures

The authors have no conflicts of interest to declare. All statements in this report, including its findings and conclusions, are solely those of the authors and do not necessarily represent the views of the Patient-Centered Outcomes Research Institute (PCORI), its Board of Governors or Methodology Committee.