1 Introduction

Those interested in comparative research in an ever more interconnected world are increasingly turning to studying comparison itself as a social process with social consequences (Nelken, 2015b; 2016; 2019b; 2021a). A leading example of such processes is found in the making and implementing of so-called global social indicators. A global social indicator has been defined by Sally Merry and her colleagues at New York University (NYU) as

‘a named collection of rank-ordered data that purports to represent the past or projected performance of different units. The data are generated through a process that simplifies raw data about a complex social phenomenon. The data, in this simplified and processed form, are capable of being used to compare particular units of analysis (such as countries or institutions or corporations), synchronically or over time, and to evaluate their performance by reference to one or more standards.’ (Merry et al., 2015, p. 4)

In her fundamental contributions to understanding these instruments, Merry also pointed to what she called their knowledge and governance ‘effects’ – the way in which they helped to construct the phenomena that they claimed to measure and to depoliticise the distributional decisions that they helped to justify (see e.g. Merry, 2011; Davis et al., 2012; Merry et al., 2015). As a committed qualitative anthropologist, Merry also nurtured deep suspicions of the quantitative methodologies that were central to such global comparisons. Her last book focused explicitly on what she called ‘the seductions of quantification’ (Merry, 2016) and showed how these instruments often failed to attend to differences in context amongst the places or units being compared.Footnote 1 Even writers who see more merit in these kinds of indicators than Merry also worry about the neglect of the local. For example, Rosga and Satterthwaite, who are activists and pioneering commentators on the role of indicators in the sphere of human rights, admit that ‘it may be true that quantitative methods, in their very abstraction and stripping away of contextualizing information have particular – and especially high – risks for misuse by those with the power to mobilize them’ (Rosga and Satterthwaite, 2009, p. 315).

But what if global indicators necessarily involve ‘stripping away contextualizing information’? As the definition given by the NYU scholars tells us, indicators are used both to compare and to evaluate. Could such instruments still play their evaluative role if they had to be faithful to local contexts? Is the failure of global indicators to capture different contexts faithfully a reason why they fail – or is it actually the prerequisite for their success?Footnote 2 The problem, as Merry herself puts it, is that ‘in order to be globally commensurate, they cannot be rooted in local contexts, but in order to accurately reflect local situations, they need to be’ (Merry and Wood, 2015, p. 217). Put differently, context becomes moot because global social indicators are involved in the practice of comparison, learning about similarities and differences, whilst also being linked to the goal of commensuration, seeking to rank performance and bring matters into line. For the first purpose, it is crucial to compare ‘like with like’ (and so avoid, as the saying goes, ‘comparing apples with pears’). But commensuration seeks to impose a standard on what are known to be very different units (see Espeland and Stevens, 1998; 2008). In this paper, I set out to explore how COVID indicators can be said to fall ‘between’ comparison and commensuration because of the way in which these exercises can overlap and come into conflict.Footnote 3

2 Not waving but drowning

At the time of writing, there have been over 100 million cases of infection and over 2 million deathsFootnote 4 as a consequence of the three ‘waves’ of the COVID-19 pandemic documented so far. And these figures – which too easily obscure the individual, often tragic, stories behind them – are still rising. Those following reports of the epidemic's progress can also feel as if they are ‘drowning’ in a sea of numbers. As Ashley Kirk commented, already in March 2020:

‘More than any other time in my career as a data journalist, the general public is obsessed with numbers. The number of coronavirus cases, the number of coronavirus deaths, the number of tests administered – as well as any analysis that slices and dices these data, whether that's daily increases or per-person rates.’Footnote 5

Such numbers are generated by national, international and transnational organisations, and illustrated in maps, graphs, tables and figures, as inputs of current data or as models to predict the future. Most commonly, they are presented as ‘snapshots’, illustrating diachronic developments over time or synchronic differences between countries. Alternatively, they may be displayed on so-called dashboards that focus on daily bulletins, or even minute-by-minute changes, some of which allow interaction with the user and offer the possibility of interrogating the data with respect to given localities and times.Footnote 6

Beyond monitoring the progress of the disease, COVID indicators were used by governments, administrators and citizens for such aims as predicting the future and co-ordinating and legitimating decision-making. As with other global social indicators, ranking what was happening in different countries pointed users to which places were safer for investors, for workers and for travellers, etc. They also formed part of rituals creating solidarity and distracting from other political issues. Above all, however, the ever-updated information in indicators comparing the relative success of a given country in dealing with the virus over time was intended to show the need for preventive action. Like other global social indicators, these comparisons act as a form of ‘soft regulation’ and can be placed along a continuum of other types of regulation from those most counter-factual to those most factual (see Figure 1). At one end of the continuum, COVID indicators were pressed into service by governments to justify rules requiring compliance by citizens (in association with both nudgingFootnote 7 and enforcement) and helped to justify legal crackdowns on those who failed to conform to health obligations. At the other, more manipulative end, they helped to prepare the way for the obligatory use of artificial-intelligence (AI) devices to measure people's rates of infection in ways that left little room for resistance.Footnote 8

Figure 1. A continuum of normative regulation

Placing global social indicators on such a continuum also raises important questions about the interaction between the various forms of regulation in the response to COVID. At one end, the intertwining of indicators and the law saw indicators being used to justify detailed, confusing and fast-changing rules and, at times and in some places, brutal policing methods, the emergency suspension of civil rights and the executive side-stepping of parliamentary scrutiny. At the other end, indicators often relied on data gathered by AI and pointed towards a brave new world of algorithmic regulation.Footnote 9 This time around, the use of tracing apps was not compulsory in most countries, and there was at best partial take-up. But there is every reason to think that this will change in the future and that, for better or worse, surveillance monitoring and self-monitoring will increasingly displace reliance on indicators, at least as a means of ensuring compliance (Roberts, 2019).

Building on various sources of data, a range of global indicators were produced, aiming either to report on the progress of the epidemic or to assess the success of policies in dealing with it.Footnote 10 Examples that focused more on the first of these aims include:

• the World Health Organisation (WHO)'s ‘COVID-2019 situation reports’;Footnote 11

• the Johns Hopkins University (JHU)'s ‘COVID-19 Dashboard’;Footnote 12

• the European Centre for Disease Prevention and Control (ECDC)'s ‘COVID-19 situation update worldwide’;Footnote 13

• Worldometer (WoM)'s and Our-World-in-Data (OWiD)'s statistics on the ‘Coronavirus Pandemic’;Footnote 14

• the Institute for Health Metrics and Evaluation (IHME)'s ‘COVID-19 Projections’;Footnote 15 and

• the US CDC Global COVID-19 website.Footnote 16

Examples of the second kind are:

• Oxford University's ‘COVID-19 Government Response Tracker’ (Ox-CGRT);Footnote 17

• the Deep Knowledge Group (DKG)'s ‘COVID-19 Rankings and Analytics’;Footnote 18

• the Centre for Civil and Political Rights (CCPR)'s ‘State of Emergency Data’;Footnote 19

• Simon Porcher's ‘Rigidity of Governments’ Responses to COVID-19’ dataset and index;Footnote 20 and

• the International Monetary Fund (IMF)'s ‘Policy Responses to COVID’.Footnote 21

The emphasis in these latter indicators was on understanding which policies were best for limiting the spread of the virus. But some attention was also given to other aspects of the pandemic, such as its impact on the economy. The IMF indicator, for example, explains that ‘This policy tracker summarizes the key economic responses governments are taking to limit the human and economic impact of the COVID-19 pandemic’.Footnote 22 Most importantly for my argument, the same data were used for a variety of different roles: to explain rates of infection and death, to justify policy choices and to judge and rank performance. Comparison for the purpose of explanation was thus often linked to the practical aims of policy-making, while efforts at commensuration tried to institutionalise similarities in a world of difference. Reported rates of testing, of infection (with and without symptoms) and of death, the numbers in home isolation and in hospital, those on ventilators and those who had recovered, excess deaths compared with previous years, etc. were represented both as dependent variables to be accounted for and as the outcomes of performances for which organisations could be held accountable.

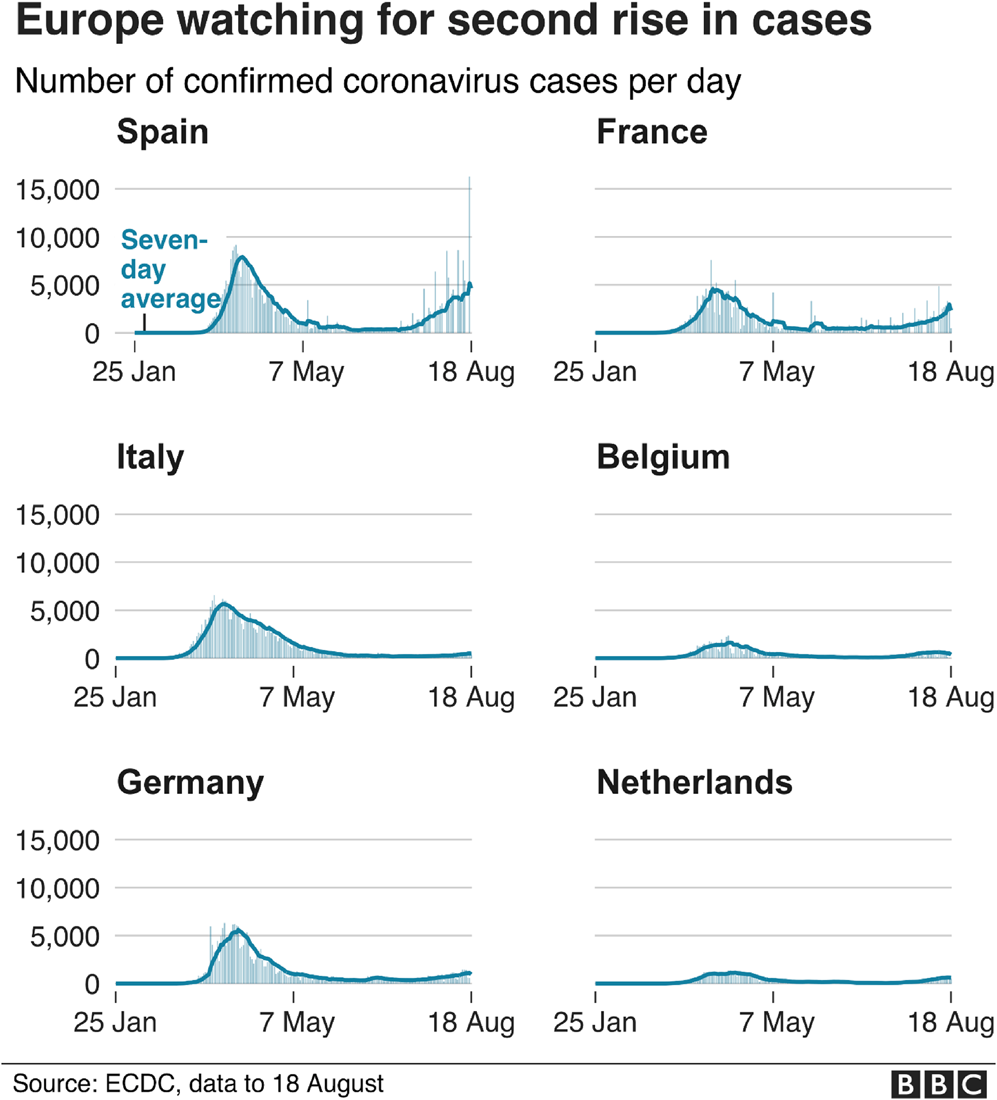

As with other global social indicators, these instruments played the familiar role of encouraging best practice by states and shaming those whose record seemed poorer. But, in addition, these indicators were relied upon by national governments as a way of helping to change the conduct of individuals. Although the general public were unlikely to consult the original sources, information from them was communicated indirectly, via the maps, tables and figures offered daily by different national newspapers and other media outlets. Such reports of the progress of the epidemic within and between places (mainly countries, regions or towns) were a constant feature of broadcast and online media. Sometimes, the reports left it to the reader to work out who was doing better or worse (as in Figure 2).Footnote 23

Figure 2. Implicit comparisons

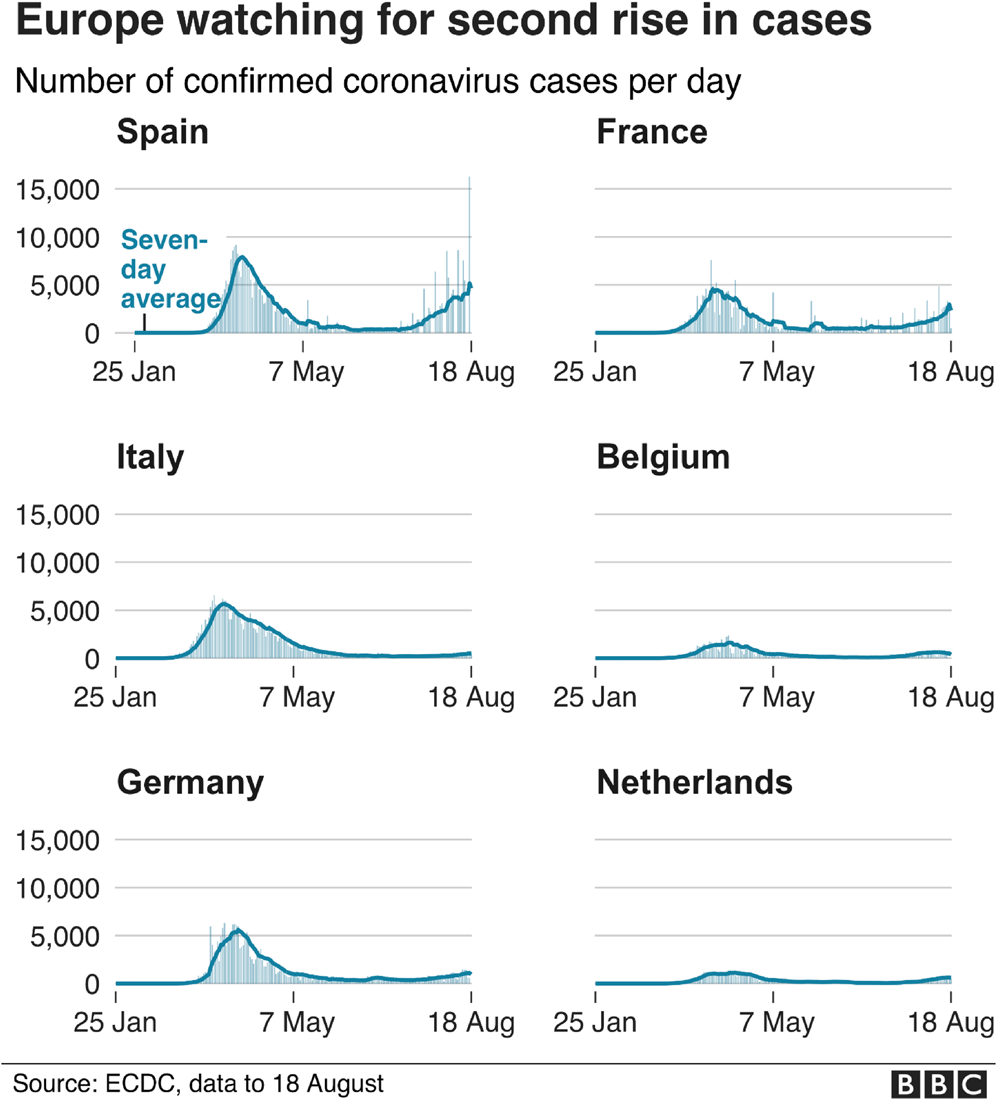

More often, however, they also made some effort to underline the relative success of some countries in relation to others, as shown in Figure 3.Footnote 24

Figure 3. Explicit comparisons

Insofar as COVID indicators aimed at successful comparison, this might be thought to require ‘comparing like with like’, implying that contextual differences would be all-important in explaining differences in outcomes of the spread of the disease. But, in the case of COVID indicators, as with other indicators aimed at ranking performance, comparisons were geared above all to evaluating relative success and bringing about improved responses to the disease. For example, according to the Our World in Data website:

‘Some countries have not been able to contain the pandemic. The death toll there continues to rise quickly week after week. Some countries saw large outbreaks, but then “bent the curve” and brought the number of deaths down again. Some were able to prevent a large outbreak altogether. Shown in the chart are South Korea and Norway. These countries had rapid outbreaks but were then able to reduce the number of deaths very quickly to low numbers.’Footnote 25

The search was, therefore, for practices and standards that were applicable despite differences in local contexts. Where commensuration was the goal, this presupposed that the units being compared were very different and that success consisted of ranking and seeking to transform such differences. In what follows, I shall explore further how COVID indicators fared when used for each of these exercises.

3 COVID indicators and successful comparison

COVID indicators had much to tell us about what was happening in different places, to different groups and in different phases. But it was sometimes hard to be sure that the comparisons they drew were fair ones. Even comparing the same country over time was complicated, given the changes in policies, testing and rules.Footnote 26 Indeed, the need to avoid ‘comparing apples and pears’ was sometimes explicitly mentioned.Footnote 27 It was difficult to see what the motley collection of more successful countries, such as Australia and New Zealand, Norway, Germany, Taiwan, Singapore, South Korea and central European countries such as Hungary, had in common, as opposed to less successful ones, such as the US, Brazil, Russia, Britain, Belgium, France, Spain, Peru, Mexico, Iran and India. But some effort was made. It was noted that some of the more successful countries, such as Taiwan, Singapore and South Korea, had recently experienced SARS and MERS epidemics (could we say that they had been ‘vaccinated’?). South Korea, for example, had specifically learnt from its mistakes last time around and had systems in place ready to deal with any new epidemic.Footnote 28 As regards the less successful places, commentators pointed to failures of leadership in the way the crisis was handled, underpreparation and slowness in reacting to the evidence.Footnote 29 At the outset, crucial errors had been made, such as confusing COVID with influenza, using ventilators too soon, exposing old people in care homes to the disease, putting political logic before health and not listening sufficiently to (the right) experts.

At different times, in accordance with changing casualty rates, some explanatory factors seemed to be more important than others. South Korea had done well, we were told, because it had a high number of hospital beds per inhabitant, and Germany likewise (even though it had been criticised by the OECD for such an alleged ‘oversupply’). Explanations for success often reflected different political starting points. Newspaper commentators were divided along their usual ideological lines as to whether to blame social structural problems for the difficulty in making sound choicesFootnote 30 or instead to blame overly individualistic and unruly populations.Footnote 31 Those wishing to put the blame on neoliberalism and privatisation noted that the Lombardy region of Italy – one of the worst affected – had been an experimental site for health-care privatisation and for the shifting of provision from community care to centralised hospitals. Others noted that it was in countries with ‘illiberal populist’ leaders, most obviously the US and Brazil, that the virus had got most out of hand (Leonhardt and Leatherby, 2020).Footnote 32

Some authors insisted that, overall, countries with more autocratic regimes, starting with China itself, were the ones that had shown themselves most able to respond quickly and decisively. Cepaluni, Dorsch and Branyiczki sought to understand ‘why countries with more democratic political institutions experienced deaths on a larger per capita scale and sooner than less democratic countries’. According to them, more democratic regimes face a dilemma if they are

‘to respond quickly and more efficiently to future outbreaks of pandemics, or similar urgent crises. Successful strategies need to “include expedited decision-making processes that place unpalatable restrictions on individual liberties. In our view, failure to deal effectively with pandemics poses a risk to the public's trust in democratic governance and could contribute to the democratic roll-back that is happening in some regions of the world”.’Footnote 33

Moving from analysis to advocacy, they argued that, ‘Giving up some liberties in the short-run within democratic institutions may be necessary to ensure liberties into the future with democratic institutions’ (Cepaluni et al., 2020, p. 25).

For other commentators, however, local historical and contextual details were all-important.Footnote 34 Difficulties in arresting the spread of the COVID virus were attributed to the poor health of the population in the US; built-up resistance to antibiotics in Italy; the distractions of Brexit in the UK; or tensions between the federal state and regional governments in Spain. Outside of Europe, the range of relevant local factors was even wider. In Peru, we were told, it was a challenge to enforce social distancing where so many lived in overcrowded homes, and the lack of refrigerators meant that people had to shop frequently and so risked coming into contact with market vendors who might have the illness. Peruvian society relied on its informal economy, and people had to use crowded public transport. It was difficult to deliver aid to those who needed it when only 38 per cent of the population had bank accounts.Footnote 35 In India, many people had a justified suspicion of top-down health interventions, as well as a strong commitment to collective religious practices that could expose them to danger. To understand the unexpectedly low rates of infection and death in most countries in Africa (other than South Africa), empirical research was needed to establish in each case whether these could be linked to previous experience of dealing with pandemics or were more an artefact of poor record-keeping or other features of strained health systems.Footnote 36

But why do such local differences matter? Are they exactly what we want to discover by engaging in comparison, or do they show that places are not really comparable in the first place? It depends on our goal. If this is to discover and promote best practices in responding to COVID, we do need to compare like with like to see how recommendations would work out under different conditions. But, if we want to advise when countries should impose lockdowns, prohibit travel to certain places or accept incoming travellers and tourists, the reasons why some are more or less handicapped in the fight against COVID are a secondary matter. What matters is the level of risk based on current performance. Yet this distinction was often blurred. Many COVID reports did include careful disclaimers about the limited validity of their comparisons, implying that these limits were relevant to their overall comparison of performance.Footnote 37 Serious efforts were made to compare deaths and infections per capita and not only in absolute terms, and to use moving averages to get a sense of trends. Less often, readers were also reminded that the numbers reported were highly dependent on the number of people being traced and tested for the virus. But the small print was usually ignored by those relying on indicators.
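The two routine adjustments mentioned here, expressing counts per capita and smoothing them with a moving average, can by themselves change who appears to be doing worse. The sketch below is purely illustrative: the two countries, their populations and their daily death counts are hypothetical figures invented for the purpose, and the seven-day trailing window is an assumption rather than the method of any particular indicator listed above.

```python
# Illustrative sketch: hypothetical countries and invented daily death counts,
# not real COVID data.

populations = {"Country A": 60_000_000, "Country B": 5_000_000}
daily_deaths = {
    "Country A": [120, 135, 150, 90, 160, 170, 140],
    "Country B": [20, 25, 18, 30, 22, 28, 26],
}


def moving_average(values, window=7):
    """Trailing moving average: one value for each complete window."""
    return [sum(values[i - window + 1 : i + 1]) / window
            for i in range(window - 1, len(values))]


def per_million(count, population):
    """Rescale a raw count to a rate per million inhabitants."""
    return count * 1_000_000 / population


def latest_rates(daily_deaths, populations, window=7):
    """Latest smoothed deaths, absolute and per million, for each country."""
    rates = {}
    for country, deaths in daily_deaths.items():
        smoothed = moving_average(deaths, window)[-1]
        rates[country] = (smoothed, per_million(smoothed, populations[country]))
    return rates


rates = latest_rates(daily_deaths, populations)
# Sort from worst to best under each measure.
by_absolute = sorted(rates, key=lambda c: rates[c][0], reverse=True)
by_per_capita = sorted(rates, key=lambda c: rates[c][1], reverse=True)
print("Worst by smoothed deaths:", by_absolute)
print("Worst by smoothed deaths per million:", by_per_capita)
```

With these made-up numbers, Country A records more deaths in absolute terms, but Country B comes out worse once deaths are expressed per million inhabitants. Neither adjustment does anything about differences in testing and tracing, which is the small print the text says was usually ignored.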

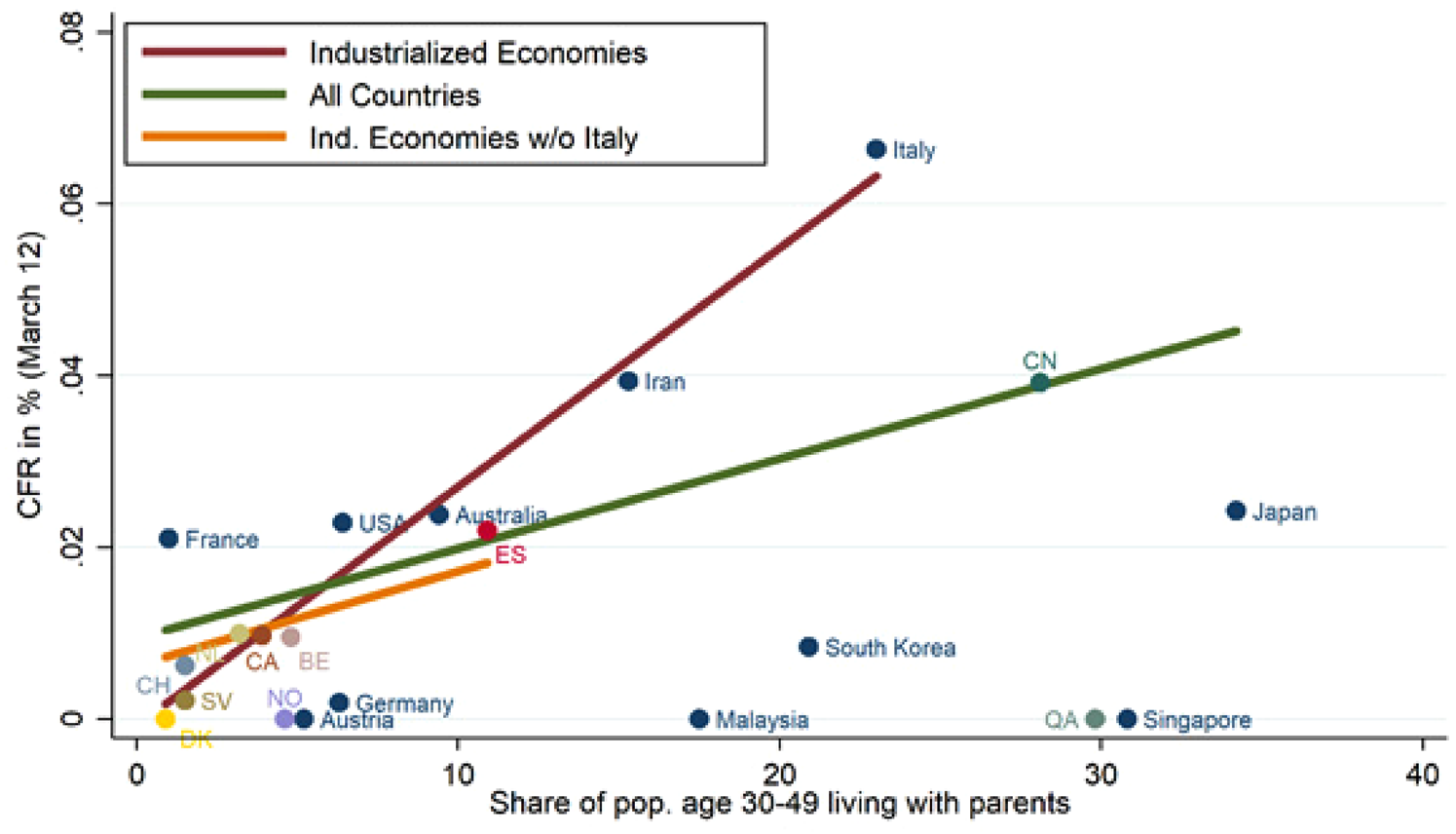

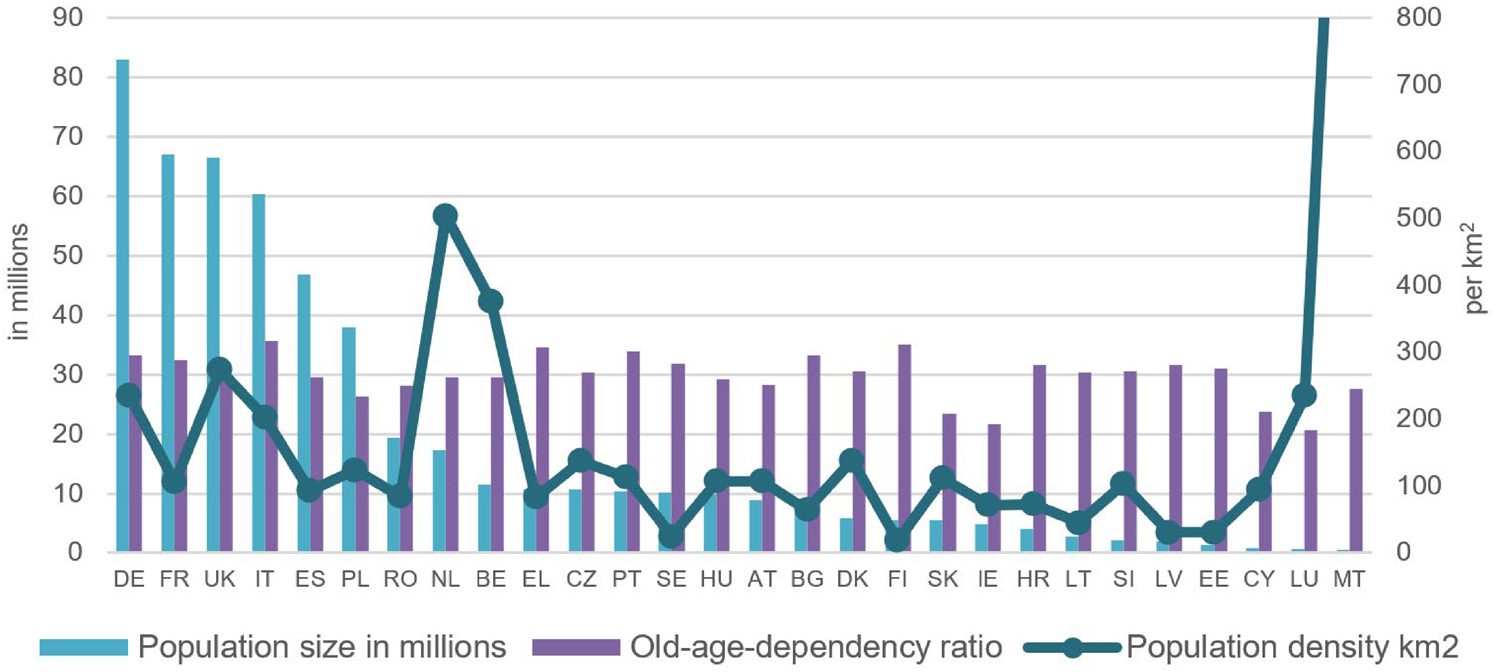

More interestingly, some of the graphs and tables found in the media gestured towards the need to compare like with like by seeking to ‘control’ for interfering variables so as to make the comparison fairer and more instructive. As shown in Figure 4, some reports tried to build into their comparisons the added risk of young and old living together at home. Other graphs, as seen in Figure 5, sought to acknowledge the potential relevance of old age, dependency ratio and population density.

Figure 4. Intergenerational ties and case-fatality rates. Source: ‘Intergenerational ties and case fatality rates: a cross-country analysis’, VOX EU CEPR, available at https://voxeu.org/article/intergenerational-ties-and-case-fatality-rates.

Figure 5. Death and infection rates for COVID: controlling for old age, dependency ratio and population density. Source: L. Hantrais, ‘Comparing European reactions to COVID-19: why policy decisions must be informed by reliable and contextualised evidence’, LSE Blogs, available at https://blogs.lse.ac.uk/europpblog/2020/05/19/comparing-european-reactions-to-COVID-19-why-policy-decisions-must-be-informed-by-reliable-and-contextualised-evidence/. See also Hantrais and Letablier (2021).

What remains puzzling is why those making COVID-ranking indicators took account of so few of the many interfering variables that could potentially affect a fair comparison. It is plausible to think that this is because comparison and commensuration led in different directions. It may have been true that each country was so different that it needed to find its own way – that ‘each country's strategy to curb COVID-19 should be based on its specific situation and context and be both scientifically sound and culturally acceptable’.Footnote 38 But following this argument to its logical conclusion would have left little purchase for standardising indicators of performance.

4 Comparison and trade-offs

Despite the occasional reference to the need for ‘balancing’, careful cross-national measurement of the side effects of policies aimed at controlling the spread of COVID never became the main focus of the indicators consumed daily by the public. These were constructed principally to measure rates of infection, death and recovery. In this way, what appeared to be nothing other than an update on the spread of the epidemic in practice smuggled in a preference for some goals over others. There was little discussion, for example, of how indicators could build in a plausible metric for the trade-off between employment and health, or take account of the extent to which damage to the economy also has direct and indirect implications for health. The exclusive focus of indicators on levels of infection also seemed to accept a considerable reduction in political freedoms in the name of stopping the epidemic, and did not refer to this in evaluating performance.Footnote 39 On the other hand, even if much of the mainstream media preferred to focus on health indicators rather than on the threat to normal civil rights and democratic functioning posed by the actions taken to deal with the epidemic, this issue was regularly highlighted, especially by right-leaning newspapers with a more libertarian streak.Footnote 40

Another limit on the usefulness of the comparisons being made can be seen in how little attention COVID indicators gave to the possibility that the countries whose success they were evaluating were in fact pursuing different approaches to the same goal. How should judgments of success be affected by the costs that health interventions imposed on the economy? Some leaders spoke openly about giving priority to what they called the ‘health of the economy’.Footnote 41 But they tended to be seen as outliers. Rankings of countries’ achievements would have been very different, for example, if the metric used had been progress towards the goal of achieving herd immunity. This approach was soon officially abandoned almost everywhere because of the number of deaths that would have resulted and the excessive burden it would have placed on health systems. But it may have been a goal sought by subgroups of a population, as is alleged to be the case for some religious groups in Israel.Footnote 42 It also remained in the background as a possible yardstick for deciding when a population could be considered no longer at risk, and it again became relevant in determining how to distribute vaccines.

A good illustration of the weaknesses of indicators where approaches differed is offered by the case of Sweden, which (uniquely in Europe) chose to follow a less strict approach, in particular seeking to avoid at all costs imposing a lockdown. It closed schools for the over-sixteens and banned gatherings of more than fifty people, but otherwise relied on Swedes’ sense of civic responsibility to observe physical-distancing and home-working guidelines. Shops, restaurants and gyms remained open. On the other hand, differences on the ground should not be exaggerated. It would be misleading to assume that Sweden had no policies concerning social distancing. It also made use of contact-tracing.Footnote 43 Some commentators read the Swedish choice as one aimed at achieving herd immunity. For others, it was about giving priority to the economy.Footnote 44 But, for Sweden's chief epidemiologist, Anders Tegnell, the goal was slowing the spread of the virus. What authorities in Sweden were arguing was that public health should be viewed in the broadest sense, and that the kind of strict mandatory lockdown imposed elsewhere was both unsustainable in the long run and liable to have serious secondary impacts, including increased unemployment and problems of mental health.

This policy was held to steadily, even at the expense of a greater number of infections and deaths than in its Scandinavian neighbours.Footnote 45 Initially, in fact, the number of infections and deaths was no greater than in those European countries that did opt for lockdown.Footnote 46 But, as numbers went up, there was considerable internal debate and heart-searching in Sweden involving politicians, experts and public opinion.Footnote 47 It was also asserted that Sweden's strategy had brought it no economic benefit, as its economy was hit almost as badly as those of its Scandinavian neighbours (in large part because of its dependence on suppliers and markets in places hit by COVID).

Reference to other values as criteria of success did sometimes come up when politicians tried to deal with criticisms. After the WHO praised Germany and Italy for their improved performance in dealing with the COVID virus,Footnote 48 Prime Minister Boris Johnson explained to parliament (on 24 September 2020) that the UK had higher death rates than Germany and Italy because it gave a higher value to freedom.Footnote 49 Insofar as competing goals were recognised, the effort to reconcile them tended to produce incoherent rules. The official UK site giving advice on COVID-19 used criteria for quarantine that could not easily be reconciled with health considerations alone. It explained:

‘Which workers are exempt from quarantine? There are a number of people who are exempt, regardless of where they are flying from, including: Road haulage and freight workers. Seasonal agricultural workers, if they self-isolate where they are working. UK residents who ordinarily travel overseas at least once a week for work’.Footnote 50

Similarly, people were often encouraged to go out so as to get the economy restarted, but, when numbers worsened, were ordered to retreat to the safety of their homes. A frequently used strategy for deflecting blame from governmentFootnote 51 was then to hold sections of the public responsible for the spread of the virus because of non-compliance with the latest version of the rules concerning social distancing.

5 COVID indicators and successful commensuration

Were COVID indicators more of a success in their role of creating standards and aiding compliance? Could what counts as a weakness when indicators are used for the purposes of comparison turn out to be a strength for the purposes of commensuration? Here, too, the record is a mixed one.

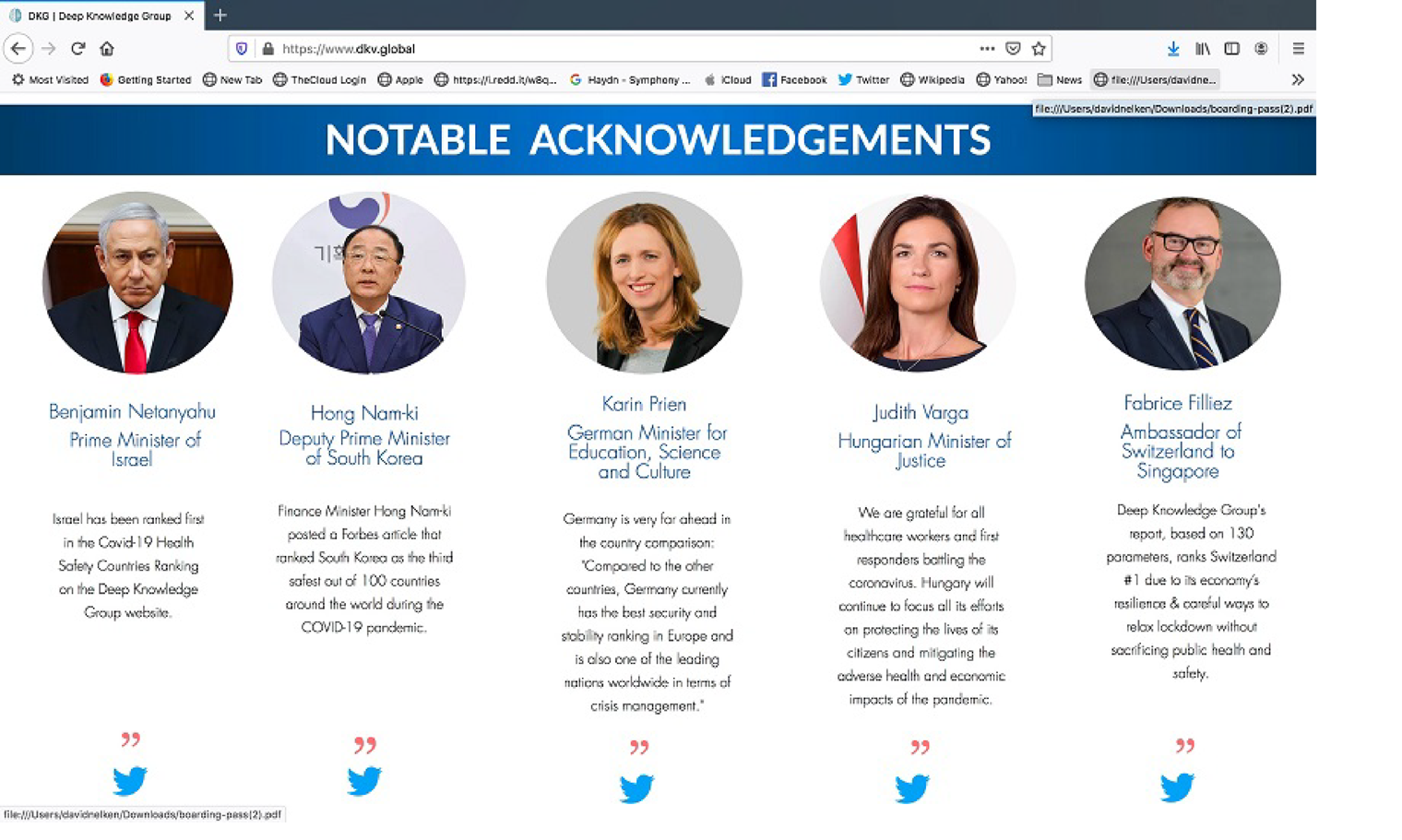

In one sense, commensuration is inherent in any attempt at comparison; definitions of what counts as an indicator variable for the purpose of comparison (whether it be countries, ethnic groups, rates of infection, etc.) all rely on explicit or implicit forms of commensuration. We could also point to the classificatory work involved in claiming that Black, Asian and Minority Ethnic (BAME) individuals are over-represented in COVID infections and deaths. What needs explaining here, however, is why no indicator emerged with the authority to standardise what counted as a COVID infection, or what needed to be measured in assessing how performance varied.Footnote 52 To take just two examples, the Johns Hopkins University website was very widely used, but it collated existing data rather than building its own, and it made no pretence of ranking. On the other hand, the Deep Knowledge website, which did rank countries, issued only a single snapshot in May 2020 and then went quiet.

To compare the varying outcomes between countries required some a priori standardisation of the definitions and practices used to identify COVID in each country. Both the WHO and the leading medical journal The Lancet pleaded for the introduction of common criteria for all countries. But crucial differences persisted. Belgium, for example, was notorious for an expansive definition that counted dying with the virus as dying from it; in the UK, by contrast, the official death figures included only people who died within twenty-eight days of testing positive for coronavirus, and other ways of measuring suggested that the number of relevant deaths was even higher. Worse, once it became clear how often COVID can be present without symptoms, the number of those infected came to be seen simply as a function of how much testing was being carried out, something that varied enormously between and within countries.Footnote 53 Where, as in most countries, priority was given to responding to those falling ill, this meant forgoing the larger-scale testing needed to discover what was happening in the population more generally.

The alternative criterion for judging successful performance that increasingly came to be recommended was excess deaths: the deaths in a given year compared with the average of other years. But this too was not an easy criterion to standardise and involved many uncertainties.Footnote 54 For example, the meaning of the statistic depended on whether it was realistic to hold constant across years the expected deaths from other causes, such as other health conditions, road accidents or crime, all of which can behave anomalously in the midst of a pandemic. In any event, excess deaths were not the usual source of the numbers collated by Johns Hopkins University or the World Bank; nor were they the starting point for the statistics reworked for purposes of explanation and policy-making in the comparative tables and figures published by daily newspapers and other media. These continued to focus on the changing numbers of medically certified deaths and infections, even after it had quickly become clear that the number of deaths did not mean the same thing over time and across different places, and that infection numbers depended on the level of testing.
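The basic arithmetic of excess deaths, and one reason it resists standardisation, can be sketched as follows. The annual death counts are hypothetical, and the simple average over earlier years is only one possible baseline (actual estimates may also adjust for age structure, population change or trends). The point of the sketch is that the result moves with the choice of reference years, for instance whether an unusually severe year, here a hypothetical bad influenza year, is included.

```python
# Illustrative sketch: hypothetical annual all-cause death counts for one
# country, not real data. 2018 stands in for an unusually bad influenza year.
deaths_by_year = {
    2015: 50_200,
    2016: 49_800,
    2017: 51_000,
    2018: 53_500,
    2019: 50_500,
    2020: 58_000,
}


def excess_deaths(observed, baseline_years, history):
    """Observed deaths minus the simple mean of the chosen baseline years."""
    baseline = sum(history[year] for year in baseline_years) / len(baseline_years)
    return observed - baseline


# Same observed year, two plausible-looking baselines.
five_year = excess_deaths(deaths_by_year[2020], [2015, 2016, 2017, 2018, 2019], deaths_by_year)
without_2018 = excess_deaths(deaths_by_year[2020], [2015, 2016, 2017, 2019], deaths_by_year)
print("Excess vs five-year average:", five_year)
print("Excess vs average excluding 2018:", without_2018)
```

With these invented figures, the same year shows 7,000 or 7,625 excess deaths depending on the baseline, before any of the further questions about deaths from other causes raised above are even addressed.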

Deciding who was performing better was supposed to provide clues as to how best to deal with COVID even as the relevant data were constantly changing; making sense of those data, however, depended on reaching common interpretations of the spread of the disease, its mutations, the changing risks it represented and variation in who was most affected. But the impression the indicators gave was that all these matters were in constant flux. This was underlined by the fact that COVID numbers were produced daily via dashboards, rather than as the annual snapshots common with other global social indicators. As a result, countries frequently changed their place in the rankings over short periods. Places such as Italy that fared badly in the so-called first wave of the disease (Volpi and Serravalle, 2020) were then amongst the most successful, at least initially, in handling the threatened second wave. Others, such as Israel, that were stars in the first wave were trapped in escalating efforts at lockdown to deal with the second wave.Footnote 55 With the arrival of the third wave, everything changed again and news reports spoke in terms of emergencies even in what had been considered relatively successful countries such as GermanyFootnote 56 or South Korea.Footnote 57

Consensus on best practice was as much a precondition of the successful use of indicators as an outcome of them. Unpredictability in the way COVID was spreading reinforced doubts about whether experts and politicians really knew the answer. Experts were accused of putting too much faith in their models. There was no lack of prescriptions being put forward by highly qualified scientists.Footnote 58 But debates between scientists (even though such debate is key to scientific progress) often undermined their authority. People found it difficult to treat the latest decrees and accompanying rules as absolute when, only shortly before, different advice had been given and diametrically opposite rules had been in force. The changing advice over the need to wear masks was only the most obvious of these switches. In England and Wales, disagreements between government advisers on the SAGE advisory body and its various subcommittees were kept secret, but individual members still got drawn into public debate. Commentators regularly raised doubts about whether politicians were following ‘the science’ or just selectively using what suited them.Footnote 59 Some criticised governments for listening too much to experts; others complained that governments did not listen to them enough.Footnote 60 Some critics asked why governments had not chosen to shield the old and sick, who were most vulnerable to severe disease and death, rather than curb the able-bodied, who could then have resumed their normal lives. More generally, this is a period in which scientific expertise on matters such as how to respond to climate change is taken desperately seriously by some but harshly questioned by others.

Of course, on a day-to-day basis, indicators played a key role in alerting governments and citizens to changing levels of risk, and got this right at least some of the time. Nor is it the case that nothing has been learnt about how better to respond to this and other pandemics.Footnote 61 Websites used indicators to showcase studies of better-performing countries, with a view to identifying which earlier actions might have left them better prepared and to generalising from their best practices. Taking Vietnam as one of its so-called ‘exemplars’ of success, the Our World in Data website pointed to Vietnam's investment in public health infrastructure, such as emergency operation centres and surveillance systems, and to the lessons it had learnt from SARS, which led to early action ranging from border closures to testing to lockdowns. It noted that:

‘thorough contact tracing can help facilitate a targeted containment strategy. Quarantines based on possible exposure, rather than symptoms only, can reduce asymptomatic and pre-symptomatic transmission. Clear communication is crucial. A clear, consistent, and serious narrative is important throughout the crisis. A strong whole-of-society approach engages multi-sectoral stakeholders in decision-making process and activates cohesive participation of appropriate measures.’Footnote 62

As for the causes of the epidemic's spread, we now know of the heightened risks for care homes and for certain categories of victims, such as health workers, the old, people with higher body mass indexes, the poor and those compelled to work and shop in dangerous conditions. Some Asian countries have shown the importance of reducing the period from the first appearance of symptoms to the isolation of the individual.Footnote 63 There is a growing consensus that best practice includes making tests extensive and affordable, tracing and isolating, imposing social distancing early and keeping the public well informed and onside.Footnote 64 Closing borders, as was done quickly in Australasia, can keep out travellers who might bring infection with them.

And yet some places did badly for other reasons despite following recommended policies, as seen in the failure of stringent policies in FranceFootnote 65 or in the way excess death rates in Peru did not decline despite its long lockdown.Footnote 66 Considerable uncertainty and confusion also persisted about exactly how to implement crucial prophylactic measures such as social distancing, handwashing and the wearing of masks. Although the places in Asia that did best in reducing levels of infection had a tradition of wearing masks,Footnote 67 the worry about introducing this measure elsewhere was that people would become more complacent when wearing them; end up reusing them, which is unhygienic; or use masks sold on the black market or homemade masks, which could be of inferior quality and essentially useless.Footnote 68 Whether masks should be used in schools also remained controversial.Footnote 69 Doubts were sown even about the effectiveness of the lockdowns imposed after the first wave of the virus.Footnote 70

An important impediment to creating supposedly global standards was the way in which the transnational level of policy-making tended here to be subordinated to the national level.Footnote 71 This was not a situation in which governments felt the need to respond to the naming-and-shaming pressure of influential transnational (or even US-sponsored) indicators. The most obvious transnational authority in this case was the WHO. But it had lost prestige through its handling of earlier epidemics (Smallman, 2015). Its efforts to get traction for its warnings and recommendations were not helped by its own hesitation in declaring a pandemic and its equivocation over the benefits of wearing masks.Footnote 72 Its authority was also directly challenged by the US, which alleged that the WHO had shown too much indulgence towards China and threatened to withdraw funding.Footnote 73

Because nation states usually took the lead in policy-making, it was harder to present their standards as having potential global applicability. Some countries, such as the US and Brazil, were even led by politicians who cast doubt on the very problem that was supposed to be measured.Footnote 74 National politicians did sometimes choose to refer to indicators produced by international or otherwise independent organisations so as to gain legitimacy for their policies (Nelken, 2018; Siems and Nelken, 2021). But, just as importantly, some of the makers of indicators themselves sought endorsements from political leaders (see Figure 6).Footnote 75

Figure 6. The Deep Knowledge indicator and political endorsement.

Importantly, the credibility of global social indicators as a guide to ranking responses to epidemics was also weakened by the way in which an earlier leading indicator of which countries were supposedly best prepared to deal with this kind of health emergency so clearly failed in its predictions (see Mahajan, in this issue). Contrary to that indicator's predictions of success for countries such as the US and the UK, actual performance in dealing with COVID did not reflect well on the countries from the ‘Global North’ that usually stand higher in global rankings (and tend to play a major role in drafting them). The failure of this indicator relates in part to its attempt to predict the future rather than describe the present. But it is also a question of the polysemy of a term such as ‘preparedness’ and the difficulty of reducing it to measurable indicators. Much the same applies to the current effort to link success in responding to the epidemic to different levels of ‘state capacity’. Even if the correlation with performance seems almost tautologous, it turns out that this term can be operationalised in too many ways.Footnote 76 On the other hand, even after what we know, or think we know, about the unexpected performances of some countries in dealing with COVID, official indicators such as the EU's INFORM-RISK INDEX still stressed that it was poorer countries that were most at risk of collapsing health systems and consequent humanitarian crises.Footnote 77

Although some progress has therefore been made in establishing common ways of measuring the COVID epidemic and taking steps to deal with it, this is as much a consequence of policy experimentation as of the application of shared protocols. Ultimately, success in getting over the epidemic, or at least in creating a ‘new normal’, will depend on the effective roll-out of vaccines. It is currently unclear how far the US and the UK will recover some of their lost prestige by coming up with an effective vaccine, and whether Russia and China have come up with reliable and cheap alternatives. Most of the countries that did best in dealing with the pandemic do not have the resources to compete in the discovery and production of vaccines. But there is still room for ranking which places offer the fastest and most effective ways of delivering vaccines to their populations.Footnote 78 It is not obvious how far the shock of failing to deal more effectively with this epidemic will lead to larger social changes and help the countries that did less well this time to be better prepared for any new epidemic. Much will depend on how different the next global health challenge turns out to be.

6 Conclusion: comparing success and the success of comparisons

This investigation of the role of comparison and commensuration in the making and use of COVID-19 indicators provides supporting evidence for scattered remarks by other commentators who have also pointed to the problematic role of comparison in responding to the pandemic.Footnote 79 We have seen how, on the one hand, the search for local differences and circumstances was all-important for understanding different patterns in the spread of COVID-19, while, on the other hand, efforts to impose best practices in responding to the virus on a global scale entailed simplifying and overlooking such local differences.Footnote 80 The challenge of deciding what counts as a successful comparison for the purpose of making and using global social indicators thus further complicates the question of identifying what Infantino calls their ‘hazards’ and ‘fallacies’ (Infantino, in this issue).

At the same time, the question remains: What, if anything, is special about COVID indicators? Insofar as it is true that all global social indicators fall ‘between’ comparison and commensuration, why in other cases have they nonetheless succeeded in establishing themselves? Of course, such global social indicators as the Programme for International Student Assessment measures of educational levels, the World Bank's Doing Business rankings of business conditions, Transparency International's claims about corruption levels worldwide or attempts to rank countries by their respect for the rule of law (Merry et al., 2015) are certainly also contestable and contested. But, in the case of these more established measures, contestation itself points to the success that the indicator has achieved in promoting a certain international hierarchy, one that is difficult, if not impossible, to change.

The key to the success of these more established indicators, I have suggested, lies in their ability to subordinate comparison to commensuration: to make comparisons of success displace concerns about successful comparison. Those setting out to rank levels of corruption or adherence to the rule of law certainly do not assume that underlying circumstances and resources are the same in, say, Somalia as they are in Sweden. Indeed, the fact that some countries face greater difficulties in achieving the designated goal actually helps to make their poorer rankings credible and persuasive. In these cases, the differences in local context that distinguish higher-ranked from poorer-performing units come to be understood not as vitiating the point of the comparison, but as helping to make sense of why some do less well. To achieve better rankings, it is they who bear the responsibility for transforming the underlying conditions that help to explain their poor scores. Blameworthiness, or at least inferiority, supposedly attaches to those who do not meet a standard, whatever the reason for such failure.Footnote 81 By contrast, indicators that have not yet been established continue to be caught in the tension between the requirements of successful comparison and the imposition of supposedly consensual comparisons of success.

But why has stabilising through commensuration been more difficult in the case of COVID indicators? Much of the explanation lies in the difficulty of bringing the epidemic under control. But there could be other explanations to consider. In terms of what these indicators seek to measure, it could be argued that health indicators should actually be less subject to cultural framing, and therefore easier to commensurate, than indicators of other matters. On the other hand, leading researchers have shown that defining matters of health is also (as much?) a matter of persuasive categorisation (Bowker and Star, 1999). Likewise, standardising the collection of more accepted health indicators is just as problematic when we see what goes on behind the scenes (see e.g. Gerrets, 2015; Park, 2015). In any case, those who have taken Merry's earlier analysis of global indicators further have explained that more successful indicators are not those that are better at representing ‘the facts’ (see e.g. Urueña, 2015; Lang, 2020). What matters is that those making indicators have the power to create persuasive definitions of given behaviours. In practice, such definitions are often imposed, as when the EU uses its indicators of respect for the rule of law as a criterion for the accession of candidate states, or when the US judges what counts as progress in dealing with human trafficking. Cultural and political hegemony is all.
This was harder to achieve here because, as I have noted, it has generally been nation states rather than international or non-governmental organisations that have taken the lead in monitoring COVID. It is they who have made recommendations about appropriate behaviour, often distinguishing themselves arbitrarily (as in the exact rules about social distancing) from the standards laid down by other countries. Given the heavy involvement of nation states, we are dealing as much with government as with governance.

The problems involved in comparing and standardising did not interfere with all the tasks that indicators serve. It would be asking a lot to expect COVID indicators to succeed for all possible purposes, including predicting, shaping behaviour and legitimating decision-making. And it is hardly surprising that they were successful in some ways but not in others. Indicators as a guide to future developments can never deal with all uncertainties (Esposito and Stark, 2019; King, 2020). Insofar as these indicators were more concerned with regulating individuals’ behaviour than established indicators that focus on levels of rule of law, business-friendliness and the like, it seems that the majority of people did their best to comply with the rules, even though protocols changed so often. Going further, even the regular challenging of COVID indicators, as seen in much commentary and protest, would count as success for those who see the role of indicators as increasing public debate over the meaning of contested values (Rosga and Satterthwaite, 2009).Footnote 82

As this suggests, a great deal turns on what we call success. Even if the persistence of the pandemic casts a pall over indicators, it is important not to identify their success with the results of the project of control that they were being used to measure. Even if resort to indicators did not defeat the pandemic, their task was precisely to chart whether or not the epidemic was under control.Footnote 83 The challenge that COVID indicators faced is common to all attempts to use metrics: how to both measure and change things at the same time (Goodhart, 1975; Muller, 2018). Countries such as Belgium were willing to use more expansive definitions of deaths from COVID than others, even if it put them in a worse light. But places such as RussiaFootnote 84 and ChinaFootnote 85 later acknowledged the need for upward revisions of what had been their initial claims about the rate of infections and deaths.

On the other hand, to the extent that indicators have had more success in helping to reduce the epidemic, this could still be considered a partial failure insofar as it distracted attention from the side effects and uneven consequences of standardised responses for different groups within a given society and amongst different societies. One-size-fits-all protocols, as always, inevitably led to different outcomes and negative side effects,Footnote 86 as has been documented most obviously regarding the effects of lockdown on poor workers and on poorer countries (see e.g. Joffe, 2020; Caduff, 2020; Harrington, in this issue). It would be superficial to judge success here, just as with other global social indicators, only in terms of whether they persuade governments to change track (Kelley and Simmons, 2020). We must also pay heed to those critics who point to wider implications, such as the way in which these indicators provided too ready an opportunity to extend emergency powers and to justify patterns of surveillance that will not be so easily withdrawn afterwards.Footnote 87

Conflicts of Interest

None

Acknowledgements

None