1 Introduction

In this paper, we study the optimality of Malliavin-type remainders in the asymptotic density approximation formula for Beurling generalized integers, a problem that has its roots in a long-standing open question of Bateman and Diamond [2, 13B, p. 199]. Let $\mathcal {P}:\:p_{1}\leq p_{2}\leq \dots $ be a Beurling generalized prime system, namely, an unbounded and nondecreasing sequence of positive real numbers satisfying $p_{1}>1$, and let $\mathcal {N}$ be its associated system of generalized integers, that is, the multiplicative semigroup generated by 1 and $\mathcal {P}$ [2, 3, 10]. We consider the functions $\pi (x)$ and $N(x)$ counting the number of generalized primes and integers, respectively, not exceeding $x$.
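As an illustration of these definitions, the following sketch counts generalized primes and integers for a finite, sorted list of generalized primes. The function names `count_primes` and `count_integers` are hypothetical, and generalized integers are counted with multiplicity (once for each multiset of generalized primes); for the rational primes this reduces to the usual $\pi(x)$ and $\lfloor x\rfloor$.

```python
from bisect import bisect_right


def count_primes(primes, x):
    """pi(x): number of generalized primes p_j <= x (primes must be sorted)."""
    return bisect_right(primes, x)


def count_integers(primes, x):
    """N(x): number of products of generalized primes (with repetition) <= x.

    Each multiset of primes is counted once, so coinciding products are
    counted with multiplicity. The empty product 1 is included.
    """
    if x < 1:
        return 0

    def count_from(start, product):
        total = 1  # the current product itself
        for j in range(start, len(primes)):
            nxt = product * primes[j]
            if nxt > x:
                break  # primes are sorted, so later ones overshoot as well
            total += count_from(j, nxt)
        return total

    return count_from(0, 1)


rational_primes = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
print(count_primes(rational_primes, 30), count_integers(rational_primes, 30))
```

Passing a list with repeated or non-integer entries (for example `[2, 2, 3]`) gives a toy Beurling system in which unique factorization of the underlying real numbers fails, which is why the count is taken over multisets.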

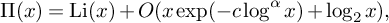

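Malliavin discovered [14] that the two asymptotic relations

$$ \begin{align} \pi(x) = \operatorname{\mathrm{Li}}(x) + O\bigl(x\exp(-c(\log x)^{\alpha})\bigr) \tag{P$_{\alpha}$} \end{align} $$

and

$$ \begin{align} N(x) = \rho x + O\bigl(x\exp(-c'(\log x)^{\beta})\bigr) \qquad (\rho>0), \tag{N$_{\beta}$} \end{align} $$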

for some $c>0$ and $c'>0$, are closely related to each other in the sense that if (N$_{\beta}$) holds for a given $0<\beta \leq 1$, then (P$_{\alpha ^{\ast }}$) is satisfied for some $\alpha ^\ast $, and, vice versa, the relation (P$_{\alpha}$) for a given $0<\alpha \leq 1$ ensures that (N$_{\beta ^{\ast }}$) holds for a certain $\beta ^{\ast }$. A natural question is then what the optimal error terms of Malliavin-type are. Writing $\alpha ^{\ast }(\beta )$ and $\beta ^{\ast }(\alpha )$ for the best possible$^{1}$ exponents in these implications, we have:

Problem 1.1. Given any $\alpha ,\beta \in (0,1]$, find the best exponents $\alpha ^{\ast }(\beta )$ and $\beta ^{\ast }(\alpha )$.

So far, there are only two instances where a solution to Problem 1.1 is known. In 2006, Diamond, Montgomery and Vorhauer [9] (cf. [16]) demonstrated that $\alpha ^{\ast }(1) = 1/2$, while in our recent work [7] we have shown that $\beta ^{\ast }(1)=1/2$. The former result proves that the de la Vallée Poussin remainder is best possible in Landau’s classical prime number theorem (PNT) [13], whereas the latter one yields the optimality of a theorem of Hilberdink and Lapidus [12].

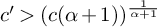

We shall solve here Problem 1.1 for any value $\alpha \in (0,1]$. Improving upon Malliavin’s results, Diamond [8] (cf. [12]) established the lower bound $\beta ^{\ast }(\alpha )\geq \alpha /(1+\alpha )$. We will prove the reverse inequality:

Theorem 1.2. We have $\beta ^{\ast }(\alpha )=\alpha /(1+\alpha )$ for any $\alpha \in (0,1]$.

Our main result actually supplies more accurate information, and, in particular, it exhibits the best possible value of the constant $c'$ in (N$_{\beta}$). In order to explain it, let us first state Diamond’s result in a refined form, showing the explicit dependency of the constant $c'$ on $c$ and $\alpha $. We write $\log _{k} x$ for the $k$ times iterated logarithm. The Riemann prime counting function of the generalized number system naturally occurs in our considerations;$^{2}$ as in classical number theory, it is defined as $\Pi (x)=\sum _{n=1}^{\infty }\pi (x^{1/n})/n$. We also mention that, for the sake of convenience, we choose to define the logarithmic integral as

$$ \begin{align} \operatorname{\mathrm{Li}} (x):= \int_{1}^{x} \frac{1-u^{-1}}{\log u} \mathrm{d} u. \tag{1.1} \end{align} $$
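The two functions just introduced can be evaluated numerically. The sketch below is only an illustration with hypothetical function names: it computes $\Pi(x)$ as a sum of $1/n$ over generalized prime powers $p^{n}\le x$ (equivalent to the series above), and approximates $\operatorname{Li}(x)$ with the midpoint rule. Note that the factor $1-u^{-1}$ removes the singularity of $1/\log u$ at $u=1$: the integrand tends to $1$ there.

```python
import math


def riemann_prime_count(primes, x):
    """Pi(x) = sum_{n>=1} pi(x^{1/n})/n, computed over prime powers p^n <= x."""
    total = 0.0
    for p in primes:
        power, n = p, 1
        while power <= x:
            total += 1.0 / n
            power *= p
            n += 1
    return total


def li(x, steps=100_000):
    """Li(x) = int_1^x (1 - 1/u)/log u du, approximated by the midpoint rule.

    The midpoints never hit u = 1, where the integrand has the limit 1.
    """
    h = (x - 1.0) / steps
    total = 0.0
    for i in range(steps):
        u = 1.0 + (i + 0.5) * h
        total += (1.0 - 1.0 / u) / math.log(u)
    return total * h


small_primes = [2, 3, 5, 7]
print(riemann_prime_count(small_primes, 10), li(10.0))
```

With this normalization, $\operatorname{Li}(x)=\int_{0}^{\log x}(\mathrm{e}^{t}-1)/t\,\mathrm{d}t$, which differs from the classical logarithmic integral by $\log_{2}x$ plus a constant.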

Theorem 1.3. Suppose there exist constants $\alpha \in (0,1]$ and $c>0$, with the additional requirement $c\leq 1$ when $\alpha =1$, such that

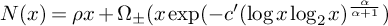

Then, there is a constant $\rho>0$ such that

$$ \begin{align} N(x) = \rho x + O\biggl\{x\exp\biggl(-(c(\alpha+1))^{\frac{1}{\alpha+1}}(\log x\log_{2} x)^{\frac{\alpha}{\alpha+1}}\biggl(1+O\biggl(\frac{\log_{3}x}{\log_{2} x}\biggr)\biggr)\biggr)\biggr\}. \tag{1.3} \end{align} $$

A proof of Theorem 1.3 can be given as in [5, Theorem A.1] (cf. [1]), starting from the identity [10]

$$ \begin{align} \mathrm{d} N = \exp^{\ast}(\mathrm{d} \Pi) = \sum_{n=0}^{\infty}\frac{1}{n!}(\mathrm{d}\Pi)^{\ast n} \tag{1.4} \end{align} $$

and using a version of the Dirichlet hyperbola method to estimate the convolution powers $(\mathrm{d} \Pi )^{\ast n}$. The current article is devoted to showing the optimality of Theorem 1.3, including the optimality of the constant $c' = (c(\alpha +1))^{1/(\alpha +1)}$ in the asymptotic estimate (1.3), as established by the next theorem. Note that Theorem 1.2 follows at once upon combining Theorem 1.3 and Theorem 1.4.
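To make these constants concrete, here is a small numerical sketch (the function names are illustrative and not taken from the paper). It evaluates the exponent $\alpha/(1+\alpha)$, the constant $c'=(c(\alpha+1))^{1/(\alpha+1)}$, and the leading part $c'(\log x\log_{2}x)^{\alpha/(\alpha+1)}$ of the exponent in (1.3); the factor $1+O(\log_{3}x/\log_{2}x)$ is ignored. For $\alpha=1$ the constant reduces to $\sqrt{2c}$.

```python
import math


def beta_star(alpha):
    """Exponent alpha/(1+alpha) from Theorem 1.2."""
    return alpha / (1.0 + alpha)


def c_prime(alpha, c):
    """Constant c' = (c(alpha+1))^(1/(alpha+1)) appearing in (1.3)."""
    return (c * (alpha + 1.0)) ** (1.0 / (alpha + 1.0))


def leading_exponent(x, alpha, c):
    """c' * (log x * log log x)^(alpha/(alpha+1)); requires x > e."""
    log_x = math.log(x)
    return c_prime(alpha, c) * (log_x * math.log(log_x)) ** beta_star(alpha)


for alpha in (0.25, 0.5, 1.0):
    print(alpha, beta_star(alpha), c_prime(alpha, 1.0),
          leading_exponent(1e12, alpha, 1.0))
```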

Theorem 1.4. Let $\alpha $ and $c$ be constants such that $\alpha \in (0,1]$ and $c>0$, where we additionally require $c\le 1$ if $\alpha =1$. Then there exists a Beurling generalized number system such that

$$ \begin{align} \Pi(x) - \operatorname{\mathrm{Li}}(x) \ll \begin{cases} x\exp(-c(\log x)^{\alpha}) &\mbox{if}\ \alpha<1\ \mbox{or}\ \alpha=1\ \mbox{and}\ c<1, \\ \log_{2}x &\mbox{if}\ \alpha=c=1, \end{cases} \tag{1.5} \end{align} $$

and

$$ \begin{align} N(x) = \rho x + \Omega_{\pm}\biggl\{x\exp\biggl(-(c(\alpha+1))^{\frac{1}{\alpha+1}}(\log x\log_{2} x)^{\frac{\alpha}{\alpha+1}}\biggl(1 + b\frac{\log_{3} x}{\log_{2} x}\biggr)\biggr)\biggr\}, \tag{1.6} \end{align} $$

where $\rho>0$ is the asymptotic density of $N$ and $b$ is some positive constant.$^{3}$

The proof of Theorem 1.4 consists of two main steps. We shall first construct an explicit example of a continuous analog [3, 10] of a number system fulfilling all requirements from Theorem 1.4, and then we will discretize it by means of a probabilistic procedure. The second step will be accomplished in Section 6 with the aid of a recently improved version [6] of the Diamond–Montgomery–Vorhauer–Zhang random prime approximation method [9, 16]. The construction and analysis of the continuous example will be carried out in Sections 2–5.

Our method is in the same spirit as in [5], particularly making extensive use of saddle point analysis. Nevertheless, it is worthwhile to point out that showing Theorem 1.4 requires devising a new example. Even in the case $\alpha =1$, our treatment here delivers novel important information that cannot be reached with the earlier construction. Direct generalizations of the example from [5] are unable to reveal the optimal constant $c'$ in the remainder $O(x\exp (-c' (\log x \log _2 x)^{\alpha /(\alpha +1)}(1+o(1))))$ of equation (1.3). In fact, upon sharpening the technique from [5] when $\alpha =1$, one would only be able to obtain the $\Omega _{\pm }$-estimate with $c'>2\sqrt {c}$, which falls short of the actual optimal value $c'=\sqrt {2c}$ that we establish with our new construction. Furthermore, we deal here with the general case $0<\alpha \leq 1$. There is a notable difference between generalized number systems satisfying equation (1.5) with $\alpha =1$ and those satisfying it with $0<\alpha <1$. In the latter case, the zeta function admits, in general, no meromorphic continuation$^{4}$ beyond the line $\sigma =1$, which a priori renders direct use of complex analysis arguments impossible. We will overcome this difficulty with a truncation idea, where the analyzed continuous number system is approximated by a sequence of continuous number systems having very regular zeta functions in the sense that they are actually analytic on $\mathbb {C}\setminus \{1\}$.

We conclude this introduction by mentioning that determining the best exponent $\alpha ^{\ast }(\beta )$ from Problem 1.1 remains wide open for $0<\beta <1$. Bateman and Diamond have conjectured that $\alpha ^{\ast }(\beta )=\beta /(\beta +1)$. The validity of this conjecture has only been verified [9] for $\beta =1$. It has recently been shown [4] that $\alpha ^{\ast }(\beta )\leq \beta /(\beta +1)$. However, the best-known admissible value [10, Theorem 16.8, p. 187] when $0<\beta <1$ is $\alpha ^{*}\approx \beta /(\beta + 6.91)$, which is still far from the conjectural exponent.

2 Construction of the continuous example

We explain here the setup for the construction of our continuous example, whose analysis shall be the subject of Sections 3–5. Let us first clarify what is meant by a not necessarily discrete generalized number system. In a broader sense [3, 10], a Beurling generalized number system is merely a pair of nondecreasing right continuous functions $(\Pi ,N)$ with $\Pi (1)=0$ and $N(1)=1$, both having support in $[1,\infty )$, and subject to the relation (1.4), where the exponential is taken with respect to the (multiplicative) convolution of measures [10]. Since our hypotheses always guarantee convergence of the Mellin transforms, the latter becomes equivalent to the zeta function identity

$$\begin{align*}\zeta(s) :=\int^{\infty}_{1^{-}} x^{-s}\mathrm{d}N(x)= \exp\left(\int^{\infty}_{1}x^{-s}\mathrm{d}\Pi(x)\right). \end{align*}$$

We define our continuous Beurling system via its Chebyshev function $\psi _{C}$. This uniquely defines $\Pi _{C}$ and $N_{C}$ by means of the relations $\mathrm{d} \Pi _{C}(u) = (1/\log u)\mathrm{d} \psi _{C}(u)$ and $\mathrm{d} N_{C} = \exp ^{\ast }(\mathrm{d} \Pi _{C})$. For $x\ge 1$, set

$$ \begin{align} \psi_{C}(x) = x - 1 - \log x + \sum_{k=0}^{\infty}(R_{k}(x) + S_{k}(x)). \tag{2.1} \end{align} $$

Here, $x-1-\log x = \int _{1}^{x}\log u \mathrm{d} \operatorname {\mathrm {Li}}(u)$ is the main term (cf. equation (1.1)), the terms $R_{k}$ are the deviations which will create a large oscillation in the integers, while the $S_{k}$ are introduced to mitigate the jump discontinuity of $R_{k}$ and make $\psi _{C}$ absolutely continuous. The effect of the terms $S_{k}$ on the asymptotics of $N_{C}$ will be harmless. Concretely, we consider fast growing sequences $(A_{k})_{k}$, $(B_{k})_{k}$, $(C_{k})_{k}$ and $(\tau _{k})_{k}$ with $A_{k}<B_{k}<C_{k}<A_{k+1}$, and define$^{5}$

We require that $\tau _{k}\log A_{k}, \tau _{k}\log B_{k} \in 2\pi \mathbb {Z}$ and define $C_{k}$ as the unique solution of $R_{k}(B_{k}) + (1/2)\bigl (B_{k}-1-\log B_{k} - (C_{k}-1-\log C_{k})\bigr )= 0$. Notice that for $A_{k}\le x \le B_{k}$,

$$ \begin{align*} R_{k}(x) &= \frac{\tau_{k}^{2}}{2(\tau_{k}^{2}+1)}\biggl(\frac{x}{\tau_{k}}\sin(\tau_{k}\log x) + \frac{x}{\tau_{k}^{2}}\cos(\tau_{k} \log x) - \frac{A_{k}}{\tau_{k}^{2}}\biggr) - \frac{\sin(\tau_{k}\log x)}{2\tau_{k}}, \\ R_{k}(B_{k}) &= \frac{B_{k}-A_{k}}{2(\tau_{k}^{2}+1)}> 0, \end{align*} $$

so the definition of $C_{k}$ makes sense (i.e., $C_{k}>B_{k}$). We will also set $A_{k}=\sqrt {B_{k}}$ and

then

With these definitions in place, we have that $\psi _{C}$ is absolutely continuous, nondecreasing, and satisfies $\psi _{C}(x) = x + O\bigl (x\exp (-c(\log x)^{\alpha })\bigr )$, which implies that$^{6}$ equation (1.5) holds for $\Pi _{C}(x) = \int _{1}^{x}(1/\log u)\mathrm{d} \psi _{C}(u)$. Finally we define a sequence $(x_{k})_{k}$ via the relation

Here, $(\varepsilon _{k})_{k}$ is a bounded sequence which is introduced to control the value of $\tau _{k}\log x_{k}$ mod $2\pi $ (this will be needed later on). It is on the sequence $(x_{k})_{k}$ that we will show the oscillation estimate (1.6).
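The closed form of $R_{k}$ on $[A_{k},B_{k}]$ stated above can be sanity-checked numerically. The sketch below assumes that on this interval $R_{k}(x)=\tfrac12\int_{A_{k}}^{x}(1-u^{-1})\cos(\tau_{k}\log u)\,\mathrm{d}u$, which is what differentiating the displayed closed form suggests. The toy values $\tau=1$, $A=\mathrm{e}^{2\pi}$, $B=A^{2}$ are chosen only so that $\tau\log A,\tau\log B\in 2\pi\mathbb{Z}$; they are far smaller than the sequences used in the construction. The helper names are hypothetical. The last function solves the defining equation of $C_{k}$ by bisection.

```python
import math


def r_closed_form(x, a, tau):
    """Closed form of R_k(x) on [A_k, B_k] (a = A_k, tau = tau_k)."""
    t = tau * math.log(x)
    bracket = x / tau * math.sin(t) + x / tau**2 * math.cos(t) - a / tau**2
    return tau**2 / (2 * (tau**2 + 1)) * bracket - math.sin(t) / (2 * tau)


def r_by_quadrature(x, a, tau, steps=20_000):
    """Simpson's rule for (1/2) int_a^x (1 - 1/u) cos(tau log u) du, with u = e^t."""
    lo, hi = math.log(a), math.log(x)
    h = (hi - lo) / steps  # steps must be even for Simpson's rule

    def g(t):
        return 0.5 * (math.exp(t) - 1.0) * math.cos(tau * t)

    total = g(lo) + g(hi)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3


def solve_c(b, r_b, iterations=200):
    """Bisection for C > b with r_b + (1/2)(b - 1 - log b - (C - 1 - log C)) = 0."""
    target = b - 1 - math.log(b) + 2 * r_b
    lo, hi = b, 2 * (b + 2 * r_b) + 2  # u - 1 - log u is increasing for u > 1
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if mid - 1 - math.log(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


tau = 1.0
A = math.exp(2 * math.pi)
B = A * A
R_B = r_closed_form(B, A, tau)
print(R_B, (B - A) / (2 * (tau**2 + 1)), r_by_quadrature(B, A, tau), solve_c(B, R_B))
```

Since $R_{k}(B_{k})>0$ and $u-1-\log u$ is increasing for $u>1$, the bisection always returns a value larger than $B$, in line with the remark that $C_{k}>B_{k}$.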

We collect all technical requirements of the considered sequences in the following lemma. The rapid growth of the sequence $(B_{k})_{k}$ will be formulated as a general inequality $B_{k+1}>\max \{F(B_{k}), G(k)\}$, for some functions $F$ and $G$. We will not specify here what $F$ and $G$ we require. At each point later on where the rapid growth is used, it will be clear what kind of growth (and what $F$, $G$) is needed.

Lemma 2.1. Let $F$, $G$ be increasing functions. There exist sequences $(B_{k})_{k}$ and $(\varepsilon _{k})_{k}$ such that, with the definitions of $(A_{k})_{k}$, $(C_{k})_{k}$, $(\tau _{k})_{k}$ and $(x_{k})_{k}$ as above, the following properties hold:

(a) $B_{k+1}> \max \{F(B_{k}), G(k)\}$;

(b) $\tau _{k}\log A_{k}\in 2\pi \mathbb {Z}$ and $\tau _{k}\log B_{k} \in 2\pi \mathbb {Z}$;

(c) $\tau _{k}\log x_{k} \in \pi /2 + 2\pi \mathbb {Z}$ when $k$ is even, and $\tau _{k}\log x_{k} \in 3\pi /2 + 2\pi \mathbb {Z}$ when $k$ is odd;

(d) $(\varepsilon _{k})_{k}$ is a bounded sequence.

Proof. We define the sequences inductively. Consider the function $f(u) = u\mathrm {e}^{cu^{\alpha }}$. Let $B_{0}$ be some (large) number with $f(\log B_{0})\in 4\pi \mathbb {Z}$ so that (b) is satisfied with $k=0$. Define $y_{0}$ via $\log B_{0} = (c(\alpha +1))^{\frac {-1}{\alpha +1}}(\log y_{0}\log _{2} y_{0})^{\frac {1}{\alpha +1}}$. We have that $\tau _{0}\log x_{0} - \tau _{0}\log y_{0} \asymp -\varepsilon _{0}\tau _{0}(\log B_{0})^{\alpha }/\log _{2}B_{0}$, if $\varepsilon _{0}$ is bounded, say, so we may pick an $\varepsilon _{0}$ satisfying even $0 \le \varepsilon _{0} \ll \tau _{0}^{-1}(\log B_{0})^{-\alpha }\log _{2}B_{0}$ so that $\tau _{0}\log x_{0} \in \pi /2+2\pi \mathbb {Z}$.

Now suppose that $B_{k}$ and $\varepsilon _{k}$, $0\le k \le K$, are defined. Choose a number $B_{K+1}>\max \{4(C_{K})^{2}, F(B_{K}), G(K)\}$ with $f(\log B_{K+1}) \in 4\pi \mathbb {Z}$, taking care of (a) and (b). As before, one may choose $\varepsilon _{K+1}$ with $0\le \varepsilon _{K+1}\ll \tau _{K+1}^{-1}(\log B_{K+1})^{-\alpha }\log _{2}B_{K+1}$ such that (c) holds. Property (d) is then immediate from these bounds on the $\varepsilon _{k}$.
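The inductive step relies on the fact that admissible values of $B$ exist above any prescribed bound: $f$ is continuous, increasing and unbounded, so $f(u)=4\pi m$ is solvable for every sufficiently large integer $m$. A minimal sketch of that selection follows; the function name `next_admissible_log_b` and the sample parameters are illustrative only, and the routine works with $u=\log B$ because $B$ itself quickly exceeds floating-point range.

```python
import math


def f(u, c, alpha):
    """f(u) = u * exp(c * u**alpha), as in the proof of Lemma 2.1."""
    return u * math.exp(c * u**alpha)


def next_admissible_log_b(log_lower, c, alpha, iterations=200):
    """Smallest u >= log_lower with f(u) in 4*pi*Z, located by bisection.

    Returns (u, m) with f(u) = 4*pi*m up to rounding; the corresponding
    value of B is exp(u).
    """
    m = math.ceil(f(log_lower, c, alpha) / (4 * math.pi))
    target = 4 * math.pi * m
    lo, hi = log_lower, log_lower + 1.0
    while f(hi, c, alpha) < target:  # f is increasing and unbounded
        hi += 1.0
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if f(mid, c, alpha) < target:
            lo = mid
        else:
            hi = mid
    return hi, m


u, m = next_admissible_log_b(10.0, 0.5, 0.5)
print(u, m, f(u, 0.5, 0.5) / (4 * math.pi))
```

In the proof, the lower bound would be the logarithm of $\max \{4(C_{K})^{2}, F(B_{K}), G(K)\}$; here it is simply passed in as `log_lower`.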

In order to deduce the asymptotics of $N_{C}$, we shall analyze its zeta function $\zeta _{C}$ and use an effective Perron formula:

$$ \begin{align} N_{C}(x) = \frac{1}{2\pi\mathrm{i}}\int_{\kappa-\mathrm{i} T}^{\kappa+\mathrm{i} T}x^{s}\zeta_{C}(s)\frac{\mathrm{d} s}{s} + \mbox{ error term}. \tag{2.5} \end{align} $$

Here, $\kappa>1$, the parameter $T>0$ is some large number, and the error term depends on these numbers. The usual strategy is then to push the contour of integration to the left of $\sigma = \operatorname {Re} s =1$; the pole of $\zeta _{C}$ at $s=1$ will give the main term, while lower order terms will arise from the integral over the new contour (whose shape will be dictated by the growth of $\zeta _{C}$). In its current form, this approach is not suited for our problem since it is not clear if our zeta function admits a meromorphic continuation to the left of $\sigma =1$. However, we can remedy this with the following truncation idea.

Consider $x\ge 1$, and let $K$ be such that $x < A_{K+1}$. We denote by $\psi _{C,K}$ the Chebyshev function defined by equation (2.1) but where the summation range in the series is altered to the restricted range $0\le k\le K$. For $x<A_{K+1}$, we have $\psi _{C,K}(x) = \psi _{C}(x)$, and, setting $\mathrm{d} \Pi _{C,K}(u) = (1/\log u) \mathrm{d} \psi _{C,K}(u)$ and $\mathrm{d} N_{C,K}(u) = \exp ^{\ast }(\mathrm{d} \Pi _{C,K}(u))$, we also have that $N_{C,K}(x) = N_{C}(x)$ holds in this range. Hence, for these $x$, the above Perron formula (2.5) remains valid if we replace $\zeta _{C}$ by $\zeta _{C,K}$, the zeta function of $N_{C,K}$, which does admit meromorphic continuation beyond $\sigma =1$.

In the following two sections, we will study the Perron integral in equation (2.5) for $x = x_{K}$ and with $\zeta _{C}$ replaced by $\zeta _{C,K}$. Note that by (a), we may assume that $x_{K} < A_{K+1}$. To asymptotically evaluate this integral, we will use the saddle point method, also known as the method of steepest descent. For an introduction to the saddle point method, we refer to [7, Chapters 5 and 6] or [11, Section 3.6].

In Section 3, we will estimate the contribution from the integral over the steepest paths through the saddle points. This contribution will match the oscillation term in equation (1.6). In Section 4, we will connect these steepest paths to each other and to the vertical line $[\kappa -\mathrm {i} T, \kappa +\mathrm {i} T]$ and determine that the contribution of these connecting pieces to equation (2.5) is of lower order than the contribution from the saddle points. We also estimate the error term in the effective Perron formula in Section 5 and conclude the analysis of the continuous example. Finally, in Section 6 we use probabilistic methods to show the existence of a discrete Beurling system $(\Pi , N)$ that inherits the asymptotics of the continuous system $(\Pi _{C}, N_{C})$.

3 Analysis of the saddle points

First we compute the zeta function $\zeta _{C,K}$. Computing the Mellin transform of $\psi _{C,K}$ gives that

$$\begin{align*}-\frac{\zeta_{C,K}'}{\zeta_{C,K}}(s) = \frac{1}{s-1} - \frac{1}{s} + \sum_{k=0}^{K}\bigl( \eta_{k}(s) + \tilde{\eta}_{k}(s) + \xi_{k}(s) - \eta_{k}(s+1) - \tilde{\eta}_{k}(s+1) - \xi_{k}(s+1)\bigr), \end{align*}$$

where

$$ \begin{align} \eta_{k}(s) = \frac{B_{k}^{1-s} - A_{k}^{1-s}}{4(1+\mathrm{i}\tau_{k} -s)}, \quad \tilde{\eta}_{k}(s) = \frac{B_{k}^{1-s} - A_{k}^{1-s}}{4(1-\mathrm{i}\tau_{k} -s)}, \quad \xi_{k}(s) = \frac{B_{k}^{1-s}-C_{k}^{1-s}}{2(1-s)}, \end{align} $$

and where we used property (b) of the sequences $(A_{k})_{k}$, $(B_{k})_{k}$. Integrating gives

$$\begin{align*}\log \zeta_{C,K}(s) = \log\frac{s}{s-1} + \sum_{k=0}^{K}\int_{s}^{s+1}\bigl(\eta_{k}(z) + \tilde{\eta}_{k}(z) + \xi_{k}(z)\bigr)\mathrm{d} z, \end{align*}$$

the integration constant being $0$ because $\log \zeta _{C,K}(\sigma ) \rightarrow 0$ as $\sigma \rightarrow \infty $. The main term of the Perron integral formula for $N_{C,K}(x_{K})$ becomes

$$\begin{align*}\frac{1}{2\pi\mathrm{i}}\int_{\kappa-\mathrm{i} T}^{\kappa+\mathrm{i} T}\frac{x_{K}^{s}}{s-1}\exp\biggl(\sum_{k=0}^{K}\int_{s}^{s+1}\bigl(\eta_{k}(z) + \tilde{\eta}_{k}(z) + \xi_{k}(z)\bigr)\mathrm{d} z\biggr)\mathrm{d} s. \end{align*}$$
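As a quick consistency check on the integration step (a sketch using only the displayed formulas, with the shorthand $H_{k} = \eta_{k} + \tilde{\eta}_{k} + \xi_{k}$ introduced here for convenience and not part of the original notation), differentiating the expression for $\log\zeta_{C,K}$ recovers the logarithmic derivative:

$$\begin{align*} \frac{\mathrm{d}}{\mathrm{d} s}\biggl(\log\frac{s}{s-1} + \sum_{k=0}^{K}\int_{s}^{s+1}H_{k}(z)\mathrm{d} z\biggr) = \frac{1}{s} - \frac{1}{s-1} + \sum_{k=0}^{K}\bigl(H_{k}(s+1) - H_{k}(s)\bigr) = \frac{\zeta_{C,K}'}{\zeta_{C,K}}(s). \end{align*}$$

In particular, $\zeta_{C,K}(s)/s = (s-1)^{-1}\exp\bigl(\sum_{k=0}^{K}\int_{s}^{s+1}H_{k}(z)\mathrm{d} z\bigr)$, which is exactly the factor multiplying $x_{K}^{s}$ in the main term above.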

The idea of the saddle point method is to estimate an integral of the form $\int _{\Gamma }\mathrm{e}^{f(s)}g(s)\mathrm{d} s$, with f and g analytic, by shifting the contour $\Gamma $ to a contour which passes through the saddle points of f via the paths of steepest descent. Since the main contribution in the Perron integral will come from $x_{K}^{s}\exp (\int _{s}^{\infty }\eta _{K}(z)\mathrm{d} z)$, we will apply the method with

$$ \begin{align} f(s) &= f_{K}(s) = s\log x_{K} + \int_{s}^{\infty}\eta_{K}(z)\mathrm{d} z, \\ g(s) &= g_{K}(s) = \frac{1}{s-1}\exp\biggl(\sum_{k=0}^{K}\int_{s}^{s+1}\bigl(\eta_{k}(z) + \tilde{\eta}_{k}(z) + \xi_{k}(z)\bigr)\mathrm{d} z - \int_{s}^{\infty}\eta_{K}(z)\mathrm{d} z\biggr). \end{align} $$

Note also that by writing $\int _{s}^{\infty }\eta _{K}(z)\mathrm{d} z$ as a Mellin transform, we obtain the alternative representation

$$ \begin{align} \int_{s}^{\infty}\eta_{K}(z)\mathrm{d} z = \frac{1}{4}\int_{A_{K}}^{B_{K}}x^{-s}\mathrm{e}^{\mathrm{i}\tau_{K}\log x}\frac{1}{\log x}\mathrm{d} x = \frac{1}{4}\int_{1/2}^{1}\frac{B_{K}^{(1+\mathrm{i}\tau_{K} - s)u}}{u}\mathrm{d} u, \end{align} $$

as we have set $A_{K} = \sqrt {B_{K}}$. In the rest of this section, we will mostly work with $f_{K}$, and we will drop the subscripts K where there is no risk of confusion.
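For the reader's convenience, here is a sketch of how the representation (3.4) can be obtained. It assumes that property (b) is used in the form $A_{K}^{\mathrm{i}\tau_{K}} = B_{K}^{\mathrm{i}\tau_{K}} = 1$ (the property itself is not restated in this section), and that the integral from $s$ to $\infty$ is taken along a horizontal ray. Under this assumption, $\eta_{K}$ is itself a Mellin-type integral, and interchanging the order of integration gives

$$\begin{align*} \eta_{K}(z) &= \frac{1}{4}\int_{A_{K}}^{B_{K}}x^{-z}\mathrm{e}^{\mathrm{i}\tau_{K}\log x}\mathrm{d} x, \\ \int_{s}^{\infty}\eta_{K}(z)\mathrm{d} z &= \frac{1}{4}\int_{A_{K}}^{B_{K}}\mathrm{e}^{\mathrm{i}\tau_{K}\log x}\biggl(\int_{s}^{\infty}x^{-z}\mathrm{d} z\biggr)\mathrm{d} x = \frac{1}{4}\int_{A_{K}}^{B_{K}}x^{-s}\mathrm{e}^{\mathrm{i}\tau_{K}\log x}\frac{\mathrm{d} x}{\log x}. \end{align*}$$

The substitution $x = B_{K}^{u}$, for which $\mathrm{d} x = B_{K}^{u}(\log B_{K})\mathrm{d} u$ and $\log x = u\log B_{K}$, maps $[A_{K}, B_{K}] = [B_{K}^{1/2}, B_{K}]$ onto $u\in[1/2,1]$ and turns the last integral into $\frac{1}{4}\int_{1/2}^{1}B_{K}^{(1+\mathrm{i}\tau_{K}-s)u}\,\mathrm{d} u/u$.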

3.1 The saddle points

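We will now compute the saddle points of f, which are solutions of the equation

$$ \begin{align} f'(s) = \log x - \frac{B^{1-s} - B^{(1-s)/2}}{4(1+\mathrm{i}\tau - s)} = 0. \end{align} $$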

For integers m, set $t^{\pm }_{m} = \tau + (2\pi m \pm \pi /2)/\log B$, and let $V_{m}$ be the rectangle with vertices

$$\begin{align*}1-\frac{\frac{\alpha}{2}\log_{2} B}{\log B} + \mathrm{i} t^{\pm}_{m}, \quad \frac{1}{2} + \mathrm{i} t^{\pm}_{m}. \end{align*}$$

Lemma 3.1. Suppose that $\left \lvert m \right \rvert < \log _{2} B$. Then $f'$ has a unique simple zero $s_{m}$ in the interior of $V_{m}$.

Proof. We apply the argument principle. Note that from equation (2.4) it follows that

$$ \begin{align*} f'\biggl(\frac{1}{2} + \mathrm{i} t^{-}_{m}\biggr) &= -\frac{\mathrm{i}}{2}B^{1/2}\bigl(1+o(1)\bigr), & f'\biggl(1-\frac{\frac{\alpha}{2}\log_{2} B}{\log B} + \mathrm{i} t^{-}_{m}\biggr) &=\log x\bigl(1+o(1)\bigr), \\ f'\biggl(1-\frac{\frac{\alpha}{2}\log_{2} B}{\log B} + \mathrm{i} t^{+}_{m}\biggr) &=\log x\bigl(1+o(1)\bigr), & f'\biggl(\frac{1}{2} + \mathrm{i} t^{+}_{m}\biggr) &= \frac{\mathrm{i}}{2}B^{1/2}\bigl(1+o(1)\bigr). \end{align*} $$

On the lower horizontal side of $V_{m}$, we have

$$\begin{align*}\operatorname{Im} f'(\sigma+\mathrm{i} t^{-}_{m}) = -\frac{B^{1-\sigma}/4}{(1-\sigma)^{2}+(\tau-t_{m}^{-})^{2}}\biggl\{ \biggl(1-\frac{\sqrt{2}}{2}B^{\frac{\sigma-1}{2}}\biggr)(1-\sigma) + \frac{\sqrt{2}}{2}B^{\frac{\sigma-1}{2}}(\tau-t_{m}^{-})\biggr\} < 0, \end{align*}$$

as the factor inside the curly brackets is positive in the considered ranges for $\sigma $ and m. Similarly, we have $\operatorname {Im} f'(\sigma +\mathrm{i} t^{+}_{m})>0$ on the upper horizontal edge of $V_{m}$. On the right vertical edge,

$$\begin{align*}\operatorname{Re} f'\biggl(1-\frac{\frac{\alpha}{2}\log_{2}B}{\log B} +\mathrm{i} t\biggr)>0, \end{align*}$$

and on the left vertical edge,

$$\begin{align*}f'\biggl(\frac{1}{2}+\mathrm{i} t\biggr) = \frac{B^{1/2}}{2}\mathrm{e}^{\mathrm{i}\pi-\mathrm{i}(t-\tau)\log B}\bigl(1+o(1)\bigr). \end{align*}$$

Starting from the lower left vertex of $V_{m}$ and moving in the counterclockwise direction, we see that the argument of $f'$ starts off close to $-\pi /2$, increases to about $0$ on the lower horizontal edge, remains close to $0$ on the right vertical edge, increases to about $\pi /2$ on the upper horizontal edge and finally increases to approximately $3\pi /2$ on the left vertical edge. The argument of $f'$ thus increases by $2\pi $ along the boundary of $V_{m}$, so that $f'$ has exactly one zero, counted with multiplicity, in the interior of $V_{m}$. This proves the lemma.

From now on, we assume that $\left \lvert m \right \rvert < \varepsilon \log _{2} B$ for some small $\varepsilon>0$. (In fact, later on we will further reduce the range to $\left \lvert m \right \rvert \le (\log _{2} B)^{3/4}$.) We denote the unique saddle point in the rectangle $V_{m}$ by $s_{m} = \sigma _{m} + \mathrm{i} t_{m}$. The saddle point equation (3.5) implies that

$$ \begin{align*} \sigma_{m} &= 1-\frac{1}{\log B}\biggl(\log_{2} x + \log 4 - \log\left\lvert 1-B^{(s_{m}-1)/2} \right\rvert - \log\left\lvert \frac{1}{1+\mathrm{i}\tau-s_{m}} \right\rvert \,\biggr), \\ t_{m} &= \tau + \frac{1}{\log B}\biggl(2\pi m + \arg\bigl(1-B^{(s_{m}-1)/2}\bigr) - \arg\bigl(1+\mathrm{i}\tau - s_{m}\bigr)\biggr), \end{align*} $$

with the understanding that the difference of the arguments in the formula for $t_{m}$ lies in $[-\pi /2,\pi /2]$. We set

$$\begin{align*}E_{m} = \log\left\lvert \frac{1}{1+\mathrm{i}\tau - s_{m}} \right\rvert. \end{align*}$$
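The formulas for $\sigma_{m}$ and $t_{m}$ above can be recovered by taking the modulus and the argument in $f'(s_{m}) = 0$. The following sketch uses only the definitions of $f$ and $\eta$ together with $A = \sqrt{B}$, and assumes in addition that $\tau\log B\in 2\pi\mathbb{Z}$, so that $B^{\mathrm{i}\tau} = 1$. Since $f'(s) = \log x - \eta(s)$, a saddle point satisfies

$$\begin{align*} \log x = \frac{B^{1-s_{m}}\bigl(1-B^{(s_{m}-1)/2}\bigr)}{4(1+\mathrm{i}\tau-s_{m})}. \end{align*}$$

Taking logarithms of absolute values gives

$$\begin{align*} \log_{2} x = (1-\sigma_{m})\log B - \log 4 + \log\left\lvert 1-B^{(s_{m}-1)/2} \right\rvert + \log\left\lvert \frac{1}{1+\mathrm{i}\tau-s_{m}} \right\rvert, \end{align*}$$

and solving for $\sigma_{m}$ yields the first formula. The left-hand side $\log x$ is real and positive, so the argument of the right-hand side vanishes modulo $2\pi$. As $\arg B^{1-s_{m}} \equiv -(t_{m}-\tau)\log B$ modulo $2\pi$, this reads

$$\begin{align*} (t_{m}-\tau)\log B = 2\pi m + \arg\bigl(1-B^{(s_{m}-1)/2}\bigr) - \arg\bigl(1+\mathrm{i}\tau-s_{m}\bigr) \end{align*}$$

for some integer, and the normalization of the difference of the arguments identifies this integer with the index $m$ of the rectangle $V_{m}$ containing $s_{m}$.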

Since $s_{m}\in V_{m}$, we have $0\le E_{m}\le \log _{2} B$. Also $\log \left \lvert 1-B^{(s_{m}-1)/2} \right \rvert = O(1)$. This implies that

so that $E_{m} = \log _{2} B - \log _{3}x + O(1)$. Here, we have also used that

the last formula following from equation (2.4). This in turn implies that

where we again used equation (2.4). Combining this with equation (3.5), we get in particular that

$$ \begin{align} \log x = \frac{B^{1-s_{m}}}{4(1+\mathrm{i}\tau-s_{m})}\bigl(1+O\bigl((\log B)^{-\alpha/2}\bigr)\bigr). \end{align} $$

For $t_{m}$, we have that

$$ \begin{align*} \arg(1-B^{(s_{m}-1)/2}) &\ll (\log B)^{-\alpha/2}, \\ \arg(1+\mathrm{i}\tau - s_{m}) &= -\frac{2\pi m}{\alpha\log_{2} B} + O\biggl(\frac{1}{\log_{2} B} + \frac{\left\lvert m \right\rvert }{(\log_{2} B)^{2}} + \frac{\left\lvert m \right\rvert ^{3}}{(\log_{2} B)^{3}}\biggr). \end{align*} $$

We get that

$$ \begin{align} t_{m} = \tau + \frac{1}{\log B}\biggl\{2\pi m\biggl(1+\frac{1}{\alpha\log_{2} B}\biggr) + O\biggl(\frac{1}{\log_{2} B} + \frac{\left\lvert m \right\rvert }{(\log_{2} B)^{2}} + \frac{\left\lvert m \right\rvert ^{3}}{(\log_{2} B)^{3}}\biggr) \biggr\}. \end{align} $$

Also, it is important to notice that $t_{0} = \tau $.

The main contribution to the Perron integral (2.5) will come from the saddle point $s_{0}$; see Subsection 3.3. We will show in Subsection 3.5 that the contribution from the other saddle points $s_{m}$, $m\neq 0$, is of lower order. This will require a finer estimate for $\sigma _{m}$, which is the subject of the following lemma.

Lemma 3.2. There exists a fixed constant $d>0$, independent of K and m, such that for $\left \lvert m \right \rvert \le (\log _{2}B)^{3/4}$, $m\neq 0$,

$$\begin{align*}\sigma_{m} \le \sigma_{0} - \frac{d}{\log B(\log_{2} B)^{2}}. \end{align*}$$

Proof. We use equations (3.6) and (3.8) to get a better estimate for $E_{m}$, which will in turn yield a better estimate for $\sigma _{m}$. We iterate this procedure three times.

The first iteration yields

$$\begin{align*}\sigma_{m} = 1 - \frac{1}{\log B}\biggl\{\log_{2} x - \log_{2} B + \log_{3} B + \log 4 + \log\alpha + O\biggl(\frac{1+\left\lvert m \right\rvert }{\log_{2} B}\biggr)\biggr\}. \end{align*}$$

Write $Y = \log _{2}x - \log _{2}B + \log _{3}B$, and note that $Y \asymp \log _{2}B$. Iterating a second time, we get

$$\begin{align*}\sigma_{m} = 1-\frac{1}{\log B}\biggl\{\log_{2}x - \log_{2}B + \log Y +\log 4 + \frac{\log4+\log\alpha}{Y} + O\biggl(\frac{1+m^{2}}{(\log_{2}B)^{2}}\biggr)\biggr\}. \end{align*}$$

We now set $Y' = \log _{2}x-\log _{2}B + \log Y$ and note again that $Y'\asymp \log _{2}B$. A final iteration gives

$$ \begin{align*} \sigma_{m} = 1 - \frac{1}{\log B}\biggl\{\log_{2}x - \log_{2}B &+ \log Y' + \log4 + \frac{\log 4}{Y'} + \frac{\log4+\log\alpha}{YY'} \\ & -\frac{(\log4)^{2}}{2Y^{\prime2}} +\frac{2\pi^{2}m^{2}}{Y^{\prime2}} - \frac{4\pi^{4}m^{4}}{Y^{\prime4}} + O\biggl(\frac{1+m^{2}}{(\log_{2}B)^{3}}\biggr)\biggr\}. \end{align*} $$

The lemma now follows from comparing the above formula in the case $m=0$ with the case $m\neq 0$.
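The comparison in the last step of the proof of Lemma 3.2 can be spelled out as follows; this is a sketch based only on the final formula for $\sigma_{m}$ and on $Y'\asymp\log_{2}B$, noting that $Y$ and $Y'$ do not depend on m. Subtracting the formula for $m\neq0$ from the one for $m=0$, all m-independent terms cancel and

$$\begin{align*} \sigma_{0} - \sigma_{m} = \frac{1}{\log B}\biggl\{\frac{2\pi^{2}m^{2}}{Y^{\prime2}} - \frac{4\pi^{4}m^{4}}{Y^{\prime4}} + O\biggl(\frac{m^{2}}{(\log_{2}B)^{3}}\biggr)\biggr\} = \frac{2\pi^{2}m^{2}}{Y^{\prime2}\log B}\biggl\{1 - \frac{2\pi^{2}m^{2}}{Y^{\prime2}} + O\biggl(\frac{1}{\log_{2}B}\biggr)\biggr\}. \end{align*}$$

For $1\le\left\lvert m \right\rvert\le(\log_{2}B)^{3/4}$ one has $m^{2}/Y^{\prime2}\ll(\log_{2}B)^{-1/2}$, so the factor in curly brackets on the right is $1+o(1)$. Using $m^{2}\ge1$ and $Y'\asymp\log_{2}B$ once more gives $\sigma_{0}-\sigma_{m}\ge d/\bigl(\log B(\log_{2}B)^{2}\bigr)$ for a suitable constant $d>0$ and all sufficiently large B.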

Near the saddle points, we will approximate f and $f'$ by their Taylor polynomials.

Lemma 3.3. There are holomorphic functions $\lambda _{m}$ and $\tilde {\lambda }_{m}$ such that

$$ \begin{align*} f(s) &= f(s_{m}) + \frac{f''(s_{m})}{2}(s-s_{m})^{2}(1+\lambda_{m}(s)), \\ f'(s) &= f''(s_{m})(s-s_{m})(1+\tilde{\lambda}_{m}(s)), \end{align*} $$

and with the property that for each $\varepsilon>0$, there exists a $\delta>0$, independent of K and m, such that

Proof. We have

$$\begin{align*}f''(s) = (\log B)\frac{B^{1-s} - \frac{1}{2} B^{(1-s)/2}}{4(1+\mathrm{i}\tau - s)} - \frac{B^{1-s} - B^{(1-s)/2}}{4(1+\mathrm{i}\tau-s)^{2}}, \quad \left\lvert f''(s_{m}) \right\rvert \asymp \frac{(\log B)^{\alpha}(\log B)^{2}}{\log_{2}B}, \end{align*}$$

where we have used equation (3.6), and

$$\begin{align*}f'''(s) = -(\log B)^{2}\frac{B^{1-s} - \frac{1}{4}B^{(1-s)/2}}{4(1+\mathrm{i}\tau-s)} + (\log B)\frac{B^{1-s}-\frac{1}{2}B^{(1-s)/2}}{2(1+\mathrm{i}\tau-s)^{2}} - \frac{B^{1-s}-B^{(1-s)/2}}{2(1+\mathrm{i}\tau-s)^{3}}. \end{align*}$$

If $\left \lvert s-s_{m} \right \rvert \ll 1/\log B$, then

$$\begin{align*}\left\lvert f'''(s) \right\rvert \ll \frac{(\log B)^{\alpha}(\log B)^{3}}{\log_{2}B}. \end{align*}$$

It follows that

$$\begin{align*}\left\lvert \frac{f'''(s)}{f''(s_{m})}(s-s_{m}) \right\rvert < \varepsilon, \end{align*}$$

if $\left \lvert s-s_{m} \right \rvert < \delta /\log B$, for sufficiently small $\delta $. The lemma now follows from Taylor’s formula.
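The appeal to Taylor's formula at the end of the proof of Lemma 3.3 can be made explicit as follows. This is a sketch rather than the authors' argument: it writes $M$ for the supremum of $\left\lvert f''' \right\rvert$ on the disc $\left\lvert z-s_{m} \right\rvert<\delta/\log B$ (a symbol introduced here), and integrates along the straight segment from $s_{m}$ to $s$. Since $f'(s_{m})=0$, Taylor's formula with integral remainder gives

$$\begin{align*} f'(s) &= f''(s_{m})(s-s_{m}) + \int_{s_{m}}^{s}(s-z)f'''(z)\mathrm{d} z, \\ f(s) &= f(s_{m}) + \frac{f''(s_{m})}{2}(s-s_{m})^{2} + \frac{1}{2}\int_{s_{m}}^{s}(s-z)^{2}f'''(z)\mathrm{d} z, \end{align*}$$

so that one may take

$$\begin{align*} \tilde{\lambda}_{m}(s) = \frac{1}{f''(s_{m})(s-s_{m})}\int_{s_{m}}^{s}(s-z)f'''(z)\mathrm{d} z, \qquad \lambda_{m}(s) = \frac{1}{f''(s_{m})(s-s_{m})^{2}}\int_{s_{m}}^{s}(s-z)^{2}f'''(z)\mathrm{d} z. \end{align*}$$

Estimating the integrals trivially yields $\lvert\tilde{\lambda}_{m}(s)\rvert\le M\left\lvert s-s_{m} \right\rvert/(2\left\lvert f''(s_{m}) \right\rvert)$ and $\left\lvert \lambda_{m}(s) \right\rvert\le M\left\lvert s-s_{m} \right\rvert/(3\left\lvert f''(s_{m}) \right\rvert)$. Both are $\ll\delta$ by the bounds for $f''(s_{m})$ and $f'''$ in the proof, hence smaller than $\varepsilon$ once $\delta$ is small enough.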

3.2 The steepest path through $s_{0}$

The equation for the path of steepest descent through $s_{0}$ is

$$\begin{align*}\operatorname{Im} f(s) = \operatorname{Im} f(s_{0}). \end{align*}$$

Using the formula (3.4) for $\int _{s}^{\infty }\eta (z)\mathrm{d} z$, we get the equation

$$\begin{align*}t\log x - \frac{1}{4}\int_{1/2}^{1}B^{(1-\sigma)u}\sin\bigl((t-\tau)(\log B) u\bigr)\frac{\mathrm{d} u}{u} = \tau\log x. \end{align*}$$

Setting $\theta =(t-\tau )\log B$, this is equivalent to

$$ \begin{align} \theta\frac{\log x}{\log B} = \frac{1}{4}\int_{1/2}^{1}B^{(1-\sigma)u}\sin(\theta u)\frac{\mathrm{d} u}{u}. \end{align} $$

Note that, as t varies between $t_{0}^{-}$ and $t_{0}^{+}$, $\theta $ varies between $-\pi /2$ and $\pi /2$. This equation has every point of the line $\theta =0$ as a solution. However, one sees that the line $\theta =0$ is the path of steepest ascent since $\operatorname {Re} f(s) \ge \operatorname {Re} f(s_{0})$ there. We now show the existence of a different curve through $s_{0}$ of which each point is a solution of equation (3.9). This is then necessarily the path of steepest descent. For each fixed $\theta \in [-\pi /2, \pi /2] \setminus \{0\}$, equation (3.9) has a unique solution $\sigma =\sigma _{\theta }$ since the right-hand side is a continuous and monotone function of $\sigma $, with range $\mathbb {R}_{\gtrless 0}$, if $\theta \gtrless 0$. This shows the existence of the path of steepest descent $\Gamma _{0}$ through $s_{0}$. This path connects the lines $\theta =-\pi /2$ and $\theta =\pi /2$.
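The monotonicity claim for the right-hand side of equation (3.9) can be verified directly. In the following sketch, $R_{\theta}(\sigma)$ is a name introduced here for that right-hand side. For $0<\theta\le\pi/2$ and $u\in[1/2,1]$ we have $\sin(\theta u)>0$, so

$$\begin{align*} R_{\theta}(\sigma) = \frac{1}{4}\int_{1/2}^{1}B^{(1-\sigma)u}\sin(\theta u)\frac{\mathrm{d} u}{u} > 0, \qquad \frac{\partial R_{\theta}}{\partial\sigma}(\sigma) = -\frac{\log B}{4}\int_{1/2}^{1}B^{(1-\sigma)u}\sin(\theta u)\mathrm{d} u < 0. \end{align*}$$

Moreover, $R_{\theta}(\sigma)\to0$ as $\sigma\to\infty$ and $R_{\theta}(\sigma)\to\infty$ as $\sigma\to-\infty$, so $R_{\theta}$ is a decreasing bijection from $\mathbb{R}$ onto $(0,\infty)$ and takes the positive value $\theta\log x/\log B$ exactly once. Both sides of equation (3.9) are odd in $\theta$, which gives the case $-\pi/2\le\theta<0$.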

One can readily see that

Integrating by parts, we see that

$$ \begin{align*} \frac{1}{4}\int_{1/2}^{1}B^{(1-\sigma_{\theta})u}\sin(\theta u)\frac{\mathrm{d} u}{u} &= \frac{1}{4}\sin\theta \frac{B^{1-\sigma_{\theta}}}{(1-\sigma_{\theta})\log B}\bigl(1+O\bigl((\log B)^{-\alpha/2}\bigr) + O\bigl((\log_{2} B)^{-1}\bigr)\bigr) \\ &=\frac{\sin\theta}{4\log B}\frac{B^{1-\sigma_{0}}}{1-\sigma_{0}}\mathrm{e}^{a_{\theta}}\bigl(1+O\bigl((\log_{2} B)^{-1}\bigr)\bigr) \\ &=\sin\theta\frac{\log x}{\log B}\mathrm{e}^{a_{\theta}}\bigl(1+O\bigl((\log_{2} B)^{-1}\bigr)\bigr), \end{align*} $$

where we used equation (3.7) in the last line. Equation (3.9) then implies that

Let $\gamma $ now be a unit speed parametrization of this path of steepest descent:

The fact that $\Gamma _{0}$ is the path of steepest descent implies that for $y<0$, $\gamma '(y)$ is a positive multiple of $\overline {f'}(\gamma (y))$, while for $y>0$, $\gamma '(y)$ is a negative multiple of $\overline {f'}(\gamma (y))$. We now show that the argument of the tangent vector $\gamma '(y)$ is sufficiently close to $\pi /2$.

Lemma 3.4. For $y\in [y^{-}, y^{+}]$, $\left \lvert \arg \bigl (\gamma '(y)\mathrm{e}^{-\mathrm{i}\pi /2}\bigr ) \right \rvert < \pi /5$.

Proof. We consider two cases: the case where s is sufficiently close to $s_{0}$ so that we can apply Lemma 3.3 to estimate the argument of $\overline {f'}$ and the remaining case, where we will estimate this argument via the definition of f.

We apply Lemma 3.3 with $\varepsilon = 1/5$ to find a $\delta>0$ such that for $\left \lvert s-s_{0} \right \rvert < \delta /\log B$,

Set $s-s_{0} = r\mathrm{e}^{\mathrm{i}\phi }$ with $r<\delta /\log B$ and $-\pi < \phi \le \pi $. Using that $f''(s_{0})$ is real and positive, we have

$$ \begin{align*} \operatorname{Re} h(s) &= \frac{f''(s_{0})}{2}r^{2}\bigl((1+\operatorname{Re}\lambda_{0}(s))\cos2\phi - (\operatorname{Im}\lambda_{0}(s))\sin2\phi\bigr), \\ \operatorname{Im} h(s) &= \frac{f''(s_{0})}{2}r^{2}\bigl((1+\operatorname{Re}\lambda_{0}(s))\sin2\phi + (\operatorname{Im}\lambda_{0}(s))\cos2\phi\bigr). \end{align*} $$

Suppose $s\in \Gamma _{0}\setminus \{s_{0}\}$ with $\left \lvert s-s_{0} \right \rvert < \delta /\log B$. Then $\operatorname {Re} h(s)<0$ and $\operatorname {Im} h(s)=0$. The condition $\operatorname {Re} h(s) < 0$ implies that $\phi \in (-4\pi /5, -\pi /5) \cup (\pi /5, 4\pi /5)$ say, as $\left \lvert \lambda _{0}(s) \right \rvert < 1/5$. In combination with $\operatorname {Im} h(s) = 0$, this implies that $\phi \in (-3\pi /5,-2\pi /5) \cup (2\pi /5, 3\pi /5)$ whenever $s\in \Gamma _{0}\setminus \{s_{0}\}$, $\left \lvert s-s_{0} \right \rvert < \delta /\log B$. Again by Lemma 3.3,

It follows that $\left \lvert \arg \bigl (\gamma '(y)\mathrm{e}^{-\mathrm{i}\pi /2}\bigr ) \right \rvert < \pi /5$ when $\left \lvert \gamma (y)-s_{0} \right \rvert < \delta /\log B$.

It remains to treat the case $\left \lvert \gamma (y)-s_{0} \right \rvert \ge \delta /\log B$. For these points, we have that $\delta /2 \le \left \lvert \theta \right \rvert \le \pi /2$, where we used the notation $\theta = (\operatorname {Im} \gamma (y) -\tau )\log B$ as before. Set $\gamma (y) = s = \sigma +\mathrm{i} t$ with $\sigma =\sigma _{0} - a_{\theta }/\log B$. Recalling that $\tau \log B\in 4\pi \mathbb {Z}$, we obtain the following explicit expression for $\overline {f'}$:

$$ \begin{align*} \overline{f'}(s)& = \log x - \frac{1/4}{(1-\sigma)^{2}+(t-\tau)^{2}}\biggl\{ B^{1-\sigma}\biggl(\biggl((1-\sigma)\cos\theta + \frac{\theta\sin\theta}{\log B}\biggr) + \mathrm{i}\biggl((1-\sigma)\sin\theta - \frac{\theta\cos\theta}{\log B}\biggr)\biggr) \\[5pt] &\quad-B^{(1-\sigma)/2}\biggl(\biggl((1-\sigma)\cos(\theta/2) + \frac{\theta\sin(\theta/2)}{\log B}\biggr) + \mathrm{i}\biggl((1-\sigma)\sin(\theta/2) - \frac{\theta\cos(\theta/2)}{\log B}\biggr)\biggr)\biggr\}. \end{align*} $$

Using equations (3.7) and (3.10), we see that

This implies

The last inequality follows from the fact that $\left \lvert 1/\theta -\cot \theta \right \rvert \le 2/\pi $ for $\theta \in [-\pi /2, \pi /2]$ and that $\arctan (2/\pi ) \approx 0.18\pi < \pi /5$.
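The two elementary facts used in the last step can be checked as follows; the function name $G$ is introduced here only for this verification. Put $G(\theta) = 1/\theta - \cot\theta$ for $0<\left\lvert \theta \right\rvert\le\pi/2$. Then $G$ is odd, $G(\theta) = \theta/3 + O(\theta^{3})\to0$ as $\theta\to0$, and

$$\begin{align*} G'(\theta) = \frac{1}{\sin^{2}\theta} - \frac{1}{\theta^{2}} > 0 \quad\text{for } 0<\left\lvert \theta \right\rvert\le\frac{\pi}{2}, \end{align*}$$

because $\left\lvert \sin\theta \right\rvert<\left\lvert \theta \right\rvert$. Hence $G$ is increasing and

$$\begin{align*} \left\lvert G(\theta) \right\rvert \le G\Bigl(\frac{\pi}{2}\Bigr) = \frac{2}{\pi} - \cot\frac{\pi}{2} = \frac{2}{\pi}, \end{align*}$$

with equality only at $\theta=\pm\pi/2$. Numerically, $2/\pi\approx0.6366$ and $\arctan(2/\pi)\approx0.567\approx0.18\pi$, which is smaller than $\pi/5\approx0.628$.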

3.3 The contribution from $s_{0}$

We will now estimate the contribution from $s_{0}$, by which we mean

$$\begin{align*}\frac{1}{\pi}\operatorname{Im}\int_{\Gamma_{0}}\mathrm{e}^{f(s)}g(s)\mathrm{d} s, \end{align*}$$

where f and g are given by equations (3.2) and (3.3), respectively. We have combined the two pieces in the upper and lower half plane $\int _{\Gamma _{0}}$ and $-\int _{\overline {\Gamma _{0}}}$ into one integral using $\zeta _{C}(\overline {s}) = \overline {\zeta _{C}(s)}$. To estimate this integral, we will use the following simple lemma (see, e.g., [5, Lemma 3.3]).

Lemma 3.5. Let $a<b$, and suppose that $F: [a,b] \to \mathbb {C}$ is integrable. If there exist $\theta _{0}$ and $\omega $ with $0\le \omega < \pi /2$ such that $\left \lvert \arg (F\mathrm {e}^{-\mathrm {i}\theta _{0}}) \right \rvert \le \omega $, then

$$\begin{align*}\int_{a}^{b}F(u)\mathrm{d} u = \rho\mathrm{e}^{\mathrm{i}(\theta_{0}+\varphi)} \end{align*}$$

for some real numbers $\rho $ and $\varphi $ satisfying

$$\begin{align*}\rho \ge (\cos \omega)\int_{a}^{b}\left\lvert F(u) \right\rvert \mathrm{d} u \quad \mbox{and} \quad \left\lvert \varphi \right\rvert \le \omega. \end{align*}$$
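The lemma can be verified directly. What follows is a brief sketch of one standard argument, which is not necessarily the proof given in the cited reference; the symbols $I$ and $\psi$ are introduced only for this sketch. Write $I = \mathrm{e}^{-\mathrm{i}\theta_{0}}\int_{a}^{b}F(u)\mathrm{d} u$ and $\psi(u) = \arg\bigl(F(u)\mathrm{e}^{-\mathrm{i}\theta_{0}}\bigr)$, so that $\left\lvert \psi(u) \right\rvert \le \omega$ wherever $F(u) \neq 0$. Then

$$\begin{align*} \operatorname{Re} I &= \int_{a}^{b}\left\lvert F(u) \right\rvert \cos\psi(u)\,\mathrm{d} u \ge (\cos\omega)\int_{a}^{b}\left\lvert F(u) \right\rvert \mathrm{d} u, \\ \left\lvert \operatorname{Im} I \right\rvert &\le \int_{a}^{b}\left\lvert F(u) \right\rvert \left\lvert \sin\psi(u) \right\rvert \mathrm{d} u \le (\tan\omega)\int_{a}^{b}\left\lvert F(u) \right\rvert \cos\psi(u)\,\mathrm{d} u = (\tan\omega)\operatorname{Re} I. \end{align*}$$

Hence $I = \rho\mathrm{e}^{\mathrm{i}\varphi}$ with $\rho = \left\lvert I \right\rvert \ge \operatorname{Re} I$ and $\left\lvert \varphi \right\rvert \le \omega$ (if $I = 0$, one takes $\rho = 0$), which gives the two stated bounds.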

We will estimate $g$ with the following lemma.

Lemma 3.6. Let $\varepsilon>0$, and suppose that $s=\sigma +\mathrm {i} t$ satisfies

$$\begin{align*}\sigma \ge 1 - O\left(\frac{\log_{2}B_{K}}{\log B_{K}} \right), \quad t \gg \tau_{K}. \end{align*}$$

Then for $K (> K(\varepsilon ))$ sufficiently large,

$$\begin{align*}\left\lvert \sum_{k=0}^{K-1}\int_{s}^{s+1}\bigl(\eta_{k}(z) + \tilde{\eta}_{k}(z) + \xi_{k}(z)\bigr)\mathrm{d} z + \int_{s}^{s+1}\bigl(\tilde{\eta}_{K}(z)+\xi_{K}(z)\bigr)\mathrm{d} z - \int_{s+1}^{\infty}\eta_{K}(z)\mathrm{d} z \right\rvert < \varepsilon. \end{align*}$$

Proof. By the definition (3.1) of the functions $\eta _{k}$, $\tilde {\eta }_{k}$, and $\xi _{k}$, we have

$$\begin{align*}\sum_{k=0}^{K}\int_{s}^{s+1}\xi_{k}(z)\mathrm{d} z \ll \sum_{k=0}^{K}\frac{C_{k}^{1-\sigma}}{\left\lvert s \right\rvert \log C_{k}} \ll K\frac{(\log B_{K})^{O(1)}}{\tau_{K}}, \end{align*}$$

where in the last step we used that $C_{K} \asymp B_{K}$ by equation (2.3). This quantity is bounded by $\exp \bigl (\log K - c(\log B_{K})^{\alpha } + O(\log _{2}B_{K})\bigr )$, which can be made arbitrarily small by taking $K$ sufficiently large, due to the rapid growth of $(B_{k})_{k}$ (property (a)). The condition $t \gg \tau _{K}$ together with the rapid growth of $(\tau _{k})_{k}$ implies that $\left \lvert 1 \pm \mathrm {i}\tau _{k} - s \right \rvert \gg \tau _{K}$, for $0\le k \le K-1$ (at least when $K$ is sufficiently large). Hence,

$$\begin{align*}\sum_{k=0}^{K-1}\int_{s}^{s+1}(\eta_{k}(z)+\tilde{\eta}_{k}(z))\mathrm{d} z \ll \sum_{k=0}^{K-1}\frac{B_{k}^{1-\sigma}}{\tau_{K}\log B_{k}} \ll \exp\bigl(\log K - c(\log B_{K})^{\alpha} + O(\log_{2}B_{K})\bigr). \end{align*}$$

Finally, we have

$$ \begin{align*} \int_{s}^{s+1}\tilde{\eta}_{K}(z)\mathrm{d} z &\ll \frac{B_{K}^{1-\sigma}}{\tau_{K}\log B_{K}} = \exp\bigl(-c(\log B_{K})^{\alpha} + O(\log_{2}B_{K})\bigr), \\ \int_{s+1}^{\infty}\eta_{K}(z)\mathrm{d} z &\ll \frac{B_{K}^{-\sigma}}{\log B_{K}}. \end{align*} $$
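The exponents appearing in the proof come from two elementary steps, spelled out here as a sketch. It assumes that $\log_{2}$ denotes the iterated logarithm $\log\log$ and, as the displayed equalities suggest, that $\log\tau_{K} = c(\log B_{K})^{\alpha} + O(\log_{2}B_{K})$; neither is restated in this section. For $\sigma \ge 1 - O(\log_{2}B_{K}/\log B_{K})$,

$$\begin{align*} B_{K}^{1-\sigma} = \exp\bigl((1-\sigma)\log B_{K}\bigr) \le \exp\bigl(O(\log_{2}B_{K})\bigr) = (\log B_{K})^{O(1)}, \qquad \frac{(\log B_{K})^{O(1)}}{\tau_{K}} = \exp\bigl(-c(\log B_{K})^{\alpha} + O(\log_{2}B_{K})\bigr). \end{align*}$$

A sum of $K$ such terms contributes the additional $\log K$ in the exponent.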

In particular, we may assume that on the contour $\Gamma _{0}$, these terms are in absolute value smaller than $\pi /40$, say. Also, $1/\left \lvert s-1 \right \rvert \sim 1/\tau _{K}$ and $\left \lvert \arg \bigl (\mathrm {e}^{\mathrm {i}\pi /2}/(s-1)\bigr ) \right \rvert < \pi /40$ on $\Gamma _{0}$. We have

$$\begin{align*}\int_{\Gamma_{0}}\mathrm{e}^{f(s)}g(s)\mathrm{d} s = \mathrm{e}^{f(s_{0})}\int_{\Gamma_{0}}\mathrm{e}^{f(s)-f(s_{0})}g(s)\mathrm{d} s. \end{align*}$$

We now apply Lemma 3.5 to estimate the size and argument of this integral. By property (c) and Lemma 3.4, we get that

$$ \begin{gather*} \int_{\Gamma_{0}}\mathrm{e}^{f(s)}g(s)\mathrm{d} s = (-1)^{K}R\mathrm{e}^{\mathrm{i}(\pi/2 + \varphi)}, \\ R \gg \frac{\mathrm{e}^{\operatorname{Re} f(s_{0})}}{\tau_{K}}\int_{y^{-}}^{y^{+}}\exp\bigl( f(\gamma(y))-f(s_{0})\bigr)\mathrm{d} y, \quad \left\lvert \varphi \right\rvert < \frac{\pi}{5} + \frac{\pi}{40} + \frac{\pi}{40} = \frac{\pi}{4}. \end{gather*} $$
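To make the role of the bound $\left\lvert \varphi \right\rvert < \pi/4$ explicit, here is a short worked step; it is a sketch of how such a bound is typically exploited and uses only the display above. Since $R \ge 0$ and $\sin(\pi/2+\varphi) = \cos\varphi$,

$$\begin{align*} (-1)^{K}\operatorname{Im}\bigl((-1)^{K}R\mathrm{e}^{\mathrm{i}(\pi/2+\varphi)}\bigr) = R\cos\varphi \ge R\cos\frac{\pi}{4} = \frac{R}{\sqrt{2}}, \end{align*}$$

so the imaginary part of the contribution has sign $(-1)^{K}$ and is comparable in size to $R$.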

Note that $f(\gamma (y))-f(s_{0})$ is real. In order to bound the remaining integral from below, we restrict the range of integration to the points $s=\gamma (y)$ in the disk

![]() $B(s_{0}, \delta /\log B)$