1. Introduction

In this paper we are concerned with the normalized binary contact path process (NBCPP). For later use, we first introduce some notation. For $d\geq 1$, the d-dimensional lattice is denoted by $\mathbb{Z}^d$. For $x,y\in \mathbb{Z}^d$, we write $x\sim y$ when they are neighbors. The origin of $\mathbb{Z}^d$ is denoted by O. Now we recall the definition of the binary contact path process (BCPP) introduced in [6]. The binary contact path process $\{\phi_t\}_{t\geq 0}$ on $\mathbb{Z}^d$ is a continuous-time Markov process with state space $\mathbb{X}=\{0,1,2,\ldots\}^{\mathbb{Z}^d}$ and evolves as follows. For any $x\in \mathbb{Z}^d$, $x\sim y$, and $t\geq 0$,

\begin{align*} \phi_t(x)\rightarrow \begin{cases} 0 & \text{at rate}\,\dfrac{1}{1+2\lambda d}, \\[7pt] \phi_t(x)+\phi_t(y) & \text{at rate}\,\dfrac{\lambda}{1+2\lambda d}, \end{cases}\end{align*}

where $\lambda$ is a positive constant. As a result, the generator $\Omega$ of $\{\phi_t\}_{t\geq 0}$ is given by

\begin{align*} \Omega f(\phi) = \frac{1}{1+2\lambda d}\sum_{x\in\mathbb{Z}^d}\big[f(\phi^{x,-})-f(\phi)\big] + \frac{\lambda}{1+2\lambda d}\sum_{x\in\mathbb{Z}^d}\sum_{y\sim x}\big[f(\phi^{x,y})-f(\phi)\big]\end{align*}

for any f from $\mathbb{X}$ to $\mathbb{R}$ depending on finitely many coordinates and $\phi\in \mathbb{X}$, where, for all $x,y,z\in \mathbb{Z}^d$,

\begin{equation*} \phi^{x,-}(z) = \begin{cases} \phi(z) & \text{if}\ z\neq x, \\ 0 & \text{if}\ z=x; \end{cases} \qquad \phi^{x,y}(z) = \begin{cases} \phi(z) & \text{if}\ z\neq x, \\ \phi(x)+\phi(y) & \text{if}\ z=x. \end{cases}\end{equation*}

Intuitively, $\{\phi_t\}_{t\geq 0}$ describes the spread of an epidemic on $\mathbb{Z}^d$. The integer value a vertex takes is the seriousness of the illness on this vertex. A vertex taking value 0 is healthy and one taking a positive value is infected. An infected vertex becomes healthy at rate ${1}/({1+2\lambda d})$. A vertex x is infected by a given neighbor y at rate ${\lambda}/({1+2\lambda d})$. When the infection occurs, the seriousness of the illness on y is added to that on x.
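
The dynamics described above can be illustrated by a short simulation. The following is only a sketch under stated assumptions and is not taken from [6]: the lattice is replaced by a finite cycle of `n_sites` vertices (so $d=1$ with periodic boundary), chosen so that the total jump rate is finite, every vertex starts at value 1, and the function name `simulate_bcpp` is hypothetical.

```python
import random


def simulate_bcpp(n_sites, lam, t_max, seed=0):
    """Simulate the BCPP on a cycle of n_sites >= 3 vertices (d = 1) up to time t_max.

    Assumption: the infinite lattice is truncated to a cycle and the initial
    configuration is identically 1.
    """
    rng = random.Random(seed)
    d = 1
    recover_rate = 1.0 / (1.0 + 2.0 * lam * d)   # phi(x) -> 0
    infect_rate = lam / (1.0 + 2.0 * lam * d)    # phi(x) -> phi(x) + phi(y), per neighbor y
    site_rate = recover_rate + 2 * d * infect_rate  # equals 1 for every vertex
    phi = [1] * n_sites
    t = 0.0
    while True:
        # Waiting time until the next event anywhere on the cycle.
        t += rng.expovariate(n_sites * site_rate)
        if t > t_max:
            break
        x = rng.randrange(n_sites)
        if rng.random() < recover_rate / site_rate:
            phi[x] = 0
        else:
            y = (x + rng.choice((-1, 1))) % n_sites
            phi[x] += phi[y]
    return phi


phi = simulate_bcpp(n_sites=20, lam=1.0, t_max=3.0, seed=42)
# A vertex is infected exactly when its value is positive.
infected = [1 if value > 0 else 0 for value in phi]
print(phi)
print(infected)
```

With these rates each vertex has total jump rate 1, which is the effect of the factor $1/(1+2\lambda d)$ in the definition.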

The BCPP

![]() $\{\phi_t\}_{t\geq 0}$

was introduced in [Reference Griffeath6] to improve upper bounds of critical values of contact processes (CPs) according to the fact that the CP

$\{\phi_t\}_{t\geq 0}$

was introduced in [Reference Griffeath6] to improve upper bounds of critical values of contact processes (CPs) according to the fact that the CP

![]() $\{\xi_t\}_{t\geq 0}$

on

$\{\xi_t\}_{t\geq 0}$

on

![]() $\mathbb{Z}^d$

can be equivalently defined as

$\mathbb{Z}^d$

can be equivalently defined as

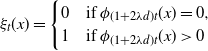

\begin{align*} \xi_t(x)= \begin{cases} 0 & \text{if}\ \phi_{(1+2\lambda d)t}(x)=0, \\ 1 & \text{if}\ \phi_{(1+2\lambda d)t}(x)>0 \end{cases}\end{align*}

\begin{align*} \xi_t(x)= \begin{cases} 0 & \text{if}\ \phi_{(1+2\lambda d)t}(x)=0, \\ 1 & \text{if}\ \phi_{(1+2\lambda d)t}(x)>0 \end{cases}\end{align*}

for any $x\in \mathbb{Z}^d$. For a detailed survey of CPs, see [11, Chapter 6] and [12, Part II]. To emphasize the dependence on $\lambda$, we also write $\xi_t$ as $\xi_t^\lambda$. The critical value $\lambda_\mathrm{c}$ of the CP is defined as

\begin{align*} \lambda_\mathrm{c} = \sup\Bigg\{\lambda>0\colon \mathbb{P}\Bigg(\sum_{x\in\mathbb{Z}^d}\xi_t^{\lambda}(x)=0\,\text{for some}\,t>0 \mid \sum_{x}\xi^\lambda_0(x)=1\Bigg)=1\Bigg\}.\end{align*}

Applying the above coupling relationship between BCPP and CP, it is shown in [6] that $\lambda_\mathrm{c}(d)\leq {1}/({2d(2\gamma_d-1)})$ for $d\geq 3$, where $\gamma_d$ is the probability that the simple random walk on $\mathbb{Z}^d$ starting at O never returns to O again. In particular, $\lambda_\mathrm{c}(3)\leq 0.523$ as a corollary of the above upper bound. In [17], a modified version of BCPP is introduced and then a further improved upper bound of $\lambda_\mathrm{c}(d)$ is given for $d\geq 3$. It is shown in [17] that $\lambda_\mathrm{c}(d)\leq ({2-\gamma_d})/({2d\gamma_d})$ and consequently $\lambda_\mathrm{c}(3)\leq 0.340$.
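
The two numerical bounds for $d=3$ can be checked by evaluating the formulas above. The sketch below assumes the classical value of Pólya's return probability for the simple random walk on $\mathbb{Z}^3$, approximately 0.3405373, which is not stated in the text; the function names are hypothetical labels for the two formulas.

```python
# Assumption: Polya's return probability on Z^3 (classical constant, approx.).
RETURN_PROBABILITY_Z3 = 0.3405373


def bound_from_ref_6(d, gamma_d):
    """Upper bound 1 / (2d(2*gamma_d - 1)); needs gamma_d > 1/2."""
    return 1.0 / (2 * d * (2 * gamma_d - 1))


def bound_from_ref_17(d, gamma_d):
    """Upper bound (2 - gamma_d) / (2d*gamma_d)."""
    return (2 - gamma_d) / (2 * d * gamma_d)


gamma_3 = 1 - RETURN_PROBABILITY_Z3  # probability of never returning to O
print(bound_from_ref_6(3, gamma_3))   # should not exceed 0.523
print(bound_from_ref_17(3, gamma_3))  # should not exceed 0.340
```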

The BCPP belongs to a family of continuous-time Markov processes called linear systems defined in [Reference Liggett11, Chapter 9], since there are a series of linear transformations

![]() $\{\mathcal{A}_k\colon k\geq 1\}$

on

$\{\mathcal{A}_k\colon k\geq 1\}$

on

![]() $\mathbb{X}$

such that

$\mathbb{X}$

such that

![]() $\phi_t=\mathcal{A}_k\phi_{t-}$

for some

$\phi_t=\mathcal{A}_k\phi_{t-}$

for some

![]() $k\geq 1$

at each jump moment t. As a result, for each

$k\geq 1$

at each jump moment t. As a result, for each

![]() $m\geq 1$

, Kolmogorov–Chapman equations for

$m\geq 1$

, Kolmogorov–Chapman equations for

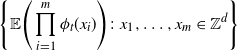

\begin{align*} \Bigg\{\mathbb{E}\Bigg(\prod_{i=1}^m\phi_t(x_i)\Bigg)\colon x_1,\ldots,x_m\in \mathbb{Z}^d\Bigg\}\end{align*}

\begin{align*} \Bigg\{\mathbb{E}\Bigg(\prod_{i=1}^m\phi_t(x_i)\Bigg)\colon x_1,\ldots,x_m\in \mathbb{Z}^d\Bigg\}\end{align*}

are given by a series of linear ordinary differential equations, where

![]() $\mathbb{E}$

is the expectation operator. For the mathematical details, see [Reference Liggett11, Theorems 9.1.27 and 9.3.1].

$\mathbb{E}$

is the expectation operator. For the mathematical details, see [Reference Liggett11, Theorems 9.1.27 and 9.3.1].
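
For $m=1$ the linear structure can be made concrete. Applying the transition rates of the BCPP to the coordinate function gives $\frac{\mathrm{d}}{\mathrm{d}t}\mathbb{E}\phi_t(x)=\big({-}\mathbb{E}\phi_t(x)+\lambda\sum_{y\sim x}\mathbb{E}\phi_t(y)\big)/(1+2\lambda d)$. The sketch below integrates this system with a plain Euler scheme; the restriction to a finite cycle ($d=1$), the step count, and the name `mean_bcpp_cycle` are assumptions made for illustration.

```python
import math


def mean_bcpp_cycle(initial, lam, t_max, steps=20000):
    """Euler integration of the first-moment equations of the BCPP on a cycle (d = 1)."""
    d = 1
    norm = 1.0 + 2.0 * lam * d
    n_sites = len(initial)
    mean = list(initial)
    dt = t_max / steps
    for _ in range(steps):
        # mean[x - 1] wraps around to the last vertex when x = 0.
        mean = [
            mean[x] + dt * (-mean[x] + lam * (mean[x - 1] + mean[(x + 1) % n_sites])) / norm
            for x in range(n_sites)
        ]
    return mean


# Starting from the all-ones configuration the mean stays constant in space and
# grows like exp((2*lam*d - 1) * t / (1 + 2*lam*d)).
lam, t_max = 1.0, 1.0
numerical = mean_bcpp_cycle([1.0] * 8, lam, t_max)[0]
closed_form = math.exp((2 * lam - 1) * t_max / (1 + 2 * lam))
print(numerical, closed_form)
```

The exponential growth of the mean is what a deterministic exponential normalization is meant to cancel.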

For technical reasons, it is convenient to investigate a rescaled time change $\{\eta_t\}_{t\geq 0}$ of the BCPP defined by

\begin{align*} \eta_t = \exp\bigg\{\frac{1-2\lambda d}{2\lambda d}\,t\bigg\}\,\phi_{(1+2\lambda d)t/(2\lambda d)}.\end{align*}

The process $\{\eta_t\}_{t\geq 0}$ is introduced in [11, Chapter 9] and is called the normalized binary contact path process (NBCPP) since $\mathbb{E}\eta_t(x)=1$ for all $x\in \mathbb{Z}^d$ and $t>0$ conditioned on $\mathbb{E}\eta_0(x)=1$ for all $x\in \mathbb{Z}^d$ (see Proposition 2.1).

Here we recall some basic properties of the NBCPP given in [11, Section 9.6]. The state space of the NBCPP $\{\eta_t\}_{t\geq 0}$ is $\mathbb{Y}=[0, +\infty)^{\mathbb{Z}^d}$ and the generator $\mathcal{L}$ of $\{\eta_t\}_{t\geq 0}$ is given by

\begin{align*} \mathcal{L}f(\eta) = \frac{1}{2\lambda d}\sum_{x\in\mathbb{Z}^d}\big[f(\eta^{x,-})-f(\eta)\big] + \frac{1}{2d}\sum_{x\in\mathbb{Z}^d}\sum_{y\sim x}\big[f(\eta^{x,y})-f(\eta)\big] + \bigg(\frac{1}{2\lambda d}-1\bigg)\sum_{x\in\mathbb{Z}^d}f_x^\prime(\eta)\eta(x)\end{align*}

for any $\eta\in\mathbb{Y}$, where $f_x^\prime$ is the partial derivative of f with respect to the coordinate $\eta(x)$ and, for all $x,y,z\in \mathbb{Z}^d$,

\begin{align*} \eta^{x,-}(z)= \begin{cases} \eta(z) & \text{if}\ z\neq x, \\ 0 & \text{if}\ z=x; \end{cases} \qquad \eta^{x,y}(z)= \begin{cases} \eta(z) & \text{if}\ z\neq x, \\ \eta(x)+\eta(y) & \text{if}\ z=x. \end{cases}\end{align*}

Applying [11, Theorem 9.1.27], for any $x\in \mathbb{Z}^d$, $t\geq0$, and $\eta\in \mathbb{Y}$,

\begin{equation} \frac{{\mathrm{d}}}{{\mathrm{d}} t}\mathbb{E}_\eta\eta_t(x) = \frac{1}{2d}\sum_{y\sim x}\big(\mathbb{E}_\eta\eta_t(y)-\mathbb{E}_\eta\eta_t(x)\big), \tag{1.1}\end{equation}

where $\mathbb{E}_\eta$ is the expectation operator with respect to the NBCPP with initial state $\eta_0=\eta$. For any $x,y\in \mathbb{Z}^d$, $t\geq 0$, and $\eta\in \mathbb{Y}$, let $F_{\eta,t}(x,y)=\mathbb{E}_\eta(\eta_t(x)\eta_t(y))$. Consider $F_{\eta,t}$ as a column vector from $\mathbb{Z}^d\times \mathbb{Z}^d$ to $[0, +\infty)$; applying [11, Theorem 9.3.1], there exists a $(\mathbb{Z}^d)^2\times (\mathbb{Z}^d)^2$ matrix $H=\{H((x,y), (v,w))\}_{x,y,v,w\in \mathbb{Z}^d}$, independent of $\eta, t$, such that

\begin{equation} \frac{{\mathrm{d}}}{{\mathrm{d}} t}F_{\eta,t} = HF_{\eta,t} \tag{1.2}\end{equation}

for any $\eta\in \mathbb{Y}$ and $t\geq 0$. For a precise expression for H, see Section 3, where proofs of main results of this paper are given. Roughly speaking, both $\mathbb{E}_\eta\eta_t(x)$ and $\mathbb{E}_\eta(\eta_t(x)\eta_t(y))$ are driven by linear ordinary differential equations, which is a basic property of general linear systems as we recalled above. For more properties of the NBCPP as consequences of (1.1) and (1.2), see Section 2.

In this paper we study the law of large numbers and the central limit theorem (CLT) of the occupation time process $\big\{\int_0^t\eta_u(O)\,{\mathrm{d}} u\big\}_{t\geq 0}$ of an NBCPP as $t\rightarrow+\infty$. The occupation time is the simplest example of the so-called additive functions of interacting particle systems, whose limit theorems have been popular research topics since the 1970s (see the references cited in [7]). In detail, let $\{X_t\}_{t\geq 0}$ be an interacting particle system with state space $S^{\mathbb{Z}^d}$, where $S\subseteq \mathbb{R}$, then $\int_0^tf(X_s)\,{\mathrm{d}} s$ is called an additive function for any $f\colon S^{\mathbb{Z}^d}\rightarrow \mathbb{R}$. If $\{X_t\}_{t\geq 0}$ starts from a stationary probability measure $\pi$ and $f\in L^1(\pi)$, then the law of large numbers of $\int_0^tf(X_s)\,{\mathrm{d}} s$ is a direct application of the Birkhoff ergodic theorem such that

\begin{align*} \lim_{t\rightarrow+\infty}\frac{1}{t}\int_0^tf(X_s)\,{\mathrm{d}} s = \mathbb{E}_\pi(f\mid\mathcal{I}) \quad \text{almost surely},\end{align*}

where $\mathcal{I}$ is the set of all invariant events. Furthermore, if the stationary process $\{X_t\}_{t\geq 0}$ starting from $\pi$ is ergodic, i.e. $\mathcal{I}$ is trivial, then $\mathbb{E}_\pi(f\mid\mathcal{I})=\mathbb{E}_\pi f=\int_{S^{\mathbb{Z}^d}}f(\eta)\,\pi({\mathrm{d}}\eta)$ almost surely. In the ergodic case, it is natural to further investigate whether a CLT corresponding to the above Birkhoff ergodic theorem holds, i.e. whether

\begin{align*} \frac{1}{\sqrt{t}}\int_0^t\big(f(X_s)-\mathbb{E}_\pi f\big)\,{\mathrm{d}} s \rightarrow \mathcal{N}(0,\sigma^2)\end{align*}

in distribution for some $\sigma^2=\sigma^2(f)>0$ as $t\rightarrow+\infty$. It is shown in [7] that if the generator of the ergodic process $\{X_t\}_{t\geq 0}$ satisfies a so-called sector condition, then the CLT above holds. As an application of this result, [7, Part 2] shows that mean-zero asymmetric exclusion processes starting from Bernoulli product measures are ergodic and satisfy the sector condition. Consequently, corresponding CLTs of additive functions are given. For exclusion processes, particles perform random walks on $\mathbb{Z}^d$ but jumps to occupied sites are suppressed. We refer the reader to [11, Chapter 8] for the basic properties of exclusion processes.
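
For a pure jump process, the observable $s\mapsto f(X_s)$ is piecewise constant, so the additive function $\int_0^tf(X_s)\,{\mathrm{d}} s$ is a finite sum over the holding intervals. The sketch below computes it from a recorded path; the input format (a list of jump times starting at 0 and the value held from each jump time onward) and the name `occupation_time` are assumptions made for illustration.

```python
def occupation_time(jump_times, values, t):
    """Integral over [0, t] of a path equal to values[i] on [jump_times[i], jump_times[i + 1]).

    Assumes jump_times is increasing with jump_times[0] == 0 and that the last
    value is held forever.
    """
    total = 0.0
    for i, start in enumerate(jump_times):
        if start >= t:
            break
        end = jump_times[i + 1] if i + 1 < len(jump_times) else t
        total += values[i] * (min(end, t) - start)
    return total


# Path: value 2 on [0, 1), value 0 on [1, 3), value 5 from time 3 onward.
times, held = [0.0, 1.0, 3.0], [2.0, 0.0, 5.0]
horizon = 4.0
integral = occupation_time(times, held, horizon)
print(integral, integral / horizon)  # occupation time and its time average
```

Dividing by `horizon` gives the time average whose limit is the subject of the law of large numbers.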

CLTs of additive functions are also discussed for the supercritical contact process $\xi_t^{\lambda, d}$, where $\lambda>\lambda_\mathrm{c}$. It is shown in [11, Chapter 6] that the supercritical contact process has a nontrivial ergodic stationary distribution $\overline{\nu}$, which is called the upper invariant distribution. CLTs of additive functions of supercritical contact processes starting from $\overline{\nu}$ are given in [16]. Since marginal distributions of $\overline{\nu}$ do not have clear expressions as product measures, the proof of the main theorem in [16] follows a different strategy than that given in [7]. It is shown in [16] that additive functions of the supercritical contact process satisfy a so-called FKG condition, and then corresponding CLTs follow from [15, Theorem 3].

For a general interacting particle system starting from a stationary distribution $\pi$ which is not ergodic (or for which we do not know whether $\pi$ is ergodic), we should first study which type of f makes $\mathbb{E}_\pi(f\mid\mathcal{I})=\mathbb{E}_\pi f$ almost surely; only then is the investigation of a corresponding CLT meaningful. It is natural to first consider f with the simplest form $f(\eta)=\eta(x)$ for some $x\in \mathbb{Z}^d$, in which case the corresponding additive function reduces to the occupation time. CLTs of occupation times of interacting particle systems are discussed for critical branching random walks and voter models on $\mathbb{Z}^d$ in [1, 3] respectively. For voter models, each $x\in \mathbb{Z}^d$ has an opinion in $\{0, 1\}$ and x adopts each neighbor’s opinion at rate 1. For critical branching random walks, there are several particles on each $x\in \mathbb{Z}^d$, the number of which is considered as the state of x. All particles perform independent random walks on $\mathbb{Z}^d$ and, at rate 1, each particle dies with probability $\frac12$ or splits into two new particles with probability $\frac12$. As shown in [1, 3], CLTs of occupation times of both models exhibit dimension-dependent phase transition phenomena. For critical branching random walks, the CLTs above have three different forms in the respective cases where $d=3$, $d=4$, and $d\geq 5$. For voter models, the CLTs above have five different forms in the respective cases where $d=1$, $d=2$, $d=3$, $d=4$, and $d\geq 5$.

The investigation of the CLT of the occupation time of an NBCPP in this paper is greatly inspired by [1, 3]. Several basic properties of NBCPPs are similar to those of the voter model and the critical branching random walk. For example, in all three models, the expectations of states of sites on $\mathbb{Z}^d$ are driven by linear ordinary differential equations. Furthermore, when $d\geq 3$ (and $\lambda$ is sufficiently large for an NBCPP), each model has a class of nontrivial extreme stationary distributions distinguished by a parameter representing the mean particle number on each site, the probability of a site taking opinion 1, and the mean seriousness of the illness on each site in critical branching random walks, voter models, and NBCPPs respectively. In addition, the voter model and NBCPP are both linear systems. Given all these similarities, it is natural to ask whether CLTs similar to those of voter models and critical branching random walks hold for the NBCPP.

On the other hand, the evolution of NBCPPs has different mechanisms than those of voter models and critical branching random walks. For the voter model, the state of each site is bounded by 1. For the critical branching random walk, although the number of particles on a site is not bounded from above, each jump, birth, and death of a particle can only change one or two sites’ states by one. The above properties make it easy to bound high-order moments of states of sites for voter models and critical branching random walks. For NBCPPs, the seriousness of the illness on a site is unbounded and the increment of this seriousness when being further infected is also unbounded, which makes the estimation of $\mathbb{E}((\eta_t(x))^m)$ difficult for large m. Hence, it is also natural to discuss the influence of the above differences between NBCPPs and the other two models on the techniques utilized in the proof of the CLT of NBCPPs.

According to a calculation of the variance (see Remark 2.3), it is natural to guess that the CLT of the occupation time of an NBCPP should take three different forms in the respective cases where $d\geq 5$, $d=4$, and $d=3$, as in [3, Theorem 1] and [1, Theorem 1.1]. However, in this paper we only give a rigorous result for part of the first case, i.e. the CLT of the occupation time for sufficiently large d, since we cannot bound the fourth moment of $\eta_t(O)$ uniformly for $t\geq 0$ when the dimension d is low. For our main result and the mathematical details of the proof, see Sections 2 and 3, respectively.

Since the 1980s, the large deviation principle (LDP) of the occupation time has also been a popular research topic for models such as exclusion processes, voter models, critical branching random walks, and critical branching $\alpha$-stable processes [2, 4, 8–10, 13]. It is natural to ask whether an LDP holds for the occupation time of an NBCPP. We think the core difficulty in the study of this problem is the estimation of $\mathbb{E}\exp\big\{\alpha\int_0^t\eta_s(O)\,{\mathrm{d}} s\big\}$ for $\alpha\neq 0$. We will work on this problem as a further investigation.

We are also inspired by investigations of CLTs of empirical density fields of NBCPPs, which are also called fluctuations. For sufficiently large d and $\lambda$, [18] gives the fluctuation of the NBCPP $\{\eta_t^{\lambda,d}\}_{t\geq 0}$ starting from the configuration where all sites are in state 1; [14] gives another type of fluctuation of a class of linear systems starting from a configuration with finite total mass, including NBCPP as a special case. The boundedness of $\sup_{t\geq 0}\mathbb{E}\big(\big(\eta_t^{\lambda, d}(O)\big)^4\big)$ given in [18] for sufficiently large d and $\lambda$ is a crucial property for the proofs of the main results in this paper. For the mathematical details, see Section 2.

2. Main results

In this section we give our main result. For later use, we first introduce some notation and definitions. We denote by $\{S_n\}_{n\geq 0}$ the discrete-time simple random walk on $\mathbb{Z}^d$, i.e. $\mathbb{P}(S_{n+1}=y\mid S_n=x)={1}/{2d}$ for any $n\geq 0$, $x\in \mathbb{Z}^d$, and $y\sim x$. We denote by $\{Y_t\}_{t\geq 0}$ the continuous-time simple random walk on $\mathbb{Z}^d$ with generator L given by $Lh(x)=({1}/{2d})\sum_{y\sim x}(h(y)-h(x))$ for any bounded h from $\mathbb{Z}^d$ to $\mathbb{R}$ and $x\in \mathbb{Z}^d$. As defined in Section 1, we use $\gamma_d$ to denote the probability that $\{S_n\}_{n\geq 1}$ never returns to O again conditioned on $S_0=O$, i.e.

\begin{align*} \gamma_d = \mathbb{P}(S_n\neq O\ \text{for all}\ n\geq 1 \mid S_0=O) = \mathbb{P}(S_n\neq O\ \text{for all}\ n\geq 0 \mid S_0=x)\end{align*}

for any $x\sim O$. For any $t\geq 0$, we use $p_t(\,\cdot\,, \cdot\,)$ to denote the transition probabilities of $Y_t$, i.e. $p_t(x,y)=\mathbb{P}(Y_t=y\mid Y_0=x)$ for any $x,y\in \mathbb{Z}^d$. For any $x\in \mathbb{Z}^d$, we define

We use $\vec{1}$ to denote the configuration in $\mathbb{Y}$ where all vertices take the value 1. For any $\eta\in \mathbb{Y}$, as defined in Section 1, we denote by $\mathbb{E}_\eta$ the expectation operator of $\{\eta_t\}_{t\geq 0}$ conditioned on $\eta_0=\eta$. Furthermore, for any probability measure $\mu$ on $\mathbb{Y}$, we use $\mathbb{E}_\mu$ to denote the expectation operator of $\{\eta_t\}_{t\geq 0}$ conditioned on $\eta_0$ being distributed according to $\mu$.

Now we recall more properties of the NBCPP $\{\eta_t\}_{t\geq 0}$ proved in [Reference Liggett11, Chapter 9] and [Reference Xue and Zhao18, Section 2].

Proposition 2.1. (Liggett, [Reference Liggett11].) For any $\eta\in \mathbb{Y}$, $t\geq 0$, and $x\in \mathbb{Z}^d$,

Furthermore, $\mathbb{E}_{\vec{1}}\,\eta_t(x)=1$ for any $x\in \mathbb{Z}^d$ and $t\geq 0$.

Proposition 2.1 follows from (1.1) directly. In detail, since the generator L of the simple random walk on $\mathbb{Z}^d$ satisfies

\begin{align*} L(x,y)= \begin{cases} -1 & \text{if}\ x=y, \\ \dfrac{1}{2d} & \text{if}\ x\sim y, \\ 0 & \,\text{otherwise}, \end{cases}\end{align*}

we have $\mathbb{E}_\eta\eta_t(x)=\sum_{y\in \mathbb{Z}^d}{\mathrm{e}}^{tL}(x,y)\eta(y)$ by (1.1), where ${\mathrm{e}}^{tL}=\sum_{n=0}^{+\infty}{t^nL^n}/{n!}=p_t$.
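As a numerical sanity check of the identity ${\mathrm{e}}^{tL}=p_t$ and of $\mathbb{E}_{\vec{1}}\,\eta_t(x)=1$, the following minimal sketch builds the generator on a finite torus $(\mathbb{Z}/n\mathbb{Z})^d$ rather than on $\mathbb{Z}^d$ (an assumption made only so that L becomes a finite matrix) and exponentiates it. The names `torus_generator` and `transition_matrix` are illustrative and do not come from the paper.

```python
import numpy as np


def torus_generator(n: int, d: int) -> np.ndarray:
    """Generator of the rate-1 simple random walk on the torus (Z/nZ)^d.

    Entries follow L(x, x) = -1 and L(x, y) = 1/(2d) for neighbors x ~ y.
    The torus is an assumption used only to obtain a finite matrix.
    """
    shape = (n,) * d
    size = n ** d
    L = -np.eye(size)
    for idx in range(size):
        coords = np.unravel_index(idx, shape)
        for axis in range(d):
            for step in (-1, 1):
                nb = list(coords)
                nb[axis] = (nb[axis] + step) % n
                L[idx, np.ravel_multi_index(tuple(nb), shape)] += 1.0 / (2 * d)
    return L


def transition_matrix(L: np.ndarray, t: float) -> np.ndarray:
    """Return e^{tL}; L is symmetric, so an eigendecomposition suffices."""
    eigvals, eigvecs = np.linalg.eigh(L)
    return (eigvecs * np.exp(t * eigvals)) @ eigvecs.T


L = torus_generator(n=5, d=2)
p_t = transition_matrix(L, t=1.7)
# Mean of eta_t started from the all-ones configuration: p_t applied to 1.
mean_from_ones = p_t @ np.ones(L.shape[0])
```

Because every row of L sums to zero, every row of `p_t` sums to one, so `mean_from_ones` is the all-ones vector up to rounding, which mirrors the last claim of Proposition 2.1.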

Proposition 2.2. (Liggett, [Reference Liggett11].) There exist a family of functions $\{q_t\}_{t\geq 0}$ from $(\mathbb{Z}^d)^2$ to $\mathbb{R}$ and a family of functions $\{\hat{q}_t\}_{t\geq 0}$ from $(\mathbb{Z}^d)^4$ to $\mathbb{R}$ such that

for any $t\geq 0$, $x,y\in \mathbb{Z}^d$, and $\eta\in \mathbb{Y}$. Furthermore,

for any $x,w\in \mathbb{Z}^d$.

Proposition 2.2 follows from (1.2). In detail, (2.3) holds by taking $\hat{q}_t={\mathrm{e}}^{tH}$. When $\eta_0=\vec{1}$, applying the spatial homogeneity of $\eta_t$, we have

Denoting by $A_t(x)$ the expression $F_{\vec{1}, t}(O,x)$ and applying (1.2) and (2.5), we have

for any $t\geq 0$ and a $\mathbb{Z}^d\times \mathbb{Z}^d$ matrix $Q=\{q(x,y)\}_{x,y\in \mathbb{Z}^d}$ independent of t. Consequently, (2.2) holds by taking $q_t={\mathrm{e}}^{tQ}$. Since

\begin{align*} \frac{{\mathrm{d}}}{{\mathrm{d}} t}A_t(x) & = \frac{{\mathrm{d}}}{{\mathrm{d}} t}F_{\vec{1}, t}(z+x,z) \\ & = \sum_u\sum_vH((z+x,z), (u,v))F_{\vec{1}, t}(u,v) = \sum_{y\in \mathbb{Z}^d}\sum_{(u,v):u-v=y}H((z+x,z), (u,v))A_t(y),\end{align*}

the $\mathbb{Z}^d\times \mathbb{Z}^d$ matrix Q can be chosen such that $q(x,y)=\sum_{(u, v): u-v=y}H((z+x, z), (u, v))$ for any $x,y,z\in \mathbb{Z}^d$. Applying this equation repeatedly, we have

for all $n\geq 1$ by induction, and then

As a result, (2.4) holds.

Proposition 2.3. (Liggett, [Reference Liggett11].) When $d\geq 3$ and $\lambda>{1}/({2d(2\gamma_d-1)})$, we have the following properties:

- (i) For any $x,y,z,w\in \mathbb{Z}^d$, $\lim_{t\rightarrow+\infty}q_t^{\lambda, d}(x,y) = \lim_{t\rightarrow+\infty}\hat{q}_t^{\lambda, d}(x,y,z,w) = 0$.

- (ii) Conditioned on $\eta_0=\vec{1}$, $\eta_t$ converges weakly as $t\rightarrow+\infty$ to a probability measure $\nu_{\lambda, d}$ on $\mathbb{Y}$.

- (iii) For any $x,y\in \mathbb{Z}^d$,

\begin{align*} \lim_{t\rightarrow+\infty}\mathbb{E}_{\vec{1}}\,((\eta_t(x))^2) & = \sup_{t\geq 0}\mathbb{E}_{\vec{1}}\,((\eta_t(x))^2) = \mathbb{E}_{\nu_{\lambda, d}}((\eta_0(O))^2) = 1 + \frac{1}{h_{\lambda, d}}, \\ \lim_{t\rightarrow+\infty}{\rm Cov}_{\vec{1}}\,(\eta_t(x), \eta_t(y)) & = {\rm Cov}_{\nu_{\lambda, d}}(\eta_0(x), \eta_0(y)) = \frac{\Phi(x-y)}{h_{\lambda,d}}, \end{align*}

where

$$h_{\lambda, d}=\frac{2\lambda d(2\gamma_d-1)-1}{1+2d\lambda}$$

and $\Phi$ is defined as in (2.1).
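To make the threshold and the constant $h_{\lambda,d}$ in Proposition 2.3 concrete, here is a small sketch that evaluates them for $d=3$. It assumes the numerical value of $\gamma_3$ obtained from Pólya's return probability for $\mathbb{Z}^3$ (about 0.340537), which is an outside input and is not stated in the text; the function names are illustrative.

```python
# Escape probability of the simple random walk on Z^3: one minus Polya's
# return probability (about 0.340537). This numerical value is an outside
# input, not taken from the text.
GAMMA_3 = 1.0 - 0.340537


def critical_lambda(d: int, gamma_d: float) -> float:
    """Threshold 1 / (2d(2*gamma_d - 1)) appearing in Proposition 2.3."""
    if 2.0 * gamma_d - 1.0 <= 0.0:
        raise ValueError("the threshold requires gamma_d > 1/2")
    return 1.0 / (2.0 * d * (2.0 * gamma_d - 1.0))


def h(lam: float, d: int, gamma_d: float) -> float:
    """h_{lambda,d} = (2*lambda*d*(2*gamma_d - 1) - 1) / (1 + 2*d*lambda)."""
    return (2.0 * lam * d * (2.0 * gamma_d - 1.0) - 1.0) / (1.0 + 2.0 * d * lam)


def limiting_second_moment(lam: float, d: int, gamma_d: float) -> float:
    """Limit 1 + 1/h_{lambda,d} of the second moment, above the threshold."""
    value = h(lam, d, gamma_d)
    if value <= 0.0:
        raise ValueError("lambda must exceed critical_lambda(d, gamma_d)")
    return 1.0 + 1.0 / value


lam_c = critical_lambda(3, GAMMA_3)
second_moment = limiting_second_moment(2.0 * lam_c, 3, GAMMA_3)
```

By construction $h_{\lambda,d}$ vanishes at the threshold and is positive above it, so the limiting second moment $1+1/h_{\lambda,d}$ blows up as $\lambda$ decreases to the threshold.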

Proposition 2.3 follows from [Reference Liggett11, Theorem 2.8.13, Corollary 2.8.20, Theorem 9.3.17]. In detail, let $\{\overline{p}(x,y)\}_{x,y\in \mathbb{Z}^d}$ be the one-step transition probabilities of the discrete-time simple random walk on $\mathbb{Z}^d$, and $\{\overline{q}(x,y)\}_{x,y\in \mathbb{Z}^d}$ be defined as

\begin{align*} \overline{q}(x,y)= \begin{cases} \frac12{q(x,y)} & \text{if}\ x\neq y, \\[5pt] \frac12{q(x,y)}+1 & \text{if}\ x=y. \end{cases}\end{align*}

Furthermore, let $\overline{h}\colon \mathbb{Z}^d\rightarrow (0,+\infty)$ be defined as

Then, direct calculations show that $\overline{p}$, $\overline{q}$, and $\overline{h}$ satisfy the assumption of [Reference Liggett11, Theorem 2.8.13]. Consequently, applying [Reference Liggett11, Theorem 2.8.13], $\lim_{t\rightarrow+\infty}\overline{q}_t(x,y)=0$ for all $x,y\in \mathbb{Z}^d$, where $\overline{q}_t={\mathrm{e}}^{-t}{\mathrm{e}}^{t\overline{q}}$. It is easy to check that $q_t=\overline{q}_{2t}$ and hence the first limit in (i) is zero. By (2.6), $\hat{q}_t(x,y,z,w)\leq q_t((x-y), (z-w))$ and hence the second limit in (i) is zero. Therefore, (i) holds. It is not difficult to show that $\overline{p}$, $\overline{q}$, and $\overline{h}$ also satisfy the assumption of [Reference Liggett11, Corollary 2.8.20]. Applying this corollary, we have $\lim_{t\rightarrow+\infty}\sum_{y}q_t(x,y)=\overline{h}(x)$. Equation (2.2) shows that $\sum_{y}q_t(x,y)=\mathbb{E}_{\vec{1}}\,(\eta_t(z)\eta_t(z+x))$, and hence

\begin{align*} \lim_{t\rightarrow+\infty}\mathbb{E}_{\vec{1}}\,((\eta_t(x))^2) & = \overline{h}(O)=1+\frac{1}{h_{\lambda, d}}, \\ \lim_{t\rightarrow+\infty}{\rm Cov}_{\vec{1}}\,(\eta_t(x), \eta_t(y)) & = \overline{h}(x-y) - 1 = \frac{\Phi(x-y)}{h_{\lambda,d}}.\end{align*}

Note that the second limit also uses Proposition 2.1. These last two limits along with (i) ensure that $\{q_t\}_{t\geq 0}$ and $\vec{1}$ satisfy the assumption of [Reference Liggett11, Theorem 9.3.17]. By this theorem, (ii) and (iii) hold.

Proposition 2.4. ([Reference Xue and Zhao18].) There exist an integer $d_0\geq 5$ and a real number $\lambda_0>0$ satisfying the following properties:

- (i) If $d\geq d_0$ and $\lambda\geq \lambda_0$, then $\lambda>{1}/({2d(2\gamma_d-1)})$.

- (ii) For any $d\geq d_0$, $\lambda\geq \lambda_0$, and $x\in \mathbb{Z}^d$,

\begin{align*} \mathbb{E}_{\nu_{\lambda, d}}((\eta_0(O))^4) \leq \liminf_{t\rightarrow+\infty}\mathbb{E}^{\lambda,d}_{\vec{1}}((\eta_t(x))^4) < +\infty. \end{align*}

- (iii) For any $d\geq d_0$ and $\lambda\geq \lambda_0$,

\begin{align*} \lim_{M\rightarrow+\infty} \underset{\substack{{(x,y,z)\in(\mathbb{Z}^d)^3:} \\\|x-y\|_1\wedge\|x-z\|_1\geq M }}{\sup} {\rm Cov}_{\nu_{\lambda,d}}((\eta_0(x))^2, \eta_0(y)\eta_0(z))=0, \end{align*}

where $\|\cdot\|_1$ is the $l_1$-norm on $\mathbb{R}^d$ and $a\wedge b=\min\{a,b\}$ for $a,b\in \mathbb{R}$.

Here we briefly recall how to obtain Proposition 2.4 from the main results given in [Reference Xue and Zhao18]. Note that, in [Reference Xue and Zhao18], limit behaviors are discussed for

![]() $\eta_{tN^2}$

as

$\eta_{tN^2}$

as

![]() $N\rightarrow+\infty$

and t is fixed. All these behaviors can be equivalently given for

$N\rightarrow+\infty$

and t is fixed. All these behaviors can be equivalently given for

![]() $\eta_t$

as

$\eta_t$

as

![]() $t\rightarrow+\infty$

. In [Reference Xue and Zhao18], a discrete-time symmetric random walk

$t\rightarrow+\infty$

. In [Reference Xue and Zhao18], a discrete-time symmetric random walk

![]() $\{S_n^{\lambda,d}\}_{n\geq 0}$

on

$\{S_n^{\lambda,d}\}_{n\geq 0}$

on

![]() $(\mathbb{Z}^d)^4$

and function

$(\mathbb{Z}^d)^4$

and function

![]() $\overline{H}^{\lambda,d}\colon (\mathbb{Z}^d)^4\times(\mathbb{Z}^d)^4\rightarrow [1, +\infty)$

are introduced such that

$\overline{H}^{\lambda,d}\colon (\mathbb{Z}^d)^4\times(\mathbb{Z}^d)^4\rightarrow [1, +\infty)$

are introduced such that

\begin{align*} \mathbb{E}^{\lambda,d}_{\vec{1}}\Bigg(\prod_{i=1}^4\eta_t(x_i)\Bigg) \leq \mathbb{E}_{\vec{x}}\Bigg(\prod_{n=0}^{+\infty}\overline{H}(S_n, S_{n+1})\Bigg)\end{align*}

\begin{align*} \mathbb{E}^{\lambda,d}_{\vec{1}}\Bigg(\prod_{i=1}^4\eta_t(x_i)\Bigg) \leq \mathbb{E}_{\vec{x}}\Bigg(\prod_{n=0}^{+\infty}\overline{H}(S_n, S_{n+1})\Bigg)\end{align*}

for any

![]() $\vec{x}=(x_1, x_2, x_3, x_4)\in (\mathbb{Z}^d)^4$

and

$\vec{x}=(x_1, x_2, x_3, x_4)\in (\mathbb{Z}^d)^4$

and

![]() $t\geq 0$

. We refer the reader to [Reference Xue and Zhao18] for precise expressions of

$t\geq 0$

. We refer the reader to [Reference Xue and Zhao18] for precise expressions of

![]() $\overline{H}$

and the transition probabilities of

$\overline{H}$

and the transition probabilities of

![]() $\{S_n\}_{n\geq 0}$

. It is shown in [Reference Xue and Zhao18] that there exist

$\{S_n\}_{n\geq 0}$

. It is shown in [Reference Xue and Zhao18] that there exist

![]() $\,\hat{\!d}_0$

and

$\,\hat{\!d}_0$

and

![]() $\hat{\lambda}_0$

such that

$\hat{\lambda}_0$

such that

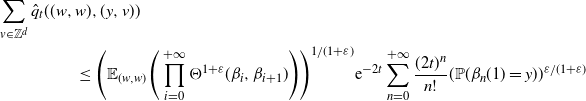

\begin{equation} \sup_{\vec{x}\in(\mathbb{Z}^d)^4} \mathbb{E}_{\vec{x}}\Bigg(\Bigg(\prod_{n=0}^{+\infty}\overline{H}(S_n,S_{n+1})\Bigg)^{1+\varepsilon}\Bigg) < +\infty\end{equation}

\begin{equation} \sup_{\vec{x}\in(\mathbb{Z}^d)^4} \mathbb{E}_{\vec{x}}\Bigg(\Bigg(\prod_{n=0}^{+\infty}\overline{H}(S_n,S_{n+1})\Bigg)^{1+\varepsilon}\Bigg) < +\infty\end{equation}

for some

![]() $\varepsilon=\varepsilon(\lambda, d)>0$

when

$\varepsilon=\varepsilon(\lambda, d)>0$

when

![]() $d\geq \,\hat{\!d}_0$

and

$d\geq \,\hat{\!d}_0$

and

![]() $\lambda\geq \hat{\lambda}_0$

. Taking

$\lambda\geq \hat{\lambda}_0$

. Taking

(i) holds. By Proposition 2.3(ii) and Fatou’s lemma, we have

Then, Proposition 2.4(ii) follows from (2.7). It is further shown in [Reference Xue and Zhao18] that

\begin{align*} |{\rm Cov}_{\vec{1}}\,(\eta_t(x)\eta_t(v),\eta_t(y)\eta_t(z))| \leq \mathbb{E}_{(x,v,y,z)}\Bigg(\prod_{n=0}^{+\infty}\overline{H}(S_n, S_{n+1}), \sigma<+\infty\Bigg)\end{align*}

for any $x,v,y,z\in \mathbb{Z}^d$ and $t\geq 0$, where $\sigma=\inf\{n\colon\{S_n(1), S_n(2)\}\bigcap\{S_n(3), S_n(4)\}\neq\emptyset\}$ and $S_n(j)$ is the jth component of $S_n$. Applying Proposition 2.4(ii) and (iii), the left-hand side in the last inequality can be replaced by $|{\rm Cov}_{\nu_{\lambda,d}}(\eta_0(x)\eta_0(v),\eta_0(y)\eta_0(z))|$. By (2.7) and the Hölder inequality, (iii) holds by checking that

By the transition probabilities of $\{S_n\}_{n\geq 0}$, $\{S_n(i)-S_n(j)\}_{n\geq 0}$ is a lazy version of the simple random walk on $\mathbb{Z}^d$ for any $1\leq i\neq j\leq 4$. Hence, (2.8) follows from the transience of the simple random walk on $\mathbb{Z}^d$ for $d\geq 3$, and then (iii) holds.

Now we give our main results. The first one is about the law of large numbers of the occupation time process $\big\{\int_0^t \eta_u(O)\,{\mathrm{d}} u\big\}_{t\geq 0}$.

Theorem 2.1. Assume that $d\geq 3$ and $\lambda>{1}/({2d(2\gamma_d-1)})$.

- (i) If the NBCPP on $\mathbb{Z}^d$ starts from $\nu_{\lambda, d}$, then

\begin{align*} \lim_{N\rightarrow+\infty}\frac{1}{N}\int_0^{tN}\eta_u(O)\,{\mathrm{d}} u = t \end{align*}

a.s. and in $L^2$ for any $t>0$.

- (ii) If the NBCPP on $\mathbb{Z}^d$ starts from $\vec{1}$, then

\begin{align*} \lim_{N\rightarrow+\infty}\frac{1}{N}\int_0^{tN}\eta_u(O)\,{\mathrm{d}} u = t \end{align*}

in $L^2$ for any $t>0$.

Remark 2.1. By Proposition 2.3, an NBCPP starting from $\nu_{\lambda, d}$ is stationary. Hence, Theorem 2.1(i) would follow immediately if we could verify ergodicity. However, as far as we know, it is still an open question whether an NBCPP starting from $\nu_{\lambda, d}$ is ergodic. As far as we understand, the currently known properties of NBCPPs do not provide convincing evidence for either a positive or a negative answer to this problem. We will work on this problem as a further investigation.

It is natural to ask what happens for the occupation time for small $\lambda$ and $d=1,2$. Currently, we have the following result.

Theorem 2.2. Assume that $d\geq 1$ and $\lambda<{1}/({2d})$. If the NBCPP on $\mathbb{Z}^d$ starts from $\vec{1}$, then

for any $t>0$.

Remark 2.2. Theorem 2.2 shows that there is no convergence in $L^2$ for the occupation time when $\lambda<1/(2d)$ and the process starts from $\vec{1}$. Combined with Theorem 2.1, the occupation time for the NBCPP on $\mathbb{Z}^d$ for $d\geq 3$ exhibits a phase transition as $\lambda$ grows from small to large. More problems arise from Theorem 2.2. What occurs in cases where $d=1,2$ and $\lambda\geq 1/(2d)$, or $d\geq 3$ and $1/(2d)\leq \lambda\leq {1}/({2d(2\gamma_d-1)})$? Furthermore, does the occupation time converge to 0 in distribution when $\lambda$ is sufficiently small? Our current strategy cannot answer these questions, which we will work on as further investigations.

Our third main result is about the CLT of the occupation time process. To state our result, we first introduce some notation and definitions. For any $t\geq 0$ and $N\geq 1$, we define

We write $X_t^N$ as $X_{t,\lambda,d}^N$ when we need to distinguish d and $\lambda$.

Here we recall the definition of ‘weak convergence’. Let S be a topological space, let $\{Y_n\}_{n\geq 1}$ be a sequence of S-valued random variables, and let Y be an S-valued random variable. We say that $Y_n$ converges weakly to Y if and only if $\lim_{n\rightarrow+\infty}\mathbb{E}f(Y_n)=\mathbb{E}f(Y)$ for any bounded and continuous f from S to $\mathbb{R}$.

Now we give our central limit theorem.

Theorem 2.3. Let $d_0$ and $\lambda_0$ be defined as in Proposition 2.4. Assume that $d\geq d_0$, $\lambda>\lambda_0$, and the NBCPP on $\mathbb{Z}^d$ starts from $\nu_{\lambda, d}$. Then, for any integer $m\geq 1$ and $t_1,t_2,\ldots,t_m\geq 0$, $\big(X_{t_1, \lambda, d}^N, X_{t_2, \lambda, d}^N,\ldots, X_{t_m, \lambda, d}^N\big)$ converges weakly, with respect to the Euclidean topology of $\mathbb{R}^m$, to $\sqrt{C_1(\lambda, d)}(B_{t_1}, B_{t_2},\ldots,B_{t_m})$ as $N\rightarrow+\infty$, where $\{B_t\}_{t\geq 0}$ is a standard Brownian motion and

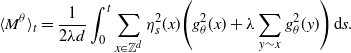

Remark 2.3. Theorem 2.3 is consistent with a calculation of variance. Applying Propositions 2.1–2.3 and the Markov property of $\{\eta_t\}_{t\geq 0}$,

Applying the strong Markov property of the simple random walk,

and hence

\begin{align*} \int_0^{+\infty}\sum_x\Phi(x)p_\theta(O,x)\,{\mathrm{d}}\theta & = \frac{\int_0^{+\infty}\int_0^{+\infty}\sum_xp_r(O,x)p_\theta(x,O)\,{\mathrm{d}} r\,{\mathrm{d}}\theta} {\int_0^{+\infty}p_\theta(O, O)\,{\mathrm{d}}\theta} \\ & = \frac{\int_0^{+\infty}\int_0^{+\infty}p_{r+\theta}(O, O)\,{\mathrm{d}} r\,{\mathrm{d}}\theta} {\int_0^{+\infty}p_\theta(O, O)\,{\mathrm{d}}\theta}. \end{align*}

Note that $p_t(O,O)=O(t^{-{d}/{2}})$. Hence,

if and only if $d\geq 5$. That is why we guess that Theorem 2.3 holds for all $d\geq 5$ and sufficiently large $\lambda$. Note that

by the strong Markov property, and hence

where $\{C(x)\}_{x\in \mathbb{Z}^d}$ are constants given in [Reference Cox and Griffeath3, Theorem 1] for cases where $d\geq 5$. Explicit calculations of the constants occurring in the main theorems and their dependency on d are given in [Reference Birkner and Zähle1, Section 1] and [Reference Cox and Griffeath3, Sections 0 and 2].
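The dimension threshold in Remark 2.3 can be made explicit with a short computation. This sketch assumes, beyond the stated bound $p_t(O,O)=O(t^{-{d}/{2}})$, the matching lower bound $p_t(O,O)\geq c\,t^{-{d}/{2}}$ for large t, which is the standard local limit theorem for the simple random walk; the constant $c>0$ is notation introduced here. Changing variables to $s=r+\theta$ with $r\in[0,s]$ gives

\begin{align*} \int_0^{+\infty}\int_0^{+\infty}p_{r+\theta}(O, O)\,{\mathrm{d}} r\,{\mathrm{d}}\theta = \int_0^{+\infty}\int_0^{s}p_{s}(O, O)\,{\mathrm{d}} r\,{\mathrm{d}} s = \int_0^{+\infty}s\,p_s(O,O)\,{\mathrm{d}} s. \end{align*}

The integrand is bounded near $s=0$ and is of order $s^{1-{d}/{2}}$ as $s\rightarrow+\infty$, so the integral is finite exactly when $1-{d}/{2}<-1$, that is, when $d\geq 5$.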

Remark 2.4. Under the assumptions of Theorem 2.3, it is natural to ask, for any given $T>0$, whether $\big\{X_{t,\lambda,d}^N\big\}_{0\leq t\leq T}$ converges in distribution to $\{\sqrt{C_1(\lambda, d)}B_t\}_{0\leq t\leq T}$ with respect to the Skorokhod topology. We think the answer is positive but we cannot prove this claim with our current approach. Roughly speaking, in the proof of Theorem 2.3 we decompose the centralized occupation time as a martingale plus an error function. We can show that the error function converges to 0 in distribution at each moment $t>0$ but we cannot check the tightness of this error function to show that it converges weakly to the zero function with respect to the Skorokhod topology. We will work on this problem as a further investigation.

Theorem 2.3 shows that the central limit theorem of the NBCPP is an analogue of that of voter models and branching random walks given in [Reference Birkner and Zähle1, Reference Cox and Griffeath3] when the dimension d is sufficiently large. Using Proposition 2.3 and the fact that $p_t(O,O)=O(t^{-{d}/{2}})$, calculations of variances similar to that in Remark 2.3 show that

when $d=4$ and

when $d=3$ for any $t,s>0$ and some constants $K_3, K_4\in (0, +\infty)$. These two limits provide evidence for us to guess that, as N grows to infinity,

converges weakly to $\sqrt{K_3}$ times the fractional Brownian motion with Hurst parameter $\frac34$ when $d=3$, and

converges weakly to $\{\sqrt{K_4}B_t\}_{t\geq 0}$ when $d=4$, where $\{B_t\}_{t\geq 0}$ is the standard Brownian motion. Furthermore, Remark 2.3 provides evidence for us to guess that Theorem 2.3 holds for all $d\geq 5$. That is to say, central limit theorems of occupation times of NBCPPs on $\mathbb{Z}^3$, $\mathbb{Z}^4$, and $\mathbb{Z}^d$ for $d\geq 5$ are guessed to be analogues of those of voter models and branching random walks in each case. However, our current proof of Theorem 2.3 relies heavily on the fact that $\sup_{t\geq 0}\mathbb{E}_{\vec{1}}^{\lambda, d}((\eta_t(O))^4)<+\infty$ when d and $\lambda$ are sufficiently large, which we have not managed to prove yet for small d. That is why we currently only discuss the NBCPP on $\mathbb{Z}^d$ with d sufficiently large.
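The decay $p_t(O,O)=O(t^{-{d}/{2}})$ used in the variance heuristics above can be checked numerically. The sketch below relies on a product formula that is standard but not stated in the text: for the rate-1 walk with generator L, the d coordinates are independent walks on $\mathbb{Z}$ with total jump rate $1/d$, so that $p_t(O,O)=({\mathrm{e}}^{-t/d}I_0(t/d))^d$ with $I_0$ the modified Bessel function of order zero. The function names are illustrative.

```python
import numpy as np


def return_probability(t: float, d: int) -> float:
    """p_t(O, O) for the rate-1 simple random walk on Z^d.

    The d coordinates are independent walks on Z with total jump rate 1/d,
    each of which sits at 0 at time t with probability exp(-t/d) * I_0(t/d).
    """
    x = t / d
    return float(np.exp(-x) * np.i0(x)) ** d


def gaussian_approximation(t: float, d: int) -> float:
    """Leading-order large-t behavior (d / (2*pi*t))^(d/2)."""
    return (d / (2.0 * np.pi * t)) ** (d / 2.0)


# Ratio of the exact value to the t^{-d/2} asymptotics at a large time.
ratios = {d: return_probability(400.0, d) / gaussian_approximation(400.0, d)
          for d in (3, 4, 5)}
```

The ratios stay close to 1 for each of $d=3,4,5$, which is consistent with the two-sided $t^{-{d}/{2}}$ behavior behind the different scalings conjectured for $d=3$, $d=4$, and $d\geq 5$.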

Another possible way to extend Theorem 2.3 to cases with small d is to check the ergodicity of the NBCPP, as mentioned in Remark 2.1. If the NBCPP is ergodic, then we can apply Birkhoff’s theorem for f with the form $f(\eta)=\eta^2(O)$ and then an upper bound of fourth moments of $\{\eta_t(O)\}_{t\geq 0}$ is not required. We will pursue this approach as a further investigation.

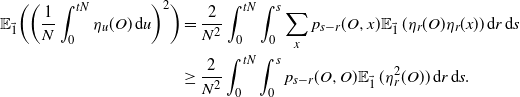

Proofs of Theorems 2.1, 2.2, and 2.3 are given in Section 3. The proof of Theorem 2.1 is relatively easy, where it is shown that

according to Propositions 2.1 and 2.3. The proof of Theorem 2.2 utilizes the coupling relationship between the NBCPP and the contact process to show that

The proof of Theorem 2.3 is inspired by [Reference Birkner and Zähle1], which gives CLTs of occupation times of critical branching random walks. The core idea of the proof is to decompose $\int_0^t(\eta_u(O)-1)\,{\mathrm{d}} u$ as a martingale $M_t$ plus a remainder $R_t$ such that the quadratic variation process $({1}/{N})\langle M\rangle_{tN}$ of $({1}/{\sqrt{N}})M_{tN}$ converges to $C_1 t$ in $L^2$ and $({1}/{\sqrt{N}})R_{tN}$ converges to 0 in probability as $N\rightarrow+\infty$. To give the above decomposition, a resolvent function of the simple random walk $\{Y_t\}_{t\geq 0}$ on $\mathbb{Z}^d$ is utilized. There are two main technical difficulties in the execution of the above strategy for the NBCPP. The first one is to prove that ${\rm Var}(({1}/{N})\langle M\rangle_{tN})$ converges to 0 as $N\rightarrow+\infty$, which ensures that the limit of $({1}/{N})\langle M\rangle_{tN}$ is deterministic. The second one is to show that $\mathbb{E}(({1}/{N})\sup_{0\leq s\leq tN}(M_s-M_{s-})^2)$ converges to 0 as $N\rightarrow+\infty$, which ensures that the limit process of $\{({1}/{\sqrt{N}})M_{tN}\}_{t\geq 0}$ is continuous. To overcome the first difficulty, we relate the calculation of ${\rm Var}(\langle M\rangle_{tN})$ to a random walk on $\mathbb{Z}^{2d}$ according to the Kolmogorov–Chapman equation of linear systems introduced in [Reference Liggett11]. To overcome the second difficulty, we bound $\mathbb{P}(({1}/{N})\sup_{0\leq s\leq tN}(M_s-M_{s-})^2>\varepsilon)$ from above by

![]() $O\big(({1}/{N^2})\int_0^{tN}\mathbb{E}(\eta^4_s(O))\,{\mathrm{d}} s\big)$

according to the strong Markov property of our process. For the mathematical details, see Section 3.

$O\big(({1}/{N^2})\int_0^{tN}\mathbb{E}(\eta^4_s(O))\,{\mathrm{d}} s\big)$

according to the strong Markov property of our process. For the mathematical details, see Section 3.
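For orientation, the decomposition described above follows a standard resolvent pattern, which can be sketched in generic form. The symbols $\mathcal{L}$, $g$, $\alpha$, and $u_\alpha$ are illustrative assumptions and are not the notation of Section 3, where a resolvent function of the random walk $\{Y_t\}_{t\geq 0}$ is used rather than a resolvent of the process itself. Let $\{\eta_t\}_{t\geq 0}$ be a Markov process with generator $\mathcal{L}$, let $\alpha>0$, and suppose that $u_\alpha$ solves $\alpha u_\alpha-\mathcal{L}u_\alpha=g$ and is regular enough for Dynkin's formula to apply. Then

\begin{align*} M_t & := u_\alpha(\eta_t)-u_\alpha(\eta_0)-\int_0^t\mathcal{L}u_\alpha(\eta_s)\,{\mathrm{d}} s \quad \text{is a martingale}, \\ \int_0^t g(\eta_s)\,{\mathrm{d}} s & = M_t + R_t, \qquad R_t := u_\alpha(\eta_0)-u_\alpha(\eta_t)+\alpha\int_0^t u_\alpha(\eta_s)\,{\mathrm{d}} s. \end{align*}

The second line follows by substituting $\mathcal{L}u_\alpha=\alpha u_\alpha-g$ into the first. With $g(\eta)=\eta(O)-1$ this has the same shape as the decomposition of $\int_0^t(\eta_u(O)-1)\,{\mathrm{d}} u$ into $M_t$ and $R_t$, and the two technical difficulties listed above correspond to the usual requirements of a martingale central limit theorem: a deterministic limit for the rescaled quadratic variation and asymptotically negligible jumps.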

3. Theorem proofs

In this section we prove Theorems 2.1, 2.2, and 2.3. We first give the proof of Theorem 2.1.

Proof of Theorem 2.1. Throughout this proof we assume that $d\geq 3$ and $\lambda>{1}/({2d(2\gamma_d-1)})$. We only prove (i), since (ii) follows from an analysis similar to that leading to the $L^2$ convergence in (i). So in this proof we further assume that $\eta_0$ is distributed according to $\nu_{\lambda,d}$. By Proposition 2.3, the NBCPP starting from $\nu_{\lambda, d}$ is stationary. Hence, applying Birkhoff’s ergodic theorem,

where $\mathcal{I}$ is the set of all invariant events. As a result, we only need to show that the convergence in Theorem 2.1 is in $L^2$.

Applying Proposition 2.3, $\{\eta_u(O)\}_{u\geq 0}$ are uniformly integrable and hence

Therefore,

for any $N\geq 1$ and $t>0$. Consequently,

Hence, to prove the $L^2$ convergence in Theorem 2.1 we only need to show that

for any $t\geq 0$. Since $\nu_{\lambda,d}$ is an invariant measure of our process,

\begin{align} {\rm Var}_{\nu_{\lambda, d}}\bigg(\int_0^{tN}\eta_u(O)\,{\mathrm{d}} u\bigg) & = 2\int_0^{tN}\bigg(\int_0^\theta{\rm Cov}_{\nu_{\lambda, d}}(\eta_r(O),\eta_{\theta}(O))\,{\mathrm{d}} r\bigg)\,{\mathrm{d}}\theta \notag \\ & = 2\int_0^{tN}\bigg(\int_0^\theta{\rm Cov}_{\nu_{\lambda, d}}(\eta_0(O),\eta_{\theta-r}(O))\,{\mathrm{d}} r\bigg)\,{\mathrm{d}}\theta. \end{align}
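The double integral above can be reduced to a single integral, which makes the role of the covariance decay explicit. The following worked step is only a sketch: the shorthand $c(s)={\rm Cov}_{\nu_{\lambda,d}}(\eta_0(O),\eta_s(O))$ and $T=tN$ is introduced here for illustration, and $c$ is assumed to be bounded on $[0,T]$ so that Fubini's theorem applies. Substituting $s=\theta-r$ in the inner integral and then exchanging the order of integration gives

\begin{align*} 2\int_0^{T}\bigg(\int_0^\theta c(\theta-r)\,{\mathrm{d}} r\bigg)\,{\mathrm{d}}\theta = 2\int_0^{T}\bigg(\int_0^\theta c(s)\,{\mathrm{d}} s\bigg)\,{\mathrm{d}}\theta = 2\int_0^{T}(T-s)\,c(s)\,{\mathrm{d}} s. \end{align*}

Since $0\leq T-s\leq T$ on $[0,T]$, it follows that

\begin{align*} \frac{1}{T^2}\,{\rm Var}_{\nu_{\lambda, d}}\bigg(\int_0^{T}\eta_u(O)\,{\mathrm{d}} u\bigg) \leq \frac{2}{T}\int_0^{T}|c(s)|\,{\mathrm{d}} s. \end{align*}

In particular, if $c$ is bounded and $c(s)\rightarrow 0$ as $s\rightarrow+\infty$, then the right-hand side is twice a Cesàro average of $|c|$ and tends to 0 as $T\rightarrow+\infty$.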

Applying Propositions 2.1 and 2.3,

\begin{align} {\rm Cov}_{\nu_{\lambda,d}}(\eta_0(O),\eta_t(O)) & = \mathbb{E}_{\nu_{\lambda,d}}(\eta_0(O)\eta_t(O)) - 1 \notag \\ & = \mathbb{E}_{\nu_{\lambda,d}}\Bigg(\eta_0(O)\Bigg(\sum_{y\in \mathbb{Z}^d}p_t(O, y)\eta_0(y)\Bigg)\Bigg)-1 \notag \\ & = \sum_{y\in\mathbb{Z}^d}p_t(O,y)(\mathbb{E}_{\nu_{\lambda,d}}(\eta_0(O)\eta_0(y)) - 1) \notag \\ & = \sum_{y\in\mathbb{Z}^d}p_t(O,y){\rm Cov}_{\nu_{\lambda,d}}(\eta_0(O),\eta_0(y)) \notag\\ & = \frac{1}{h_{\lambda, d}}\sum_{y\in \mathbb{Z}^d}p_t(O, y)\Phi(y). \end{align}
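The individual steps of the chain above can be unpacked as follows. This is a reading of the display, under the assumption that Proposition 2.1 supplies the linear conditional-mean identity $\mathbb{E}(\eta_t(O)\mid\eta_0)=\sum_{y\in\mathbb{Z}^d}p_t(O,y)\eta_0(y)$, that $p_t(\cdot,\cdot)$ are random-walk transition probabilities, and that Proposition 2.3 gives $\mathbb{E}_{\nu_{\lambda,d}}(\eta_0(y))=1$ for all $y$ together with the stationary covariance ${\rm Cov}_{\nu_{\lambda,d}}(\eta_0(O),\eta_0(y))=\Phi(y)/h_{\lambda,d}$. The second equality is then the tower property,

\begin{align*} \mathbb{E}_{\nu_{\lambda,d}}(\eta_0(O)\eta_t(O)) = \mathbb{E}_{\nu_{\lambda,d}}\big(\eta_0(O)\,\mathbb{E}(\eta_t(O)\mid\eta_0)\big) = \mathbb{E}_{\nu_{\lambda,d}}\Bigg(\eta_0(O)\sum_{y\in\mathbb{Z}^d}p_t(O,y)\eta_0(y)\Bigg). \end{align*}

The third equality exchanges the sum with the expectation, which is permitted for non-negative terms, and writes the constant as $1=\sum_{y\in\mathbb{Z}^d}p_t(O,y)$ so that it can be moved inside the sum. The fourth equality uses $\mathbb{E}_{\nu_{\lambda,d}}(\eta_0(O))\,\mathbb{E}_{\nu_{\lambda,d}}(\eta_0(y))=1$ to recognize each summand as a covariance.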

Therefore, by (3.3), for any $t\geq 0$,

\begin{equation} -1 \leq {\rm Cov}_{\nu_{\lambda,d}}(\eta_0(O),\eta_t(O)) \leq \frac{1}{h_{\lambda,d}}\sum_{y\in\mathbb{Z}^d}p_t(O,y) = \frac{1}{h_{\lambda, d}}, \end{equation}

and hence

![]() $\sup_{t\geq 0}|{\rm Cov}_{\nu_{\lambda, d}}(\eta_0(O), \eta_t(O))|<+\infty$