Introduction

University rankings occupy a significant place in the contemporary higher education landscape. They influence the strategic planning of tertiary institutions, guide the decisions of diverse stakeholders, and have a visible impact on national and international higher education policies. Universities around the world use rankings as metrics to gauge institutional performance, assess reputation and identify institutional strengths and weaknesses. Domestic and international students frequently treat rankings as a key factor in their educational choices, with consequences for enrolment patterns and, in turn, for the financial health of universities. Rankings also feed into the formulation of national and international higher education policies, influencing government funding mechanisms and requiring universities to comply with criteria and objectives set by regulatory bodies. Their influence therefore extends across the academic, administrative and policy levels of the higher education sector, shaping both the strategic direction of tertiary institutions and the policy environment in which they operate (Altbach and Hazelkorn 2019; Marginson 2014).

Since the beginning of the new millennium, ranking universities has become a worldwide phenomenon in higher education. Although the first rankings appear to date back to 1910 in the United States, with the work of McKeen Cattell (Hammarfelt et al. 2017), it was the internet, and the ease of access to information it brought, that gave rankings the popularity they enjoy today. Not only have rankings come to cover more and more institutions through calculative metrics, but they have also been regarded as marketing assets and as one of the motives behind organizational policies aimed at a better standing in national and international systems. This has attracted considerable criticism of the methodological validity and reliability of rankings, as well as of their determinants and implications for higher education institutions. The topic has drawn so much attention partly because of how university rankings are used. First, they matter to education administrators, students, parents and academics. Second, part of the reason rankings matter is that some of the organizations that produce them work very hard to make them matter (Lim 2018; Ringel et al. 2020). Moreover, with increasing attention to accountability and transparency in the management of institutions, universities (have to) openly and regularly declare a large amount of data on their performance, ranging from academic production to student enrolment and non-academic affairs. Therefore, whether they like it or not, higher education institutions are and will continue to be ranked by different ranking systems whose methodology, scope and implications will continue to be criticized in the future.

It is nevertheless notable that the ‘big three’ ranking systems (Times Higher Education (THE), QS and ARWU) remain the most researched and most referenced, and that they have largely retained their original methodologies despite all the criticism they have received so far. Ordinarily, when the fundamentals of a design are subject to sustained debate, that design is likely to be transformed into, or replaced by, one that enjoys more support in academic circles. Perhaps what Hazelkorn (2015) defined as ‘the battle for world-class excellence’ has already turned into ‘a battle for prestige’, and however unfavourable the term may sound, it is certainly very appealing and deceptive.

One reason why university ranking systems do not change (drastically) to address the concerns raised in the literature may be the underlying problem of the effectiveness of knowledge sharing (Bejan 2007). In other words, although academic research starts from research questions, the extent to which each research question addresses a gap in the literature is another matter. To fill those gaps, it has been suggested that research gaps should be structured and characterized according to their functionality (Miles 2017). This, in turn, requires that the scope of the literature, or at least part of it, be determined. Scoping reviews are considered appropriate when a body of literature has not yet been comprehensively reviewed or is large and complex in nature (Peters et al. 2015).

At this point, it would be beneficial to shed more light on potential gaps in the literature, in relation to what researchers have been examining over the past five years, and to provide more insight into the potential size and scope of research on university rankings so that the discussion can progress (Romund 2023). This is one of the main aims of scoping reviews, and of this study. A scoping review is defined as a systematic approach to mapping the evidence on a topic and identifying its main concepts, theories, sources and knowledge gaps (Tricco et al. 2018). Typically, a scoping review does not attempt to synthesize quantitative and qualitative data, but rather to identify, present and discuss relevant features of the sources of evidence (Peters et al. 2021). The literature also notes that scoping reviews help identify topics for further research and for systematic reviews (Lockwood et al. 2019).

Following the same rationale, this scoping review highlights that most of the research in this domain focuses on the implications and determinants of current university ranking systems. Methodology also continues to be discussed extensively, particularly in relation to questions such as whether university rankings indeed measure what they intend to measure, whether they produce similar results from similar data, and whether their performance indicators can comparatively and effectively reflect institutional performance. By contrast, as the findings of this research show, only 10% of the research reviewed focuses on alternative models.

Additionally, to the author's knowledge, this study is the first analytical study of research trends in university rankings. By identifying the scope of research across the topic of university rankings, gaps in the current literature and areas that need further investigation, this study aims to help researchers plan their studies accordingly, identify more beneficial research methods in the light of what has already been mapped here, and inspire them to expand the scope of research on university rankings. The purpose of this article is therefore to capture the state of the art in research on university rankings. However, based on the analyses of data in this study, an overarching purpose of all research on this topic may be ‘perfecting ranking methodologies’, which is why the author has included remarks in this direction in the conclusions.

In terms of a scoping analysis of publication trends in higher education rankings, this study is one of the first (if not the first) analyses of the publication scope of academic articles on the topic. When a thorough search is conducted on trends in university rankings, the results mostly consist of articles discussing the currently popular ranking systems, namely THE, QS and ARWU (Natalia 2020), and reports on emerging topics attracting attention, such as internationalization (De Wit 2010). Although university rankings are mentioned as one of the trending topics in higher education in various publications (Altbach et al. 2009; Shin and Harman 2009), there has been no scoping analysis of research publications on the topic so far.

Consequently, the purpose of this research is to conduct a scoping analysis of the top 100 most cited articles on university rankings published in academic journals over a five-year period, from 2017 to 2021. To fulfil this purpose, the study seeks to answer the following research questions:

- RQ1. What are the top 100 most cited academic articles on university rankings?

- RQ2. Of the articles included, what are the most frequent: (a) research areas, (b) author affiliations, (c) research designs, (d) sample ranking systems, (e) data collection instruments, (f) data analysis techniques, (g) focused variables and (h) keywords?

- RQ3. What topics (themes) emerge from a content analysis of the abstracts of these articles?

Methods

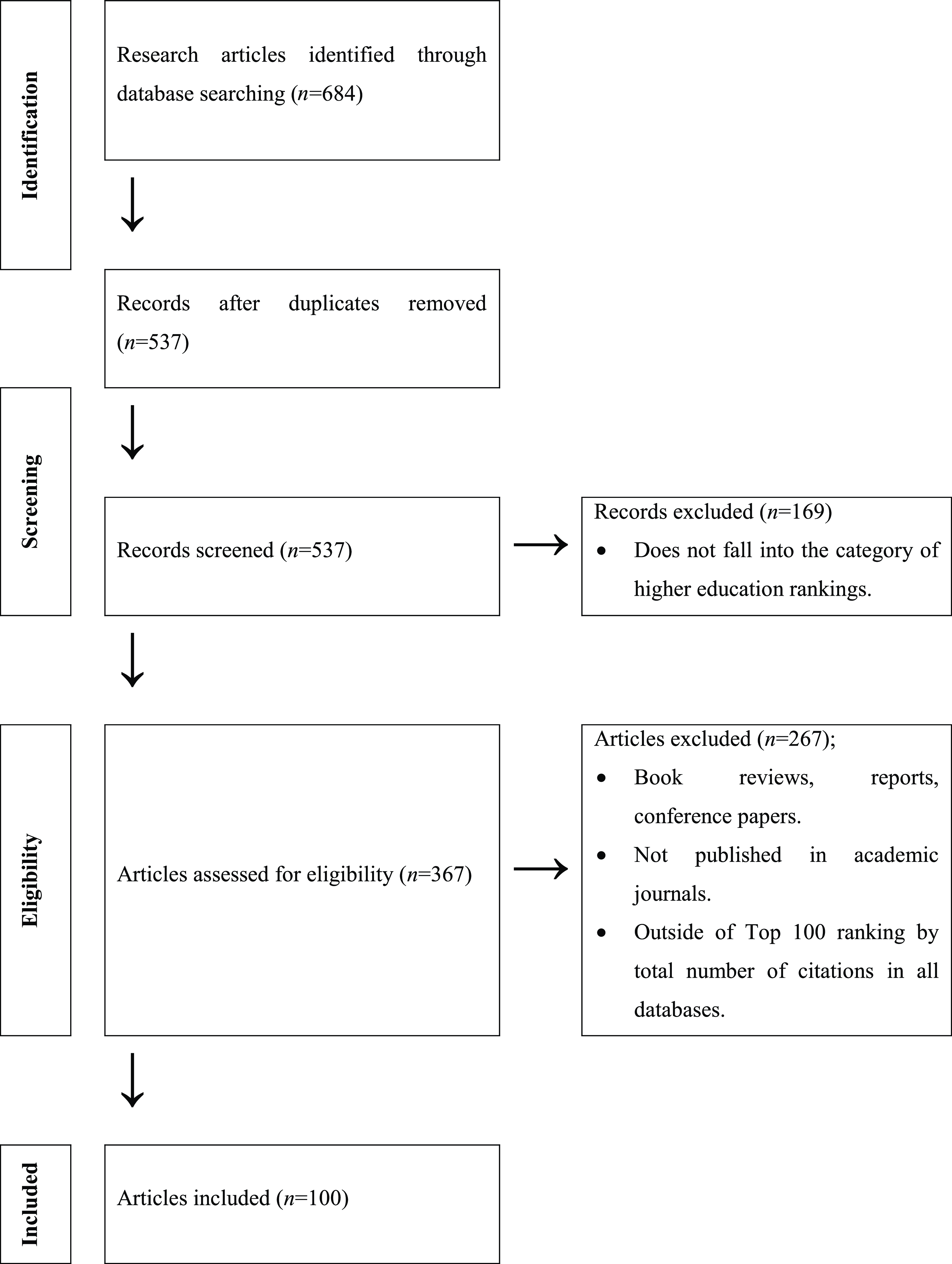

This article follows the guidance of the PRISMA extension for scoping reviews (Tricco et al. 2018). A detailed overview of how the protocol was applied can be found in Table 2 of the Supplementary Material to the article. To answer the research questions, the articles are selected using the PRISMA protocol for identification, screening, eligibility and inclusion, as shown in Figure 1.

Figure 1. PRISMA flow of article selection process.

A number of databases are searched for academic articles: ABI/INFORM Collection, Academic Search Ultimate, Google Scholar, JSTOR Archive Collection A–Z Listing, SpringerLink Contemporary (1997–Present), International Bibliography of Social Sciences (IBSS), Wiley Online Library All Journals, SpringerNature Springer Journals All 2020 and Taylor & Francis Current Content Access. The search terms used in these databases are: university rankings, college rankings and higher education rankings. For example, the following search strings are used in the initial search:

‘ranking’ OR ‘rankings’ AND university

higher education OR university AND ranking

The total number of citations is not a sum of citations from different databases such as Web of Science, Scopus or Google Scholar; citation counts are taken from Google Scholar only. The search is narrowed down to publications from 2017 to 2021, a time frame chosen to better reflect current trends in the literature. Publishing journals, titles and abstracts are reviewed to ensure that each article is published in an academic journal and is related to the subject of university rankings. The articles are then ordered by their total number of citations in Google Scholar, and the 100 most cited articles are included in this study. In other words, the researcher screened the 684 articles returned by the initial database search and was left with 100 articles that met all the criteria. The inclusion criteria were: (a) the article is published in an academic journal indexed in the above-mentioned databases; (b) the article is published between 2017 and 2021; (c) the article is in English; (d) the article is related to the topic of ‘university rankings’ either in its methodology or in its conclusion. That is, an article is included if its sample contains one or more university ranking systems, whether overall performance rankings or subject- or country-specific ranking systems, or if it draws conclusions from such ranking systems, comparatively or descriptively. Data extraction is carried out manually by the researcher using an Excel workbook in which the following are recorded for each article: (a) title and abstract, (b) number of citations, (c) first author's affiliation, (d) name of the publishing journal, (e) publication year, (f) author names, (g) research area, (h) research methodology, (i) sample, (j) data collection instruments, (k) data analysis techniques, (l) focused variables and (m) keywords.

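
The selection procedure described above (screen against the inclusion criteria, then order by Google Scholar citations and keep the top 100) can be sketched in Python. This is a minimal illustration under stated assumptions: the `Article` fields and the function names `meets_criteria` and `select_top` are hypothetical, the eligibility judgements that the researcher made manually are represented as pre-set boolean flags, and the records in the usage example are invented rather than taken from the study.

```python
from dataclasses import dataclass


@dataclass
class Article:
    # Hypothetical record layout; the study kept these fields in an Excel workbook.
    title: str
    year: int
    language: str
    in_academic_journal: bool  # published in an academic journal (criterion a)
    about_rankings: bool       # judged manually from journal, title and abstract (criterion d)
    gs_citations: int          # total citations in Google Scholar only


def meets_criteria(a: Article) -> bool:
    """Apply the four inclusion criteria listed in the Methods section."""
    return (
        a.in_academic_journal
        and 2017 <= a.year <= 2021
        and a.language == "English"
        and a.about_rankings
    )


def select_top(articles: list[Article], k: int = 100) -> list[Article]:
    """Filter by the inclusion criteria, then keep the k most cited articles."""
    eligible = [a for a in articles if meets_criteria(a)]
    # sorted() is stable, so articles with equal citation counts keep their input order
    return sorted(eligible, key=lambda a: a.gs_citations, reverse=True)[:k]


# Made-up records for illustration only (not data from this study)
demo = [
    Article("A", 2018, "English", True, True, 50),
    Article("B", 2016, "English", True, True, 500),   # outside 2017-2021
    Article("C", 2020, "English", True, True, 120),
    Article("D", 2019, "Spanish", True, True, 300),   # not in English
    Article("E", 2021, "English", True, False, 400),  # not about rankings
    Article("F", 2017, "English", True, True, 80),
]
top = select_top(demo, k=2)
print([a.title for a in top])  # ['C', 'F']
```

Filtering before sorting mirrors the order reported above: ineligible articles are removed first, so a highly cited but out-of-scope article (such as the hypothetical "B" or "E") cannot take one of the top positions.
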
The researcher analysed the articles’ abstracts and keywords using NVivo software to obtain the keyword frequency table, topics and concept maps. The process used to build the concept map shown later in Figure 4 is detailed in Table 3 of the Supplementary Material to this article. A mind map offers visual clarity and organization, which aids consistency and stability. It can be updated easily without disrupting its overall structure, keeps sources of information traceable, and is modular enough to allow isolated changes. Revision control in digital tools makes it possible to track modifications and revert them when necessary, and working on a shared mind map helps collaborators maintain data integrity and a common understanding.

Findings

The top 100 most cited articles are given in Table 1 of the Supplementary Material to this article. The research areas of these articles are grouped according to the main purpose of each study, that is, what it intends to analyse using the methodology it describes. On this basis, the following areas are determined:

- (1) Implications of university rankings
  - (a) Internationalization and competition
  - (b) Governance and autonomy
  - (c) Productivity and quality
- (2) Determinants of university rankings
  - (a) Institutional determinants
  - (b) Regional or country-specific determinants
  - (c) Global determinants
  - (d) Other (e.g. multi-authoring)
- (3) Alternative models
  - (a) Theoretical models (suggested model is given as a framework in theory)
  - (b) Practical models (suggested model is applied to HEIs and presented in conclusion)
- (4) Methodology
  - (a) Validity (measuring what it intends to measure)
  - (b) Reliability (similarity of results when the same methodology is repeated)
  - (c) Performance indicators (methodological, holistic, lexical, semantic problems)
- (5) Role (function) of university rankings

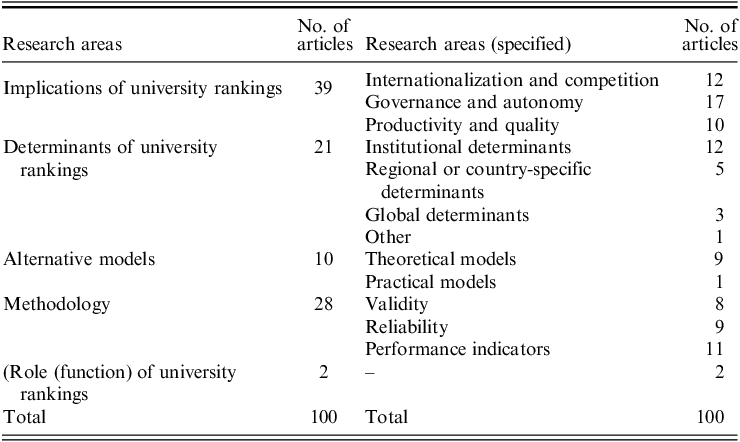

Accordingly, the frequency of the research areas is given in Table 1.

Table 1. Number of articles by research areas

The findings on research areas show that implications of university rankings account for 39% of the top 100 most cited articles in academic journals, making this the most researched area between 2017 and 2021. Within this area, how university rankings influence the governance and autonomy of higher education institutions receives the most attention from scholars. Among the variables examined in research on implications, the most common are organizational policy (16)ᵃ, quality assurance (2) and resource allocation (1).

The second most researched area is the methodology of university rankings, with 28% of all research on this topic. The variables most commonly analysed in this respect are performance indicators (22), rank differences (21), and validity and reliability (11), that is, whether rankings measure what they intend to measure and how similar the results are when the same dataset is applied to another ranking system. Among methodology studies, ‘rank differences’ is the most commonly examined variable. This type of research is divided between a quantitative method with a comparative correlational approach (16), in which scholars use statistical information obtained from publicly declared ranking tables (35) and conduct analyses through correlations (Pearson or Spearman; 16), regressions (20), central tendency (mean-median-mode; 4) and variability (variance-standard deviation-range; 11); and a qualitative method with a content analysis approach (29), in which scholars collect data through document analysis (22) and conduct analyses through content analysis (11), discourse analysis (6) and thematic analysis (5).
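
To make the ‘rank differences’ variable and the correlational approach concrete, the Python sketch below compares the positions of the same institutions in two ranking tables, computing per-institution rank differences and Spearman's rank correlation. It is a minimal illustration under stated assumptions: the function names and the five-university example are hypothetical, both tables are assumed to rank exactly the same institutions, and neither contains ties. Published ranking tables often report tied or banded positions, in which case a tie-aware implementation such as `scipy.stats.spearmanr` would be more appropriate.

```python
def rank_differences(ranks_x: list[int], ranks_y: list[int]) -> list[int]:
    """Per-institution difference between two rank positions (x minus y)."""
    return [x - y for x, y in zip(ranks_x, ranks_y)]


def spearman_rho(ranks_x: list[int], ranks_y: list[int]) -> float:
    """Spearman's rank correlation for two rankings of the same institutions.

    Uses rho = 1 - 6 * sum(d^2) / (n * (n^2 - 1)), which is exact only when
    neither ranking contains ties.
    """
    n = len(ranks_x)
    if n != len(ranks_y) or n < 2:
        raise ValueError("need two rankings of equal length, with at least 2 entries")
    d_squared = sum(d * d for d in rank_differences(ranks_x, ranks_y))
    return 1 - 6 * d_squared / (n * (n * n - 1))


# Hypothetical positions of the same five universities in two ranking systems
system_a = [1, 2, 3, 4, 5]
system_b = [2, 1, 3, 5, 4]
print(rank_differences(system_a, system_b))        # [-1, 1, 0, -1, 1]
print(round(spearman_rho(system_a, system_b), 3))  # 0.8
```

A value close to 1 means the two systems order the institutions similarly even when individual positions differ; the list of rank differences shows where, and by how much, they disagree.
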

The third most researched area is the determinants of university rankings, which accounts for 21% of all studies in the top 100. These studies focus mostly on institutional determinants (12), which are essentially the performance indicators that universities target internally through strategic planning and that are thought to have a direct impact on their standing in national or international rankings.

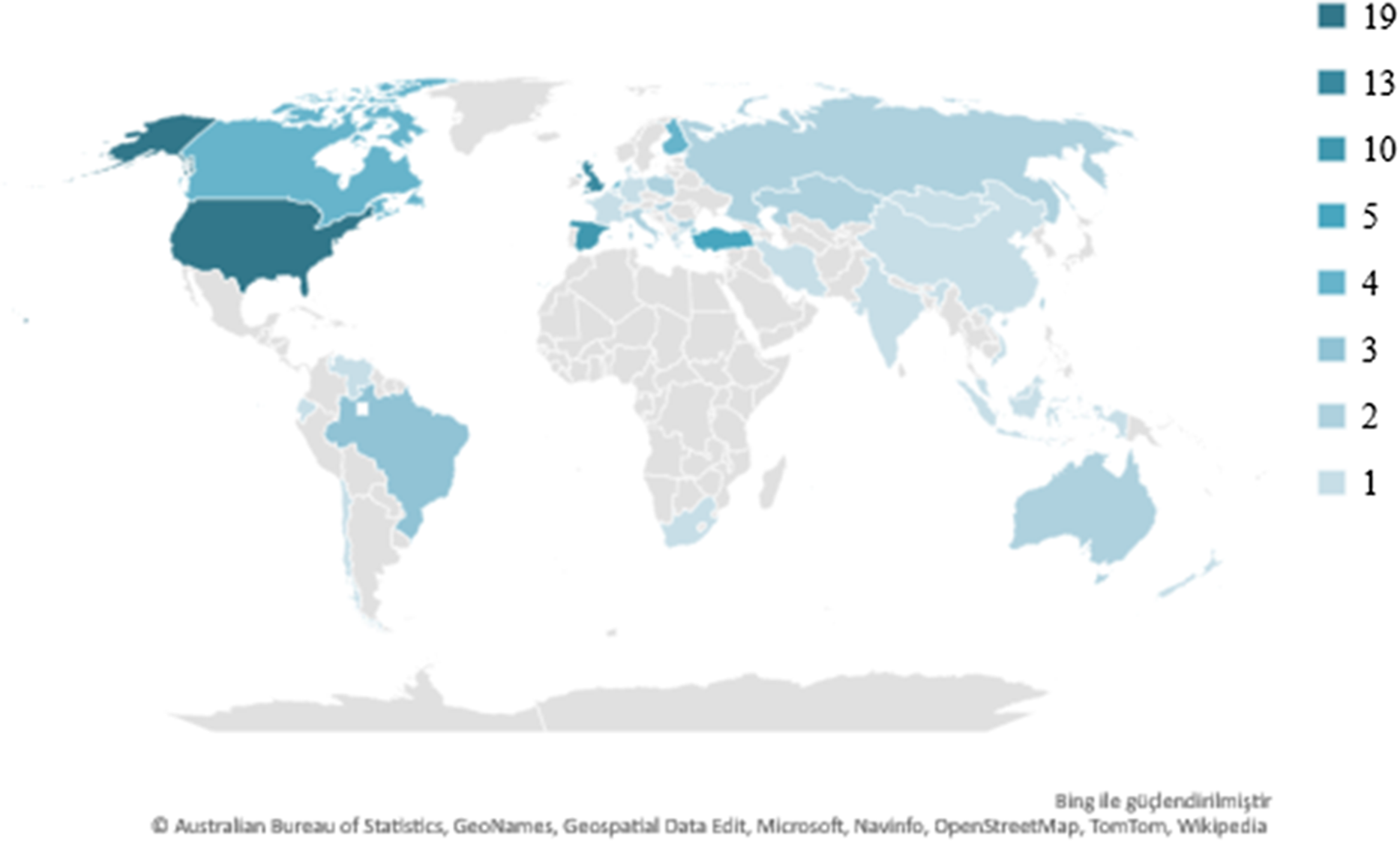

In terms of first-author affiliations, displayed in Figure 2, the United States holds first place with 19 articles whose first authors are affiliated with institutions in this country. These authors have studied the implications of university rankings (7), especially in terms of governance and autonomy (5) and productivity and quality (2), as well as institutional determinants (4) and regional and country-specific determinants (1). Authors from the US also suggest theoretical models (3). As for research design, comparative correlational and content analysis designs account for five articles each, followed by causal comparative (2) and group comparison (1) designs. The scope of these studies mainly covers international ranking systems (15). Authors from the United Kingdom take second place with 13 articles in the top 100. Most of these studies (8) focus on the implications of university rankings, using qualitative data analysis techniques such as content analysis (4), thematic analysis (2) and discourse analysis (1). Organizational policy appears to be the most common variable in these studies (5), followed by methodology (4) and rank differences (2). Spain is third, with 10 articles, mostly about the determinants (5), implications (3) and methodology (2) of university rankings. Almost all of these publications (9) use quantitative methodologies, in which comparative correlational (6) and causal comparative (3) designs dominate. After Spain, Turkey has five articles in the top 100, followed by Canada, the Netherlands and Finland with four articles each. Brazil has three articles in the list, and Australia, Bulgaria, Hungary, Italy, Kazakhstan, Poland, Russia, Slovenia and Taiwan have two articles each. The remaining countries in the list have one article each in the top 100: Belgium, Chile, China, Denmark, Ecuador, France, Germany, Greece, India, Indonesia, Iran, Luxembourg, Malaysia, Mauritius, Mongolia, New Zealand, Serbia, South Africa, Venezuela and Vietnam. It is also worth noting that multi-authoring is quite common in the top 100. Although single-authored articles form the largest group (29) compared with articles with two, three, four or five authors, this also means that 71 articles have at least two authors.

Figure 2. First authors’ affiliation by country.

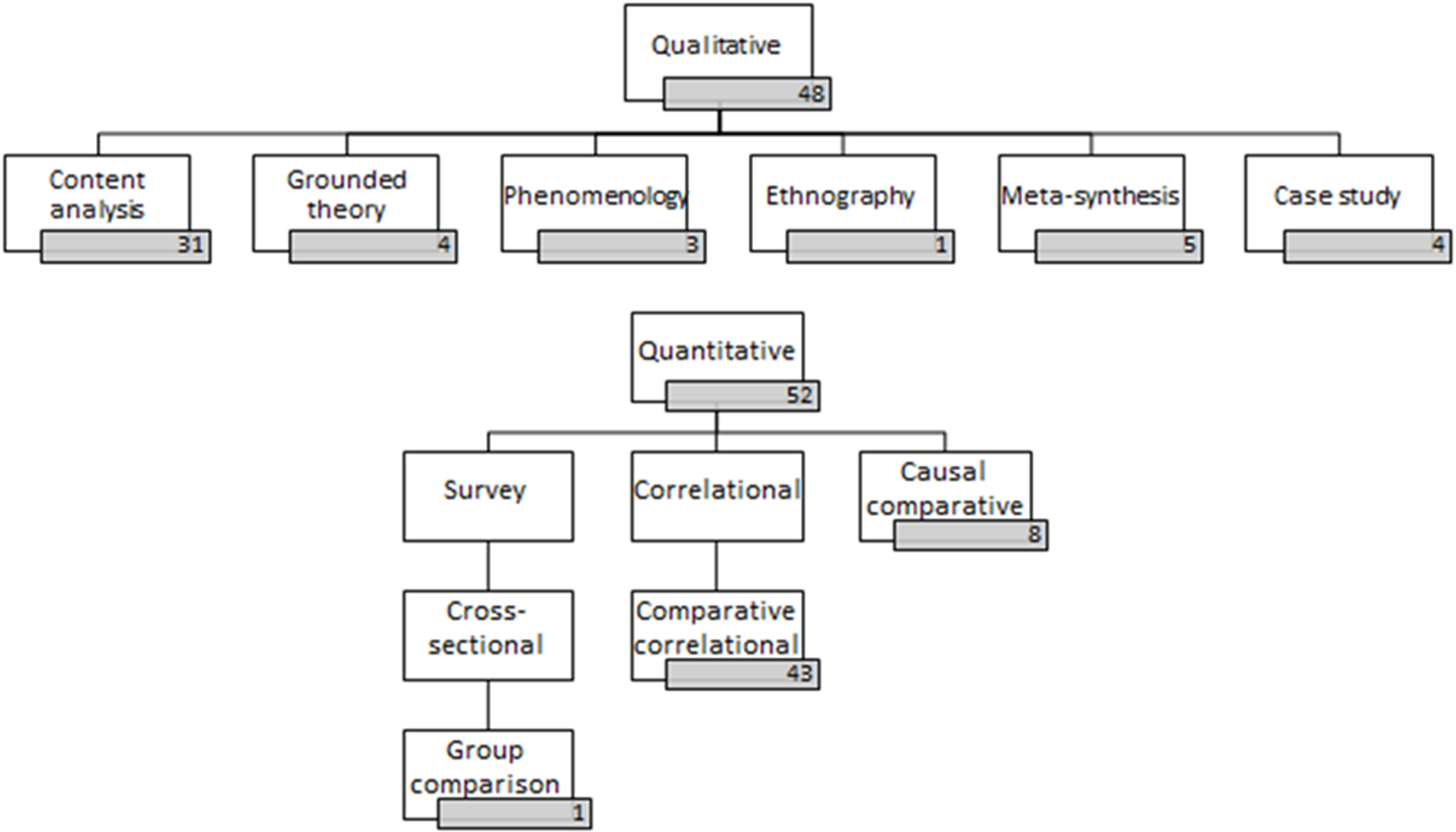

As for research designs, which can also be seen in Figure 3, comparative correlational studies account for 43 articles in the top 100. These quantitative studies essentially compare at least two variables by examining the correlations between them. In the context of higher education rankings, these correlations are analysed through Pearson correlations (14), regression analysis (13), variability (variance, standard deviation, range) (8) and central tendency (mean-median-mode) (4). Content analysis is the second most popular research design, with 31 articles in the top 100. These studies analyse data through content analysis (15), discourse analysis (6) and thematic analysis (8).

Figure 3. Research designs.

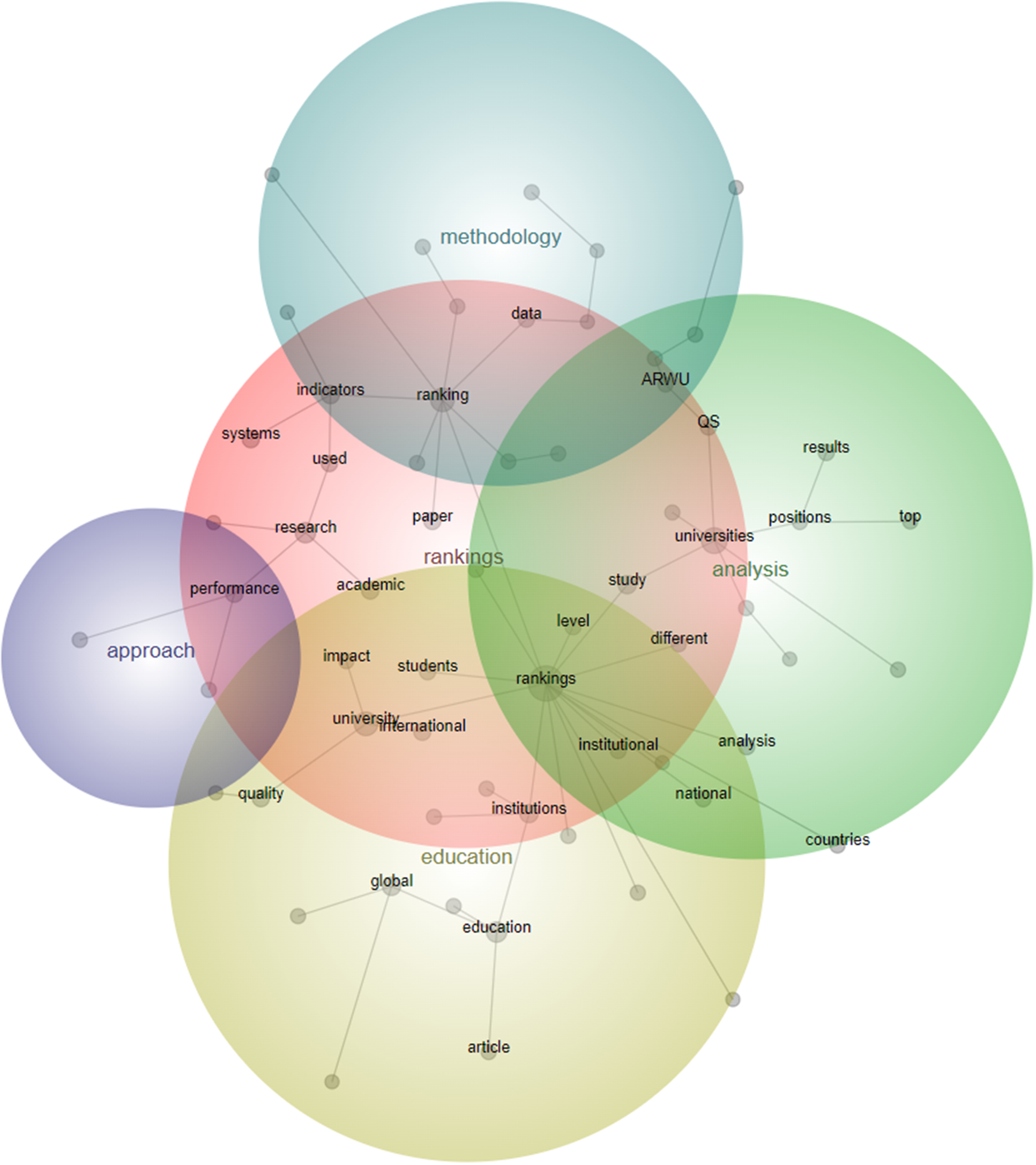

Figure 4. Topics obtained from the abstracts of top 100 most cited articles.

Ranking systems investigated in the research articles are divided into three groups. The first group, international ranking systems, is covered by 82 articles in the top 100. The most commonly analysed ranking systems are THE (54), QS (52), ARWU (48), USN&W (18), Leiden (12), URAP (7), Webometrics (6), U-Multirank (6) and NTU (5). In almost half of all the articles (46), QS, THE and ARWU are investigated together. In fact, most of the articles (91) analyse at least two ranking systems. The number of articles analysing only one ranking system is 9; two ranking systems, 14; three, 6; four, 8; five, 12; six, 5; and seven, 2. The second group consists of subject-specific ranking systems (4), covering health, engineering and architecture. The third group is national ranking systems (13), covering a variety of ranking systems specific to a single country, including the United States, India, Poland and the United Kingdom.

USN&W rankings are studied mainly by authors whose first affiliation is in the USA (18), whereas authors from the United Kingdom mostly study ARWU (8), either on its own (1) or together with other ranking systems (7). Spanish authors tend to study QS more (6), and authors from Turkey mostly study THE, QS and ARWU together (5). Turkish authors also add URAP (3), a ranking methodology originating from a Turkish university; besides Turkish authors, URAP is studied by authors from New Zealand (1), Finland (1) and Serbia (1). Canadian authors mostly study THE (3), Dutch authors study ARWU, THE and QS together (3), and Finnish authors are also interested in USN&W (2) in addition to the ‘big three’.

Given that the analysis is limited to articles in English, it is unsurprising that the USA and the UK together account for many of the most cited articles (32). This limitation should be taken into account, as a number of academics have discussed the possible implications of doing academic research in a language other than one's own (Turner 2004; Duszak and Lewkowicz 2008; Snow and Uccelli 2009).

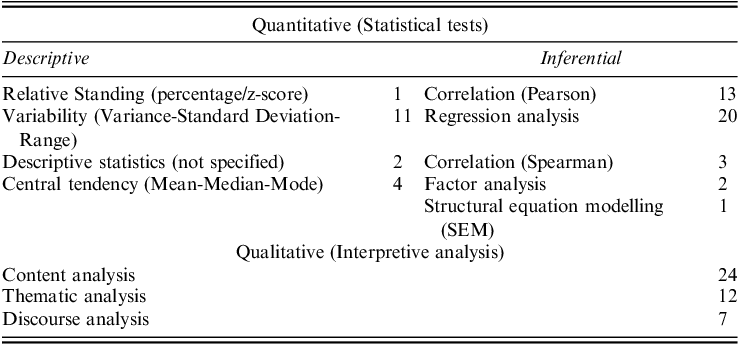

The data collection instruments in the top 100 articles are publicly announced reports containing statistical data (50), document analysis (46) and surveys (4). As can be seen in Table 2, content analysis is the most common data analysis technique (24) in the top 100 most cited articles, followed by regression analysis (20), Pearson correlations (13), thematic analysis (12) and variability (11). In terms of focused variables, performance indicators are the most researched variable (22). Other common variables are rank differences (21), organizational policy (16) and methodology (10). Quality assurance (2) and reputation (2) are also worth noting.

Table 2. Data analysis techniques

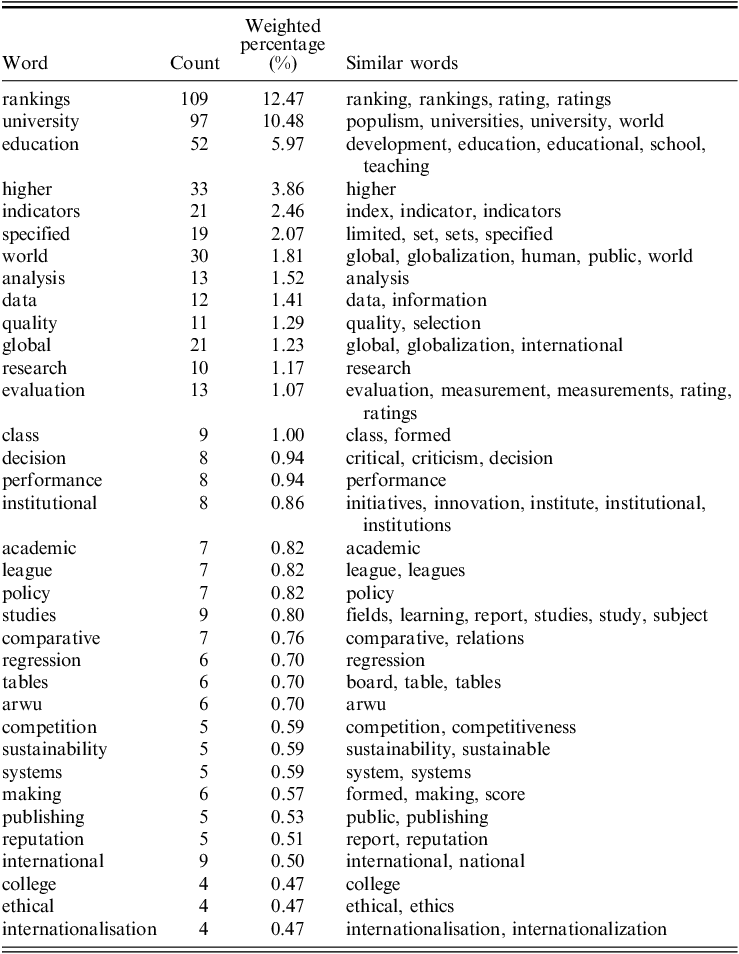

The 35 most frequent keywords are given in Table 3. A notable result is that ‘indicators’, as in ‘performance indicators’, is one of the most frequently mentioned keywords. Quality, policy, competition, sustainability, reputation and internationalisation are also worth noting, as they summarize the fundamental role of university rankings in the international arena.
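
The keyword frequency table was produced in NVivo. As a rough, hypothetical illustration of the underlying idea, the Python sketch below splits each article's keywords into individual words and counts them across all articles, so that a keyword such as ‘performance indicators’ contributes to the count for ‘indicators’. The stop-word list, the function name `word_frequencies` and the sample keywords are assumptions. The sketch counts exact word matches only, so ‘ranking’ and ‘rankings’ are tallied separately; NVivo's own settings may group words differently.

```python
import re
from collections import Counter

# Hypothetical stop-word list; NVivo applies its own, configurable list.
STOP_WORDS = {"a", "and", "for", "in", "of", "on", "the", "to"}


def word_frequencies(keyword_lists: list[list[str]], top_n: int = 35) -> list[tuple[str, int]]:
    """Count individual words across every article's keyword list.

    Words are lower-cased and matched exactly (no stemming); words with equal
    counts keep the order in which they were first encountered.
    """
    counts = Counter()
    for keywords in keyword_lists:
        for phrase in keywords:
            for word in re.findall(r"[a-z]+", phrase.lower()):
                if word not in STOP_WORDS:
                    counts[word] += 1
    return counts.most_common(top_n)


# Invented keyword lists for three articles (illustration only)
demo = [
    ["performance indicators", "university rankings"],
    ["rankings", "quality", "key performance indicators"],
    ["internationalisation", "reputation", "rankings"],
]
print(word_frequencies(demo, top_n=3))
# [('rankings', 3), ('performance', 2), ('indicators', 2)]
```
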

Table 3. Most common keywords

As for the topics (themes) obtained from the abstracts of these articles, five main themes emerge: rankings, methodology, analysis, approach and education. Overall, the concept map shows that, in relation to the main topic of rankings, the top 100 most cited articles focus on methodology, analysis, education and approach. All of these topics appear to interrelate with rankings in certain sections. Although there are intersections where two topics other than rankings meet, these intersections have extensional sub-topics originating from the main topic of rankings. There are also intersections shared by three topics, such as rankings-methodology-analysis, rankings-analysis-education and rankings-education-approach. As shown in Figure 4, these themes relate to each other in terms of, for example, how rankings affect education in general, the quality of education and the decisions of international students. Other intersections in the concept map show relationships between institutional determinants and educational activities, and between institutional determinants and processes of analysis in the institution. Another cross-sectional relationship appears between educational approach and performance in rankings. Regarding the methodology of rankings, indicators seem to be the focus of the published articles in the top 100, as are analyses of universities' positions, especially those at the top of the results. Two different codes are used for ‘university’ in its singular and plural forms to distinguish between individual universities (singular) and universities as a category (plural). This differentiation helps the researcher categorize and analyse the information more precisely, especially when the data involve both specific institutions and broader trends or characteristics of universities as a whole.

Discussion

Summary of Evidence

The scope of the top 100 most cited research articles published in academic journals in this period (2017–2021) primarily sheds light on the research topics that have gained popularity among academics. These topics fall into five categories, which can be summarized as: what rankings affect (implications); what affects rankings (determinants); methodological studies questioning the validity, reliability and indicators of such measures; suggested frameworks, both applied and conceptual; and the function of these ranking systems at a time when data about organizational performance are collected and shared more than ever.

In parallel with the research topics, the variables researchers examine mostly concern the technical aspects of ranking systems. Variables such as performance indicators, rank differences and methodology seem to reflect a growing interest in analysing and comparing the methods used in these measures. On a slightly different note, the implications of ranking systems for universities are evident in the choice of variables such as organizational policy, quality assurance and reputation.

The United States holds first place in terms of first-author affiliation for research articles on university rankings published between 2017 and 2021. The United Kingdom follows the US with the second highest number of first authors in the list. Third and fourth places go to Spain and Turkey, respectively, followed by Canada, the Netherlands and Finland. Among the top 100 most cited articles, there are authors affiliated with countries ranging from Kazakhstan to Mongolia, Ecuador to Mauritius, and Iran to South Africa.

Qualitative research, specifically content analysis, and quantitative research, particularly comparative correlational studies, dominate the research designs. Qualitative studies mostly analyse the implications of rankings for organizational policies through content analysis, thematic analysis and discourse analysis, whereas quantitative studies mostly analyse relationships among different methodologies through comparative correlational approaches. The top 100 also include qualitative studies in which theoretical and practical frameworks are suggested through grounded theory, and quantitative studies in which performance indicators in different ranking systems are examined with a causal comparative approach.

Data are collected mostly through detailed reports of rankings, documents that are shared by higher education institutions, and surveys. Qualitative studies choose to analyse data mostly by content analysis and thematic analysis. Quantitative studies analyse data mostly by regression analysis, correlation analysis and variability.

Topics in the abstracts mostly fall under ‘rankings’, which acts as an umbrella category. In the research articles, authors focus on the role of educational activities and their relation to rankings, the analysis of organizational performance with reference to the performance indicators of ranking systems, different approaches to measuring performance, and the methodology of rankings, mainly how rankings handle the large amount of data coming from higher education institutions. It should be noted that this study does not restrict its scope to academic articles with a direct connection to popular global university ranking systems; instead, it takes a more comprehensive approach, screening the content of academic articles to ensure that it is relevant to the topic of university rankings.

Limitations

- This study is limited to the top 100 most cited research articles published in peer-reviewed academic journals in English.

- Articles are identified through the databases listed in the Methods section and ranked by their total number of citations in Google Scholar.

- Selected articles are manually evaluated to ensure that they are directly related to the topic of university rankings (alternative keywords are also taken into account).

Conclusions

It is worth noting that one of the overarching topics in this research is concern about the methodology of rankings. Not only have authors questioned the pros and cons of the existence of such ranking measures, but they have also examined technically whether such systems are valid and reliable. This is an important step towards perfecting ranking methodologies, and it also prompts reflection on how to make large volumes of data more presentable and accessible to more institutions and to the public. It is also a sign of growing concern in academic circles about what and whom these rankings really represent.

Much of the popularity of these publications seems to stem from their focus on rank differences across ranking systems. There is also interest in comparing the performance indicators of those systems, a deeper analysis aimed at determining an ideal way of measurement. This may lead to the development of a multidimensional, more collaborative ranking system and perhaps an interdisciplinary, multi-layered form of measurement in future studies.

The involvement of authors from different parts of the world provides a wider perspective on the regional and country-specific factors that both affect and are affected by rankings. Moreover, the wide variety of institutions studied has allowed researchers to look more closely at the fine-tuning of organizational policies in higher education institutions. This provides more insight into whether such policies are more productive when they arise as a consequence of an institution's standing in ranking systems, or when they are developed specifically to target desired positions and focus only on particular performance indicators rather than on improving the performance of the institution as a whole.

The discussion about the role of rankings seems to take up a significant portion of the top 100 most cited articles. In particular, the question of who should attach importance to rankings appears to broaden as more authors attempt to explain why and how these measures facilitate change, offering different and expanding perspectives. The fundamental issue of education administrators' role in facilitating this change, however, remains unchanged, as they have always been regarded as guides of policy on how the institution handles its position (or lack of position) in rankings.

A significant number of articles in the top 100 appear to favour updating ranking methodologies. In a rapidly changing environment shaped by powerful forces such as the internet, social media and pandemics, rankings cannot stand still; they must adapt and respond, not only as entities that are affected by these forces but also as instruments that affect the choices of students, academicians, institutions and even countries.

In such an environment, rankings that focus on the international arena and rankings that are more localized each offer different benefits. After all, higher education institutions continue to be ranked by different organizations, and there is no escaping this. Moreover, institutions are now part of the ranking ecosystem whether they are listed or not. This means that the lack of a standing in a particular ranking system does not stop an institution from targeting certain indicators in that system and acting accordingly. In effect, this becomes a tailored, individual action plan for the institution, in which similar performance indicators from different ranking systems can be seen. It is increasingly evident that some universities take this approach when setting their strategic goals and listing them in their strategic plans.

Suggestions for Future Research

One aim of a scoping review is to identify gaps in the literature, here on university rankings, and to suggest research areas that need further investigation. Accordingly, the following suggestions for future research are drawn from the evidence and conclusions presented in this study.

First, the function of university rankings could be studied further, as it accounts for only 2% of all studies included in this research. Some argue that higher education institutions do not need university rankings to shape their decisions during strategic planning processes (Bornmann 2014). However, other scholars argue that university rankings affect the reputation of an institution (Sarupiciute and Druteikiene 2018) by signalling world-class status and internationalization (Lo 2014). Researchers should nonetheless proceed with care, since ranking methodologies that use reputation as a benchmark are widely criticized for being overly subjective, self-referential and self-perpetuating: survey participants can judge only what they already know, and reputation tends to be equated with quality or organizational age (Hazelkorn 2019).

Another potential topic for further research is alternative models to existing ranking methodologies. This scoping review shows that articles focusing on alternative models, whether theoretical or practical, account for only 10% of the top 100. During and after the pandemic in particular, numerous studies have reflected on how Covid-19 reshaped higher education. In response to such changes at the institutional level, ranking organizations have declared an interest in adapting their methodologies accordingly (Holmes 2020). It would therefore be valuable to examine the factors that drive this change and to evaluate how well ranking systems respond.

In addition, most research in the top 100 focuses on THE, QS and ARWU, while other ranking systems are investigated much less. This leaves considerable room for identifying any strengths these other systems may have, comparing their methodologies, and perhaps demonstrating why systems such as U-MULTIRANK and URAP deserve more research attention.

In terms of research design, case studies account for only 4% of the top 100 most cited articles. Case studies not only deepen knowledge of diverse and complex cultural and social settings but also show how studies handle significant conceptual problems (Allen 2018; Singh-Peterson et al. 2019). Increasing the number of case studies would therefore help to show how the relationship between theory and application varies across countries and institutions. Indeed, one criticism of global university rankings is that they favour certain countries and institutions. Case studies would give researchers new opportunities to compare different settings and to test whether such criticism is empirically justified.

Meta-analysis is another research design that could be employed more often. The evidence shows that meta-analyses account for up to 5% of the studies in the top 100, whereas comparative correlational analyses account for up to 43%. This suggests that many similar correlational comparisons could be pooled in meta-analyses. Because a meta-analysis synthesizes the findings of multiple studies into a single estimate, wider use of it could considerably expand the scope of research on university rankings.
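To illustrate how correlational findings might be pooled, the following is a minimal sketch of a fixed-effect meta-analysis of correlation coefficients using Fisher's z-transformation. It is not a procedure used in this review: the function name `pool_correlations` and its input format are hypothetical, and when studies differ in samples and ranking systems a random-effects model (for example the DerSimonian and Laird approach) would usually be more appropriate than the fixed-effect model assumed here.

```python
import math


def pool_correlations(studies: list[tuple[float, int]]) -> tuple[float, float, float]:
    """Fixed-effect meta-analysis of correlation coefficients via Fisher's z.

    Hypothetical input: (r, n) pairs, where r is a study's correlation
    coefficient and n its sample size. Returns the pooled r and the lower
    and upper bounds of its 95% confidence interval.
    """
    if not studies:
        raise ValueError("at least one study is required")
    weighted_sum = 0.0
    total_weight = 0.0
    for r, n in studies:
        if not -1 < r < 1 or n <= 3:
            raise ValueError("each study needs -1 < r < 1 and n > 3")
        z = math.atanh(r)  # Fisher's z-transformation stabilizes the variance
        w = n - 3          # inverse-variance weight, since Var(z) is about 1 / (n - 3)
        weighted_sum += w * z
        total_weight += w
    z_pooled = weighted_sum / total_weight
    se = 1 / math.sqrt(total_weight)
    # 1.96 is the two-sided 95% normal critical value; tanh maps z back to r
    return (
        math.tanh(z_pooled),
        math.tanh(z_pooled - 1.96 * se),
        math.tanh(z_pooled + 1.96 * se),
    )
```

For example, study-level correlations between the scores of two ranking systems could be passed as `pool_correlations([(r1, n1), (r2, n2), ...])`; no such values are reported in this review.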

As for data analysis techniques, only 3% of the studies in the top 100 use factor analysis and structural equation modelling (SEM), even though the literature contains considerable discussion of how university rankings affect different aspects of higher education and vice versa. Future studies would therefore benefit from using these techniques to identify the underlying factors and to model and validate the relationships among such variables.

Regarding keywords, and on the assumption that keywords indicate what an article is mainly about, some are worth noting. For instance, reputation, ethical and internationalization occurred far less often than other keywords in the list. Researchers might benefit from exploring these concepts as potential areas of research. These keywords on their own might open new perspectives for university rankings research, and an inferential test could even be run between such keywords, for example to test whether there is a statistically significant relationship between reputation and internationalization.
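As one illustration of such an inferential test, keyword co-occurrence could be cross-tabulated in a 2×2 table (articles with or without each keyword) and checked with Pearson's chi-square test of independence. The sketch below assumes this setup; it is not the procedure used in this review, and the function name `chi_square_2x2` and any counts passed to it are hypothetical. Because rare keywords are likely to produce small expected counts, Fisher's exact test would often be the safer choice in practice.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square test of independence for a 2x2 table (no continuity correction).

    Hypothetical layout for keyword co-occurrence across articles:
        a = articles with both keywords (e.g. reputation and internationalization)
        b = articles with the first keyword only
        c = articles with the second keyword only
        d = articles with neither keyword
    Returns (statistic, p_value) for 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d  # first keyword present / absent
    col1, col2 = a + c, b + d  # second keyword present / absent
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be non-zero")
    # Closed-form Pearson statistic for a 2x2 table
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # Upper-tail probability of a chi-square variable with 1 df: erfc(sqrt(x / 2))
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value
```

The counts would be tallied from the keyword lists of the reviewed articles; a small p-value would suggest that the two keywords co-occur more (or less) often than independence would predict, not that one concept causes the other.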

An analysis of research topics shows that the social and cultural implications and determinants of university rankings need further investigation. Possible topics include how university rankings affect relationships between higher education institutions and other stakeholders or particular industries, and how society affects or responds to university rankings. Such studies could clarify whether these relationships exist and, if they do, their extent and nature. Ultimately, this would help policymakers, academicians and society at large to understand whether these metrics are connected to the social and cultural aspects of their immediate environment.

Supplementary Material

To view supplementary material for this article, please visit https://www.doi.org/10.1017/S1062798723000595.

About the Author

İrfan Ayhan, PhD, is an instructor at Sabancı University, Turkey. His research interests include university rankings, strategic planning, higher education and quality management.