1. Introduction

Turbulence is ubiquitous in the stably stratified ocean, where it facilitates the vertical mixing of water masses, thus playing a vital role in determining global transport of scalars such as heat, carbon and nutrients (Ivey, Winters & Koseff 2008; Talley et al. 2016). Observational estimates of small-scale turbulence quantities may be obtained using measurements of fluctuating density (or temperature, assuming a linear equation of state for a single dynamic scalar) and velocity fields captured by microstructure profilers, small probes that descend vertically through a column of water to depths of up to 6000 m and that have been deployed across much of the ocean (Waterhouse et al. 2014). The primary quantities of interest for diagnosing mixing rates are the rate of dissipation of density variance $\chi$ and the rate of dissipation of turbulent kinetic energy $\varepsilon$ (defined below). Calculating precise values of $\varepsilon$ and $\chi$ requires that nine spatial derivatives of velocities and three spatial derivatives of density be resolved simultaneously, and so in practice theoretical ‘surrogate’ models are commonly invoked which require only derivatives in a single coordinate direction (typically the vertical). Our focus here is on estimating $\varepsilon$ and $\chi$ given exact values of these vertical derivatives alone as notionally measured by an idealised microstructure profiler. This facilitates a study of the underlying stratified turbulence dynamics and is a step toward improved mixing parameterisations ‘output’ from ocean measurement ‘inputs’, although it does not address other causes of uncertainty in obtaining accurate and robust in situ measurements of $\varepsilon$ and $\chi$, due for example to uncertainties in the mapping between the raw measurements and the vertical gradients of velocity and density (Gregg et al. 2018).

The majority of models for $\varepsilon$ and $\chi$ are based on the fundamental assumption that turbulence is homogeneous and isotropic at small scales, although there have been efforts to model dissipation in stratified turbulence by use of a suitable proxy that captures the modifying influence of the stratification (Weinstock 1981; Fossum, Wingstedt & Reif 2013). We appeal to modern data-driven tools to supplement and extend existing theoretical models for $\varepsilon$ and $\chi$ in the anisotropic stratified turbulent regime. Data-driven methods have become popular for the modelling of fluid flows due to their inherent ability to capture complex spatio-temporal dynamics (Salehipour & Peltier 2019; Brunton, Noack & Koumoutsakos 2020) and reveal insights into flow physics (Callaham et al. 2021; Couchman et al. 2021). They are also seeing widespread use for closure modelling, for example improving sub-grid parameterisation schemes in simulations of turbulence (Maulik et al. 2019; Portwood et al. 2021; Subel et al. 2021) and large-scale models of the atmosphere and ocean (Rasp, Pritchard & Gentine 2018; Bolton & Zanna 2019). Bayesian models that can provide uncertainty estimates as opposed to standard deterministic predictions are appealing in these settings as they give an important measure of model reliability and can accurately reproduce higher-order moments of a distribution of values over a set of data (Maulik et al. 2020; Barnes & Barnes 2021; Guillaumin & Zanna 2021).

Deep neural network models for determining the relationship between physical observables can essentially be described as replacing an equation whose functional form and accompanying parameters are based on known physical constraints with an equation whose functional form is determined by optimising an extremely large number of parameters using data obtained from measurements of the system: this results in what is commonly referred to as a ‘black-box algorithm’. In cases where the underlying physical processes are not fully understood, or not fully representable using a limited subset of system observables, these deep learning methods often result in greatly improved accuracy over traditional physics-based methods. This is, however, at the expense of generalisability to data from similar but distinct systems, where knowledge of how the underlying physics is encoded into a traditional theoretical model can be used to adapt it. Since deep learning models consist of such a large number of parameters, leaving the physics somewhat intractable, it is not obvious how to adapt them in the same way. To try to overcome this difficulty, either some information about the physics can be encoded into the architecture of the model a priori (such models are often referred to as ‘physics-informed’) (Ling, Kurzawski & Templeton 2016; Zanna & Bolton 2020; Beucler et al. 2021), or a highly general model can be analysed a posteriori to ‘discover’ physically relevant features (Toms, Barnes & Ebert-Uphoff 2020; Portwood et al. 2021).

Since exact observational measurements of $\varepsilon$ and $\chi$ are not feasible at present for testing our methodology, we consider direct numerical simulations (DNS) of stably stratified turbulence (SST) as a model flow for the turbulence length and time scales of the ocean, the relevance of which is supported by a modest but increasing body of observations (Riley & Lindborg 2008). Broadly, such flows can be characterised by suitable Reynolds and Froude numbers, $Re_h$ and $Fr_h$, as well as the Prandtl number $Pr$, defined here as

$$Re_h = \frac{U_h L_h}{\nu}, \quad Fr_h = \frac{U_h}{N L_h}, \quad Pr = \frac{\nu}{\kappa}. \tag{1.1a--c}$$

Here, $U_h$ and $L_h$ are horizontal velocity and length scales, $N$ is a characteristic buoyancy frequency associated with the ambient stratification, $\nu$ is the kinematic viscosity and $\kappa$ is the thermal diffusivity. The SST regime is then a turbulent flow regime where buoyancy effects due to the presence of a background density stratification have a leading-order influence on the flow, which corresponds to $Re_h\gg 1$ and $Fr_h \lesssim 1$. As $Fr_h$ decreases below $O(1)$, horizontal motions tend to decouple into vertically thin layers with large horizontal extent, with the resulting vertical shearing being an important mechanism for generating turbulence (Lilly 1983). The resulting ‘pancake’ layers are predicted to evolve with vertical length scale $U_h/N$ (Billant & Chomaz 2001), a behaviour that has been observed in experiments (e.g. Lin & Pao 1979; Spedding 1997), as well as in both forced and freely decaying DNS (e.g. Riley & de Bruyn Kops 2003; Brethouwer et al. 2007).
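As a concrete illustration of the regime parameters discussed above, the following minimal sketch evaluates them for a given set of dimensional scales. It assumes the standard definitions $Re_h = U_h L_h/\nu$, $Fr_h = U_h/(N L_h)$ and $Pr = \nu/\kappa$; the class and function names, the example values and the numerical cut-offs used to stand in for $Re_h \gg 1$ and $Fr_h \lesssim 1$ are all hypothetical choices made for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowScales:
    """Dimensional flow scales (illustrative container, not from the paper)."""
    U_h: float    # horizontal velocity scale [m/s]
    L_h: float    # horizontal length scale [m]
    N: float      # buoyancy frequency [rad/s]
    nu: float     # kinematic viscosity [m^2/s]
    kappa: float  # scalar (thermal) diffusivity [m^2/s]


def horizontal_reynolds(s: FlowScales) -> float:
    """Re_h = U_h L_h / nu (assumed standard definition)."""
    return s.U_h * s.L_h / s.nu


def horizontal_froude(s: FlowScales) -> float:
    """Fr_h = U_h / (N L_h) (assumed standard definition)."""
    return s.U_h / (s.N * s.L_h)


def prandtl(s: FlowScales) -> float:
    """Pr = nu / kappa."""
    return s.nu / s.kappa


def pancake_layer_height(s: FlowScales) -> float:
    """Predicted vertical layer scale U_h / N."""
    return s.U_h / s.N


def is_sst_like(s: FlowScales, re_min: float = 1.0e3, fr_max: float = 1.0) -> bool:
    """Crude regime check; re_min and fr_max are arbitrary illustrative cut-offs."""
    return horizontal_reynolds(s) >= re_min and horizontal_froude(s) <= fr_max


if __name__ == "__main__":
    # Hypothetical thermocline-like values, chosen only to exercise the functions.
    scales = FlowScales(U_h=0.01, L_h=10.0, N=5.0e-3, nu=1.0e-6, kappa=1.4e-7)
    print("Re_h =", horizontal_reynolds(scales))
    print("Fr_h =", horizontal_froude(scales))
    print("Pr   =", prandtl(scales))
    print("U_h/N =", pancake_layer_height(scales), "m")
    print("SST-like:", is_sst_like(scales))
```

The asymptotic conditions $Re_h \gg 1$ and $Fr_h \lesssim 1$ do not define sharp boundaries, so any threshold used in practice would need to be justified for the flow at hand.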

The SST regime exhibits anisotropy across a range of scales (Lang & Waite 2019), the largest of which is set by the vertical layered structure described above and whose existence is predicted by the value of $Fr_h$. Whether anisotropy persists at the smallest scales of motion (sometimes referred to as return to isotropy; Lumley & Newman 1977), thus affecting the way in which models for $\varepsilon$ and $\chi$ depend on individual velocity and density derivatives, depends on the existence of an inertial range between these scales and the length scale of motion below which eddies evolve without being influenced by the stratification (Gargett, Osborn & Nasmyth 1984). For the velocity field, the breadth of the inertial range is characterised by an emergent Reynolds number, which for stratified flows is most commonly defined as the buoyancy Reynolds number

$$Re_b = \frac{\varepsilon}{\nu N^2}.$$

If $Re_b \ll 1$, scaling arguments and simulation results indicate that $\varepsilon$ will be dominated by vertical shear (Riley, Metcalfe & Weissman 1981; Godoy-Diana, Chomaz & Billant 2004; de Bruyn Kops & Riley 2019). On the other hand, when $Re_b\gg 1$ and turbulence is locally almost isotropic, one can appeal to symmetry arguments to relate directly the horizontal and vertical spatial derivatives of velocity and density, thus simplifying the expressions for $\varepsilon$ and $\chi$ (see e.g. Pope 2000). Several numerical studies quantify the degree of small-scale isotropy in SST as a function of $Re_b$ (Hebert & de Bruyn Kops 2006; de Bruyn Kops 2015), from which we conclude that SST will be close to isotropic at the small scales if $Re_b \approx 50$ or larger. Importantly, there are many regions of the ocean in which it is estimated that $Re_b < 50$ (e.g. Moum 1996; Jackson & Rehmann 2014; Scheifele et al. 2018), with potentially significant consequences for the accuracy of isotropic models for $\varepsilon$. When $Pr>1$, density fluctuations are expected on smaller scales than those of velocity, leading to the emergence of a viscous–convective subrange. Nonetheless, it may still be argued that the isotropic inertial range in the density field has the same high-wavenumber cutoff as that of the velocity field (Batchelor 1959), and thus the breadth is again governed by $Re_b$. We note that technically even for $Re_b\gg 1$ the scalar derivatives cannot be exactly isotropic if there is a mean gradient (Warhaft 2000), although this detail will not be of practical importance for our purposes here. When only measurements of the density field are available, the buoyancy Reynolds number is sometimes replaced by the parameter $Pr^{-1} Cx$, where $Cx = |\boldsymbol {\nabla } \rho |^2/(\partial \bar {\rho }/\partial z)^2$ is the Cox number for a flow with turbulent density fluctuations $\rho$ about a background density profile $\bar {\rho }(z)$ (Dillon & Caldwell 1980).
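The two isotropy diagnostics mentioned above can be sketched as follows. The sketch assumes the common definition $Re_b = \varepsilon/(\nu N^2)$ and evaluates the Cox number pointwise from the expression given in the text; the function names, the array layout (last axis holding the three gradient components) and the example values are hypothetical, and the cut-off of 50 is simply the approximate value quoted above.

```python
import numpy as np


def buoyancy_reynolds(eps, nu, N):
    """Re_b = eps / (nu N^2), assuming the common definition."""
    return eps / (nu * N**2)


def cox_number(grad_rho, dbar_rho_dz):
    """Pointwise Cox number |grad rho|^2 / (d rho_bar / dz)^2.

    grad_rho: array of shape (..., 3) holding the fluctuation gradient
    components; dbar_rho_dz: background vertical density gradient.
    """
    return np.sum(np.asarray(grad_rho) ** 2, axis=-1) / dbar_rho_dz**2


def density_only_proxy(grad_rho, dbar_rho_dz, Pr):
    """Pr^{-1} Cx, used when only density measurements are available."""
    return cox_number(grad_rho, dbar_rho_dz) / Pr


def likely_isotropic(re_b, threshold=50.0):
    """True where Re_b is at or above the approximate value quoted in the text."""
    return re_b >= threshold


if __name__ == "__main__":
    # Hypothetical values: eps in W/kg, nu in m^2/s, N in rad/s.
    re_b = buoyancy_reynolds(eps=1.0e-9, nu=1.0e-6, N=5.0e-3)
    print("Re_b =", re_b, "near-isotropic small scales:", likely_isotropic(re_b))
    gradients = np.random.default_rng(0).normal(size=(100, 3))
    print("mean Pr^-1 Cx =", density_only_proxy(gradients, -1.0, Pr=7.0).mean())
```

Whether the Cox number should be averaged before or after forming the ratio depends on the application; the pointwise form is used here only because it follows the expression in the text most directly.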

Mean values of $\varepsilon$ and $\chi$ can be accurately computed from single velocity and scalar derivatives if, by some method, the anisotropy in the velocity and scalar fields is known. This is the basis of the majority of existing models, e.g. estimating the dissipation rates by assuming isotropy. In practice, since $Re_b$ itself depends on $\varepsilon$, it is not possible to diagnose precisely a turbulent regime given a limited subset of derivatives of velocity and density. Moreover, while the mean dissipation rates may be accurate based on assumptions of isotropy, the distribution of local values $\varepsilon _0$ and $\chi _0$ (hereafter differentiated from appropriately averaged mean values $\varepsilon$ and $\chi$ using a subscript $0$) will not be. Using simulations of almost perfectly homogeneous isotropic turbulence, Almalkie & de Bruyn Kops (2012b) show that the probability density function (p.d.f.) of any of the velocity derivatives has a nearly exponential left tail rather than the approximately log-normal tail assumed by Kolmogorov (1962) for the local dissipation rate, a form that is itself widely observed in simulation data.
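To make the idea of an isotropic surrogate concrete, the sketch below uses the textbook isotropic relation $\varepsilon = (15/4)\,\nu\,[\langle(\partial u/\partial z)^2\rangle + \langle(\partial v/\partial z)^2\rangle]$ for the two horizontal velocity components $u$ and $v$ (see e.g. Pope 2000). This is an assumed standard form rather than necessarily the exact expression adopted later in this paper, and all function names and example values are hypothetical. The same expression applied pointwise gives a local surrogate whose mean matches the mean estimate by construction, although its distribution need not match that of the true local dissipation rate.

```python
import numpy as np


def epsilon_isotropic_local(du_dz, dv_dz, nu):
    """Pointwise isotropic surrogate: (15/4) nu [(du/dz)^2 + (dv/dz)^2]."""
    return 3.75 * nu * (np.asarray(du_dz) ** 2 + np.asarray(dv_dz) ** 2)


def epsilon_isotropic(du_dz, dv_dz, nu):
    """Mean dissipation estimate from vertical shear alone, assuming isotropy."""
    return float(np.mean(epsilon_isotropic_local(du_dz, dv_dz, nu)))


def surrogate_buoyancy_reynolds(du_dz, dv_dz, nu, N):
    """Re_b built from the isotropic estimate of eps, assuming Re_b = eps/(nu N^2).

    The regime diagnostic inherits any error in the isotropy assumption,
    which is the circularity noted in the text.
    """
    return epsilon_isotropic(du_dz, dv_dz, nu) / (nu * N**2)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # Hypothetical vertical shear samples along one profile [1/s].
    du_dz = rng.normal(scale=0.02, size=1024)
    dv_dz = rng.normal(scale=0.02, size=1024)
    print("eps_iso  =", epsilon_isotropic(du_dz, dv_dz, nu=1.0e-6))
    print("Re_b,iso =", surrogate_buoyancy_reynolds(du_dz, dv_dz, 1.0e-6, 5.0e-3))
```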

Motivated by the discussion above, in this work our primary aim will be to build highly general models for estimating local ($\varepsilon _0, \chi _0$) and mean ($\varepsilon,\chi$) values of the dissipation rates that can make accurate predictions across a range of turbulent regimes described by different values of $Re_b$. We construct and train a probabilistic convolutional neural network (PCNN) model to compute local values of dissipation $\varepsilon _0$ and $\chi _0$ within vertical fluid columns, motivated by available observational data collected by microstructure profilers. The architecture of the model is constructed based on the fundamental physical arguments that vertical gradients of velocity and density should be strongly correlated and of leading-order importance for determining local dissipation rates in stratified turbulence, crucially both locally and non-locally (for example, whether or not a particular region of fluid supports energetic turbulence is likely to depend at least on the local shear as well as some surrounding background density gradient) (Caulfield 2021). The probabilistic component is included based on the emerging importance of accurately predicting the tails of the distributions of values of small-scale mixing properties (Cael & Mashayek 2021; Couchman et al. 2021).
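The two ingredients named above, a convolution over a vertical column and a probabilistic output, can be illustrated with a deliberately small NumPy sketch. It is not the architecture used in this work, which is described later: a single convolutional layer, a Gaussian output distribution, the negative log-likelihood loss, and every function and variable name here are assumptions made purely for illustration.

```python
import numpy as np


def conv1d_same(x, kernel):
    """Cross-correlate a (channels, depth) column with a (channels, width) kernel.

    Edge padding keeps the output length equal to depth; width must be odd.
    Each output point therefore depends on a neighbourhood of depths,
    i.e. on non-local information along the column.
    """
    _, width = kernel.shape
    depth = x.shape[1]
    pad = width // 2
    xp = np.pad(x, ((0, 0), (pad, pad)), mode="edge")
    out = np.zeros(depth)
    for j in range(width):
        out += np.sum(kernel[:, j:j + 1] * xp[:, j:j + depth], axis=0)
    return out


def predict_column(x, mean_kernel, log_var_kernel):
    """Return a predicted mean and log-variance at every depth of the column."""
    return conv1d_same(x, mean_kernel), conv1d_same(x, log_var_kernel)


def gaussian_nll(target, mean, log_var):
    """Mean Gaussian negative log-likelihood of target given (mean, log_var)."""
    return 0.5 * np.mean(
        np.log(2.0 * np.pi) + log_var + (target - mean) ** 2 / np.exp(log_var)
    )


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    # Hypothetical inputs: three channels (du/dz, dv/dz, d rho/dz) over 32 depths.
    column = rng.normal(size=(3, 32))
    target = rng.normal(size=32)  # stand-in for a transformed local dissipation rate
    k_mean = 0.1 * rng.normal(size=(3, 5))
    k_log_var = 0.1 * rng.normal(size=(3, 5))
    mean, log_var = predict_column(column, k_mean, k_log_var)
    print("NLL for untrained kernels:", gaussian_nll(target, mean, log_var))
```

Predicting a variance alongside the mean is one simple way to let a model express uncertainty and to represent the spread of a distribution rather than only its centre; training would adjust the kernels to minimise the likelihood-based loss.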

The data-driven models may be used as an effective tool for revealing the fundamental limitations of theoretical models for local stratified turbulent flow properties derived based on global statistics. For this purpose, it will be important to compare the data-driven models with strict benchmarks whose functional form incorporates as many of the currently known fluid dynamical constraints as possible. Therefore we also propose new empirically derived theoretical models for calculating $\varepsilon _0$ and $\chi _0$ using the same inputs, based on the knowledge that the local isotropy of the flow is primarily determined by the buoyancy Reynolds number $Re_b$. These serve here as a helpful baseline to compare with the deep learning model, but may also be considered practical substitutes for isotropic models in their own right. A comparison between the theoretical and PCNN models provides some interesting insights into the limitations of using mean quantities rather than local information for predicting mixing properties of stratified turbulent flows. Moreover, we analyse the structure of the optimised deep learning models to attempt to interpret which features give rise to improvements in accuracy over physics-based theoretical models.

To achieve our primary aim, the remainder of this paper is organised as follows. In § 2 we outline the key features of the DNS used throughout and describe how assumptions of isotropy may be used to construct models for $\varepsilon$ and $\chi$. We also present empirically determined theoretical models for $\varepsilon$ and $\chi$ based on the value of a suitable proxy for the buoyancy Reynolds number $Re_b$, and introduce the probabilistic deep learning model to be evaluated against the theoretical models. Qualitative and quantitative comparisons are shown in § 3, and an analysis and interpretation of the deep learning model is carried out in § 4. We discuss some of the wider implications of the results and conclude in § 6.

2. Methods

2.1. Simulations and theory

Much of the ocean thermocline is believed to be in a ‘strongly stratified turbulence’ regime characterised by large Reynolds number and relatively small Froude number (Moum 1996; Brethouwer et al. 2007), defined by (1.1a–c), or in their ‘turbulent’ form by eliminating the horizontal length scale $L_h$ in favour of a measured turbulent dissipation via the relation $\varepsilon = U_h^3/L_h$, as

$$Re_h = \frac{U_h^4}{\nu \varepsilon}, \quad Fr_h = \frac{\varepsilon}{N U_h^2}.$$

For a flow with zero mean shear such as will be considered here, $U_h$ is taken to be the root mean squared (r.m.s.) velocity and $\varepsilon$ is volume averaged over the flow domain. In order to obtain a dataset of stratified turbulence that is broadly representative of a diverse and continuously evolving ocean environment, we appeal to DNS of decaying, strongly stratified turbulence with a large dynamic range accommodating motions on a wide range of spatial scales (de Bruyn Kops & Riley 2019). Simulations are carried out by solving the Navier–Stokes equations with the non-hydrostatic Boussinesq approximation, in the dimensionless form

The dimensionless parameters $Re$, $Pr$ and $Fr$ are defined by

Here, $U_0$ and $L_0$ are characteristic (horizontal) velocity and length scales of the flow in its initialised state, $\nu$ and $\kappa$ are the momentum and density diffusivities and $N$ is the (imposed and uniform) background buoyancy frequency. Density is non-dimensionalised using the scale $-L_0 N^2\rho _a/g$, where $g$ is the acceleration due to gravity and $\rho _a$ is a reference density, whilst time is non-dimensionalised using the scale $L_0/U_0$. Note that $\rho$ is defined as a departure from an imposed uniform background density gradient, so that the total dimensionless density $\rho _t$ can be written (up to a constant reference density) as $\rho _t = \rho + z$.

Equations (2.2)–(2.4) are solved in a triply periodic domain using a pseudospectral method, with a third-order Adams–Bashforth method used to advance the equations in time and a 2/3 method of truncation for dealiasing fields. Simulations are initialised with the velocity fields in a state of homogeneous, isotropic turbulence. This is achieved by first performing unstratified simulations which are forced to match an empirical spectrum suggested by Pope (2000), using the method described in Almalkie & de Bruyn Kops (2012b). Once suitable conditions have been achieved, a gravitational field is imposed on the density stratification and forcing is switched off, leaving turbulence to decay freely. With the stratification applied, the flow evolves with a natural time scale given by the buoyancy period $T_B = 2\pi / N$. As discussed by de Bruyn Kops & Riley (2019), it is often instructive to observe the flow in terms of the number of buoyancy periods $T$ after the stratification has been imposed.
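The third-order Adams–Bashforth update can be illustrated on a scalar test problem. The following is a minimal Python sketch and not the solver used for the simulations: the function name `ab3_integrate`, the test equation $\mathrm{d}y/\mathrm{d}t=-y$ and the low-order start-up steps (forward Euler, then second-order Adams–Bashforth) are all assumptions made for illustration.

```python
import math


def ab3_integrate(rhs, y0, dt, n_steps):
    """Advance dy/dt = rhs(y) with third-order Adams-Bashforth.

    The first two steps use forward Euler and second-order
    Adams-Bashforth, because AB3 needs three stored right-hand sides.
    """
    y = y0
    history = []  # most recent right-hand sides, newest last
    for _ in range(n_steps):
        history.append(rhs(y))
        if len(history) == 1:
            y = y + dt * history[-1]
        elif len(history) == 2:
            y = y + dt * (1.5 * history[-1] - 0.5 * history[-2])
        else:
            f_n, f_nm1, f_nm2 = history[-1], history[-2], history[-3]
            y = y + dt * (23.0 * f_n - 16.0 * f_nm1 + 5.0 * f_nm2) / 12.0
            history.pop(0)  # keep only the two entries still needed
    return y


# Decay problem dy/dt = -y integrated to t = 1 (exact answer exp(-1)).
y_end = ab3_integrate(lambda y: -y, 1.0, 0.01, 100)
error = abs(y_end - math.exp(-1.0))
```

In a pseudospectral code the same update would be applied to each Fourier coefficient, with the nonlinear terms playing the role of `rhs`.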

The evolution of turbulence and its interaction with the background density stratification is dynamically rich and motions on a variety of length scales emerge which may be used to describe the instantaneous state of the flow. The largest-scale horizontal motions in the flow are described by the integral length scale $L_h$, calculated here from the r.m.s. velocities using the method described in appendix E of Comte-Bellot & Corrsin (1971). This characterises the largest eddies which inject energy into turbulent motions. A turbulent cascade drives this energy down scale until it is dissipated as heat at the Kolmogorov scale $L_K$ (or, for the density field, the Batchelor scale $L_B$). Two intermediate scales that arise due to the presence of the stratification are the buoyancy scale $L_b$ and the Ozmidov scale $L_O$. The buoyancy scale is a vertical scale describing the height of the pancake layers that form, whilst the Ozmidov scale describes the size of the largest eddies which are unaffected by the stratification. These length scales are defined as follows:

De Bruyn Kops & Riley (Reference de Bruyn Kops and Riley2019) perform four simulations for various ![]() $Fr$ and

$Fr$ and ![]() $Re$ at

$Re$ at ![]() $Pr=1$. Here, we use data from a modified version of the simulation with largest

$Pr=1$. Here, we use data from a modified version of the simulation with largest ![]() $Re$ which has

$Re$ which has ![]() $Pr=7$ to be more representative of the ocean in regions where heat is the primary stratifying agent. Further details may be found in Riley, Couchman & de Bruyn Kops (Reference Riley, Couchman and de Bruyn Kops2023). The Froude and Reynolds numbers for the simulation are

$Pr=7$ to be more representative of the ocean in regions where heat is the primary stratifying agent. Further details may be found in Riley, Couchman & de Bruyn Kops (Reference Riley, Couchman and de Bruyn Kops2023). The Froude and Reynolds numbers for the simulation are ![]() $Fr=1.1$ and

$Fr=1.1$ and ![]() $Re=2480$. To obtain an appropriate rate of decay of turbulence, the horizontal domain size

$Re=2480$. To obtain an appropriate rate of decay of turbulence, the horizontal domain size ![]() $L_x=L_y$ is chosen to initially accommodate roughly 84 integral length scales

$L_x=L_y$ is chosen to initially accommodate roughly 84 integral length scales ![]() $L_h$, with the vertical extent

$L_h$, with the vertical extent ![]() $L_z=L_x/2$. To resolve motions adequately at the Batchelor scale

$L_z=L_x/2$. To resolve motions adequately at the Batchelor scale ![]() $L_B$, this requires a maximum resolution of

$L_B$, this requires a maximum resolution of ![]() $N_x=N_y=12\ 880$,

$N_x=N_y=12\ 880$, ![]() $N_z=N_x/2$. We take fully three-dimensional snapshots of the velocity and density fields (evenly sparsed by a factor of two in each coordinate direction for computational ease) at various time points

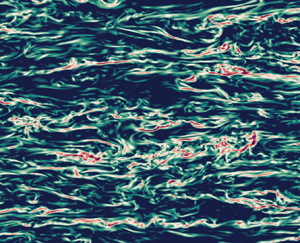

$N_z=N_x/2$. We take fully three-dimensional snapshots of the velocity and density fields (evenly sparsed by a factor of two in each coordinate direction for computational ease) at various time points ![]() $T=0.5,1,2,4,6,7.7$ during the flow evolution. This covers an extent of time over which the turbulence decays from being highly energetic and almost isotropic, to a near quasi-horizontal regime in which flow fields have the structure of thin horizontal layers, characteristic of stratified turbulence. The evolution of non-dimensional parameters

$T=0.5,1,2,4,6,7.7$ during the flow evolution. This covers an extent of time over which the turbulence decays from being highly energetic and almost isotropic, to a near quasi-horizontal regime in which flow fields have the structure of thin horizontal layers, characteristic of stratified turbulence. The evolution of non-dimensional parameters ![]() $Fr_h$,

$Fr_h$, ![]() $Re_h$,

$Re_h$, ![]() $Fr_t$,

$Fr_t$, ![]() $Re_t$ and

$Re_t$ and ![]() $Re_b$ describing the instantaneous state of the turbulence at each time point are given in table 1.

$Re_b$ describing the instantaneous state of the turbulence at each time point are given in table 1.

Table 1. Non-dimensional parameters for the simulation used at various numbers of buoyancy periods $T$ following the introduction of the stratification. Note at $T=0.5$ we have $Re_h=Re_0=2480$ and $Fr_h=Fr_0=0.175$. Here, $\varDelta = L_x/(N_x/2)$ is the grid spacing of the snapshots, which are evenly subsampled by a factor of 2 in each coordinate direction from the DNS resolution.

2.2. Dissipation rates and isotropic models

The local (dimensionless) instantaneous rate of turbulent energy dissipation $\varepsilon _0$ is defined as

where the $u_i$ represent turbulent velocity fluctuations. The corresponding local rate of potential energy dissipation $\chi _0$ is defined as

As noted in the introduction, measuring the exact value of $\varepsilon _0$ and $\chi _0$ requires that all spatial derivatives of the velocity and density fields be resolved, a task that is difficult even for laboratory flows, and certainly not possible at present in the context of oceanographic data. Thus it is common to make simplifying assumptions about the turbulence in order to proceed. If one assumes that turbulence is both homogeneous and isotropic, then averaging over some sufficient region, both expressions above can be written in terms of a single derivative. Microstructure profilers usually measure vertical derivatives, hence here we will consider the following isotropic models for $\varepsilon _0$ and $\chi _0$:
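In the dimensionless variables used here, these two models can be sketched as follows. The prefactors $1/Re$ and $1/(Re\,Pr\,Fr^2)$ are an assumption, inferred from the ratios plotted in figure 1 and from the isotropic limits $15/4$ and $3$ quoted in § 2.3; the symbols $\varepsilon_0^{\mathrm{iso}}$ and $\chi_0^{\mathrm{iso}}$ are introduced only for this illustration.

$$
\begin{aligned}
  \varepsilon_0^{\mathrm{iso}} &= \frac{15}{4}\,\frac{1}{Re}\,S^2,
  \qquad S^2 = \left(\frac{\partial u}{\partial z}\right)^2 + \left(\frac{\partial v}{\partial z}\right)^2, \\
  \chi_0^{\mathrm{iso}} &= \frac{3}{Re\,Pr\,Fr^2}\left(\frac{\partial \rho}{\partial z}\right)^2 .
\end{aligned}
$$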

Here, $S^2$ is the (squared) local vertical shear. As pointed out by Almalkie & de Bruyn Kops (2012b), these models are exact in the means (which here we take to be a spatial volume average denoted by angle brackets $\langle {\cdot } \rangle$) when turbulence is perfectly isotropic, but even in this idealised situation there may be significant differences in the frequency distributions of local values.
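As a concrete illustration of how such single-derivative estimates would be evaluated along one vertical column, the following Python sketch applies finite differences to discrete profiles. It is a sketch under stated assumptions rather than the procedure used in the paper: the function names, the finite-difference stencil and the dimensionless prefactors $15/(4Re)$ and $3/(Re\,Pr\,Fr^2)$ (inferred from the isotropic limits and the normalisations in figure 1) are all assumed.

```python
def vertical_gradient(values, dz):
    """Central differences in the interior, one-sided at the two ends."""
    n = len(values)
    grad = [0.0] * n
    for k in range(1, n - 1):
        grad[k] = (values[k + 1] - values[k - 1]) / (2.0 * dz)
    grad[0] = (values[1] - values[0]) / dz
    grad[-1] = (values[-1] - values[-2]) / dz
    return grad


def isotropic_estimates(u, v, rho, dz, Re, Pr, Fr):
    """Return local isotropic estimates (eps, chi) along one column.

    Assumed dimensionless forms: eps = (15/4) S^2 / Re and
    chi = 3 (d rho/dz)^2 / (Re Pr Fr^2).
    """
    du, dv, drho = (vertical_gradient(f, dz) for f in (u, v, rho))
    shear_sq = [a * a + b * b for a, b in zip(du, dv)]
    eps = [15.0 / 4.0 * s / Re for s in shear_sq]
    chi = [3.0 * g * g / (Re * Pr * Fr ** 2) for g in drho]
    return eps, chi


# Synthetic column with uniform gradients: du/dz = 1, dv/dz = 0, d rho/dz = 2.
dz = 0.1
z = [k * dz for k in range(11)]
eps, chi = isotropic_estimates(z, [0.0] * 11, [2.0 * zk for zk in z],
                               dz, Re=2480.0, Pr=7.0, Fr=1.1)
```

The estimates are local, so their distribution along a column can differ from that of the true dissipation rates even when the column means agree.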

2.3. An empirical model

Equations (2.9) and (2.10) are only appropriate (strictly in the mean sense) provided that there exists an inertial range of scales above the Kolmogorov scale $L_K$ (or equivalently for $\chi$, the Batchelor scale $L_B$) within which the flow is close to isotropic. Noting that we can write $Re_b = (L_O/L_K)^{4/3}$, whether or not such a range exists at small scales is primarily dependent on the value of the buoyancy Reynolds number, making this the most important parameter for determining the accuracy of the isotropic models above. Observational evidence supporting this hypothesis dates back to the measurements of Gargett et al. (1984), with many recent DNS confirming the agreement with the theory (see, e.g. de Bruyn Kops & Riley 2019; Lang & Waite 2019; Portwood, de Bruyn Kops & Caulfield 2019). Lang & Waite (2019) demonstrate that the primary effect of the Froude number $Fr_h$ in this context is to control the larger-scale anisotropy. Therefore, even in a strongly stratified flow with $Fr_h\lesssim 1$, (2.9) and (2.10) are still expected to be accurate provided $Re_b$ is sufficiently large.
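The identity $Re_b=(L_O/L_K)^{4/3}$ follows in one line if the scales take their conventional dimensional forms, which is assumed here: $L_O=(\varepsilon/N^3)^{1/2}$, $L_K=(\nu^3/\varepsilon)^{1/4}$ and $Re_b=\varepsilon/(\nu N^2)$, with $\nu$ the kinematic viscosity.

$$
\frac{L_O}{L_K} = \frac{\varepsilon^{1/2}N^{-3/2}}{\nu^{3/4}\varepsilon^{-1/4}} = \left(\frac{\varepsilon}{\nu N^2}\right)^{3/4}
\quad\Longrightarrow\quad
\left(\frac{L_O}{L_K}\right)^{4/3} = \frac{\varepsilon}{\nu N^2} = Re_b .
$$

Under these definitions, one decade of separation between $L_O$ and $L_K$ corresponds to $Re_b = 10^{4/3}\approx 21.5$.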

When $Re_b$ becomes small, buoyancy starts to dominate inertia at even the smallest vertical scales, prohibiting the formation of an inertial range. Consequently, the relative magnitudes of the velocity and density derivatives in (2.7) and (2.8) are expected to be substantially different from those predicted by isotropy, eventually being dominated by the vertical derivative terms in the limit $Re_b\ll 1$ as demonstrated (at least for $\varepsilon$) numerically and experimentally by, e.g. de Bruyn Kops & Riley (2019), Hebert & de Bruyn Kops (2006), Praud, Fincham & Sommeria (2005) and Fincham, Maxworthy & Spedding (1996). Therefore, as suggested by Hebert & de Bruyn Kops (2006), it is reasonable to suppose that the ratios $\varepsilon /S^2$ and $\chi/(\partial \rho/\partial z)^2$ should be functions of $Re_b$, giving a natural way of constructing models for $\varepsilon$ and $\chi$ in stratified flows that take only vertical derivatives of velocity and density as inputs. The caveat of models constructed in this way is that the additional required input $Re_b$ is itself by definition a function of the desired output $\varepsilon$. To circumvent this issue, we appeal to a surrogate buoyancy Reynolds number

which at least varies monotonically with $Re_b$ (and which we note is implicitly invoked via the isotropic assumption when working with observational data).

We wish to derive a local model for $\varepsilon _0$ and $\chi _0$ that now depends on $Re_b^S$ as well as $S^2$ and $\partial \rho /\partial z$. An important subtlety to note is the introduction of some ambiguity in defining a suitable surrogate buoyancy Reynolds number in the local picture due to how the (assumed) spatial averaging in (2.11) is interpreted. Such ambiguities can have appreciable effects on subsequently diagnosed turbulent statistics in stratified flows, as has been discussed by Arthur et al. (2017) and Lewin & Caulfield (2021). Because it describes anisotropy, which is inherently a non-local flow property, here we assume the ‘true’ $Re_b^S$ is a bulk value averaged over the domain, i.e. $Re_b^S = Fr^2\langle S^2\rangle$ (since $\langle \partial \rho _t /\partial z\rangle =-1$ by construction). Of course, in the observational setting where measurements are limited, a representative bulk value may be computed by averaging a suitably large section of a vertical profile, over a scale at least as big as the Ozmidov scale $L_O$. However, this method may be ineffective when the flow is spatially separated into dynamically distinct regions as discussed by Portwood et al. (2016). This issue is circumvented by the data-driven model we present in § 2.4, which implicitly ‘learns’ the relevant non-local region of influence on local dissipation rates. With a suitably defined $Re_b^S$, we propose the following model:

where $f(Re_b^S)$ and $g(Re_b^S)$ are functions to be determined empirically, based on theoretical constraints. For $Re_b^S\gg 1$ the flow is locally isotropic and, in the mean sense, we should have $f\to 15/4$, $g\to 3$, as in (2.9) and (2.10). For $Re_b^S\ll 1$, horizontal diffusion becomes negligible and we therefore have $f\to 1$, $g\to 1$ (Riley et al. 1981; Godoy-Diana et al. 2004; de Bruyn Kops & Riley 2019). Data from the decaying turbulence simulation indicate a roughly linear transition from the viscously dominated $Re_b^S\ll 1$ regime to the isotropic $Re_b^S\gg 1$ regime, as shown in figure 1. Therefore, to match the required asymptotic behaviour and linear transition region, we propose the following models for the dimensionless functions $f$ and $g$:

Here, the constants $b$ and $d$ loosely represent the transition value between stratified turbulent and stratified viscous regimes, whilst $a$ and $c$ characterise the width of the transition region. These can be specified by a simple least-squares optimisation procedure that compares the functional approximations with the DNS data at the values of $Re_b^S$ indicated in figure 1: it is found that empirical values of $a=1$, $b=0.8$ and $c=d=0.9$ give an extremely close qualitative fit of the empirical function curves with the domain-averaged data, as can be seen in the figure. Note that in general $a\neq c$ and $b\neq d$ due to the difference in the smallest scales of motion caused by a non-unity Prandtl number $Pr=7$, although in practice we see that these differences are relatively small (and largely insignificant).
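The fitting procedure can be illustrated with a short Python sketch. The functional form used here is hypothetical: a hyperbolic-tangent blend in $\log_{10} Re_b^S$ between the two asymptotic limits, chosen only because it has the required limits and a single transition, and it should not be read as the form of (2.12) or (2.13). The names `surrogate_reb`, `transition` and `fit_transition`, the interpretation of `centre` as a $\log_{10}$ value and the brute-force grid search are likewise assumptions.

```python
import math


def surrogate_reb(shear_sq, Fr):
    """Bulk surrogate buoyancy Reynolds number Fr^2 <S^2>."""
    return Fr ** 2 * sum(shear_sq) / len(shear_sq)


def transition(reb_s, low, high, width, centre):
    """Hypothetical blend from `low` to `high` in log10(Re_b^S).

    `centre` is the log10 transition value and `width` sets how
    sharp the transition is (larger means sharper).
    """
    x = width * (math.log10(reb_s) - centre)
    return low + 0.5 * (high - low) * (1.0 + math.tanh(x))


def fit_transition(reb_values, ratios, low, high, grid):
    """Brute-force least squares over candidate (width, centre) pairs."""
    best = None
    for width in grid:
        for centre in grid:
            cost = sum((transition(r, low, high, width, centre) - y) ** 2
                       for r, y in zip(reb_values, ratios))
            if best is None or cost < best[0]:
                best = (cost, width, centre)
    return best[1], best[2]


# Synthetic "data" generated from the sketch itself, then recovered.
reb_values = [0.1, 0.5, 1.0, 5.0, 10.0, 50.0, 100.0]
ratios = [transition(r, 1.0, 15.0 / 4.0, 1.0, 0.8) for r in reb_values]
grid = [k / 10.0 for k in range(1, 21)]  # 0.1, 0.2, ..., 2.0
width_fit, centre_fit = fit_transition(reb_values, ratios, 1.0, 15.0 / 4.0, grid)
```

With real data the ratios would be the domain-averaged values at each snapshot, and a gradient-based optimiser could replace the grid search; the limits `low` and `high` stay fixed by the theory ($1$ and $15/4$ for $f$, $1$ and $3$ for $g$).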

Figure 1. Values of the ratios (a) $Re \varepsilon /(\langle S^2\rangle )$ and (b) $Re\,Pr\,Fr^2\chi /(\langle \rho _z^2\rangle )$ for different values of the surrogate buoyancy Reynolds number $Re_b^S$. Here, $Re_b^S$ is computed by interpreting the averaging in (2.11) as over the entire domain. The solid blue lines represent the empirical model functions (2.12) and (2.13), whilst the dashed lines represent the isotropic ratios from (2.9) and (2.10). Black markers represent the true values obtained from domain-averaged DNS quantities. The Jupyter notebook for producing the figure can be found at https://www.cambridge.org/S0022112023006791/JFM-Notebooks/files/Fig1/fig1.ipynb.

There have been a limited number of attempts to model the influence of strong anisotropy on dissipation rates $\varepsilon$ and $\chi$. Weinstock (1981) proposes a modified model for $\varepsilon$ in stratified turbulence that depends on r.m.s. vertical velocities $\langle w^2 \rangle$ and the buoyancy frequency $N$. Fossum et al. (2013) instead suggest a model for $\varepsilon$ in terms of $\chi$, pointing out that $\chi$ itself may be replaced by vertical density derivatives via a suitable tuning coefficient. To our knowledge, the empirical models (2.12) and (2.13) constitute a first attempt to construct an explicit theoretical model for dissipation rates during the transition of such a flow from a near-isotropic to a buoyancy-dominated regime based on vertical derivatives of velocity and density only. Based on the theoretical scaling arguments discussed above, the functional forms of $f$ and $g$ are expected to remain independent of flow configuration, with the relevant asymptotic behaviour and linear transition having been observed in a number of previous forced and freely evolving DNS studies, e.g. Lang & Waite (2019), Brethouwer et al. (2007) and Hebert & de Bruyn Kops (2006). The transition value of $Re_b^S$ and width of the transition region represented by the values of $a$, $b$, $c$ and $d$ also appear to be roughly consistent across studies, although a more detailed investigation would be required to quantitatively verify this. Here, (2.12) and (2.13) serve as useful comparative benchmarks for the data-driven model outlined below that are more strict than the isotropic models (2.9) and (2.10).

2.4. Constructing a data-driven model

2.4.1. Model architecture

Here, we construct a general model for computing local values of $\varepsilon _0$ and $\chi _0$ from measurements of vertical derivatives of velocity and density, without explicitly appealing to arguments that rely on mean averaged quantities used for deriving the theoretical models above. We restrict ourselves to mimicking the oceanographic microstructure scenario where only vertical column measurements are available. To facilitate a comparison between this approach and the explicit theoretical models described above, we allow $\varepsilon _0$ to depend on local values of $S^2$ and $\chi _0$ to depend on local values of $(\partial \rho /\partial z)^2$. We additionally allow both $\varepsilon _0$ and $\chi _0$ to depend on the local value of the background (total) density gradient field $\partial \rho _t/\partial z$, which is suggested to be correlated with $\chi$ and $\varepsilon$ in stratified turbulent flows in the sense that regions that are, or are close to being, statically unstable (positive background density gradient) are more likely to support more energetic turbulence (Portwood et al. 2016; Caulfield 2021). We anticipate that the precise dependencies on the inputs will be a function of the interaction of turbulence with buoyancy effects as would normally be characterised by the buoyancy Reynolds number, which we assume is unavailable as an input. We also might expect non-local correlations between the inputs with themselves and each other to be important. Finally, to capture the tails of the p.d.f.s of values of $\varepsilon _0$ and $\chi _0$ accurately over a given region, it is beneficial to introduce a statistical component to the model whereby outputs are sampled from a distribution rather than predicted deterministically. This motivates the following log-normal models for local values of $\varepsilon _0$ and $\chi _0$:

where the means and variances of the distributions $\mu _a$ and $\sigma _a$ are functions of the inputs, which we write generally as $X_a$ and $Y$, for $a=\varepsilon, \chi$. We have $X_\varepsilon =S^2$ and $X_\chi =(\partial \rho /\partial z)^2$, whilst $Y=\partial \rho _t/\partial z$ is the same for both models. The functions $\mu _a$ and $\sigma _a$ are to be determined, or ‘learned’, from the data

The interval $[-\alpha,\alpha ]$, where $\alpha$ is a user-specified constant, represents the vertical height of the surrounding window of input values that the local output can depend on. We choose to make predictions of the logarithm of dissipation values for improved convergence during model training. Note that, because the $\mu _a$ and $\sigma _a$ are functions of the inputs, the global distribution of outputs (i.e. over an entire vertical column) is not necessarily constrained itself to be log-normal, although in practice this is a classical theoretical prediction for isotropic turbulence (Kolmogorov 1962; Oboukhov 1962) and has been found to be accurate in many stratified decaying turbulent flows (de Bruyn Kops & Riley 2019).

The task is now to choose a functional form for $\mu _a$ and $\sigma _a$. We use a deep convolutional neural network which consists of several layers, each being a nonlinear function of outputs from the previous layer with $j$ parameters $\beta ^L_j$ within each layer $L$ (weights and biases) whose values are determined through an iterative optimisation procedure. Convolutional layers have a unique functional form which is highly suited to identifying structures or patterns in images, making them a natural choice for our model whose inputs are vertical columns of data with spatial structures over multiple different length scales. With the eventual outputs from the model being sampled from a distribution whose parameters are learnt by the network, we refer to the entire architecture as a PCNN, falling in a broader class of Bayesian deep learning frameworks known as mixture density networks.
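To illustrate what sampling from a learnt distribution means here, the following minimal sketch draws dissipation values from a normal distribution in log space and exponentiates them. It is not the authors' code: the natural logarithm, the treatment of $\sigma$ as a standard deviation, the function name `sample_dissipation` and the numerical values of `mu` and `sigma` are all assumptions made for illustration.

```python
import math
import random


def sample_dissipation(mu, sigma, n_samples=1000, seed=0):
    """Draw dissipation samples from a predicted log-space normal.

    mu, sigma: hypothetical network outputs for one column, interpreted
    here (an assumption) as the mean and standard deviation of the
    natural logarithm of the dissipation.
    """
    rng = random.Random(seed)
    # Sample in log space, then exponentiate: every sample is positive
    # and the local distribution of dissipation is log-normal.
    return [math.exp(rng.gauss(mu, sigma)) for _ in range(n_samples)]


# Hypothetical values standing in for the network outputs.
mu, sigma = -2.0, 0.5
samples = sample_dissipation(mu, sigma)

# The sample median of a log-normal should sit close to exp(mu).
median = sorted(samples)[len(samples) // 2]
print(median, math.exp(mu))
```

Because $\mu$ and $\sigma$ change from one column to the next, pooling such samples over many columns gives a mixture of log-normals rather than a single log-normal, consistent with the remark above about the global distribution.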

We can write the inputs to the network in discrete form as $\{X_a(z+\delta )\}_{\delta \in [-\alpha, \alpha ]} = \boldsymbol {X}_a = \{X_{a_1},X_{a_2},\ldots,X_{a_m} \}, $ $\{ Y(z+\delta )\}_{\delta \in [-\alpha, \alpha ]} = \boldsymbol {Y} = \{Y_1,Y_2,\ldots,Y_m\}$, where the $X_{ai}$ and $Y_i$ represent $m$ equispaced values sampled in the interval $z\in [-\alpha, \alpha ]$. This is of course the natural representation for DNS where values of physical fields are discretely sampled at each grid point, and for oceanographic data where values are sampled at a particular frequency as the microstructure probe descends in the water column. Finally then, we write our data-driven neural network model as

where $\beta _j^L$ and $\gamma _j^L$ are trainable parameters. The PCNN used here comprises three fully connected convolutional layers with 32 filters and a kernel with a vertical height of $3$ grid points, with a max-pooling layer between the second and third convolutional layers to reduce the dimensionality. The outputs from the convolutional layers are reshaped and fed into a dense layer which outputs values for $\mu _a$ and $\sigma _a$. For activation functions, we use the standard rectified linear unit, or ReLU, function in all layers. In total, there are roughly 60 000 trainable parameters to be optimised by fitting to the data.
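The way the column length changes through such a stack of layers can be traced with a short calculation. The sketch below is illustrative only and makes several assumptions not stated in the text: stride-1 convolutions without padding, a pooling window of 2, $X_a$ and $Y$ stacked as two input channels, and a column of $m=50$ grid points. It tracks shapes only and does not attempt to reproduce the quoted parameter count.

```python
def conv1d_length(n_in, kernel_size):
    """Output length of a stride-1 1-D convolution with no padding."""
    return n_in - kernel_size + 1


def pool_length(n_in, pool_size):
    """Output length of non-overlapping max pooling."""
    return n_in // pool_size


def pcnn_shapes(m=50, filters=32, kernel_size=3, pool_size=2):
    """Return (stage, length, channels) for each stage of the sketch."""
    shapes = [("input", m, 2)]  # assumed channels: X_a and Y
    n = conv1d_length(m, kernel_size)
    shapes.append(("conv1", n, filters))
    n = conv1d_length(n, kernel_size)
    shapes.append(("conv2", n, filters))
    n = pool_length(n, pool_size)  # pooling between conv2 and conv3
    shapes.append(("maxpool", n, filters))
    n = conv1d_length(n, kernel_size)
    shapes.append(("conv3", n, filters))
    shapes.append(("flatten", n * filters, 1))  # reshaped for the dense layer
    shapes.append(("dense", 2, 1))  # two outputs: mu_a and sigma_a
    return shapes


shapes = pcnn_shapes()
for stage, length, channels in shapes:
    print(stage, length, channels)
```

Under these assumptions the 50-point column shrinks to 21 points with 32 channels before flattening; a different padding or pooling choice would change these numbers.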

2.4.2. Dataset and training

A schematic outlining the process of transforming the input columns from the DNS into local outputs of $\varepsilon_0$ is shown in figure 2. The model for $\chi_0$ has an identical internal structure (highlighted in grey in the figure). To build the training dataset, we randomly sample vertical columns of data from the three-dimensional field of the simulation described in table 1 at time steps $T=0.5,1,2,4,7.7$, to give a total of approximately $n=150\ 000$ training samples. Note we intentionally withhold the time step $T=6$ for testing. The chosen height $2\alpha$ of input columns is a free parameter; for a fixed grid spacing this is determined by the number of grid points in the column $m$. Here, we take $m=50$, which is largely justified by the sensitivity analysis detailed in § 4 demonstrating that outputs are relatively insensitive to inputs outside of this surrounding radius. A more thorough analysis can be found in the supplementary material available at https://doi.org/10.1017/jfm.2023.679. We then compute scaled values of local shear and vertical density gradients for each grid point within the columns

The resulting inputs are written as $\boldsymbol {X}_a^j=\{X^j_{a_1},\ldots, X^j_{a_m}\}$ and $\boldsymbol {Y}^j=\{Y^j_1,\ldots, Y^j_{m}\}$, whilst the labels are denoted $\varepsilon ^j_0$ and $\chi _0^j$. The labels are the exact values of the local dissipation defined in (2.7) evaluated at the midpoint of the input column, to be compared against model predictions. The prefactors of $Re$, $Fr$ and $Pr$ in front of $X_a$ and $Y$ are chosen based on dimensional considerations with (2.7) and (2.8), so that the model can be evaluated on data from simulations with different $Re$, $Fr$ and $Pr$. Indeed, using dimensional inputs in a black-box model such as ours results in the undesirable situation of outputs whose physical dimensions are unknown. Note that, in our notation, $X^j_{ai}$ denotes the value of the $i$th grid point from input $\boldsymbol {X}^j_a$, where the superscript $j\in \{1,\ldots, n\}$ indexes each individual data column.
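The pairing of an input column with a label at its midpoint can be sketched as follows. This is a hedged illustration rather than the procedure actually used: the function name `sample_training_pairs`, the representation of fields as lists of vertical columns, the random choice of window position and the convention that index `m // 2` is the midpoint of an even-length column are all assumptions, and the synthetic field stands in for scaled DNS data.

```python
import random


def sample_training_pairs(x_field, y_field, label_field, m=50,
                          n_samples=5, seed=0):
    """Randomly sample (X window, Y window, midpoint label) triples.

    Each field is a list of vertical columns (lists of floats) of equal
    depth. The label is read at the assumed midpoint of the window.
    """
    rng = random.Random(seed)
    n_columns = len(x_field)
    depth = len(x_field[0])
    pairs = []
    for _ in range(n_samples):
        c = rng.randrange(n_columns)         # which vertical column
        start = rng.randrange(depth - m + 1)  # top of the window
        mid = start + m // 2                  # assumed midpoint index
        x_window = x_field[c][start:start + m]
        y_window = y_field[c][start:start + m]
        pairs.append((x_window, y_window, label_field[c][mid]))
    return pairs


# Synthetic stand-in data: 3 columns of depth 120, values encode position.
field = [[c * 1000.0 + k for k in range(120)] for c in range(3)]
pairs = sample_training_pairs(field, field, field)
print(len(pairs), len(pairs[0][0]))
```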

Figure 2. Schematic outlining the process of obtaining data from the DNS in vertical column format and the PCNN model architecture.

The test set, used to assess the performance of the network both qualitatively and quantitatively, is a separate dataset from the same simulation. It comprises two-dimensional (2-D) vertical snapshots of the flow at each time step, obtained by sampling 250 horizontally adjacent columns and scaled in the same way as above. These columns can be fed through the network successively to obtain a model output of the entire 2-D snapshot. By design, the test set does not contain any columns of data previously sampled for the training set, and also contains data from the entirely unseen time step $T=6$ as noted above.
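Feeding adjacent columns through the network one after another to assemble a 2-D output can be sketched as below. This is an assumption-laden illustration: `predict_snapshot` and the `predict` callable are hypothetical names, the window is slid one grid point at a time, and points too close to the top or bottom for a full window are simply skipped, which may differ from the treatment of boundaries in the actual model.

```python
def predict_snapshot(x_cols, y_cols, predict, m=50):
    """Apply a column-wise predictor across a 2-D vertical snapshot.

    x_cols, y_cols: lists of horizontally adjacent vertical columns.
    predict: hypothetical callable taking an X window and a Y window of
    length m and returning one local output for the window midpoint.
    """
    output = []
    for x_col, y_col in zip(x_cols, y_cols):
        row = []
        # Slide the window down the column; edges without a full
        # window are skipped (an assumption of this sketch).
        for start in range(len(x_col) - m + 1):
            row.append(predict(x_col[start:start + m],
                               y_col[start:start + m]))
        output.append(row)
    return output


# Dummy predictor standing in for the trained network: it just returns
# the X value at the assumed midpoint of the window.
def dummy_predict(x_window, y_window):
    return x_window[len(x_window) // 2]


cols = [[float(k) for k in range(60)] for _ in range(3)]
snapshot = predict_snapshot(cols, cols, dummy_predict)
print(len(snapshot), len(snapshot[0]))
```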

The training and test data are normalised to improve convergence rates during model training (cf. ‘whitening’ in the machine learning literature, for instance LeCun et al. 2012)

Here, $\bar {X}$ represents the mean of the inputs $\boldsymbol {X}_a$, and $\varSigma _{X_a}$ and $\varSigma _Y$ are similarly the standard deviations of the inputs $\boldsymbol {X}_a$ and $\boldsymbol {Y}$. These statistics are computed over the training set only, as is standard practice. Note that we intentionally do not scale $\boldsymbol {Y}$ by its mean value. This is because the sign of the total vertical density derivative indicates whether a given grid point is in a statically stable density region ($\partial \rho _t /\partial z <0$) or a statically unstable density region ($\partial \rho _t/ \partial z >0$). The static stability of a region of stratified turbulent flow has been previously linked to the intensity of turbulence and therefore $\varepsilon$; this is a dynamical feature we would like to be able to test for in our model.
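A minimal sketch of a normalisation with these properties is given below. It assumes, based only on the description above, that $\boldsymbol {X}_a$ is centred by its training mean and divided by its training standard deviation, whereas $\boldsymbol {Y}$ is divided by its training standard deviation without centring so that its sign is preserved. The function names and the sample values are hypothetical.

```python
import statistics


def fit_scalers(x_train, y_train):
    """Compute normalisation statistics from training values only."""
    return {
        "x_mean": statistics.mean(x_train),
        "x_std": statistics.pstdev(x_train),
        "y_std": statistics.pstdev(y_train),
    }


def normalise(x, y, scalers):
    """Centre and scale X; scale Y only, so its sign is unchanged."""
    x_hat = [(v - scalers["x_mean"]) / scalers["x_std"] for v in x]
    y_hat = [v / scalers["y_std"] for v in y]
    return x_hat, y_hat


# Hypothetical training and test values.
x_train = [1.0, 2.0, 3.0, 4.0]
y_train = [-2.0, -1.0, 0.5, 1.5]
x_test = [2.5, 5.0]
y_test = [-0.3, 0.3]

scalers = fit_scalers(x_train, y_train)
x_hat_train, y_hat_train = normalise(x_train, y_train, scalers)
# Test data reuse the training statistics rather than their own.
x_hat_test, y_hat_test = normalise(x_test, y_test, scalers)
print(x_hat_test, y_hat_test)
```

Dividing by a positive standard deviation leaves the sign of each $Y$ value untouched, so statically stable and unstable points remain distinguishable after scaling.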

Training is carried out by iteratively optimising a specified loss function $\mathcal {L}$ which measures the accuracy of model predictions over batches of training data that are successively and then repeatedly fed through the network. For the PCNN which predicts a distribution of outputs, we use a negative log-likelihood loss function which is a quantitative measure of the difference between the frequency distribution (or p.d.f.) of outputs and distribution of true labels over the batch

Here, $p^j$ is the value of the probability density function of the predicted distribution $\mathcal {N}(\mu ^j, \sigma ^j)$ of dissipation for column $j$ evaluated at the true label $\varepsilon ^j$. The Adam optimiser (Kingma & Ba 2014) with a learning rate of 0.005 is used to perform stochastic gradient descent. Good convergence was found after approximately 200 epochs (full passes over all training batches), which took around 5 minutes using TensorFlow on an NVIDIA Volta V100 GPU.
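A negative log-likelihood of this kind can be written in a few lines for a normal predicted distribution. The sketch below is an illustration under stated assumptions: the loss is taken as the batch mean of $-\log p^j$ (a sum would differ only by a constant factor), $\sigma ^j$ is treated as a standard deviation, and the labels are assumed to be already expressed in the same logarithmic variable as the predictions. The function names and batch values are hypothetical.

```python
import math


def gaussian_log_pdf(value, mu, sigma):
    """Log of the normal p.d.f. N(mu, sigma) evaluated at value."""
    return (-0.5 * math.log(2.0 * math.pi * sigma ** 2)
            - (value - mu) ** 2 / (2.0 * sigma ** 2))


def negative_log_likelihood(labels, mus, sigmas):
    """Mean of -log p^j over a batch of columns j."""
    total = sum(gaussian_log_pdf(v, mu, s)
                for v, mu, s in zip(labels, mus, sigmas))
    return -total / len(labels)


# Hypothetical batch of two columns with labels in log space.
labels = [0.0, 1.0]
loss_good = negative_log_likelihood(labels, [0.0, 1.0], [1.0, 1.0])
loss_bad = negative_log_likelihood(labels, [2.0, 3.0], [1.0, 1.0])
print(loss_good, loss_bad)
```

Predicted means that sit further from the labels give a larger loss, and a poorly chosen $\sigma ^j$ is penalised as well, which is what drives the network to learn both quantities.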

3. Results

3.1. Model deployment

Outputs ![]() $\varepsilon _0$ and