1. Introduction

Sometimes, doing two things together is easier than doing only one.

An outstanding and fascinating fact about bilingualism that has emerged over and over is that the two languages a bilingual person knows are always active to some degree when they are engaged in tasks of various types, even those explicitly requiring one language only. This has been found in reading tasks (Dijkstra & Kroll, Reference Dijkstra and Kroll2005), in listening tasks (Marian & Spivey, Reference Marian and Spivey2003), in spontaneous speech (Kroll et al., Reference Kroll, Bobb and Wodniecka2006), and in a family of Stroop tasks (Giezen et al., Reference Giezen, Blumenfeld, Shook, Marian and Emmorey2015). Cross-language activation has been shown to be effective at various levels of processing. For instance, cross-linguistic priming effects have been found at both lexical (Finkbeiner et al., Reference Finkbeiner, Forster, Nicol and Nakamura2004) and syntactic (Loebell & Bock, Reference Loebell and Bock2003) levels. These effects are not only restricted to languages sharing a similar writing or phonological system: they were documented in English–Japanese bilinguals (Ikeda, Reference Ikeda1998), and even across modalities in bimodal sign-speech bilinguals (Morford et al., Reference Morford, Wilkinson, Villwock, Piñar and Kroll2011; Ormel et al., Reference Ormel, Hermans, Knoors and Verhoeven2012; Shook & Marian, Reference Shook and Marian2012). In sum, there is growing consensus that bilinguals do not “unplug” the language that they are not using, even when it would be beneficial to do so.

Within this framework, the case of bimodal bilingualism is the focus of our paper. Bimodal bilinguals are competent users of a sign language and a spoken language, and thus have access to two largely independent articulatory channels: the visual-manual channel and the auditory-vocal channel. In this setting, no articulatory constraint prevents bimodal bilinguals from using both languages simultaneously, which makes them an ideal test bed for investigating the interaction of the two languages and the limits of their co-activation beyond articulatory constraints. The possibility of articulating two languages simultaneously unveils important questions about the nature of their representations and the possible costs or benefits associated with accessing two representations at the same time.

Among bimodal bilinguals, we shall only focus on hearing bilinguals in this paper, and in particular, on populations of CODAs (Children Of Deaf Adults), hearing adults who acquired spontaneously the sign language in their family and the spoken language in the larger surrounding environment.

The paper is organized as follows: section 1.1 provides an overview of the specificities of language mixing in bimodal bilinguals’ production, and section 1.2. summarizes the few experimental results available on their comprehension, which is the focus of the present investigation. Section 2 introduces the present study, outlining the main hypotheses at stake. Section 3 presents the experimental methods; section 4 illustrates their results. Section 5 is a discussion, and section 6 concludes the paper.

1.1. Code-blending in bimodal bilinguals: production

The possibility of simultaneous two-language use is fully exploited in bimodal bilinguals. Differently from unimodal bilinguals, they tend not to switch from one language to the other during the same utterance, sentence, or phrase (Muysken, Reference Muysken2000). Rather, they prefer to code-blend (Emmorey et al., Reference Emmorey, Borinstein, Thompson and Gollan2008), meaning that they sometimes produce fragments belonging to the two languages simultaneously. This appears to be true for all the bimodal populations that have been observed so far, be they adults or children (American Sign Language (ASL)–English in Bishop & Hicks, Reference Bishop and Hicks2005; Dutch Sign Language (NGT)–Dutch in Baker & Van den Bogaerde, Reference Baker, Van den Bogaerde, Plaza-Pust and Morales-López2008; Quebec Sign Language (LSQ)–French in Petitto et al., Reference Petitto, Katerlos, Levy, Gauna, Tetreault and Ferraro2001; Brazilian Sign Language (LIBRAS)–Portuguese in de Quadros, Reference de Quadros2018; Italian Sign Language (LIS)–Italian in Bishop et al., Reference Bishop, Hicks, Bertone and Sala2007, Donati & Branchini, Reference Donati, Branchini, Roberts and Biberauer2013, Branchini & Donati, Reference Branchini and Donati2016; Finnish Sign Language (FinSL)–Finnish in Kanto et al., Reference Kanto, Huttunen and Laakso2013).

For example, Emmorey et al. (Reference Emmorey, Borinstein, Thompson and Gollan2008) analyzed the mixed utterances produced by a group of American adult CODAs in spontaneous conversation and narrative tasks, and found that code-blending accounted for 36% of the overall production and 98% of the mixing data. Similar patterns have been reported for KODAS (i.e., Kids Of Deaf Adults, a population of hearing bimodal bilingual children: NGT/Dutch – Baker & Van den Bogaerde, Reference Baker, Van den Bogaerde, Plaza-Pust and Morales-López2008; LSQ/French – Petitto et al., Reference Petitto, Katerlos, Levy, Gauna, Tetreault and Ferraro2001). While the vast majority of studies focuses on hearing bimodal bilinguals, and so do we in this paper, this pattern appears to extend to Deaf children and adult bilinguals with oral training, at least according to the few studies available (e.g., Fung & Tang, Reference Fung and Tang2016; Rinaldi & Caselli, Reference Rinaldi and Caselli2014; Rinaldi et al., Reference Rinaldi, Lucioli, Sanalitro and Caselli2021). In a group of adult Deaf bilinguals of Hong Kong Sign Language (HKSL)–Cantonese observed by Fung and Tang (Reference Fung and Tang2016), code-blends accounted for around 40% of the overall production, and above 95% of mixing data.

Going back to hearing bilinguals, in the vast majority of cases reported in the literature, code-blended productions are syntactically and semantically congruent – hence exhibiting aligned and semantically equivalent signed and spoken content: 81% for Emmorey et al. (Reference Emmorey, Borinstein, Thompson and Gollan2008); more than 80% in the Dutch study; 75% in the Canadian one (Petitto et al., Reference Petitto, Katerlos, Levy, Gauna, Tetreault and Ferraro2001). An example of such syntactic/semantic congruent blends where this alignment extends to the entire sentence is given in (1), taken from Emmorey et al. (Reference Emmorey, Borinstein, Thompson and Gollan2008, p. 48).

In (1) two full semantically equivalent utterances are produced simultaneously and all their components are syntactically aligned.

As an important clarification, congruent blends are rarely fully so due to some fundamental differences between sign languages and spoken languages grammar. A number of functional elements that can typically surface in spoken languages, such as auxiliaries, prepositions, articles, clitics, and conjunctions, do not exist in sign languages as documented so far, and are therefore never «paired» in sign when uttered in a code-blend. The same holds, on the other hand, for so-called classifiers in sign languages, i.e., « morphemes with a non-specific meaning, expressed by particular configurations of the hands and which represent entities by denoting salient characteristics » (Zwitzerlood, Reference Zwitzerlood, Pfau, Steinbach and Woll2012, p. 158), which have no grammatical equivalent in spoken languages (see de Quadros et al. (Reference de Quadros, Davidson, Lillo-Martin and Emmorey2020) for an interesting analysis of what happens when blending involve depicting classifiers).

Interestingly, congruent code-blends can be produced in one of two different ways: the congruency and systematic alignment can be due to the fact that the two languages happen to prescribe the same word order which is thus consistent across the two strings. This is the case we shall see with LSF–French in the experiments described below, and it is further illustrated in (2) with an ASL–English blend. In the other case, congruency and alignment can be obtained by imposing the word order corresponding to one language only to both strings with a process akin to syntactic calque (illustrated in 3)Footnote 2.

Each language string in (2) exhibits a full-fledged utterance that would be acceptable even uttered alone, in a unimodal context. This is very different from what is observed in (3).

Here, both in (3a) and (3b) only one of the two language strings makes up a grammatical sentence that could be produced in a monolingual context, while the other string is a syntactic calque of the construction prescribed by what is often called the matrix language: ASL in (3a) and Italian in (3b).

Be that as it may, in most cases of spontaneous production, only some portions of the utterance are bimodal and congruently aligned, and only one language is fully responsible for the utterance content/word order. These partial blends are illustrated in (4), taken from Bishop (Reference Bishop2011, p. 225): (4a) is an English-dominant code-blend, while (4b) is an ASL-dominant one.

Turning to incongruent code-blends, where some mismatch occurs between the two language strings, some further distinctions need to be made, based on the empirically available cases. On the one hand, a code-blend can be semantically incongruent in one of two very different ways. First, it can be incongruent because the two language fragments express a slightly different content, as in (5).

In this interesting code-blend, observed in the spontaneous conversation of a 10 year old LIS–Italian KODA, the blend is incongruent because it contains two simultaneous sentences that have a slightly different meaning (there were no jellyfish/ I saw no jellyfish). In general, semantically incongruent code-blends of this kind are at best anecdotal according to the studies cited above. Semantic incongruence is typically more limited, with one of the two languages simply providing a piece of more specific information (Twitty/bird in (6)), but still compatible with a redundant description of the same event in the two blended languages

But code-blend can be semantically incongruent in a completely different sense, when the fragments simultaneously provided by the two languages are compositionally integrated to form a unique proposition. An example is given in (7).

This second type of semantically incongruent code-blend is slightly more frequent in existing corpora.

On the other hand, code-blends can be syntactically incongruent – hence displaying the same lexical items in the two languages but not aligned into the same word order. This can typically occur when the two languages display different word orders, as in LIS–Italian. An example is given in (8).

However, most pairs of languages involved in existing studies display similar word orders, at least as far as lexical items are concerned. So, it is possible that the predominance of syntactically congruent code-blends that is observed in those studies is in part due to the nature of the sample, which is biased in favor of congruent code-blends because the grammars of the paired languages have similar word ordersFootnote 4. In fact, works on code-blends in two syntactically distant languages like LIS and Italian found several cases of syntactically incongruent blends (Branchini & Donati, Reference Branchini and Donati2016; Donati & Branchini, Reference Donati, Branchini, Roberts and Biberauer2013). Even if Italian and LIS are rather free word order languages, the canonical word order in an out-of-the-blue context is divergent, with LIS preferentially head final (hence SOV, to say it simply: Branchini & Mantovan, Reference Branchini and Mantovan2020) and Italian consistently head initial (hence SVO). Although no quantitative measure is provided in those studies, Donati and Branchini observed a consistent production of syntactically incongruent code-blends that were semantically congruent. These data emerged in a variety of contexts, like spontaneous conversations, narrative tasks, and repetition tasks (see also Lillo-Martin et al., Reference Lillo-Martin, de Quadros and Chen Pichler2016 for a discussion). Crucially, these productions are congruent from the point of view of lexical access but misaligned with respect to the order of the constituents in the two languages. Their status should be further investigated, a point to which we shall shortly turn.

Summarizing, code-blending is very natural in the spontaneous production of bimodal bilingual CODAs, and it comes in various flavors. Blending can be full (when two complete utterances are produced simultaneously) or partial; syntactically and semantically congruent or incongruent. These distinctions are summarized in the graph below (Figure 1).

Figure 1. A schematic summary of the types of congruent and incongruent code-blendings

Going back to a more general level, as discussed by Emmorey et al. (Reference Emmorey, Borinstein, Thompson and Gollan2008), the fact that bimodal bilinguals produce more code-blending than code-switching is per se a confirmation that double selection is less costly for bilinguals than inhibition, at least at the lexical level. Otherwise, we would expect bimodal bilinguals to prefer code-switching over code-blending. Magnetoencephalography data have indeed confirmed that dominant language inhibition requires more cognitive resources than simultaneous lexical selection and production in both languages of bimodal bilinguals (Blanco-Elorrieta et al., Reference Blanco-Elorrieta, Emmorey and Pylkkänen2018).

Related to this, Emmorey et al. (Reference Emmorey, Petrich and Gollan2012) investigated the response latencies of a group of American CODAs (N = 18) and a group of hearing late learners of ASL (N = 20) in a picture-naming task where participants were asked to provide ASL signs only, English words only or ASL–English congruent blends. The authors found that blending did not slow lexical retrieval for ASL and actually facilitated access to low-frequency signs. Blending delayed speech production because bimodal bilinguals synchronized English and ASL lexical onsets.

1.2. Code-blending in hearing bimodal bilinguals: comprehension

Turning to comprehension, if dual lexical access is less costly than inhibition, we should also expect congruent blends to be processed more easily (hence quicker) than unimodal items. In the just cited study (Emmorey et al., Reference Emmorey, Petrich and Gollan2012), Emmorey and collaborators tested the comprehension of code-blended lexical items in 18 American CODAs and 25 hearing late learners of ASL. They used a semantic categorization task (Is the item edible?) and compared semantic decision latencies for ASL-English congruent lexical blends with those for ASL signs alone and audiovisual English words alone.

They did not directly compare RTs for ASL and English because possible confounding effects of language modality were present in the stimuli (i.e., visual-manual signs vs. auditory-vocal words). Thus, for each language, they compared RTs and accuracy in the unimodal condition and in the blended one. The results showed that code-blending speeded up responses with respect to both languages, clearly showing that L1/L2 simultaneous processing does not require additional cognitive resources compared to the processing of single L1 or L2 expressions.

To the best of our knowledge, no study has yet experimentally investigated the comprehension of sentential code-blends, where lexical items need not only to be accessed but also to be integrated syntactically, or compared the comprehension of syntactically congruent and incongruent blends.

2. The present study: comprehending lexical and sentential code-blendings

The first aim of the present study is to replicate Emmorey et al. (Reference Emmorey, Petrich and Gollan2012)'s original finding on the comprehension of code-blended lexical items with new populations of CODAs: LSF–French bilinguals and LIS–Italian bilinguals. We thus designed two fully parallel online experiments (LEX-F, for the French population, and LEX-I, for the Italian one) involving lexical items under three conditions: spoken language (French; Italian), sign language (LSF; LIS) and code-blending (LSF– French; LIS– Italian), asking participants to respond as soon as possible to a simple semantic categorization question (Is it edible?). The hypothesis is that if the advantage found by the original study is a consistent feature of bimodal bilingualism, we should be able to replicate the same results in two new language pairs.

But, as we have reported in the examples above, the types of code-blends that bimodal bilinguals spontaneously produce are not limited to isolated words, they can rather involve entire sentences or, at least, constituents. The second aim of this study is to test whether the advantage of blending observed in Emmorey et al. (Reference Emmorey, Petrich and Gollan2012) also holds when full sentential blends are involved.

We believe this question is of general importance for the study of bilingualism because the advantage observed by Emmorey and her group for blended lexical items can be seen as yet another reflex of language co-activation: given that lexical co-activation is costless for bilinguals, accessing two lexical entries in processing lexical blends is faster than suppressing one lexical entry in processing unimodal stimuli. But, as we mentioned earlier, most studies on language co-activation and its facility focus on pure lexical co-activation. A natural question that arises is: do bilinguals only co-activate lexical items, or do they extend this co-activation to syntactically organized items? Studying whether code-blended sentences provide a processing advantage over unimodal sentences might contribute important evidence for addressing this question. However, since processing sentential structures is notoriously more complex than single-word processing, co-activation at the syntactic level might also translate into a processing disadvantage.

As a terminological clarification, this question is meaningful if considered according to mainstream models of the syntax-lexicon interface (so-called lexicalism, stemming from Chomsky, Reference Chomsky, Jacobs and Rosenbaum1970). Within this view, syntax is feature-driven, in the sense that syntactic derivations read and obey the information that is encoded in lexical items. When bilinguals co-activate two lexical items in their two languages, do they also build two syntactic representations stemming from the features encoded in the words? Other “constructivist” models (e.g., Distributed Morphology, stemming from Halle & Marantz, Reference Halle, Marantz, Hale and Keyser1993), are even more permeated by the feature-driven approach up to the point that the building of syntactic structures is treated as a pre-requisite to lexical insertion. Under this view, the question would be: does co-activation work at the level of lexical insertion only, or does it proceed directly from features?

We designed two similar extensions of the original lexical comprehension experiment (SEN-F and SEN-I), this time including full sentences, again presented under three conditions: either spoken language (French; Italian), or sign language (LSF; LIS), or code-blending (LSF–French; LIS–Italian), asking participants to answer as soon as possible a simple truth value question (Is the sentence generally true?). Crucially, all the items were constructed in such a way that the compositional integration of the verb with each of its arguments was necessary to provide the required truth judgment. An illustration is given in (9).

In order to evaluate the truth of this sentence, whatever its condition of presentation, it is not enough to simply juxtapose the lexical meaning of the three words, but it is rather necessary to integrate them into a syntactic structure, by which ‘lions’ is the agent of the eating event, whose theme is ‘croissants’ (There is nothing wrong with the association of lions and eating, or with that of eating and croissant; still the sentences in (9) are generally false because lions do not typically eat croissant).

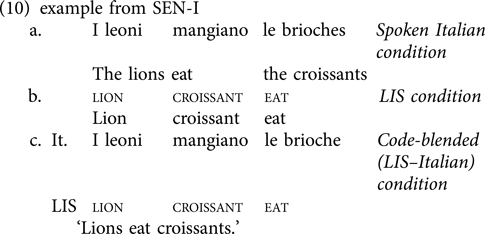

This is where the replication of the same design in two different language pairs becomes of special importance. French and LSF are both head initial languages and can exhibit the same word order being at the same time consistent with their grammar. Therefore, all the sentential code-blends used in the SEN-F experiment are syntactically and semantically congruent, as in (9). Italian and LIS are typologically opposite, and therefore the sentential code-blends of the SEN-I experiment are all syntactically (but, crucially, not semantically) incongruent. To illustrate, the equivalent of (9) in SEN-I corresponds to (10), where the blended condition (10c) is syntactically incongruent.

Here too, no matter the condition of the presentation, the only way to compute and assess the truth of the sentence is to syntactically integrate all of its components.

With this new pair of experiments, as we said, the first question we wanted to address was whether the same advantage of code-blending that Emmorey and her group found in the comprehension of single lexical items could be observed in a more complex task involving a full sentence. Concerning the impact of congruent and incongruent code-blends in this task, the hypotheses at stake are intricate because several factors may bias the outcome in various directions.

First, there might be a processing reflex of the advantage of double lexical access in the code-blend condition, which should result in a facilitation bias when the information is aligned, as already documented for categorization tasks in Emmorey et al. (Reference Emmorey, Petrich and Gollan2012), and might result in a penalization if the information is not aligned. Following this line, a facilitation effect is expected in the code-blended condition over the unimodal condition(s) in the congruent experiment (SEN-F), because lexical alignment across modality would speed processing (hence higher accuracy and shorter RTs). In the incongruent experiment (SEN-I), we expect a negative effect on the code-blended condition because lexical information coming from the two modalities is misaligned.

Second, there could be an effect induced by the temporality of access to the information needed to compute the truth value of the sentence. For example, the truth value of sentences with incongruent blends like (10c) can be computed earlier than their unimodal counterpart. In fact, the temporal alignment of ‘lion/leoni – croissant/eat’ in (10c) is already enough to determine whether the blended sentence is true or not, without waiting the end of the utterance. Such an effect is not expected in sentences with congruent blends like (9c) because the systematic alignment of each cross-modal element does not allow for an early access to the truth value of the sentence. Following this line, an advantage for the code-blended condition over the unimodal condition(s) is expected in the incongruent experiment (SEN-I) and not in the congruent experiment (SEN-F).

However, sentences are not just sequences of words/signs: they are associated with a syntactic structure, which also needs to be processed in order to perform the task of the experiments we designed. In this respect, there is a debate on the syntax of code-blended sentences displaying two syntactically congruent (cf. 2 and 9) and incongruent (cf. 8 and 10) strings. Lillo-Martin et al. (Reference Lillo-Martin, de Quadros and Chen Pichler2016) claim that blended sentences always involve only one shared structure that is lexicalized twice, either with the same linear order (as in congruent blends) or with two different ones (through two different linearization algorithms), as in incongruent blends. This proposal is based on the observation that in most production corpora the majority of code-blends are congruent, and that in many cases this happens because the word order of one language is imposed onto the blended language string, in what looks like a syntactic calque (see (3) above). Branchini and Donati (Reference Branchini and Donati2016) maintain that code-blended sentences can also be associated with two syntactic structures, one for each utterance, when both strings comply with the word order required by each language and calque is not at stake. These structures can be either similar (as in non-calque congruent blends à la LSF–French: (9)) or different (as in incongruent ones à la LIS–Italian: (10)). This alternative proposal is based on the observation that blends that involve no calque and are thus either congruent blends, like LSF–French, or syntactically incongruent, like LIS–Italian, display morphosyntactic properties that are strongly divergent from what is visible in calqued blends, and call for the activation of two distinct grammars, beyond simple linearizationFootnote 5.

According to Lillo-Martin et al.'s proposal, congruent blends have one structure which is linearized once, while incongruent blends have one structure which is linearized twice. Following this proposal, no bias is expected to favor or disfavor the blended condition over the unimodal condition(s) in the congruent experiment (SEN-F) because in all conditions there is just one structure to be processed. In the incongruent experiment (SEN-I), the expectation is that of a negative bias on the code-blended condition due to the costs of connecting two strings with different linear orders to a single structure.

According to Branchini and Donati's proposal, incongruent blends and non-calque congruent blends like the ones used in the stimuli of our experiments always involve two separate structures, therefore the expectation is that blended expressions are always penalized over non-blended expressions no matter whether congruent (SEN-F) or incongruent (SEN-I). The size of this penalization might be modulated by the divergence of the two syntactic structures to be processed. So, a larger negative bias is expected in SEN-I with respect to SEN-F.

Clearly, each factor might operate on an independent dimension, and a quantification of the strength of the biases is complicated to determine. Nonetheless, one can observe that the effect of the factors is predicted to be mostly negative for incongruent blends (penalization in the processing of the code-blended condition over the unimodal condition in SEN-I), where only early access to meaning might give a positive bias.

The picture is more differentiated for congruent blends: a processing facilitation is expected if one structure and one linearization is involved due to the positive effect of lexical alignment; while if two separate syntactic structures are involved, the positive effect of lexical alignment might be overshadowed by the extra costs imposed by syntax. Taking all these aspects into consideration, two alternative predictions can be formulated: Under one account, the combined effect of Lillo-Martin et al.'s proposal and the effects of lexical alignment should lead to facilitation in the processing of the code-blended condition over the unimodal condition in the congruent experiment (SEN-F).

Under the other account, the predictions for the congruent experiment (SEN-F) are more nuanced and crucially depend on the strength of the positive bias exerted by lexical alignment and the negative bias exerted by computing two structures (the Branchini and Donati's proposal) that are nevertheless very similar. Thus, if the positive bias and the negative bias have equal strength, the expectation is to find no difference between the bimodal and the unimodal conditions. If the positive bias is stronger than the negative bias, the expectation is to find a facilitation for the code-blended condition (in this particular case the two hypotheses make the same predictions), while if the negative bias is stronger than the positive one, the opposite prediction is expected.

3. Methods

The four experiments we conducted in this study were similar in methods and involved the same participants in each country: more precisely, the same group of participants in France took LEX-F and SEN-F, while the same group of participants in Italy took LEX-I and SEN-I. Within this experimental setup, results of the lexical experiment constitute a crucial baseline against which to interpret the sentence task results.

Due to the COVID-19 restrictions that have made the access to the peculiar population of hearing native bimodal bilinguals even more difficult, data collection was web-based. This is another reason why the replication of the original lexical experiment of Emmorey and colleagues was a necessary step before moving to the study of the effects of code-blending in sentence comprehension.

All materials are stored on the OSF repository of the present paper (osf.io/z8smh/).

3.1 Participants

Participants were recruited through flyers on social media and snowball sampling in both countries. The link with the experiment was sent via e-mail to 72 CODAs (the criteria of inclusion were to have at least one deaf signing parent and to self-define as bilingual), 40 from France (LSF-French bimodal bilinguals), and 32 from Italy (LIS-Italian bimodal bilinguals). However, several of them did not complete the experiment, therefore the final sample consisted of 25 French CODAs (20 females, mean age = 34 yrs, sd = 11yrs) and 25 Italian CODAs (17 females, mean age = 37 yrs, sd = 10 yrs)Footnote 6.

Before taking part to the experiments, participants answered a brief biographical questionnaire. As for French CODAs, 16 of them (64%) declared using French and LSF daily, 6 of them (24%) French on a daily basis and LSF several times a week, and the remaining 3 (12%) French on a daily basis and LSF several times a month. 7 French CODAs (28%) had high school diploma as their highest qualification, 5 (20%) a bachelor degree, 10 (40%) a master degree, 1 (4%) a doctoral degree, and 2 selected “Autre” (i.e., none of the proposed choices) in the questionnaire.

Considering Italian CODAs, 21 of them (84%) declared using Italian and LIS daily, whereas 2 of them (8%) declared using Italian every day and LIS several times a week, and other 2 (8%) Italian every day and LIS several times a month. 11 Italian CODAs (44%) had high school diploma as highest qualification, 8 (32%) a bachelor degree, 5 (20%) a master degree and 1 (4%) a doctoral degree.

All participants received 10€ for their participation, as an Amazon Gift Card.

The study was approved by the Ethics Committee of the Department of Psychology at the University of Milano-Bicocca. The authors assert that all procedures contributing to this work comply with the ethical standards of the relevant national and institutional committees on human experimentation and with the Helsinki Declaration of 1975, as revised in 2008.

3.2. Lexical task: materials

For the two language pairs, materials consisted of a list of 90 concrete nouns that could refer either to an edible/drinkable entity (e.g., cheese, pasta, juice; N = 45) or to a non-edible/non-drinkable entity (e.g., blanket, t-shirt, pencil; N = 45). As selection criteria, we considered only nouns for which the corresponding sign was not a compound, to maximize sign-word alignment in code-blends. Moreover, we selected nouns for which the corresponding sign does not require an obligatory oral componentFootnote 7, which would be lost in the code-blended condition. We also selected only signs that can have only one meaning when translated into the corresponding sign language. Last but not least, since lexical variation is widespread among sign languages, we selected common variants trying to avoid specific regional signs. Even if the vast majority of items between LEX-F and LEX-I corresponds to the same entity, our criteria made it impossible to have exactly the same list in the two experiments.

Two actors (a LSF-French CODA and a LIS-Italian CODA) produced the 90 target items plus 4 additional training items in three different conditions: spoken, signed, and code-blended (spoken + signed). Each CODA actor was filmed while producing the various items, maintaining a constant framing in the three modalities, with a frequency of 25 frames per second. For the spoken language condition, the two actors were instructed to keep their hands one above the other beneath their waist while pronouncing the words. The same position was used as starting and final position for each item in the sign language and in the code-blending condition. For the sign language condition, we asked our actors not to produce any mouth movement. We are aware that this is not an ecological choice, since signs are often accompanied by different kinds of movements of the mouths – however, since some of them have a direct link with spoken language, this option was the only one assuring that in the sign language condition, there wasn't any kind of spoken language input (for a thorough discussion see again footnote 7). Then, the video was edited to have one clip for each item in each condition.

The same person edited both French and Italian videos using Apple iMovie according to the following criteria: for items in the spoken condition, each clip was cut 9 frames (i.e., 360 ms) before and after the frames containing the actual sound of the word; for items in the signed and in the bimodal conditions, each clip began with the frame in which the hands began to move from the resting position and ended with the frame in which the hands were back still in the resting position.

For each language pair, the 90 target items were divided into three lists of 30 items each, 15 edible and 15 non-edible. For each language pair, the three lists were balanced for spoken language frequency, based on film subtitles corpora (for French – New et al., Reference New, Brysbaert, Veronis and Pallier2007; for Italian – Crepaldi et al., Reference Crepaldi, Amenta, Pawel, Keuleers and Brysbaert2015). Currently, there are no frequency databases for LSF nor LIS.

In their relevant language pair and for each condition, each participant received one list, with lists counterbalanced across participants, so that all items appeared in all conditions and no participant received the same item twice.

3.3. Sentence task: materials

Following the design of the lexical tasks, material for the two language pairs consisted of a list of 90 sentences that could be either generally true (e.g., Priests believe in God; N = 45) or false (e.g., Newborns drink beer; N = 45). All items were simple sentences containing a transitive or ditransitive verb and its arguments. To ensure some variability between items, some items also contained negation, and some other a modal verb.

Crucially, in the SEN-F experiment, including French and LSF sentences, word order was always the same (congruent word order: SVO) in the spoken and in the signed version of each sentence and consistent with both grammars, whereas in the SEN-I experiment, with Italian and LIS sentences, word order was always different (incongruent word order: SVO/SOV) as required by each grammar. Nouns and verbs were selected so that no sign required an obligatory mouth component, and signs displaying multiple meanings or regional variations were avoided.

To select our items, we performed several pilots, starting with one with French hearing non-signers and one with Italian hearing non-signers. They were asked to judge the truth value of a wider list of sentences presented in written French or in written Italian, respectively, and, for each country, those sentences that received an unexpected rating by more than 7% of the participants were excluded. The reduced set of French sentences was adapted into LSF and was also evaluated by two Deaf LSF signers, and the reduced set of Italian sentences was adapted into LIS and evaluated by one Deaf LIS signer. Those sentences judged as not clear by our Deaf informants were also not included as final experimental items.

The two CODA actors who produced the lexical items were also filmed producing the 90 target sentences plus 4 additional training items, in the same three conditions as in the lexical task. For each language pair, the recording setting was the same as for the lexical items and the cutting procedure was identical.

For each language pair, the 90 target items were divided into three lists of 30 items each, 15 true and 15 false, so that each list had a similar number of transitive/ditransitive sentences, modals, and negations.

In their relevant language pair and for each condition, each participant received one different list, with lists counterbalanced across participants, so that all items appeared in all conditions and no participant received the same item twice.

3.4. Procedure

Both the LSF-French and the LIS-Italian experiments were implemented on Labvanced (Finger et al., Reference Finger, Goeke, Diekamp, Standvoß and König2017) and distributed online through a web link. The structure of the experiment was the same for the two language pairs. Questions and instructions, as well as informed consent forms, were all presented in written language (hence French or Italian, respectively).

Firstly, participants were asked a number of biographical questions. Only participants who declared to have at least one deaf signing parent were allowed to undertake the experiment.

Participants either took the lexical task followed by the sentence task, or the other way around, in a counterbalanced order. Each task was preceded by specific written instructions.

The instructions of the lexical task were to decide as fast and accurately as possible whether each noun produced in the video corresponded to an entity that one can “eat or drink”. Participants responded with two keys: Q-M for French participants (AZERTY keyboard) and A-L for Italian participants (QWERTY keyboard). The location of the two keys is the same across the two types of keyboards. The match between the yes/no answer and the two answer keys varied across participants.

The instructions of the sentence task were to decide as fast and accurately as possible if the meaning of each sentence produced in the video was generally true or false. The match between the yes/no answer and the two keys was coherent with that of the lexical task.

As in the original experiment by Emmorey et al. (Reference Emmorey, Petrich and Gollan2012), within each task, participants received the three lists each in a separate block, i.e., there was a block per condition. The order of blocks was counterbalanced across participants, and each block began with 4 training items in the appropriate condition.

In both tasks, each trial began with a fixation point of 1000ms, followed by the video clip.

For both tasks, we recorded accuracy and reaction times (RTs). For items in the spoken language condition, RTs were assessed from the voice onset of the audio track (RTs-audio), calculated on the basis of the relative spectrogram visualized with PRAAT (Boersma & Weenink, Reference Boersma and Weenink2022). For items in the sign language condition, RTs were calculated from the beginning of the video clip (RTs-video). For each item in the code-blending condition, two RTs were calculated, one from the voice onset of the audio track and one from the beginning of the video clip.

3.5. Data analysis

All statistical analyses were performed using R version 4.1.2 (R Core Team, 2021). We performed a separate analysis for each language pair and for each task. Before proceeding, we checked overall participants accuracy to remove participants with an error rate higher than 20%. No participant was excluded considering this criterion. Visual inspection of the French participants’ data (Figures on OSF) clearly showed that one French participant was extremely slow in performing the task, being an outlier in almost all conditions. This participant was removed from the analysis.

Accuracy analysis was performed on all itemsFootnote 8, whereas RTs analysis considered only correct answers. For each task in each language pair, we performed two different RTs analyses. We considered RTs-audio to compare responses in the spoken language and in the code-blending conditions, whereas we considered RTs-video to compare responses in the sign language and in the code-blending condition.

RTs that were two standard deviations above or below the mean for each participant for each condition were discarded from the analysis. Considering the French experiments, 5.0% of RTs-video and 4.8% of RTs-audio were discarded in the lexical task, and 5.2% of RTs-video and 4.6% of RTs-audio in the sentence task. Considering the Italian experiment, this implied the elimination of 5.7% of RTs-video and 4.5% of RTs-audio in the lexical task and 4.7% of RTs-video and 4.9% of RTs-audio in the sentence task.

Accuracy data were analyzed with generalized mixed-effects regression (family: binomial, link function: logit; Jaeger, Reference Jaeger2008) with condition as fixed factor (treatment coding with code-blending as the reference level, to compare accuracy in the sign language condition with accuracy in the code-blending condition and accuracy in the spoken language condition with accuracy in the code-blending condition).

RT data were analyzed with generalized mixed-effects regression (family: inverse gaussian, link function: identity, Lo & Andrews, Reference Lo and Andrews2015; see also Farhy et al., Reference Farhy, Veríssimo and Clahsen2018). Condition was entered in the models as fixed factor, with code-blending coded as 0 in each analysis, and the other level (either sign language, for RTs-video or spoken language, for RTs-audio) as 1.

Both in the accuracy and in the RTs data analyses we used the maximal random effect structure justified for the design, i.e., random intercepts for participants and items and by participants and by items random slopes for the effect of condition ((condition|participant) + (condition|item)). If the model with this random structure did not converge, we simplified the random structure omitting random slopes until we reached convergence and we always avoided random structures resulting in a singular fit.

Anonymized data, as well as the R scripts to perform the analysis are stored on the OSF page of the present paper (osf.io/z8smh/).

4. Results

4.1. Lexical task

Both French and Italian CODAs were highly accurate in the spoken language and in the code-blending conditions, and less accurate in the sign language condition (Table 1).

Table 1. Overall mean accuracy for semantic categorization decisions to LSF signs, French words, and LSF–French code-blends (LEX-F) and to LIS signs, Italian words, and LIS-Italian code-blends (LEX-I).

Specifically, for French CODAs, accuracy in the sign language condition (LSF) was significantly lower than accuracy in the code-blending condition (LSF + French) (b = −1.59, z = −5.55, p < .0001), whereas accuracy between the spoken language condition (French) and the code-blending condition did not significantly differ (b = 0.05, z = 0.14, p = .8920). As for Italian CODAs, accuracy in the sign language condition (LIS) was significantly lower than accuracy in the code-blending condition (LIS + Italian) (b = −2.59, z = −2.66, p = .0078), whereas accuracy in the spoken language condition (Italian) did not significantly differ with accuracy in the code-blending condition (b = −0.16, z = −0.12, p = .9030).

As for the RTs, for French CODAs RTs-video did not significantly differ between the sign language condition and the code-blending condition (b = 12, t = 0.89, p = .37), whereas RTs-audio were significantly slower in the spoken language condition compared to the code-blending condition (French + LSF) (b = 161, t = 15.76, p < .0001) (Figure 2).

Figure 2. LEX-F: Box plots representing the distribution of by subject mean RTs-video (left) and RTs-audio (right) by condition (code-blending vs. sign language and code-blending vs. spoken language). The “+” symbol indicates the mean value by condition.

Considering Italian CODAs, RTs-video were significantly slower in the sign language condition compared to the code-blending condition (b = 240, t = 5.66, p < .0001), and RTs-audio were significantly slower in the spoken language condition compared to the code-blending condition (b = 94, t = 3.22, p = .0013) (Figure 3).

Figure 3. LEX-I: Box plots representing the distribution of by subject mean RTs-video (left) and RTs-audio (right) by condition (code-blending vs. sign language and code-blending vs. spoken language). The “+” symbol indicates the mean value by condition.

4.2. Sentence task

Accuracy in the sentence task was higher in the spoken language and in the code-blending condition than in the sign language condition both for SEN-F and SEN-I, involving French and Italian CODAs, respectively (Table 2).

Table 2. Accuracy for truth value decisions to LSF sentences, French sentences, and LSF–French sentential code-blends (SEN-F) and to LIS sentences, Italian sentences, and LIS-Italian sentential code-blends (SEN-I).

In particular, French CODAs’ accuracy in the sign language condition (LSF) was significantly lower than accuracy in the code-blending condition (LSF + French) (b = −2.11, z = −9.02, p < .0001), whereas the difference in accuracy between the spoken language condition (French) and the code-blending condition was minimal and not significant (b = −0.27, z = −1.00, p = .3160). Likewise, Italian CODAs’ accuracy in the sign language condition (LIS) was significantly lower than accuracy in the code-blending condition (LIS + Italian) (b = −1.85, z = −6.07, p < .0001), whereas accuracy in the spoken language condition (Italian) was higher than in the code-blending condition, but the difference was not significant (b = 1.66, z = 1.82, p = .0691).

Turning to RTs, in French CODAs RTs-video were significantly higher in the sign language condition (LSF) compared to the code-blending condition (LSF + French) (b = 844, t = 9.51, p < .0001), while RTs-audio did not differ between the spoken language condition (French) and the code-blending condition (French + LSF) (b = 18, t = 0.57, p = .0570) (Figure 4).

Figure 4. SEN-F: Box plots representing the distribution of by subject mean RTs-video (left) and RTs-audio (right) by condition (code-blending vs. sign language and code-blending vs. spoken language). The “+” symbol indicates the mean value by condition.

For Italian CODAs RTs-video were also significantly higher in the sign language condition (LIS) compared to the code-blending condition (LIS + Italian) (b = 704, t = 14.25, p < .0001), but RTs-audio were significantly lower in the spoken language condition (Italian) compared to the code-blending condition (Italian + LIS) (b = −157, t = −3.05, p = .0023) (Figure 5).

Figure 5. SEN-I: Box plots representing the distribution of by subject mean RTs-video (left) and RTs-audio (right) by condition (code-blending vs. sign language and code-blending vs. spoken language). The “+” symbol indicates the mean value by condition.

5. Discussion

The two lexical experiments that were performed with French and Italian CODAs substantially replicate the findings of Emmorey et al. (Reference Emmorey, Petrich and Gollan2012): in LEX-I there was always a code-blending advantage, both with respect to spoken language (Italian) and with respect to sign language (LIS), while in LEX-F the code-blending advantage was limited to code-blending vs. spoken language (French). Even if RTs for LSF–French code-blends were slightly lower than for LSF signs, there was no significant difference between the two conditions.

As for LEX-F, the advantage of code-blending over French is of primary importance, since French is the dominant language of the participants. In general, the dominance of the spoken language in CODAs is well known in the literature such that researchers argue that CODAs are a special type of heritage speakers (e.g., de Quadros, Reference de Quadros2018; Polinsky, Reference Polinsky2018). The spoken French dominance of our CODA participants is also confirmed more concretely by accuracy data, which show that participants were significantly less accurate in the sign language condition than in the spoken language or code-blending condition (Tables 1 and 2).

It is therefore reasonable to conclude that our data support the idea that double lexical access is not costly, but can rather be advantageous for bimodal bilinguals, and that processing a lexical item in two modalities beats the cost of double lexical access.

Turning to the sentence tasks, we found that blended sentences were evaluated faster than sign language sentences both in SEN-F and SEN-I. This can probably be explained by the fact that participants, being less confident in their sign language, relied more on speech when performing this more complex task. It is also possible that the lack of mouth movements in the signed videos, which were avoided on purpose in creating the signed stimuli given the obvious incompatibility with code-blending (see above, §3 including footnote 6) might have caused some extra difficulty in comprehension. This is because in spontaneous conversations most signed utterances are indeed supplemented with mouthings reproducing the equivalent words (or parts of them) in the spoken language (see e.g., Crasborn et al., Reference Crasborn, van der Kooij, Woll and Mesch2008; Vinson et al., Reference Vinson, Thompson, Skinner, Fox and Vigliocco2010; and § 3.2, note 7).

As for the crucial comparison between code-blending and spoken language the two experiments yielded divergent results.

Starting from SEN-I, we found that responses to code-blended items were significantly slower than responses to Italian-only items. This clearly suggests a cognitive cost in processing two non-congruent sentences on the fly.

In SEN-F, on the other hand, we found no significant difference between code-blending and spoken language (French). As is generally the case with null results, they are difficult to interpret. One possibility is that the experimental design was not accurate enough to detect an existing code-blend advantage with congruent sentences, a point to which we shortly come back.

With respect to our initial hypothesis, the combination of lexical alignment with either Lillo-Martin et al.'s or Branchini and Donati's proposals correctly predicts the results in the incongruent sentence experiment (SEN-I), while they diverge on the predictions for the congruent sentence experiment (SEN-F). In fact, the hypothesis including Lillo-Martin et al.'s proposal predicts a processing advantage for the blended condition over the unimodal (speech only) condition. This hypothesis is not corroborated by the experimental evidence. This in turn may receive an explanation under the hypothesis that includes Branchini and Donati's proposal. Under this second hypothesis, the lack of difference between the blend and the unimodal speech-only condition can be interpreted by assuming that the positive bias of lexical integration is balanced by the negative bias of processing two separate but similar syntactic structures.

A way to better understand the null result we found in SEN-F would be to run an analogous experiment involving a similar semantic categorization task with three signs/words utterances under the same three conditions (French, LSF, congruent code-blend), but this time involving simple lists of words, crucially not inserted into a syntactic structure. For example, we could provide lists such as Berlin, Paris, Chicago and ask participants to answer the question: Is there an intruder? As far as lexical access is concerned, these items would not differ from those of SEN-F (at least those without modals) in the code-blend condition. In this case, as in SEN-F, each lexical item would be synchronically paired twice in the code-blend condition. But this time, no syntactic structure needs to be processed in order to achieve the task. If we were to find an advantage of code-blend in this case, we would be in a better position to conclude that it is the processing of (congruent) syntactic structures that cancels the advantage associated with a double lexicalization.

A second direction for further research is to use more sensitive measures of processing mechanisms. It is a fact that experimental measures that exploit a single index of comprehension difficulty, like reaction times and accuracy, might not be reliable because slow responses may reflect slow processing, careful processing, or both (Xiang et al., Reference Xiang, Dillon, Wagers, Liu and Guo2014). Methodologies that allow to control for the tradeoff between processing speed and processing accuracy, as is the case with speed accuracy tradeoff methodology, might be the way to go.

Finally, the study of how code-blending is processed could not be complete without addressing the issue of how integrated blends are comprehended. It would be of great interest to assess with a similar experimental design whether integrated code-blends such as (11) present an advantage or not over their unimodal counterparts (12) and (13).

Remember that integrated blends like (11) are instances of incongruent blends (no alignment between redundant lexical items) where fragments belonging to the two languages are produced simultaneously and need to be integrated in a unique proposition/utterance. Since meaning is computed from structure, these are likely to involve one single syntactic structure, where items belonging to the two languages are integrated. In this case, even the proponents of the hypothesis that congruent and incongruent blends involve two syntactic structures (one corresponding to each simultaneous utterances) would posit a one and only structure, associated to the one and only proposition that is produced scattered along the two channels. If lexical misalignment is what makes incongruent code-blends difficult, we would expect these to be hard to process as well. If what makes sentential blends more difficult is the processing of two syntactic structures associated to two potentially independent simultaneous utterances/propositions, this difficulty should not hold here.

Be that as it may, we believe that studying the processing of blended utterances of various degrees of complexity, integration, and congruence might play a crucial role in answering long-standing questions about the interaction and accessibility of the two languages in bilingual individuals.

6. Conclusions

In this paper, we investigated the comprehension of bimodal bilingual utterances both at the lexical and the sentential level by conducting four on-line experiments. In the first two lexical semantic judgment experiments, we replicated a previous finding by Emmorey et al. (Reference Emmorey, Petrich and Gollan2012) with two new pairs of languages (LSF–French and LIS–Italian) – namely, that processing code-blended bimodal bilingual stimuli is not costly with respect to monolingual stimuli, but rather advantageous.

In the other two experiments, we investigated whether the same effect is also found at the sentential level. Results showed that in syntactically congruent language pairs (SEN-F, LSF–French) no difference was found between the code-blending condition and the spoken language condition, while an advantage was found for the code-blending condition over the sign language condition. In syntactically incongruent language pairs (SEN-I, LIS–Italian) a disadvantage was found for the code-blending condition over the spoken language condition, while an advantage was found over the sign language condition. These results call for further experimental studies of code-blending in sentence processing.

Data availability

The data that support the findings of this study are openly available in OSF (osf.io/z8smh/)

Acknowledgements

We thank our CODA consultants, Candy Prouhèze and Rita Sala. Moreover, we are grateful to Federica Loro and Alessandra Gentili for their help in data collection. We also thank all participants who took part in the study and those, Deaf and hearing, who took part in the various pilots we ran. We are grateful to the audience of the following conferences and seminars for their comments and suggestions: 35th Annual Conference on Human Sentence Processing (HSP 2022), Theoretical Issues in Sign Language Research (TISLR 14); Collège de France Seminar; Language Attitudes and Bi(dia)lectal Competence (LABiC 2022); Bilingualism Matters Research Symposium (BMRS 2022).

Authors’ contributions

All authors conceived and designed the study; AJ and CB prepared the experimental materials; AJ implemented the tasks; BG analysed the data; all authors discussed the results; CD and BG drafted the manuscript; CG and CB revised the drafts. All authors have approved the manuscript before submission.