The purpose of this paper is to identify the important gaps in research coverage, particularly in areas key to the National Service Framework for Mental Health (NSF-MH) (Department of Health, 1999) and the NHS Plan (Department of Health, 2000), and to translate these gaps into researchable questions, with a view to developing a potential research agenda for consideration by research funders.

Three sets of source material were subject to a series of expert assessments by the review panel: the Thematic Review conducted by our team and completed in October 2000 (Wright et al, 2000), the Scoping Review of the Effectiveness of Mental Health Services, produced by the Centre for Reviews and Dissemination at the University of York in September 2000 (Jepson et al, 2000) and the Report of the Mental Health Topic Working Group, which reported to the Clarke Research and Development Review Committee (Mental Health Topic Working Group, 1999). The focus is on adults of working age with mental health problems, and upon mental health services for adults, including their interfaces with services for substance misuse, older adults, children and adolescents, and people with learning disability. We have produced 11 recommendations designed to strengthen the research infrastructure.

Creating the research infrastructure to answer the researchable questions

Training gaps

To address shortfalls in research capacity and competence, we propose a series of recommendations for debate. Potential gains from multi-disciplinary research in mental health are not realised because of the lack of availability of suitably qualified social scientists. The UK is very weak compared to the USA and the rest of Europe in producing quantitative social scientists (Huxley, 2001; Major, 2001; Marshall, 2001). The Economic and Social Research Council (ESRC) is considering establishing special programmes and centres for the development of these skills and has recently issued new postgraduate training guidelines (ESRC, 2001; http://www.esrc.ac.uk), in the context of increasing operational and managerial integration between mental health and social care services on the ground.

Recommendation 1.

Funded opportunities should be created to stimulate the creation of a social science capacity in mental health research at pre- and postdoctoral levels.

User participation in research

Professional research careers are competitive, insecure and poorly rewarded. Nevertheless, users are already beginning to participate in the whole research process, from the generation of the ideas to the conduct and dissemination of the research.

Recommendation 2.

A review is needed of meaningful and sustainable ways in which users can directly participate in research.

Large-scale randomised controlled trials

Very few large-scale randomised controlled trials (RCTs) are funded in the mental health sector. Current plans for the national implementation of the NSF-MH and the NHS Plan lend themselves to pragmatic, cluster randomised trials.
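As a rough illustration of why cluster randomised designs call for larger samples than individually randomised ones, the sketch below applies the standard design effect, 1 + (m - 1) × ICC, where m is the average cluster size and ICC is the intracluster correlation coefficient. The function names and all numerical values (63 participants per arm, clusters of 20, an ICC of 0.05) are hypothetical and chosen only for illustration; they are not estimates drawn from the reviews.

```python
import math


def design_effect(cluster_size: float, icc: float) -> float:
    """Variance inflation from randomising clusters rather than individuals."""
    return 1.0 + (cluster_size - 1.0) * icc


def clusters_per_arm(n_individual: int, cluster_size: int, icc: float) -> int:
    """Clusters needed per arm, given the sample size per arm that an
    individually randomised trial would require (n_individual)."""
    inflated_n = n_individual * design_effect(cluster_size, icc)
    return math.ceil(inflated_n / cluster_size)


if __name__ == "__main__":
    # Hypothetical inputs: 63 per arm if individually randomised,
    # clusters (e.g. teams or localities) of 20 people, ICC of 0.05.
    print(design_effect(20, 0.05))
    print(clusters_per_arm(63, 20, 0.05))
```

Even a small ICC inflates the required sample noticeably when clusters are large, which is one reason such trials need funding on a larger scale.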

Recommendation 3.

Funding arrangements in mental health should allow justifiable large-scale RCTs to take place to provide a strong evidence base for policy and practice, and to evaluate the implementation of components of the NSF-MH and the NHS Plan.

Large-scale and national data-sets

The Department of Health has encouraged the development of the national mental health minimum data-set and routine outcome measurement within it (Health of the Nation Outcome Scales). The benefits of using large-scale and national data-sets could be very substantial, but arrangements are not in place to capitalise upon this potential.

Recommendation 4.

Scoping studies should be commissioned on the costs and benefits of using the national mental health minimum data-set for research purposes, and of incorporating mental health measures into national surveys.

Evaluation of training

Although there has been some systematic evaluation of the benefits of training in psychosocial interventions and cognitive-behavioural therapy (CBT), largely with nurses, there has been very little systematic research into the benefits of current training and education for other professional and non-professional workers in the field.

Recommendation 5.

A new stream of research funding should be initiated to evaluate the cost-effectiveness of different forms of teaching and training to produce their intended outcomes.

Evaluation of dissemination

The problems of inadequate dissemination of research results, and those of introducing evidence-based practice (Evans & Haines, 2000; Waddell, 2001), are well known. Further research into the cost-effectiveness of different dissemination strategies is required.

Recommendation 6.

Methods should be developed to allow the systematic evaluation of the effectiveness of disseminating evidence-based knowledge. Such methods should then be applied to key areas of the NSF-MH and the NHS Plan.

Evaluation of system level interventions

Most evaluation is conducted at the individual or programme level. Our ability to understand system level changes and their impacts, for example the interventions from the Commission for Health Improvement or from consultancy groups, is not well developed, and relevant multi-disciplinary research is needed.

Recommendation 7.

Commission a scoping study on the adequacy of current methods to understand the costs and effects of interventions at the whole system level.

Operationalising key concepts

Despite the importance attached in the NSF-MH and NHS Plan to key concepts such as accessibility and continuity, these are not yet clearly defined, nor do adequate measures exist that can be used for research purposes.

Recommendation 8.

Developmental work is required to establish realistic definitions and measures of key concepts, including accessibility and continuity.

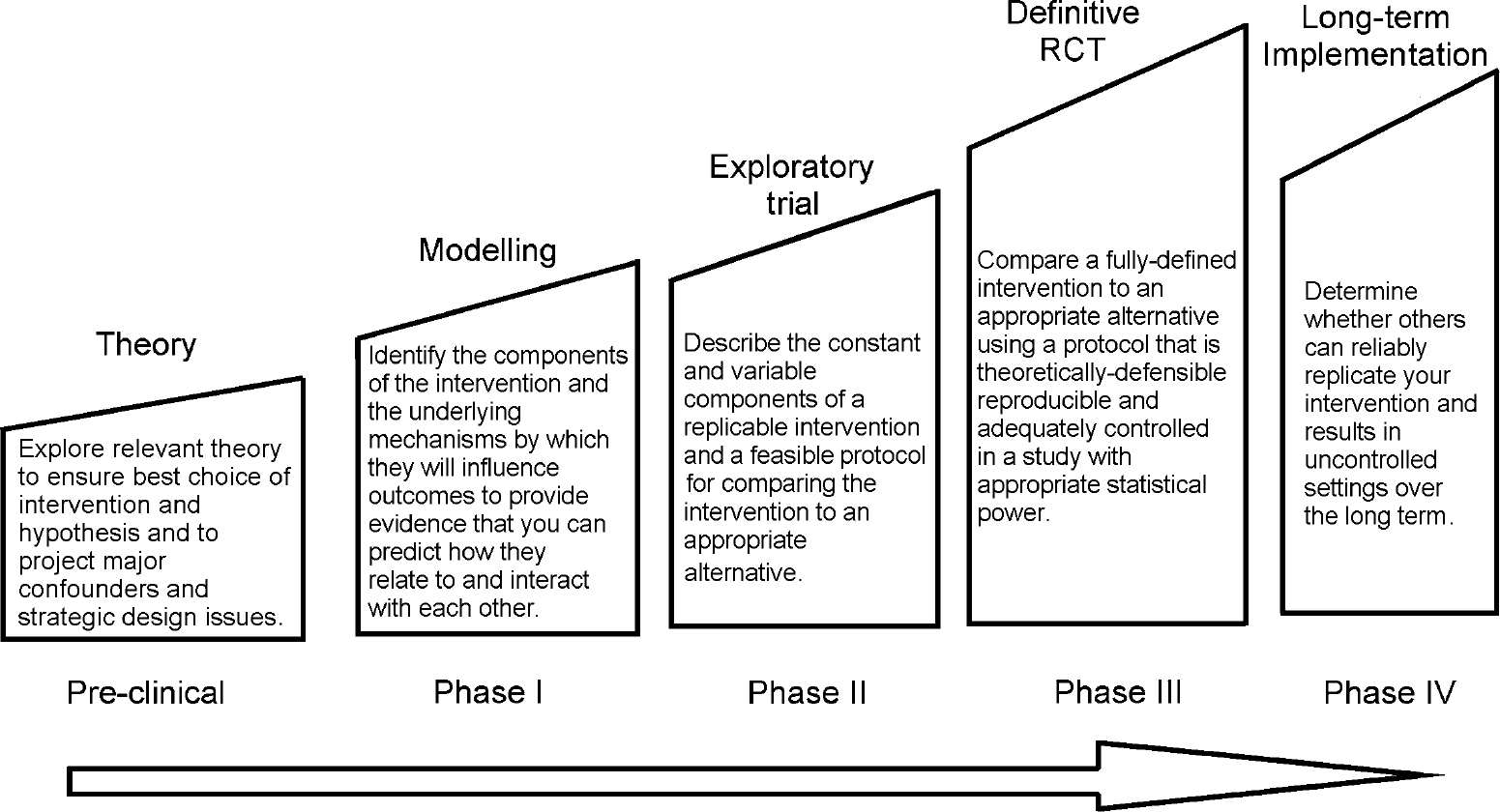

The recently published Medical Research Council (MRC) framework (Campbell et al, 2000; Fig. 1) offers a structure to the chain of events from initial idea to dissemination of proven intervention, and can be seen as a parallel to the five phases recognised in the development of pharmaceutical interventions. In terms of the research that has been conducted over the past decade in particular, several issues are notable in relation to the five stages of this framework.

Fig. 1. A structural framework for development and evaluation of randomised controlled trials (RCTs) for complex interventions to improve health. (Source: Campbell et al, 2000, BMJ, 321, 694-696.) Printed with permission from the BMJ Publishing Group.

Recommendation 9.

Where appropriate, research should address the mechanisms whereby interventions are effective.

Pre-clinical phase

The large majority of studies reviewed for this report are largely or completely atheoretical, the exceptions being mostly those that concern psychological interventions. The MRC framework encourages the development of a theoretical understanding of the processes at work in effective interventions, and we concur.

Recommendation 10.

Those developing effective interventions should also produce detailed manuals so that the intervention can be applied consistently by others elsewhere.

Phase I: manualisation

This phase allows the components of the intervention to be modelled, together with the underlying mechanisms by which they influence outcomes, to provide evidence that their relationship and interaction with each other can be predicted. In this phase, the intervention may be manualised.

Phase II: exploratory trials

These may be carried out to explore the feasibility of a new procedure before full randomisation is undertaken; to gain a rough idea of the effectiveness of a new intervention; or because a full RCT is not feasible at the time of the study. An example of the first is Lam et al's (2000) exploratory study of the feasibility of CBT; of the second, Sutherby & Szmukler's (1998) investigation of crisis cards and self-help initiatives in patients with chronic psychoses; and of the last, the adoption by Brooker and his colleagues (1994) of a ‘before and after’ design in gauging the effectiveness of training community psychiatric nurses in family interventions.

Phase III: definitive trials

We have discussed above the lack of sufficiently powered trials in most areas relevant to the NSF-MH and NHS Plan, and the trials that have been completed are reviewed in the York Scoping Review (Jepson et al, 2000). We therefore refer to our recommendation 3.

Phase IV: dissemination

This is arguably the area that needs most research input. Although there is a fairly large number of studies that include a training component, there are no studies at all that randomise a sufficiently large sample of staff to an experimental training condition or a no-training control. In order to test whether an intervention (which has previously been proven to be effective) can be effectively ‘trained’ in the workforce, trials need to be designed wherein the unit of analysis is the member of staff. There is no existing literature, so power calculations need to be based on theoretical estimates of differences in outcome between trained and non-trained staff. This calculation may be further complicated by the likelihood that there will be a considerable variation in skill uptake between different members of the training cohort, leading to a variation in the way that the intervention is delivered to the patient.
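To make the point about theoretically based power calculations concrete, the following is a minimal sketch, assuming a two-arm comparison of a continuous staff-level outcome, a two-sided test and the usual normal approximation. The function name and the standardised effect sizes are hypothetical; in the absence of existing literature, any such value would have to be a theoretical estimate. The sketch does not model variation in skill uptake between trainees, which would be expected to increase the numbers required.

```python
import math
from statistics import NormalDist


def staff_per_arm(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of staff per arm needed to detect a standardised
    mean difference (effect_size) between trained and non-trained staff,
    using n = 2 * (z_(1 - alpha/2) + z_power)^2 / effect_size^2."""
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


if __name__ == "__main__":
    # Hypothetical effect sizes: a 'medium' and a 'small' training effect.
    for d in (0.5, 0.2):
        print(f"effect size {d}: {staff_per_arm(d)} staff per arm")
```

Under these assumptions a medium effect (0.5) needs about 63 staff per arm, whereas a small effect (0.2) needs several hundred, which illustrates why the choice of theoretical estimate dominates the design.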

Recommendation 11.

Investment is necessary to produce effective training packages suitable for widespread use.

The effectiveness of the training needs to be measured against two important sets of variables. The first relates to the trainee and to the increase in his/her skill and knowledge. The second relates to the change in patient outcomes, which should include economic as well as clinical and social measures.

Studies have already been conducted to examine effectiveness, but there have been no controlled studies at all to examine the current training models (such as the Thorn Programme, the Cope Initiative from the University of Manchester and the Sainsbury Centre for Mental Health training courses). These models consume a large proportion of the mental health component of consortium budgets.

Fidelity and implementation

What naturally follows from the issue of disseminating research findings into the workplace and, hence, into the wider population of those with mental health problems, is research into the long-term maintenance of skills. Furthermore, we need to examine the implementation issues concerning the way that services may, or may not, adapt to the presence of a newly-trained worker. We have evidence from the family intervention area (Kavannagh et al, 1992) that once trained, staff may not continue to use their skills. Furthermore, although there is widespread anecdotal evidence that there are barriers to implementing new models of working, there is absolutely no robust research evidence in this regard. This area of research raises important methodological questions, notably the place for qualitative research. It seems clear that quantitative findings from trials of training need to be complemented by qualitative studies that tease out some of the important implementation issues, such as attitudes of co-workers and organisational and management issues.

Declaration of interest

None.
