1. Introduction

When I received this invitation to speak at this conference, I was very happy to accept because I like coming to France. I haven't been to France for many years, but my early childhood was spent in Jersey in the Channel Islands. So, I feel quite close to France.

Anyway, when I received the invitation back in February, I was very pleased to accept and the topic of data-driven learning (DDL) was fine for me. I was quite easily able to write the abstract that you have in your book of abstracts. But then, as time went by, I started to get a little bit concerned. I don't know if you can guess why? It wasn't because of the bedbugs we were reading about in the UK.Footnote 1 No, it was ChatGPT, which had come out a couple of months before and everyone was beginning to talk about. And it sounded like, ‘wow, this is going to totally negate all the work that I've been doing for so many years with corpora. And what am I going to say at this conference?’

I had a conversation with my son one day asking him if he was using ChatGPT in his job. And he said, ‘Oh, yeah, it's great. We do all our emails with ChatGPT.’ And I told him about the problem I had – all that research that I've been doing that he'd had to listen to me talking about with my wife Lynne over the years. ‘It's going to be redundant. I don't know what I'm going to say at the conference,’ I said. He immediately replied, ‘Well, it's easy. All you do is you talk about the beginning, and you show how we got to where we are now’, which I thought was quite a good idea. So, I'm basically going to take that idea, but I'm going to simplify it a lot. I'm going to do three case studies. The first will be a very early example of corpus-based data-driven learning, John Sinclair and his work on the Collins Cobuild Dictionary, to show how indirect DDL started. Then I'll talk about a more recent study describing a workshop in DDL that I ran some years ago with a colleague (Chen & Flowerdew, Reference Chen and Flowerdew2018) where post-graduate students were introduced to corpus tools to help them with research writing. This will be an example of direct DDL. And then I'll talk about what would happen if you conducted that workshop again, but using large language models (LLMs), for example, ChatGPT.

I'll begin with a brief definition of data-driven learning (DDL), followed by the first case study and some more general comments about the contribution of John Sinclair. Next, I'll present the second case study describing the workshop. After that, after briefly introducing the notion of LLMs and their relation to corpus tools, I'll go on to case study 3, which will apply LLMs to the research writing workshop, which I will already have described. Finally, I'll talk in more general terms about the affordances and limitations of LLMs and end the talk with a brief conclusion.

2. What is DDL?

Very briefly, data-driven learning is the application of the affordances of corpus linguistics to language learning (see Boulton & Vyatkina, Reference Boulton and Vyatkina2021; Dong et al., Reference Dong, Zhao and Buckingham2022 for recent research syntheses). This may apply in two ways, either indirectly as input to designing curriculum materials or directly as a learning tool where learners interact directly with corpus tools and corpora. I will cover both types in my case studies – indirect in case study 1 and direct in case studies 2 and 3.

3. Case study 1: John Sinclair and Collins Cobuild Dictionary

The first case study is the Collins Cobuild English Language Dictionary, published in 1987 and edited by John Sinclair (Sinclair, Reference Sinclair1987). It's still going, now in its 10th edition, and called Collins COBUILD Advanced Learner's Dictionary (Collins Cobuild, 2023). Sinclair was a leader in our field. He had revolutionary ideas about corpora and language. Rather than summarise them myself, here are some comments from a relatively recent handbook article which talks about Sinclair's dictionary (Xu, Reference Xu, Jablonkai and Csomay2022, p. 16). I separated the following as a series of quotations, but actually they are part of a single paragraph, but it is very dense and it tells us a lot about Sinclair and the Collins Cobuild English Language Dictionary and so it's good to dwell on each of the statements separately.

The Collins COBUILD English dictionary [CCD] is probably the bona fide game changer of dictionary making in the 20th century.

Corpus methodology is inherent in almost every bit of the dictionary.

For instance, the selection of head words is based on the frequency count of all English words in a 7.3-million-word corpus, initially called the main corpus, later referred to as Bank of English.

Actually, the Bank of English still exists. But it's a 450-million-word subset of the 4.5-billion-word COBUILD corpus of written and spoken English texts (Collins language lover's blog, ). So, it's moved on a lot, but it was revolutionary at the time.

The main innovation of the CCD is its phraseological description of the entry word.

For example, the typical collocation of the word brink, namely, on the brink of is in the first place embedded in the whole sentence definition ‘If you are on the brink of something, usually something important, terrible or exciting, you're just about to do it or experience it.’

This is important. Sinclair's corpus approach allows for whole-sentence dictionary definitions based on authentic language, as opposed to the fabricated examples of traditional dictionaries.

The contextualised definition is itself a condensed piece of learning material.

This is also very important. and why I cited the name Advanced Learner's Dictionary because from the very outset this dictionary was targeted at language learners, learners of English.

At the end of the entry, the colligational pattern is summarized as ‘N-SING: usu. on/to/from the N of n’. The three prepositions separated by slashes are ordered according to their probability of occurrence in the corpus.

Two example sentences in the same dictionary entry, namely, ‘Their economy is teetering on the brink of collapse’ and ‘Failure to communicate had brought the two nations to the brink of war’, were taken from the Birmingham Corpus to illustrate the characteristic uses of on the brink of and to the brink of.

The co-occurrence of brink with collapse and brink with war implies the negative semantic prosody of the entry word.

Semantic prosody (and semantic preference) were key innovations of Sinclair in linguistic theory. And as far as language teaching is concerned, authentic chunks of language (based on corpus data) are essential for learners to get to grips with the nuances of meaning conveyed by this concept.

And finally, the extended unit of meaning model. That is the phraseological framework has been systematically implemented in the CCD.

Again, this is another important linguistic insight of Sinclair's. In fact, it underlies his whole theory of language, the idea that meaning is conveyed not just by words, but by lexical items, which are not just words, but may be a single word or a group of words (Stubbs, Reference Stubbs2009).

One thing to say about this early corpus work is that Sinclair (as with the creators of LLMs) was a great believer in bigger is better. He wanted to have as much data as possible. But in those days, spoken data was very difficult to come by. And there were lots of restrictions on copyright of written data. So, you'll find in that early corpus a lot of the data was from novels which were out of copyright. So, people at the time were saying, ‘well, you know, if you want to learn how to write a novel, you can use Cobuild.’ But it didn't really give you much information about different genres. That's not the case now, because they've reorganised and redesigned the corpus, but that was the situation at the time.

So, just to summarise some of Sinclair's theoretical contributions more generally for us. His notion of the idiom principle – the idea that language is made up of more or less pre-fabricated units of meaning rather than just single open choices according to the Chomskyan view of language is very important (Erman & Warren, Reference Erman and Warren2000). Sinclair had a notion that language is primarily made up of these prefabricated chunks, which is very important for us, and, of course, for LLMs.

Moreover, Sinclair's emphasis on how the collocations of a word affect its meaning – the ‘extended unit of meaning’ that was mentioned in that citation above – is very important. You don't need to just consider a single word to understand its meaning; you need to look at the words it typically occurs with. To comprehend a word, you need to consider the company it typically keeps, to paraphrase Sinclair's teacher, Firth (Reference Firth1957), and many other subsequent linguists.

Also very important are his notions of semantic preference and prosody. Semantic preference – how words are associated with particular other words in semantic fields and certain words more likely to occur together based on their shared meanings or associations within a given context. And semantic prosody – how certain words carry not only their literal meaning but also an additional evaluative or connotative sense based on their typical patterns of collocation, the inherent evaluative or emotional colouring that words may acquire based on their frequent co-occurrence with other words in specific contexts.

And finally, I'd also mention – although this is not perhaps a corpus linguistics notion so much, but for this conference where we're talking about genres is very important – one of the defining features of a genre is its staged nature; it has a schematic structure; it goes from one stage to the next. Sinclair's work with Malcolm Coulthard (Sinclair & Coulthard, Reference Sinclair and Coulthard1975) is not talked about so much nowadays, but their study of classroom discourse was revolutionary, because they analysed the structure of discourse above the level of the sentence. Their notion of exchange structure consisting of three moves – initiation, response, follow up – the typical three-part exchange in the classroom – was very important. Most genre-based work in our field nowadays cites Swales (Reference Swales1990) and his notion of the moves in the research article. I'll talk about this shortly. It's very significant.

It's interesting that Swales and Sinclair were both working in Birmingham at the same time. I often wondered whether Swales had taken the term move from Sinclair. I did in fact ask him prior to this conference by email and he said he hadn't (Swales, personal communication 30 November 2023). But you know, they were both working at the same time in Birmingham. They were in different universities. But I think it was something in the air perhaps at the time. Certainly, the notion of larger units of discourse is very important and it can be dealt with in DDL, as we'll see later.

So, Sinclair's work on the Collins Cobuild English Dictionary was a groundbreaking application of data-driven learning, indirect data-driven learning.

4. More recent examples following Sinclair's groundbreaking work

I can give you some more recent DDL examples that apply Sinclair's ideas, so you can see his influence. The Academic Phrasebank (University of Manchester, n.d. (a)) is an open-source resource, available on- or off-line. It's a resource for academic writers and it gives you phraseological units that you can use in your research writing, organised according to the sections of the research article and broken down according to rhetorical moves.Footnote 2 It's basically organised according to the moves in Swales's research article introductions. A functionally organised text, the academic writer can use this to find relevant prefabricated chunks – extended units of meaning – if you like, to help with their writing.

Another example is the Louvain EAP Dictionary (LEAD) (Centre for English Corpus Linguistics, n.d.; Granger & Paquot, Reference Granger and Paquot2015). This is an online dictionary and, again, focuses on typical collocations. So, it's somewhat like the Academic Phrasebank, but is based on the British National Corpus (BNC) (British National Corpus, n.d.). It develops awareness of discipline-specific phraseology. You can select a particular discipline with this resource and it will give you language specific to that discipline. Again, it's based on the original ideas of Sinclair.

You are also probably all familiar with online mobile dictionaries and translation applications like Reverso (Reverso Technologies Inc., n.d.), Linguee (DeepL, Reference DeepL2023), among others. They quote concordance lines as well as the translations of words and phrases you look up. The examples look very much like those in the Cobuild Dictionary, based on key-word-in-context (kwic) concordance lines, except you get many more than in the dictionary. This allows the user to really get a feel for the various nuances in meaning. The data is based on large parallel corpora, translation memory, and/or data scraped from the internet. I use Reverso myself a lot for French and Spanish (Figure 1).

Figure 1. Reverso App showing kwic feature

In my view, these apps are the most user-friendly direct applications of DDL, as they seamlessly provide concordance lines along with possible translations of the searched words or phrases, referred to as ‘examples’ (thereby getting away from some of the linguistic jargon that might be off-putting for learners). These apps use parallel corpora, but they also use various AI tools as well.

The important point to make about all of these texts and apps in this section is that they work on the same principles developed by Sinclair, in particular the idiom principle, the extended unit of meaning, and the key-word-in-context.

5. Case study 2: A DDL workshop in research writing for postgraduate students

This is a study that I conducted with Meilin Chen, who was originally my Ph.D. student and later my collaborator (Chen & Flowerdew, Reference Chen and Flowerdew2018). The authors describe how they introduced data-driven learning to Ph.D. students for research writing purposes in a territory-wide project in Hong Kong. The main purpose of case study 2 is not to discuss that workshop, but to prepare the way for case study 3, which builds on case study 2. Secondarily, I'll take the opportunity to demonstrate an application of another LLM, Microsoft Copilot (Microsoft, n.d.), which has just become available.

As I'm going to be talking about ChatGPT and other LLMs later in case study 3, I thought I would save myself summarising my own article and get Microsoft Copilot to do the job for me. Copilot is a chatbot based on ChatGPT. One of the functions of these chatbots is that they can summarise text. Microsoft Copilot suddenly appeared on my computerFootnote 3 and so I thought I would use it. This is the summary of the article from Microsoft Copilot.

The project involved delivering 24 workshops on DDL to 473 Ph.D. students from six Hong Kong universities. The workshops aimed to introduce students to corpus tools and resources that can help them improve their research writing skills. The authors described the corpora and tools they used, the workshop outline, and the activities they implemented. They illustrated how they introduced students to BNCweb and AntConc, and how they guided students to explore lexico-grammatical and discoursal features of research writing using corpora. They summarised the students’ evaluation of the workshop, which was highly positive and enthusiastic. They also discussed the students’ suggestions for improvement and the challenges they faced in using corpora. They also explained how they provided follow-up support to students who wanted to continue practising their corpus skills.

Content-wise, this is quite an accurate summary, but it is missing one very important element of the workshop. As well as using BNCweb (BNCweb, n.d.) and AntConc, we also showed the students how to make their own corpus, their own tailor-made corpus, not mentioned by Microsoft Copilot. I think that's quite a serious criticism of the summarising function of Microsoft Copilot. Another minor point is that Microsoft Copilot didn't explain what BNCweb and AntConc are – rather important details for anyone not familiar with these applications. Just for the record, if you didn't already know it, BNCweb is the online version of the BNC and AntConc (Anthony, Reference Anthony2023) is Laurence Anthony's well-known concordancing programme.

As well as the content summary, the chatbot provided a synopsis of the feedback on the project.

The authors reported on the feedback they collected from the students through a questionnaire and interviews. They summarised the students’ evaluation of the workshop, which was highly positive and enthusiastic. They also discussed the students’ suggestions for improvement and the challenges they faced in using corpora.

The participants gave highly positive feedback on the workshops, rating them on seven statements and two open-ended questions. They found the workshops useful, friendly, high-quality, and informative. They also said they would recommend the workshops to others and use corpora in the future. The participants mentioned various aspects that they liked most, such as different corpus resources, useful expressions, hands-on activities, personal corpora, and writing conventions. They appreciated the affordances of corpora, the teacher's guidance, and the relevance to their writing problems.

The researchers interviewed three students who attended the workshops and found that they had different experiences and attitudes towards using corpora. One student was very enthusiastic and used BNCweb regularly. Another student was frustrated and switched to Google Scholar. The third student was selective and used BNCweb as a last resort. The cases showed that students’ corpus use was influenced by factors such as time, computer skills, and availability of resources.

This again is quite an accurate summary. It's worth pausing here just briefly, because I think the three student cases referred to here are quite indicative of what happens when you try to persuade students to use corpora. Some of them are very enthusiastic, others not so enthusiastic. I think it's largely to do with how easy or how difficult it is to actually use the software.

A rather misleading aspect of this part of the summary is the statement that ‘researchers interviewed three students who attended the workshops’. This strongly implies that only three students were interviewed, when in fact the three were selected from a larger sample of interviews as indicative of different attitudes. There are many reports online and in the literature of how LLMs tend to provide categoric statements that may be misleading and/or erroneous (Borji, Reference Borji2023), sometimes referred to as hallucinations. Certainly, this is an aspect of LLMs that unsuspecting students need to be aware of.

6. LLMs and corpus tools

L'éléphant dans la piece is not a French expression. It's a literal translation of the English The elephant in the room. It refers to something you are very aware of, but you are not talking about for whatever reason. Interestingly, a common example translation in Reverso for ‘elephant in the room’ is ‘éléphant dans un magasin de porcelaine’, which translates back into English as ‘bull in a China shop’, which is something completely different. So, as an aside, this highlights a problem with dictionaries based on parallel corpora – a case of garbage in, garbage out. If the data in the translated corpus is not accurate, then the concordance examples based on it will also not be accurate. I've introduced this expression because LLMs are something that we need to be talking about in this meeting. And I think that if you're talking about LLMs, young people are going to know more about them than we as teachers do. So that's another elephant in the room that we need to consider as teachers in this era of Artificial Intelligence.

LLMs are applications that allow you to type in a question or command in ordinary language, referred to as a prompt. They produce an answer in ordinary language based on a huge training language database, or corpus in our jargon. It's an advance on Google and other search engines where key words produce a list of related websites. And it's a bit different to corpus linguistics, where a search word or group of words identifies all instances of that word or group in a corpus. LLMs seem to understand the question and provide an answer. Although they're using the same predictive principle as in Google – as Sinclair showed us so insightfully in linguistics – LLMs can participate in a whole conversation in this way. Bear in mind though that they never take the initiative – the perfect partner who never answers back, if you like. And they have no memory beyond the conversational thread they are participating in. Once you turn the thread off, it stops and restarts from the beginning, with no recollection of what has gone before.

7. Case study 3: Applying LLMs to the research writing workshop corpus queries

Let's see what happens if we use these LLMs to perform some of the tasks which we gave the students in those workshops that were summarised earlier; how we could use ChatGPT in that workshop if we wanted to do that.

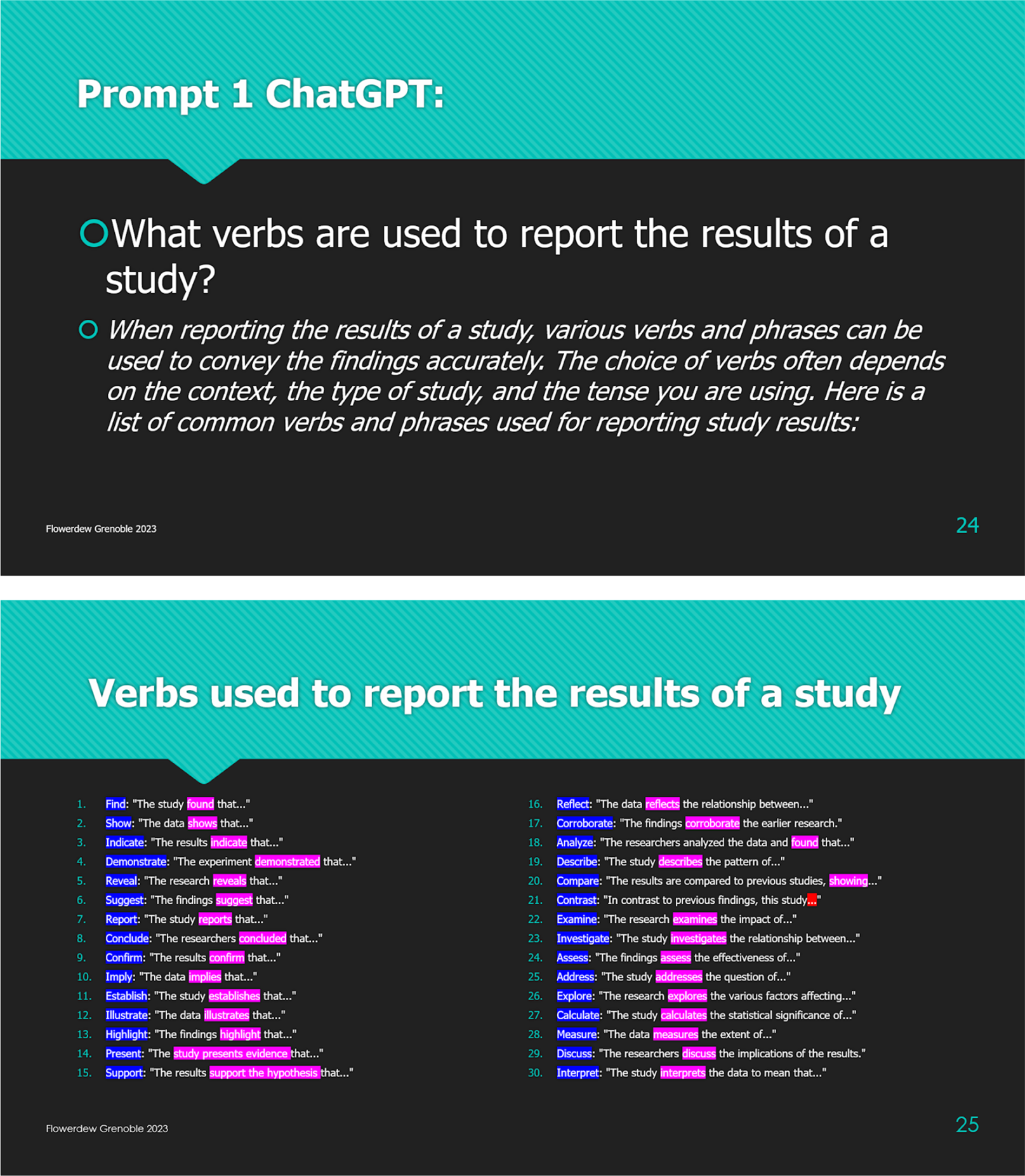

In the workshop reported as Chen and Flowerdew (Reference Chen and Flowerdew2018) and summarised here as case study 2 by Copilot, we began by brainstorming the following question with the students: What verbs are used to report the results of a study? Of course, nowadays, this could be done with ChatGPT. So, this question is also the first prompt that I gave ChatGPT in replicating the workshop. As Copilot only became available literally a few days ago, I didn't use it for this case study (except in a secondary role), instead using ChatGPT3.Footnote 4 The two slides in Figure 2 represent the result from this prompt:

Figure 2. What verbs are used to report the results of a study? (ChatGPT)

One interesting thing about ChatGPT that you can see from Figure 2 is that it gives you a little introductory paragraph, which actually I find really annoying, but you can ignore it. However, for our purposes, it's quite interesting to look at this anyway. When reporting, it tells you how to actually use the verbs. I didn't ask it to do this. I just asked it what verbs there were. But it gives you this preamble. I've actually colour-coded this somewhat and tidied it up. It doesn't look so neat and tidy in ChatGPT, but it's just to show you that it does give you a nice selection of verbs. The verbs it gives are as follows: find, show, indicate, demonstrate, reveal, suggest, report, conclude. This is very useful. It also gives you an example, or part of the sentence it's taken from, which I didn't ask it for. It seems to have a mind of its own. It seems to be saying: ‘You probably want to know how to use these. So, I'll show you that as well.’

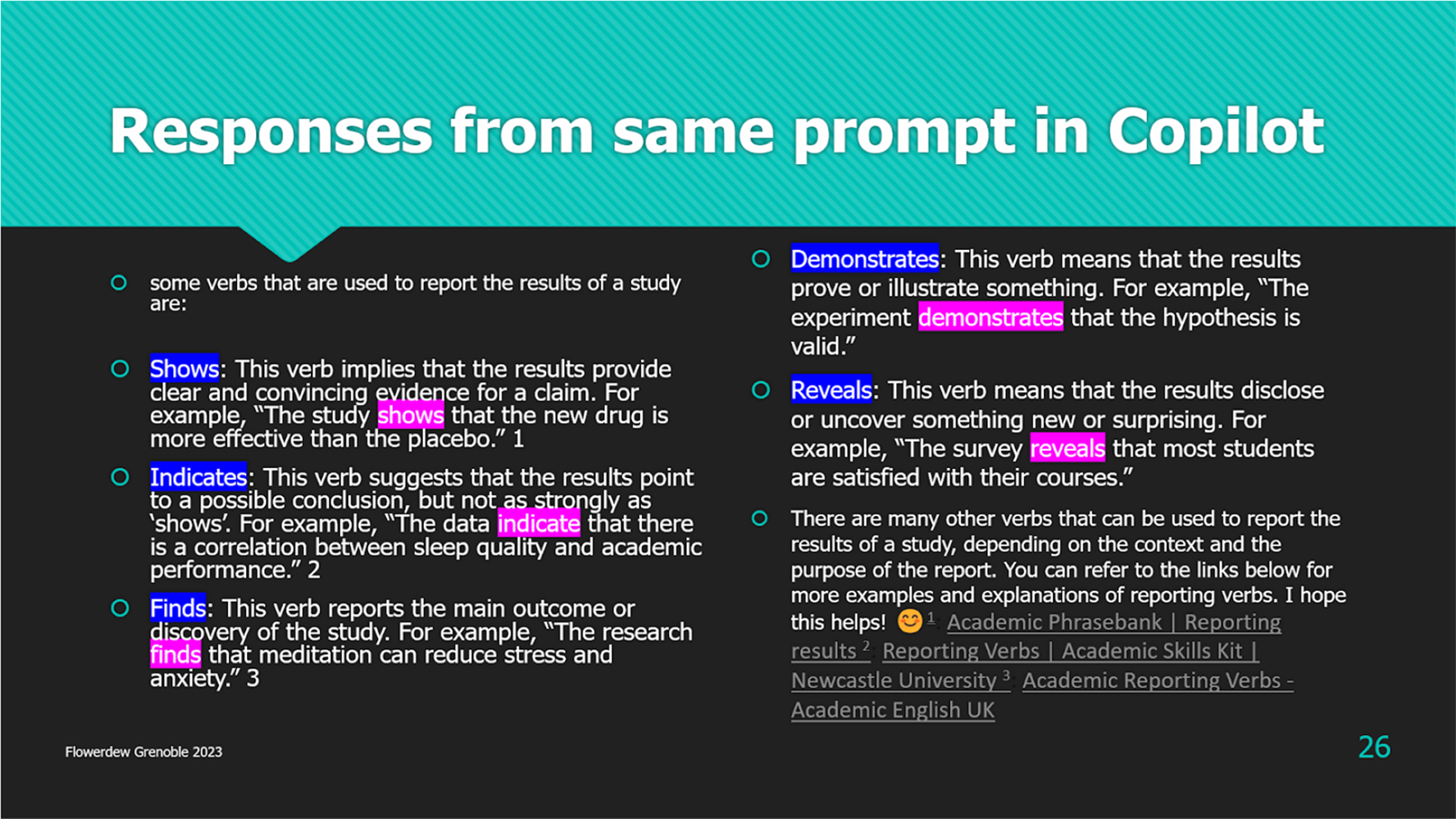

If you use Microsoft Copilot for the same prompt, the result is a bit different (Figure 3).

Figure 3. What verbs are used to report the results of a study? (Microsoft Copilot)

Again, it gives you the verbs, which I've highlighted. And it gives you examples, which I didn't ask it for. Now, one of the criticisms of LLMs is that you don't know what the data are that you get the answer from. But with Copilot, if you look at the bottom of the figure, it tells you where it's got the data (or where you can read up on the topic). So, interestingly, one of the sources is Academic Phrasebank, the resource I referred to earlier. So presumably, that book has been loaded into the training data. This highlights the fact that if you ask an LLM to tell you something, it's likely to tell you what it finds in a book or some other text. It doesn't actually explain it itself. So, for example, if you ask it for a grammatical rule, it just gives the answer from a grammar book; it doesn't actually create a rule itself (although if you manipulated the prompts enough it would probably be able to do that). So, artificial intelligence is not in fact intelligent at all, based on this data; it just regurgitates what is already in its database. (Either that or it decides that the internet will provide a better answer more easily than working out its own rule). LLMs are not able to truly understand concepts or make human-like inferences. Their responses are based purely on patterns learned from the data they were trained on (Borji, Reference Borji2023).

So here in this example (Figure 3) it's doing the same thing with Academic Phrasebank. I actually contacted John Morley, the writer of this text, and asked him what he thought about it (personal communication, 17 November 2023). He said he didn't know a lot about LLMs, but he was relaxed about his material being used, as long as it was helping people to write and that the creativity and sense of ownership that he sees with those who currently use it is not compromised. I thought that quite interesting. On the other hand, I went to a meeting in London recently with the Authors' Licensing and Collecting Society (ALCS), the society in the UK that collects royalties for writers, where there was a big debate about the ethics of writers' data being loaded onto these LLMs without permission or royalties paid. They were angry that their creative work was being used in this way. I think we will be hearing more about this issue in the coming weeks and months. There are already many court cases going on regarding this copyright issue.

As stated above, in the workshop we asked the students to brainstorm verbs to report results and then we showed them how they could search for these verbs using the corpus. Here are some examples of how the verb indicate is used if we ask ChatGPT to do this next task (Figure 4).

Figure 4. Prompt 2: Give me examples of the verb indicate in research articles

After the preamble (the first of the two slides in this figure), it gives us what looks like a concordance you might do with AntConc or some other concordancer: indicate, indicate, indicate, indicates, indicates, indicate, etc. . . . This is very nice. Of course, if you did this with a concordancer you'd have to use a wild card symbol – a symbol standing for any character – to get the different forms.

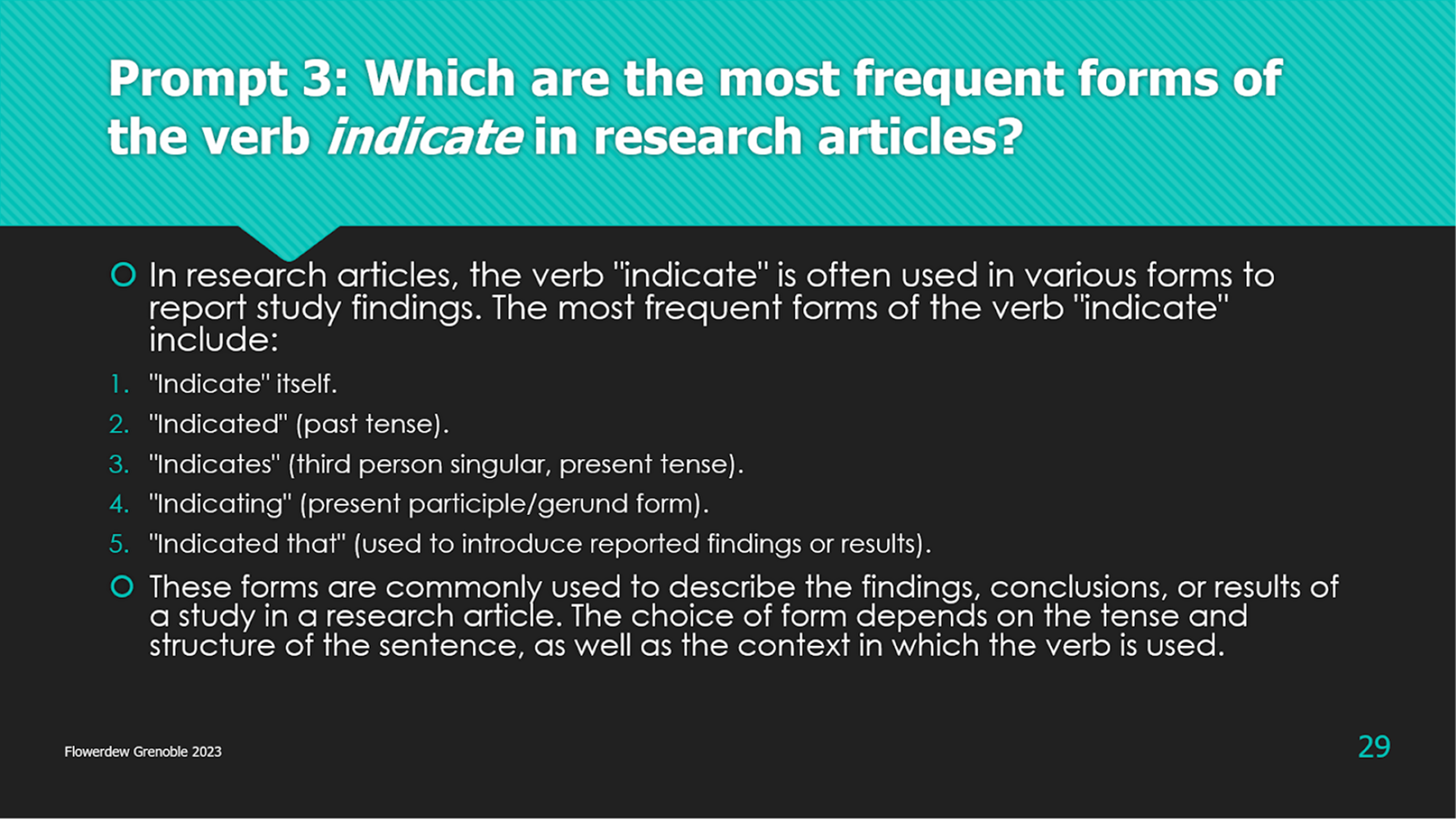

Now, prompt number three: Which are the most frequent forms of the verb indicate in research articles? In the workshop, we showed students how to search for that. You do that, of course, by getting frequency data based on the corpus you're using to compare the rate of occurrence of the different forms. ChatGPT gives you the different forms – indicate, indicates, indicated, indicating – but it gives you more information than you actually asked for again, telling you how these forms are used (Figure 5). On the other hand, it can't give you relative actual frequency data, because it's not working with a specific corpus.

Figure 5. Prompt 3: Which are the most frequent forms of the verb indicate in research articles?

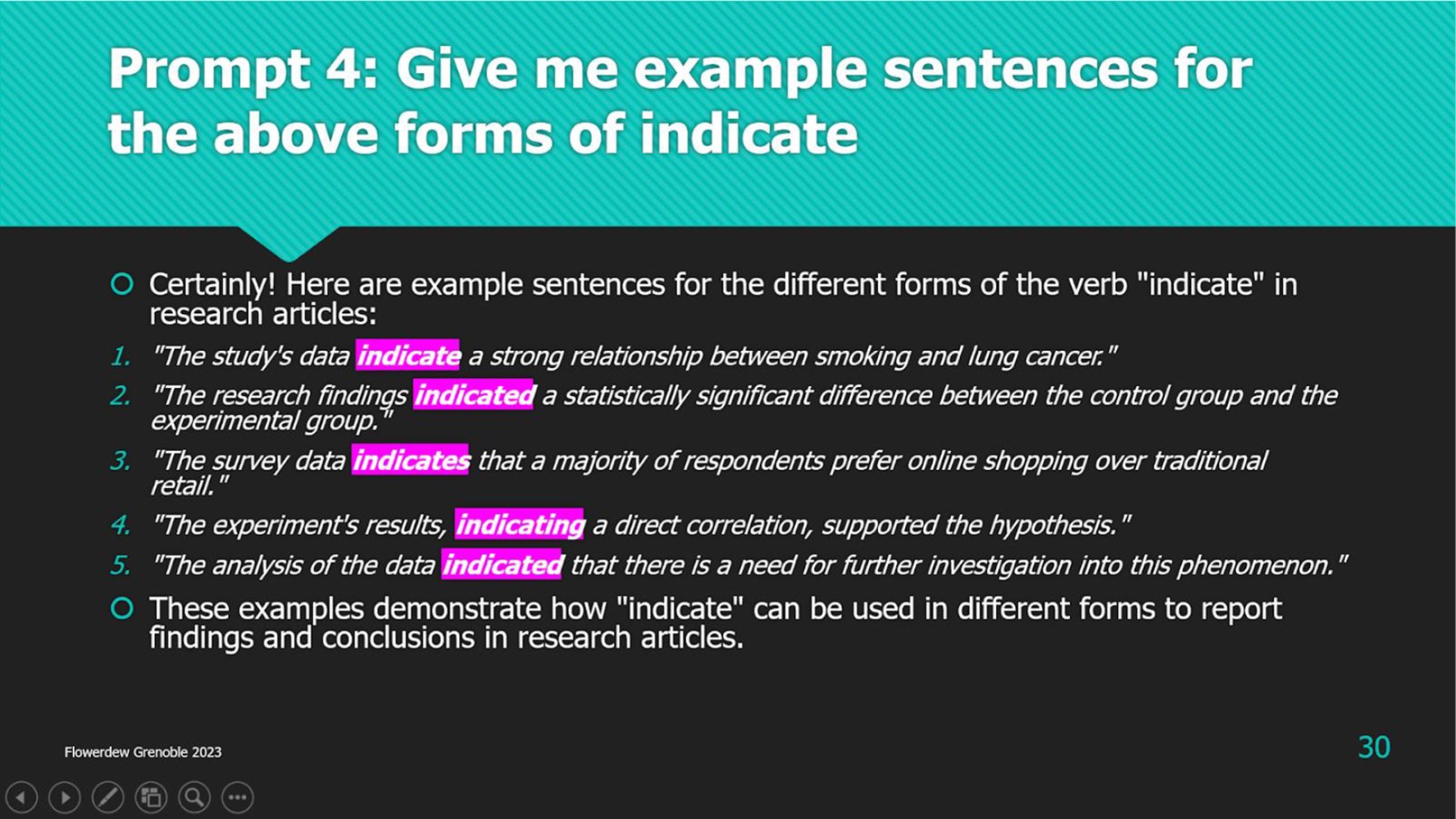

The next task is to provide examples of the above forms of indicate (Figure 6), which it does accurately, with one example each for indicate, indicates, and indicating, and two examples of indicated. Perhaps, if the prompt had been worded better, it might have provided more examples for each form.

Figure 6. Prompt 4: Give me example sentences for the above forms of indicate

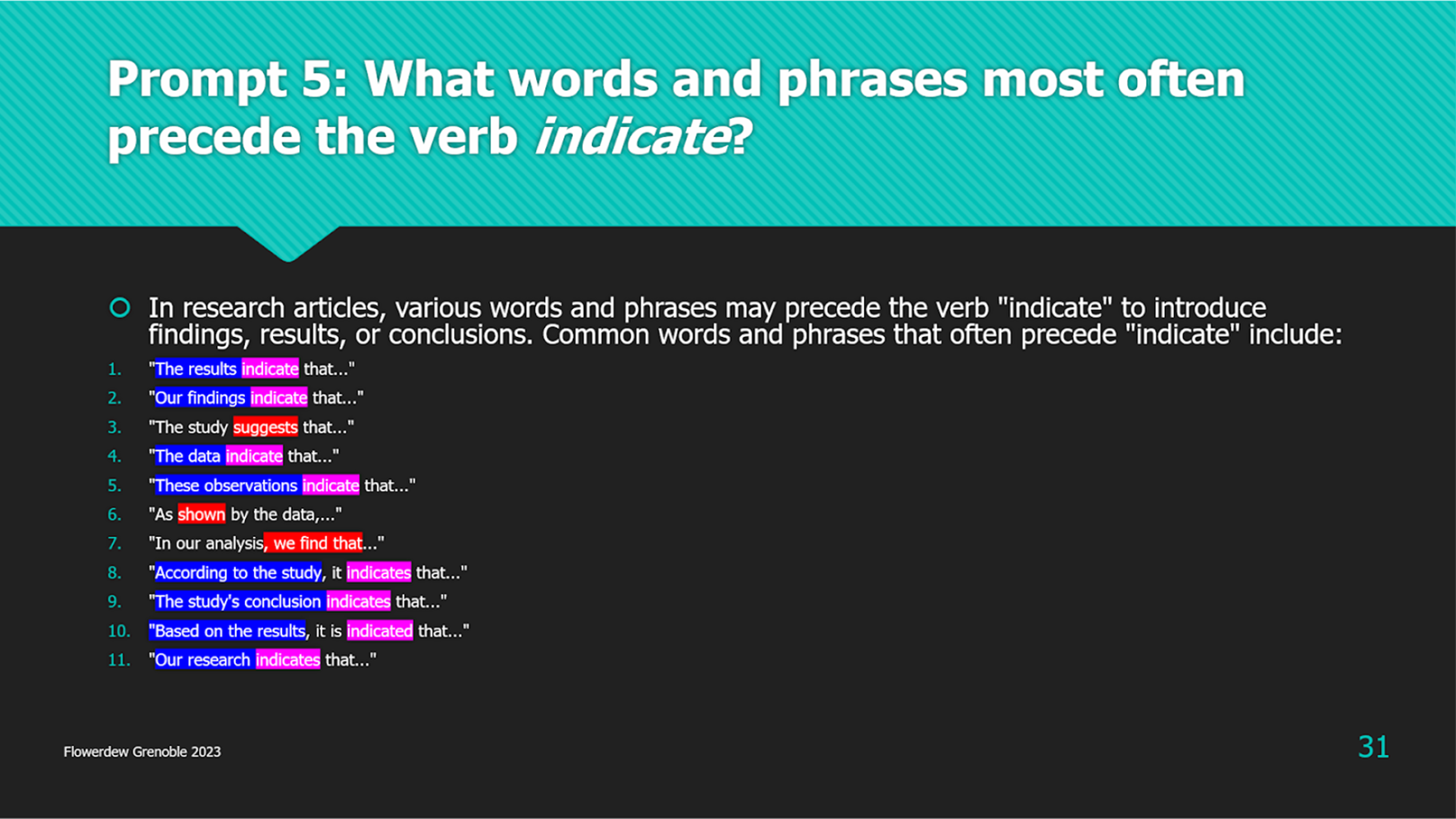

Moving on to the next prompt – What words and phrases most often precede the verb indicate? – we begin to see a bit of hallucinating (Figure 7). We see that as well as providing appropriate words – results, findings, study, data, observations, and so forth (highlighted), we also have examples in context, which is actually rather useful. However, three of the 11 examples provided (3, 6, and 7) are not with the verb indicate, but with other reporting verbs (suggests, shown, and find, respectively). Maybe it's been looking in Academic Phrasebank and/or other secondary sources and gone to the section with reporting verbs and found these examples. Furthermore, although the prompt asked for the most frequent verbs, there is no indication of relative frequency. This is again probably because it is not working on a dedicated corpus but is relying on secondary sources.

Figure 7. Prompt 5: What words and phrases most often precede the verb indicate?

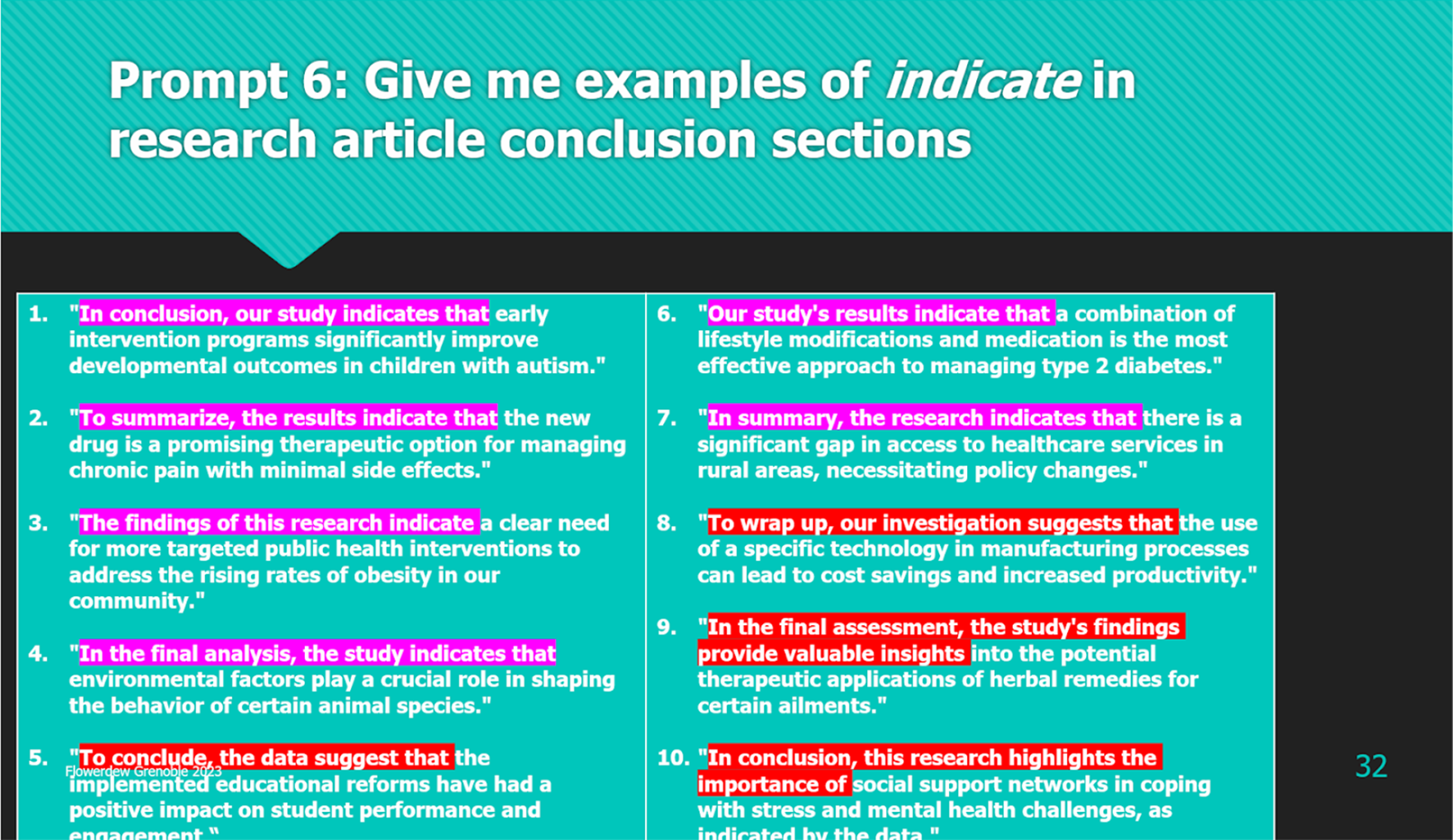

Hallucinations are again apparent with the next task/prompt – give me examples of indicate in research article conclusion sections (Figure 8).

Figure 8. Prompt 6: Give me examples of indicate in research article conclusion sections

Again, it's given us some good examples with this prompt. You can see that these examples could be used in conclusion sections – in conclusion, our study indicates that; to summarise, the results indicate; the findings of the research indicate – but if you look at those that I've highlighted (5, 8, 9, 10) – the data suggest; our investigation suggests; the study's findings provide valuable insights; this research highlights the importance of – while these are all very useful also in conclusions, they're not with the verb indicate. So, again ChatGPT has fallen down here.

In summary, for case study 3, we've seen that LLMs (mainly ChatGPT, but also Microsoft Copilot) can perform all of the tasks selected from the workshop. The findings are impressive, but there are some misleading results. No doubt, more rigorous testing in future will give a much better evaluation. You might argue that it's not fair to evaluate LLMs on criteria for DDL. Some of the other things that they can do are incredible, including in the educational field. However, when commentators like Crosthwaite and Baisa (Reference Crosthwaite and Baisa2023), whom I'll refer to shortly, are calling into question the whole future of DDL as a result of LLMs, it's a worthwhile exercise.

8. Affordances and limitations of LLMs

In this final section, let's talk a bit about the affordances and limitations of LLMs in more general terms, but still bearing DDL in mind. You may be interested in or already be familiar with these articles.

• Crosthwaite, P. & Baisa, V. (2023). Generative AI and the end of corpus-assisted data-driven learning? Not so fast. Applied Corpus Linguistics, 3(3), 100066. https://doi.org/10.1016/j.acorp.2023.100066

• Lin, P. (2023). ChatGPT: Friend or foe (to corpus linguists)? Applied Corpus Linguistics, 3(3), 100065. https://doi.org/10.1016/j.acorp.2023.100065

• Kohnke, L., Moorhouse, B.L., & Zou. D (2023). ChatGPT for language teaching and learning. RELC Journal 54(2), 537−550. https://doi.org.10.1177/00336882231162868

They've all appeared very recently, talking about GPT and data driven learning with corpora. All of them are very positive about how you might use ChatGPT, either directly in the classroom, as a direct application of DDL, or indirectly to design curriculum materials. I mean, ChatGPT can also design your lesson plans for you. Just search on the internet or use ChatGPT to find links showing you how to do it.

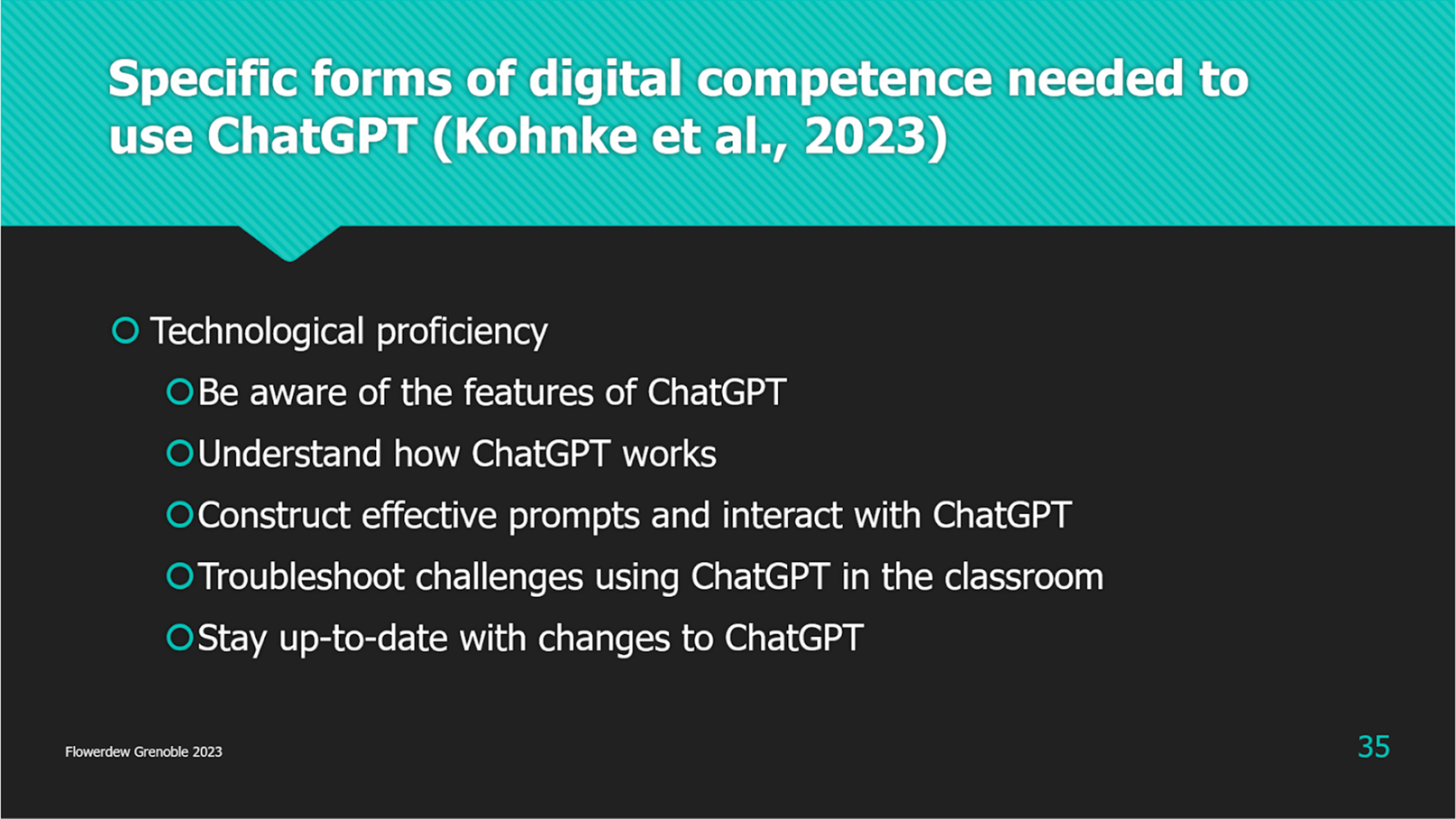

Kohnke et al. (Reference Kohnke, Moorhouse and Zou2023) talk about the technological competencies that students and teachers would need to use ChatGPT (Figure 9).

Figure 9. Specific forms of digital competence needed to use ChatGPT (Kohnke et al., Reference Kohnke, Moorhouse and Zou2023).Footnote 5

I think they were talking more about teachers, but these points can be applied to both teachers and students. Kohnke et al. (Reference Kohnke, Moorhouse and Zou2023) refer to ‘technological proficiency’ on this Figure. I think this a little misleading, because one of the great strengths of ChatGPT is that you can use ordinary language; you don't really need any special technological proficiency. Under this heading, you need to be aware of what ChatGPT can do; you need to understand how it works; you need to be able to construct effective prompts. I think that last point is crucial and there's an important role for the teacher here. You need to troubleshoot any issues that arise. When I showed you where ChatGPT gives you suggest, instead of indicate you're going to get that sort of thing turning up, referred to as, as I said, hallucination. Again, there's an important role for the teacher here. You need to stay up to date with changes. Yes, it's changing every day. What I'm telling you today is going to be out of date by tomorrow, if it's not already. None of these competencies are highly ‘technological’; except perhaps the second one understand how ChatGPT works, although even here, you only need a general understanding; they're just common sense or to do with general (first) language skills. However, I would emphasise that constructing effective prompts is the key to getting useful results (Lin, Reference Lin2023).

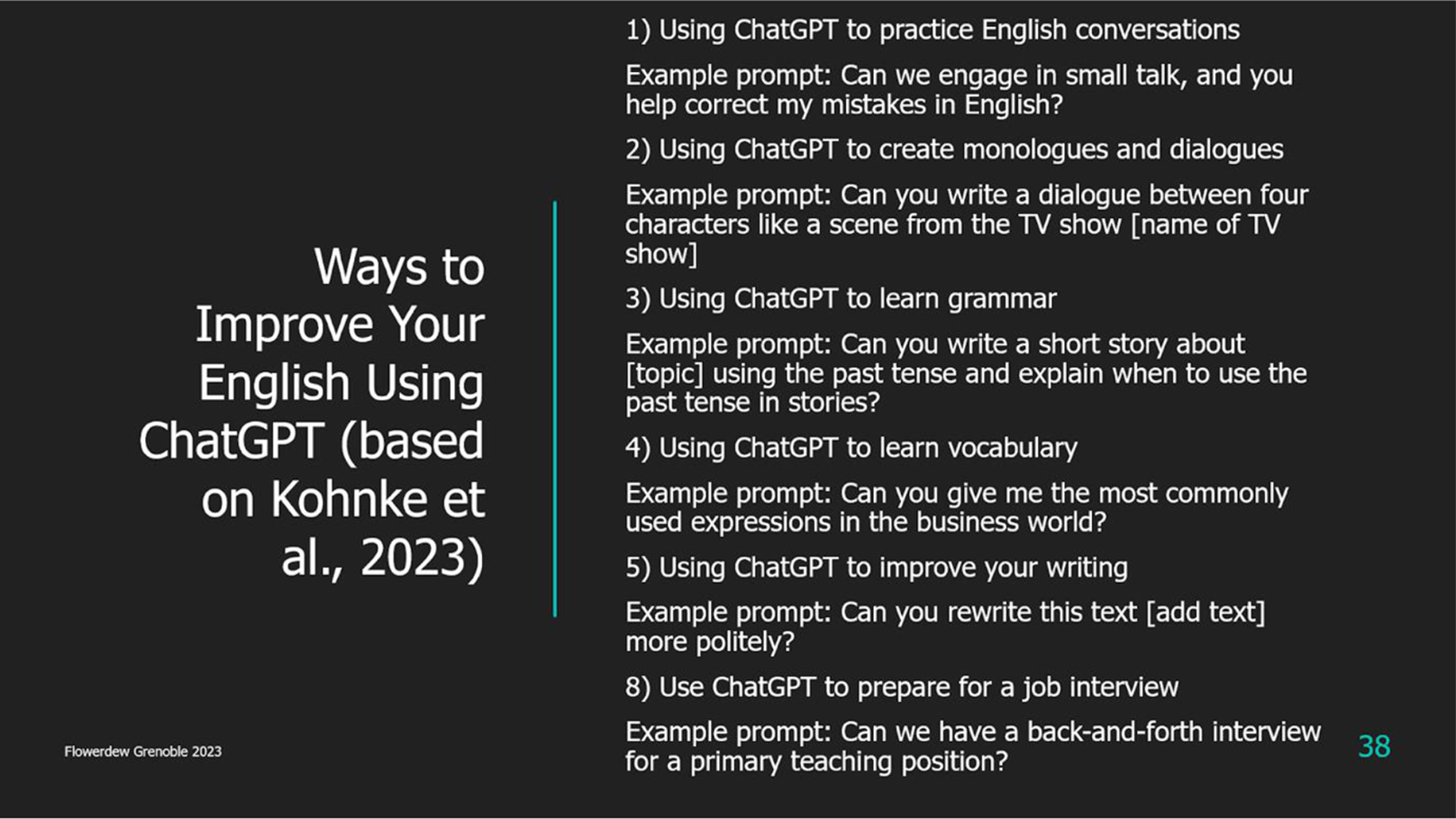

Figure 10, which is also based on Kohnke et al. (Reference Kohnke, Moorhouse and Zou2023), is a list of possible ways a student might use ChatGPT in their language learning.

Figure 10. Ways to improve your English using ChatGPT (based on Kohnke et al. (Reference Kohnke, Moorhouse and Zou2023))

You can see that these activities are not DDL as we know it. However, some of the suggested tasks could also be done with corpora. For example, number 4: [G]ive me the most commonly used expressions in the business world. You could use the frequency function on a concordancer to do this if you had a large business corpus; or number 3: [E]xplain when to use the past tense in stories. You could certainly search for past tense forms in a corpus of stories; however, the learner would have to infer how to use them from the concordance lines provided rather than be presented with any ‘rules’. But then you could also argue that such inductive tasks would have pedagogical benefits.

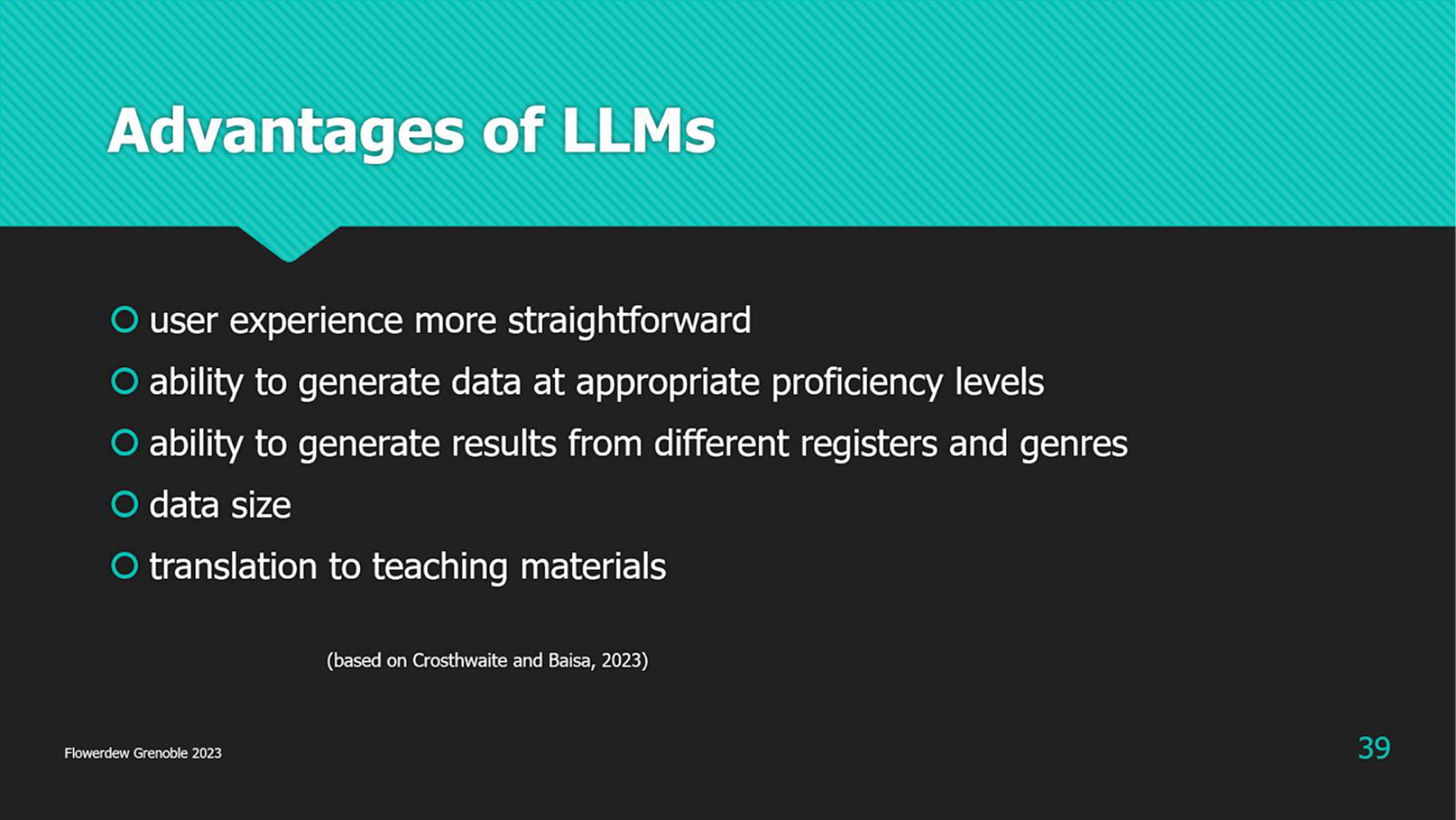

A number of generalisations can perhaps be made about the affordances and limitations of ChatGPT as a language learning tool, based on what these authors have to say. Figure 11 shows some of the advantages Crosthwaite and Baisa (Reference Crosthwaite and Baisa2023) see for ChatGPT over corpus tools.

Figure 11. Advantages of ChatGPT (based on Crosthwaite & Baisa (Reference Crosthwaite and Baisa2023))

The first is the user experience. There is no doubt that ChatGPT is very easy to use, with millions of people already exploiting it daily with no specific training. Common criticisms of DDL are that the interfaces are difficult to use and that even after learners have been taught how to use them, they don't continue when they are left to their own devices (although see Charles (Reference Charles2014) on this second point). Another drawback with DDL is that the examples provided by corpora and presented as corpus lines may be difficult for learners with limited linguistic proficiency to understand (Lin, Reference Lin2023). Given the right prompts, ChatGPT can tailor its output at an appropriate linguistic level. You can ask it to write in simple English or more sophisticated English, American English, Indian English, or even Cockney, for example. Indeed, as a default, Copilot asks you to select a conversational style – more creative, more balanced, or more precise. Furthermore, because they draw on huge databases, LLMs can produce output according to myriad styles – email, financial report, advertisement copy, and so forth (although some specialist genres that we are interested in with Languages for Specific Purposes (LSP) may not be represented (at least for the time being). Finally, Crosthwaite and Baisa (Reference Crosthwaite and Baisa2023) cite the ability to create teaching materials as an advantage of LLMs, although this is not directly an issue for DDL.

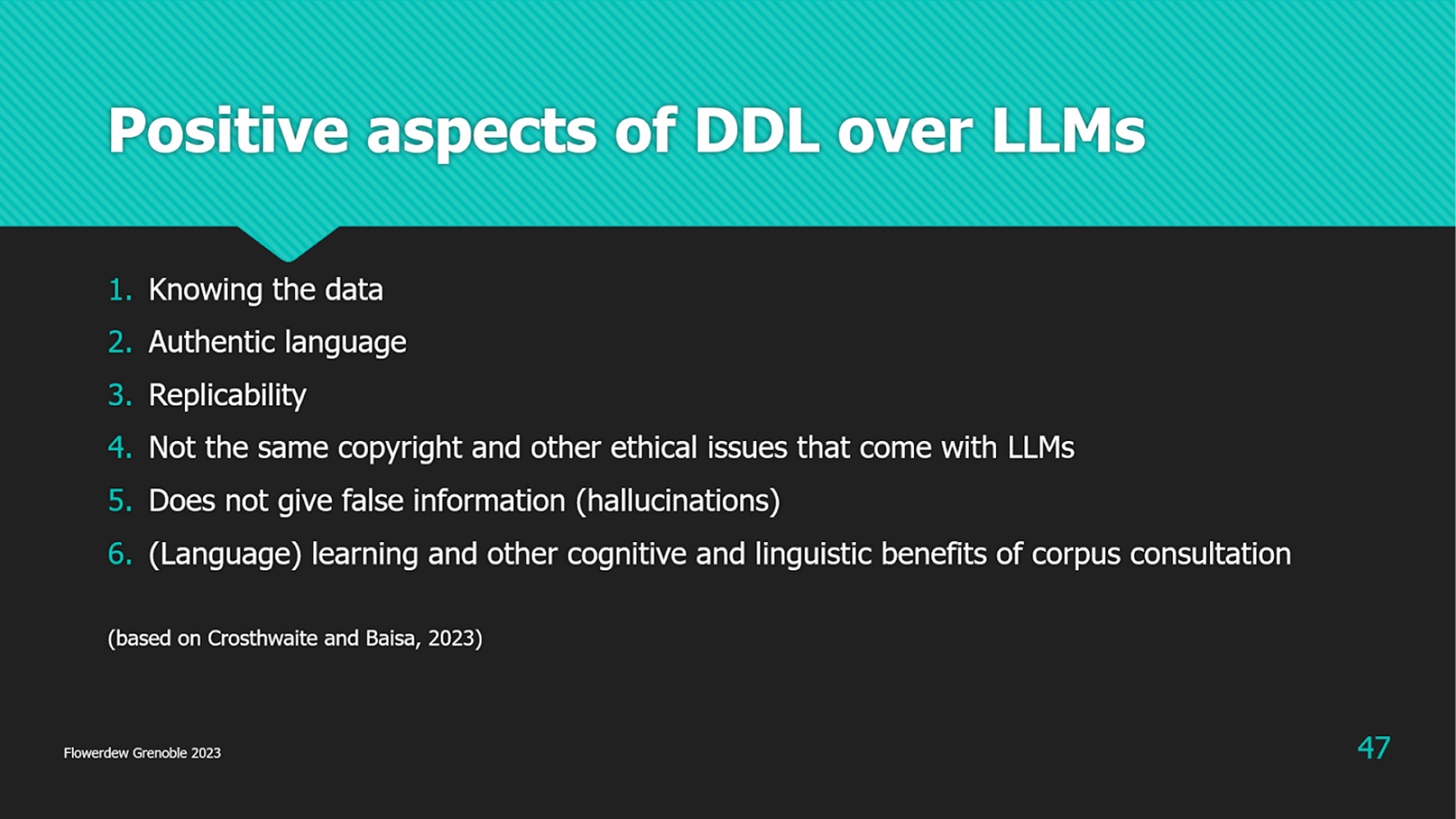

In spite of these advantages of LLMs, Crosthwaite and Baisa (Reference Crosthwaite and Baisa2023) still see a role for DDL as we know it, as shown in Figure 12.

Figure 12. Positive aspects of DDL (based on Crosthwaite & Baisa (Reference Crosthwaite and Baisa2023))

Crosthwaite and Baisa (Reference Crosthwaite and Baisa2023) argue that an advantage DDL has over LLMs is that with the former you know the data that you are working with. As already noted, the sources of the outputs produced by LLMs are unknown (or we only make guesses as to what they might be), in contrast to DDL, where we can be confident that the language that we are asking learners to work with is appropriate to our goals. Learners are also able to consult the broader context of the corpus lines they are perusing, if they so wish, by clicking on the key word in context. Furthermore, learners may build their own personalised corpora, as illustrated in Chen and Flowerdew (Reference Chen and Flowerdew2018) (see also Lee & Swales, Reference Lee and Swales2006).

Related to knowing the data, users of corpora can be sure that the language they are working with is authentic. Authentic language, indeed, was one of the original arguments in favour of using corpus-derived data in language teaching and learning in the first place (Gilmore, Reference Gilmore2007). As already noted, we are not sure where the data comes from with LLMs and, unlike with corpora, the language generated with LLMs does not correspond to actual attested use but is created according to statistical relationships. Related to this point also, corpus queries are replicable. We can do the same search and come up with the same findings, unlike with LLMs, which give a different answer even if you give it the same query, something that can be confusing to learners. Furthermore, corpora do not make mistakes, whereas LLMs are liable to hallucinate and give false information, such as when in our third case study ChatGPT gave us examples of the use of the verb indicate in conclusion sections that did not include the word indicate! Of course, with corpora, the user can make mistakes (in choosing the corpus and tool, in formulating queries, or interpreting the results) and the corpus is only as good as what it contains. But, the corpus itself does not give false information.

Finally – and this is the point that, along with Crosthwaite and Baisa (Reference Crosthwaite and Baisa2023), I want to emphasise – the procedures learners perform in DDL promote language learning in a way that LLM prompts do not. In DDL, learners discover how to search for patterns and induce meanings from many instances of use. These patterns of form and meaning can be added to the learner's linguistic repertoire. As various authors have argued, by analysing recurrent patterns, learners apply inductive processes involving noticing, language awareness, hypothesis-making and -testing, sensitivity to linguistic variation, and various data skills (e.g., Flowerdew, Reference Flowerdew, Leńko-Szymańska and Boulton2015; O'Keeffe, Reference O'Keeffe2021; Pérez-Paredes et al., Reference Pérez-Paredes, Sánchez-Tornel, Aguado-Jiménez, Sullivan, Lantz and Sullivan2019; O'Sullivan, Reference O'Sullivan2007). With LLMs, in contrast, users are provided with answers to queries, but there is no inductive learning. It is a transmission model, with the learner asking the question and the LLM providing the information. There is little cognitive work needed on the part of the learner.Footnote 6

9. Conclusion

In this talk, I've presented three case studies – an early ground-breaking indirect application of DDL with the Collins Cobuild Dictionary (along with some other examples using the same insights employed in Cobuild); a more recent direct application, with the workshop I conducted with Meilin Chen; and a replication of some of the queries from that workshop but using LLMs (mainly ChaptGPT) – and made some generalisations about DDL and LLMs. One wonders what Sinclair might have thought of ChatGPT if he had lived to see its development. As I previously mentioned, he favoured large corpora, and ChatGPT certainly uses a very large corpus indeed. Sinclair required a large corpus, however, because he wanted to corroborate linguistic patterns and meanings that he found by means of large numbers of examples. In this way, he achieved deterministic results. Having found many examples in his (large) corpus, he could be confident that he would likely find the same patterns and meanings in other linguistic data. LLMs are not deterministic like this, however; they are probabilistic rather than deterministic. You can ask them the same question many times and each time they will give you a different answer. Furthermore, as we have seen, they may provide you with false data and linguistic examples that have been made up. It is not surprising, therefore, that in his memorial paper on the contribution of John Sinclair, Stubbs (Reference Stubbs2009) tells us that Sinclair was dubious about Natural Language Processing (the precursor to LLMs).

I don't think, however, that Sinclair could have predicted the paradigm shift that we are experiencing today with LLMs. There is still a place for DDL as we know it, especially in indirect approaches, where deterministic results from linguistic analysis feed into learning materials, as with the Cobuild dictionary, but also in classroom concordancing, where, along with Tim Johns (Reference Johns, Wichmann, Fligelstone, McEnery and Knowles1997), we want every learner to be a Sherlock Holmes and (guided by the teacher) find out for themselves how the target language works and add that knowledge to their linguistic repertoire.

The answer lies in a synthesis of both approaches. In the article I cited by Lin (Reference Lin2023), she describes how she has experimented to produce prompts for ChatGPT to make it act like a concordance (although the prompts she had to produce through trial and error were complicated and their production extremely time-consuming). ‘I was particularly interested in exploring the feasibility of using ChatGPT as a concordancer,’ she states, ‘because this might allow learners and teachers to benefit from both concordancing and ChatGPT’ (p. 5). Similarly, Laurence Anthony told me in an email yesterday that he is introducing a function into his AntConc app that allows the use of ChatGPT alongside the usual concordancer functions. This is surely the way to go. Future research and practice will be required, of course, however, to show how such a synthesis can come about and what it would look like. How does one know when to use one or the other? What are the advantages of each (in terms of producing textual output and in terms of language acquisition), and how do they relate to theories of language learning?

Laurence also told me something that will make a good quote on which to close this talk:

Generally speaking, I think most people in linguistics don't really understand how LLMs work and what their potential is. So much about LLMs is a mystery, but they are incredibly powerful and will change everybody's lives. As linguists, it seems reasonable to me that we should be at least somewhere near the front line when it comes to understanding how these large LANGUAGE models are working, proposing new ways to use them, and improving them for the future. (Laurence Anthony, personal communication, 4 December 2023)

So, there you have it.

Declaration of use of generative AI and AI-assisted technologies in the preparation of this article

During the preparation of this work, the author used Microsoft Copilot in the production of the summary used in the second case study and ChatGPT and Microsoft Copilot for the production of the data used in the third case study. He also used ChatGPT and Copilot for literature search. He furthermore tried the two apps for reference production, but they were very inaccurate.

Acknowledgment

The author gratefully acknowledges discussion about LLMs with Laurence Anthony, Peter Crosthwaite, and Richard Forest prior to making this presentation. The author takes full responsibility for its content. He also gratefully acknowledges feedback from three reviewers and the editor of Language Teaching in revising the speech for publication.

John Flowerdew is a visiting professor at Lancaster University and an honorary research fellow at Birkbeck, University of London. Previously he was a professor at City University of Hong Kong and at University of Leeds. Notable more recent books are Introducing English for research publication purposes (with P. Habibie) (Routledge); Signalling nouns in discourse: A corpus-based discourse approach (with R.W. Forest) (CUP); The Routledge handbook of critical discourse studies (with J. Richardson); and Discourse in English language education (Routledge). He is active in research and publication and is regularly invited to speak at international conferences.