1. Introduction

The variable and transient radio sky encompasses a vast range of astrophysical phenomena. Notable examples include pulsars (Keane Reference Keane and van Leeuwen2013), fast radio bursts (FRBs; Lorimer et al. Reference Lorimer, Bailes, McLaughlin, Narkevic and Crawford2007; Thornton et al. Reference Thornton2013), magnetars and their potential link to FRBs (Bochenek et al. Reference Bochenek2020), rotating radio transients (RRAT McLaughlin et al. Reference McLaughlin2006), gamma-ray burst (GRB) afterglows, Jovian and solar bursts, flare stars, cataclysmic variables (CVs), exoplanet and stellar outbursts (Zhang et al. Reference Zhang2023), X-ray binaries, novae, supernovae, active galactic nuclei (AGNs), blazars, tidal disruption events, counterparts to gravitational wave (GW) events, and several phenomena yet to be classified (Thyagarajan et al. Reference Thyagarajan, Helfand, White and Becker2011). Their timescales span orders of magnitude, from nanoseconds to days, across wide frequency ranges and polarisation states (Pietka, Fender, & Keane Reference Pietka, Fender and Keane2015; Chandra et al. Reference Chandra2016; Nimmo et al. Reference Nimmo2022, and references therein). For example, pulsars and their giant radio pulses can operate on timescales from milliseconds to seconds, with millisecond pulsars on milliseconds to tens of milliseconds, while FRBs are active on sub-millisecond to tens of millisecond timescales (Crawford et al. Reference Crawford2022; Gupta et al. Reference Gupta2022; Snelders et al. Reference Snelders2023). Extremely energetic and ultra-fast phenomena, such as sub-millisecond pulsars and magnetars (Du et al. Reference Du, Xu, Qiao and Han2009; Haskell et al. Reference Haskell2018), and nanosecond-scale giant pulses from the Crab pulsar (Hankins et al. Reference Hankins, Kern, Weatherall and Eilek2003; Hankins & Eilek Reference Hankins and Eilek2007; Eilek & Hankins Reference Eilek and Hankins2016; Philippov et al. Reference Philippov, Uzdensky, Spitkovsky and Cerutti2019), can reveal the fundamental nature of matter and exotic physics. The effectiveness of discovering these transients depends on the instrument’s field of view and the duration of on-sky observation (Cordes Reference Cordes2007), ideally requiring continuous real-time operation over a large instantaneous field of view to capture phenomena ranging from microseconds to days.

Another significant goal of modern radio astronomy is the exploration of structure formation and constraining of cosmological parameters in the early Universe using the redshifted 21 cm line of neutral Hydrogen as a tracer of large-scale structures (Morales & Wyithe Reference Morales and Wyithe2010; Pritchard & Loeb Reference Pritchard and Loeb2012, and references therein). One prominent example includes probing the Cosmic Dawn and the Epoch of Reionisation signifying the appearance of the first luminous objects in the Universe and their impact on structure formation in the Universe at

![]() $z\gtrsim 6$

. Another example is to probe the Dark Energy and acceleration of the Universe at

$z\gtrsim 6$

. Another example is to probe the Dark Energy and acceleration of the Universe at

![]() $1\lesssim z\lesssim 4$

using intensity mapping of redshifted 21 cm from Hi bound to galaxies (Cosmic Visions 21 cm Collaboration et al. 2018). Such cosmological radio observations probing large-scale structures through spatial power spectrum or imaging require interferometer arrays of large fields of view (hundreds to thousands of square degrees), dense sampling of the large-scale spatial modes (tens of kpc to tens of Mpc), and high sensitivity (

$1\lesssim z\lesssim 4$

using intensity mapping of redshifted 21 cm from Hi bound to galaxies (Cosmic Visions 21 cm Collaboration et al. 2018). Such cosmological radio observations probing large-scale structures through spatial power spectrum or imaging require interferometer arrays of large fields of view (hundreds to thousands of square degrees), dense sampling of the large-scale spatial modes (tens of kpc to tens of Mpc), and high sensitivity (

![]() $\lesssim1$

mK noise levels).

$\lesssim1$

mK noise levels).

Based on the requirements for transients and observational cosmology, radio interferometers are witnessing a paradigm shift towards packing a large number of relatively small-sized collecting elements densely in a compact region. Modern radio arrays tend to be aperture arrays that operate interferometrically and hierarchically on multiple spatial scales, involving spatial dimensions of the collecting element, a spatial grouping of elements forming a virtual telescope typically referred to as a station or a tile, and a spatial grouping of stations comprising the full array. Examples of multi-scale, hierarchical aperture arrays include the Murchison Widefield Array (MWA; Tingay et al. Reference Tingay2013), the Hydrogen Epoch of Reionization Array (HERA; DeBoer et al. Reference DeBoer2017), the Low Frequency Array (LOFAR; van Haarlem et al. Reference van Haarlem2013), the swarm of Long Wavelength Arrays (LWA Swarm; Dowell & Taylor Reference Dowell and Taylor2018), the Hydrogen Intensity and Real-time Analysis Experiment (HIRAX; Crichton et al. Reference Crichton2022), and the SKA-low (Dewdney et al. Reference Dewdney, Hall, Schilizzi and Lazio2009; Braun et al. Reference Braun, Bonaldi, Bourke, Keane and Wagg2019).

This new paradigm poses severe computational and data rate challenges necessitated by the processing of large numbers of data streams in short cadence intervals. The choice of data processing architecture is usually sensitive to the array layout, cadence, angular resolution, and field-of-view coverage. For example, a correlator-based architecture is generally more suited for relatively smaller number of interferometer elements sparsely distributed over a large area and processing on a slower cadence, whereas a direct imaging architecture based on the fast Fourier transform (FFT) has far greater efficiency for regularly arranged elements (Daishido et al. Reference Daishido, Cornwell and Perley1991; Otobe et al. Reference Otobe1994; Tegmark & Zaldarriaga Reference Tegmark and Zaldarriaga2009; Tegmark & Zaldarriaga Reference Tegmark and Zaldarriaga2010; Foster et al. Reference Foster, Hickish, Magro, Price and Zarb Adami2014; Masui et al. Reference Masui2019). A generalised version of FFT-based direct imaging like the E-field Parallel Imaging ‘Correlator’ (EPIC; Thyagarajan et al. Reference Thyagarajan, Beardsley, Bowman and Morales2017; Thyagarajan et al. Reference Thyagarajan2019; Krishnan et al. Reference Krishnan2023) is expected to be highly efficient for large-N, densely packed arrays with or without regularly placed elements from computational and data rate perspectives. Pre- or post-correlation beamforming is expected to be efficient when the elements and the image locations are few in number. Thus, the choice of an optimal imaging architecture is critical for maximising the discovery potential. If the chosen architecture is inadequate, one or more discovery parameters such as time resolution, on-sky observing time, bandwidth, field of view, or angular resolution must be curtailed to obtain a compromised optimum (for example, Price Reference Price2024). For multi-scale arrays that involve hierarchical data processing, a single architecture may not be optimal on all scales. A hybrid architecture that is optimal at various levels of data processing hierarchy is required.

In this paper, the primary motivation is to explore different imaging architectures and their combinations appropriate for aperture arrays spanning a multi-dimensional space of array layout parameters and cadence intervals from a computational viewpoint. Conversely, the paper also provides a guide to choosing the array layout parameters and cadence intervals where a given architecture, or combinations thereof, will remain computationally efficient. Several upcoming and hypothetical multi-scale aperture arrays are used as examples.

The paper is organised as follows. Section 2 describes the parametrisation of multi-scale aperture arrays studied. Section 3 describes the metric of computational cost density in discovery phase space based on which different imaging architectures described in Section 4 are compared. The multi-scale, intra- and inter-station imaging architecture options are described in Sections 5 and 6, respectively. Section 7 contains a discussion and summary of the results, and conclusions are presented in Section 8.

2. Multi-scale, hierarchical aperture arrays

The hierarchical, multi-scale aperture array scenario considered involves spatial scales corresponding to the dimensions of the collecting element, station (a grouping of elements), and the array (a grouping of stations), denoted by

![]() $D_\textrm{e}$

,

$D_\textrm{e}$

,

![]() $D_\textrm{s}$

, and

$D_\textrm{s}$

, and

![]() $D_\textrm{A}$

, respectively. Fig. 1 illustrates the geometry and notation for hierarchical multi-scale aperture arrays studied in this paper.

$D_\textrm{A}$

, respectively. Fig. 1 illustrates the geometry and notation for hierarchical multi-scale aperture arrays studied in this paper.

Figure 1. Geometric view of a hierarchical multi-scale aperture array, which consists of

![]() $N_\textrm{s}$

stations, each a collection of

$N_\textrm{s}$

stations, each a collection of

![]() $N_\textrm{eps}$

elements. Stations, m and n, at locations,

$N_\textrm{eps}$

elements. Stations, m and n, at locations,

![]() $\boldsymbol{r}_m$

and

$\boldsymbol{r}_m$

and

![]() $\boldsymbol{r}_n$

, respectively, relative to the origin,

$\boldsymbol{r}_n$

, respectively, relative to the origin,

![]() $\mathcal{O}$

, are separated by

$\mathcal{O}$

, are separated by

![]() $\Delta\boldsymbol{r}_{mn}$

. Elements, a and b, in stations, m and n, are denoted by

$\Delta\boldsymbol{r}_{mn}$

. Elements, a and b, in stations, m and n, are denoted by

![]() $a_m$

and

$a_m$

and

![]() $b_n$

, located at

$b_n$

, located at

![]() $\boldsymbol{r}_{a_m}$

and

$\boldsymbol{r}_{a_m}$

and

![]() $\boldsymbol{r}_{b_n}$

, respectively.

$\boldsymbol{r}_{b_n}$

, respectively.

![]() $\Delta\boldsymbol{r}_{a_m b_n}$

is the separation between these elements. The spatial sizes of the element, station, and the array in the aperture plane are denoted by

$\Delta\boldsymbol{r}_{a_m b_n}$

is the separation between these elements. The spatial sizes of the element, station, and the array in the aperture plane are denoted by

![]() $D_\textrm{e}$

,

$D_\textrm{e}$

,

![]() $D_\textrm{s}$

, and

$D_\textrm{s}$

, and

![]() $D_\textrm{A}$

, respectively. The solid angle,

$D_\textrm{A}$

, respectively. The solid angle,

![]() $\Omega_\textrm{e}\sim (\lambda/D_\textrm{e})^2$

, corresponding to the element size determines the overall field of view in the sky plane accessible by the aperture array. Intra-station architectures produce station-sized virtual telescopes that simultaneously produce beams of solid angle,

$\Omega_\textrm{e}\sim (\lambda/D_\textrm{e})^2$

, corresponding to the element size determines the overall field of view in the sky plane accessible by the aperture array. Intra-station architectures produce station-sized virtual telescopes that simultaneously produce beams of solid angle,

![]() $\Omega_\textrm{s}\sim (\lambda/D_\textrm{s})^2$

, in multiple directions,

$\Omega_\textrm{s}\sim (\lambda/D_\textrm{s})^2$

, in multiple directions,

![]() $\hat{\boldsymbol{s}}_k$

, that can fill

$\hat{\boldsymbol{s}}_k$

, that can fill

![]() $\Omega_\textrm{e}$

. Inter-station processing towards any of these beams produces virtual telescopes of size,

$\Omega_\textrm{e}$

. Inter-station processing towards any of these beams produces virtual telescopes of size,

![]() $D_\textrm{A}$

, that simultaneously produce beams of solid angle,

$D_\textrm{A}$

, that simultaneously produce beams of solid angle,

![]() $\Omega_\textrm{A}\sim (\lambda/D_\textrm{A})^2$

, in multiple directions,

$\Omega_\textrm{A}\sim (\lambda/D_\textrm{A})^2$

, in multiple directions,

![]() $\boldsymbol{\sigma}_\ell$

, relative to

$\boldsymbol{\sigma}_\ell$

, relative to

![]() $\hat{\boldsymbol{s}}_k$

, that can fill the solid angle of that beam.

$\hat{\boldsymbol{s}}_k$

, that can fill the solid angle of that beam.

The number of stations in the array and the number of elements per station (indexed by m) are denoted by

![]() $N_\textrm{s}$

and

$N_\textrm{s}$

and

![]() $N_{\textrm{eps}_m}$

, respectively. The number of elements in all stations are assumed to be equal,

$N_{\textrm{eps}_m}$

, respectively. The number of elements in all stations are assumed to be equal,

![]() $N_{\textrm{eps}_m} = N_\textrm{eps}$

, for convenience. Without loss of generality, this study can be extended to arrays with heterogeneous station densities and layouts such as LOFAR, but the idiosyncrasies of the array will have to be taken into account.

$N_{\textrm{eps}_m} = N_\textrm{eps}$

, for convenience. Without loss of generality, this study can be extended to arrays with heterogeneous station densities and layouts such as LOFAR, but the idiosyncrasies of the array will have to be taken into account.

Table 1. Table of hierarchical, multi-scale interferometer array parameters.

aNominal operating wavelength of the array. Results may differ at other wavelengths depending on aperture efficiency, filling factor, etc.

bArray filling factor,

![]() $\,f_\textrm{A}=N_\textrm{s}\,(D_\textrm{s}/D_\textrm{A})^2$

.

$\,f_\textrm{A}=N_\textrm{s}\,(D_\textrm{s}/D_\textrm{A})^2$

.

cStation filling factor,

![]() $\,f_\textrm{s}=N_\textrm{eps}\,(D_\textrm{e}/D_\textrm{s})^2$

.

$\,f_\textrm{s}=N_\textrm{eps}\,(D_\textrm{e}/D_\textrm{s})^2$

.

dOnly the 6 km core of the proposed array (Polidan et al. Reference Polidan2024) is considered here.

The specific aperture array examples considered are the SKA-low, the core of the SKA-low (SKA-low-core), the first phase of the concept of a long baseline extension to SKA-low called Low-frequency Australian Megametre Baseline Demonstrator Array (LAMBDA-I Footnote a ), the Compact All-Sky Phased Array (CASPA Footnote b ; Luo et al. Reference Luo2024), and the core of a lunar array (FarView; Polidan et al. Reference Polidan2024) which is hereafter referred to as FarView-core. LAMBDA-I, CASPA, and FarView-core are hypothetical interferometers that are still in concept phase.

The parameters for the chosen examples are summarised in Table 1. Nominal wavelengths,

![]() $\lambda$

, at which the arrays are planned to operate are listed. The computational costs are typically independent of the wavelength and only depend on the spatial frequencies as shown in Table 1. However, the aperture efficiencies can, in general, vary with wavelength. For a fair comparison, the apertures are all assumed to be 100% efficient at the nominal wavelengths chosen, and that the arrays are used for real-time imaging.

$\lambda$

, at which the arrays are planned to operate are listed. The computational costs are typically independent of the wavelength and only depend on the spatial frequencies as shown in Table 1. However, the aperture efficiencies can, in general, vary with wavelength. For a fair comparison, the apertures are all assumed to be 100% efficient at the nominal wavelengths chosen, and that the arrays are used for real-time imaging.

Note that, traditionally, cosmological applications such as probing the epoch of reionisation (EoR) using redshifted 21 cm have relied on storing the visibilities and processing them offline. In such cases, although imaging costs like gridding, Discrete Fourier Transform (DFT), or FFT will not apply, the correlations must still be obtained in real-time. With large arrays, the traditional correlator approach may be too expensive. For example, the Packed Ultra-wideband Mapping Array (PUMA; Slosar et al. Reference Slosar2019), a planned radio telescope for cosmology and transients with

![]() $\sim$

5 000–32 000 elements, is considering an FFT correlator architecture, a specific adaptation of the EPIC architecture for redundant arrays. This study, with a breakdown of costs for different operations, is also relevant for such cosmological arrays.

$\sim$

5 000–32 000 elements, is considering an FFT correlator architecture, a specific adaptation of the EPIC architecture for redundant arrays. This study, with a breakdown of costs for different operations, is also relevant for such cosmological arrays.

3. Cost metric

The primary metric for computational cost used in this study is the number of real floating point operations (FLOP) and the density of such operations in the discovery phase space volume whose dimensions span time, frequency, polarisation, and location on the sky. The computational cost will be estimated per independent interval of time (in FLOP per second or FLOPS) for all possible polarisation states per frequency channel (

![]() $\delta\nu$

) per independent angular resolution element (

$\delta\nu$

) per independent angular resolution element (

![]() $\delta\Omega$

). In this paper, a discovery phase space volume element comprising of a single frequency channel at a single two-dimensional angular resolution element (independent pixel) will be referred to as a voxel (

$\delta\Omega$

). In this paper, a discovery phase space volume element comprising of a single frequency channel at a single two-dimensional angular resolution element (independent pixel) will be referred to as a voxel (

![]() $\delta\nu\,\delta\Omega$

).

$\delta\nu\,\delta\Omega$

).

The cost estimates rely on the following floating point operations. A complex addition consists of two FLOPs, and a complex multiplication consists of four real multiplications and two real additions and therefore six FLOPs. A complex multiply-accumulate (CMAC) operation includes one complex multiplication and addition, therefore requiring eight FLOPs.

Besides the computational metric employed in this paper, a practical implementation of any of these architectures requires careful consideration of several other practical factors such as memory bandwidth, input and output data rates, power consumption, and other processing costs such as calibration. These factors, elaborated further in Section 7.4, will vary significantly with real-life implementations and hardware capabilities available during deployment. Therefore, in a complex space of multi-dimensional factors, this work adopts a simple theoretical approach as a first step wherein the budget of floating point operations will form an irreducible requirement regardless of any particular implementation.

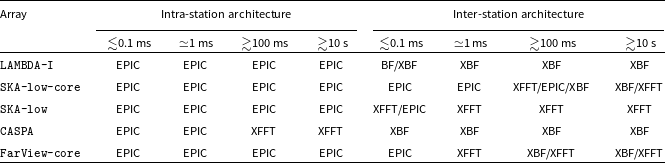

4. Fast imaging architectures

Two broad classes of two-stage fast imaging architectures are considered. In both classes, the first stage is intra-station interferometry of the element electric fields using the options of voltage beamforming (BF), E-field Parallel Imaging Correlator (EPIC), beamforming of correlations (XBF), or correlation followed by FFT (XFFT). The second stage involves inter-station combination of the data products from the first stage. The main difference between the two classes is in whether the second stage uses the first stage products coherently or incoherently. Although aperture arrays consisting of only three hierarchical scales are considered here, the analysis can be extended without loss of generality to arrays of even larger levels of spatial hierarchy. A schematic of these hybrid architectures, their computational cost components, and their extension methodology to multiple stages are illustrated in Fig. 2.

Figure 2. A hybrid, two-stage architecture for hierarchical aperture arrays showing the methodology for extension to multiple stages. Black arrows indicate pathways where the signal consists of electric fields with phase coherence. Grey arrows indicate pathways of a ‘squared’ or a correlated signal with no absolute but only relative phase information. At the top are intra-station architectures, namely, BF, correlator, and EPIC. The top box denotes intra-station imaging options. The bottom box denotes inter-station imaging architectures that process coherent outputs obtained from multiple stations deploying intra-station BF and/or EPIC architectures. The electric fields phase coherently synthesised by BF or EPIC at any stage can form the input for the next stage, thereby allowing the extension of the hierarchy.

Fast imaging involves processing data on multiple time scales. The channelisation of a voltage time stream of length

![]() $\delta t$

with a time resolution of

$\delta t$

with a time resolution of

![]() $t_\textrm{s}$

will correspond to spectral channels of width

$t_\textrm{s}$

will correspond to spectral channels of width

![]() $\delta\nu=1/\delta t$

and a bandwidth of

$\delta\nu=1/\delta t$

and a bandwidth of

![]() $B=1/t_\textrm{s}$

. The number of spectral channels is

$B=1/t_\textrm{s}$

. The number of spectral channels is

![]() $N_\nu=B/\delta\nu=\delta t/t_\textrm{s}$

. Images are expected to be produced at a cadence of

$N_\nu=B/\delta\nu=\delta t/t_\textrm{s}$

. Images are expected to be produced at a cadence of

![]() $t_\textrm{acc}$

, which is typically larger than

$t_\textrm{acc}$

, which is typically larger than

![]() $\delta t$

and smaller than the time scale on which calibration needs to be updated. In this paper, the nominal value for

$\delta t$

and smaller than the time scale on which calibration needs to be updated. In this paper, the nominal value for

![]() $\delta t$

is 25

$\delta t$

is 25

![]() $\mu$

s, and the possibilities for

$\mu$

s, and the possibilities for

![]() $t_\textrm{acc}$

explored range from 100

$t_\textrm{acc}$

explored range from 100

![]() $\mu$

s to 10 s.

$\mu$

s to 10 s.

In the discussions and equations that follow, a real-time calibration is assumed to have been performed on the input voltages. By assuming the system is reasonably stable, a much less frequent (

![]() $\ll 1/t_\textrm{acc}$

) calibration will be sufficient, which therefore will not significantly affect the total cost budget (Beardsley et al. Reference Beardsley, Thyagarajan, Bowman and Morales2017; Gorthi, Parsons, & Dillon Reference Gorthi, Parsons and Dillon2021). Although in principle an accurate calibration is possible in real time, applications such as EoR that require high-accuracy calibration have, for practical reasons, relied on offline calibration.

$\ll 1/t_\textrm{acc}$

) calibration will be sufficient, which therefore will not significantly affect the total cost budget (Beardsley et al. Reference Beardsley, Thyagarajan, Bowman and Morales2017; Gorthi, Parsons, & Dillon Reference Gorthi, Parsons and Dillon2021). Although in principle an accurate calibration is possible in real time, applications such as EoR that require high-accuracy calibration have, for practical reasons, relied on offline calibration.

5. Intra-station imaging architectures

This first stage essentially synthesises station-sized apertures with simultaneous, multiple virtual pointings. These station-sised synthesised apertures from the first stage will act as building blocks for the second stage of inter-station processing. The element, a, in station, m is denoted by

![]() $a_m$

. The element and station locations are denoted by

$a_m$

. The element and station locations are denoted by

![]() $\boldsymbol{r}_{a_m}$

and

$\boldsymbol{r}_{a_m}$

and

![]() $\boldsymbol{r}_m$

, respectively. Similarly,

$\boldsymbol{r}_m$

, respectively. Similarly,

![]() $\Delta\boldsymbol{r}_{a_m b_n}$

is the separation between an elements, a and b, in stations, m and n, respectively.

$\Delta\boldsymbol{r}_{a_m b_n}$

is the separation between an elements, a and b, in stations, m and n, respectively.

![]() $\Delta\boldsymbol{r}_{mn}$

denotes the station separation. This notation is illustrated in Fig. 1. A summary of the intra-station costs are provided in Table 2.

$\Delta\boldsymbol{r}_{mn}$

denotes the station separation. This notation is illustrated in Fig. 1. A summary of the intra-station costs are provided in Table 2.

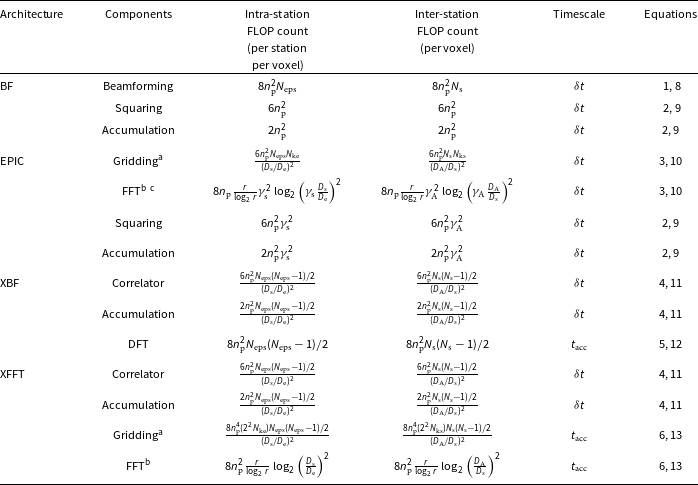

Table 2. Computational budget of intra- and inter-station coherent imaging architectures.

a

![]() $N_\textrm{ke}$

and

$N_\textrm{ke}$

and

![]() $N_\textrm{ks}$

denote the sizes (in number of cells) of the gridding kernels of the antenna elements and the stations, respectively. In this paper,

$N_\textrm{ks}$

denote the sizes (in number of cells) of the gridding kernels of the antenna elements and the stations, respectively. In this paper,

![]() $N_\textrm{ke}=1^2$

and

$N_\textrm{ke}=1^2$

and

![]() $N_\textrm{ks}=1^2$

. For XFFT, compared to pre-correlated data used in EPIC, the gridding sizes for intra- and inter-station correlations are quadrupled to

$N_\textrm{ks}=1^2$

. For XFFT, compared to pre-correlated data used in EPIC, the gridding sizes for intra- and inter-station correlations are quadrupled to

![]() $2^2 N_\textrm{ke}$

and

$2^2 N_\textrm{ke}$

and

![]() $2^2 N_\textrm{ks}$

, respectively, to account for the expansion of the correlated kernels and to remove the aliasing effects within the respective fields of view. If the gridding kernels incorporate w-projection, the kernel sizes will be correspondingly larger.

$2^2 N_\textrm{ks}$

, respectively, to account for the expansion of the correlated kernels and to remove the aliasing effects within the respective fields of view. If the gridding kernels incorporate w-projection, the kernel sizes will be correspondingly larger.

b

r denotes the radix of the FFT algorithm (Cooley & Tukey Reference Cooley and Tukey1965). Here,

![]() $r=2$

.

$r=2$

.

c

![]() $\gamma_\textrm{s}$

and

$\gamma_\textrm{s}$

and

![]() $\gamma_\textrm{A}$

denote padding factors for station- and array-level FFT, respectively, in EPIC. Here,

$\gamma_\textrm{A}$

denote padding factors for station- and array-level FFT, respectively, in EPIC. Here,

![]() $\gamma_\textrm{s}=2$

and

$\gamma_\textrm{s}=2$

and

![]() $\gamma_\textrm{A}=2$

along each dimension to achieve identical image and pixel sizes as that from XFFT.

$\gamma_\textrm{A}=2$

along each dimension to achieve identical image and pixel sizes as that from XFFT.

5.1. Voltage Beamforming (BF)

Let

![]() $\widetilde{E}_{a_m}^{p}(\nu)$

represent the calibrated and noise-weighted electric field at frequency,

$\widetilde{E}_{a_m}^{p}(\nu)$

represent the calibrated and noise-weighted electric field at frequency,

![]() $\nu$

, in polarisation state, p, measured by a station element,

$\nu$

, in polarisation state, p, measured by a station element,

![]() $a_m$

, in station, m. Because each spectral channel is assumed to be processed independently, specifying the frequency can be dropped for convenience to write

$a_m$

, in station, m. Because each spectral channel is assumed to be processed independently, specifying the frequency can be dropped for convenience to write

![]() $\widetilde{E}_{a_m}^{p}(\nu)\equiv \widetilde{E}_{a_m}^{p}$

. Then, such electric fields from individual station elements can be phase-coherently superposed to obtain the electric field in polarisation state,

$\widetilde{E}_{a_m}^{p}(\nu)\equiv \widetilde{E}_{a_m}^{p}$

. Then, such electric fields from individual station elements can be phase-coherently superposed to obtain the electric field in polarisation state,

![]() $\alpha$

, towards any desired direction as

$\alpha$

, towards any desired direction as

where,

![]() $\hat{\boldsymbol{s}}_k$

denotes the direction of the superposed beam, k, and

$\hat{\boldsymbol{s}}_k$

denotes the direction of the superposed beam, k, and

![]() $\widetilde{\mathcal{W}}_{a_m}^{{\alpha p}^*}(\hat{\boldsymbol{s}}_k)$

denotes a complex-valued directional weightingFootnote

c

with the superscript indices

$\widetilde{\mathcal{W}}_{a_m}^{{\alpha p}^*}(\hat{\boldsymbol{s}}_k)$

denotes a complex-valued directional weightingFootnote

c

with the superscript indices

![]() $\alpha, p$

, representing the contribution of polarisation state, p in the station element,

$\alpha, p$

, representing the contribution of polarisation state, p in the station element,

![]() $a_m$

, to polarisation state,

$a_m$

, to polarisation state,

![]() $\alpha$

, towards direction,

$\alpha$

, towards direction,

![]() $\hat{\boldsymbol{s}}_k$

. The * denotes complex conjugate. Equation (1) resembles a DFT of the calibrated Electric field measurement after applying a complex-valued weighting.

$\hat{\boldsymbol{s}}_k$

. The * denotes complex conjugate. Equation (1) resembles a DFT of the calibrated Electric field measurement after applying a complex-valued weighting.

The polarised intensity in the beamformed pixel is then obtained by

where, angular brackets denote a temporal averaging across an interval of

![]() $t_\textrm{acc}$

.

$t_\textrm{acc}$

.

![]() $\widetilde{\mathcal{I}}^{\alpha\beta}_m(\hat{\boldsymbol{s}}_k)$

results from the outer product of indices

$\widetilde{\mathcal{I}}^{\alpha\beta}_m(\hat{\boldsymbol{s}}_k)$

results from the outer product of indices

![]() $\alpha$

and

$\alpha$

and

![]() $\beta$

. Superscript,

$\beta$

. Superscript,

![]() $\alpha\beta$

, thus denotes the four pairwise combinations of polarisation states of the intensity. Even though it is an outer product over the polarisation indices, it will be referred to as a ‘squaring’ operation, hereafter, for convenience because of what it reduces to if

$\alpha\beta$

, thus denotes the four pairwise combinations of polarisation states of the intensity. Even though it is an outer product over the polarisation indices, it will be referred to as a ‘squaring’ operation, hereafter, for convenience because of what it reduces to if

![]() $\alpha=\beta$

.

$\alpha=\beta$

.

The solid angle of the beamformed pixel using the intra-station data is given by

![]() $\Omega_\textrm{s} \simeq (\lambda/D_\textrm{s})^2$

. Beamforming can be applied to all independent beams (

$\Omega_\textrm{s} \simeq (\lambda/D_\textrm{s})^2$

. Beamforming can be applied to all independent beams (

![]() $n_\textrm{bs}$

) filling the field of view with solid angle,

$n_\textrm{bs}$

) filling the field of view with solid angle,

![]() $\Omega_\textrm{e} \simeq (\lambda/D_\textrm{e})^2$

. Thus,

$\Omega_\textrm{e} \simeq (\lambda/D_\textrm{e})^2$

. Thus,

![]() $n_\textrm{bs} \simeq \Omega_\textrm{e}/\Omega_\textrm{s}=(D_\textrm{s}/D_\textrm{e})^2$

. This beamforming step has to be executed at a cadence of

$n_\textrm{bs} \simeq \Omega_\textrm{e}/\Omega_\textrm{s}=(D_\textrm{s}/D_\textrm{e})^2$

. This beamforming step has to be executed at a cadence of

![]() $\delta t$

.

$\delta t$

.

Fig. 3 shows a breakdown of the computational cost per station per voxel for voltage beamforming as a function of the different station parameters. In each panel, all parameters except the one on the x-axis are kept fixed at the characteristic values of the LAMBDA-I, SKA-low, and SKA-low-core stations as highlighted in cyan. The total cost is dominated by the DFT beamforming in Equation (1) denoted by black dashed lines. The DFT beamforming cost per voxel scales linearly with the number of elements per station. The costs of squaring and temporal averaging in Equation (2) are subdominant. Because the squaring and temporal averaging are performed on a per-pixel basis and not dependent on

![]() $N_\textrm{eps}$

, they remain constant across

$N_\textrm{eps}$

, they remain constant across

![]() $N_\textrm{eps}$

. And because the number of independent pixels covering the field-of-view scales as

$N_\textrm{eps}$

. And because the number of independent pixels covering the field-of-view scales as

![]() $n_\textrm{bs} \simeq (D_\textrm{s}/D_\textrm{e})^2$

, the cost per independent pixel remains constant across

$n_\textrm{bs} \simeq (D_\textrm{s}/D_\textrm{e})^2$

, the cost per independent pixel remains constant across

![]() $D_\textrm{s}$

and

$D_\textrm{s}$

and

![]() $D_\textrm{e}$

. All these operations have to be performed at every time step,

$D_\textrm{e}$

. All these operations have to be performed at every time step,

![]() $\delta t$

, and hence remain constant regardless of

$\delta t$

, and hence remain constant regardless of

![]() $t_\textrm{acc}$

. Although spatial averaging across multiple stations (orange dot-dashed line) is not a station-level operation, it is shown to emphasise that it constitutes only a negligible cost relative to the other components, noting that it needs to be only performed on a slower cadence of

$t_\textrm{acc}$

. Although spatial averaging across multiple stations (orange dot-dashed line) is not a station-level operation, it is shown to emphasise that it constitutes only a negligible cost relative to the other components, noting that it needs to be only performed on a slower cadence of

![]() $t_\textrm{acc}$

on a per-pixel basis, and thus scales inversely with

$t_\textrm{acc}$

on a per-pixel basis, and thus scales inversely with

![]() $t_\textrm{acc}$

.

$t_\textrm{acc}$

.

Figure 3. A breakdown of the computational cost density function over the station parameters for the voltage beamforming (BF) architecture at the station level. Each panel shows the variation with the respective parameter keeping the rest fixed at the characteristic values of the LAMBDA-I, SKA-low-core, and SKA-low stations (cyan lines). The beamforming cost in Equation (1) denoted by black dashed lines dominates the squaring (pink dot-dashes) and temporal averaging (blue dot-dashes) costs in Equation (2). Orange dot-dashes denote the incoherent spatial average of the intensities across stations and has negligible contribution to the total cost.

Fig. 4 shows a covariant view of the total computational cost density per station per voxel (after summing the DFT, squaring, and averaging components) for the beamforming architecture taken two station parameters at a time while keeping the rest fixed at the nominal values of a LAMBDA-I, SKA-low, or a SKA-low-core station (grey dashed lines). The variation in the cost per voxel is dominated by the beamforming cost and exhibits the same trends observed in Fig. 3 – linear in

![]() $N_\textrm{eps}$

while remaining constant with

$N_\textrm{eps}$

while remaining constant with

![]() $D_\textrm{e}$

and

$D_\textrm{e}$

and

![]() $D_\textrm{s}$

.

$D_\textrm{s}$

.

Figure 4. A multi-dimensional covariant view of the net computational cost density function over the station parameters for the voltage beamforming (BF) architecture at the station level by varying two parameters at a time while fixing the rest at the nominal values of the LAMBDA-I, SKA-low-core, and SKA-low stations shown in grey dashed lines. The empty portions in the panels denote parts of parameter space physically impossible to sample given the constraints of the station parameters. Contours corresponding to the colour scale are shown in cyan.

5.2. E-field Parallel Imaging Correlator (EPIC)

Instead of DFT beamforming at discrete arbitrary locations as described above, forming independent beams that simultaneously fill the entire field of view can be achieved by a two-dimensional spatial FFT of the calibrated electric field measurements on the aperture plane. Typically, this has been associated with a regularly gridded array layout (Daishido et al. Reference Daishido, Cornwell and Perley1991; Otobe et al. Reference Otobe1994; Tegmark & Zaldarriaga Reference Tegmark and Zaldarriaga2009; Tegmark & Zaldarriaga Reference Tegmark and Zaldarriaga2010; Foster et al. Reference Foster, Hickish, Magro, Price and Zarb Adami2014; Masui et al. Reference Masui2019). However, it can be enabled even for an irregular layout of station elements by gridding the calibrated electric fields measured by the station elements. The Optimal Map Making (OMM; Tegmark Reference Tegmark1997) formalism adopted by Morales (Reference Morales2011) is the basis of the architecture called E-field Parallel Imaging Correlator (EPIC; Thyagarajan et al. Reference Thyagarajan, Beardsley, Bowman and Morales2017),

\begin{align} \widetilde{\mathcal{E}}_m^\alpha(\hat{\boldsymbol{s}}_k) &= \sum_j \delta^2 \boldsymbol{r}_j \, e^{i\frac{2\pi}{\lambda} \hat{\boldsymbol{s}}_k\cdot\boldsymbol{r}_j} \left(\sum_{a_m} \sum_p \widetilde{W}_{a_m}^{\alpha p*}(\boldsymbol{r}_j-\boldsymbol{r}_{a_m}) \, \widetilde{E}_{a_m}^p \right) \, .\end{align}

\begin{align} \widetilde{\mathcal{E}}_m^\alpha(\hat{\boldsymbol{s}}_k) &= \sum_j \delta^2 \boldsymbol{r}_j \, e^{i\frac{2\pi}{\lambda} \hat{\boldsymbol{s}}_k\cdot\boldsymbol{r}_j} \left(\sum_{a_m} \sum_p \widetilde{W}_{a_m}^{\alpha p*}(\boldsymbol{r}_j-\boldsymbol{r}_{a_m}) \, \widetilde{E}_{a_m}^p \right) \, .\end{align}

The expression within the parenthesis represents the gridding operation of each calibrated electric field measurement at an arbitrary location,

![]() $\boldsymbol{r}_{a_m}$

, of element

$\boldsymbol{r}_{a_m}$

, of element

![]() $a_m$

onto a common grid at locations,

$a_m$

onto a common grid at locations,

![]() $\boldsymbol{r}_j$

, using a gridding kernel,

$\boldsymbol{r}_j$

, using a gridding kernel,

![]() $\widetilde{W}_{a_m}^{\alpha p*}(\boldsymbol{r})$

corresponding to that element. The outer summation denotes the Fourier transform of the weighted and gridded electric fields implemented through FFT.

$\widetilde{W}_{a_m}^{\alpha p*}(\boldsymbol{r})$

corresponding to that element. The outer summation denotes the Fourier transform of the weighted and gridded electric fields implemented through FFT.

Application of the FFT will have the effect of simultaneously beamforming over the entire field of view,

![]() $\Omega_\textrm{e}$

. The convolution with

$\Omega_\textrm{e}$

. The convolution with

![]() $\widetilde{W}_{a_m}^{\alpha p*}(\boldsymbol{r})$

in the aperture, which is the Fourier dual of

$\widetilde{W}_{a_m}^{\alpha p*}(\boldsymbol{r})$

in the aperture, which is the Fourier dual of

![]() $\widetilde{\mathcal{W}}_{a_m}^{{\alpha p}^*}(\hat{\boldsymbol{s}}_k)$

in Equation (1), has several purposes. Firstly, it can be chosen to optimise specific properties in the synthesised image. For example, choosing

$\widetilde{\mathcal{W}}_{a_m}^{{\alpha p}^*}(\hat{\boldsymbol{s}}_k)$

in Equation (1), has several purposes. Firstly, it can be chosen to optimise specific properties in the synthesised image. For example, choosing

![]() $\widetilde{W}_{a_m}^{\alpha p*}(\boldsymbol{r})=W_{a_m}^{\alpha p*}(\boldsymbol{r})$

, the complex conjugate of the holographic illumination pattern of the antenna element will optimise the signal-to-noise ratio in the image (Morales Reference Morales2011). Secondly, it can incorporate w-projection for non-coplanar element spacings (Cornwell, Golap, & Bhatnagar Reference Cornwell, Golap and Bhatnagar2008), direction-dependent ionospheric effects, wide-field distortions from refractive and scintillating atmospheric distortions, etc. (Morales & Matejek Reference Morales and Matejek2009; Morales Reference Morales2011). Finally, through gridding convolution it transforms data from discrete and arbitrary locations onto a regular grid, thereby enabling the application of a two-dimensional spatial FFT. Finally, the polarised intensities are obtained by application of Equation (2), that is pixelwise ‘squaring’ (outer product of polarisation states) and temporal averaging.

$\widetilde{W}_{a_m}^{\alpha p*}(\boldsymbol{r})=W_{a_m}^{\alpha p*}(\boldsymbol{r})$

, the complex conjugate of the holographic illumination pattern of the antenna element will optimise the signal-to-noise ratio in the image (Morales Reference Morales2011). Secondly, it can incorporate w-projection for non-coplanar element spacings (Cornwell, Golap, & Bhatnagar Reference Cornwell, Golap and Bhatnagar2008), direction-dependent ionospheric effects, wide-field distortions from refractive and scintillating atmospheric distortions, etc. (Morales & Matejek Reference Morales and Matejek2009; Morales Reference Morales2011). Finally, through gridding convolution it transforms data from discrete and arbitrary locations onto a regular grid, thereby enabling the application of a two-dimensional spatial FFT. Finally, the polarised intensities are obtained by application of Equation (2), that is pixelwise ‘squaring’ (outer product of polarisation states) and temporal averaging.

Fig. 5 shows a breakdown of the costs per station per voxel of the EPIC architecture, with nominal values of a LAMBDA-I, SKA-low, or a SKA-low-core station marked in cyan. The dominant cost is from the two-dimensional FFT (black dashed lines) in Equation (3). The spatial FFT cost depends only on the grid dimensions. So, it is independent of

![]() $N_\textrm{eps}$

as the grid can hold as many elements as physically possible. The computational cost of the spatial FFT scales roughly as

$N_\textrm{eps}$

as the grid can hold as many elements as physically possible. The computational cost of the spatial FFT scales roughly as

![]() $(\gamma_\textrm{s} \, D_\textrm{s}/D_\textrm{e})^2\log_2(\gamma_\textrm{s}\, D_\textrm{s}/D_\textrm{e})^2$

, where,

$(\gamma_\textrm{s} \, D_\textrm{s}/D_\textrm{e})^2\log_2(\gamma_\textrm{s}\, D_\textrm{s}/D_\textrm{e})^2$

, where,

![]() $\gamma_\textrm{s}$

is a constant padding factor.

$\gamma_\textrm{s}$

is a constant padding factor.

![]() $\gamma_\textrm{s}$

is used to control the pixel scale in the image. For example,

$\gamma_\textrm{s}$

is used to control the pixel scale in the image. For example,

![]() $\gamma_\textrm{s}=2$

(adopted in this paper) along each dimension will provide identical pixel scale and image size as that formed from gridded visibilities. Notably, because the spatial FFT produces an image over the entire field of view at every independent pixel instantly, it is not a pixelwise operation, and therefore, its cost per voxel scales as

$\gamma_\textrm{s}=2$

(adopted in this paper) along each dimension will provide identical pixel scale and image size as that formed from gridded visibilities. Notably, because the spatial FFT produces an image over the entire field of view at every independent pixel instantly, it is not a pixelwise operation, and therefore, its cost per voxel scales as

![]() $\sim \log_2(D_\textrm{s}/D_\textrm{e})$

, which exhibits a weak dependence on

$\sim \log_2(D_\textrm{s}/D_\textrm{e})$

, which exhibits a weak dependence on

![]() $D_\textrm{s}$

and

$D_\textrm{s}$

and

![]() $D_\textrm{e}$

. The squaring (pink dot-dashed lines) and temporal averaging (blue dot-dashed lines) in Equation (2) operate on a per-pixel basis. Thus, those costs per voxel remain constant with

$D_\textrm{e}$

. The squaring (pink dot-dashed lines) and temporal averaging (blue dot-dashed lines) in Equation (2) operate on a per-pixel basis. Thus, those costs per voxel remain constant with

![]() $N_\textrm{eps}$

,

$N_\textrm{eps}$

,

![]() $D_\textrm{s}$

and

$D_\textrm{s}$

and

![]() $D_\textrm{e}$

similar to the voltage beamforming architecture. And they are subdominant. The gridding (black dotted lines) with weights in Equation (3) is a per-element operation and depends only on

$D_\textrm{e}$

similar to the voltage beamforming architecture. And they are subdominant. The gridding (black dotted lines) with weights in Equation (3) is a per-element operation and depends only on

![]() $N_\textrm{eps}$

and the size of the gridding kernel,

$N_\textrm{eps}$

and the size of the gridding kernel,

![]() $N_\textrm{ke}$

. Here,

$N_\textrm{ke}$

. Here,

![]() $N_\textrm{ke}=1^2$

is chosen to provide unaliased full field-of-view images. The incorporation of w-projection in the gridding kernel will correspondingly increase

$N_\textrm{ke}=1^2$

is chosen to provide unaliased full field-of-view images. The incorporation of w-projection in the gridding kernel will correspondingly increase

![]() $N_\textrm{ke}$

. Nevertheless, the gridding cost, scales linearly with

$N_\textrm{ke}$

. Nevertheless, the gridding cost, scales linearly with

![]() $N_\textrm{eps}$

and inverse squared with

$N_\textrm{eps}$

and inverse squared with

![]() $D_\textrm{s}$

per voxel. Overall, the gridding cost is insignificant. Again, the spatial averaging of pixels across multiple stations (orange dot-dashed line) is not a station-level operation, but is only shown to emphasise that it operates on a much slower cadence of

$D_\textrm{s}$

per voxel. Overall, the gridding cost is insignificant. Again, the spatial averaging of pixels across multiple stations (orange dot-dashed line) is not a station-level operation, but is only shown to emphasise that it operates on a much slower cadence of

![]() $t_\textrm{acc}$

, scaling inversely with

$t_\textrm{acc}$

, scaling inversely with

![]() $t_\textrm{acc}$

, and adds only negligible cost to the overall cost budget.

$t_\textrm{acc}$

, and adds only negligible cost to the overall cost budget.

Figure 5. A breakdown of the computational cost density function over the station parameters for the EPIC architecture at the station level. Each panel shows the variation with the respective parameter keeping the rest fixed at the characteristic values of the LAMBDA-I, SKA-low-core, and SKA-low stations (cyan lines). The spatial FFT cost in Equation (3) denoted by black dashed lines dominates the squaring (pink dot-dashes) and temporal averaging (blue dot-dashes) costs in Equation (2). The gridding operation (black dotted lines) in Equation (3) contributes even lesser to the cost budget. The incoherent spatial averaging of the intensities across stations (orange dot-dashed line) has negligible contribution to the total cost.

Figure 6. (Left): Two-dimensional slices of the computational cost per station per voxel for imaging using station-level EPIC. Because the spatial FFT dominates the overall cost budget, the computational cost density is relatively insensitive to

![]() $N_\textrm{eps}$

,

$N_\textrm{eps}$

,

![]() $D_\textrm{s}$

, and

$D_\textrm{s}$

, and

![]() $D_\textrm{e}$

as seen in Fig. 5, thereby not showing much variation in the colour scale. (Right): Ratio of computational cost of EPIC to voltage beamforming. For

$D_\textrm{e}$

as seen in Fig. 5, thereby not showing much variation in the colour scale. (Right): Ratio of computational cost of EPIC to voltage beamforming. For

![]() $N_\textrm{eps}\gtrsim 100$

, EPIC has a significant advantage over voltage beamforming (BF). Grey dashed lines indicate nominal values for LAMBDA-I, SKA-low-core, and SKA-low stations. Logarithmic contour levels corresponding to the colour scale are shown in cyan.

$N_\textrm{eps}\gtrsim 100$

, EPIC has a significant advantage over voltage beamforming (BF). Grey dashed lines indicate nominal values for LAMBDA-I, SKA-low-core, and SKA-low stations. Logarithmic contour levels corresponding to the colour scale are shown in cyan.

Fig. 6a shows a covariant view of the total computational cost density per station per voxel (sum of gridding, FFT, squaring and temporal averaging components) for the EPIC architecture taken two station parameters at a time while keeping the rest fixed at the nominal values of a LAMBDA-I, SKA-low, or a SKA-low-core station denoted by grey dashed lines. The variation in the cost per voxel is dominated by the spatial FFT cost which is relatively constant in all these parameters and displays the same trends observed in Fig. 5, namely, nearly constant in

![]() $N_\textrm{eps}$

,

$N_\textrm{eps}$

,

![]() $D_\textrm{e}$

, and

$D_\textrm{e}$

, and

![]() $D_\textrm{s}$

. Fig. 6b shows the ratio of the computational cost of the EPIC architecture relative to the voltage beamforming architecture in Fig. 4. It is clearly noted that the cost of the EPIC architecture is significantly less than that of voltage beamforming for

$D_\textrm{s}$

. Fig. 6b shows the ratio of the computational cost of the EPIC architecture relative to the voltage beamforming architecture in Fig. 4. It is clearly noted that the cost of the EPIC architecture is significantly less than that of voltage beamforming for

![]() $N_\textrm{eps} \gtrsim 100$

, and continues to decrease with increasing

$N_\textrm{eps} \gtrsim 100$

, and continues to decrease with increasing

![]() $N_\textrm{eps}$

, implying that for full field-of-view imaging, EPIC has a clear and significant computational advantage over voltage DFT beamforming as

$N_\textrm{eps}$

, implying that for full field-of-view imaging, EPIC has a clear and significant computational advantage over voltage DFT beamforming as

![]() $N_\textrm{eps}$

and packing density of elements in a station increase.

$N_\textrm{eps}$

and packing density of elements in a station increase.

The EPIC architecture has been implemented on the Long Wavelength Array (LWA) station in Sevilleta (NM, USA) (Kent et al. Reference Kent2019, Reference Kent2020; Krishnan et al. Reference Krishnan2023) using a GPU framework and operates on a commensal mode. With recent optimisations, the EPIC pipeline on LWA-Sevilleta computes

![]() $128\times 128$

all-sky (visible hemisphere) images at 25 000 frames per second and outputs at a cadence of 40–80 ms accumulations (Reddy et al. Reference Reddy, Ibsen and Chiozzi2024). For reference, the software correlator and visibility-based imager can produce images at 5 s cadence (Taylor et al. Reference Taylor2012).

$128\times 128$

all-sky (visible hemisphere) images at 25 000 frames per second and outputs at a cadence of 40–80 ms accumulations (Reddy et al. Reference Reddy, Ibsen and Chiozzi2024). For reference, the software correlator and visibility-based imager can produce images at 5 s cadence (Taylor et al. Reference Taylor2012).

The EPIC architecture will be relevant for large cosmological arrays like PUMA that are considering a FFT-based correlator architecture. Obtaining visibilities from an EPIC architecture will involve an additional step of an inverse-FFT or an inverse-DFT back to the aperture plane from the accumulated images (not included in Fig. 5). However, the inverse-FFT or DFT operation to obtain visibilities needs to be performed only once every accumulation interval and is thus not expected to add significantly to the total cost budget.

Figure 7. A breakdown of the computational cost density function over the station parameters for the correlator beamforming architecture (XBF) at the station level. Each panel shows the variation with the respective parameter keeping the rest fixed at the characteristic values of the LAMBDA-I, SKA-low-core, and SKA-low stations (cyan lines). The two-dimensional DFT (dashed lines) dominates the cost for the chosen parameters at an imaging cadence of

![]() $t_\textrm{acc}=1$

ms over correlation/X-engine (double dot-dashed) and temporal averaging of the correlations (dot-dashed).

$t_\textrm{acc}=1$

ms over correlation/X-engine (double dot-dashed) and temporal averaging of the correlations (dot-dashed).

Figure 8. (Left): Two-dimensional slices of the computational cost for imaging using a DFT beamforming of station-level cross-correlations (XBF) for LAMBDA-I, SKA-low-core, and SKA-low station parameters (dashed grey lines). Cyan lines denote contours of the colour scale in logarithmic increments. (Right): Same as the left but relative to a voltage beamformer (BF) architecture. The computational cost density is dominated by the two-dimensional DFT cost for

![]() $t_\textrm{acc}=1$

ms and is lower than that of voltage beamforming (BF) for

$t_\textrm{acc}=1$

ms and is lower than that of voltage beamforming (BF) for

![]() $N_\textrm{eps}\lesssim 100$

while getting more expensive with increasing

$N_\textrm{eps}\lesssim 100$

while getting more expensive with increasing

![]() $N_\textrm{eps}$

.

$N_\textrm{eps}$

.

5.3. Correlator Beamforming (XBF)

In the traditional correlator approach, a pair of electric field measurements,

![]() $E_{a_m}^p$

and

$E_{a_m}^p$

and

![]() $E_{b_m}^q$

, in polarisation states, p and q, at station elements,

$E_{b_m}^q$

, in polarisation states, p and q, at station elements,

![]() $a_m$

and

$a_m$

and

![]() $b_m$

, respectively, in station, m, can be cross-correlated and temporally averaged to obtain calibrated visibilities,

$b_m$

, respectively, in station, m, can be cross-correlated and temporally averaged to obtain calibrated visibilities,

![]() $\widetilde{V}_{a_m b_m}^{pq}$

. It can be written as

$\widetilde{V}_{a_m b_m}^{pq}$

. It can be written as

The visibilities in the station can be beamformed using a DFT to obtain polarised intensities towards

![]() $\boldsymbol{s}_k$

as:

$\boldsymbol{s}_k$

as:

![]() $\widetilde{\mathcal{B}}_{a_m b_m}^{\alpha\beta;pq*}(\hat{\boldsymbol{s}}_k)$

, is the counterpart of

$\widetilde{\mathcal{B}}_{a_m b_m}^{\alpha\beta;pq*}(\hat{\boldsymbol{s}}_k)$

, is the counterpart of

![]() $\widetilde{\mathcal{W}}_{a_m}^{{p\alpha}^*}(\hat{\boldsymbol{s}}_k)$

in Equation (1) for a correlated quantity, and it denotes a complex-valued directional weighting applied to the calibrated visibility representing the contribution of polarisation state, pq, to a state,

$\widetilde{\mathcal{W}}_{a_m}^{{p\alpha}^*}(\hat{\boldsymbol{s}}_k)$

in Equation (1) for a correlated quantity, and it denotes a complex-valued directional weighting applied to the calibrated visibility representing the contribution of polarisation state, pq, to a state,

![]() $\alpha\beta$

, in the measurement and sky planes, respectively. It can be chosen to produce images with desired characteristics (Masui et al. Reference Masui2019).

$\alpha\beta$

, in the measurement and sky planes, respectively. It can be chosen to produce images with desired characteristics (Masui et al. Reference Masui2019).

Fig. 7 shows the computational cost density breakdown for correlator beamforming at the station level. The correlation and temporal averaging in Equation (4) are performed on every pair of station elements and scale as

![]() $N_\textrm{eps}(N_\textrm{eps}-1)/2$

, with no dependence on

$N_\textrm{eps}(N_\textrm{eps}-1)/2$

, with no dependence on

![]() $D_\textrm{e}$

or

$D_\textrm{e}$

or

![]() $D_\textrm{s}$

. Hence, their costs per voxel scale as

$D_\textrm{s}$

. Hence, their costs per voxel scale as

![]() $(D_\textrm{s}/D_\textrm{e})^{-2}$

. The two-dimensional DFT in Equation (5) also scales as

$(D_\textrm{s}/D_\textrm{e})^{-2}$

. The two-dimensional DFT in Equation (5) also scales as

![]() $N_\textrm{eps}(N_\textrm{eps}-1)/2$

, but being a per-pixel operation, stays constant with

$N_\textrm{eps}(N_\textrm{eps}-1)/2$

, but being a per-pixel operation, stays constant with

![]() $D_\textrm{e}$

and

$D_\textrm{e}$

and

![]() $D_\textrm{s}$

. The correlation and temporal averaging have to be performed at every interval of

$D_\textrm{s}$

. The correlation and temporal averaging have to be performed at every interval of

![]() $\delta t$

and are therefore, independent of

$\delta t$

and are therefore, independent of

![]() $t_\textrm{acc}$

, whereas the DFT needs to be performed at a cadence of

$t_\textrm{acc}$

, whereas the DFT needs to be performed at a cadence of

![]() $t_\textrm{acc}$

, and thus scales as

$t_\textrm{acc}$

, and thus scales as

![]() $t_\textrm{acc}^{-1}$

. For the chosen parameters of LAMBDA-I, SKA-low, and SKA-low-core, at

$t_\textrm{acc}^{-1}$

. For the chosen parameters of LAMBDA-I, SKA-low, and SKA-low-core, at

![]() $t_\textrm{acc}=1$

ms, the computational cost density is dominated by the cost of the DFT. However, for imaging on a slower cadence,

$t_\textrm{acc}=1$

ms, the computational cost density is dominated by the cost of the DFT. However, for imaging on a slower cadence,

![]() $t_\textrm{acc}\gtrsim 10$

ms, the cost of correlation begins to dominate.

$t_\textrm{acc}\gtrsim 10$

ms, the cost of correlation begins to dominate.

Fig. 8a is a covariant view of the total computational cost density per station per voxel (sum of correlator, temporal averaging, and DFT components) for the correlator beamformer architecture taken two station parameters at a time while keeping the rest fixed at the nominal values of a SKA-low, SKA-low-core, or a LAMBDA-I station (grey dashed lines). The variation in the cost per voxel for

![]() $t_\textrm{acc}=1$

ms is dominated by the spatial DFT cost which is relatively constant in

$t_\textrm{acc}=1$

ms is dominated by the spatial DFT cost which is relatively constant in

![]() $D_\textrm{e}$

and

$D_\textrm{e}$

and

![]() $D_\textrm{s}$

, and scales as

$D_\textrm{s}$

, and scales as

![]() $\sim N_\textrm{eps}^2$

, displaying the same trends observed in Fig. 7. Fig. 8b shows the ratio of the computational cost of the XBF architecture relative to the voltage beamforming architecture in Fig. 4. It is clear that the cost of the XBF architecture is less expensive only for

$\sim N_\textrm{eps}^2$

, displaying the same trends observed in Fig. 7. Fig. 8b shows the ratio of the computational cost of the XBF architecture relative to the voltage beamforming architecture in Fig. 4. It is clear that the cost of the XBF architecture is less expensive only for

![]() $N_\textrm{eps}\lesssim 100$

and gets worse with increasing

$N_\textrm{eps}\lesssim 100$

and gets worse with increasing

![]() $N_\textrm{eps}$

.

$N_\textrm{eps}$

.

5.4. Correlation and FFT (XFFT)

In this approach,

![]() $\widetilde{V}_{a_m b_m}^{pq}$

, the calibrated and inverse noise covariance weighted visibilities in station, m, obtained after the correlator in Equation (4) are not weighted and beamformed using a DFT. Instead, they are gridded with weights,

$\widetilde{V}_{a_m b_m}^{pq}$

, the calibrated and inverse noise covariance weighted visibilities in station, m, obtained after the correlator in Equation (4) are not weighted and beamformed using a DFT. Instead, they are gridded with weights,

![]() $\widetilde{B}_{a_m b_m}^{\alpha\beta;pq*}(\Delta\boldsymbol{r})$

, which is the Fourier dual of

$\widetilde{B}_{a_m b_m}^{\alpha\beta;pq*}(\Delta\boldsymbol{r})$

, which is the Fourier dual of

![]() $\widetilde{\mathcal{B}}_{a_m b_m}^{\alpha\beta;pq*}(\hat{\boldsymbol{s}}_k)$

in Equation (5), onto a grid in the aperture plane. The gridded visibilities are Fourier transformed using an FFT rather than a DFT used in correlator beamforming,

$\widetilde{\mathcal{B}}_{a_m b_m}^{\alpha\beta;pq*}(\hat{\boldsymbol{s}}_k)$

in Equation (5), onto a grid in the aperture plane. The gridded visibilities are Fourier transformed using an FFT rather than a DFT used in correlator beamforming,

\begin{align} \widetilde{\mathcal{I}}_m^{\alpha\beta}(\hat{\boldsymbol{s}}_k) &= \sum_j \delta^2 \Delta\boldsymbol{r}_j \, e^{i\frac{2\pi}{\lambda} \hat{\boldsymbol{s}}_k\cdot\Delta\boldsymbol{r}_j} \nonumber\\ &\quad \times \left(\sum_{a_m,b_m} \sum_p \widetilde{B}_{a_m b_m}^{\alpha\beta;pq*}(\Delta\boldsymbol{r}_j-\Delta\boldsymbol{r}_{a_m b_m}) \, \widetilde{V}_{a_m b_m}^{pq} \right) \, .\end{align}

\begin{align} \widetilde{\mathcal{I}}_m^{\alpha\beta}(\hat{\boldsymbol{s}}_k) &= \sum_j \delta^2 \Delta\boldsymbol{r}_j \, e^{i\frac{2\pi}{\lambda} \hat{\boldsymbol{s}}_k\cdot\Delta\boldsymbol{r}_j} \nonumber\\ &\quad \times \left(\sum_{a_m,b_m} \sum_p \widetilde{B}_{a_m b_m}^{\alpha\beta;pq*}(\Delta\boldsymbol{r}_j-\Delta\boldsymbol{r}_{a_m b_m}) \, \widetilde{V}_{a_m b_m}^{pq} \right) \, .\end{align}

The gridding and Fourier transform (using FFT) are represented by the parenthesis and outer summation, respectively.

![]() $\widetilde{B}_{a_m b_m}^{\alpha\beta;pq*}(\Delta\boldsymbol{r})$

denotes the polarimetric gridding kernel that is used to place the visibility data in polarisation state, pq, centred at location,

$\widetilde{B}_{a_m b_m}^{\alpha\beta;pq*}(\Delta\boldsymbol{r})$

denotes the polarimetric gridding kernel that is used to place the visibility data in polarisation state, pq, centred at location,

![]() $\Delta\boldsymbol{r}_{a_m b_m}$

, onto a grid of locations,

$\Delta\boldsymbol{r}_{a_m b_m}$

, onto a grid of locations,

![]() $\Delta\boldsymbol{r}_j$

in polarisation state,

$\Delta\boldsymbol{r}_j$

in polarisation state,

![]() $\alpha\beta$

. The purpose of

$\alpha\beta$

. The purpose of

![]() $\widetilde{B}_{a_m b_m}^{\alpha\beta;pq*}(\Delta\boldsymbol{r})$

is similar (Morales & Matejek Reference Morales and Matejek2009; Bhatnagar et al. Reference Bhatnagar, Cornwell, Golap and Uson2008) to its counterpart gridding operator in the EPIC architecture (Morales Reference Morales2011) and can also incorporate the w-projection kernel (Cornwell et al. Reference Cornwell, Golap and Bhatnagar2008).

$\widetilde{B}_{a_m b_m}^{\alpha\beta;pq*}(\Delta\boldsymbol{r})$

is similar (Morales & Matejek Reference Morales and Matejek2009; Bhatnagar et al. Reference Bhatnagar, Cornwell, Golap and Uson2008) to its counterpart gridding operator in the EPIC architecture (Morales Reference Morales2011) and can also incorporate the w-projection kernel (Cornwell et al. Reference Cornwell, Golap and Bhatnagar2008).

This is the mathematical counterpart to the operations performed on calibrated electric fields from the elements in EPIC, with few key differences – (1) the calibrated visibilities can be accumulated and allowed for Earth rotation and bandwidth synthesis, (2) the gridding and spatial Fourier transform operate not on electric fields but visibilities, and (3) the spatial Fourier transform can occur on a slower cadence of

![]() $t_\textrm{acc}$

. In the radio interferometric context,

$t_\textrm{acc}$

. In the radio interferometric context,

![]() $\widetilde{\mathcal{I}}_m^{\alpha\beta}(\hat{\boldsymbol{s}}_k)$

is referred to as the ‘dirty image’ (Thompson, Moran, & Swenson Reference Thompson, Moran and Swenson2017; Taylor, Carilli, & Perley Reference Taylor, Carilli and Perley1999).

$\widetilde{\mathcal{I}}_m^{\alpha\beta}(\hat{\boldsymbol{s}}_k)$

is referred to as the ‘dirty image’ (Thompson, Moran, & Swenson Reference Thompson, Moran and Swenson2017; Taylor, Carilli, & Perley Reference Taylor, Carilli and Perley1999).

Fig. 9 shows the computational cost density breakdown for XFFT at the station level. The correlation and temporal averaging in Equation (4) are the same as the correlator beamformer, scaling as

![]() $N_\textrm{eps}(N_\textrm{eps}-1)/2$

and have no dependence on

$N_\textrm{eps}(N_\textrm{eps}-1)/2$

and have no dependence on

![]() $D_\textrm{e}$

or

$D_\textrm{e}$

or

![]() $D_\textrm{s}$

. Hence, their costs per voxel scale as

$D_\textrm{s}$

. Hence, their costs per voxel scale as

![]() $(D_\textrm{s}/D_\textrm{e})^{-2}$

. The gridding in Equation (9) also scales as

$(D_\textrm{s}/D_\textrm{e})^{-2}$

. The gridding in Equation (9) also scales as

![]() $N_\textrm{eps}(N_\textrm{eps}-1)/2$

and the size of the gridding kernel,

$N_\textrm{eps}(N_\textrm{eps}-1)/2$

and the size of the gridding kernel,

![]() $2^2 N_\textrm{ke}$

, where,

$2^2 N_\textrm{ke}$

, where,

![]() $N_\textrm{ke}$

was the size of the kernel used in EPIC. The quadrupling is because the extent of the visibility gridding kernel is expected to be twice as that of the element gridding kernel along each dimension. Thus,

$N_\textrm{ke}$

was the size of the kernel used in EPIC. The quadrupling is because the extent of the visibility gridding kernel is expected to be twice as that of the element gridding kernel along each dimension. Thus,

![]() $2^2 N_\textrm{ke}=4$

is chosen to provide unaliased full field-of-view images for the calculations here. The incorporation of w-projection in the gridding kernel will correspondingly increase the kernel size, but being independent of grid dimensions, the gridding cost per voxel scales as

$2^2 N_\textrm{ke}=4$

is chosen to provide unaliased full field-of-view images for the calculations here. The incorporation of w-projection in the gridding kernel will correspondingly increase the kernel size, but being independent of grid dimensions, the gridding cost per voxel scales as

![]() $(D_\textrm{s}/D_\textrm{e})^{-2}$

. The two-dimensional FFT in Equation (9) depends only on the grid size and is independent of

$(D_\textrm{s}/D_\textrm{e})^{-2}$

. The two-dimensional FFT in Equation (9) depends only on the grid size and is independent of

![]() $N_\textrm{eps}$

. The FFT scales as

$N_\textrm{eps}$

. The FFT scales as

![]() $(D_\textrm{s}/D_\textrm{e})^2\log_2(D_\textrm{s}/D_\textrm{e})^2$

, and thus its cost per voxel scales only weakly as

$(D_\textrm{s}/D_\textrm{e})^2\log_2(D_\textrm{s}/D_\textrm{e})^2$

, and thus its cost per voxel scales only weakly as

![]() $\log_2(D_\textrm{s}/D_\textrm{e})$

. The correlation and temporal averaging have to be performed at every interval of

$\log_2(D_\textrm{s}/D_\textrm{e})$

. The correlation and temporal averaging have to be performed at every interval of

![]() $\delta t$

and are therefore, independent of

$\delta t$

and are therefore, independent of

![]() $t_\textrm{acc}$

, whereas the gridding and FFT need to be performed at a cadence of

$t_\textrm{acc}$

, whereas the gridding and FFT need to be performed at a cadence of

![]() $t_\textrm{acc}$

, and thus scale as

$t_\textrm{acc}$

, and thus scale as

![]() $t_\textrm{acc}^{-1}$

. For the chosen parameters of LAMBDA-I, SKA-low, and SKA-low-core, and

$t_\textrm{acc}^{-1}$

. For the chosen parameters of LAMBDA-I, SKA-low, and SKA-low-core, and

![]() $t_\textrm{acc}=1$

ms, gridding dominates the overall cost, followed by the correlator, temporal averaging of correlations, and FFT. However, for imaging on a slower cadence,

$t_\textrm{acc}=1$

ms, gridding dominates the overall cost, followed by the correlator, temporal averaging of correlations, and FFT. However, for imaging on a slower cadence,

![]() $t_\textrm{acc}\gtrsim$

5–10 ms, the cost of correlation begins to dominate.

$t_\textrm{acc}\gtrsim$

5–10 ms, the cost of correlation begins to dominate.

Figure 9. A breakdown of the computational cost density function over the station parameters for the correlator FFT architecture (XFFT) at the station level. Each panel shows the variation with the respective parameter keeping the rest fixed at the characteristic values of the LAMBDA-I, SKA-low-core, and SKA-low stations (cyan lines). The correlator/X-engine (double dot- dashed lines) and gridding (dotted lines) costs are comparable and dominate over the temporal averaging (dot dashed lines) and two-dimensional FFT (dashed lines) costs for the chosen LAMBDA-I parameters at an imaging cadence of

![]() $t_\textrm{acc}=1$

ms.

$t_\textrm{acc}=1$

ms.

Fig. 10a shows a covariant view of the total computational cost density per station per voxel (sum of correlator, temporal averaging, gridding, and FFT components) for the correlator FFT architecture taken two station parameters at a time while keeping the rest fixed at the nominal values of a LAMBDA-I, SKA-low, or a SKA-low-core station denoted by grey dashed lines. The variation in the cost per voxel for

![]() $t_\textrm{acc}=1$

ms is dominated by the gridding cost, displaying the same trends observed in Fig. 9. Fig. 10b shows the ratio of the computational cost of the XFFT architecture relative to the voltage beamforming architecture in Fig. 4. Clearly, the cost of the XFFT architecture is less expensive than the BF in most of the parameter space, particularly for larger

$t_\textrm{acc}=1$

ms is dominated by the gridding cost, displaying the same trends observed in Fig. 9. Fig. 10b shows the ratio of the computational cost of the XFFT architecture relative to the voltage beamforming architecture in Fig. 4. Clearly, the cost of the XFFT architecture is less expensive than the BF in most of the parameter space, particularly for larger

![]() $D_\textrm{s}/D_\textrm{e}$

. For the chosen parameters (grey dashed lines), the XFFT cost roughly matches that of BF.

$D_\textrm{s}/D_\textrm{e}$

. For the chosen parameters (grey dashed lines), the XFFT cost roughly matches that of BF.

Figure 10. (Left): Two-dimensional slices of the computational cost for imaging using an FFT of station-level cross-correlations (XFFT) for LAMBDA-I, SKA-low-core, and SKA-low station parameters (dashed grey lines). Cyan contours denote logarithmic levels in the colour scale.

For applications where visibilities are processed offline and real-time imaging is not required, like traditional cosmology experiments including the EoR, only the computational cost of correlations in forming the visibilities will apply, which is still one of the dominant components of the overall cost in Figs. 7 and 9. The DFT, gridding, and FFT costs can be ignored for real-time processing.

6. Inter-station imaging architectures

The products from the intra-station architectures form the inputs for inter-station imaging. Here, the inter-station processing is also assumed to occur in real time.

6.1. Incoherent architectures

Incoherent inter-station imaging simply involves accumulating the images from each station at a cadence of

![]() $t_\textrm{acc}$

. The angular resolution remains the same as the intra-station images (assuming all stations have equal angular resolution) obtained through any of the four approaches described in Section 5. The weighted averaging of intra-station intensities gives

$t_\textrm{acc}$

. The angular resolution remains the same as the intra-station images (assuming all stations have equal angular resolution) obtained through any of the four approaches described in Section 5. The weighted averaging of intra-station intensities gives

where,

![]() $w_{m}^{\alpha\beta}(\hat{\boldsymbol{s}}_k)$

represents station weights for a beam pointed towards

$w_{m}^{\alpha\beta}(\hat{\boldsymbol{s}}_k)$

represents station weights for a beam pointed towards

![]() $\hat{\boldsymbol{s}}_k$

. The incoherent nature of this inter-station intensity accumulation implies that it does not depend on inter-station spacing or the array diameter,

$\hat{\boldsymbol{s}}_k$

. The incoherent nature of this inter-station intensity accumulation implies that it does not depend on inter-station spacing or the array diameter,

![]() $D_A$

.

$D_A$

.

6.2. Coherent architectures

A coherent combination of data between stations requires electric field data products with phase information from the first stage of processing on individual stations. Thus, intra-station visibilities in which absolute phases have been removed and only phase differences remain, as well as the image intensity outputs of XBF and XFFT, are unusable for coherent processing at the inter-station level. Hence, the two remaining inputs are considered, namely, the electric fields,

![]() $\widetilde{\mathcal{E}}_m^\alpha(\hat{\boldsymbol{s}}_k)$

, from BF and EPIC from Equations (1) and (3), respectively.

$\widetilde{\mathcal{E}}_m^\alpha(\hat{\boldsymbol{s}}_k)$

, from BF and EPIC from Equations (1) and (3), respectively.

The intra-station coherent combinations,

![]() $\widetilde{\mathcal{E}}_m^\alpha(\hat{\boldsymbol{s}}_k)$

, produced through BF and EPIC, respectively, in Equations (1) and (3), effectively convert the station, m, to act as virtual station-sized telescopes measuring the electric fields but simultaneously pointed towards multiple locations, denoted by

$\widetilde{\mathcal{E}}_m^\alpha(\hat{\boldsymbol{s}}_k)$

, produced through BF and EPIC, respectively, in Equations (1) and (3), effectively convert the station, m, to act as virtual station-sized telescopes measuring the electric fields but simultaneously pointed towards multiple locations, denoted by

![]() $\hat{\boldsymbol{s}}_k$

. Each of these virtual station-sized telescopes has a field of view determined by inverse of the station size as

$\hat{\boldsymbol{s}}_k$

. Each of these virtual station-sized telescopes has a field of view determined by inverse of the station size as

![]() $\sim (\lambda/D_\textrm{s})^2$

. Together, with all the different virtual pointings, they can cover a field of view given by the inverse of the element size as

$\sim (\lambda/D_\textrm{s})^2$

. Together, with all the different virtual pointings, they can cover a field of view given by the inverse of the element size as

![]() $\sim (\lambda/D_\textrm{e})^2$

. Using these electric fields as the input measurements from the virtually synthesised telescopes pointed towards any given direction,

$\sim (\lambda/D_\textrm{e})^2$

. Using these electric fields as the input measurements from the virtually synthesised telescopes pointed towards any given direction,

![]() $\hat{\boldsymbol{s}}_k$

, the inter-station imaging architecture can now employ any of the four architectures that were previously discussed in Section 5, for all possible directions filling the entire field of view.

$\hat{\boldsymbol{s}}_k$

, the inter-station imaging architecture can now employ any of the four architectures that were previously discussed in Section 5, for all possible directions filling the entire field of view.

For inter-station processing, all element- and station-related quantities in intra-station processing will be replaced with station- and array-related quantities, respectively. Similarly, the element-based measurements of electric fields,

![]() $\widetilde{E}_{a_m}^p$

, will be replaced with the synthesised electric fields,

$\widetilde{E}_{a_m}^p$

, will be replaced with the synthesised electric fields,

![]() $\widetilde{\mathcal{E}}_m^\alpha(\hat{\boldsymbol{s}}_k)$

, measured by the virtual station-sized telescopes obtained either through BF (Equation 1) or EPIC (Equation 3). Since the computational costs of the inter-station processing now depend on the array layout and that of intra-station processing on the station layout, it necessitates hybrid architectures appropriate for multi-scale aperture arrays. Table 2 summarises the computational costs of inter-station processing.

$\widetilde{\mathcal{E}}_m^\alpha(\hat{\boldsymbol{s}}_k)$

, measured by the virtual station-sized telescopes obtained either through BF (Equation 1) or EPIC (Equation 3). Since the computational costs of the inter-station processing now depend on the array layout and that of intra-station processing on the station layout, it necessitates hybrid architectures appropriate for multi-scale aperture arrays. Table 2 summarises the computational costs of inter-station processing.

6.2.1. Beamforming

Using the electric fields,

![]() $\widetilde{\mathcal{E}}_m^p(\hat{\boldsymbol{s}}_k)$

, synthesised from each intra-station processing as input, the inter-station DFT beamforming can be written very similar to Equation (1), as

$\widetilde{\mathcal{E}}_m^p(\hat{\boldsymbol{s}}_k)$

, synthesised from each intra-station processing as input, the inter-station DFT beamforming can be written very similar to Equation (1), as

![]() $\boldsymbol{\sigma}_\ell$

denotes locations within the beam solid angle synthesised by the virtual station-sized telescopes pointed towards

$\boldsymbol{\sigma}_\ell$