1. Introduction

In this study, we propose a data-driven manifold learner of the flows for a large range of operating conditions demonstrated by a low-dimensional manifold for the actuated fluidic pinball. A cornerstone of theoretical fluid mechanics is the low-dimensional representation of coherent structures. This representation is a basis for understanding dynamic modelling, sensor-based flow estimation, model-based control and optimisation.

Many avenues of reduced-order representations have been proposed. Leonardo da Vinci presented coherent structures and vortices artfully as sketches in a time when the Navier–Stokes equations were not known (Marusic & Broomhall Reference Marusic and Broomhall2021). A quantitative pathway of low-dimensional modelling was started by Hermann von Helmholtz (Reference Helmholtz1858) with his vortex laws. Highlights are the von Kármán (Reference von Kármán1912) model of the vortex street and derivation of feedback wake stabilisation from the Föppl's vortex model by Protas (Reference Protas2004). Orr and Sommerfeld added a stability framework culminating in Stuart's mean-field theory (Stuart Reference Stuart1958) to incorporate non-linear Reynolds stress effects. The proper orthogonal decomposition (POD) has quickly become the major data-driven approach to compress snapshot data into a low-dimensional Galerkin expansion (Berkooz, Holmes & Lumley Reference Berkooz, Holmes and Lumley1993). Cluster-based modelling is an alternative data-driven flow compression, coarse-graining snapshot data into a small set of representative centroids (Kaiser et al. Reference Kaiser, Noack, Cordier, Spohn, Segond, Abel, Daviller, Östh, Krajnović and Niven2014). All these low-dimensional flow representations are the kinematic prelude to dynamic models, e.g. point vortex models, modal stability approaches (Theofilis Reference Theofilis2011), POD Galerkin models (Holmes et al. Reference Holmes, Lumley, Berkooz and Rowley2012) and cluster-based network models (Fernex, Noack & Semaan Reference Fernex, Noack and Semaan2021).

Data-driven manifold learners are a recent highly promising avenue of reduced-order representations. A two-dimensional manifold may, for instance, accurately resolve transient cylinder wakes: the manifold dimension is a tiny fraction of the number of vortices, POD modes and clusters required for a similar resolution. This manifold may be obtained by mean-field considerations (Noack et al. Reference Noack, Afanasiev, Morzyński, Tadmor and Thiele2003), by dynamic features (Loiseau, Noack & Brunton Reference Loiseau, Noack and Brunton2018), by locally linear embedding (Noack et al. Reference Noack, Ehlert, Nayeri and Morzynski2023) and by isometric mapping (ISOMAP, Farzamnik et al. Reference Farzamnik, Ianiro, Discetti, Deng, Oberleithner, Noack and Guerrero2023). In all these approaches, the manifolds have been determined for a single operating condition. Haller et al. (Reference Haller, Kaszás, Liu and Axås2023) emphasised a key challenge, namely, it remains ‘unclear if these manifolds are robust under parameter changes or under the addition of external, time-dependent forcing’. In this study, we thus aim to identify a manifold representing fluid flows for a rich set of control actions by incorporating the actuation commands.

We choose the fluidic pinball as our benchmark problem for the proposed manifold learning. This configuration has been proposed by Ishar et al. (Reference Ishar, Kaiser, Morzyński, Fernex, Semaan, Albers, Meysonnat, Schröder and Noack2019) as a geometrically simple two-dimensional configuration with a rich dynamic complexity under cylinder actuation and Reynolds number change. The transition scenario is described and modelled by Deng et al. (Reference Deng, Noack, Morzyński and Pastur2020) comprising a sequence of bifurcations before passing to chaotic behaviour. The feedback stabilisation achieved through cylinder rotation has been accomplished with many different approaches (Raibaudo et al. Reference Raibaudo, Zhong, Noack and Martinuzzi2020; Cornejo Maceda et al. Reference Cornejo Maceda, Li, Lusseyran, Morzyński and Noack2021; Li et al. Reference Li, Cui, Jia, Li, Yang, Morzyński and Noack2022; Wang et al. Reference Wang, Deng, Cornejo Maceda and Noack2023). Farzamnik et al. (Reference Farzamnik, Ianiro, Discetti, Deng, Oberleithner, Noack and Guerrero2023) have demonstrated that the unforced dynamics can be described on a low-dimensional manifold.

In this study, we illustrate the development of the control-oriented manifold, called an ‘actuation manifold’ in the following, for the fluidic pinball with three steady cylinder rotations as independent inputs. ISOMAP is chosen for its superior compression capability and manifold interpretability over other techniques such as POD (Farzamnik et al. Reference Farzamnik, Ianiro, Discetti, Deng, Oberleithner, Noack and Guerrero2023).

The rest of the article is structured as follows. In § 2 we introduce the dataset of the actuated fluidic pinball while in § 3 we discuss the methodology employed to distill the actuation manifold and to use it for flow-estimation purposes. The results are presented and discussed in § 4, before highlighting the main conclusions of the work in § 5.

2. Flow control dataset

The dataset is based on two-dimensional incompressible uniform flow past three cylinders of equal diameter ![]() $D$. Their vertices form an equilateral triangle with one vertex pointing upstream and its median aligned with the streamwise direction. The origin of the Cartesian reference system is located in the midpoint between the two rightmost cylinders. The streamwise and crosswise directions are indicated with

$D$. Their vertices form an equilateral triangle with one vertex pointing upstream and its median aligned with the streamwise direction. The origin of the Cartesian reference system is located in the midpoint between the two rightmost cylinders. The streamwise and crosswise directions are indicated with ![]() $x$ and

$x$ and ![]() $y$, respectively. The corresponding velocity components are indicated with

$y$, respectively. The corresponding velocity components are indicated with ![]() $u$ and

$u$ and ![]() $v$. The computational domain

$v$. The computational domain ![]() $\varOmega$ is bounded in

$\varOmega$ is bounded in ![]() $[-6,20]\times [-6,6]$, and the unstructured grid used has

$[-6,20]\times [-6,6]$, and the unstructured grid used has ![]() $4225$ triangles and

$4225$ triangles and ![]() $8633$ nodes. The snapshots are linearly interpolated on a structured grid with a spacing of

$8633$ nodes. The snapshots are linearly interpolated on a structured grid with a spacing of ![]() $0.05$ in both

$0.05$ in both ![]() $x$ and

$x$ and ![]() $y$ directions to maintain a format similar to the experimental data and for greater simplicity.

$y$ directions to maintain a format similar to the experimental data and for greater simplicity.

The Reynolds number (![]() $Re$), which is based on

$Re$), which is based on ![]() $D$ and the incoming velocity

$D$ and the incoming velocity ![]() $U_\infty$, is set to

$U_\infty$, is set to ![]() $30$ for all cases. A reference time scale

$30$ for all cases. A reference time scale ![]() $D/U_\infty$ is set as a convective unit (c.u.). The force coefficients

$D/U_\infty$ is set as a convective unit (c.u.). The force coefficients ![]() $C_L$ and

$C_L$ and ![]() $C_D$ are obtained by normalising respectively lift and the drag forces with

$C_D$ are obtained by normalising respectively lift and the drag forces with ![]() $\frac {1}{2} \rho U_\infty ^2 D$, being

$\frac {1}{2} \rho U_\infty ^2 D$, being ![]() $\rho$ the density of the fluid.

$\rho$ the density of the fluid.

The control is achieved with independent cylinder rotations included in the vector ![]() ${\boldsymbol {b} = (b_1, b_2, b_3)^{\rm T}}$, where

${\boldsymbol {b} = (b_1, b_2, b_3)^{\rm T}}$, where ![]() $b_1$,

$b_1$, ![]() $b_2$ and

$b_2$ and ![]() $b_3$ refer to the tangential speeds of the front, top and bottom cylinder, respectively. Here, ‘T’ denotes the transpose operator. Positive actuation values correspond to counter-clockwise rotations. Combinations of rotational speed ranging from

$b_3$ refer to the tangential speeds of the front, top and bottom cylinder, respectively. Here, ‘T’ denotes the transpose operator. Positive actuation values correspond to counter-clockwise rotations. Combinations of rotational speed ranging from ![]() $-3$ to

$-3$ to ![]() $3$ by steps of

$3$ by steps of ![]() $1$ (i.e.

$1$ (i.e. ![]() $-3, -2, \ldots, 3$) have been used, for a total of 343 configurations, including the unforced case. Each of these simulation is run for

$-3, -2, \ldots, 3$) have been used, for a total of 343 configurations, including the unforced case. Each of these simulation is run for ![]() $800$ c.u. to reach the steady state and snapshots with

$800$ c.u. to reach the steady state and snapshots with ![]() $1$ c.u. separation in the last

$1$ c.u. separation in the last ![]() $20$ c.u. for each steady state have been sampled. This time is chosen to approximately cover two shedding cycles of the unforced case. This dataset therefore consists of

$20$ c.u. for each steady state have been sampled. This time is chosen to approximately cover two shedding cycles of the unforced case. This dataset therefore consists of ![]() $20$ post-transient snapshots for each of the

$20$ post-transient snapshots for each of the ![]() $343$ combinations of actuations explored, for a total of

$343$ combinations of actuations explored, for a total of ![]() $M = 6860$ snapshots.

$M = 6860$ snapshots.

The actuations are condensed into a three-parameter vector, denoted as ![]() $\boldsymbol {p} = (p_1, p_2, p_3)^{\rm T}$, and referred to as Kiki parameters (Lin Reference Lin2021). These three parameters describe the three mechanisms of boat-tailing (

$\boldsymbol {p} = (p_1, p_2, p_3)^{\rm T}$, and referred to as Kiki parameters (Lin Reference Lin2021). These three parameters describe the three mechanisms of boat-tailing (![]() $p_1=(b_3-b_2)/2$), Magnus effect (

$p_1=(b_3-b_2)/2$), Magnus effect (![]() $p_2=b_1+b_2+b_3$) and forward stagnation point control (

$p_2=b_1+b_2+b_3$) and forward stagnation point control (![]() $p_3 = b_1$). The Kiki parameters are introduced for an easier physical interpretation of the actuation commands.

$p_3 = b_1$). The Kiki parameters are introduced for an easier physical interpretation of the actuation commands.

3. Methodology

A ![]() $n$-dimensional manifold is a topological space in which every point has a neighbourhood homeomorphic to Euclidean space

$n$-dimensional manifold is a topological space in which every point has a neighbourhood homeomorphic to Euclidean space ![]() $\mathbb {R}^n$. The approach proposed here involves employing an encoder–decoder strategy to acquire actuation manifolds, incorporating ISOMAP (Tenenbaum, de Silva & Langford Reference Tenenbaum, de Silva and Langford2000), neural networks and

$\mathbb {R}^n$. The approach proposed here involves employing an encoder–decoder strategy to acquire actuation manifolds, incorporating ISOMAP (Tenenbaum, de Silva & Langford Reference Tenenbaum, de Silva and Langford2000), neural networks and ![]() $k$-nearest-neighbour (

$k$-nearest-neighbour (![]() $k$NN) algorithms (Fix & Hodges Reference Fix and Hodges1989). The collected dataset undergoes ISOMAP to discern a low-dimensional embedding. Then, the flow reconstruction procedure involves mapping actuation and sensor information to the manifold coordinates, followed by interpolation among the

$k$NN) algorithms (Fix & Hodges Reference Fix and Hodges1989). The collected dataset undergoes ISOMAP to discern a low-dimensional embedding. Then, the flow reconstruction procedure involves mapping actuation and sensor information to the manifold coordinates, followed by interpolation among the ![]() $k$NNs to estimate the corresponding snapshot. An overview of this procedure is illustrated in figure 1 and detailed in the following.

$k$NNs to estimate the corresponding snapshot. An overview of this procedure is illustrated in figure 1 and detailed in the following.

Figure 1. Illustration of the methodology for actuation-manifold learning and full-state estimation. The diagram highlights key steps, from flow data collection to data-driven actuation manifold discovery (upper section). A neural network, incorporating actuation parameters (![]() $p_1$,

$p_1$, ![]() $p_2$ and

$p_2$ and ![]() $p_3$) and sensor information (

$p_3$) and sensor information (![]() $s_1$ and

$s_1$ and ![]() $s_2$), determines ISOMAP coordinates (

$s_2$), determines ISOMAP coordinates (![]() $\gamma _1,\gamma _2, \ldots, \gamma _n$) and a

$\gamma _1,\gamma _2, \ldots, \gamma _n$) and a ![]() $k$NN decoder is used for the full-state flow reconstruction.

$k$NN decoder is used for the full-state flow reconstruction.

If we consider our flow fields as vector functions ![]() $\boldsymbol {u}(\boldsymbol {x})=(u(x,y),v(x,y))$ belonging to a Hilbert space, the inner product between two snapshots

$\boldsymbol {u}(\boldsymbol {x})=(u(x,y),v(x,y))$ belonging to a Hilbert space, the inner product between two snapshots ![]() $\boldsymbol {u}_i$ and

$\boldsymbol {u}_i$ and ![]() $\boldsymbol {u}_j$ is defined by

$\boldsymbol {u}_j$ is defined by

where ‘![]() $\boldsymbol{\cdot}$’ refers to the scalar product in the two-dimensional vector space. Norms are canonically defined by

$\boldsymbol{\cdot}$’ refers to the scalar product in the two-dimensional vector space. Norms are canonically defined by ![]() $\|{\boldsymbol {u}}\| = \sqrt {\langle \boldsymbol {u},\boldsymbol {u}\rangle }$ and distances between snapshots are consistent with this norm.

$\|{\boldsymbol {u}}\| = \sqrt {\langle \boldsymbol {u},\boldsymbol {u}\rangle }$ and distances between snapshots are consistent with this norm.

For our study, we have a collection of ![]() $M$ flow field snapshots. In the encoder procedure, the ISOMAP algorithm necessitates determining the square matrix

$M$ flow field snapshots. In the encoder procedure, the ISOMAP algorithm necessitates determining the square matrix ![]() ${\boldsymbol{\mathsf{D}}_{\rm G}\in \mathbb {R}^{M \times M}}$ containing the geodesic distances among snapshots. Geodesic distances are approximated by selecting a set of neighbours for each snapshot to create a

${\boldsymbol{\mathsf{D}}_{\rm G}\in \mathbb {R}^{M \times M}}$ containing the geodesic distances among snapshots. Geodesic distances are approximated by selecting a set of neighbours for each snapshot to create a ![]() $k$NN graph. Within these neighbourhoods, snapshots are linked by paths whose lengths correspond to Hilbert-space distances. Geodesic distances between non-neighbours are then obtained as the shortest path through neighbouring snapshots in the

$k$NN graph. Within these neighbourhoods, snapshots are linked by paths whose lengths correspond to Hilbert-space distances. Geodesic distances between non-neighbours are then obtained as the shortest path through neighbouring snapshots in the ![]() $k$NN graph (Floyd Reference Floyd1962). At this stage, selecting the number of neighbours, which in the encoding part we denote by

$k$NN graph (Floyd Reference Floyd1962). At this stage, selecting the number of neighbours, which in the encoding part we denote by ![]() $k_e$, is crucial for creating the

$k_e$, is crucial for creating the ![]() $k$NN graph and thus approximating the geodesic distance matrix.

$k$NN graph and thus approximating the geodesic distance matrix.

Existing techniques are mostly empirical as in Samko, Marshall & Rosin (Reference Samko, Marshall and Rosin2006). The method proposed by Samko et al. (Reference Samko, Marshall and Rosin2006) was successfully used by Farzamnik et al. (Reference Farzamnik, Ianiro, Discetti, Deng, Oberleithner, Noack and Guerrero2023). However, due to the particularity of our dataset, as explained later in the article, standard empirical methods did not yield acceptable results. For this reason, we use the approach based on the Frobenius norm (denoted by ![]() $\|\cdot\|_F$) of the geodesic distance matrix

$\|\cdot\|_F$) of the geodesic distance matrix ![]() $\boldsymbol{\mathsf{D}}_{\rm G}$ (Shao & Huang Reference Shao and Huang2005) to determine

$\boldsymbol{\mathsf{D}}_{\rm G}$ (Shao & Huang Reference Shao and Huang2005) to determine ![]() $k_e$. Opting for a small

$k_e$. Opting for a small ![]() $k_e$ can lead to disconnected regions and undefined distances within the dataset. Thus, there exists a minimum value of

$k_e$ can lead to disconnected regions and undefined distances within the dataset. Thus, there exists a minimum value of ![]() $k_e$ ensuring that all snapshots are linked in the

$k_e$ ensuring that all snapshots are linked in the ![]() $k$NN graph. Starting from this minimum, increasing

$k$NN graph. Starting from this minimum, increasing ![]() $k_e$ results in more connections in the

$k_e$ results in more connections in the ![]() $k$NN graph, causing the Frobenius norm of the geodesic distance matrix to decrease monotonically. However, using an excessively large value may cause a short-circuiting within the

$k$NN graph, causing the Frobenius norm of the geodesic distance matrix to decrease monotonically. However, using an excessively large value may cause a short-circuiting within the ![]() $k$NN graph. Eventually, this yields an Euclidean representation, thus losing ISOMAP's capability to unfold nonlinear relationships within the dataset. Such short-circuiting is indicated by a sudden drop in

$k$NN graph. Eventually, this yields an Euclidean representation, thus losing ISOMAP's capability to unfold nonlinear relationships within the dataset. Such short-circuiting is indicated by a sudden drop in ![]() $\|{\boldsymbol{\mathsf{D}}}_{{\rm G}}\|_F$ with increasing

$\|{\boldsymbol{\mathsf{D}}}_{{\rm G}}\|_F$ with increasing ![]() $k_e$. Thus, the minimum and the value at which short-circuiting first occurs define a suitable range of

$k_e$. Thus, the minimum and the value at which short-circuiting first occurs define a suitable range of ![]() $k_e$ to be selected to approximate the geodesic distance matrix. The choice of

$k_e$ to be selected to approximate the geodesic distance matrix. The choice of ![]() $k_e$ within this range is generally based on how well the high-dimensional data are represented in the low-dimensional space. This is measured in terms of residual variance, as done by Kouropteva, Okun & Pietikäinen (Reference Kouropteva, Okun and Pietikäinen2002).

$k_e$ within this range is generally based on how well the high-dimensional data are represented in the low-dimensional space. This is measured in terms of residual variance, as done by Kouropteva, Okun & Pietikäinen (Reference Kouropteva, Okun and Pietikäinen2002).

After constructing the geodesic distance matrix ![]() $\boldsymbol{\mathsf{D}}_{{\rm G}}$, multidimensional scaling (Torgerson Reference Torgerson1952) is employed to construct the low-dimensional embedding

$\boldsymbol{\mathsf{D}}_{{\rm G}}$, multidimensional scaling (Torgerson Reference Torgerson1952) is employed to construct the low-dimensional embedding ![]() $\boldsymbol {\varGamma } = (\tilde {\gamma }_{ij})_{1\,\leqslant\, i\,\leqslant\, M,\, 1\,\leqslant\, j\,\leqslant\, n}$, whose dimension is

$\boldsymbol {\varGamma } = (\tilde {\gamma }_{ij})_{1\,\leqslant\, i\,\leqslant\, M,\, 1\,\leqslant\, j\,\leqslant\, n}$, whose dimension is ![]() $n$. The

$n$. The ![]() $j$th column of the matrix

$j$th column of the matrix ![]() $\boldsymbol {\varGamma }$ is the

$\boldsymbol {\varGamma }$ is the ![]() $j$th ISOMAP coordinate for all the

$j$th ISOMAP coordinate for all the ![]() $M$ flow field snapshots. We refer to the ISOMAP coordinates with

$M$ flow field snapshots. We refer to the ISOMAP coordinates with ![]() $\gamma _{j}$,

$\gamma _{j}$, ![]() $j = 1,\ldots,n$. Consequently,

$j = 1,\ldots,n$. Consequently, ![]() $\tilde {\gamma }_{ij} = \gamma _j(i)$,

$\tilde {\gamma }_{ij} = \gamma _j(i)$, ![]() $i = 1,\ldots, M$.

$i = 1,\ldots, M$.

More specifically, to obtain the low-dimensional embedding, we need to minimise the cost function:

where ![]() $\boldsymbol{\mathsf{B}}$ is the double-centred squared-geodesic distance matrix

$\boldsymbol{\mathsf{B}}$ is the double-centred squared-geodesic distance matrix ![]() $\boldsymbol{\mathsf{B}} = -1/2 \boldsymbol{\mathsf{H}}^T(\boldsymbol{\mathsf{D}}_{{\rm G}} \odot \boldsymbol{\mathsf{D}}_{\rm G})\boldsymbol{\mathsf{H}}$. The centring matrix

$\boldsymbol{\mathsf{B}} = -1/2 \boldsymbol{\mathsf{H}}^T(\boldsymbol{\mathsf{D}}_{{\rm G}} \odot \boldsymbol{\mathsf{D}}_{\rm G})\boldsymbol{\mathsf{H}}$. The centring matrix ![]() $\boldsymbol{\mathsf{H}}$ is defined as

$\boldsymbol{\mathsf{H}}$ is defined as ![]() $\boldsymbol{\mathsf{H}} = \boldsymbol{\mathsf{I}}_M - (1/M)\boldsymbol{\mathsf{1}}_M$. Here

$\boldsymbol{\mathsf{H}} = \boldsymbol{\mathsf{I}}_M - (1/M)\boldsymbol{\mathsf{1}}_M$. Here ![]() $\boldsymbol{\mathsf{I}}_M$ and

$\boldsymbol{\mathsf{I}}_M$ and ![]() $\boldsymbol{\mathsf{1}}_M$ are the identity matrix and the matrix with all unit components of size

$\boldsymbol{\mathsf{1}}_M$ are the identity matrix and the matrix with all unit components of size ![]() ${M\times M}$, respectively. The symbol

${M\times M}$, respectively. The symbol ![]() $\odot$ refers to the Hadamard product. The solution of the minimisation term in (3.2) involves the eigenvalues and eigenvectors arising from the decomposition of

$\odot$ refers to the Hadamard product. The solution of the minimisation term in (3.2) involves the eigenvalues and eigenvectors arising from the decomposition of ![]() $\boldsymbol{\mathsf{B}}$, specifically

$\boldsymbol{\mathsf{B}}$, specifically ![]() $\boldsymbol{\mathsf{B}} = \boldsymbol{\mathsf{V}} \boldsymbol {\varLambda } \boldsymbol{\mathsf{V}}^T$. For a given

$\boldsymbol{\mathsf{B}} = \boldsymbol{\mathsf{V}} \boldsymbol {\varLambda } \boldsymbol{\mathsf{V}}^T$. For a given ![]() $n$, we select the

$n$, we select the ![]() $n$ largest positive eigenvalues, i.e.

$n$ largest positive eigenvalues, i.e. ![]() $\boldsymbol {\varLambda }_n$, and the corresponding eigenvectors

$\boldsymbol {\varLambda }_n$, and the corresponding eigenvectors ![]() $\boldsymbol{\mathsf{V}}_n$. The coordinates are obtained by scaling these eigenvectors by the square roots of their corresponding eigenvalues. Thus, the coordinates are given by

$\boldsymbol{\mathsf{V}}_n$. The coordinates are obtained by scaling these eigenvectors by the square roots of their corresponding eigenvalues. Thus, the coordinates are given by ![]() $\boldsymbol {\varGamma } = \boldsymbol{\mathsf{V}}_n \boldsymbol {\varLambda }_n^{1/2}$, where

$\boldsymbol {\varGamma } = \boldsymbol{\mathsf{V}}_n \boldsymbol {\varLambda }_n^{1/2}$, where ![]() $\boldsymbol {\varLambda }_n^{1/2}$ is a diagonal matrix containing the square roots of the

$\boldsymbol {\varLambda }_n^{1/2}$ is a diagonal matrix containing the square roots of the ![]() $n$ largest eigenvalues.

$n$ largest eigenvalues.

In this instance, following the methodology outlined by Tenenbaum et al. (Reference Tenenbaum, de Silva and Langford2000), the evaluation of data representation quality within the ISOMAP technique is conducted through the residual variance ![]() $R_v = 1 - R^2(\text {vec}(\boldsymbol{\mathsf{D}}_{{\rm G}}), \text {vec}(\boldsymbol{\mathsf{D}}_{{\rm E}}))$. Here

$R_v = 1 - R^2(\text {vec}(\boldsymbol{\mathsf{D}}_{{\rm G}}), \text {vec}(\boldsymbol{\mathsf{D}}_{{\rm E}}))$. Here ![]() $R^2({\cdot },{\cdot })$ denotes the squared correlation coefficient, ‘vec’ is the vectorisation operator and

$R^2({\cdot },{\cdot })$ denotes the squared correlation coefficient, ‘vec’ is the vectorisation operator and ![]() $\boldsymbol{\mathsf{D}}_{{\rm E}}$ represents the matrix of the Euclidean distances between the low-dimensional representation of all the snapshots, retaining only the first

$\boldsymbol{\mathsf{D}}_{{\rm E}}$ represents the matrix of the Euclidean distances between the low-dimensional representation of all the snapshots, retaining only the first ![]() $n$ ISOMAP coordinates. The residual variance indicates the ability of the low-dimensional embedding to reproduce the geodesic distances in the high-dimensional space. The dimension

$n$ ISOMAP coordinates. The residual variance indicates the ability of the low-dimensional embedding to reproduce the geodesic distances in the high-dimensional space. The dimension ![]() $n$ of the low-dimensional embedding is typically determined by identifying an elbow in the residual variance plot.

$n$ of the low-dimensional embedding is typically determined by identifying an elbow in the residual variance plot.

After the actuation manifold identification, the objective is to perform a flow reconstruction from the knowledge of a reduced number of sensors and actuation parameters. This process is carried out in two steps. First, a regression model is trained to identify the low-dimensional representation of a snapshot. Specifically, we employ a fully connected multi-layer perceptron (MLP) to map the actuation parameters and sensor information to the ISOMAP coordinates. It is possible to employ as network input either the actuation or the Kiki parameters. In the following, we employ the Kiki parameters since we deem them more elegant, although this does not substantially affect the results, being the Kiki parameters a linear combination of the actuation parameters. Second, a decoding procedure is conducted through linear interpolation among a fixed number (denoted by ![]() $k_d$) of nearest neighbours, following the methodology established by Farzamnik et al. (Reference Farzamnik, Ianiro, Discetti, Deng, Oberleithner, Noack and Guerrero2023). An alternative to this mapping is presented in Appendix A, where a two-step

$k_d$) of nearest neighbours, following the methodology established by Farzamnik et al. (Reference Farzamnik, Ianiro, Discetti, Deng, Oberleithner, Noack and Guerrero2023). An alternative to this mapping is presented in Appendix A, where a two-step ![]() $k$NN regression with distance-weighted averaging is employed. It is important to clarify that the decoding step is applicable only for interpolation cases, meaning for actuation cases that fall within the limits explored during the dataset generation, i.e.

$k$NN regression with distance-weighted averaging is employed. It is important to clarify that the decoding step is applicable only for interpolation cases, meaning for actuation cases that fall within the limits explored during the dataset generation, i.e. ![]() $|b_i| < 3,\ \forall i = 1,2,3$, with

$|b_i| < 3,\ \forall i = 1,2,3$, with ![]() $|{\cdot }|$ being the absolute value.

$|{\cdot }|$ being the absolute value.

4. Results

4.1. Identification and physical interpretation of the low-dimensional embedding

The results of the encoding step are represented in figure 2. First, the neighbourhood size ![]() $k_e$ for the encoding must be determined. The Frobenius norm of the geodesic distance matrix

$k_e$ for the encoding must be determined. The Frobenius norm of the geodesic distance matrix ![]() $||\boldsymbol{\mathsf{D}}_{\rm G}||_F$ as a function of

$||\boldsymbol{\mathsf{D}}_{\rm G}||_F$ as a function of ![]() $k_e$ is reported in figure 2(a). A minimum

$k_e$ is reported in figure 2(a). A minimum ![]() $k_e = 40$ is required to ensure that all the neighbourhoods are connected. Figure 2(b) reports the percentage of connected snapshots in the

$k_e = 40$ is required to ensure that all the neighbourhoods are connected. Figure 2(b) reports the percentage of connected snapshots in the ![]() $k$NN graph. This percentage reaches

$k$NN graph. This percentage reaches ![]() $100\,\%$ for

$100\,\%$ for ![]() $k_e = 40$. Below this value, the manifold is divided into disjointed regions. By increasing

$k_e = 40$. Below this value, the manifold is divided into disjointed regions. By increasing ![]() $k_e$ starting from the minimum value, the Frobenius norm decreases due to improved connections between neighbourhoods. This also raises the probability of short-circuits, which drastically reduces

$k_e$ starting from the minimum value, the Frobenius norm decreases due to improved connections between neighbourhoods. This also raises the probability of short-circuits, which drastically reduces ![]() $||\boldsymbol{\mathsf{D}}_{\rm G}||_F$. Indeed, the first short-circuiting is identified for

$||\boldsymbol{\mathsf{D}}_{\rm G}||_F$. Indeed, the first short-circuiting is identified for ![]() ${k_e = 60}$. Choosing a value of

${k_e = 60}$. Choosing a value of ![]() $k_e$ within the range from the minimum value up to the point where the first significant drop in

$k_e$ within the range from the minimum value up to the point where the first significant drop in ![]() $||\boldsymbol{\mathsf{D}}_{\rm G}||_F$ occurs does not result in substantial alterations of the geometry of the manifold or the physical interpretation of its coordinates. Therefore, in this paper, we select the minimum value, that is

$||\boldsymbol{\mathsf{D}}_{\rm G}||_F$ occurs does not result in substantial alterations of the geometry of the manifold or the physical interpretation of its coordinates. Therefore, in this paper, we select the minimum value, that is ![]() $k_e = 40$.

$k_e = 40$.

Figure 2. (a) The Frobenius norm of the geodesic distance matrix plotted against the number of neighbours employed in Floyd's algorithm. (b) The ratio between the connected snapshots and the total number of snapshots as ![]() $k_e$ increases. Results of the manifold obtained for

$k_e$ increases. Results of the manifold obtained for ![]() $k_e = 40$ are presented in panels (c,d). The former illustrates the residual variance of the first

$k_e = 40$ are presented in panels (c,d). The former illustrates the residual variance of the first ![]() $10$ ISOMAP coordinates, whereas the latter showcases all possible manifold sections identified by the first five coordinates.

$10$ ISOMAP coordinates, whereas the latter showcases all possible manifold sections identified by the first five coordinates.

Then the residual variance is used to search for the true dimensionality of the dataset. Employing ![]() $n = 5$ coordinates leads to a residual variance of less than

$n = 5$ coordinates leads to a residual variance of less than ![]() $10\,\%$, thus

$10\,\%$, thus ![]() $n = 5$ dimensions are deemed sufficient to describe the manifold, as shown in figure 2(c). Further increase in the number of dimensions provides only marginal changes in the residual variance.

$n = 5$ dimensions are deemed sufficient to describe the manifold, as shown in figure 2(c). Further increase in the number of dimensions provides only marginal changes in the residual variance.

The representation of the five-dimensional embedding is undertaken in figure 2(d) in the form of two-dimensional projections on the ![]() $\gamma _i-\gamma _j$ planes with

$\gamma _i-\gamma _j$ planes with ![]() $i,j = 1,\ldots,5$ and

$i,j = 1,\ldots,5$ and ![]() $i < j$. A first visual observation of the projections highlights interesting physical interpretations. The projection on the

$i < j$. A first visual observation of the projections highlights interesting physical interpretations. The projection on the ![]() $\gamma _3-\gamma _4$ plane unveils a circular shape, suggesting that these two coordinates describe a periodic feature, i.e. the vortex shedding in the wake of the pinball. The fact that the circle is full suggests that different control actions result in an enhanced or attenuated vortex shedding. Each steady state describes a circle in the

$\gamma _3-\gamma _4$ plane unveils a circular shape, suggesting that these two coordinates describe a periodic feature, i.e. the vortex shedding in the wake of the pinball. The fact that the circle is full suggests that different control actions result in an enhanced or attenuated vortex shedding. Each steady state describes a circle in the ![]() $\gamma _3-\gamma _4$ plane. The radius of each circle explains the amplitude of vortex shedding, while the angular position provides information about the phase. Cases that do not exhibit vortex shedding collapse into points where

$\gamma _3-\gamma _4$ plane. The radius of each circle explains the amplitude of vortex shedding, while the angular position provides information about the phase. Cases that do not exhibit vortex shedding collapse into points where ![]() $\gamma _3, \gamma _4 \approx 0$. This is evident from the observation of the projections on the planes

$\gamma _3, \gamma _4 \approx 0$. This is evident from the observation of the projections on the planes ![]() $\gamma _1-\gamma _3$ and

$\gamma _1-\gamma _3$ and ![]() $\gamma _1-\gamma _4$, both returning a champagne coupe shape, suggesting that smaller values of

$\gamma _1-\gamma _4$, both returning a champagne coupe shape, suggesting that smaller values of ![]() $\gamma _1$ are a prerogative of the cases with limit cycle of smaller amplitude.

$\gamma _1$ are a prerogative of the cases with limit cycle of smaller amplitude.

This feature also suggests that ![]() $\gamma _1$ is correlated with the boat tailing parameter

$\gamma _1$ is correlated with the boat tailing parameter ![]() $p_1$ and thus with the drag coefficient

$p_1$ and thus with the drag coefficient ![]() $C_D$, as visualised in figure 3. This plot also shows a correlation between

$C_D$, as visualised in figure 3. This plot also shows a correlation between ![]() $\gamma _2$, the Magnus parameter

$\gamma _2$, the Magnus parameter ![]() $p_2$ and the lift coefficient

$p_2$ and the lift coefficient ![]() $C_L$. The fifth coordinate of the low-dimensional embedding

$C_L$. The fifth coordinate of the low-dimensional embedding ![]() $\gamma _5$, on the other hand, appears to be correlated with the stagnation point parameter

$\gamma _5$, on the other hand, appears to be correlated with the stagnation point parameter ![]() $p_3$ and partially explains the lift produced by the pinball. Intriguingly, all ISOMAP coordinates are physically meaningful and allow us to discover the three Kiki parameters without human input.

$p_3$ and partially explains the lift produced by the pinball. Intriguingly, all ISOMAP coordinates are physically meaningful and allow us to discover the three Kiki parameters without human input.

Figure 3. Three-dimensional projections of the manifold colour-coded with actuation parameters and forces coefficients. The first (boat-tailing) and second (Magnus) actuation parameters are plotted against lift and drag coefficients to understand physical control mechanisms.

Another interesting physical interpretation arises observing the manifold section ![]() ${\gamma _1-\gamma _2}$, as shown in figure 4. This provides insights into the horizontal symmetry of the data. In the figure, semicircles repeat, increasing in number as

${\gamma _1-\gamma _2}$, as shown in figure 4. This provides insights into the horizontal symmetry of the data. In the figure, semicircles repeat, increasing in number as ![]() $\gamma _1$ increases. Within each of them,

$\gamma _1$ increases. Within each of them, ![]() $b_1$ varies from

$b_1$ varies from ![]() $-3$ to

$-3$ to ![]() $3$, whereas

$3$, whereas ![]() $b_2$ and

$b_2$ and ![]() $b_3$ remain fixed. The branches symmetrically positioned with respect to the axis

$b_3$ remain fixed. The branches symmetrically positioned with respect to the axis ![]() $\gamma _2 = 0$ are associated with symmetric actuation

$\gamma _2 = 0$ are associated with symmetric actuation ![]() $b_2$ and

$b_2$ and ![]() $b_3$.

$b_3$.

Figure 4. At the top, representation of the manifold section identified by the coordinates ![]() $\gamma _1$ and

$\gamma _1$ and ![]() $\gamma _2$ colour coded with

$\gamma _2$ colour coded with ![]() $b_1$ (a),

$b_1$ (a), ![]() $b_2$ (b) and

$b_2$ (b) and ![]() $b_3$ (c). At the bottom, a diagram explaining how the coordinate

$b_3$ (c). At the bottom, a diagram explaining how the coordinate ![]() $\gamma _2$ provides indications of horizontal symmetry in the flow field.

$\gamma _2$ provides indications of horizontal symmetry in the flow field.

The low-dimensional embedding, being the result of the eigenvector decomposition of the double-centred squared-geodesic distance matrix, is made of orthogonal vectors. While this might represent a disadvantage to autoencoders (Otto & Rowley Reference Otto and Rowley2022), this also allows projecting the snapshots on this basis and obtaining spatial modes that can be used to corroborate the physical interpretation of the manifold coordinates. The ![]() $j$th spatial mode

$j$th spatial mode ![]() $\boldsymbol {\phi }_j, \, j = 1,\ldots, n$ is a linear combination of the snapshots

$\boldsymbol {\phi }_j, \, j = 1,\ldots, n$ is a linear combination of the snapshots ![]() $\boldsymbol {u}_i$, i.e.

$\boldsymbol {u}_i$, i.e. ![]() $({\sum _{i=1}^{M}\gamma _{ij}^2})^{1/2}\boldsymbol {\phi }_j= \sum _{i=1}^{M} \tilde {\gamma }_{ij} \boldsymbol {u}_i$.

$({\sum _{i=1}^{M}\gamma _{ij}^2})^{1/2}\boldsymbol {\phi }_j= \sum _{i=1}^{M} \tilde {\gamma }_{ij} \boldsymbol {u}_i$.

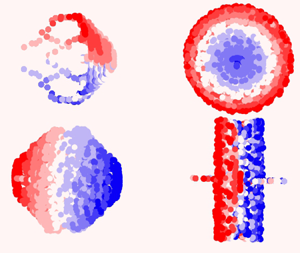

The first five ISOMAP modes are visualised in figure 5 with a line integral convolution (LIC; Forssell & Cohen Reference Forssell and Cohen1995) plot superimposed on a velocity magnitude contour plot. The visual observation of the modes confirms the interpretation of the physical meaning of the coordinates of the low-dimensional embedding. Three modes, namely ![]() $\boldsymbol {\phi }_1$,

$\boldsymbol {\phi }_1$, ![]() $\boldsymbol {\phi }_2$ and

$\boldsymbol {\phi }_2$ and ![]() $\boldsymbol {\phi }_5$ are representative of the actuation parameters. The first ISOMAP mode is characterised by the presence of a jet (wake) downstream of the pinball due to boat-tailing (base bleeding). The second ISOMAP mode represents a circulating motion around the pinball, responsible for positive or negative lift, depending on the circulation direction. The fifth mode is characterised by a net circulation around the front cylinder, determining the position of the front cylinder stagnation point, if added to the mean field. The two spatial modes

$\boldsymbol {\phi }_5$ are representative of the actuation parameters. The first ISOMAP mode is characterised by the presence of a jet (wake) downstream of the pinball due to boat-tailing (base bleeding). The second ISOMAP mode represents a circulating motion around the pinball, responsible for positive or negative lift, depending on the circulation direction. The fifth mode is characterised by a net circulation around the front cylinder, determining the position of the front cylinder stagnation point, if added to the mean field. The two spatial modes ![]() $\boldsymbol {\phi }_3$ and

$\boldsymbol {\phi }_3$ and ![]() $\boldsymbol {\phi }_4$, instead, have the classical aspect of vortex shedding modes. Together, they describe the wake response to actuation parameters (far field), providing information on the intensity and phase of vortex shedding.

$\boldsymbol {\phi }_4$, instead, have the classical aspect of vortex shedding modes. Together, they describe the wake response to actuation parameters (far field), providing information on the intensity and phase of vortex shedding.

Figure 5. LIC representations of the normalised actuation modes. The shadowed contour represents the local velocity magnitude of the pseudomodes.

4.2. Flow estimation

For the identification of the low-dimensional representation of a snapshot, we employ a fully connected MLP. The mapping is done having as inputs the Kiki parameters and two sensors. The mapping also includes sensor information because each actuation vector is associated with a limit cycle, which in our case is described by ![]() $20$ post-transient snapshots. Therefore, the actuation vector provides comprehensive knowledge of the coordinates representing the near field (

$20$ post-transient snapshots. Therefore, the actuation vector provides comprehensive knowledge of the coordinates representing the near field (![]() $\gamma _1$,

$\gamma _1$, ![]() $\gamma _2$ and

$\gamma _2$ and ![]() $\gamma _5$). Far-field coordinates (

$\gamma _5$). Far-field coordinates (![]() $\gamma _3$ and

$\gamma _3$ and ![]() $\gamma _4$) identification requires information about the phase of vortex shedding, which is provided by the sensors. Two different alternatives are proposed here. In the first, we utilise the lift coefficient and its one-quarter mean shedding period delay (we refer to this case with MLP

$\gamma _4$) identification requires information about the phase of vortex shedding, which is provided by the sensors. Two different alternatives are proposed here. In the first, we utilise the lift coefficient and its one-quarter mean shedding period delay (we refer to this case with MLP![]() $_1$). In the second, crosswise components of velocity are measured at two positions in the wake, specifically at points

$_1$). In the second, crosswise components of velocity are measured at two positions in the wake, specifically at points ![]() $\boldsymbol {x}_1 = (7,1.25)$ and

$\boldsymbol {x}_1 = (7,1.25)$ and ![]() $\boldsymbol {x}_2 = (10,1.25)$ (MLP

$\boldsymbol {x}_2 = (10,1.25)$ (MLP![]() $_2$). The sensors are located in the crosswise position at the edge of the shear layer of the unforced case, i.e. at the top of the upper cylinder. The streamwise location is selected such that the vortex shedding is already developed. The streamwise separation between the two sensors is such that the flight time between them is approximately one-quarter of the shedding period in the unforced case. This ensures that the sensor data collect relevant information for the flow reconstruction, even though a systematic position optimisation has not been performed. The characteristics of the employed networks are summarised in table 1. The training of the neural networks is performed with the dataset used to construct the data-driven manifold randomly removing all snapshots related to

$_2$). The sensors are located in the crosswise position at the edge of the shear layer of the unforced case, i.e. at the top of the upper cylinder. The streamwise location is selected such that the vortex shedding is already developed. The streamwise separation between the two sensors is such that the flight time between them is approximately one-quarter of the shedding period in the unforced case. This ensures that the sensor data collect relevant information for the flow reconstruction, even though a systematic position optimisation has not been performed. The characteristics of the employed networks are summarised in table 1. The training of the neural networks is performed with the dataset used to construct the data-driven manifold randomly removing all snapshots related to ![]() $20\,\%$ of the actuation cases and using the remaining snapshots for validation.

$20\,\%$ of the actuation cases and using the remaining snapshots for validation.

Table 1. Structure of the neural networks used for latent coordinate identification.

To test the accuracy of flow estimation, we use ![]() $22$ additional simulations with randomly selected actuation, not present in the training nor the validation dataset. As done for the training dataset, the last

$22$ additional simulations with randomly selected actuation, not present in the training nor the validation dataset. As done for the training dataset, the last ![]() $20$ c.u. are sampled every

$20$ c.u. are sampled every ![]() $1$ c.u. for a total of

$1$ c.u. for a total of ![]() $440$ snapshots. The neural networks are used to identify the low-dimensional representation of the snapshots in the test dataset and then the

$440$ snapshots. The neural networks are used to identify the low-dimensional representation of the snapshots in the test dataset and then the ![]() $k$NN decoder is applied to reconstruct the full state. In the decoding phase, we select

$k$NN decoder is applied to reconstruct the full state. In the decoding phase, we select ![]() $k_d = 220$.

$k_d = 220$.

The value of ![]() $k_d$ was chosen by performing a parametric study. In particular, we selected the

$k_d$ was chosen by performing a parametric study. In particular, we selected the ![]() $k_d$ to minimise the reconstruction error of the snapshots in the validation dataset. The selected

$k_d$ to minimise the reconstruction error of the snapshots in the validation dataset. The selected ![]() $k_d$ is considerably higher than the

$k_d$ is considerably higher than the ![]() $k_e$. A reason for this result lies in the particular topology of the present dataset. For some specific control actions, vortex shedding is completely suppressed, resulting in a stationary post-transient solution. The cases with no shedding have

$k_e$. A reason for this result lies in the particular topology of the present dataset. For some specific control actions, vortex shedding is completely suppressed, resulting in a stationary post-transient solution. The cases with no shedding have ![]() $20$ snapshots sampled in the post-transient identical to each other. Likewise, their representation in low-dimensional space is identical. When decoding using the

$20$ snapshots sampled in the post-transient identical to each other. Likewise, their representation in low-dimensional space is identical. When decoding using the ![]() $k$NN interpolation, for snapshots close to those without shedding, considering a low

$k$NN interpolation, for snapshots close to those without shedding, considering a low ![]() $k_d$ is equivalent to considering a small number of directions for the estimation of the gradients, which is not sufficient and results in a reconstruction with considerable error. To get a practical idea, considering

$k_d$ is equivalent to considering a small number of directions for the estimation of the gradients, which is not sufficient and results in a reconstruction with considerable error. To get a practical idea, considering ![]() $k_d<20$ would be equivalent to considering only one direction, which is insufficient for decoding. Such a dataset topology greatly complicates the choice of

$k_d<20$ would be equivalent to considering only one direction, which is insufficient for decoding. Such a dataset topology greatly complicates the choice of ![]() $k_d$, suggesting the need to identify a

$k_d$, suggesting the need to identify a ![]() $k_d$ that is locally optimal. Such a choice, however, goes beyond the scope of the present research and is deferred to future work.

$k_d$ that is locally optimal. Such a choice, however, goes beyond the scope of the present research and is deferred to future work.

The accuracy of the estimation between the true (![]() $\boldsymbol {u}_i$) and estimated (

$\boldsymbol {u}_i$) and estimated (![]() $\hat {\boldsymbol {u}}_i$) snapshots is quantified in terms of cosine similarity

$\hat {\boldsymbol {u}}_i$) snapshots is quantified in terms of cosine similarity ![]() $S_G(\boldsymbol {u}_i,\hat {\boldsymbol {u}}_i)= \langle \boldsymbol {u}_i,\hat {\boldsymbol {u}}_i\rangle / (\|{\boldsymbol {u}}_i\|\|\hat {\boldsymbol {u}}_i\|)$. Results can be observed in figure 6, the knowledge of the manifold enables a full state estimation with few sensors and minimal reconstruction error. When performing the flow reconstruction using MLP

$S_G(\boldsymbol {u}_i,\hat {\boldsymbol {u}}_i)= \langle \boldsymbol {u}_i,\hat {\boldsymbol {u}}_i\rangle / (\|{\boldsymbol {u}}_i\|\|\hat {\boldsymbol {u}}_i\|)$. Results can be observed in figure 6, the knowledge of the manifold enables a full state estimation with few sensors and minimal reconstruction error. When performing the flow reconstruction using MLP![]() $_1$, the median cosine similarity is

$_1$, the median cosine similarity is ![]() $0.9977$, corresponding to a median root-mean-square error (RMSE) of

$0.9977$, corresponding to a median root-mean-square error (RMSE) of ![]() $0.0512$. Using MLP

$0.0512$. Using MLP![]() $_2$, instead, the median cosine similarity is

$_2$, instead, the median cosine similarity is ![]() $0.9990$ (

$0.9990$ (![]() ${\rm RMSE} = 0.0336$).

${\rm RMSE} = 0.0336$).

Figure 6. Cosine similarity between real and reconstructed snapshots for all ![]() $22$ actuation cases in the test dataset. Reconstruction error is plotted against the distance of each actuation case (

$22$ actuation cases in the test dataset. Reconstruction error is plotted against the distance of each actuation case (![]() $\boldsymbol {b}_i$) to its nearest neighbour in the training dataset

$\boldsymbol {b}_i$) to its nearest neighbour in the training dataset ![]() $\boldsymbol {b}_{i(1)}$. In the centre, a comparison between the true snapshot and its estimation is presented for the median error case. Figure presented with LIC and color-coded by velocity magnitude. The number of neighbours is fixed to

$\boldsymbol {b}_{i(1)}$. In the centre, a comparison between the true snapshot and its estimation is presented for the median error case. Figure presented with LIC and color-coded by velocity magnitude. The number of neighbours is fixed to ![]() $k_d = 220$. Results are shown for MLP

$k_d = 220$. Results are shown for MLP![]() $_1$ (a) and MLP

$_1$ (a) and MLP![]() $_2$ (b).

$_2$ (b).

5. Conclusions

In this study, we have addressed a challenge of reduced-order modelling: accurate low-dimensional representations for a large range of operating conditions. The flow data are compressed in a manifold using ISOMAP as encoder and ![]() $k$NN as decoder. The methodology is demonstrated for the fluidic pinball as an established benchmark problem of modelling and control. The cylinder rotations are used as three independent steady control parameters. The starting point of the reduced-order modelling is a dataset with 20 statistically representative post-transient snapshots at

$k$NN as decoder. The methodology is demonstrated for the fluidic pinball as an established benchmark problem of modelling and control. The cylinder rotations are used as three independent steady control parameters. The starting point of the reduced-order modelling is a dataset with 20 statistically representative post-transient snapshots at ![]() $Re=30$ for

$Re=30$ for ![]() $343$ sets of cylinder rotations covering uniformly a box with circumferential velocities between

$343$ sets of cylinder rotations covering uniformly a box with circumferential velocities between ![]() $-3$ and

$-3$ and ![]() $3$.

$3$.

The ISOMAP manifold describes all snapshots with ![]() $5$ latent variables and few per cent representation errors. To the best of the authors’ knowledge, this is the first demonstration of data-driven manifold learning for a flow under multiple input control. Intriguingly, all latent variables are aligned with clear physical meanings. Three coordinates correspond to near-field actuation effects. More precisely, these coordinates are strongly aligned with the Kiki parameters

$5$ latent variables and few per cent representation errors. To the best of the authors’ knowledge, this is the first demonstration of data-driven manifold learning for a flow under multiple input control. Intriguingly, all latent variables are aligned with clear physical meanings. Three coordinates correspond to near-field actuation effects. More precisely, these coordinates are strongly aligned with the Kiki parameters ![]() $\boldsymbol {p}$ introduced by Lin (Reference Lin2021), describing (i) the level of boat-tailing (base bleeding)

$\boldsymbol {p}$ introduced by Lin (Reference Lin2021), describing (i) the level of boat-tailing (base bleeding) ![]() $p_1$ leading to drag reduction (increase), (ii) the strength of the Magnus effect

$p_1$ leading to drag reduction (increase), (ii) the strength of the Magnus effect ![]() $p_2$ leading to a steady lift and characterising (iii) the forward stagnation point

$p_2$ leading to a steady lift and characterising (iii) the forward stagnation point ![]() $p_3$. Two further coordinates correspond to the wake response to actuation, i.e. the amplitude and phase of vortex shedding. The distillation of physically meaningful parameters from a fully automated manifold is surprising and inspiring (see figure 1).

$p_3$. Two further coordinates correspond to the wake response to actuation, i.e. the amplitude and phase of vortex shedding. The distillation of physically meaningful parameters from a fully automated manifold is surprising and inspiring (see figure 1).

Our low-rank model is a key enabler for flow estimation with a minimum number of sensors. It is possible to estimate the full flow state with small reconstruction errors just by knowing the actuation parameters and employing two additional measurements, namely the aerodynamic forces or the flow velocity in two points of the wake.

We emphasise that the low-dimensional characterisation of post-transient dynamics as a function of three actuations constitutes a modelling challenge and encourages numerous further applications, e.g. vortex-induced vibration of a cylinder at different spring stiffnesses and cylinder masses, aerodynamic flutter of an elastic airfoil with different wing elasticities, combustion instabilities for different reaction parameters, just to name a few. A low-dimensional manifold representation can be utilised for understanding, estimation, prediction and model-based control. In summary, the presented results can be expected to inspire a large range of applications and complementary manifold learning methods.

Acknowledgements

We thank Q. ‘Kiki’ Lin for laying the groundwork of this study with her pioneering master thesis.

Funding

This work is supported by the National Science Foundation of China (NSFC) through grants 12172109 and 12302293, by the Guangdong Basic and Applied Basic Research Foundation under grant 2022A1515011492, and by the Shenzhen Science and Technology Program under grants JCYJ20220531095605012, KJZD20230923115210021 and 29853MKCJ202300205, by the projects PITUFLOW-CM-UC3M, funded by the call ‘Programa de apoyo a la realización de proyectos interdisciplinares de I+D para jóvenes investigadores de la Universidad Carlos III de Madrid 2019-2020’ under the frame of the Convenio Plurianual Comunidad de Madrid, Universidad Carlos III de Madrid and PREDATOR-CM-UC3M funded by the call ‘Estímulo a la Investigación de Jóvenes Doctores/as’ within the frame of the Convenio Plurianual CM-UC3M and the V PRICIT (V Regional Plan for Scientific Research and Technological Innovation), by the project ARTURO, ref. PID2019-109717RB-I00/AEI/10.13039/501100011033, funded by the Spanish State Research Agency, by the project ACCREDITATION (Grant No TED2021-131453B-I00), funded by MCIN/AEI/ 10.13039/501100011033 and by the ‘European Union NextGenerationEU/PRTR’, by the funding under ‘Orden 3789/2022, del Vicepresidente, Consejero de Educación y Universidades, por la que se convocan ayudas para la contratación de personal investigador predoctoral en formación para el año 2022’, by the European Research Council (ERC) under the European Union's Horizon H2020 research and innovation programme (grant agreement No 949085) and by the project EXCALIBUR (Grant No PID2022-138314NB-I00), funded by MCIU/AEI/ 10.13039/501100011033 and by ‘ERDF A way of making Europe’. Funding for APC: Universidad Carlos III de Madrid (Agreement CRUE-Madroño 2024).

Declaration of interests

The authors report no conflict of interest.

Data availability statement

The code used in this work is made available at: https://github.com/Lmarra1/Actuation-manifold-from-snapshot-data.git. The dataset is openly available in Zenodo, accessible through the following link: https://zenodo.org/doi/10.5281/zenodo.12802191.

Appendix A. Comparison between neural network and  $k$NN performances in the decoding step

$k$NN performances in the decoding step

In this appendix, we propose an alternative to the decoding as illustrated in figure 1. In this approach, the decoding is carried out only utilising a ![]() $k$NN regression with weighted averaging with the inverse of distances between neighbours. We transition from actuation parameters and sensor data to the low-dimensional representation of a snapshot using an initial

$k$NN regression with weighted averaging with the inverse of distances between neighbours. We transition from actuation parameters and sensor data to the low-dimensional representation of a snapshot using an initial ![]() $k$NN regression, with a number of neighbours indicated as

$k$NN regression, with a number of neighbours indicated as ![]() $k_{d_1}$. Subsequently, we move from the manifold coordinates to the full state reconstruction using another

$k_{d_1}$. Subsequently, we move from the manifold coordinates to the full state reconstruction using another ![]() $k$NN regression, with a number of neighbours indicated as

$k$NN regression, with a number of neighbours indicated as ![]() $k_{d_2}$.

$k_{d_2}$.

Consider the estimation of a snapshot ![]() $\boldsymbol {u}_i$ based on the actuation vector and sensor information in the vector

$\boldsymbol {u}_i$ based on the actuation vector and sensor information in the vector ![]() $\boldsymbol {z}_i = (p_1, p_2, p_3, s_1, s_2)^{\rm T}$. We estimate the low-dimensional representation of this snapshot, which we denoted as

$\boldsymbol {z}_i = (p_1, p_2, p_3, s_1, s_2)^{\rm T}$. We estimate the low-dimensional representation of this snapshot, which we denoted as ![]() $\hat {\boldsymbol {y}}_i$, utilising the first

$\hat {\boldsymbol {y}}_i$, utilising the first ![]() $k$NN regression. Therefore, starting from

$k$NN regression. Therefore, starting from ![]() $\boldsymbol {z}_i$, we consider its

$\boldsymbol {z}_i$, we consider its ![]() $k_{d_1}$-nearest vectors in the dataset, denoted as

$k_{d_1}$-nearest vectors in the dataset, denoted as ![]() $\boldsymbol {z}_{i(1)}, \ldots, \boldsymbol {z}_{i(k_{d_1})}$, and their counterparts in the low-dimensional embedding

$\boldsymbol {z}_{i(1)}, \ldots, \boldsymbol {z}_{i(k_{d_1})}$, and their counterparts in the low-dimensional embedding ![]() $\boldsymbol {y}_{i(1)}, \ldots, \boldsymbol {y}_{i(k_{d_1})}$ to derive

$\boldsymbol {y}_{i(1)}, \ldots, \boldsymbol {y}_{i(k_{d_1})}$ to derive ![]() $\hat {\boldsymbol {y}}_i$ as follows:

$\hat {\boldsymbol {y}}_i$ as follows:

\begin{equation} \hat{\boldsymbol{y}}_i = \frac{\sum_{j=1}^{k_{d_1}} \boldsymbol{y}_{i(j)}||\boldsymbol{z}_{i(j)} - \boldsymbol{z}_i||^{{-}1}}{\sum_{j=1}^{k_{d_1}} ||\boldsymbol{z}_{i(j)} - \boldsymbol{z}_i||^{{-}1}}. \end{equation}

\begin{equation} \hat{\boldsymbol{y}}_i = \frac{\sum_{j=1}^{k_{d_1}} \boldsymbol{y}_{i(j)}||\boldsymbol{z}_{i(j)} - \boldsymbol{z}_i||^{{-}1}}{\sum_{j=1}^{k_{d_1}} ||\boldsymbol{z}_{i(j)} - \boldsymbol{z}_i||^{{-}1}}. \end{equation} Subsequently, the same procedure is applied to estimate the snapshot, denoted as ![]() $\hat {\boldsymbol {u}}_i$. We consider the

$\hat {\boldsymbol {u}}_i$. We consider the ![]() $k_{d_2}$ nearest vectors to

$k_{d_2}$ nearest vectors to ![]() $\hat {\boldsymbol {y}}_i$, labelled as

$\hat {\boldsymbol {y}}_i$, labelled as ![]() $\hat {\boldsymbol {y}}_{i(1)}, \ldots, \hat {\boldsymbol {y}}_{i(k_{d_2})}$, and their counterparts in the high-dimensional space

$\hat {\boldsymbol {y}}_{i(1)}, \ldots, \hat {\boldsymbol {y}}_{i(k_{d_2})}$, and their counterparts in the high-dimensional space ![]() $\boldsymbol {u}_{i(1)}, \ldots, \boldsymbol {u}_{i(k_{d_2})}$ to obtain the estimate as follows:

$\boldsymbol {u}_{i(1)}, \ldots, \boldsymbol {u}_{i(k_{d_2})}$ to obtain the estimate as follows:

\begin{equation} \hat{\boldsymbol{u}}_i = \frac{\sum_{j=1}^{k_{d_2}} \boldsymbol{u}_{i(j)}||\hat{\boldsymbol{y}}_{i(j)} - \hat{\boldsymbol{y}}_i||^{{-}1}}{\sum_{j=1}^{k_{d_2}} ||\hat{\boldsymbol{y}}_{i(j)} - \hat{\boldsymbol{y}}_i||^{{-}1}}. \end{equation}

\begin{equation} \hat{\boldsymbol{u}}_i = \frac{\sum_{j=1}^{k_{d_2}} \boldsymbol{u}_{i(j)}||\hat{\boldsymbol{y}}_{i(j)} - \hat{\boldsymbol{y}}_i||^{{-}1}}{\sum_{j=1}^{k_{d_2}} ||\hat{\boldsymbol{y}}_{i(j)} - \hat{\boldsymbol{y}}_i||^{{-}1}}. \end{equation} For the selection of ![]() $k_{d_1}$ and

$k_{d_1}$ and ![]() $k_{d_2}$ we have to solve the minimisation problems in (A3):

$k_{d_2}$ we have to solve the minimisation problems in (A3):

\begin{equation} \begin{cases}

\underset{k_{d_1}}{{\rm argmin}}\sum\limits_{i=1}^{M}\left(

\hat{\boldsymbol{y}}_i^{({-}m), k_{d_1}} - \boldsymbol{y}_i

\right)^2,\\ \underset{k_{d_2}}{{\rm

argmin}}\sum\limits_{i=1}^{M}\left(

\hat{\boldsymbol{u}}_i^{({-}m), k_{d_2}} - \boldsymbol{u}_i

\right)^2, \end{cases}

\end{equation}

\begin{equation} \begin{cases}

\underset{k_{d_1}}{{\rm argmin}}\sum\limits_{i=1}^{M}\left(

\hat{\boldsymbol{y}}_i^{({-}m), k_{d_1}} - \boldsymbol{y}_i

\right)^2,\\ \underset{k_{d_2}}{{\rm

argmin}}\sum\limits_{i=1}^{M}\left(

\hat{\boldsymbol{u}}_i^{({-}m), k_{d_2}} - \boldsymbol{u}_i

\right)^2, \end{cases}

\end{equation}

where ![]() $\hat {\boldsymbol {y}}_i^{(-m), k_{d_1}}$ and

$\hat {\boldsymbol {y}}_i^{(-m), k_{d_1}}$ and ![]() $\hat {\boldsymbol {u}}_i^{(-m), k_{d_2}}$ are the estimations of

$\hat {\boldsymbol {u}}_i^{(-m), k_{d_2}}$ are the estimations of ![]() $\boldsymbol {y}_i$ and

$\boldsymbol {y}_i$ and ![]() $\boldsymbol {u}_i$ using the

$\boldsymbol {u}_i$ using the ![]() $k$NN regression with

$k$NN regression with ![]() $k_{d_1}$ and

$k_{d_1}$ and ![]() $k_{d_2}$ neighbours, respectively, and using as the training dataset all snapshots except the

$k_{d_2}$ neighbours, respectively, and using as the training dataset all snapshots except the ![]() $m = 20$ snapshots of the limit cycle to which the

$m = 20$ snapshots of the limit cycle to which the ![]() $i$th snapshot, being estimated, belongs. The procedure follow the same principles of the leave-one-out cross-validation (Wand & Jones Reference Wand and Jones1994), but instead of eliminating only one case for the estimation, all snapshots of the limit cycle corresponding to the execution are eliminated. The results of the snapshot reconstruction using

$i$th snapshot, being estimated, belongs. The procedure follow the same principles of the leave-one-out cross-validation (Wand & Jones Reference Wand and Jones1994), but instead of eliminating only one case for the estimation, all snapshots of the limit cycle corresponding to the execution are eliminated. The results of the snapshot reconstruction using ![]() $k$ NN regression in the two steps of the decoding process are presented in figure 7.

$k$ NN regression in the two steps of the decoding process are presented in figure 7.

Figure 7. Cosine similarity between real and reconstructed snapshots for all ![]() $22$ actuation cases in the test dataset. Reconstruction error is plotted against the distance of each actuation case to its nearest neighbour in the training dataset. In the centre, a comparison between the true snapshot and its estimation is presented for the median error case. Figure presented with LIC and color-coded by velocity magnitude. Results are shown force for sensors (a) and velocity sensors (b).

$22$ actuation cases in the test dataset. Reconstruction error is plotted against the distance of each actuation case to its nearest neighbour in the training dataset. In the centre, a comparison between the true snapshot and its estimation is presented for the median error case. Figure presented with LIC and color-coded by velocity magnitude. Results are shown force for sensors (a) and velocity sensors (b).

Table 2 lists the values of ![]() $k_{d_1}$ and

$k_{d_1}$ and ![]() $k_{d_2}$ used for reconstructing the snapshots in the testing dataset for both sensing cases. In addition, the table also includes the reconstruction errors in terms of median cosine similarity and RMSE. The reconstruction performance is only slightly worse if compared with the case where a neural network followed by

$k_{d_2}$ used for reconstructing the snapshots in the testing dataset for both sensing cases. In addition, the table also includes the reconstruction errors in terms of median cosine similarity and RMSE. The reconstruction performance is only slightly worse if compared with the case where a neural network followed by ![]() $k$NN interpolation is used.

$k$NN interpolation is used.

Table 2. Comparison of reconstruction errors in terms of cosine similarity and RMSE for both sensing cases using the two decoding methodologies: two-step ![]() $k$NN regression (left) and neural network and

$k$NN regression (left) and neural network and ![]() $k$NN interpolation (right). The table also includes the values of

$k$NN interpolation (right). The table also includes the values of ![]() $k_{d_1}$ and

$k_{d_1}$ and ![]() $k_{d_2}$ used in the two-step

$k_{d_2}$ used in the two-step ![]() $k$NN regression decoding method.

$k$NN regression decoding method.