1 The Case for String Diagrams

The Algebraic Structure of Programs

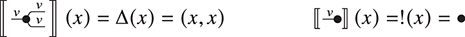

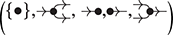

When learning a programming language, one of the most basic tasks is understanding how to correctly write programs in the language’s syntax. This syntax is often specified inductively, as a context-free grammar. For instance, the grammar shown in (1.1) defines the syntax of a very elementary imperative programming language, where variables ![]() and natural numbers

and natural numbers ![]() may occur:

may occur:

(1.1)

(1.1)

With the second row of the grammar, we can write arbitrary programs ![]() featuring assignment of a value to a variable, while loops, and program concatenation. In particular, while loops will depend upon a Boolean expression

featuring assignment of a value to a variable, while loops, and program concatenation. In particular, while loops will depend upon a Boolean expression ![]() , whose construction is dictated by the first row of the grammar. For practitioners, this information is essential to correctly write code in the given language: an interpreter will only execute programs that are written according to the grammar. For computer scientists, who are interested in formal analysis of programs, this information has deeper consequences: it gives us a powerful tool to prove mathematical properties of the language by induction over the syntax. This principle is a generalisation of how we usually reason about the natural numbers. Indeed, the set

, whose construction is dictated by the first row of the grammar. For practitioners, this information is essential to correctly write code in the given language: an interpreter will only execute programs that are written according to the grammar. For computer scientists, who are interested in formal analysis of programs, this information has deeper consequences: it gives us a powerful tool to prove mathematical properties of the language by induction over the syntax. This principle is a generalisation of how we usually reason about the natural numbers. Indeed, the set ![]() of natural numbers can also be specified via a grammar:

of natural numbers can also be specified via a grammar:

(1.2)

(1.2)

When proving properties of ![]() by induction, what we are really doing is reasoning by case analysis on the clauses of grammar (1.2). For instance, suppose we wish to prove by induction that, for each

by induction, what we are really doing is reasoning by case analysis on the clauses of grammar (1.2). For instance, suppose we wish to prove by induction that, for each ![]() ,

, ![]() . In the base case, we assume that

. In the base case, we assume that ![]() is

is ![]() ; we can verify that

; we can verify that ![]() . In the inductive step, we consider the case that

. In the inductive step, we consider the case that ![]() is

is ![]() for some

for some ![]() . If we assume

. If we assume ![]() , then we can show the statement for

, then we can show the statement for ![]() , as follows:

, as follows: ![]() .

.

In the same way, we can reason by induction on programs, whenever their syntax is specified by a grammar such as (1.1). For example, we can prove that a certain property ![]() holds for any program

holds for any program ![]() defined by (1.1), as follows: first, we need to show that

defined by (1.1), as follows: first, we need to show that ![]() holds for skip,

holds for skip, ![]() ,

, ![]() , and

, and ![]() . Then, assuming

. Then, assuming ![]() holds for

holds for ![]() , we show that it holds for while b to p. Finally, assuming

, we show that it holds for while b to p. Finally, assuming ![]() holds for

holds for ![]() and

and ![]() , we show that it holds for

, we show that it holds for ![]() .

.

This style of reasoning is extremely useful for several tasks. For instance, we may prove by induction important properties of our program, such as its correctness, safety, or liveness, as studied in the research area of formal verification. We may also define the semantics by induction, that is, assign programs their behaviour in a way that respects their structure. In programming language theory, there are usually two different ways of defining the semantics of a language: operational and denotational. The former specifies directly how to execute every expression, while the latter specifies what an expression means by assigning it a mathematical object that abstracts its intended behaviour. An inductively defined semantics is particularly important because it enables compositional (or modular) reasoning: the meaning of a complex program may be entirely understood in terms of the semantics of its more elementary expressions. For instance, if our semantics associates a function ![]() to each program

to each program ![]() , and associates to

, and associates to ![]() the composite function

the composite function ![]() , that means that the semantics of the expression

, that means that the semantics of the expression ![]() exclusively depends on the semantics of the simpler expressions

exclusively depends on the semantics of the simpler expressions ![]() and

and ![]() .

.

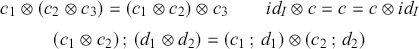

Moreover, the description of a language as a syntax equipped with a compositional semantics informs us about the algebraic structures underpinning program behaviour. For instance, in any sensible semantics, the program constructs; and ![]() of the grammar (1.1) acquire a monoid structure, with the binary operation; as its multiplication and the constant skip as its identity element. Indeed, the laws of monoids, namely that

of the grammar (1.1) acquire a monoid structure, with the binary operation; as its multiplication and the constant skip as its identity element. Indeed, the laws of monoids, namely that ![]() (associativity) and

(associativity) and ![]() (unitality), will usually hold for the semantics of these operations.

(unitality), will usually hold for the semantics of these operations.

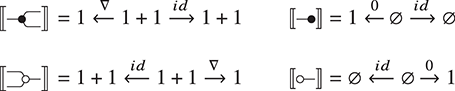

Graphical Models of Computation

As we have seen, defining a formal language via an inductively defined syntax brings clear benefits. However, not all computational phenomena may be adequately captured via this kind of formalism. Think for instance about data flowing through a digital controller. In this model, information propagates through components in complex ways, requiring constraints on how resources are processed. For example, a gate may only receive a certain quantity of data at a time, or a deadlock could occur. Sophisticated forms of interaction, such as entanglement in quantum processes, or conditional (in)dependence between random variables in probabilistic systems, also require a language capable of capturing resource exchange between components in a clear and expressive manner.

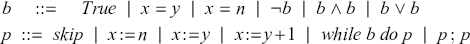

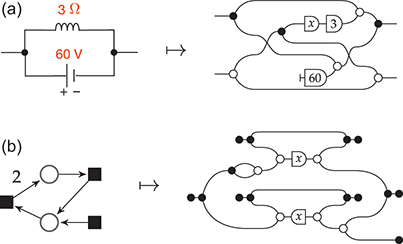

Historically, scientists have adopted graphical formalisms to properly visualise and reason about these phenomena. Graphs provide a simple pictorial representation of how information flows through a component-based system, which would otherwise be difficult to encode into a conventional textual notation. Notable examples of these formalisms include electrical and digital circuits, quantum circuits, signal flow graphs (used in control theory), Petri nets (used in concurrency theory), probabilistic graphical models like Bayesian networks and factor graphs, and neural networks. (See Figure 1.1.)

Figure 1.1 Some examples of graphical formalisms: a quantum circuit, an electric circuit, a Petri net, a Bayesian network, and a neural network.

On the other hand, graphical models have clear drawbacks compared to syntactically defined formal languages. Our ability to reason mathematically about combinatorial, graph-like structures is limited. We typically miss a formal theory of how to decompose these models into simpler components, and also of how to compose them together to create more complex models. In short, graphical models are often treated as monolithic rather than modular entities. In turn, this means that we cannot use induction on the model structure to prove their properties, as we would with a standard program syntax. Crucial features of program analysis, such as the definition of a compositional semantics, and the investigation of algebraic structures underpinning model behaviour, face significant obstacles when adapted to graphical formalisms.

String Diagrams: The Best of Both Worlds

String diagrams originate in the abstract mathematical framework of category theory, as a pictorial notation to describe the morphisms in a monoidal category. However, over the past three decades their use has expanded significantly in computer science and related fields, extending far beyond their initial purpose.

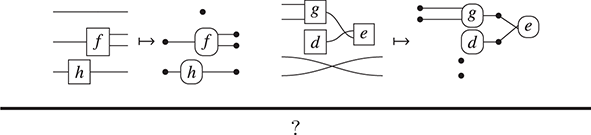

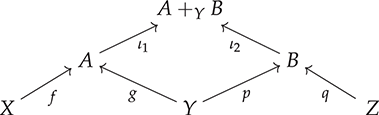

What makes string diagrams so appealing is their dual nature. Just like graphical models, they are a pictorial formalism: we can specify and reason about a string diagram as if it was a graph, with nodes and edges. However, just like programming languages, string diagrams may also be regarded as a formal syntax; we can think of them as made of elementary components (akin to the gates of a circuit, but a lot more general than that), composed via syntactically defined operations.

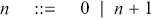

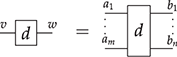

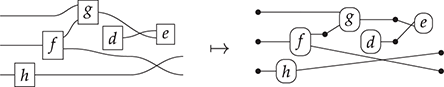

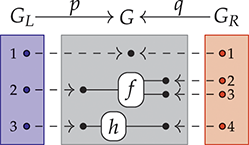

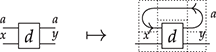

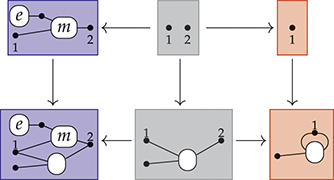

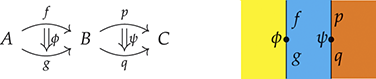

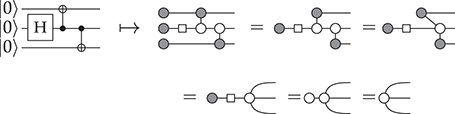

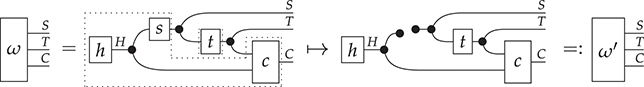

Remarkably, understanding string diagrams as syntactically defined objects does not require switching to a different (textual) formalism – the graphical representation itself is made of syntax (Figure 1.2). The theory of monoidal categories provides a rigorous formalisation of how to switch between the combinatorial and the syntactic perspective on string diagrams, as well as a rich framework to investigate their semantics and algebraic properties. Indeed, like programming languages, we can assign a semantics to string diagrams compositionally, as a functor between categories. This gives us a modular way to specify and reason about the behaviour of the models that they represent.

Figure 1.2 An example of a string diagram regarded as a (hyper)graph (a), with the side boxes signalling the interfaces for composing with other string diagrams, and the same string diagram regarded as a piece of syntax (b), with dotted boxes placed to emphasise where elementary components compose, vertically and horizontally.

String Diagrams in Contemporary Research

Thanks to their versatility, string diagrams are increasingly adopted as a reasoning tool by scientists across various research fields. We may identify two major trends in the use of string diagrams: as a way to reason about graphical models syntactically, and as a way to reason about (textual) formal languages in a more visual, resource-sensitive manner.

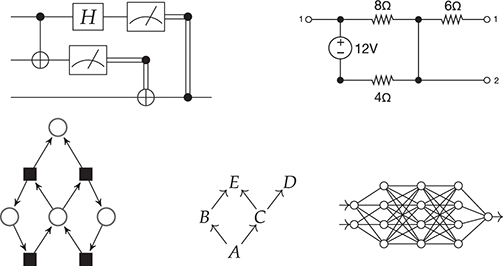

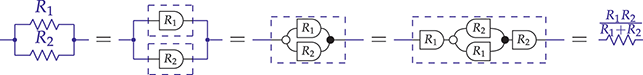

Within the first trend, string diagrammatic approaches have enabled the adoption of compositional semantics and algebraic reasoning for graphical formalisms that previously lacked these features. Examples include Petri nets [Reference Bonchi, Holland, Piedeleu, Sobociński and Zanasi16], linear dynamical systems [Reference Baez and Erbele6, Reference Bonchi, Sobociński and Zanasi20, Reference Fong, Sobociński and Rapisarda55], quantum circuits [Reference van de Wetering109], electrical circuits [Reference Boisseau, Sobociński and Kishida11], and Bayesian networks [Reference Fong51, Reference Fritz57, Reference Jacobs, Kissinger and Zanasi75], amongst others (see Figure 1.3). Besides providing a unifying mathematical perspective on these models, string diagrams have also demonstrated the ability to produce tangible outcomes, employable at industrial scale. A convincing example is the ZX-calculus, a diagrammatic language that generalises quantum circuits. It now serves as the basis for the development of state-of-the-art quantum circuit optimisation algorithms [Reference Duncan, Kissinger, Perdrix and Van De Wetering48], and is seeing widespread adoption by companies dealing with quantum computing.

Figure 1.3 String diagrams representing the behaviour of an electrical circuit (a) and a Petri net (b). The abstract perspective offered by the diagrammatic approach reveals that seemingly very different phenomena may be captured via the same set of elementary components.

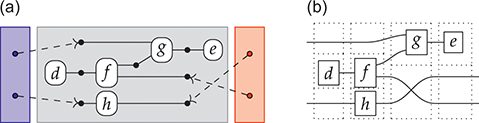

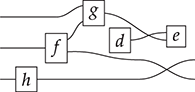

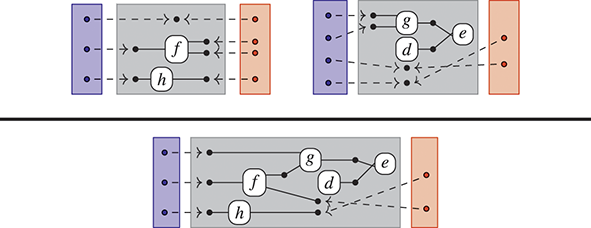

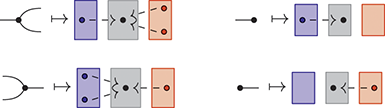

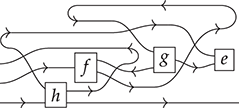

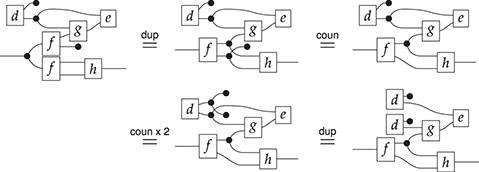

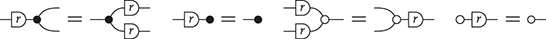

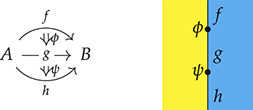

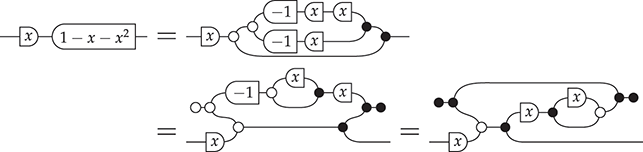

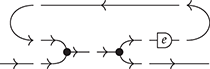

As examples of the second trend, string diagrams have been instrumental in the development of compilers [Reference Muroya and Dan95] for higher-order functional languages, and in a provably sound algorithm for reverse-mode automatic differentiation [Reference Alvarez-Picallo, Ghica, Sprunger, Zanasi, Klin and Pimentel3]. In both these examples, string diagrams serve as an intermediate formalism that sits between high-level programming languages and lower-level implementations. As the former, they can be manipulated syntactically. As the latter, they explicitly represent information propagation and other structural properties of systems (see Figure 1.4).

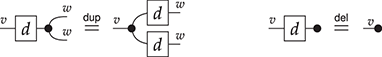

Figure 1.4 A major appeal of string diagrams is resource sensitivity: they uncover any implicit assumption on how resources are handled during a computation. For instance, in these string diagrams resources ![]() and

and ![]() are being fed to processes

are being fed to processes ![]() and

and ![]() . Suppose applying

. Suppose applying ![]() to

to ![]() is an expensive computation. In the scenario where

is an expensive computation. In the scenario where ![]() receives the value

receives the value ![]() twice, we are able to distinguish the case where we duplicate

twice, we are able to distinguish the case where we duplicate ![]() and then feed it to

and then feed it to ![]() (a), and the more efficient way, where we duplicate

(a), and the more efficient way, where we duplicate ![]() (b). Note that traditional algebraic syntax would represent both cases as the same term,

(b). Note that traditional algebraic syntax would represent both cases as the same term, ![]() .

.

Outline

This introduction provides a basic overview of string diagrams and their applications. Section 2 introduces the formal syntax (for the most common variant) of string diagrams, the rules to manipulate them, and equational theories. Section 3 shows how string diagrams may also be thought of as certain (hyper)graphs, thus providing an equivalent combinatorial perspective on these objects. In Section 4, we consider other flavours of string diagrams, which correspond to a different syntax and can be manipulated in more permissive or restrictive ways. Section 5 explains how to assign semantics to string diagrams; we cover common examples of semantics and their equational properties. Section 6 contains pointers to different trends that we do not cover in detail in this introduction. Finally, Section 7 is a non-exhaustive list of applications of string diagrams, both inside and outside of computer science.

The use of category theory is kept to a bare minimum, and we have prioritised intuition over technicalities whenever possible. For the reader’s convenience, we have included an appendix containing the most rudimentary definitions of category theory. However, it should not be treated as an introduction to the topic, for which we recommend [Reference Leinster86].

2 String Diagrams as Syntax

We have seen a couple of examples, (1.1) and (1.2), of how to specify expressions of a formal language via a grammar. In order to generalise this technique to diagrammatic expressions, it is best viewed through the lens of abstract algebra. From an algebraic viewpoint, a grammar is a means of presenting the signature of our language: the list of operations which we may use as the building blocks to construct more complex expressions. Each operation comes with its type, which remained implicit in (1.1) and (1.2). For instance, we may regard ![]() (program composition) in (1.1) as a binary operation, which takes as inputs two programs

(program composition) in (1.1) as a binary operation, which takes as inputs two programs ![]() and

and ![]() as arguments and returns as output a program

as arguments and returns as output a program ![]() as value. The type of this operation is thus

as value. The type of this operation is thus ![]() . Analogously,

. Analogously, ![]() ,

, ![]() ,

, ![]() , and

, and ![]() may be seen as constants (operations with no inputs) of type

may be seen as constants (operations with no inputs) of type ![]() , and the while loop yields an operation of type

, and the while loop yields an operation of type ![]() : given a Boolean expression

: given a Boolean expression ![]() and a program

and a program ![]() , we obtain a program while b do p.

, we obtain a program while b do p.

This example suggests that, more generally, a signature ![]() should consist of two pieces of information: a set

should consist of two pieces of information: a set ![]() of generating operations, and a set

of generating operations, and a set ![]() of generating objects (e.g.

of generating objects (e.g. ![]() ,

, ![]() ), which may be used to indicate the type of operations. Once we fix

), which may be used to indicate the type of operations. Once we fix ![]() , we may construct the expressions over

, we may construct the expressions over ![]() the same way we would build the valid programs out of the grammar (1.1). In algebra, such expressions are called

the same way we would build the valid programs out of the grammar (1.1). In algebra, such expressions are called ![]() -terms.

-terms.

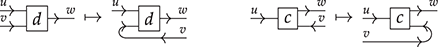

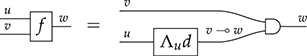

This process works in a fairly similar way for string diagrams, with some key differences. String diagrams will be built from signatures, except that now the type of operations may feature multiple outputs as well as multiple inputs, as displayed pictorially in (2.1). We will also see that variables, usually a building block of ![]() -terms, are not a native concept, but rather something that may be encoded in the diagrammatic representation. More on this point in Example 2.14, and Remarks 2.15 and 5.2.

-terms, are not a native concept, but rather something that may be encoded in the diagrammatic representation. More on this point in Example 2.14, and Remarks 2.15 and 5.2.

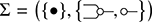

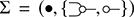

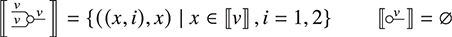

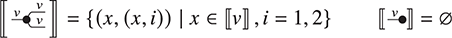

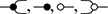

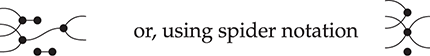

Signatures

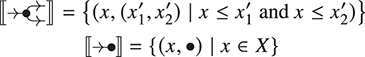

A string diagrammatic syntax may be specified starting from a signature ![]() , with a set

, with a set ![]() of generating objects and a set

of generating objects and a set ![]() of generating operations. We will refer to either simply as generators when it is clear from the context whether we mean a generating object or operation. Each generating operation

of generating operations. We will refer to either simply as generators when it is clear from the context whether we mean a generating object or operation. Each generating operation ![]() has a type

has a type ![]() , where

, where ![]() (the set of words on alphabet

(the set of words on alphabet ![]() ) is called the arity, and

) is called the arity, and ![]() the co-arity of

the co-arity of ![]() . Pictorially, an operation

. Pictorially, an operation ![]() with arity

with arity ![]() and co-arity

and co-arity ![]() will be represented as

will be represented as

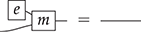

or simply  when we do not need to name the list of generating objects in the arity and co-arity.

when we do not need to name the list of generating objects in the arity and co-arity.

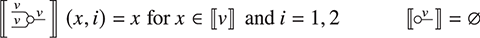

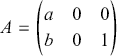

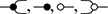

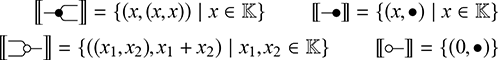

Example 2.1. We may form a signature ![]() where

where ![]() and

and  We may think of string diagrams on

We may think of string diagrams on ![]() as operations of a simple stack machine which can perform simple arithmetic on integers.

as operations of a simple stack machine which can perform simple arithmetic on integers.

Terms

Terms are generated by combining the generators of the signature in a certain way. Once again, let us look first at how terms would be specified in traditional algebra. One would start with a set ![]() of variables and a signature

of variables and a signature ![]() of operations, and define terms inductively as follows:

of operations, and define terms inductively as follows:

For each

,

,  is a term.

is a term.For each

, say of arity

, say of arity  , if

, if  are terms, then

are terms, then  is a term.

is a term.

For string diagrammatic syntax, terms are generated in a similar fashion, with two important differences: (i) it is a variable-free approach, and (ii) the way operations in ![]() are combined in the inductive step depends on the richness of the graphical structure we want to express.

are combined in the inductive step depends on the richness of the graphical structure we want to express.

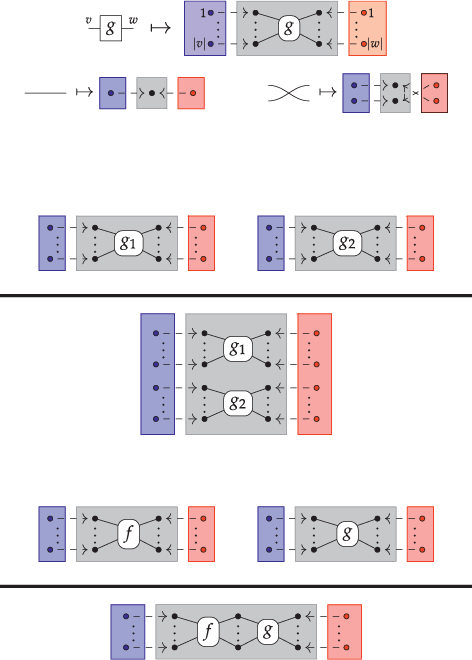

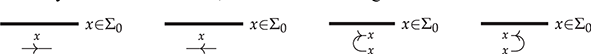

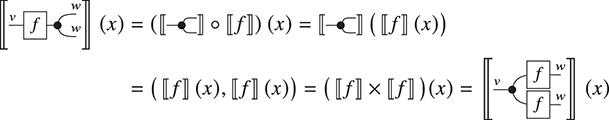

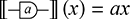

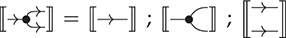

A standard choice for string diagrams is to rely on symmetric monoidal structure. This means that the generating operations in ![]() will be augmented with some ‘built-in’ operations (‘identity’, ‘symmetry’, and ‘null’), and combined via two forms of composition (‘sequential’ and ‘parallel’). As a preliminary intuition, we may think of the built-in operations as the minimal structure needed to express graphical manipulations of terms, such as ‘stretching’ a wire or crossing two wires. Fixing a signature

will be augmented with some ‘built-in’ operations (‘identity’, ‘symmetry’, and ‘null’), and combined via two forms of composition (‘sequential’ and ‘parallel’). As a preliminary intuition, we may think of the built-in operations as the minimal structure needed to express graphical manipulations of terms, such as ‘stretching’ a wire or crossing two wires. Fixing a signature ![]() , the

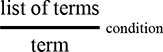

, the ![]() -terms are generated by a few simple derivation rules or term formation rules: these are written in the form

-terms are generated by a few simple derivation rules or term formation rules: these are written in the form

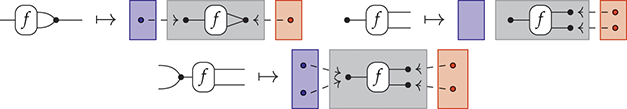

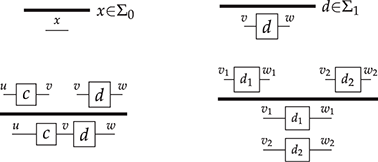

Here, we may regard the list of terms above the line as hypotheses needed to form the term in conclusion of the rule, below the line, provided that the side-condition is satisfied. For symmetric monoidal diagrams, the rules are as follows.

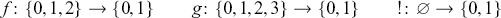

1. First, we have that every generating operation in

yields a term:

yields a term:

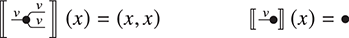

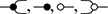

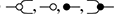

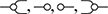

2. Next, each built-in operation (from left to right below: identity, symmetry, and null) also yields a term:

3. For the inductive step, a new term may be built by combining two terms, either sequentially (left) or in parallel (right). Note that, for sequential composition, the output of the leftmost term needs to match the input of the rightmost term. For parallel composition, there is no such requirement, and the resulting term has input (output) the concatenation of the words forming the inputs (outputs) of the starting terms.

Using the composition rules, we can define by induction ‘identities’ and ‘symmetries’ for arbitrary words ![]() in

in ![]() .

.

Varying the set of built-in terms (second clause) and the ways of combining terms (last two clauses) will capture structures different from symmetric monoidal, as illustrated in Section 4.

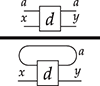

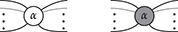

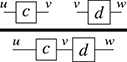

Another important point: notice that null, the identity over the empty word ![]() is not depicted (or is depicted as the empty diagram), which is shown in the preceding as an empty dotted box. Furthermore, since the type of terms is a pair of words over some generating alphabet, they can have the empty word

is not depicted (or is depicted as the empty diagram), which is shown in the preceding as an empty dotted box. Furthermore, since the type of terms is a pair of words over some generating alphabet, they can have the empty word ![]() as arity or co-arity. A term of type

as arity or co-arity. A term of type ![]() , sometimes called a state, has an empty left boundary,

, sometimes called a state, has an empty left boundary,

while a term of type ![]() , sometimes called an effect, has an empty right boundary,

, sometimes called an effect, has an empty right boundary,

Consequently, a term of type ![]() , which is sometimes called a scalar, or a closed term, by analogy with the corresponding algebraic notion of terms containing no free variables, has no boundary at all; it is thus depicted as just a box, with no wires:

, which is sometimes called a scalar, or a closed term, by analogy with the corresponding algebraic notion of terms containing no free variables, has no boundary at all; it is thus depicted as just a box, with no wires:

The names ‘state’, ‘effect’, and ‘scalar’ originate from the role played by string diagrams of this type in quantum theory [Reference Coecke and Kissinger35].

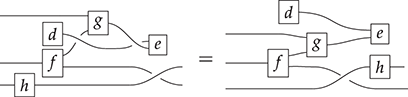

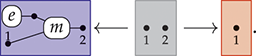

From Terms to String Diagrams

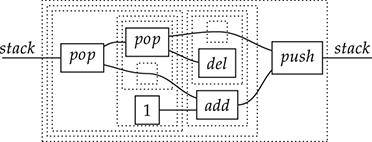

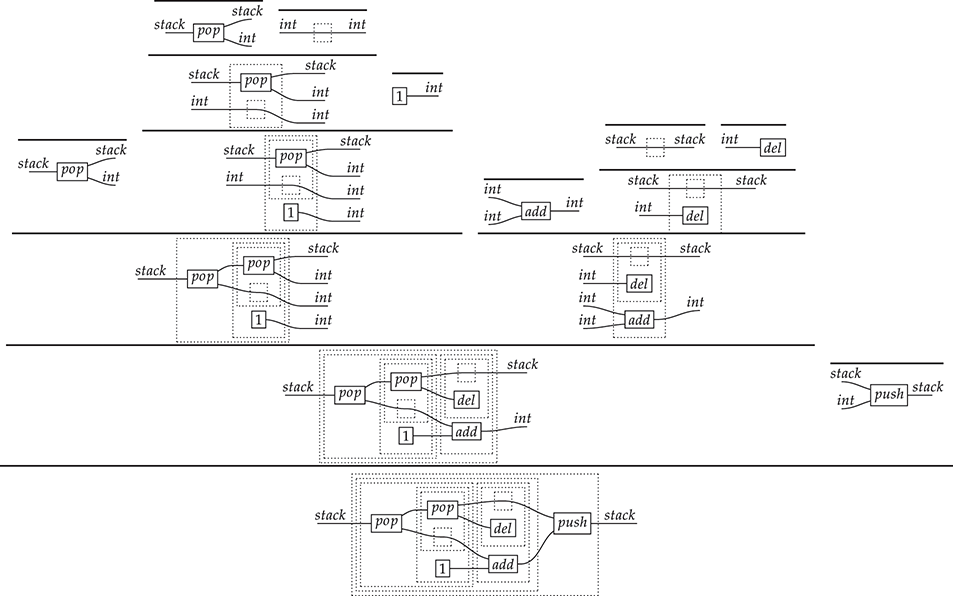

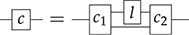

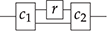

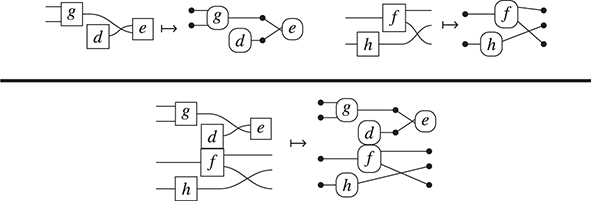

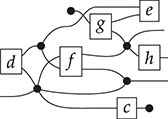

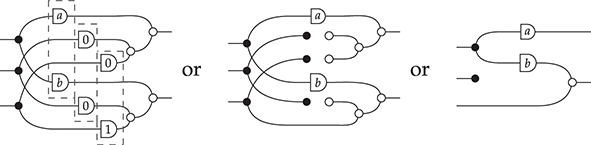

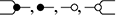

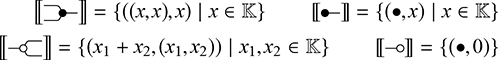

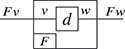

Terms are not quite the same as string diagrams. As soon as we consider more elaborate terms, we realise that the preceding definition requires us to decorate pictures with extra notation to keep track of the order in which we have applied the different forms of composition. For instance, (2.2) is a term from the signature in Example 2.1.

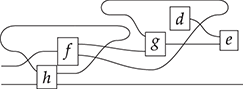

It denotes a very simple protocol, which pops two values of the stack, deletes the second one, and increments the first by one, before pushing it back onto the stack. We have only kept outer object labels for readability. Its full derivation tree is given in Figure 2.1. Notice that a bracketing by dotted frames fully specifies the corresponding derivation tree.

Figure 2.1 An example derivation tree.

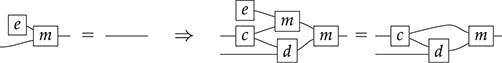

This example makes apparent that ![]() -terms come with lots of extra information on how the graphical representation has been put together: the dotted boxes keep track of the order in which sequential and parallel composition have been applied. The move to string diagrams allows us to abstract away this information and focus solely on how the term components are wired together. More formally, a string diagram on

-terms come with lots of extra information on how the graphical representation has been put together: the dotted boxes keep track of the order in which sequential and parallel composition have been applied. The move to string diagrams allows us to abstract away this information and focus solely on how the term components are wired together. More formally, a string diagram on ![]() is defined as an equivalence class of

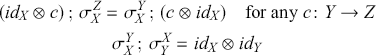

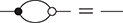

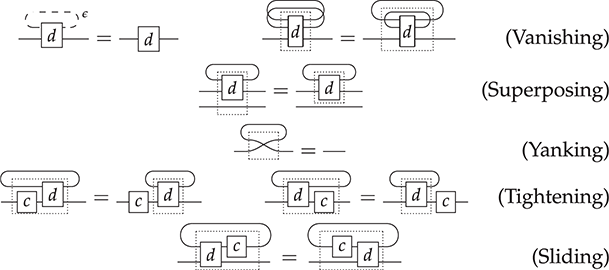

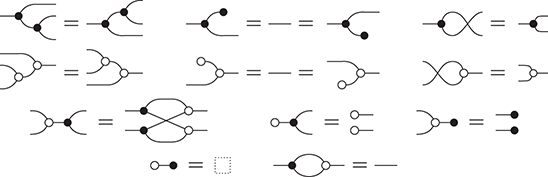

is defined as an equivalence class of ![]() -terms, where the quotient is taken with respect to the reflexive, symmetric, and transitive closure of the following equations (where object labels are omitted for readability, and

-terms, where the quotient is taken with respect to the reflexive, symmetric, and transitive closure of the following equations (where object labels are omitted for readability, and ![]() ,

, ![]() range over

range over ![]() -terms of the appropriate arity/co-arity):

-terms of the appropriate arity/co-arity):

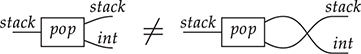

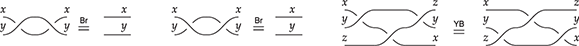

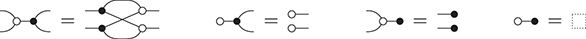

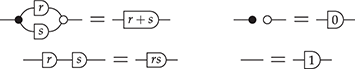

If we think of the dotted frames as two-dimensional brackets, these laws tell us that the specific bracketing of a term does not matter. This is similar to how, in algebra, ![]() for an associative operation, justifying the use of the unbracketed expression

for an associative operation, justifying the use of the unbracketed expression ![]() . In fact, there’s an even better notation: when dealing with a single associative binary operation, we can simply forget it and write any product as a concatenation,

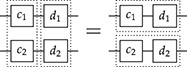

. In fact, there’s an even better notation: when dealing with a single associative binary operation, we can simply forget it and write any product as a concatenation, ![]() ! This is a simple instance of the same key insight that allows us to draw string diagrams. It is helpful to think of these diagrammatic rules as a higher-dimensional version of associativity.Footnote 1 The rightmost identity of the first line in (2.3) is the interchange law, which concerns the interplay between the two forms of composition: we can take the parallel composition of two sequentially composed terms, or vice versa, and the resulting string diagram will be the same. The remaining axioms of the first two lines encode the associativity and unitality of the two forms of composition. The last line contains two axioms involving wire crossings

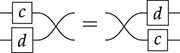

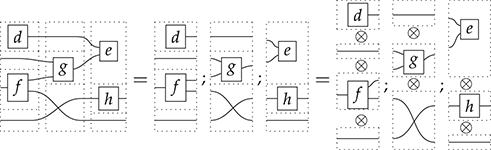

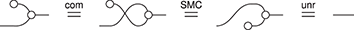

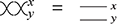

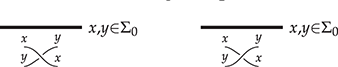

! This is a simple instance of the same key insight that allows us to draw string diagrams. It is helpful to think of these diagrammatic rules as a higher-dimensional version of associativity.Footnote 1 The rightmost identity of the first line in (2.3) is the interchange law, which concerns the interplay between the two forms of composition: we can take the parallel composition of two sequentially composed terms, or vice versa, and the resulting string diagram will be the same. The remaining axioms of the first two lines encode the associativity and unitality of the two forms of composition. The last line contains two axioms involving wire crossings  : the first, called the naturality of

: the first, called the naturality of  , tells us that boxes can be pulled across wires; the second, that the wire crossing is self-inverse. As a result, wires can be entangled or disentangled as long as we do not modify how the boxes are connected. We call these axioms the laws of symmetric monoidal categories (SMC).

, tells us that boxes can be pulled across wires; the second, that the wire crossing is self-inverse. As a result, wires can be entangled or disentangled as long as we do not modify how the boxes are connected. We call these axioms the laws of symmetric monoidal categories (SMC).

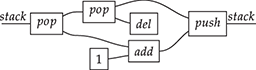

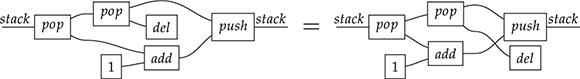

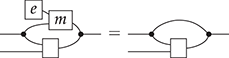

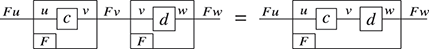

Thanks to the laws of SMC, we can safely remove the brackets from the term in (2.2) to obtain the corresponding string diagram:

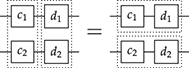

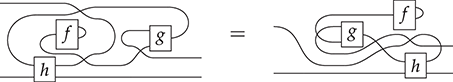

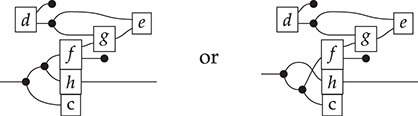

This representation is now unambiguous because the axioms in (2.3) imply that any way of placing dotted frames around components of (2.4) leads to equivalent ![]() -terms. For instance, the following two bracketed terms are equivalent as string diagrams:

-terms. For instance, the following two bracketed terms are equivalent as string diagrams:

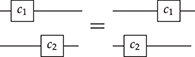

There is an important subtlety: if, formally, a string diagram is an equivalence class of terms quotiented by the laws of (2.3), there is not a unique way to depict a string diagram. In other words, the graphical representation (even without dotted frames) sits in between terms and string diagrams, as it does not distinguish certain equivalent terms. In some cases the depiction absorbs the laws of SMC, for example, for the two sides of the interchange law:

In other cases, the way we draw them distinguishes string diagrams that are equivalent under the laws of (2.3). Consider, for example,

This equality can be seen as an instance of the interchange law (2.5) with identity wires or as a consequence of the unitality of identity wires, which allows us to stretch wires as much as we like.

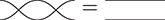

A related point is that string diagrams do not distinguish different ways of braiding wires, even if our drawings do:

The laws of (2.3) guarantee that any two string diagrams made entirely of wire crossings over the same number of wires are equal when they define the same permutation of the wires. If the other rules are two-dimensional versions of associativity, the wire-crossing axioms are two-dimensional generalisations of commutativity. In ordinary algebra, when we have a commutative and associative binary operation, we can write products using any ordering of its elements: ![]() . For string diagrams, the vertical juxtaposition of boxes is not strictly commutative; nevertheless, we are allowed to move boxes across wires, which is the next best thing:

. For string diagrams, the vertical juxtaposition of boxes is not strictly commutative; nevertheless, we are allowed to move boxes across wires, which is the next best thing:

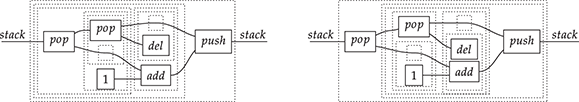

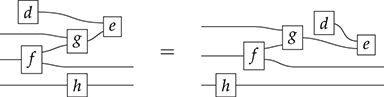

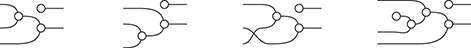

(We invite the reader to show this as their first exercise in diagrammatic reasoning.) Furthermore, we need to keep track of how boxes are wired, but only the specific permutation of the wires matters, not how we have constructed it. Coming back to our stack-machine example, the following are equivalent string diagrams:

While this situation may appear slightly confusing at first, these examples show that in practice the distinction between string diagrams (as equivalence classes) and how we depict them is harmless. The topological moves that are captured by the equations of (2.3) are designed to be intuitive. They are often summarised by the following slogan: only the connectivity matters. The rule of thumb is that any deformation that preserves the connectivity between the boxes and does not require us to bend the wires backwards will give two equivalent string diagrams.

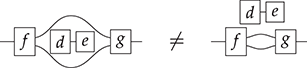

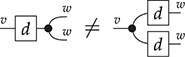

Finally, keep in mind that the connection points from which we attach wires to boxes are ordered, so that the following two string diagrams are not equivalent:

Definition 2.2 (String diagrams over Σ). String diagrams over ![]() are

are ![]() -terms quotiented by the equations in (2.3).

-terms quotiented by the equations in (2.3).

Example 2.3. Following the preceding discussion, the reader should convince themselves that the following two (unframed) terms depict the same string diagram:

(Free) Symmetric Monoidal Categories

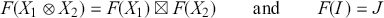

In algebra, the collection of terms obtained from a signature, without any additional operations or equations, is often called the free structure over that signature. The diagrammatic language of string diagrams comes with an associated notion of free structure: the free symmetric monoidal category (SMC) over a given signature.Footnote 2

At this point, the more mathematically inclined reader might object that we still have not defined rigorously what an SMC is. Somewhat circularly, we could say that an SMC is a structure in which we can interpret string diagrams! Less tautologically, it is a category with an additional operation – the monoidal product – on objects and morphisms that satisfies the laws shown in (2.3). To state them without string diagrams, we need to introduce explicit notation for composition and the monoidal product. We do so in the following definition. Note that we assume basic knowledge of what a category is. The definition, along with related notions, can be found in the Appendix.

Definition 2.4. A (strict) symmetric monoidal category ![]() is a category

is a category ![]() equipped with a distinguished object

equipped with a distinguished object ![]() , a binary operation

, a binary operation ![]() on objects, an operation of type

on objects, an operation of type ![]() on morphisms which we also write as

on morphisms which we also write as ![]() , such that

, such that ![]() and

and

and a family of morphisms ![]() for any two objects

for any two objects ![]() , such that

, such that

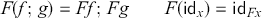

Observe that these are exactly the laws shown in (2.3) in symbolic form: we can simply replace ‘ ![]() ’ by vertical composition and ‘ ; ’ by horizontal composition.

’ by vertical composition and ‘ ; ’ by horizontal composition.

It is possible to translate any string diagrams into symbolic notation. For instance, the diagram of Example 2.3 can be written as ![]() . This expression can be obtained by successively decomposing the diagrams into horizontal and vertical layers as follows:

. This expression can be obtained by successively decomposing the diagrams into horizontal and vertical layers as follows:

As for string diagrams, there are multiple ways to write a given morphism in symbolic notation. In fact, because string diagrams absorb some of the laws of SMCs into the notation, there are usually more ways of writing a given morphism in symbolic notation than there are diagrammatic representations for it.

Remark 2.5 (On strictness). The last definition is not the one that the reader is likely to encounter in the literature when looking up the term ‘symmetric monoidal category’. It defines what is called a strict monoidal category; the usual notion is more general and allows for the equalities to be replaced by isomorphisms. We will not give a rigorous definition of this more general notion and refer the reader to any standard textbook on category theory for a general introduction to SMCs [Reference Mac Lane89: chapter XI]. Our approach is nevertheless theoretically motivated by the following fundamental result: every SMC is equivalent (in a sense that we will not cover here in detail, but do recall in the Appendix, at Definition A.7) to a strict SMC. This fact is what allows us to draw string diagrams. It is known as the coherence theorem for SMCs. Put differently, the coherence theorem allows us to forget explicit symbols for ‘ ![]() ’ and ‘

’ and ‘ ![]() ’, replacing them by vertical juxtaposition and horizontal composition without any brackets to denote the order of application.Footnote 3 Once again, the reader is invited to think about this as a two-dimensional generalisation of well-known facts about monoids: just like we can we can simply concatenate elements of a monoid and omit the symbol for the multiplication and the parentheses to bracket its application, we can use string diagrams to represent morphisms of a SMC. It is then natural that more composition operations require more dimensions to represent. In fact, some of the earliest appearances of string diagramsFootnote 4 occurred to construct free SMCs with additional structure and prove a coherence theorem for them [Reference Maxwell Kelly, Kelly, Laplaza, Lewis and Lane79; Reference Maxwell Kelly and Laplaza80].

’, replacing them by vertical juxtaposition and horizontal composition without any brackets to denote the order of application.Footnote 3 Once again, the reader is invited to think about this as a two-dimensional generalisation of well-known facts about monoids: just like we can we can simply concatenate elements of a monoid and omit the symbol for the multiplication and the parentheses to bracket its application, we can use string diagrams to represent morphisms of a SMC. It is then natural that more composition operations require more dimensions to represent. In fact, some of the earliest appearances of string diagramsFootnote 4 occurred to construct free SMCs with additional structure and prove a coherence theorem for them [Reference Maxwell Kelly, Kelly, Laplaza, Lewis and Lane79; Reference Maxwell Kelly and Laplaza80].

Definition 2.6 (Free SMC on a signature Σ). The symmetric monoidal category ![]() is formed by letting objects be elements of

is formed by letting objects be elements of ![]() and morphisms be string diagrams over

and morphisms be string diagrams over ![]() , that is,

, that is, ![]() -terms quotiented by (2.3). The monoidal product is defined as word concatenation on objects. Composition and product of string diagrams are defined respectively by sequential and parallel composition of (some arbitrary representative of each equivalence class of)

-terms quotiented by (2.3). The monoidal product is defined as word concatenation on objects. Composition and product of string diagrams are defined respectively by sequential and parallel composition of (some arbitrary representative of each equivalence class of) ![]() -terms.

-terms.

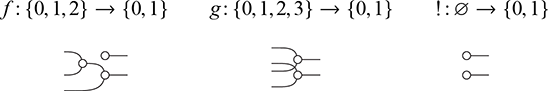

Example 2.7 (Free SMC over a single object). The free SMC over the signature ![]() is easy to describe explicitly. Its string diagrams are generated by horizontal and vertical compositions of

is easy to describe explicitly. Its string diagrams are generated by horizontal and vertical compositions of

(where we omit labels for the single generating object ![]() ), modulo the laws of SMCs. Here are a few examples:

), modulo the laws of SMCs. Here are a few examples:

In other words, they are permutations of the wires! If we write ![]() for the concatenation of

for the concatenation of ![]() bullets, a string diagram

bullets, a string diagram ![]() is a permutation of

is a permutation of ![]() elements, and there are no diagrams

elements, and there are no diagrams ![]() for

for ![]() .

.

Free SMCs on a single generating object (and arbitrary generating operations) are usually called PROPs (Product and Permutation categories) [Reference Mac Lane88]. The PROP of permutations, which we just described, is the ‘simplest’ possible PROP. More formally, it is the initial object in the category of PROPs. Sometimes, the notion of a ‘coloured’ PROP is encountered: this is nothing but a (strict) SMC whose set of objects is freely generated from any set of generating objects, instead of just a single generating object as in the case of plain PROPs.

When we encounter PROPs, we will use natural numbers as objects, since all objects are of the form ![]() , and write the type of a string diagram

, and write the type of a string diagram ![]() simply as

simply as ![]() .

.

Symmetric Monoidal Functors

Whenever we define a new mathematical structure, it is good practice to introduce a corresponding notion of mapping between them. For SMCs, this is the notion of a symmetric monoidal functor. We will need it when giving string diagrams a semantic interpretation in Section 5.

Definition 2.8. Let ![]() and

and ![]() be two SMCs. A (strict) symmetric monoidal functor

be two SMCs. A (strict) symmetric monoidal functor ![]() is a mapping from objects of

is a mapping from objects of ![]() to those of

to those of ![]() that satisfies

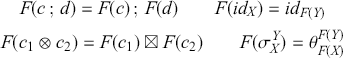

that satisfies

and a mapping from morphisms of ![]() to those of

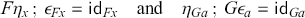

to those of ![]() that satisfies

that satisfies

In this introduction, for pedagogical reasons, we will mostly use strict monoidal functors, that is, functors that preserve the monoidal structure on the nose. The reader should know that it is possible, and sometimes necessary, to relax this requirement, replacing the equalities ![]() and

and ![]() by isomorphisms (which then have to satisfy certain compatibility conditions). See [Reference Mac Lane89] for a standard treatment and [Reference Selinger106] for the connections with string diagrams.

by isomorphisms (which then have to satisfy certain compatibility conditions). See [Reference Mac Lane89] for a standard treatment and [Reference Selinger106] for the connections with string diagrams.

If we have two such functors ![]() and

and ![]() that are inverses to each other –

that are inverses to each other – ![]() and

and ![]() are identity functors – we say that the two SMCs are isomorphic. We will also use the notion equivalence of SMCs. This is a more relaxed notion than that of isomorphism, where

are identity functors – we say that the two SMCs are isomorphic. We will also use the notion equivalence of SMCs. This is a more relaxed notion than that of isomorphism, where ![]() and

and ![]() are merely isomorphic to identity functors. It is more appropriate in some cases, in particular when the categories involved are not strict monoidal (see Remark 2.5, for example). We will not dwell on equivalences of categories much in this Element, but refer the reader to Definition A.7 and Remark A.8 in the Appendix.

are merely isomorphic to identity functors. It is more appropriate in some cases, in particular when the categories involved are not strict monoidal (see Remark 2.5, for example). We will not dwell on equivalences of categories much in this Element, but refer the reader to Definition A.7 and Remark A.8 in the Appendix.

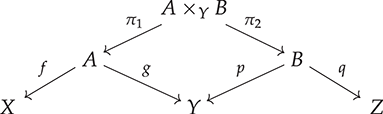

Remark 2.9 (On functors from free SMCs). When defining a functor ![]() out of a free SMC

out of a free SMC ![]() , there is a clear recipe to follow: we only need to specify to which object we want to map elements of

, there is a clear recipe to follow: we only need to specify to which object we want to map elements of ![]() , and to which morphism

, and to which morphism ![]() we want to map each element of

we want to map each element of ![]() of

of ![]() . This is because of the universal property of free constructions: if we have a mapping from the set of generating operations of some signature

. This is because of the universal property of free constructions: if we have a mapping from the set of generating operations of some signature ![]() to morphisms of some SMC

to morphisms of some SMC ![]() , there is a unique way of extending this mapping to a symmetric monoidal functor

, there is a unique way of extending this mapping to a symmetric monoidal functor ![]() . This observation will come in handy when defining the semantics of string diagrammatic theories, in Section 5.

. This observation will come in handy when defining the semantics of string diagrammatic theories, in Section 5.

Example 2.10. In Example 2.7, we saw that the morphisms/string diagrams of the free SMC over a single object looked a lot like permutations. There is a way of making this precise, by establishing an isomorphism between this SMC and another whose morphisms are permutations of finite sets. Let ![]() be the category whose objects are natural numbers, and morphisms

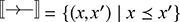

be the category whose objects are natural numbers, and morphisms ![]() are permutations of

are permutations of ![]() . We can equip it with a monoidal product, given by addition on objects, and on morphisms

. We can equip it with a monoidal product, given by addition on objects, and on morphisms ![]() and

and ![]() by

by ![]() if

if ![]() and

and ![]() otherwise. The unit of the monoidal structure is the number

otherwise. The unit of the monoidal structure is the number ![]() and the symmetry is the permutation over two elements, which we write as

and the symmetry is the permutation over two elements, which we write as ![]() , given by

, given by ![]() and

and ![]() . The isomorphism is straightforward. In one direction, let

. The isomorphism is straightforward. In one direction, let ![]() be given by

be given by ![]() on objects and

on objects and  . This is enough to describe

. This is enough to describe ![]() fully because all string diagrams of

fully because all string diagrams of ![]() are vertical or horizontal composites of

are vertical or horizontal composites of  and

and ![]() has to preserve these two forms of composition, by Definition 2.8. Furthermore, the required properties are immediately satisfied. To build its inverse, we need to know that we can factor any permutation into a composition of adjacent transpositions (this fact is fairly intuitive and usually covered in introductory algebra courses, so we will not prove it here). Then, notice that the transposition

has to preserve these two forms of composition, by Definition 2.8. Furthermore, the required properties are immediately satisfied. To build its inverse, we need to know that we can factor any permutation into a composition of adjacent transpositions (this fact is fairly intuitive and usually covered in introductory algebra courses, so we will not prove it here). Then, notice that the transposition ![]() over

over ![]() elements should clearly be mapped to the string diagram that is the identity everywhere and

elements should clearly be mapped to the string diagram that is the identity everywhere and  at the

at the ![]() -th and

-th and ![]() -th wires. Call this diagram

-th wires. Call this diagram ![]() . Then, let

. Then, let ![]() be given by

be given by ![]() on objects and on morphisms by

on objects and on morphisms by ![]() where

where ![]() is a decomposition of

is a decomposition of ![]() into adjacent transpositions. One can check that this is well-defined and satisfies all the equations of Definition 2.8. Moreover the two are inverses of each other. For example, to see that

into adjacent transpositions. One can check that this is well-defined and satisfies all the equations of Definition 2.8. Moreover the two are inverses of each other. For example, to see that ![]() it is sufficient to check that equality for

it is sufficient to check that equality for  . It holds clearly as

. It holds clearly as  . The other direction is a bit more lengthy, but without any major difficulties.

. The other direction is a bit more lengthy, but without any major difficulties.

Thus ![]() gives a semantic account of the free SMC over a single object. Conversely, the latter can be seen as diagrammatic syntax for the former.

gives a semantic account of the free SMC over a single object. Conversely, the latter can be seen as diagrammatic syntax for the former.

In fact, this SMC is also equivalent to the non-strict SMC of finite sets and bijections between them, with the disjoint sum as monoidal product. The equivalence is also straightforward to establish, but requires us to fix a total ordering on every finite set.

This example is just a taster of an idea that we will develop further in Section 5, dedicated to the semantics – that is, the interpretation – of string diagrams.

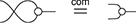

2.1 Adding Equations

The equations shown in (2.3) only capture a very basic notion of equivalence between string diagrams. When describing computational processes for example, it is useful to include more equations, specific to the domain of interest. In string diagram theory, these additional equations are encapsulated in the notion of symmetric monoidal theory. More formally, a symmetric monoidal theory – or simply theory when no ambiguity can arise – is a pair ![]() , where

, where ![]() is a signature and

is a signature and ![]() is a set of equalities

is a set of equalities ![]() between string diagrams of the same type over

between string diagrams of the same type over ![]() . We write

. We write ![]() for the smallest congruence relation (w.r.t. sequential and parallel composition) containing

for the smallest congruence relation (w.r.t. sequential and parallel composition) containing ![]() . We will see many examples of symmetric monoidal theories in Section 2.2.

. We will see many examples of symmetric monoidal theories in Section 2.2.

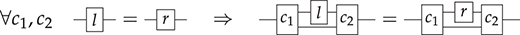

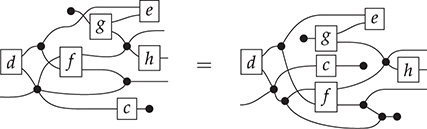

Remark 2.11 (Equations and diagrammatic rewriting). It might be helpful to see equations as two-way rewriting rules that can be applied in an arbitrary context. More precisely, assume that we have some equation of the form ![]() , where

, where ![]() have the same type; to apply it in context, we need to identify

have the same type; to apply it in context, we need to identify ![]() in a larger string diagram

in a larger string diagram ![]() , that is, find

, that is, find ![]() and

and ![]() such that

such that

and simply replace ![]() by

by ![]() , forming the new diagram

, forming the new diagram

. This is just the diagrammatic version of standard algebraic reasoning. We can summarise this process as follows:

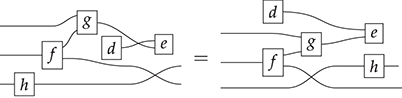

For example,

where the context is

We will come back to this point, in the context of graph-rewriting, in Section 6.1.

In the same way that string diagrams corresponded to a free structure, the free symmetric monoidal category (SMC) over ![]() , quotienting by further equations also determines a free structure: given a signature

, quotienting by further equations also determines a free structure: given a signature ![]() and a theory

and a theory ![]() , we can form the free SMC

, we can form the free SMC ![]() obtained by quotienting the free SMC

obtained by quotienting the free SMC ![]() by the equivalence relation over string diagrams given by

by the equivalence relation over string diagrams given by ![]() .

.

Definition 2.12 (Free SMC over a theory (Σ,E)). The symmetric monoidal category ![]() is formed by letting objects be elements of

is formed by letting objects be elements of ![]() and morphisms be equivalence classes of string diagrams over

and morphisms be equivalence classes of string diagrams over ![]() quotiented by

quotiented by ![]() . The monoidal product is defined as word concatenation on objects; composition and product of morphisms are defined respectively by sequential and parallel composition of arbitrary representatives of each equivalence class.

. The monoidal product is defined as word concatenation on objects; composition and product of morphisms are defined respectively by sequential and parallel composition of arbitrary representatives of each equivalence class.

2.2 Common Equational Theories

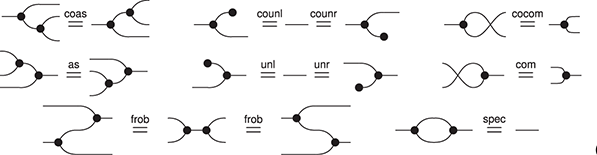

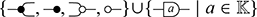

Some theories occur frequently in the literature. Many authors assume familiarity with the axioms hiding behind the words ‘monoids’, ‘comonoids’, ‘bimonoids/bialgebras’, or ‘Frobenius monoid/algebra’, and how all of these theories relate to one another. For this reason it is valuable to know them well, especially when trying to distinguish routine moves from key steps in diagrammatic proofs. This section describes a few of the most commonly found examples.

Example 2.13 (Monoids). Let us begin with the deceptively easy example of monoids. Many readers will undoubtedly be familiar with the algebraic theory of monoids, which can be presented by two generating operations, say ![]() of arity

of arity ![]() and

and ![]() of arity

of arity ![]() (in other words, a constant) satisfying the following three axioms:

(in other words, a constant) satisfying the following three axioms:

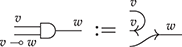

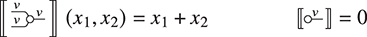

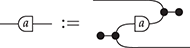

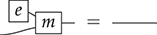

Analogously, the symmetric monoidal theory of monoids can be presented by a signature

, based on a single object-type ![]() , a multiplication

, a multiplication

, and a unit

, and three axioms, for associativity and (two-sided) unitality:

Observe that we have just replaced variables with wires and algebraic operations with diagrammatic generators. As in ordinary algebra, two terms/diagrams are equal if one can be obtained from the other by applying some sequence of these three equations (recall Remark 2.11).

We can also present commutative monoids in the same way. Recall that commutative monoids are those that satisfy ![]() ; diagrammatically, we can present the corresponding symmetric monoidal theory with the same signature and a single additional equality (note the use of the symmetry

; diagrammatically, we can present the corresponding symmetric monoidal theory with the same signature and a single additional equality (note the use of the symmetry

):

Of course, in the presence of commutativity, each of the unitality laws are derivable from the other. In the usual algebraic theory of monoids we would show this as follows: if ![]() , then

, then ![]() where the last step is right-unitality. The corresponding diagrammatic proof is very similar, with one additional step:

where the last step is right-unitality. The corresponding diagrammatic proof is very similar, with one additional step:

The second equality is a simple instance of the bottom left axiom of (2.3), for a string diagram with no wires on the left (that is, of type ![]() for some

for some ![]() ).

).

This makes an important point: two theories ![]() and

and ![]() might present the same structure, in the sense that the corresponding free SMCs

might present the same structure, in the sense that the corresponding free SMCs ![]() and

and ![]() might be isomorphic. It is also a good place to mention that theories do not have to be minimal in any way; they can contain axioms that are derivable from the others. There are various reasons one might prefer a theory that contains redundant axioms: to highlight some of the symmetries, to avoid having to re-derive some equalities as a lemma later on, and others.

might be isomorphic. It is also a good place to mention that theories do not have to be minimal in any way; they can contain axioms that are derivable from the others. There are various reasons one might prefer a theory that contains redundant axioms: to highlight some of the symmetries, to avoid having to re-derive some equalities as a lemma later on, and others.

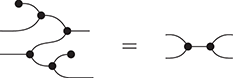

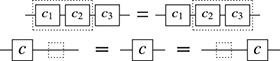

When dealing with monoids, there are several straightforward syntactic simplifications that the reader is likely to encounter in the literature. First, a simple observation: in standard algebraic syntax, the associativity axiom ![]() implies that any two ways of applying monoid multiplication to the same list of elements are all equal. Therefore it is unambiguous to introduce a generalised monoid operation for any finite arity, for example

implies that any two ways of applying monoid multiplication to the same list of elements are all equal. Therefore it is unambiguous to introduce a generalised monoid operation for any finite arity, for example ![]() , to denote all possible ways of applying

, to denote all possible ways of applying ![]() to these three elements, and avoid a flurry of parentheses. (Note that, with this syntactic sugar, the unit

to these three elements, and avoid a flurry of parentheses. (Note that, with this syntactic sugar, the unit ![]() denotes the application of

denotes the application of ![]() to zero elements.) The same trick works for an associative

to zero elements.) The same trick works for an associative

: we can define a generalised ![]() -ary operation as a dot with

-ary operation as a dot with ![]() -many wires

-many wires

as syntactic sugar to denote multiple applications of

. For this reason, the reader might also encounter diagrammatic proofs that identify different ways of applying a monoid operation to the same list of elements, much like a practitioner well-versed in ordinary algebra will usually omit parentheses where they can do so unambiguously.

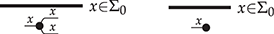

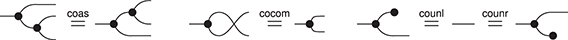

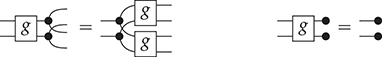

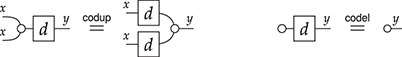

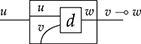

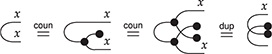

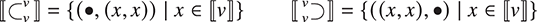

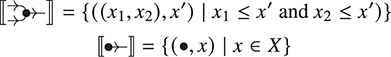

Example 2.14 (Comonoids). Unlike algebraic syntax, string diagrams allow for operations with co-arity different from ![]() , manifested by multiple (or no) right boundary wires. It is therefore possible to flip the generators and axioms of the theory of monoids to obtain the symmetric monoidal theory of comonoids! Unsurprisingly, it is represented by a signature with a single object (which we therefore omit in diagrams), two generators, called comultiplication and counit,

, manifested by multiple (or no) right boundary wires. It is therefore possible to flip the generators and axioms of the theory of monoids to obtain the symmetric monoidal theory of comonoids! Unsurprisingly, it is represented by a signature with a single object (which we therefore omit in diagrams), two generators, called comultiplication and counit,

and the following three axioms:

called coassociativity and counitality. As one can see immediately, string diagrams for comonoids are just the mirrored version of those for monoids. Therefore, any diagrammatic statement involving only comonoids can be proved by simply flipping the corresponding proof about monoids along the vertical axis. For example, as we did for monoids, it is possible to reason silently modulo coassociativity and introduce syntactic sugar for a generalised comultiplication node with co-arity ![]() for any natural number:

for any natural number:

A comonoid is furthemore cocommutative if

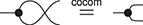

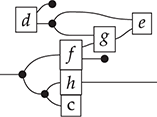

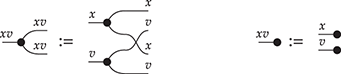

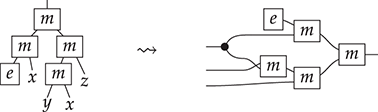

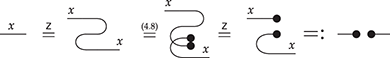

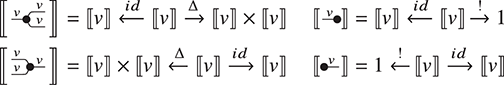

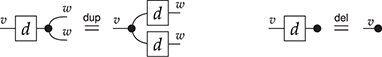

As we will see, distinguished cocommutative comonoid structures play a special role in many theories: for example, they can be used to represent a form of copying and discarding, which allows us to interpret the wires of our diagrams as variables in standard algebraic syntax. The comultiplication allows us to reference a variable multiple times and the counit gives us the right to omit some variable in a string diagram. Following this intuition, we may, for instance, depict the term ![]() in the context given by variables

in the context given by variables ![]() , as follows:

, as follows:

For this reason, from the diagrammatic perspective, algebraic theories (or Lawvere theories, their categorical cousins, see [Reference Hyland, Power, Cardelli, Fiore and Winskel74]) always carry a chosen cocommutative comonoid structure [Reference Bonchi, Sobociński and Zanasi23], even though this structure does not appear in the usual symbolic notation for variables (which relies instead on an infinite supply of unique names to serve as identifiers for variables). We will come back to this point in Remark 5.2.

Remark 2.15 (Symmetric monoidal theories and linearity). Much like monoids in ordinary algebra, monoids or comonoids in symmetric monoidal theories can have additional properties. We have already encountered commutative monoids and cocommutative comonoids. However, the analogy between symmetric monoidal theories and algebraic theories hides an important subtlety: if, in the former, the wires play the role of variables, they have to be used precisely once. Unlike variables in ordinary algebra, we cannot use wires more than once or omit to use them at all! This restriction – termed resource-sensitivity – is an important feature of diagrammatic syntax. Properties that do not involve multiple uses of variables can be specified completely analogously, as we saw for commutativity. Axioms that use each variable precisely once on each side of the equality sign are called linear axioms. Non-linear axioms cannot be translated directly in the diagrammatic context, however. For example, it makes no sense to refer to the symmetric monoidal theory of idempotent monoids: those monoids that satisfy ![]() . Indeed, to even state the idempotency axiom one requires the ability to duplicate wires. As we will see, idempotency can also be expressed diagrammatically, but as a property of a more complex algebraic structure than a monoid; it can be stated as a property of a bimonoid, which is our next example. This example is an instance of a more general pattern that allows us recover the resource-insensitivity of ordinary algebraic syntax. We will explore this correspondence more systematically in Section 4.2.5.

. Indeed, to even state the idempotency axiom one requires the ability to duplicate wires. As we will see, idempotency can also be expressed diagrammatically, but as a property of a more complex algebraic structure than a monoid; it can be stated as a property of a bimonoid, which is our next example. This example is an instance of a more general pattern that allows us recover the resource-insensitivity of ordinary algebraic syntax. We will explore this correspondence more systematically in Section 4.2.5.

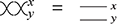

The respective theories of monoids and comonoids can interact in different ways, as the next two examples illustrate. By ‘interact’ in this context, we mean that there are different equations that one can impose when considering a signature that contains both the generators of monoids and those of comonoids with their respective theories.

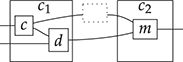

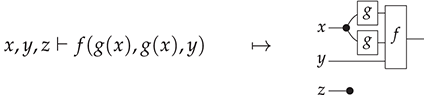

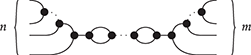

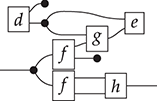

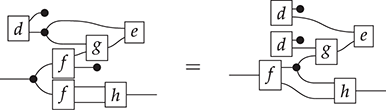

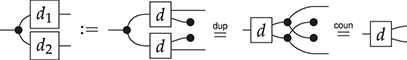

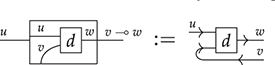

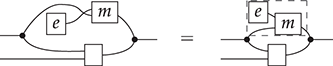

Example 2.16 (Co/commutative bimonoids). One possible theory axiomatises a structure called a bimonoid. It is presented by the generators of monoids and comonoids

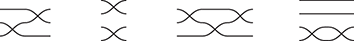

together with their respective axioms, and the following additional four equations:

Intuitively, these equalities can be seen as instances of the same general principle: whenever one of the monoid generators is composed horizontally with one of the comonoid generators, they pass through one another, producing multiple copies of each other. This is a two-dimensional form of distributivity. For example, when the unit meets the comultiplication, the latter duplicates the former; when the multiplication meets the comultiplication, they duplicate each other (notice how this requires the symmetry, the ability to cross wires). Using the generalised monoid and comonoid operations introduced in the previous examples, we can formulate a generalised bimonoid axiom scheme that captures all four axioms (and more):

Then, the four defining axioms can be recovered for the particular cases where the number of wires on each side is zero or two.

As we have already mentioned in Example 2.14, comonoids can mimic the multiple use of variables in ordinary algebra. Thus, in the context of bimonoids, we can state ordinary equations that involve more or less than one occurrence of the same variable. For example, a bimonoid is idempotent when it satisfies the following additional equality, which clearly translates the usual ![]() into a diagrammatic axiom:

into a diagrammatic axiom:

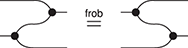

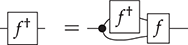

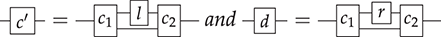

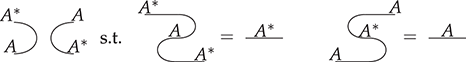

Example 2.17 (Frobenius monoids). Bimonoids are not the only way that monoids and comonoids can interact – there is another structure that frequently appears in the literature, under the name of Frobenius monoid, or Frobenius algebra.Footnote 5 This structure is presented by the generators of monoids and comonoids. We will write them using nodes of the same colour, as this is how Frobenius monoids tend to appear in the literature, and this will allow us to distinguish them from bimonoids in the rest of the Element:

together with their respective axioms, and the following additional axiom, called Frobenius’ law:

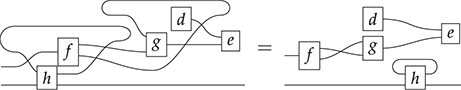

This equality provides an alternative way for the multiplication and comultiplication of the monoid and comonoid structures to interact: unlike the case of bimonoids, this time they do not duplicate each other, but simply slide past one another, on either side. This is a fundamental difference which, in fact, turns out to be incompatible with the bimonoid axioms. We will examine this incompatibility more closely in Section 4.2.10.

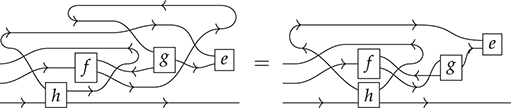

The reader might encounter other versions of this axiom in the literature, such as:

In the presence of the other axioms (namely counitality and coassociativity), these two equalities are derivable from (2.7). To get a feel for diagrammatic reasoning, let us prove it:

At first, the string diagram novice may find it difficult to internalise all the laws that make up a theory such as that of Frobenius monoids. When proving some equality, it is not always clear which axiom to apply at which point to reach the desired goal and it is easy to get overwhelmed by all the choices available. However, in some nice cases, as we saw for (co)monoids, there are high-level principles that allow us to simplify reasoning and see more clearly the key steps ahead. For example, reasoning up to associativity becomes second nature after enough practice and one no longer sees two different composites of (co)multiplication as different objects.

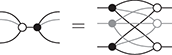

In the same way, the Frobenius law can be thought of as a form of two-dimensional associativity; it simplifies reasoning about complex composites of monoid and comonoid operations even further and allows us to identify at a glance when any two string diagrams for this theory are equal. To explain this, it is helpful to think of string diagrams for the theory of Frobenius monoids as (undirected) graphs, whose vertices are any of the black dots, and edges are wires. We say that a string diagram is connected if there is a path between any two vertices in the corresponding graph. It turns out that, for Frobenius monoids, any connected string diagram composed out of (finitely many)

,

,

, or

using vertical or horizontal composition (without wire crossings) is equal to one of the following form [Reference Heunen and Vicary73: section 5.2.1]:

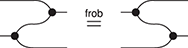

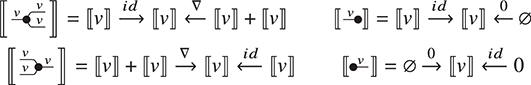

where we use an ellipsis to represent an arbitrarily large composite following the same pattern. In other words, the only relevant structure for a connected string diagram in the theory of Frobenius monoids is the number of left and right wires it has, and how many paths there are from any left leg to any right leg (how many loops it has in the normal form just depicted). This observation is sometimes called the spider theorem and justifies introducing generalised vertices we call spiders as syntactic sugar:

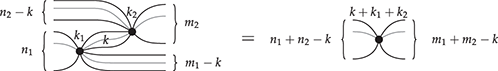

where the natural number ![]() represents the number of inner loops in the normal form just depicted. All the laws of Frobenius monoids can now be summarised into a single convenient axiom scheme:

represents the number of inner loops in the normal form just depicted. All the laws of Frobenius monoids can now be summarised into a single convenient axiom scheme:

where ![]() is the number of middle wires that connect the two spiders on the left-hand side of the equality. As a result, we need only keep track of the number of open wires and loops for any complicated string diagram; this greatly reduces the mental load of reasoning about this theory.

is the number of middle wires that connect the two spiders on the left-hand side of the equality. As a result, we need only keep track of the number of open wires and loops for any complicated string diagram; this greatly reduces the mental load of reasoning about this theory.

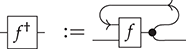

Frobenius monoids that satisfy the following idempotency axiom occur frequently in the literature:

In this case, the Frobenius monoid is called special (or sometimes, separable). The normal form given by the spider theorem simplifies even further in this case, since we can now forget about the inner loops:

The only relevant structure of any connected string diagram in the theory of special Frobenius monoids is the number of its left and right wires. We can thus introduce the same syntactic sugar, omitting the number of loops above the spider. The spider fusion scheme also simplifies further, as we no longer need to keep track of the number of legs that connect the two fusing spiders.

Example 2.18 (Special and commutative Frobenius monoids). The commutative and special Frobenius monoids are very common in the literature, as they are an algebraic structure one finds naturally when reasoning about relations (as we will see when we study the semantics of string diagrams in Section 5). We summarise here the full equational theory for future reference:

In what follows, we will refer to this theory as ![]() .

.

When adding commutativity in the picture, the spider theorem still holds and includes string diagrams composed out of (finitely many)  ,

,  ,

,  ,

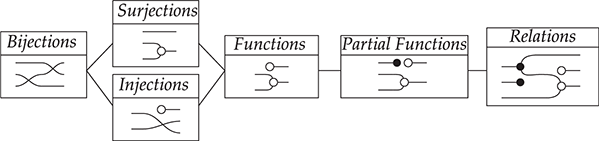

,  and wire crossings, using vertical or horizontal composition. Any string diagram in the free SMC over the theory of commutative and special Frobenius monoids is fully determined by a list of spiders, and to where each of their respective legs are connected on the left and on the right boundary. In other words, string diagrams with

and wire crossings, using vertical or horizontal composition. Any string diagram in the free SMC over the theory of commutative and special Frobenius monoids is fully determined by a list of spiders, and to where each of their respective legs are connected on the left and on the right boundary. In other words, string diagrams with ![]() left wires and

left wires and ![]() right wires are in one-to-one correspondence with maps

right wires are in one-to-one correspondence with maps ![]() for some

for some ![]() . This result will be the basis of a concrete description of the free SMC on the theory of a special commutative Frobenius monoid in Example 5.11.

. This result will be the basis of a concrete description of the free SMC on the theory of a special commutative Frobenius monoid in Example 5.11.

Example 2.19. A special Frobenius monoid that moreover satisfies the following axiom is sometimes called extra-special:

This means that we can forget about networks of black nodes without any dangling wires – they can always be eliminated. In this case, string diagrams with ![]() left wires and

left wires and ![]() right wires in the (free SMC over the) theory of a commutative extra-special Frobenius monoid are in one-to-one correspondence with partitions of

right wires in the (free SMC over the) theory of a commutative extra-special Frobenius monoid are in one-to-one correspondence with partitions of ![]() . Intriguingly, one may think of (2.9) as a ‘garbage collector’; in the relational interpretation of string diagrams, it allows us to capture equivalence relations, as it eliminates empty equivalence classes. We will come back to the case of extra-special commutative Frobenius monoids in Example 5.12.

. Intriguingly, one may think of (2.9) as a ‘garbage collector’; in the relational interpretation of string diagrams, it allows us to capture equivalence relations, as it eliminates empty equivalence classes. We will come back to the case of extra-special commutative Frobenius monoids in Example 5.12.

The reader will frequently encounter the theories we covered here as the building blocks of more complex diagrammatic calculi, designed to capture different kinds of phenomena. For example, the ZX-calculus, a theory that generalises quantum circuits (see Section 7), contains not one, but two special commutative Frobenius monoids, often denoted by a red and a green dot respectively. They interact together to form two bimonoids: the green monoid with the red comonoid forms a bimonoid, and so does the red monoid with the green comonoid. At first, this seems like a lot of structure to absorb, but quickly, one learns to use the spider theorem to think of monochromatic string diagrams, so that most of the complexity comes from the interaction of the two colours. And even then, the generalised bimonoid law we saw in Example 2.16 helps a lot. In fact, modern presentations of the ZX-calculus prefer to give the theory using spiders of arbitrary arity and co-arity as operations and the spider fusion rules as axioms. Strikingly, very similar equational theories can be found ubiquitously in a number of different applications, across different fields of science: it appears there is something fundamental to the interaction of monoid–comonoid pairs in the way we model computational phenomena. We will see several example applications in Section 7.

Remark 2.20 (Distributive Laws). It is noteworthy that both the equations of bimonoids (Example 2.16) and of Frobenius monoids (Example 2.17) describe the interaction of a monoid and a comonoid, even though they do it in different ways. A way to put it is in terms of factorisations: the bimonoid laws allow us to factorise any string diagram as one where all the comonoid generators precede the monoid generators, as in (2.6); conversely, the Frobenius laws yield a monoid-followed-by-comonoid factorisation, as in the spider theorem. More abstractly, the two equational theories can be described as different specifications of a distributive law involving the monoid and the comonoid. Distributive laws are a familiar concept in algebra: the chief example is the one of a ring, whose equations describe the distributivity of a monoid over an abelian group. In the context of symmetric monoidal theories, distributive laws are even more powerful, as they can be used to study the interaction of theories with generators with arbitrary co-arity, such as comonoids, Frobenius monoids, and so on. The systematic study of distributive laws of symmetric monoidal theories has been initiated by Lack [Reference Lack84] and expanded in more recent works [Reference Bonchi, Sobocinski and Zanasi22, Reference Bonchi, Sobociński and Zanasi23, Reference Zanasi116]. Understanding a theory as the result of a distributive law allows us to obtain a factorisation theorem for its string diagrams, such as (2.6) and the spider theorem. Moreover, it provides insights on a more concrete representation (a semantics) for syntactically specified theories of string diagrams – a theme which we will explore in Section 5. For example, the phase-free fragment of the aforementioned ZX-calculus can be understood in terms of a distributive law between two bimonoids. This observation is instrumental in showing that the free model of the phase-free ZX-calculus is a category of linear subspaces [Reference Bonchi, Sobociński, Zanasi and Muscholl19]. We refer to [Reference Zanasi116] for a more systematic introduction to distributive laws of symmetric monoidal theories, as well as other ways of combining together theories of string diagrams.

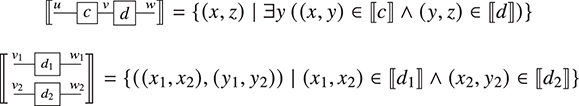

3 String Diagrams as Graphs

The previous section introduced string diagrams as a syntax. However, a strength of the formalism is that string diagrams may be also treated as graphs, with nodes and edges. This perspective is often convenient to investigate properties of string diagrams having to do with their combinatorial rather than syntactic structure, such as whether there is a path between two components. Another important reason to explore a combinatorial perspective to string diagrams is that their graph representation ‘absorbs’ the laws of symmetric monoidal categories shown in (2.3). It is thus more adapted than the syntactic representation for certain computation tasks, such as rewriting (see Section 6.1). The goal of this section is to illustrate how string diagrams can be formally interpreted as graphs.

As a starting point, let us take, for example, a string diagram we have previously considered:

If we forget about the term structure that underpins this representation, and try to understand it as a graph-like structure, the seemingly most natural approach is to think of boxes as nodes and the wires as edges of a graph. In fact, this is usually the intended interpretation adopted in the early days of string diagrams as a mathematical notation; see, for example, [Reference Joyal and Street77]. An immediate challenge for this approach is that ‘vanilla’ graphs do not suffice: string diagrams present loose, open-ended edges, which only connect to a node on one side, or even on no side, as, for instance, the graph representation of the ‘identity’ wires: ![]() . Historically, a solution to this problem has been to consider as interpretation a more sophisticated notion of graph, endowed with a topology from which one can define when edges are ‘loose’, ‘half-loose’, ‘pinned’, and so on; see [Reference Joyal and Street77]. Another, more recent approach understands string diagrams as graphs with two sorts of nodes, where the second sort just plays the bureaucratic role of giving an end to edges that otherwise would be drawn as loose [Reference Dixon and Kissinger46].

. Historically, a solution to this problem has been to consider as interpretation a more sophisticated notion of graph, endowed with a topology from which one can define when edges are ‘loose’, ‘half-loose’, ‘pinned’, and so on; see [Reference Joyal and Street77]. Another, more recent approach understands string diagrams as graphs with two sorts of nodes, where the second sort just plays the bureaucratic role of giving an end to edges that otherwise would be drawn as loose [Reference Dixon and Kissinger46].