1. Introduction

Service robots are personal or professional use robots that perform practical tasks for humans, such as handling objects, transporting them from and to different places and providing physical support, guidance or information (ISO 8373:2021 Robotics – Vocabulary; Fosch-Villaronga & Roig, 2017). For instance, Roomba is a vacuum cleaner robot that autonomously navigates domestic spaces to clean floors, while delivery robots such as those from Starship operate on university campuses and in office buildings, transporting food or packages from one location to another without human intervention. Given their usefulness in both domestic and professional contexts, service robots are expected to be widely deployed across many facets of human life, from simple tasks to more complex ones in care, such as improving the quality of life of children and vulnerable populations (Boada, Maestre & Genís, 2021).

Despite the significant contributions service robots make to daily life, they raise legal, ethical and societal concerns. These concerns stem from their varied contexts of application, which range from public spaces to personal and intimate spheres. They also stem from the robots' multiple embodiments and from their interactions with different populations, including vulnerable users such as the elderly and children (Boada et al., 2021; Salvini, Paez-Granados & Billard, 2021; Wangmo, Lipps, Kressig & Ienca, 2019). These issues involve complex and often context-sensitive dimensions, including safety, transparency, opacity, trust and accountability (Felzmann, Fosch-Villaronga, Lutz & Tamò-Larrieux, 2020; Winfield, 2019). Regulations tend to be technology-neutral so that they remain applicable over time. As a result, these concepts carry multiple meanings, which makes it difficult to understand how they should be operationalized in concrete embodied robotic systems such as service robots, including socially and physically assistive robots (Giger et al., 2019; Danielescu, 2020; Liu, Nie, Yu, Xie & Song, 2021; Felzmann, Fosch-Villaronga, Lutz & Tamò-Larrieux, 2019a). This regulatory ambiguity becomes especially pressing when service robots are deployed in socially sensitive contexts where their decisions and behaviors can reinforce or exacerbate existing societal biases. In eldercare settings, for example, a robot assisting with daily routines might unintentionally reinforce gendered assumptions by defaulting to a female voice or a caregiver role (Groppe & Schweda, 2023).

This brings us to the question of fairness – a foundational but often ambiguously defined concept in both ethical and legal discussions around robotics. Fairness is a contemporary ethical and legal concern that is fundamental to safe robots and helps mitigate the effects of other concerns (Boada et al., 2021). It is a multifaceted term whose main components are nondiscrimination and equality (Caton & Haas, 2024). Its prominence in existing frameworks is linked to several factors: the risks and power asymmetries arising from the deployment of artificial intelligence (AI), errors in algorithmic and human decision-making, socioeconomic inequalities, lack of diversity, privacy concerns and inadequate policies in society (Calleja, Drukarch & Fosch-Villaronga, 2022; Leslie et al., 2023; Wangmo et al., 2019). Its importance lies in ensuring safety and preventing unjust outcomes, including discriminatory consequences and social harms such as the reinforcement of gender stereotypes (Londoño et al., 2024).

While there has been significant research on fairness and technology in general (Schwartz et al., 2022; Whittaker et al., 2019), comprehensive frameworks that assess fairness at multiple levels within the context of service robots are still lacking. Indeed, fairness issues may not be evident in complex cyber-physical systems such as service robots. They can stem from robot designer bias, model prototyping, data or algorithmic biases, or systemic biases in legislation and standards (Winfield, 2019; Fosch-Villaronga & Drukarch, 2023; Leslie et al., 2023). For instance, if the data used to train service robots contain biases – such as gender or sex stereotypes, discriminatory information about certain demographics, or underrepresentation of certain groups – the robots might behave unfairly toward different populations. This would explain why speech recognition systems might work better for people with certain accents while struggling with others (Fossa & Sucameli, 2022), as with American-English robots deployed in a low-income Indian context (Singh, Kumar, Fosch-Villaronga, Singh & Shukla, 2023). It would also explain why systems might recognize lighter-skinned and male faces better than darker-skinned female faces (Addison, Bartneck & Yogeeswaran, 2019). What distinguishes robots from other AI-based systems is that their physical design or appearance can also introduce bias: a robot's embodiment may lead to unintended preferences or exclusions.
For example, a robot designed to look like a female nurse may reinforce stereotypical gender roles at work, such as the mistaken idea that nursing is a job reserved for women (Fosch-Villaronga & Poulsen, 2022). More specifically, failing to account for anatomical differences between men and women (e.g., breasts and pelvis) in certain robots, such as exoskeletons, may lead to designs that assist men better than women (Fosch-Villaronga & Drukarch, 2023). Finally, the safety standards that govern how these robots should be built and used can also be biased. Many of these standards focus on a single aspect, typically safety, which has traditionally meant physical safety (Martinetti, Chemweno, Nizamis & Fosch-Villaronga, 2021). They are also often created by private companies or small groups of stakeholders. For both reasons, they might not consider the needs and perspectives of diverse groups, such as people from different cultures, people with disabilities or people from various socioeconomic backgrounds (Haidegger et al., 2013). This could lead to robots that do not serve all people fairly. The concern is especially critical given how closely these technologies interact with end users and the influence they may exert on social norms.

Our contribution addresses this gap by providing a comprehensive framework for understanding fairness within the domain of robotics. As noted above, robots combine software and physical embodiment, so multiple factors can influence their performance and potentially lead to unfair outcomes. To address this, we conducted a study to gain a more comprehensive understanding of the problem of fairness in robotics. We used doctrinal research to clarify the various definitions of fairness used within the specific context of service robots. This type of analysis allows us to identify and catalog the underlying themes and perspectives associated with the semantics of the term “fairness.” We adopted an interdisciplinary approach that integrates insights from law, ethics, social sciences and human–computer interaction (HCI). Building on previous research (Leslie et al., 2023), we dissected the different elements that make up robots and identified those that may result in unfair performance.

The structure of the paper is as follows. After explaining our methodological approach, we explore the dimensions of fairness within the domain of service robots. In that section, we examine several understandings of fairness that we consider mutually complementary and jointly necessary for achieving fairness, namely fairness (i) as objectivity and legal certainty; (ii) as prevention of bias and discrimination; (iii) as prevention of exploitation of users; and (iv) as transparency and accountability. We then propose a framework for fairness in service robotics, which builds upon the identified dimensions and provides a working definition. Finally, we close the paper with our conclusions, reflecting on the implications of the proposed framework and identifying avenues for future research and application.

Several key factors shaped the design of our study. First, the implementation of service robotics is still in its early stages, as is its intersection with AI. Consequently, limited data are available on unfair behavior exhibited by robots (Cao & Chen, 2024; Hundt, Agnew, Zeng, Kacianka & Gombolay, 2022) or on discriminatory practices toward robots (Barfield, 2023b). Second, existing literature focuses mainly on identifying biases and potential solutions, with particular emphasis on data-related issues. It often overlooks other relevant aspects, such as the influence of embodiment on stereotypes or unfair outcomes (Lucas, Poston, Yocum, Carlson & Feil-Seifer, 2016).

2. Methods

We conducted multidisciplinary doctrinal research to develop a comprehensive framework for understanding fairness in the domain of service robotics. Doctrinal research, traditionally rooted in legal scholarship, involves the critical analysis, interpretation and synthesis of existing theories and normative principles. It enables the abstraction of concepts and their systematic integration into coherent structures of meaning (Bhat, 2020). This methodological strength makes it particularly well suited for addressing fairness – a concept that is both value-laden and context-dependent (Stamenkovic, 2024) – by allowing us to examine its diverse articulations across domains and distill them into a structured, unified framework.

In our case, doctrinal analysis provides the foundation for identifying, comparing and reconciling how fairness is conceptualized in law, ethics, social sciences and technical disciplines. Rather than proposing a new empirical model, we aim to build conceptual clarity and normative depth – outcomes for which doctrinal research is especially well equipped. Thus, this approach directly supports our goal of constructing a framework that not only maps existing understandings of fairness but also offers guidance for its application in the complex sociotechnical environment of service robotics. This capacity is further amplified when doctrinal research is combined with interdisciplinary perspectives, which help surface context-specific dimensions and variations in meaning (Ishwara, 2020).

In line with this, we examined how fairness is conceptualized not only in legal literature but also across a range of research domains, so that the resulting framework reflects its multifaceted role in service robotics. In particular, we reviewed literature from five key areas: (1) Robotics and Human–Robot Interaction (HRI), (2) Artificial Intelligence (AI) and Machine Learning (ML), (3) Law and Regulation, (4) Ethics and Philosophy of Technology and (5) Social Sciences and Psychology. Although scientific literature constitutes our primary source, we also considered legal texts, ethical guidelines and regulatory sources. Examining these areas of knowledge allowed us to identify the diverse and intersecting factors that shape the concept of fairness, emphasizing its multidimensional nature in the context of robotic systems and HRI.

From a legal perspective, the principles that emerged from our analysis include legal certainty (Braithwaite, 2002; Shcherbanyuk, Gordieiev & Bzova, 2023), antidiscrimination (Council Directive 2005/29/EC; Rigotti & Fosch-Villaronga, 2024) and consumer protection (Cartwright, 2015; Lim & Letkiewicz, 2023). Ethically, our analysis highlighted fairness theories grounded in equity (Adams, 2015) and justice (Folger & Cropanzano, 2001; Rawls, 1971). These theories serve as core normative foundations that consistently inform debates on responsibility, distribution and legitimacy in technological contexts. Similarly, our examination of the sociotechnical literature revealed recurring concerns related to bias and user vulnerability, particularly in robot-mediated environments (Addison et al., 2019; Fosch-Villaronga & Poulsen, 2022). In the psychological and HCI domains, empirical studies highlighted perceptions of fairness, trust and accountability as central themes in HRI (Cao & Chen, 2024; Chang, Pope, Short & Thomaz, 2020; Claure, Candon, Shin & Vázquez, 2024).

3. Identifying the dimensions of fairness in service robotics

Mulligan, Kroll, Kohli and Wong (2019) discussed at length how fairness is conceptualized differently across disciplines and how the lack of a common vocabulary creates confusion. For instance, a computer scientist might think about fairness in terms of whether an algorithm processes data equally for everyone. A sociologist might focus on whether the system perpetuates inequality in society. A legal expert might consider fairness from the standpoint of individual rights and whether the system abides by the law. Even though they all use the word “fairness,” these perspectives can lead to confusion because the word does not mean the same thing in different contexts. In other words, fairness is a context-specific concept that is universally difficult to define (McFarlane v McFarlane [2006] UKHL 24; Mehrabi, Morstatter, Saxena, Lerman & Galstyan, 2021; Londoño et al., 2024). Its interdisciplinary connotations also give it different meanings depending on how one approaches it (Rigotti & Fosch-Villaronga, 2024).

In this sense, fairness has been viewed in terms of justice (Rawls, 1971; Colquitt & Zipay, 2015), proportionate reward from work (Adams, 2015) and accountability (Folger & Cropanzano, 2001), among other framings. The result is a set of diverging discussions and understandings, each with its own merit. In the robotics literature, for instance, fairness has been analyzed in the context of human–robot collaboration, where certain studies examine how humans perceive the fairness of task allocation within human–robot teams (Chang et al., 2020; Chang, Trafton, McCurry & Thomaz, 2021). Cao and Chen (2024) study how robots’ fair behaviors affect the human partner’s attitude toward the robots and their relationship with them. They highlight that humans are likely to reward robots that demonstrate cooperative behaviors and penalize those exhibiting self-serving or unfair actions, much as they do with other humans.

Conceptualizing fairness for service robotics is challenging, inter alia, because the field draws on several of the spheres above, such as law, social sciences, computer science and engineering. Technological advancements have given these robots sophisticated capabilities (Lee, 2021), and they usually operate among humans in ever-changing environments (Sprenger & Mettler, 2015). These robots are governed by a wide range of laws and standards, interact with humans both physically and socially, and incorporate various hardware and software elements, including AI. Several interdisciplinary fields are therefore relevant to fairness in service robots, and adopting the fairness framework of any single one of them would be insufficient.

Defining fairness for service robotics is difficult, but it is urgently needed. In service robotics, the widening gap between policy development and technological advancement creates a regulatory mismatch. This mismatch may cause robot developers to overlook crucial safety considerations in their designs, affecting the well-being of many users (Calleja et al., 2022; Fosch-Villaronga et al., 2025). In turn, legal frameworks overemphasize physical safety while neglecting other essential aspects, including cybersecurity, privacy and psychological safety (Martinetti et al., 2021). Additionally, service robots might not interact with users in a “fair” manner because their design does not account for cultural specificities (Mansouri & Taylor, 2024). This section therefore presents four dimensions of fairness in service robotics, distilled from our analysis of the literature.

3.1. Fairness as legal certainty

Laws struggle to keep pace with rapid technological growth, a challenge discussed in terms of the Collingridge dilemma (Collingridge, 1980) or the pacing problem (Marchant, 2011), and this is a pressing issue for service robotics. Robotic technology is a critical sphere, given the close cyber-physical interaction robots have with users. Even so, the existing regulatory framework does not adequately address all of its aspects (Fosch-Villaronga, 2019; Goffin & Palmieri, 2024; Palmerini et al., 2016). The problem becomes even more pronounced because the software powering autonomous systems adds another layer of complexity, particularly in meeting compliance standards and regulatory requirements. Cloud-based services such as speech recognition, navigation and AI can help offload computational tasks and make robots more modular. However, they also fragment system responsibility across multiple actors. This dispersion complicates compliance, as traditional regulatory frameworks tend to operate in silos and may not adequately address the interconnected nature of these systems. As a result, ensuring regulatory alignment across hardware manufacturers, software providers and cloud service operators becomes an increasingly complex challenge (Fosch-Villaronga & Millard, 2019; Setchi, Dehkordi & Khan, 2020; Wangmo et al., 2019).

EU law does not define robots, and no comprehensive law targets robots (Fosch-Villaronga & Heldeweg, 2018). Depending on a robot's uses and functionalities, several pieces of legislation could apply. These include the General Data Protection Regulation (GDPR) (Regulation (EU) 2016/679) if the robot processes personal data, the Toy Safety Directive if the robot is a toy, and the Medical Devices Regulation if the robot is a medical device. Recently enacted legislation such as the Machinery Regulation and the Artificial Intelligence Act (AIA) can also apply to robots. However, these instruments do not specifically target robotics and do not provide a comprehensive regulatory framework for it (Mahler, 2024). This lack of clarity about the law governing robotics raises the question of whether the current legal framework is sufficient or whether a new, specific law for robotics is needed (Fosch-Villaronga & Heldeweg, 2018). Further, as noted earlier in this paper, the lack of proper regulation can lead to safety and inclusivity issues in service robotics.

Legal clarity in this sphere would put all stakeholders in a better position. Manufacturers would know how to produce legally compliant robots, and users would know their rights and duties when interacting with robots. Regulators, in turn, would know how to supervise robots placed on the European Union (EU) market and how to further user safety. We therefore argue that the principle of legal certainty, as recognized in Europe, can be a crucial plank for furthering fairness in service robotics. Legal certainty is an essential facet of the rule of law, a fundamental principle recognized both by the Council of Europe (2011, 2016) and by the EU (European Commission, n.d.-b). It is a principle “aimed at protecting and preserving the fair expectations of the people” (Shcherbanyuk et al., 2023). Several judgments of the Court of Justice of the EU have invoked this principle in diverging contexts, and its scope has expanded over the years (van Meerbeeck, 2016). A vital component of legal certainty is that laws should be clear and precise. Natural and legal persons can then know their rights and duties and foresee the consequences of their actions (European Commission, n.d.-b; Shcherbanyuk et al., 2023). Applied to service robotics, this means that the roles, rights and responsibilities of stakeholders should be clearly defined. We do not, of course, suggest that manufacturers may use the lack of regulatory clarity to justify failing to ensure the safety of their robots. They should remain fully accountable for the robots they develop.

3.2. Fairness as preventing bias and discrimination

Fairness in ML is an extensively researched field (Pessach & Shmueli, 2022). Even here, there is still no consensus on what fairness means, given the complexity of the issue and its many dimensions (Caton & Haas, 2024). Relatedly, the FAIR Principles are guidelines for scientific data management and stewardship aimed at ensuring that data are Findable, Accessible, Interoperable and Reusable. They specifically highlight the importance of enabling machines to automatically discover and use data (Wilkinson et al., 2016). Rigotti and Fosch-Villaronga (2024) highlight that, when AI is used in recruitment, different stakeholders, such as job applicants and human resource practitioners, view fairness in markedly different ways. In algorithmic decision-making, fairness has been defined as the absence of prejudice or favoritism based on intrinsic or acquired traits (Mehrabi et al., 2021). Many studies in this sphere therefore view fairness through the prism of bias and discrimination. Some studies of fairness in ML explicitly treat unfairness, bias and discrimination as interchangeable terms (Pessach & Shmueli, 2022).
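To make the “absence of favoritism” definition more concrete, the sketch below computes one widely used group-fairness metric from the ML literature, the demographic parity gap. This is the difference between the highest and lowest rates of favorable decisions across groups. It is only one of many competing formalizations, consistent with the lack of consensus noted above, and it is not drawn from the works cited here. The decisions, group labels and function names are hypothetical and serve only as an illustration.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of favorable decisions (coded 1) for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        favorable[group] += decision
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in selection rates between any two groups (0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = a service robot offers assistance, 0 = it does not.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(decisions, groups))  # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(decisions, groups))  # 0.5
```

A gap of zero would satisfy this particular criterion without showing that a robot is fair in the broader sense discussed in this paper. The metric says nothing about embodiment, context of use or legal certainty.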

Turning to service robotics, robots are fundamentally designed to serve humans, which makes it essential to address ethical considerations. Among these, fairness and, in particular, bias have become critical issues that have recently gained significant attention not only among scholars (Wang et al., 2022) but also among policymakers and regulators.¹ Like fairness, the term “bias” takes on different interpretations depending on the context and the academic discipline in which it is applied (FRA, 2022). In AI, for example, bias is usually defined as “a systematic error in the decision-making process that results in an unfair outcome” (Ferrara, 2023). This definition can apply to robotics to some extent. However, it remains overly simplistic because it ignores the significant impact of a robot’s physical embodiment and the specific context in which it operates. For example, Fosch-Villaronga and Özcan (2019) highlight design challenges in certain lower-limb exoskeletons, demonstrating the importance of examining these concepts in light of hardware factors. Robots are inherently complex entities, and various sources of bias can shape their design. One example is giving robots that simulate nurses breasts or skirts, or having them speak with a female voice (Fosch-Villaronga & Poulsen, 2022; Londoño et al., 2024; Tay, Jung & Park, 2014). This complexity arises from the interplay between technological, social and regulatory factors that influence how robots are conceived and function (Boyd & Holton, 2018; Šabanović, 2010).
In this paper, we identify two primary sources of bias that impact robot design and, consequently, could lead to unfair performance: (1) design choices and physical embodiment and (2) the data used for training and decision-making processes.

Embodying AI, whether in a robot or a virtual assistant, changes the rules of the game. Humans tend to associate human-like machines with intelligence or empathy and can unknowingly form deep bonds with them (Scheutz, 2012). The eye region can shape perceived trustworthiness, and facial color cues and luminance can increase the perceived likability, attractiveness and trustworthiness of machines. Apparent age (young or old) and explicit facial ethnicity also influence trustworthiness (Song & Luximon, 2020). An embodied agent can elicit positive emotions more effectively than a virtual one, particularly through anthropomorphism, which imbues it with a greater sense of vitality (Yang & Xie, 2024). Education offers an example: a humanoid robot “promotes … secondary responses conducive to learning” and raises expectations that it will be able to engage socially (Belpaeme, Kennedy, Ramachandran, Scassellati & Tanaka, 2018).

Color is a significant aspect of embodiment. In a survey on emotional responses to color, 55 percent of respondents associated white with “clean,” followed by 27 percent who chose blue (Babin, 2013). The same article notes that many people in Western cultures associate black with mourning, which is why black is absent from medical facilities and equipment (Babin, 2013). Most robots tend to be designed in light colors to convey cleanliness and familiarity and to promote positive user perceptions. Such design choices may help reduce discomfort by enhancing perceived safety and warmth (Rosenthal-Von Der Pütten & Krämer, 2014).² However, such choices are not neutral. The overrepresentation of white-colored robots may reinforce implicit racial biases, as users tend to project human stereotypes, including racial ones, onto robots. In experiments, people were more likely to ascribe negative traits to darker-skinned robots, or to act aggressively toward them, than to white ones, even when the robots were functionally identical (Addison et al., 2019; Bartneck et al., 2018). Color bias also appears in the robot shooter bias, whereby participants shoot faster at a darker-colored robot than at a lighter one (Addison et al., 2019). Moreover, a white-colored robot receives less discrimination than a black or rainbow-colored robot of the same type (Barfield, 2021). A robot’s height also affects emotional responses. Studies suggest that taller robots can elicit intimidation or fear, whereas robots positioned below eye level tend to feel less threatening and more approachable (Hiroi & Ito, 2016).

Social bias comes in many forms and may lead to discrimination, which can take the form of sexism, racism, stereotyping and xenophobia. In hospitality and tourism companies that use service robots, for example, the robot’s embodiment and design choices (e.g., gender, color and uniform) can evoke negative emotions in employees (Seyitoğlu & Ivanov, 2023). Discrimination can also arise when large language model (LLM)-driven robots engage in decision-making and exhibit discriminatory behavior. Such behavior raises well-known ethical concerns and perpetuates existing social injustices. In this regard, Zhou (2024) demonstrates that LLM-driven robots exhibit negative behavior when interacting with racial minorities, certain nationalities or individuals with disabilities.

In addition, the rapid progress in ML and the expansion of computing power in recent years have significantly enhanced the learning capabilities of robots (Hitron, Megidish, Todress, Morag & Erel, 2022). These advancements have facilitated the development of more complex robot behavior, enabling robots to perform tasks that require sequential decision-making in dynamic environments. Thus, ML has propelled the growth of robotic domains across the spectrum, from simple automation to complex autonomous systems (Londoño et al., 2024). Most robot learning algorithms follow a data-driven paradigm (Londoño et al., 2024), allowing robots to learn automatically from vast datasets with guidance or supervision (Nwana, 1996; Rani, Liu, Sarkar & Vanman, 2006). The learning process optimizes models to perform specific tasks, such as navigation (Singamaneni et al., 2024). These methods are essential in helping robots generalize their behavior to various contexts and environments, making learning a key component in developing intelligent robotic systems (Soori, Arezoo & Dastres, 2023).

However, this reliance on data and ML also introduces biases, which can emerge both in the data used to train these models (Shahbazi, Lin, Asudeh & Jagadish, Reference Shahbazi, Lin, Asudeh and Jagadish2023) and in the algorithms that process this data; in this sense, algorithmic bias primarily stems from the software used in the ML process (Takan et al., Reference Takan, TAKAN, Yaman and Kılınççeker2023). As a result, the ethical implications of these biases, particularly in the domain of robot learning, are becoming increasingly significant (Alarcon et al., Reference Alarcon, Capiola, Hamdan, Lee and Jessup2023). The risks associated with AI bias could be even more severe in robots, as they are often seen as autonomous entities and operate without direct human intervention (Hitron et al., Reference Hitron, Megidish, Todress, Morag and Erel2022). Data and algorithmic biases in AI have been widely detected across various fields. In healthcare, biases have been identified in automated diagnosis and treatment systems (Obermeyer, Powers, Vogeli & Mullainathan, Reference Obermeyer, Powers, Vogeli and Mullainathan2019). In applicant tracking systems, these biases can influence candidate selection and exclusion (Frissen, Adebayo & Nanda, Reference Frissen, Adebayo and Nanda2023). In online advertising, algorithms may target ads unequally across different groups (Lambrecht & Tucker, Reference Lambrecht and Tucker2024). In image generation tools, biases have been found to perpetuate visual stereotypes (Naik, Gostu & Sharma, Reference Naik, Gostu and Sharma2024). Lastly, predictive policing tools have shown discriminatory tendencies, exacerbating social justice issues (Alikhademi et al., Reference Alikhademi, Drobina, Prioleau, Richardson, Purves and Gilbert2022).

3.3. Fairness as preventing exploitation of users

In European consumer law, unfairness primarily addresses imbalances in contractual relationships. Under the EU’s framework, such imbalances may stem from the terms of a contract or from the commercial practices surrounding it. The concept of “unfair” is central to two primary Directives within the EU’s consumer protection framework: Council Directive 93/13/EEC on unfair terms in consumer contracts and Directive 2005/29/EC on unfair business-to-consumer commercial practices in the internal market. These Directives aim to protect consumers from exploitative practices, acknowledging the structural asymmetries in information and bargaining power between consumers and businesses (Willett, Reference Willett2010). Additionally, in the specific area of consumer law concerned with product safety, fairness is also regarded as a central objective of regulation. In this sense, the OECD Recommendation of the Council on Consumer Product Safety recognizes that “compliance with product safety requirements by all economic operators can support a safe, fair and competitive consumer product marketplace.”

In this context, the term “unfairness” emerges in two specific areas within the sphere of economic relations between consumers and businesses. First, it reflects the informational and economic asymmetries between businesses and consumers (fairness in consumer relations), and second, it serves as a condition for ensuring consumer safety in the marketplace (fairness in markets). The former typically arises through abusive clauses and practices, while the latter arises when minimum safety standards are not guaranteed. Thus, in the specific field of robotics, unfairness, understood as the failure to prevent the exploitation of users, could arise in both contexts.

Although the robot market is still in its early stages, robots may become suitable for widespread consumer adoption in the near future (Randall & Šabanović, Reference Randall and Šabanović2024). If robots become widely accessible to consumers, market asymmetries will likely become a significant concern. Research shows that informational asymmetries are particularly pronounced in technical contexts such as financial services (Cartwright, Reference Cartwright2015; Lim & Letkiewicz, Reference Lim and Letkiewicz2023). In robotics, as with other high-tech products, making an optimal and efficient purchasing decision requires a deep understanding of numerous complex technical aspects. Consequently, most consumers could be vulnerable to unfair commercial practices related to the functionality or performance of a particular robot, or could be pressured into accepting unfair clauses in consumer contracts (Hartzog, Reference Hartzog2014).

Furthermore, significant unfairness concerns, especially regarding the prevention of consumer exploitation, are likely to stem from security and safety issues. Safety, too, can be seen as a problem of informational asymmetry (Choi & Spier, Reference Choi and Spier2014; Edelman, Reference Edelman2009). Without adequate safety regulations, consumers face an adverse selection problem, as they cannot discern which products (robotic devices, in this case) may pose economic or physical risks.

The issue of unfairness in robot performance becomes significantly more concerning when consumer vulnerability is considered. Consumer vulnerability is currently understood as “a dynamic state that varies along a continuum as people experience more or less susceptibility to harm due to varying conditions and circumstances” (Salisbury et al., Reference Salisbury, Blanchard, Brown, Nenkov, Hill and Martin2023). In this sense, older adults, children or individuals with disabilities may have limited capacity to recognize or respond to inconsistencies or deficiencies in a robot’s functionality (Søraa & Fosch-Villaronga, Reference Søraa and Fosch-Villaronga2020). For instance, if a robotic device designed to assist elderly users fails to perform accurately, the consequences could range from diminished quality of life to serious physical harm. Furthermore, vulnerable users may be less likely to understand or challenge technical failures, especially if the system lacks transparency features or provides insufficient recourse for complaints. This amplifies the potential for exploitation and places these consumers at heightened risk, making robust regulatory standards and clear accountability for robot performance essential to protect their well-being. In this sense, fairness could also be understood as protecting consumers from deceptive robots. Leong and Selinger (Reference Leong and Selinger2019) show how deceptive anthropomorphism can lead users to trust or bond with robots in ways that may not be in their best interest. As a countermeasure, robots should be designed with transparency cues that clearly indicate they are machines, such as maintaining robotic voice patterns or using visual indicators of artificiality, especially in contexts involving vulnerable populations (Felzmann et al., Reference Felzmann, Fosch-Villaronga, Lutz and Tamo-Larrieux2019a).

3.4. Fairness as transparency and accountability

At its core, transparency entails rendering AI systems’ decision-making processes and underlying algorithms understandable and accessible to users and stakeholders (Felzmann et al., Reference Felzmann, Fosch-Villaronga, Lutz and Tamò-Larrieux2020; Larsson & Heintz, Reference Larsson and Heintz2020). This is particularly needed given the complexity and opacity often inherent in AI systems and the significant risks they can pose to fundamental rights and public interests (Eschenbach, Reference Eschenbach2021). Transparency helps users understand how decisions are made, which is essential for building trust and ensuring accountability (Felzmann, Villaronga, Lutz & Tamò-Larrieux, Reference Felzmann, Villaronga, Lutz and Tamò-Larrieux2019b).

In HRI, research on transparency has mainly focused on the explainability of systems, looking either at how easily the robot’s actions can be understood (intelligibility) or at how well users grasp the robot’s behavior (understandability) (Fischer, Reference Fischer2018). The results are mixed: some studies report no significant findings, while others point out the drawbacks of transparency (Felzmann et al., Reference Felzmann, Villaronga, Lutz and Tamò-Larrieux2019b). Such drawbacks include disrupting smooth interaction and, when a robot is overly transparent about its course of action, fostering misunderstandings or inaccurate assumptions about its abilities in specific contexts. Other research emphasizes the limitations of transparency as an ideal, showing that it can create misleading distinctions, prioritize visibility over genuine comprehension and sometimes even cause harm (Ananny & Crawford, Reference Ananny and Crawford2018).

In the context of robotics, transparency can also refer to users knowing how robots collect, process and use data (Felzmann et al., Reference Felzmann, Fosch-Villaronga, Lutz and Tamo-Larrieux2019a). For example, robots that assist vulnerable populations, such as children or older adults, must be clear about how decisions are made, particularly if those decisions affect users’ well-being. Transparency, moreover, is not just about disclosing information, as privacy regulations often frame it, but about ensuring that this information is meaningful and understandable to users and stakeholders. This is especially important because, as research shows, transparency can improve accountability by providing insights into decision-making processes (Lepri, Oliver, Letouzé, Pentland & Vinck, Reference Lepri, Oliver, Letouzé, Pentland and Vinck2018). Accountability requires that those responsible for the robot (manufacturers, developers or deployers) are answerable for the robot’s decisions. Although part of the community has debated whether a responsibility gap arises when a robot learns as it operates (Johnson, Reference Johnson2015), the contemporary understanding, also reflected in EU regulation and in the AIA in particular, is that transparency plays a key role by enabling different stakeholders, including authorities, to inspect decisions and enabling users to challenge those that significantly affect them. This is particularly important when robots are used in sensitive areas such as healthcare, where biased or inaccurate decisions could have serious consequences (Cirillo et al., Reference Cirillo, Catuara-Solarz, Morey, Guney, Subirats, Mellino and Mavridis2020). However, as noted in the literature, accountability does not follow automatically from transparency. Systems can be transparent yet still avoid accountability if there are no mechanisms to process and act on the disclosed information (Felzmann et al., Reference Felzmann, Fosch-Villaronga, Lutz and Tamò-Larrieux2020).

4. Furthering fairness in service robotics

Against this background, this paper treats fairness as a broad, all-encompassing concept without attempting to define it exhaustively. As mentioned, while much of the discussion on fairness centers on bias and discrimination, which are indeed essential elements, this paper does not address fairness solely through this lens. Other elements, such as the weaker-party protection rationale inherent in consumer protection law, are also relevant for fairness in service robotics because manufacturers may be in a stronger financial and informational position than users (Kotrotsios, Reference Kotrotsios2021). Therefore, in line with the four identified dimensions, the definition of fairness in service robotics proposed in this paper, which is primarily centered on a user safety perspective, is as follows:

Fairness in service robotics refers to the responsible and context-aware approach to the design, deployment, and use of robotic systems, with a focus on preventing harm, reducing systemic and individual inequalities, and upholding individual rights.

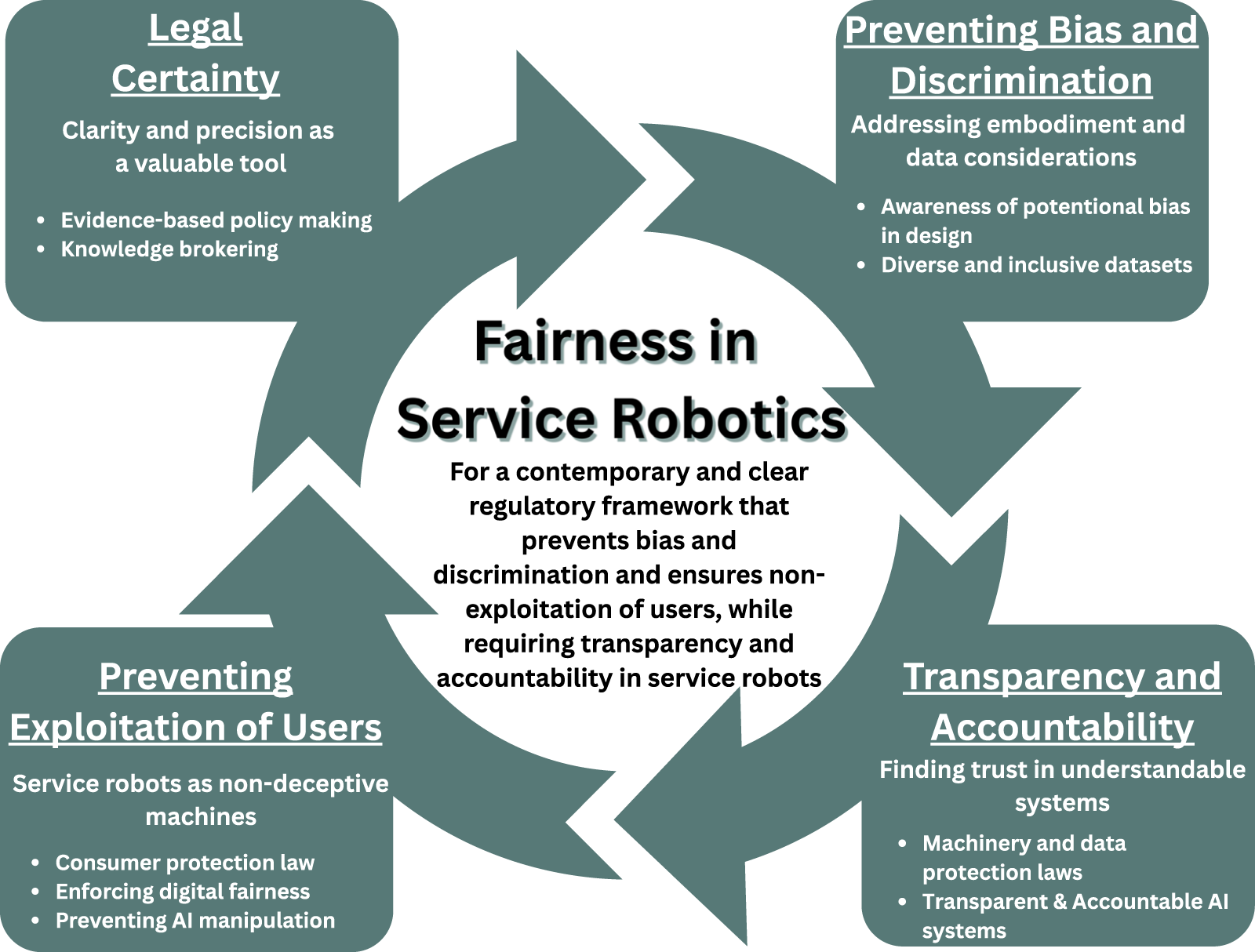

This broad approach is essential to preserve fairness’s expansive and evolving nature, which remains a fundamental regulatory and ethical goal. To give practical shape to this definition, we propose a framework based on four dimensions: legal certainty, prevention of bias and discrimination, protection against user exploitation and the promotion of transparency and accountability. The remainder of this section outlines specific measures aimed at furthering these dimensions, as depicted in Fig. 1:

Figure 1. Fairness in service robotics.

4.1. Legal certainty: clarity and precision as a valuable tool

Legal certainty is a complex regulatory goal. While overly detailed rules might prove ineffective, broader guiding principles might provide more adaptable and meaningful direction (Braithwaite, Reference Braithwaite2002). Principles alone, however, are not sufficient; legal certainty depends on their integration into a well-defined legal regime that enables actors to anticipate legal outcomes. In the context of service robotics, this requires coordinated efforts from academia, industry, and policymakers and can be furthered in two ways.

First, the evidence-based policymaking efforts of the EU, as outlined in the “Better Regulation agenda” (European Commission, n.d.-c) and the “Knowledge 4 Policy” platform (European Commission, n.d.-e), offer promising foundations for robotics regulation. Evidence-based policymaking seeks to ground legal decisions in scientific data and analysis (Pflücke, Reference Pflücke and Pflücke2024), helping ensure that regulatory choices are guided by objective and rational criteria rather than other considerations such as political ideologies (Princen, Reference Princen2022). In the context of robotics, this would mean using cogent scientific evidence to promote legal certainty and fairness (Calleja et al., Reference Calleja, Drukarch and Fosch-Villaronga2022).

Second, knowledge brokering, the practice of linking research and policy through science communication and multi-stakeholder engagement, can also contribute to robotics regulation design (Bielak, Campbell, Pope, Schaefer & Shaxson, Reference Bielak, Campbell, Pope, Schaefer, Shaxson, Cheng, Claessens and Gascoigne2008; Turnhout et al., Reference Turnhout, Stuiver, Klostermann, Harms and Leeuwis2013). Knowledge brokers act as intermediaries between researchers and policymakers, helping translate scientific insights into actionable guidance while retaining trust, transparency and legitimacy (Gluckman, Bardsley & Kaiser, Reference Gluckman, Bardsley and Kaiser2021). Several EU-funded projects have already contributed to this goal, including RoboLaw (RoboLaw, 2014), INBOTS (European Commission, n.d.-f), SIENNA (European Commission, n.d.-g), H2020 COVR LIAISON (Leiden University, n.d.-a) and H2020 Eurobench PROPELLING (Leiden University, n.d.-b). In particular, advancing fairness and achieving regulatory certainty in service robotics by using scientific methods for policy formation is the core of the (omitted for reviewing purposes) project (Leiden University, n.d.-c).

4.2. Bias and discrimination: addressing embodiment and data considerations

Bias in AI systems has long been recognized as a major fairness challenge, particularly when algorithms are trained on datasets that underrepresent or misrepresent specific demographic groups (Verhoef & Fosch-Villaronga, Reference Verhoef and Fosch-Villaronga2023). In such cases, models may reproduce and even amplify existing social inequalities (Howard & Borenstein, Reference Howard and Borenstein2018; Yapo & Weiss, Reference Yapo and Weiss2018). While expanding datasets and increasing diversity may help, algorithms optimized to identify patterns in historical data are still prone to reflecting the structural imbalances embedded within that data.

To detect these biases, scholars in ML rely on fairness metrics such as equal opportunity difference, average odds difference, statistical parity difference, disparate impact and the Theil index (González-Sendino, Serrano, Bajo & Novais, Reference González-Sendino, Serrano, Bajo and Novais2023). These mathematical tools offer structured ways of identifying disparities in how models perform across groups. However, such metrics often fall short of capturing the broader societal and contextual harms that biased systems can produce, particularly when fairness is reduced to statistical parity alone (Carey & Wu, Reference Carey and Wu2022, Reference Carey and Wu2023).
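To make the group fairness metrics named above more concrete, the following minimal sketch shows how they are commonly computed for a binary decision. The scenario is hypothetical: a service robot decides whether to offer assistance (1) or not (0) to eight users split into a privileged group "A" and an unprivileged group "B", and all data, group labels and function names are illustrative assumptions rather than the method of any cited work. The sketch follows one common convention (differences taken as unprivileged minus privileged, and the Theil index computed over individual "benefits" b_i = ŷ_i - y_i + 1); other conventions exist.

```python
import math
from typing import Dict, Hashable, List, Sequence, Tuple


def _rate(values: Sequence[int]) -> float:
    """Share of 1s in a list of binary values (0.0 for an empty list)."""
    return sum(values) / len(values) if values else 0.0


def group_rates(y_true: Sequence[int], y_pred: Sequence[int],
                group: Sequence[Hashable], g: Hashable) -> Tuple[float, float, float]:
    """Selection rate, true-positive rate and false-positive rate for group g."""
    idx: List[int] = [i for i, gi in enumerate(group) if gi == g]
    preds = [y_pred[i] for i in idx]
    on_pos = [y_pred[i] for i in idx if y_true[i] == 1]  # decisions for people who needed help
    on_neg = [y_pred[i] for i in idx if y_true[i] == 0]  # decisions for people who did not
    return _rate(preds), _rate(on_pos), _rate(on_neg)


def theil_index(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Generalized entropy index with alpha = 1 over benefits b_i = pred - true + 1."""
    benefits = [p - t + 1 for t, p in zip(y_true, y_pred)]
    mu = sum(benefits) / len(benefits)
    # Terms with b_i = 0 contribute 0, following the convention 0 * ln(0) = 0.
    return sum((b / mu) * math.log(b / mu) for b in benefits if b > 0) / len(benefits)


def fairness_metrics(y_true, y_pred, group, unprivileged, privileged) -> Dict[str, float]:
    sel_u, tpr_u, fpr_u = group_rates(y_true, y_pred, group, unprivileged)
    sel_p, tpr_p, fpr_p = group_rates(y_true, y_pred, group, privileged)
    return {
        "statistical_parity_difference": sel_u - sel_p,
        "disparate_impact": sel_u / sel_p if sel_p else float("inf"),
        "equal_opportunity_difference": tpr_u - tpr_p,
        "average_odds_difference": 0.5 * ((fpr_u - fpr_p) + (tpr_u - tpr_p)),
        "theil_index": theil_index(y_true, y_pred),
    }


# Hypothetical toy data: 1 = user needed / was offered assistance.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["A", "A", "A", "A", "B", "B", "B", "B"]

metrics = fairness_metrics(y_true, y_pred, group, unprivileged="B", privileged="A")
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

On this toy data, the statistical parity, equal opportunity and average odds differences all equal -0.5 and disparate impact is about 0.33, signalling that group "B" is offered help less often, including when help is needed. The numbers, however, say nothing about why this happens or what harm follows in a given care setting, which is precisely the limitation noted above.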

These limitations become even more pronounced in service robotics, where bias is not confined to data or decision outputs but also emerges through physical design and human interaction. Unlike purely virtual AI, service robots are embodied agents – they move through physical space, engage with people face-to-face and signal meaning through voice, gesture and form. Their hardware and interface design can unintentionally convey stereotypes or reinforce normative assumptions about gender, race, ability or cultural identity.

Howard and Borenstein (Reference Howard and Borenstein2018) highlight such risks through examples including a robot peacekeeper, a self-driving car and a medical assistant – each demonstrating how bias in design or behavior can have tangible, unequal impacts depending on the context of deployment.

Given this complexity, mitigating bias in service robotics must begin at the conceptualization and design stage. Manufacturers need to account for how robots are perceived and how different users might experience them. Robots such as social robots and exoskeletons should be developed with attention to diversity and inclusion, especially regarding sex, gender and cultural representation (Barfield, Reference Barfield2023a; Fosch-Villaronga & Drukarch, Reference Fosch-Villaronga and Drukarch2023; Søraa & Fosch-Villaronga, Reference Søraa and Fosch-Villaronga2020). Reembodiment – adapting a robot’s physical or behavioral presentation based on the people it interacts with – has been proposed as one way to foster cultural sensitivity and reduce stereotyping (Reig et al., Reference Reig, Luria, Forberger, Won, Steinfeld, Forlizzi and Zimmerman2021).

This area is evolving rapidly, and several promising technical and policy responses continue to emerge. Regulation (EU) 2024/1689 (the AIA) takes an important step in this direction, explicitly addressing fairness through the lens of bias and non-discrimination. Recital 27 emphasizes that AI systems must be developed inclusively to avoid “discriminatory impacts and unfair biases that are prohibited by Union or national law.”

4.3. Preventing exploitation of users: service robots as nondeceptive machines

Preventing the exploitation of users is a key component of fairness, particularly in contexts where individuals may be in a weaker informational or bargaining position compared to manufacturers. This concern is especially relevant in service robotics, where automated systems can subtly influence user behavior, exploit cognitive biases or obscure information through complex interfaces or persuasive design.

Existing and upcoming legal initiatives at the EU level provide a strong foundation for addressing these risks. Consumer protection law treats fairness as a principle designed to prevent the exploitation of consumers, who have a weaker bargaining position and less information than businesses, whether such exploitation occurs through mala fide contractual clauses (Council Directive 93/13/EEC), through the material distortion of consumer behavior (Directive 2005/29/EC) or through the conclusion of online distance contracts without adequate information (Directive (EU) 2023/2673).

Recently, the European Commission (EC) published its report on the fitness check related to “digital fairness” for consumers, which concluded that further measures, including greater legal certainty and simplification of rules, are required to protect consumers online (European Commission, n.d.-a). These measures could go a long way in service robotics, where users may not fully understand how decisions are made or how the system shapes their behavior (Felzmann et al., Reference Felzmann, Fosch-Villaronga, Lutz and Tamo-Larrieux2019a).

The AIA also provides a regulatory mechanism to address manipulation and deception risks. Article 5(1)(a) prohibits AI systems that deploy subliminal, purposefully manipulative or deceptive techniques that materially distort people’s behavior and cause significant harm, while Article 5(1)(b) prohibits AI systems that exploit the vulnerabilities of persons due to their age, disability or specific social or economic situation. As service robots increasingly integrate AI capabilities, the effective implementation and enforcement of these provisions will be essential to ensuring that such systems do not mislead, manipulate or otherwise take unfair advantage of users.

4.4. Transparency and accountability: finding trust in understandable systems

Effective enforcement of the EU legislation applicable to service robotics is foundational for ensuring transparency and accountability in these systems, particularly since recent legal initiatives have sought to address different elements of these robots. For instance, transparency and accountability are core elements of the recently adopted AIA, which can address these aspects in service robots that incorporate AI systems. Embodied service robots made available on the EU market are also likely to fall under the scope of the Machinery Regulation 2023 (Regulation (EU) 2023/1230), which replaces the Machinery Directive 2006 (Directive 2006/42/EC) and prescribes obligations for the economic operators (manufacturers, importers and distributors) of these robots. These economic operators are, inter alia, required to ensure that the robots meet certain essential health and safety requirements (e.g., Article 10(1), Machinery Regulation 2023). Further, Article 5(1)(a) of the GDPR specifies that personal data should be processed “fairly”. The meaning of fairness itself is unclear and not further elaborated (Clifford & Ausloos, Reference Clifford and Ausloos2018). However, the European Data Protection Board (EDPB) recently stated that, under the GDPR, transparency is fundamentally linked to fairness and that failing to be transparent about the processing of personal data is likely to be unlawful (EDPB, 2025). The overall framework of the GDPR also ensures the accountability of entities when service robots process personal data.

5. Conclusions

This paper has explored what fairness means in the context of service robotics, aiming to capture its normative, legal, technical and social dimensions. Through doctrinal research, supported by a multidisciplinary review, we proposed a working definition of fairness that considers both software and physical embodiment in robotics. Our analysis focused on four key dimensions through which fairness can be advanced in service robotics: (i) furthering legal certainty, (ii) preventing bias and discrimination, (iii) preventing exploitation of users and (iv) ensuring transparency and accountability. These dimensions serve not as a rigid checklist but as guiding principles for fostering fairer practices in the design, deployment and governance of service robots.

At the regulatory level, service robotics presents specific challenges that often fall between the cracks of broadly defined, technology-neutral legal frameworks. The principle of technology neutrality holds that regulations should avoid privileging or disadvantaging particular technologies and should instead focus on the functions they perform or the outcomes they produce (Greenberg, Reference Greenberg2015). While this ensures broad applicability, it can also obscure the specific risks posed by service robots. Service robots operate in both personal and professional environments where fairness concerns are deeply entangled with social vulnerability, human–machine interaction and embedded power asymmetries. In these environments, fairness becomes a core component of both safety and legitimacy, raising questions around bias, exclusion, privacy and the adequacy of current policy frameworks.

Central to this approach is the recognition that fairness cannot be addressed solely through legal or technical mechanisms. It also requires reflexivity, a continuous awareness of how researchers’ own values, assumptions and social positions shape the knowledge they produce (Palaganas, Sanchez, Molintas & Caricativo, Reference Palaganas, Sanchez, Molintas and Caricativo2017). Reflection involves a continuous dialogue (through introspection) between the assumptions and values that researchers bring into their field of study and the social ecosystems in which they are embedded, which in turn enriches both the process and its results (Palaganas et al., Reference Palaganas, Sanchez, Molintas and Caricativo2017). Reflexive researchers produce evidence-based scientific findings with the awareness that true objectivity does not exist and can only be aspired to. It is therefore necessary for authors to critically examine their call for “pure” legal certainty, acknowledging the influence of their own worldview throughout the research process.

This need for reflexivity extends to knowledge brokers as well, who mediate between academic evidence and policy decisions (Fosch-Villaronga et al., Reference Fosch-Villaronga, Shaffique, Schwed-Shenker, Mut-Piña, van der Hof and Custers2025). It is also imperative that policymakers engage with this knowledge reflectively and free from political bias, recognizing that their decisions carry tangible consequences in the real world. Complementing reflexivity, researchers’ efforts to minimize biases and to embark on long-term projects can also help achieve fairer outcomes. In this regard, peer review can help academics minimize biases in their work, consider how their worldview is presented in their articles and, ultimately, set a better and fairer example for the next generation of scientists working with quantitative and qualitative methods. Practicing slow science and embarking on long-term projects can, in turn, foster collaboration, support the assessment of research quality and give academics room to reflect on their work and biases (Frith, Reference Frith2020). In short, while complete objectivity is unattainable, acknowledging one’s positionality can support more transparent, inclusive and responsible policymaking.

Ultimately, while perfect fairness may remain aspirational, critically engaging with its complexities – conceptually, legally and ethically – can help create more equitable and trustworthy HRIs.

Funding statement

This work was funded by the ERC StG Safe and Sound project, a project that has received funding from the European Union’s Horizon-ERC program, Grant Agreement No. 101076929.

Competing interests

The authors declare no competing interests.

Dr. Eduard Fosch-Villaronga Ph.D. LL.M M.A. is Associate Professor and Director of Research at the eLaw–Center for Law and Digital Technologies at Leiden University (NL). Eduard is an ERC Laureate who investigates the legal and regulatory aspects of robot and AI technologies, focusing on healthcare, governance, diversity and privacy. Eduard Fosch-Villaronga is the Principal Investigator (PI) of his personal ERC Starting Grant SAFE & SOUND, where he works on science for robot policies. Eduard is also the PI of eLaw’s contribution to the Horizon Europe BIAS Project: Mitigating Diversity Biases of AI in the Labour Market. In 2023, Eduard received the EU Safety Product Gold Award for his contribution to making robots safer by including diversity considerations. Eduard is part of the Royal Netherlands Standardization Institute (NEN) as an expert and of the International Organization for Standardization (ISO) as a committee member in ISO/TC 299/WG 2, laying down Safety Requirements for Service Robots (ISO/CD 13482).

Dr. Antoni Mut Piña is a postdoctoral researcher at the eLaw–Center for Law and Digital Technologies at Leiden University, investigating science for robot policy in the ERC StG SAFE & Sound project. He completed his Ph.D. at the University of Barcelona, focusing on empirical legal analysis and consumer behavior. Antoni’s work employs advanced data analysis techniques to generate policy-relevant data. He holds degrees in Law and Business Administration, along with postgraduate studies in Political Analysis and Consumer Contract Law, and has experience in research on consumer vulnerability and regulatory policies.

Mohammed Raiz Shaffique is a PhD candidate at the eLaw–Center for Law and Digital Technologies at Leiden University researching law and robotics. Raiz investigates the generation and use of policy-relevant data to improve the regulatory framework governing physical assistant robots and wearable robots, as part of the ERC StG Safe & Sound project. Previously, he worked on age assurance and online child safety under the EU’s Better Internet for Kids+ initiative. Raiz earned his Advanced Master’s in Law and Digital Technologies at Leiden University with top honors and authored a book on white-collar crime in India. He also has six years of legal experience and expertise in cyber law and dispute resolution.

Marie Schwed-Shenker is a PhD candidate at the eLaw–Center for Law and Digital Technologies at Leiden University researching law and robotics. Her work focuses on science for social assistive robot policy as part of the ERC StG Safe & Sound project. Previously, she studied Practical Criminology at Hebrew University and Sociology and Anthropology at Tel Aviv University. Marie’s work has focused on ethical issues in youth volunteering and the role of media in radicalization. In previous research, she examined stereotypes of female prisoners in the media and the role of language in group radicalization.