1. Introduction

In the seminal work by Taylor (Reference Taylor1934), a two-dimensional fluid system, now termed four-roll mill (FRM), was designed to analyse the deformation of drops and the formation of emulsions. In this set-up, four identical cylinders submerged in a viscous liquid are driven by electric motors. By adjusting the rotational speeds of the rollers, the flow can vary from purely extensional to shear-dominated to purely rotational. The attributes of FRM have made it popular in various applications. For example, the four-roll mill or similar device has been used to generate controlled extensional flows, facilitating the study of droplet deformation and suspension dynamics in microfluidic environments (Hudson et al. Reference Hudson, Phelan, Handler, Cabral, Migler and Amis2004; Lee et al. Reference Lee, Dylla-Spears, Teclemariam and Muller2007), allowing for precise manipulation of cells, particles and drops, which is essential for applications in material science and chemical engineering (Rumscheidt & Mason Reference Rumscheidt and Mason1961; Bentley & Leal Reference Bentley and Leal1986b

). The FRM has also been instrumental in studying the behaviour of polymer solutions under various flow conditions. By adjusting the flow type and rate, researchers can investigate polymer chain stretching and orientation, which are critical for optimising industrial processes like extrusion and moulding. Relevant works include Fuller & Leal (Reference Fuller and Leal1981), Feng & Leal (Reference Feng and Leal1997) and Mackley (Reference Mackley2010). Notably, Bentley & Leal (Reference Bentley and Leal1986a

) designed a computer-controlled FRM that is capable of producing arbitrary linear flow fields. An automated control mechanism was proposed to stabilise droplets in the centre of the flow cell. Higdon (Reference Higdon1993) systematically investigated the extensional and rotational rates under different combinations of characteristic length ratios in a square box based on a two-dimensional simulation. For detailed reviews of the application of FRM in fluid mechanics, see Rallison (Reference Rallison1984) and Stone (Reference Stone1994). More recently, Vona & Lauga (Reference Vona and Lauga2021) used reinforcement learning (RL) to search for an optimal control policy that can drive a droplet to the centre via modulation of roller speeds at vanishingly small Reynolds number

![]() $Re$

.

$Re$

.

Given the unique importance of FRM in both academic research and real-world applications, it is of great interest to explore the accurate and robust control of the droplet in the FRM. Previous papers by Bentley & Leal (Reference Bentley and Leal1986a

) and Vona & Lauga (Reference Vona and Lauga2021) have laid a solid foundation. Based on these works, this study aims to extend the FRM control in the following two key aspects. First, we will consider moderate inertial effects with

![]() $Re\sim \mathcal{O}(1)$

in the FRM, a case seldom studied in the control of FRM. The effect of inertia on the control results will be elucidated in our task and this will help understand how the nonlinearity can be controlled in FRM. Second, we will leverage the geometric symmetry in FRM to facilitate the training and testing of the control policy. To the best of our knowledge, past works have not used the geometric symmetry in controlling the flow in FRM. Embedding and using intrinsic symmetry in machine learning algorithms represents a significant trend in the recent development (van der Pol et al. Reference van der Pol, Worrall, van Hoof, Oliehoek and Welling2020; Otto et al. Reference Otto, Zolman, Kutz and Brunton2023). With these improvements, our work aims to further test the applicability of deep RL (DRL) in guiding a droplet to the centre of the FRM using a direct numerical simulation method. The reasons for choosing the DRL as the control method are twofold. First, Bentley & Leal (Reference Bentley and Leal1986a

) demonstrated that a linear PID-type controller failed to stabilise a droplet, which will drift exponentially away from the stagnation point if uncontrolled. A PID-type controller regulates a process by combining proportional, integral and derivative actions, reacting to errors and correcting past offsets in a linear manner. Its inability to control the extensional flow is likely due to the inherently linear nature of the controller, which may be insufficient for managing the complex, nonlinear dynamics of such a flow system. Second, DRL has been applied successfully in controlling nonlinear flows (Rabault et al. Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019). It also represents the state of the art in the application of machine learning algorithms in controlling the unstable extensional flow in FRM, as first explored by Vona & Lauga (Reference Vona and Lauga2021).

$Re\sim \mathcal{O}(1)$

in the FRM, a case seldom studied in the control of FRM. The effect of inertia on the control results will be elucidated in our task and this will help understand how the nonlinearity can be controlled in FRM. Second, we will leverage the geometric symmetry in FRM to facilitate the training and testing of the control policy. To the best of our knowledge, past works have not used the geometric symmetry in controlling the flow in FRM. Embedding and using intrinsic symmetry in machine learning algorithms represents a significant trend in the recent development (van der Pol et al. Reference van der Pol, Worrall, van Hoof, Oliehoek and Welling2020; Otto et al. Reference Otto, Zolman, Kutz and Brunton2023). With these improvements, our work aims to further test the applicability of deep RL (DRL) in guiding a droplet to the centre of the FRM using a direct numerical simulation method. The reasons for choosing the DRL as the control method are twofold. First, Bentley & Leal (Reference Bentley and Leal1986a

) demonstrated that a linear PID-type controller failed to stabilise a droplet, which will drift exponentially away from the stagnation point if uncontrolled. A PID-type controller regulates a process by combining proportional, integral and derivative actions, reacting to errors and correcting past offsets in a linear manner. Its inability to control the extensional flow is likely due to the inherently linear nature of the controller, which may be insufficient for managing the complex, nonlinear dynamics of such a flow system. Second, DRL has been applied successfully in controlling nonlinear flows (Rabault et al. Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019). It also represents the state of the art in the application of machine learning algorithms in controlling the unstable extensional flow in FRM, as first explored by Vona & Lauga (Reference Vona and Lauga2021).

In the following, we will first introduce the flow problem and explain the numerical methods in § 2. The results will then be discussed and compared with those of Vona & Lauga (Reference Vona and Lauga2021) in § 3. The section also explains the advantage of leveraging the geometric symmetry in the FRM and discusses the effect of inertia. In § 4, we conclude the work with some discussions. Five appendices provide additional information on the delay in flow response due to inertia, effect of thermal noise, global policy regarding different initial conditions, hyperparameter fine-tuning and effects of different state definitions. Our code will be shared online upon the acceptance of the work.

2. Problem formulation and numerical methods

2.1. Direct numerical simulation and validation

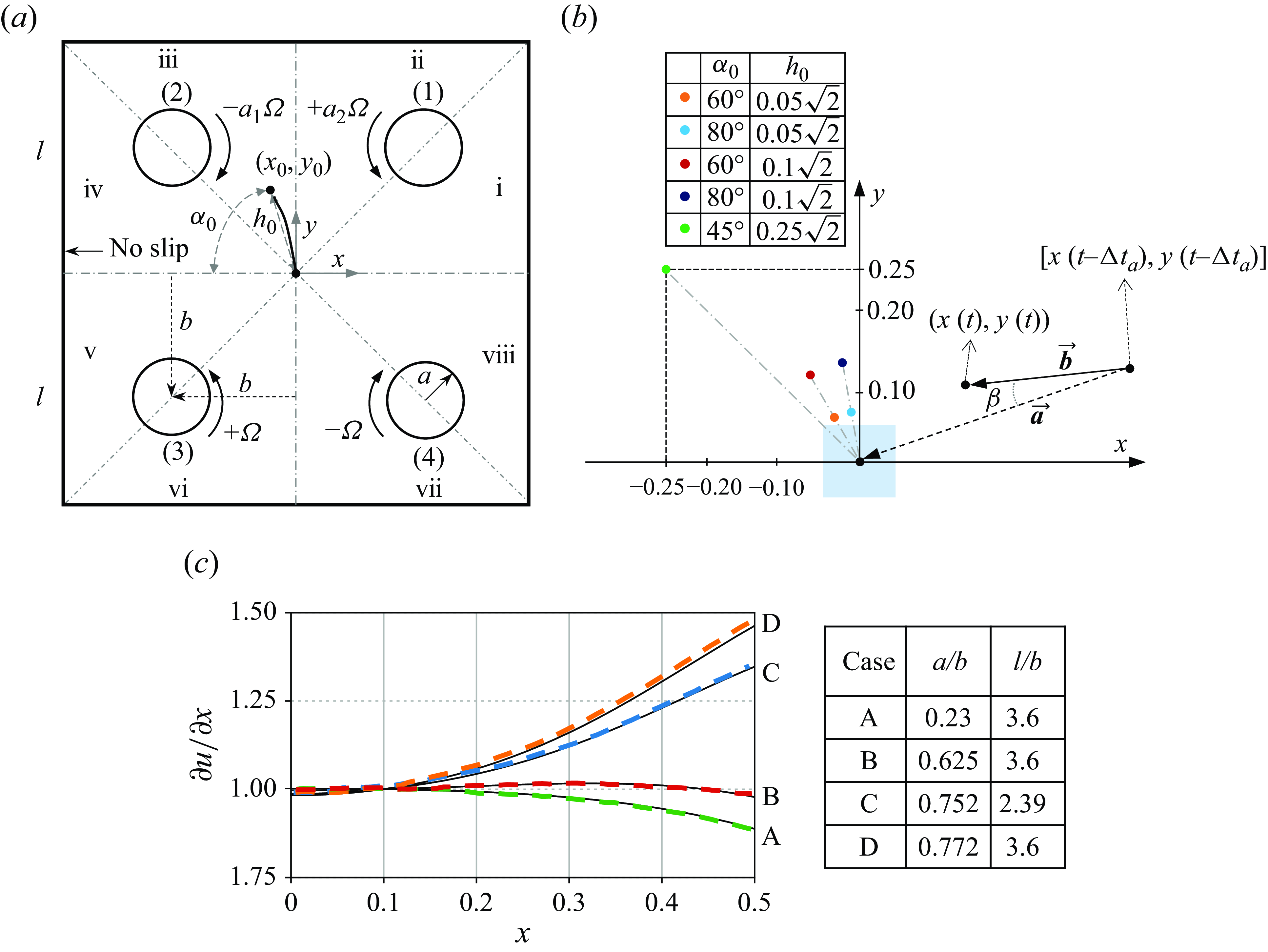

The diagram in figure 1(

![]() $a$

) depicts the two-dimensional (2-D) four-roll mill (FRM) instrument filled with a Newtonian fluid. The Cartesian coordinate originates from the centre of a square domain with side length

$a$

) depicts the two-dimensional (2-D) four-roll mill (FRM) instrument filled with a Newtonian fluid. The Cartesian coordinate originates from the centre of a square domain with side length

![]() $2l$

. The domain is divided into eight sub-quadrants, which will be discussed in the section on the geometric symmetry. Positioned at

$2l$

. The domain is divided into eight sub-quadrants, which will be discussed in the section on the geometric symmetry. Positioned at

![]() $(\pm b, \pm b)$

are four rollers, each with a radius

$(\pm b, \pm b)$

are four rollers, each with a radius

![]() $a$

. The rollers are indexed (1) to (4), corresponding to the first to the fourth quadrants, respectively. The baseline rotation rate of the rollers is denoted by

$a$

. The rollers are indexed (1) to (4), corresponding to the first to the fourth quadrants, respectively. The baseline rotation rate of the rollers is denoted by

![]() $\pm \Omega$

, with the positive (negative) sign representing the anticlockwise (clockwise) direction. To generate an extensional flow, the baseline rotation rates of the rollers from (1) to (4) are designated as

$\pm \Omega$

, with the positive (negative) sign representing the anticlockwise (clockwise) direction. To generate an extensional flow, the baseline rotation rates of the rollers from (1) to (4) are designated as

![]() ${\omega }_B=[+\Omega,\,-\Omega,\,+\Omega,\,-\Omega ]$

, respectively. A droplet is initially positioned at

${\omega }_B=[+\Omega,\,-\Omega,\,+\Omega,\,-\Omega ]$

, respectively. A droplet is initially positioned at

![]() $(x_0,y_0)$

in Cartesian coordinate or

$(x_0,y_0)$

in Cartesian coordinate or

![]() $(h_0,\alpha _0)$

in polar coordinate, where

$(h_0,\alpha _0)$

in polar coordinate, where

![]() $\alpha _0$

denotes the initial angle between the droplet and the negative

$\alpha _0$

denotes the initial angle between the droplet and the negative

![]() $x$

-axis and

$x$

-axis and

![]() $h_0$

is the initial radial distance. The two coordinates are related by

$h_0$

is the initial radial distance. The two coordinates are related by

![]() $x_0=-h_0\cos (\alpha _0)$

and

$x_0=-h_0\cos (\alpha _0)$

and

![]() $y_0=h_0\sin (\alpha _0)$

. Following Vona & Lauga (Reference Vona and Lauga2021), we make an assumption that the droplet is represented as a rigid fluid particle, meaning that it will not deform. In addition, the passive droplet experiences no external forces and its movement will not affect the flow field.

$y_0=h_0\sin (\alpha _0)$

. Following Vona & Lauga (Reference Vona and Lauga2021), we make an assumption that the droplet is represented as a rigid fluid particle, meaning that it will not deform. In addition, the passive droplet experiences no external forces and its movement will not affect the flow field.

Although flow with

![]() $Re\sim \mathcal{O}(1)$

can be approximated as Stokes flow, to faithfully capture the inertial effect, we solve the 2-D dimensionless incompressible Navier–Stokes equations, contrary to the linear framework adopted previously (Bentley & Leal Reference Bentley and Leal1986a

; Vona & Lauga Reference Vona and Lauga2021),

$Re\sim \mathcal{O}(1)$

can be approximated as Stokes flow, to faithfully capture the inertial effect, we solve the 2-D dimensionless incompressible Navier–Stokes equations, contrary to the linear framework adopted previously (Bentley & Leal Reference Bentley and Leal1986a

; Vona & Lauga Reference Vona and Lauga2021),

where

![]() $\boldsymbol{u}=(u, v)^{T}$

denotes the velocity,

$\boldsymbol{u}=(u, v)^{T}$

denotes the velocity,

![]() $p$

the pressure and

$p$

the pressure and

![]() $Re= {b\Omega a}/{\nu }$

, where

$Re= {b\Omega a}/{\nu }$

, where

![]() $\nu$

is kinematic viscosity. Our length scale is

$\nu$

is kinematic viscosity. Our length scale is

![]() $b$

, velocity scale is

$b$

, velocity scale is

![]() $\Omega a$

, time scale is

$\Omega a$

, time scale is

![]() $ {b}/{\Omega a}$

and pressure scale is

$ {b}/{\Omega a}$

and pressure scale is

![]() $\rho \Omega ^2 a^2$

. When

$\rho \Omega ^2 a^2$

. When

![]() $Re$

is small, the convective terms can be neglected and analytical solutions exist for the induced Stokes flow, as adopted by Vona & Lauga (Reference Vona and Lauga2021). In our study, however, we will retain all the terms even though the Reynolds number is small. This will facilitate the investigation of the (weak) inertial effect. Specifically, we will consider

$Re$

is small, the convective terms can be neglected and analytical solutions exist for the induced Stokes flow, as adopted by Vona & Lauga (Reference Vona and Lauga2021). In our study, however, we will retain all the terms even though the Reynolds number is small. This will facilitate the investigation of the (weak) inertial effect. Specifically, we will consider

![]() $Re=10^{-9}, 0.4, 2$

and 3. According to the data of Bentley & Leal (Reference Bentley and Leal1986a

), it is possible to realise these Reynolds numbers in experiments by adjusting the roller rotation rate and choosing a proper fluid. For simplicity, the descriptions in this section will be based on the representative case

$Re=10^{-9}, 0.4, 2$

and 3. According to the data of Bentley & Leal (Reference Bentley and Leal1986a

), it is possible to realise these Reynolds numbers in experiments by adjusting the roller rotation rate and choosing a proper fluid. For simplicity, the descriptions in this section will be based on the representative case

![]() $Re=0.4$

, as they remain the same for the other cases unless otherwise noted.

$Re=0.4$

, as they remain the same for the other cases unless otherwise noted.

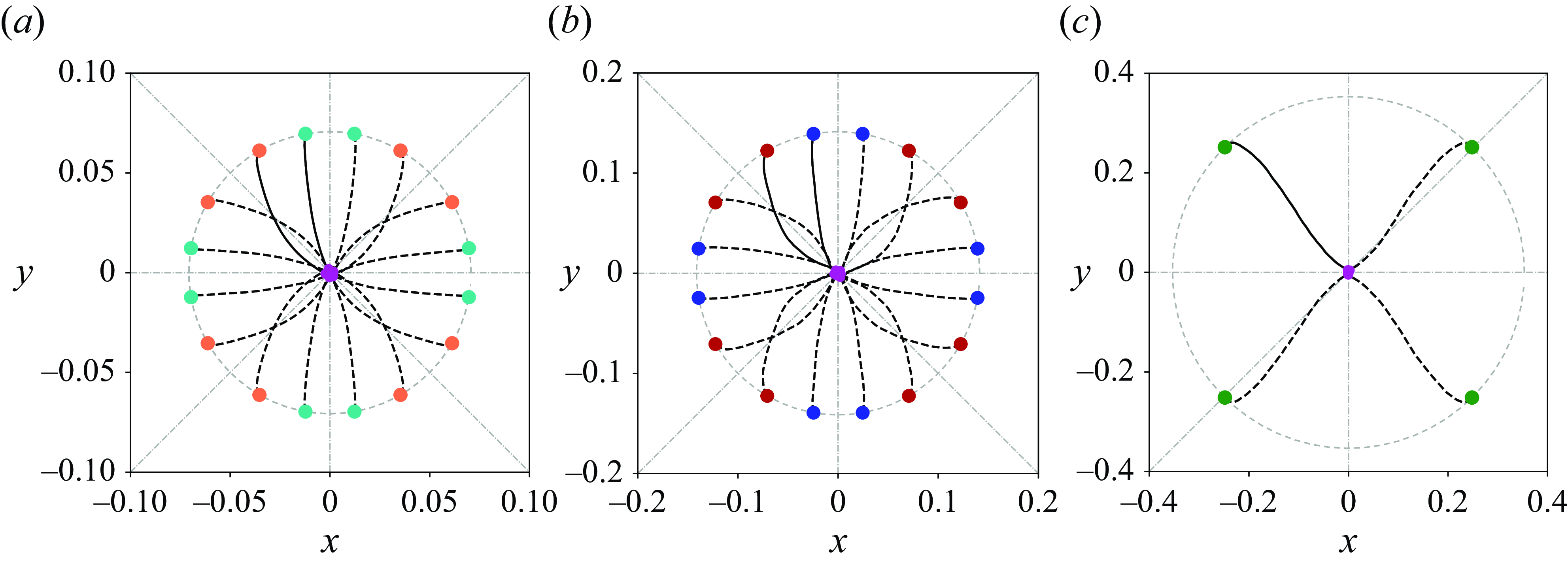

Figure 1. (

![]() $a$

) Schematic diagram of four-roll mill showing the control set-up for a droplet initially positioned in the sub-quadrant iii. (

$a$

) Schematic diagram of four-roll mill showing the control set-up for a droplet initially positioned in the sub-quadrant iii. (

![]() $b$

) The five initial positions of the droplet to be studied in the present work with different

$b$

) The five initial positions of the droplet to be studied in the present work with different

![]() $h_0$

and

$h_0$

and

![]() $\alpha _0$

. The blue shade encircles the initial positions considered by Vona & Lauga (Reference Vona and Lauga2021). The definition of the angle

$\alpha _0$

. The blue shade encircles the initial positions considered by Vona & Lauga (Reference Vona and Lauga2021). The definition of the angle

![]() $\beta$

in the reward definition (2.2) is also illustrated. (

$\beta$

in the reward definition (2.2) is also illustrated. (

![]() $c$

) Validation of our DNS results (black solid lines) against those (dashed lines) of Higdon (Reference Higdon1993) at vanishingly small

$c$

) Validation of our DNS results (black solid lines) against those (dashed lines) of Higdon (Reference Higdon1993) at vanishingly small

![]() $Re$

. The letters represent the cases of different

$Re$

. The letters represent the cases of different

![]() $a/b$

and

$a/b$

and

![]() $l/b$

as summarised in the table.

$l/b$

as summarised in the table.

The roller rotation is realised by imposing a velocity boundary condition on the rollers. On the boundary of a square domain, we consider no-slip boundary conditions following Higdon (Reference Higdon1993). To solve (2.1), the open-source code Nek5000 based on the spectral element method (Fischer, Lottes & Kerkemeier Reference Fischer, Lottes and Kerkemeier2017) is used. We have verified the mesh convergence of our FRM simulations, and chosen a mesh composed of 1436 elements of the order 7 for a good balance between accuracy and computational cost. Regarding time integration, the two-step backward differentiation scheme is adopted with a time step

![]() $\Delta t=10^{-3}$

time units.

$\Delta t=10^{-3}$

time units.

The past works (Fuller et al. Reference Fuller, Rallison, Schmidt and Leal1980; Fuller & Leal Reference Fuller and Leal1981; Higdon Reference Higdon1993) have identified

![]() ${a}/{b}$

and

${a}/{b}$

and

![]() ${l}/{b}$

as two principle design parameters for determining a proper approximation of extensional flow in the FRM test region. We have validated our numerical simulations at angular velocity amplitude of all rollers

${l}/{b}$

as two principle design parameters for determining a proper approximation of extensional flow in the FRM test region. We have validated our numerical simulations at angular velocity amplitude of all rollers

![]() $\Omega =1.6\ \mathrm{rad}\, \mathrm{s}^{-1}$

within a range of

$\Omega =1.6\ \mathrm{rad}\, \mathrm{s}^{-1}$

within a range of

![]() ${a}/{b}$

and

${a}/{b}$

and

![]() ${l}/{b}$

against the results of Higdon (Reference Higdon1993) at vanishingly small

${l}/{b}$

against the results of Higdon (Reference Higdon1993) at vanishingly small

![]() $Re$

, as shown in figure 1(

$Re$

, as shown in figure 1(

![]() $b$

). More precisely, for the case

$b$

). More precisely, for the case

![]() $B$

of

$B$

of

![]() $ {a}/{b}=0.625$

and

$ {a}/{b}=0.625$

and

![]() $ {l}/{b}=3.6$

, Higdon (Reference Higdon1993) reported that the extension rate at the origin under extensional flow is 0.7064 and the vorticity at the origin under rotational flow is 0.8250. Our numerical results yield 0.7065 and 0.8250, respectively. This case is chosen for the DRL control in our work.

$ {l}/{b}=3.6$

, Higdon (Reference Higdon1993) reported that the extension rate at the origin under extensional flow is 0.7064 and the vorticity at the origin under rotational flow is 0.8250. Our numerical results yield 0.7065 and 0.8250, respectively. This case is chosen for the DRL control in our work.

2.2. Control set-up

Vona & Lauga (Reference Vona and Lauga2021) assumed a rotlet solution of the flow induced by the rotation of rollers in an idealised Stokes flow, where the drop could respond to the changes of roller speed instantaneously. In their control set-up, they controlled the rotation rate of one roller with the other three rotating at a default angular velocity. However, in our simulations, we discovered that adjusting only one roller is overly restrictive and inefficient in the finite-

![]() $Re$

regime due to the existence of non-negligible inertia. As a result, our focus shifted to actuating two adjacent rollers closest to the initial position of the droplet. Note that for the case of

$Re$

regime due to the existence of non-negligible inertia. As a result, our focus shifted to actuating two adjacent rollers closest to the initial position of the droplet. Note that for the case of

![]() $Re=3$

, control using three rollers is necessary. For clarity, the following explanation of the control set-up will focus on two rollers, with the expansion to three rollers discussed later in § 3.3.2.

$Re=3$

, control using three rollers is necessary. For clarity, the following explanation of the control set-up will focus on two rollers, with the expansion to three rollers discussed later in § 3.3.2.

The chosen rollers will not change within a single control task. For example, given

![]() ${\omega }_B$

, for a droplet initially placed in the sub-quadrant iii, the two adjacent rollers chosen to control its trajectory are roller (1) and roller (2), see figure 1(

${\omega }_B$

, for a droplet initially placed in the sub-quadrant iii, the two adjacent rollers chosen to control its trajectory are roller (1) and roller (2), see figure 1(

![]() $a$

). We will modulate the baseline rotation rate of the roller closest to the droplet by multiplying it with an adjustable signal

$a$

). We will modulate the baseline rotation rate of the roller closest to the droplet by multiplying it with an adjustable signal

![]() $a_1(t)$

and similarly for that less close, multiply it by

$a_1(t)$

and similarly for that less close, multiply it by

![]() $a_2(t)$

. The DRL-controlled rotation rates of the rollers in this case then become

$a_2(t)$

. The DRL-controlled rotation rates of the rollers in this case then become

![]() ${\omega }^{iii}(t)=[+a_2(t)\Omega,\,-a_1(t)\Omega,\,+\Omega,\,-\Omega ]$

. The DRL algorithm aims to determine the signals

${\omega }^{iii}(t)=[+a_2(t)\Omega,\,-a_1(t)\Omega,\,+\Omega,\,-\Omega ]$

. The DRL algorithm aims to determine the signals

![]() $a_1(t),a_2(t)$

in time, to be elucidated shortly.

$a_1(t),a_2(t)$

in time, to be elucidated shortly.

Five different initial positions of the droplet are considered, see figure 1(

![]() $b$

). Among them, four initial positions are located within the sub-quadrant iii with varying distances to the origin and angles, i.e.

$b$

). Among them, four initial positions are located within the sub-quadrant iii with varying distances to the origin and angles, i.e.

![]() $[h_0,\alpha _0]=[0.1\sqrt {2},\ 60^{\circ } \text{ or } 80^{\circ }]$

,

$[h_0,\alpha _0]=[0.1\sqrt {2},\ 60^{\circ } \text{ or } 80^{\circ }]$

,

![]() $[0.05\sqrt {2},\ 60^{\circ } \text{ or } 80^{\circ }]$

. Vona & Lauga (Reference Vona and Lauga2021)confined the initial positions of the drops in the region of

$[0.05\sqrt {2},\ 60^{\circ } \text{ or } 80^{\circ }]$

. Vona & Lauga (Reference Vona and Lauga2021)confined the initial positions of the drops in the region of

![]() $x\in (-0.05,0.05),y\in (-0.05,0.05)$

(they normalised the length in the same way as we did). Thus, their drops were placed within

$x\in (-0.05,0.05),y\in (-0.05,0.05)$

(they normalised the length in the same way as we did). Thus, their drops were placed within

![]() $0.05\sqrt {2}$

of the origin. It is also noted that their normalised radius of the rollers is 0.8, which is slightly greater than ours. Our fifth case with

$0.05\sqrt {2}$

of the origin. It is also noted that their normalised radius of the rollers is 0.8, which is slightly greater than ours. Our fifth case with

![]() $[h_0,\alpha _0]=[0.25\sqrt {2},\ 45^{\circ }]$

defines a challenging task since the droplet is positioned substantially far away from the origin. For this case, we also vary the

$[h_0,\alpha _0]=[0.25\sqrt {2},\ 45^{\circ }]$

defines a challenging task since the droplet is positioned substantially far away from the origin. For this case, we also vary the

![]() $Re$

to investigate the inertial effect.

$Re$

to investigate the inertial effect.

2.3. Deep reinforcement learning

DRL is a machine-learning algorithm that leverages deep learning techniques and reinforcement learning principles to automate the decision-making process (Brunton, Noack & Koumoutsakos Reference Brunton, Noack and Koumoutsakos2020). The core concept of DRL-based control relies on an agent, approximated by an artificial neural network, learning to identify the optimal control policy through continuous interaction with the environment. By assessing the outcomes of its actions as either desirable or undesirable, the agent learns and adapts from these experiences according to a user-defined reward function.

The state input in our DRL algorithm includes the droplet’s position, velocity and acceleration, together defined as

![]() $s_t=[x(t),\,y(t),\,u(t),\,v(t),\,k_x(t),\,k_y(t)]\in \mathcal{S}$

. The position is obtained by integrating the velocity signal and the acceleration is calculated by differentiating the velocity signal in time. In contrast, Vona & Lauga (Reference Vona and Lauga2021) used solely the position as the state. In our simulations, using only the position as the input failed in the finite-

$s_t=[x(t),\,y(t),\,u(t),\,v(t),\,k_x(t),\,k_y(t)]\in \mathcal{S}$

. The position is obtained by integrating the velocity signal and the acceleration is calculated by differentiating the velocity signal in time. In contrast, Vona & Lauga (Reference Vona and Lauga2021) used solely the position as the state. In our simulations, using only the position as the input failed in the finite-

![]() $Re$

regime, which may be related to the non-negligible inertial effect in our case. The actions at time

$Re$

regime, which may be related to the non-negligible inertial effect in our case. The actions at time

![]() $t$

are

$t$

are

![]() $a_t=[a_1(t),\ a_2(t)]\in \mathcal{A}$

, adjusting the baseline rotation rates of the two adjacent rollers as explained earlier. The values of

$a_t=[a_1(t),\ a_2(t)]\in \mathcal{A}$

, adjusting the baseline rotation rates of the two adjacent rollers as explained earlier. The values of

![]() $a_1(t),\ a_2(t)$

are sampled between

$a_1(t),\ a_2(t)$

are sampled between

![]() $[-\eta ,\ \eta ]$

, where

$[-\eta ,\ \eta ]$

, where

![]() $\eta$

is a predefined constant. The reward function

$\eta$

is a predefined constant. The reward function

![]() $r(t)\in \mathbb{R}^+$

consists of

$r(t)\in \mathbb{R}^+$

consists of

![]() $r_1(t)$

,

$r_1(t)$

,

![]() $r_2(t)$

and

$r_2(t)$

and

![]() $r'$

, i.e.

$r'$

, i.e.

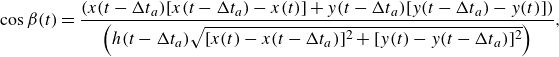

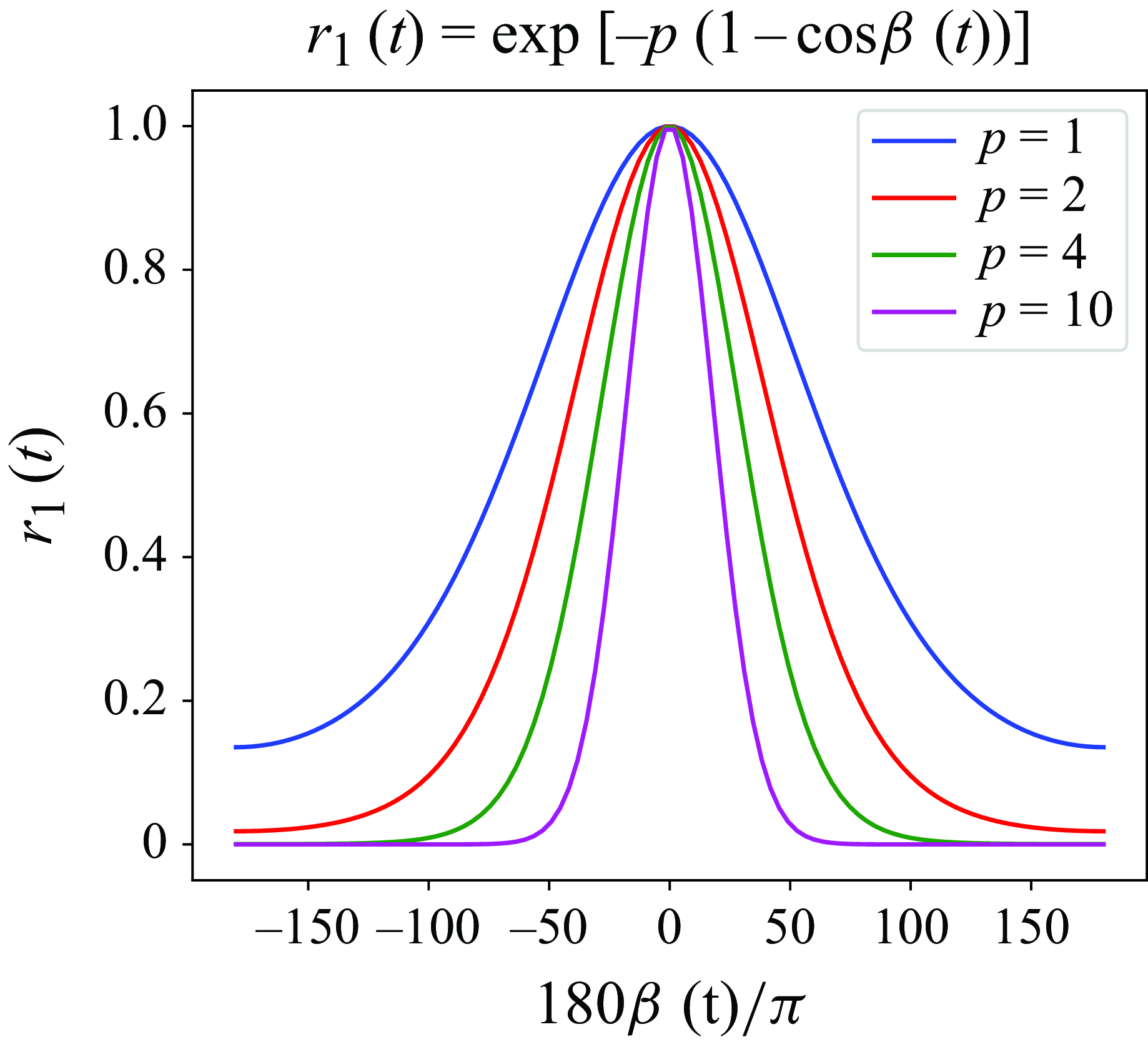

The definition of the reward

![]() $r_1(t)$

follows the work of Vona & Lauga (Reference Vona and Lauga2021) and is related to

$r_1(t)$

follows the work of Vona & Lauga (Reference Vona and Lauga2021) and is related to

![]() $\beta (t)$

, defined as the angle between the displacement vector

$\beta (t)$

, defined as the angle between the displacement vector

![]() $\boldsymbol{b}= [x(t)-x(t-{\Delta t_a}), \ y(t)-y(t-{\Delta t_a}) ]$

and the inward vector

$\boldsymbol{b}= [x(t)-x(t-{\Delta t_a}), \ y(t)-y(t-{\Delta t_a}) ]$

and the inward vector

![]() $\boldsymbol{a}= [-x(t-{\Delta t_a}),\ -y(t-{\Delta t_a}) ]$

, as illustrated in figure 1(

$\boldsymbol{a}= [-x(t-{\Delta t_a}),\ -y(t-{\Delta t_a}) ]$

, as illustrated in figure 1(

![]() $b$

), where

$b$

), where

\begin{align} \cos \beta (t) & = \frac{\left(x(t-{\Delta t_a}) [ x(t-{\Delta t_a})-x(t) ] + y(t-{\Delta t_a}) [ y(t-{\Delta t_a}) -y(t) ]\right)}{\left({h(t-{\Delta t_a}) \sqrt { [x(t)-x(t-{\Delta t_a}) ]^2+ [y(t)-y(t-{\Delta t_a}) ]^2}} \right)}, \end{align}

\begin{align} \cos \beta (t) & = \frac{\left(x(t-{\Delta t_a}) [ x(t-{\Delta t_a})-x(t) ] + y(t-{\Delta t_a}) [ y(t-{\Delta t_a}) -y(t) ]\right)}{\left({h(t-{\Delta t_a}) \sqrt { [x(t)-x(t-{\Delta t_a}) ]^2+ [y(t)-y(t-{\Delta t_a}) ]^2}} \right)}, \end{align}

and

and

![]() $\Delta t_{a}$

is the time interval for updating actions, i.e. the time interval between two control steps. The function

$\Delta t_{a}$

is the time interval for updating actions, i.e. the time interval between two control steps. The function

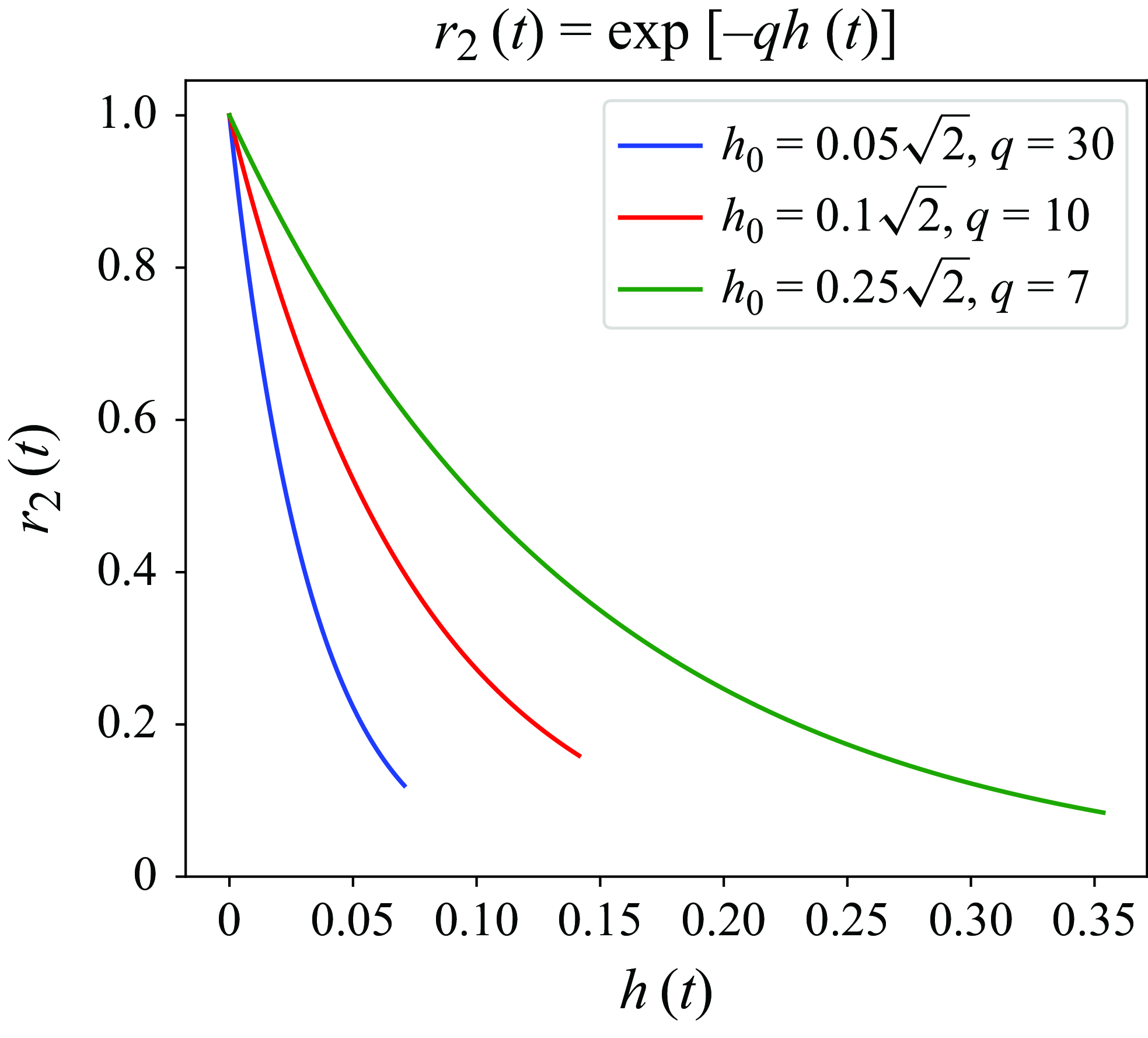

![]() $r_2(t)$

measures the droplet’s radial distance to the origin. The last term

$r_2(t)$

measures the droplet’s radial distance to the origin. The last term

![]() $r'$

is defined as

$r'$

is defined as

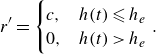

which is relevant only when the droplet reaches the target radial distance denoted by

![]() $h_e$

and

$h_e$

and

![]() $c$

is the final reward for the droplet reaching the target. Vona & Lauga (Reference Vona and Lauga2021) solely used

$c$

is the final reward for the droplet reaching the target. Vona & Lauga (Reference Vona and Lauga2021) solely used

![]() $r_1(t)$

in their reward function, which did not work well in our experiments of finite-

$r_1(t)$

in their reward function, which did not work well in our experiments of finite-

![]() $Re$

flows. Thus, we added

$Re$

flows. Thus, we added

![]() $r_2(t)$

to further incentivise the DRL controller to continuously increase the rewards as

$r_2(t)$

to further incentivise the DRL controller to continuously increase the rewards as

![]() $h(t)$

decreases and also

$h(t)$

decreases and also

![]() $r'$

for the terminal reward. In the above definition,

$r'$

for the terminal reward. In the above definition,

![]() $p,q$

are user-defined constants. A parametric study on

$p,q$

are user-defined constants. A parametric study on

![]() $p$

has been conducted by Vona & Lauga (Reference Vona and Lauga2021), which indicates that

$p$

has been conducted by Vona & Lauga (Reference Vona and Lauga2021), which indicates that

![]() $p=[0.5,\ 1,\ 1.5,\ 2]$

do not differ significantly and they chose

$p=[0.5,\ 1,\ 1.5,\ 2]$

do not differ significantly and they chose

![]() $p=1$

. In our DRL training, we found that

$p=1$

. In our DRL training, we found that

![]() $p$

can affect the convergence of training and the stability of the policy. As detailed in Appendix D, we studied the effect of hyperparameters in the reward functions and set

$p$

can affect the convergence of training and the stability of the policy. As detailed in Appendix D, we studied the effect of hyperparameters in the reward functions and set

![]() $p=2$

to encourage the droplet to move in the direction pointing to the origin. The values of the aforementioned parameters are summarised in table 1 with their meanings explained in table 2.

$p=2$

to encourage the droplet to move in the direction pointing to the origin. The values of the aforementioned parameters are summarised in table 1 with their meanings explained in table 2.

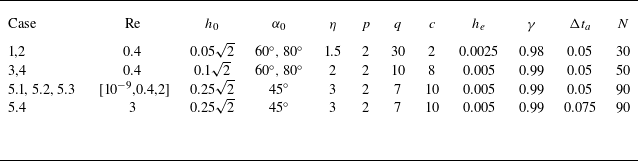

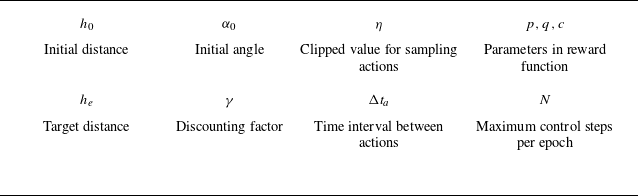

Table 1. The cases considered in this work and the parameters selected in each case under

![]() $a/b=0.625$

,

$a/b=0.625$

,

![]() $l/b=3.6$

.

$l/b=3.6$

.

![]() $h_0$

, initial radial distance to origin;

$h_0$

, initial radial distance to origin;

![]() $\alpha _0$

, initial angle;

$\alpha _0$

, initial angle;

![]() $\eta$

, clipped value for sampling actions;

$\eta$

, clipped value for sampling actions;

![]() $p,q,c$

, parameters in the reward function (2.2);

$p,q,c$

, parameters in the reward function (2.2);

![]() $h_e$

, target distance to origin;

$h_e$

, target distance to origin;

![]() $\gamma$

, discounting factor;

$\gamma$

, discounting factor;

![]() $\Delta t_a$

, time interval between adjacent control actions;

$\Delta t_a$

, time interval between adjacent control actions;

![]() $N$

, maximum control steps per epoch.

$N$

, maximum control steps per epoch.

Table 2. Explanations for the parameters in table 1.

The time interval between two consecutive actions, denoted as

![]() $\Delta t_a$

, appears to be an important parameter. Its effect on the flow control can be similarly studied as done by Bentley & Leal (Reference Bentley and Leal1986a

) by varying the time interval. We will consider fixed values of

$\Delta t_a$

, appears to be an important parameter. Its effect on the flow control can be similarly studied as done by Bentley & Leal (Reference Bentley and Leal1986a

) by varying the time interval. We will consider fixed values of

![]() $\Delta t_a$

. Specifically, the action is updated every 50 time steps, or

$\Delta t_a$

. Specifically, the action is updated every 50 time steps, or

![]() $\Delta t_a=0.05$

, in the cases

$\Delta t_a=0.05$

, in the cases

![]() $Re=[10^{-9},0.4,2]$

, whereas the action is updated every 75 time steps, or

$Re=[10^{-9},0.4,2]$

, whereas the action is updated every 75 time steps, or

![]() $\Delta t_a=0.075$

, in the case of

$\Delta t_a=0.075$

, in the case of

![]() $Re=3$

, as shown in table 1. Appendix A shows a heuristic approach for determining

$Re=3$

, as shown in table 1. Appendix A shows a heuristic approach for determining

![]() $\Delta t_a$

. Unlike in Vona & Lauga (Reference Vona and Lauga2021), wherein actions ramp up to their new values on a finite time scale, the actions here are constantly applied and unchanged during a control step, until it is updated at the next control step.

$\Delta t_a$

. Unlike in Vona & Lauga (Reference Vona and Lauga2021), wherein actions ramp up to their new values on a finite time scale, the actions here are constantly applied and unchanged during a control step, until it is updated at the next control step.

Proximal policy optimisation (PPO) is used as the training algorithm, which has a typical policy-based actor–critic network structure (Sutton & Barto Reference Sutton and Barto2018). The actor network

![]() $\pi _\theta (a_t \mid s_t )$

takes the state

$\pi _\theta (a_t \mid s_t )$

takes the state

![]() $s_t$

as the input and generates a probability distribution from which the action

$s_t$

as the input and generates a probability distribution from which the action

![]() $a_t$

is sampled. The critic network

$a_t$

is sampled. The critic network

![]() $V_\phi (s_t)$

predicts the value function of state

$V_\phi (s_t)$

predicts the value function of state

![]() $s_t$

, i.e. the discounted rewards starting from the state

$s_t$

, i.e. the discounted rewards starting from the state

![]() $s_t$

. The critic aims to provide an accurate prediction to minimise the objective function. For more technical details on PPO, the reader is referred to Schulman et al. (Reference Schulman, Wolski, Dhariwal, Radford and Klimov2017). This method has been used in previous DRL works applied to the flow control problems (Rabault et al. Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019) and also in our past work (Li & Zhang Reference Li and Zhang2022). Both the actor and the critic networks consist of two hidden layers each with 300 neurons using ReLU as the activation function. The networks are updated using the Adam-optimiser (Kingma & Ba Reference Kingma and Ba2014) with the learning rate of 0.0001 and of 0.0002 respectively. The allowable steps

$s_t$

. The critic aims to provide an accurate prediction to minimise the objective function. For more technical details on PPO, the reader is referred to Schulman et al. (Reference Schulman, Wolski, Dhariwal, Radford and Klimov2017). This method has been used in previous DRL works applied to the flow control problems (Rabault et al. Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019) and also in our past work (Li & Zhang Reference Li and Zhang2022). Both the actor and the critic networks consist of two hidden layers each with 300 neurons using ReLU as the activation function. The networks are updated using the Adam-optimiser (Kingma & Ba Reference Kingma and Ba2014) with the learning rate of 0.0001 and of 0.0002 respectively. The allowable steps

![]() $N$

in each epoch and the discount factor

$N$

in each epoch and the discount factor

![]() $\gamma$

are case-dependent, as summarised in table 1.

$\gamma$

are case-dependent, as summarised in table 1.

It is worthy mentioning that in addition to PPO, there are other algorithms in RL control. Based on how the DRL algorithms collect and use data, they can generally be classified into two categories, i.e. on-policy and off-policy methods. On-policy algorithms, such as PPO, learn exclusively from data generated by the current policy being trained. While this ensures that the training data are always aligned with the policy, it can lead to lower sample efficiency since past experiences are discarded. Off-policy algorithms, such as SAC (soft actor–critic) and DDPG (deep deterministic policy gradient), can learn from historical data collected by any policy, including those different from the current one. This reuse of past experiences makes off-policy methods more sample-efficient. However, their off-policy nature often introduces challenges in stability and convergence, requiring careful hyperparameter tuning and additional optimisation techniques.

In the fluid dynamics community, PPO has been widely adopted due to its stability and robustness, as demonstrated in studies such as Rabault et al. (Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019), Fan et al. (Reference Fan, Yang, Wang, Triantafyllou and Karniadakis2020), Ren, Rabault & Tang (Reference Ren, Rabault and Tang2021) and Li & Zhang (Reference Li and Zhang2022). DDPG has also been successfully applied in works like Bucci et al. (Reference Bucci, Semeraro, Allauzen, Wisniewski, Cordier and Mathelin2019), Zeng & Graham (Reference Zeng and Graham2021), Kim et al. (Reference Kim, Kim, Kim and Lee2022) and Xu & Zhang (Reference Xu and Zhang2023). For this study, we opted for PPO primarily because of its stability and lower sensitivity to hyperparameter variations. Furthermore, our approach leverages geometric symmetry, which already enhances the sample efficiency. This reduces the importance of the trade-off between stability and sample efficiency, making PPO a suitable choice for our framework. While other DRL algorithms might offer performance improvements, we believe that the core novelty of our work, that is, investigating inertial effects and geometric symmetry, is effectively captured within our current numerical framework. This will be demonstrated in § 3.

In the end, we explain the other theme of the work, that is, how to use the geometric symmetry in FRM for DRL control. In general, leveraging symmetry to enhance models in machine learning has recently emerged as a prominent research trend (Otto et al. Reference Otto, Zolman, Kutz and Brunton2023). In DRL-related works, similar concepts have demonstrated improved model performance, such as the invariance of locality discussed by Belus et al. (Reference Belus, Rabault, Viquerat, Che, Hachem and Reglade2019), Vignon et al. (Reference Vignon, Rabault, Vasanth, Alcántara-Ávila, Mortensen and Vinuesa2023), Vasanth, Rabault & Vinuesa (Reference Vasanth, Rabault, Alcántara-Ávila, Mortensen and Vinuesa2024) and Suárez et al. (Reference Suárez, lcantara-Á vila, Rabault, Miró, Font, Lehmkuhl and Vinuesa2024, Reference Suárez, lcantara-Á vila, Miró, Rabault, Font, Lehmkuhl and Vinuesa2025). In these works, the reward function is densely defined within specific local regions influenced by control actions. However, we emphasise that the geometric symmetry employed in this study is distinct from these approaches. It is derived from the global transformation framework introduced by van der Pol et al. (Reference van der Pol, Worrall, van Hoof, Oliehoek and Welling2020). Thanks to the geometric symmetry of FRM, the whole domain can be evenly divided into eight sub-quadrants from i to viii, as shown in figure 1(

![]() $a$

). Following the notation of van der Pol et al. (Reference van der Pol, Worrall, van Hoof, Oliehoek and Welling2020), for state

$a$

). Following the notation of van der Pol et al. (Reference van der Pol, Worrall, van Hoof, Oliehoek and Welling2020), for state

![]() $s\in \mathcal{S}$

, action

$s\in \mathcal{S}$

, action

![]() $a\in \mathcal{A}$

and reward

$a\in \mathcal{A}$

and reward

![]() $r\in \mathbb{R}^+$

, under transformation operators

$r\in \mathbb{R}^+$

, under transformation operators

![]() $L_g:\mathcal{S}\rightarrow \mathcal{S}$

and

$L_g:\mathcal{S}\rightarrow \mathcal{S}$

and

![]() $K_g^s:\mathcal{A}\rightarrow \mathcal{A}$

, a symmetry-enhanced DRL algorithm can be constructed as

$K_g^s:\mathcal{A}\rightarrow \mathcal{A}$

, a symmetry-enhanced DRL algorithm can be constructed as

where

![]() $L_g$

or

$L_g$

or

![]() $K_g^s$

defines the transformation of state or action using the inherent symmetry. The equation implies that the immediate reward of the state–action pair remains the same after the transformation. So,

$K_g^s$

defines the transformation of state or action using the inherent symmetry. The equation implies that the immediate reward of the state–action pair remains the same after the transformation. So,

![]() $s$

(or

$s$

(or

![]() $a$

) and

$a$

) and

![]() $L_g[s]$

(or

$L_g[s]$

(or

![]() $K_g^s[a]$

) are equivalent in

$K_g^s[a]$

) are equivalent in

![]() $\mathcal{S}$

(or

$\mathcal{S}$

(or

![]() $\mathcal{A}$

). One can train a control policy in the lifted space (i.e. one of the eight sub-quadrants) and, once the training process is converged, map the policy back and apply it to the entire domain (see § 3.2 for details). Note that this concept is different from constraining the numerical simulations of FRM in a sub-quadrant.

$\mathcal{A}$

). One can train a control policy in the lifted space (i.e. one of the eight sub-quadrants) and, once the training process is converged, map the policy back and apply it to the entire domain (see § 3.2 for details). Note that this concept is different from constraining the numerical simulations of FRM in a sub-quadrant.

3. Results and discussion

In the following, we will first present the typical training results of DRL for

![]() $Re=0.4$

. Then, the DRL policy leveraging the geometric symmetry of FRM will be constructed to demonstrate the advantage of symmetry consideration. Finally, we will focus on the other theme of the paper, i.e. the characterisation of the inertial effect in the framework of DRL control.

$Re=0.4$

. Then, the DRL policy leveraging the geometric symmetry of FRM will be constructed to demonstrate the advantage of symmetry consideration. Finally, we will focus on the other theme of the paper, i.e. the characterisation of the inertial effect in the framework of DRL control.

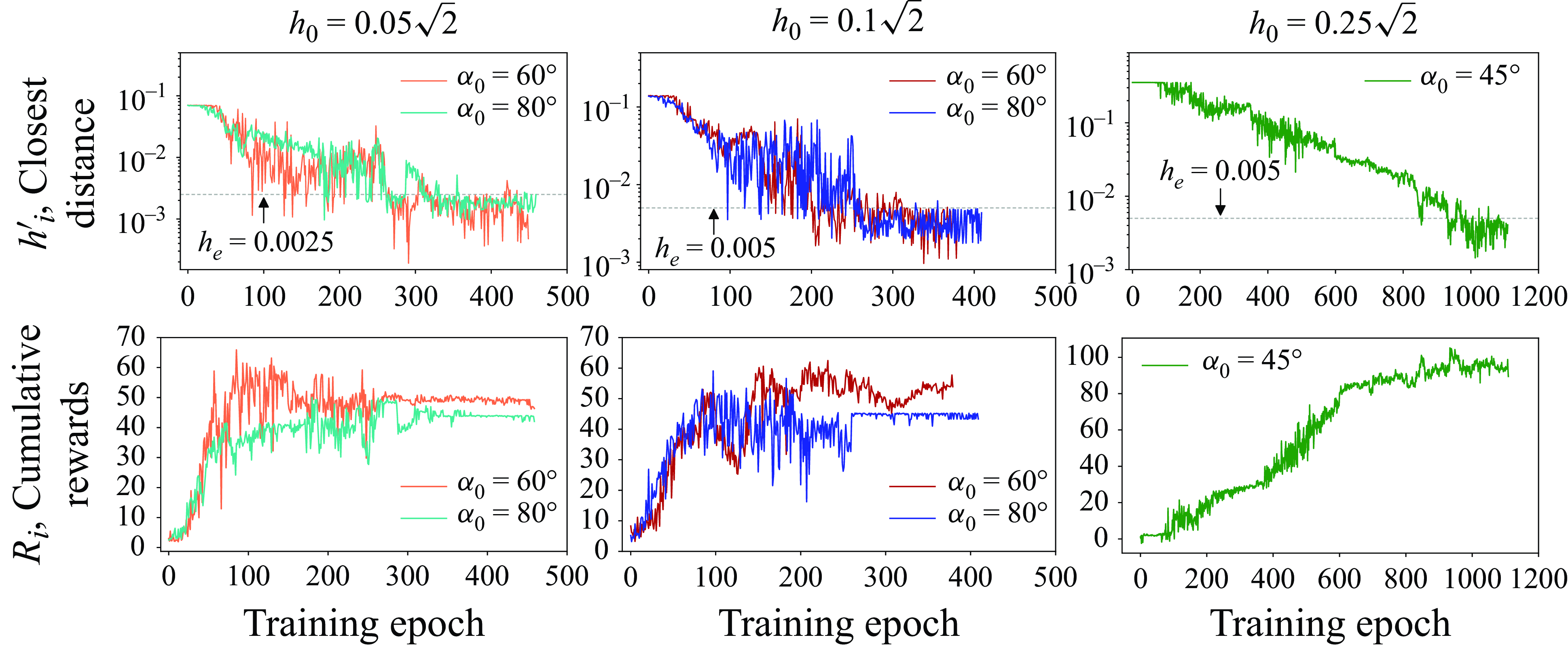

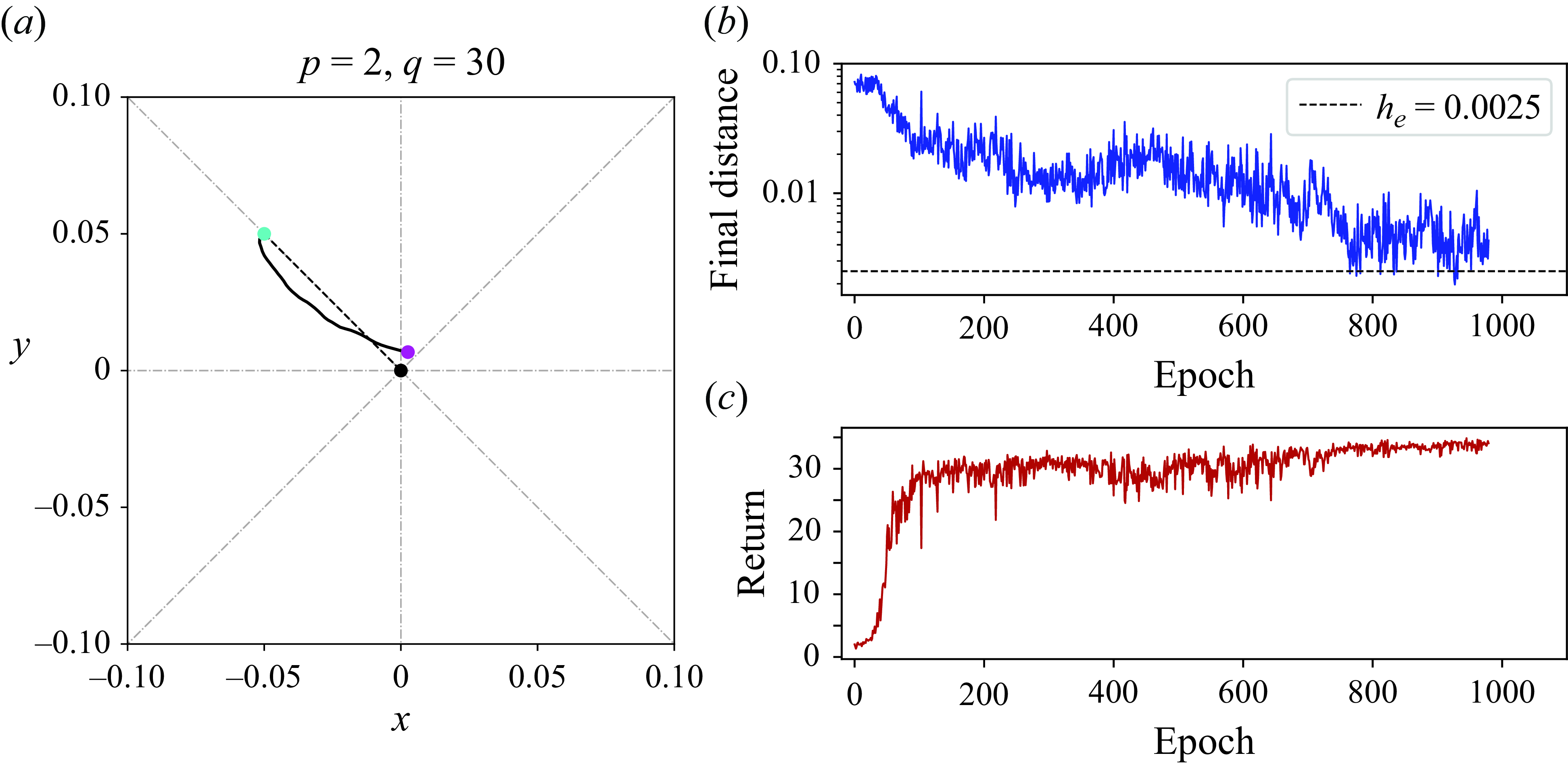

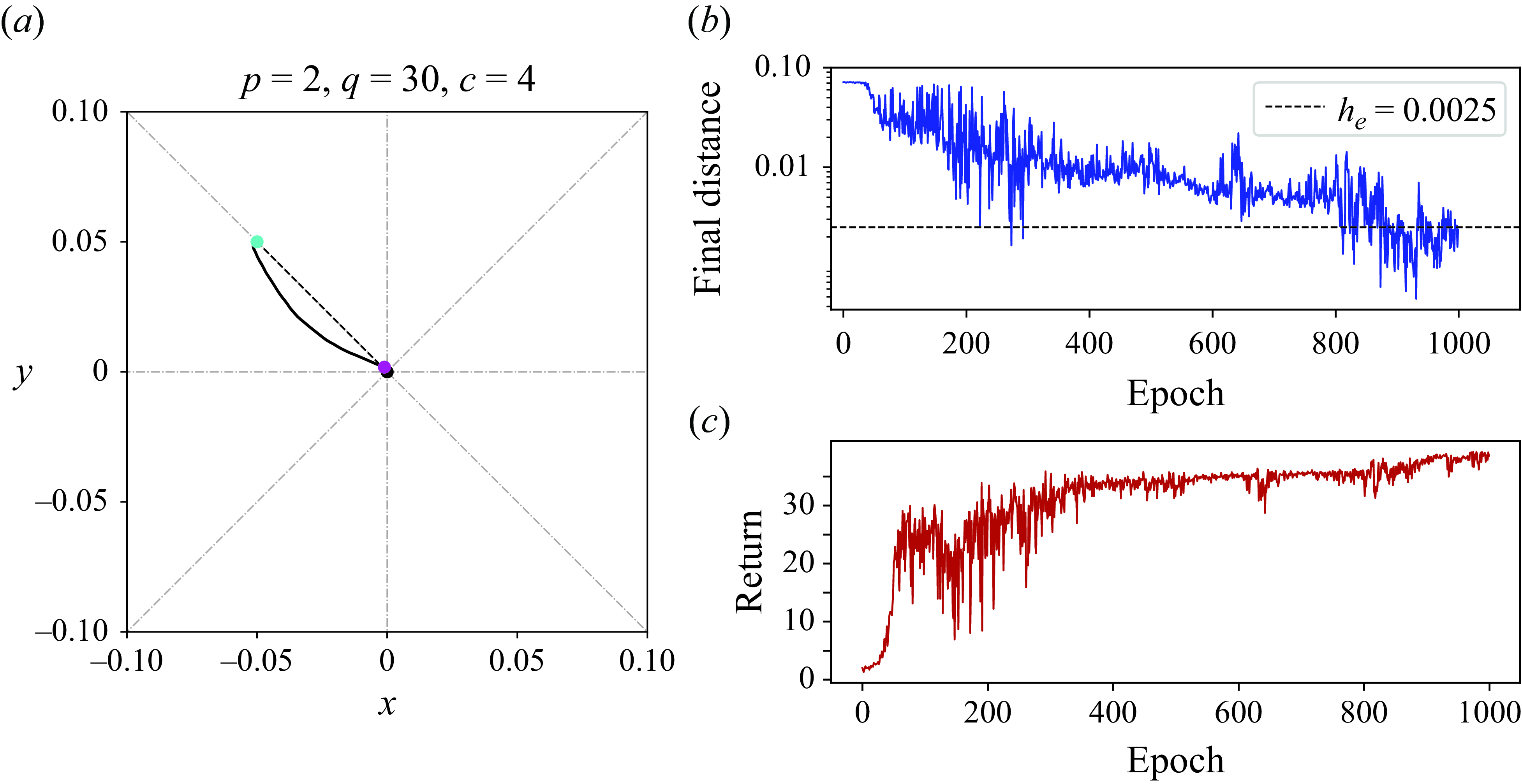

Figure 2. Agent training history for droplets at different initial positions. The first row corresponds to the closest radial distance to the origin. The second row shows the cumulative rewards of each epoch.

3.1. Training results of DRL for

$Re=0.4$

$Re=0.4$

This section explains the controlled results using the DRL method for a droplet initially placed in the sub-quadrant iii. Note that in this case, it is the roller (1) and (2) of which we implement the modulation of the rotation rates. Figure 2 displays the agent training history in terms of epochs for all the five considered cases. In the first row,

![]() $h'_i$

measures the minimum distance of the droplet to the origin in the

$h'_i$

measures the minimum distance of the droplet to the origin in the

![]() $i$

th epoch. As the training proceeds, the value of

$i$

th epoch. As the training proceeds, the value of

![]() $h'_i$

gradually decreases and consistently stays below

$h'_i$

gradually decreases and consistently stays below

![]() $h_e$

in the end. During the training, there are occasional ‘downward overshoots’ of

$h_e$

in the end. During the training, there are occasional ‘downward overshoots’ of

![]() $h'_i$

falling below

$h'_i$

falling below

![]() $h_e$

. We consider the training to have converged when there are more than 30–50 consecutive epochs of successful control, indicated by

$h_e$

. We consider the training to have converged when there are more than 30–50 consecutive epochs of successful control, indicated by

![]() $h'_i\lt h_e$

. Overall,

$h'_i\lt h_e$

. Overall,

![]() ${\sim}400$

epochs are commonly required to train cases 1–4 and

${\sim}400$

epochs are commonly required to train cases 1–4 and

![]() ${\sim}1100$

epochs for case 5. This difference additionally testifies to the difficulty in controlling the last case, which is substantially far from the origin. The second row of the figure shows the cumulative rewards

${\sim}1100$

epochs for case 5. This difference additionally testifies to the difficulty in controlling the last case, which is substantially far from the origin. The second row of the figure shows the cumulative rewards

![]() $R_i$

, which adds up all the immediate rewards of the training steps in the

$R_i$

, which adds up all the immediate rewards of the training steps in the

![]() $i$

th epoch. In cases 1–4, the values of

$i$

th epoch. In cases 1–4, the values of

![]() $R_i$

initially rise rapidly, followed by significant fluctuations, and eventually stabilise as the droplet nears the origin. Case 5 presents a continuous climbing-up trend in the training process. Combined together, the results of

$R_i$

initially rise rapidly, followed by significant fluctuations, and eventually stabilise as the droplet nears the origin. Case 5 presents a continuous climbing-up trend in the training process. Combined together, the results of

![]() $h_i$

and

$h_i$

and

![]() $R_i$

imply that the agent learns from the interactions with the flow environment and updates its policy to guide the droplet to move towards the origin. With a sufficient amount of training epochs, the droplet is capable of reaching the target distance indicated by

$R_i$

imply that the agent learns from the interactions with the flow environment and updates its policy to guide the droplet to move towards the origin. With a sufficient amount of training epochs, the droplet is capable of reaching the target distance indicated by

![]() $h_e$

. These results extend those of Vona & Lauga (Reference Vona and Lauga2021), where initial positions of drops typically within

$h_e$

. These results extend those of Vona & Lauga (Reference Vona and Lauga2021), where initial positions of drops typically within

![]() $h_0\lt 0.05\sqrt {2}$

were considered, and demonstrate that droplets placed further away from the origin can also be controlled successfully by DRL.

$h_0\lt 0.05\sqrt {2}$

were considered, and demonstrate that droplets placed further away from the origin can also be controlled successfully by DRL.

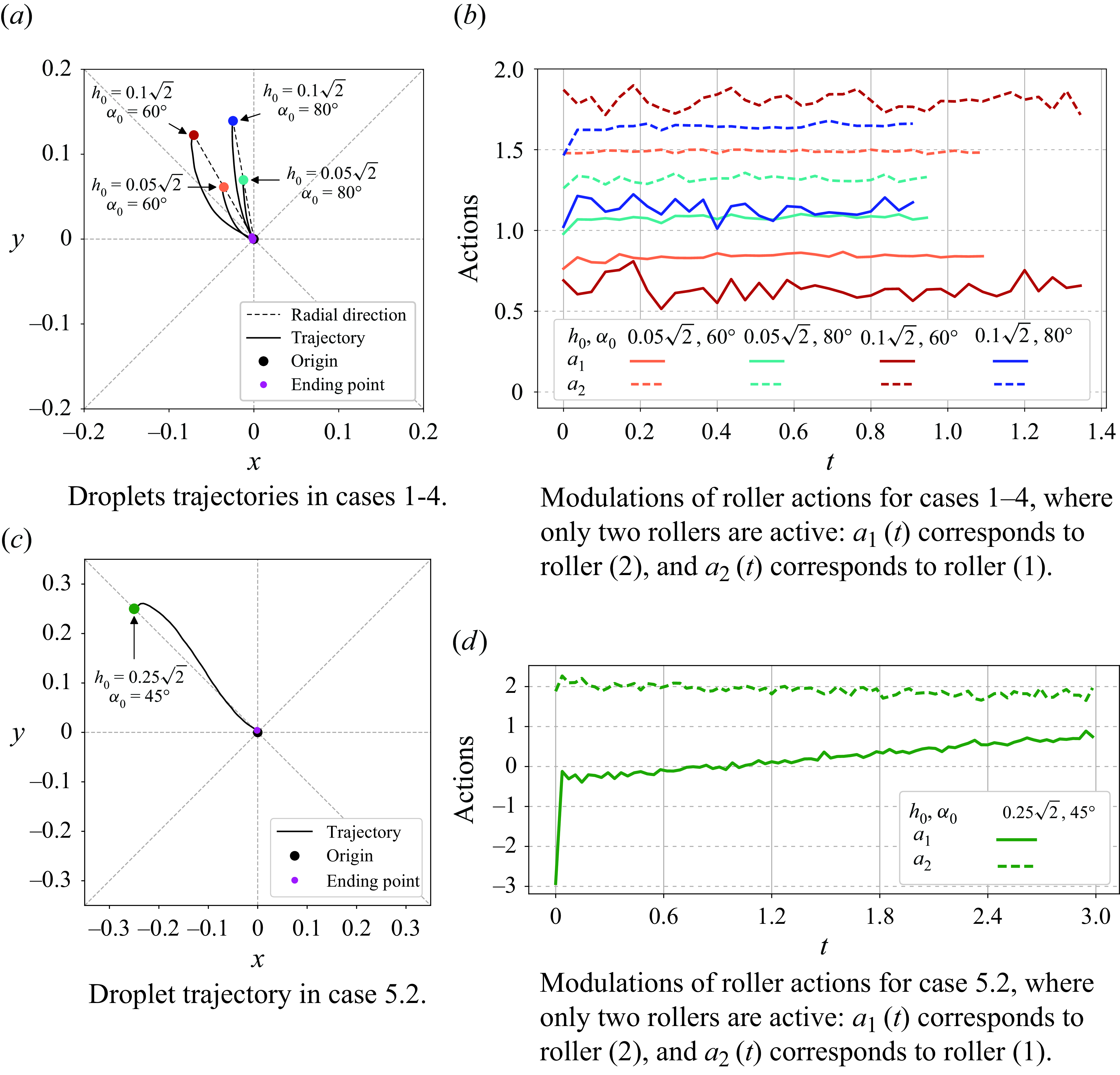

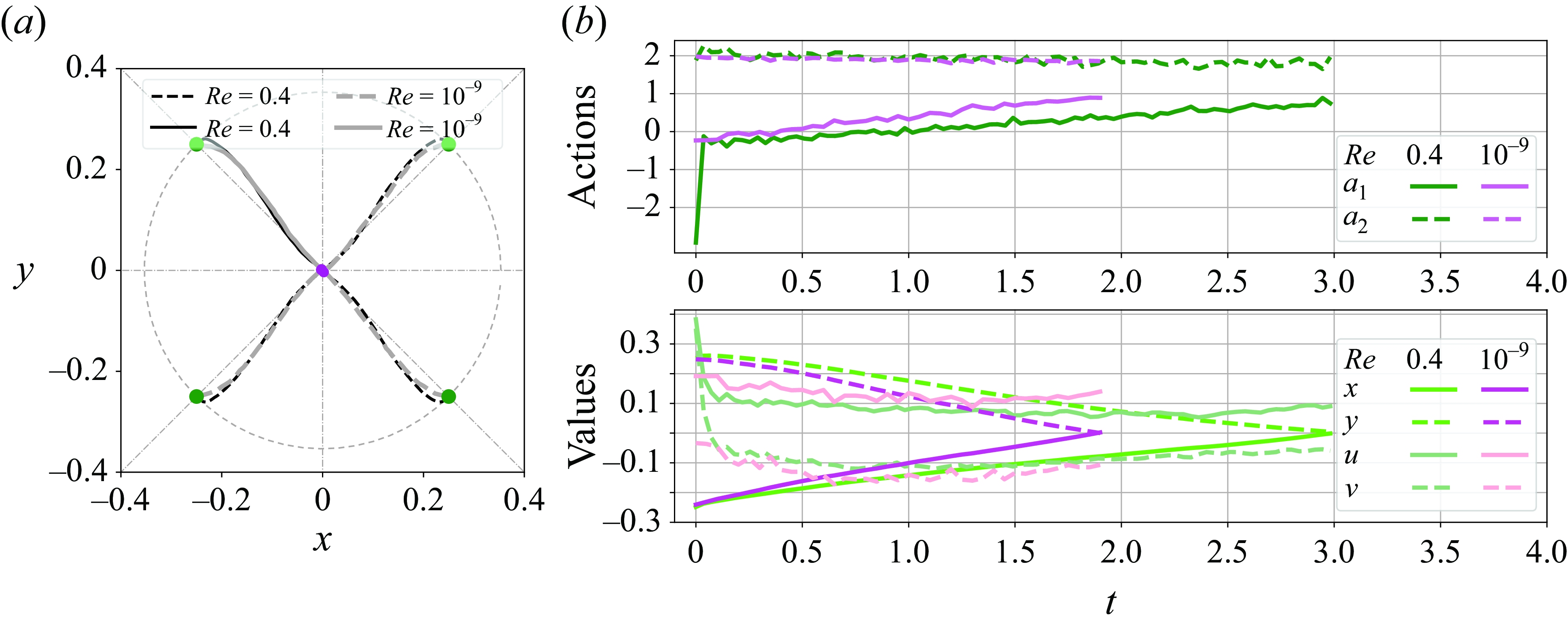

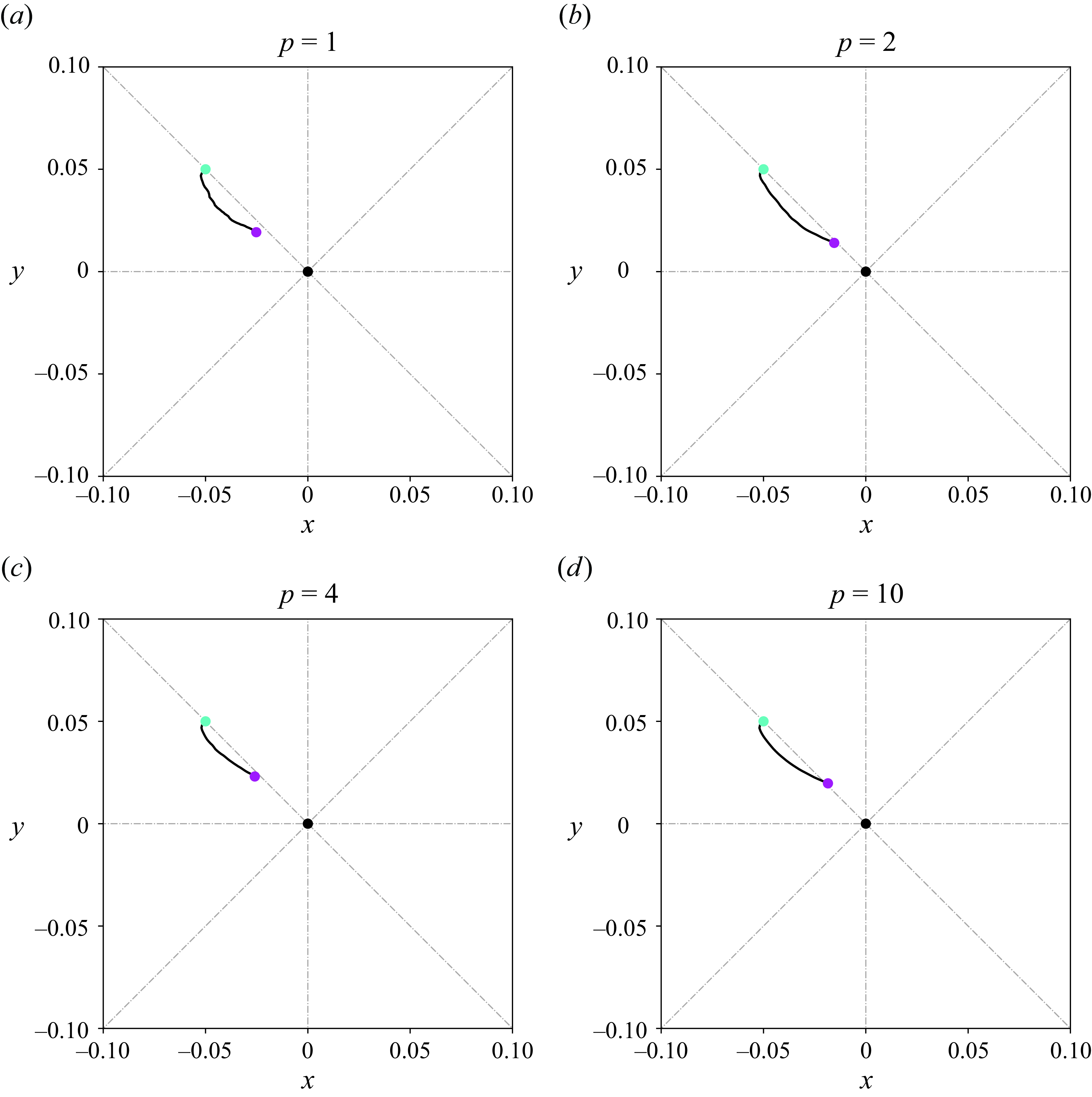

Figure 3. Converged policies in figure 2 are run for

![]() $50$

episodes at

$50$

episodes at

![]() $Re=0.4$

, all droplets are successfully driven to the target radial distance. Panels (a), (c) demonstrate representative trajectories for all the considered cases. Panels (b), (d) display the corresponding actions. Note that only two rollers (1) and (2) are acted on, and an action is followed by a waiting time

$Re=0.4$

, all droplets are successfully driven to the target radial distance. Panels (a), (c) demonstrate representative trajectories for all the considered cases. Panels (b), (d) display the corresponding actions. Note that only two rollers (1) and (2) are acted on, and an action is followed by a waiting time

![]() $\Delta t_a=50\Delta t$

until the next one, during which it is unchanged. Therefore, in each control step shown in panels (b), (d), actions are actually step functions, and continuous lines in these panels are guides for the eye.

$\Delta t_a=50\Delta t$

until the next one, during which it is unchanged. Therefore, in each control step shown in panels (b), (d), actions are actually step functions, and continuous lines in these panels are guides for the eye.

To test the effectiveness of trained policies, the converged ones have been run for an additional

![]() $50$

epochs and we find that in all the tests, the droplets can be driven back to the origin within the target radial distance

$50$

epochs and we find that in all the tests, the droplets can be driven back to the origin within the target radial distance

![]() $h_e$

. Figure 3 draws the representative trajectories and the associated actions for cases 1–4 in panel (

$h_e$

. Figure 3 draws the representative trajectories and the associated actions for cases 1–4 in panel (

![]() $a,b$

) and for case 5 in panel (

$a,b$

) and for case 5 in panel (

![]() $c,d$

). It can be noticed that these trajectories exhibit smooth transitions from their starting point to the ending point, which are in contrast to the results of Vona & Lauga (Reference Vona and Lauga2021) where the paths manifest zigzags. Possible reasons may include that Vona & Lauga (Reference Vona and Lauga2021) studied a model of inertia-less Stokes flow without a time derivative term, while our work is based on DNS with finite inertial effect. Another possible reason might be that Vona & Lauga (Reference Vona and Lauga2021) reset the roller velocity to its default right before the next action, giving rise to zigzags, whereas we continuously apply the action in all steps.

$c,d$

). It can be noticed that these trajectories exhibit smooth transitions from their starting point to the ending point, which are in contrast to the results of Vona & Lauga (Reference Vona and Lauga2021) where the paths manifest zigzags. Possible reasons may include that Vona & Lauga (Reference Vona and Lauga2021) studied a model of inertia-less Stokes flow without a time derivative term, while our work is based on DNS with finite inertial effect. Another possible reason might be that Vona & Lauga (Reference Vona and Lauga2021) reset the roller velocity to its default right before the next action, giving rise to zigzags, whereas we continuously apply the action in all steps.

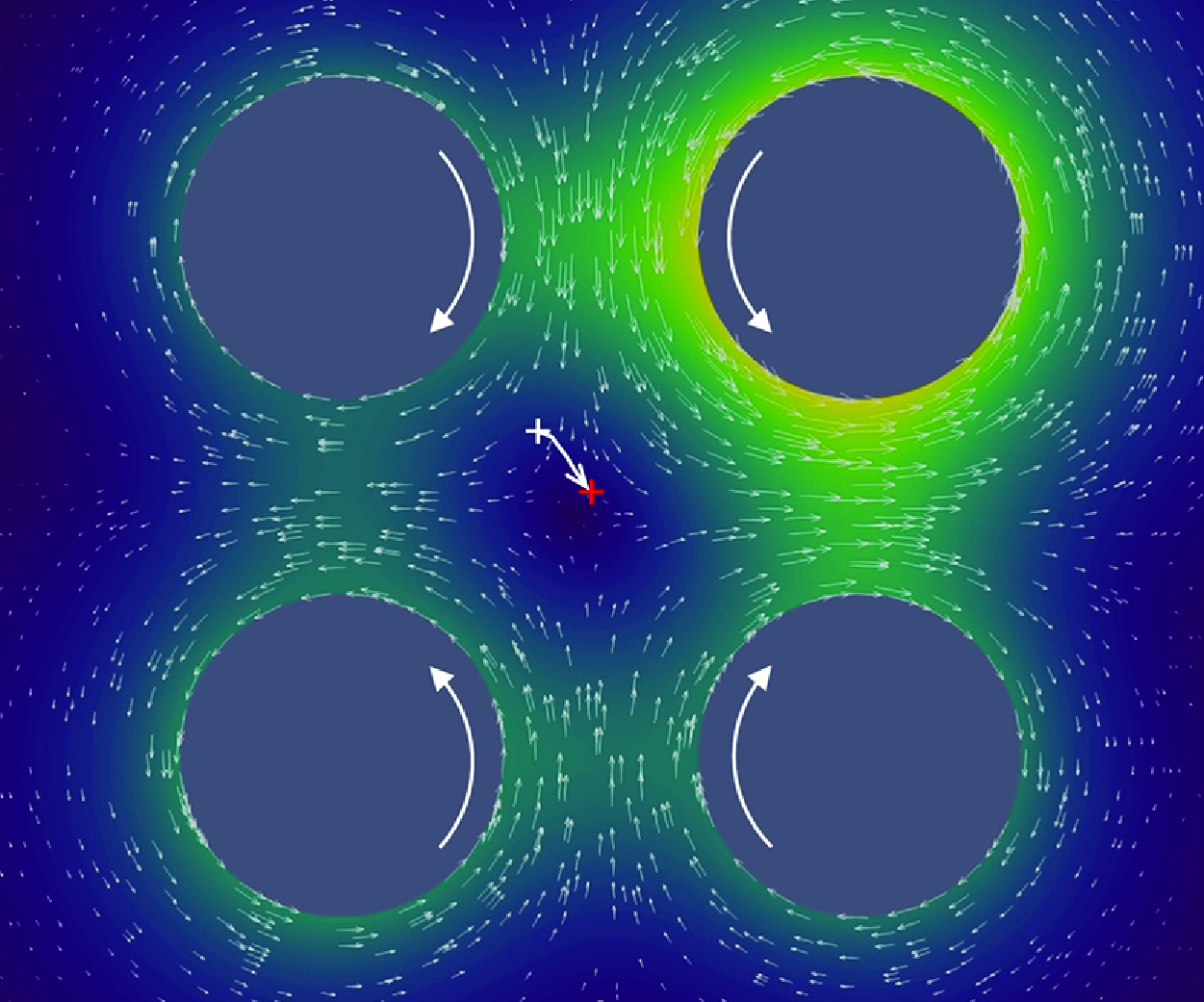

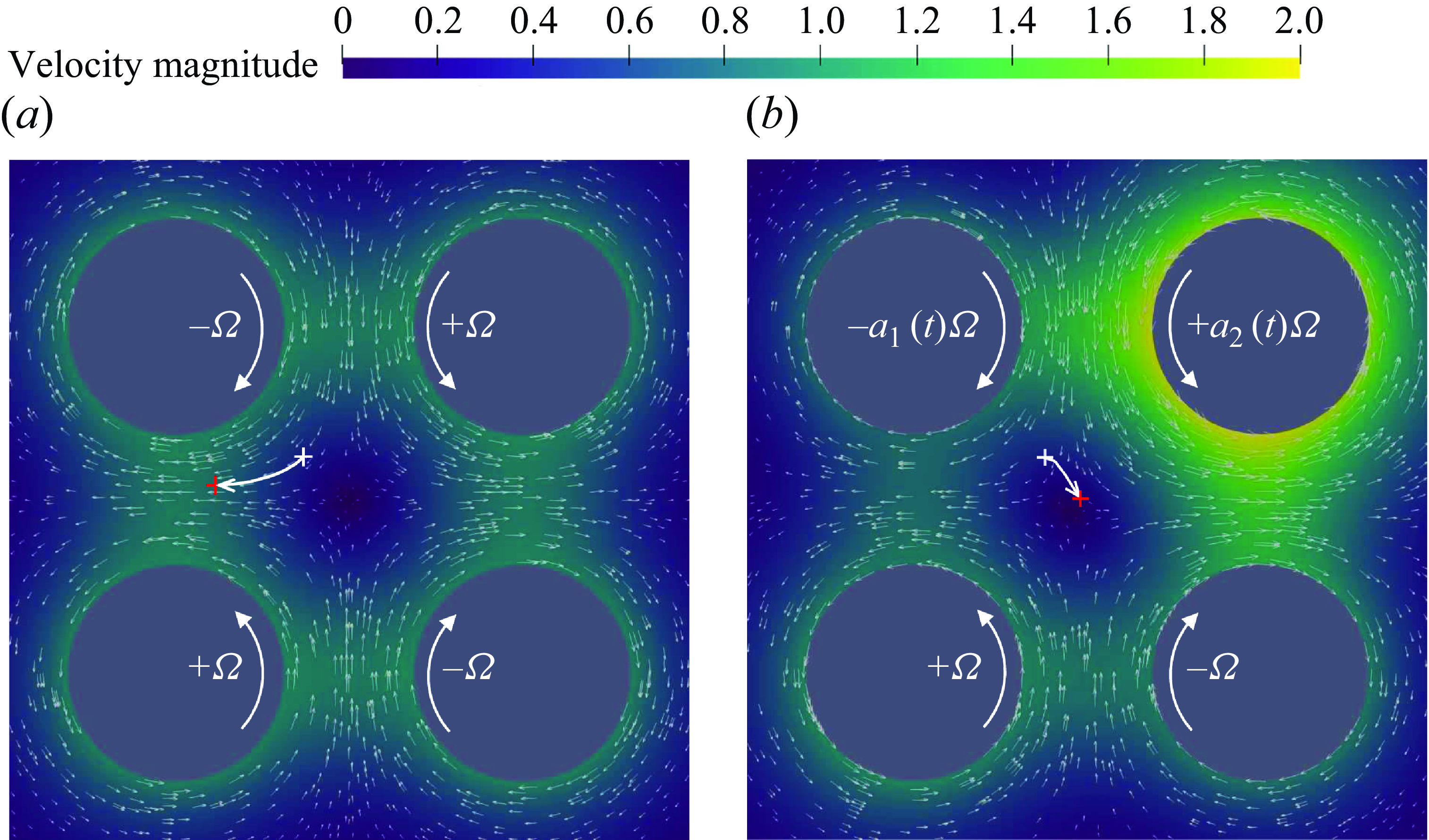

Figure 4. Instantaneous quiver plots for the velocity field in case 5.2 at

![]() $Re=0.4$

. (a) Without control. The droplet tends to be swept away. The flow is inherently symmetric. (b) The last time step of an epoch for a converged policy. The white cross represents the starting position of the droplet and the red one the ending position in this control case of

$Re=0.4$

. (a) Without control. The droplet tends to be swept away. The flow is inherently symmetric. (b) The last time step of an epoch for a converged policy. The white cross represents the starting position of the droplet and the red one the ending position in this control case of

![]() $h_0=0.25\sqrt {2}$

and

$h_0=0.25\sqrt {2}$

and

![]() $\alpha _0=45^{\circ }$

.

$\alpha _0=45^{\circ }$

.

The actions exerted by the agent reflect a controlling logic, which is consistent with the underlying flow physics. Specifically, the difference between

![]() $a_1$

and

$a_1$

and

![]() $a_2$

becomes larger with smaller

$a_2$

becomes larger with smaller

![]() $\alpha _0$

, see figure 3(

$\alpha _0$

, see figure 3(

![]() $b$

). Note that for all the considered droplets initiated in sub-quadrant iii,

$b$

). Note that for all the considered droplets initiated in sub-quadrant iii,

![]() $a_1$

is assigned to roller (2) and

$a_1$

is assigned to roller (2) and

![]() $a_2$

is assigned to roller (1). The extensional flow generated by the baseline rotation rate

$a_2$

is assigned to roller (1). The extensional flow generated by the baseline rotation rate

![]() ${\omega }_B$

presents influx from the top/bottom and outflux towards the left/right in the regions between the rollers (see figure 4). The droplet initially positioned with smaller

${\omega }_B$

presents influx from the top/bottom and outflux towards the left/right in the regions between the rollers (see figure 4). The droplet initially positioned with smaller

![]() $\alpha _0$

tends to be swept away by the outflux with a stronger left-pulling force; to counteract the left-pulling force exerted by the outflux, the roller (1) modulated by

$\alpha _0$

tends to be swept away by the outflux with a stronger left-pulling force; to counteract the left-pulling force exerted by the outflux, the roller (1) modulated by

![]() $a_2(t)$

should work ‘harder’ to steer the droplet to move clockwise. Thus, the value of

$a_2(t)$

should work ‘harder’ to steer the droplet to move clockwise. Thus, the value of

![]() $a_2(t)$

is in general larger in the case of smaller

$a_2(t)$

is in general larger in the case of smaller

![]() $\alpha _0$

for the same

$\alpha _0$

for the same

![]() $h_0$

. This also explains why the DRL algorithm yields a controlled trajectory which deviates more from the straight direction (the dashed lines, see figure 3

$h_0$

. This also explains why the DRL algorithm yields a controlled trajectory which deviates more from the straight direction (the dashed lines, see figure 3

![]() $a$

) in the smaller

$a$

) in the smaller

![]() $\alpha _0$

cases. This effect is less severe in the cases of larger

$\alpha _0$

cases. This effect is less severe in the cases of larger

![]() $\alpha _0$

, resulting in a smaller difference between

$\alpha _0$

, resulting in a smaller difference between

![]() $a_1$

and

$a_1$

and

![]() $a_2$

in panel (

$a_2$

in panel (

![]() $b$

). In case 5.2, the sign of

$b$

). In case 5.2, the sign of

![]() $a_1$

is even negative at the beginning (see figure 3

$a_1$

is even negative at the beginning (see figure 3

![]() $d$

), reversing its rotation direction, which also aims to counteract the local outflux pulling the droplet to the left and, together with

$d$

), reversing its rotation direction, which also aims to counteract the local outflux pulling the droplet to the left and, together with

![]() $a_2$

, generate a trajectory as shown in figure 3(

$a_2$

, generate a trajectory as shown in figure 3(

![]() $c$

). Figure 4 shows exemplary instantaneous quiver plots of the velocity field in case 5.2. Without control, the droplet will move exponentially away from the initial position, as shown in panel (

$c$

). Figure 4 shows exemplary instantaneous quiver plots of the velocity field in case 5.2. Without control, the droplet will move exponentially away from the initial position, as shown in panel (

![]() $a$

). That the roller (1)’s action is stronger is also consistent with the roller choice of Vona & Lauga (Reference Vona and Lauga2021). The inertial effect will be further compared with small-

$a$

). That the roller (1)’s action is stronger is also consistent with the roller choice of Vona & Lauga (Reference Vona and Lauga2021). The inertial effect will be further compared with small-

![]() $Re$

flows in § 3.3.1.

$Re$

flows in § 3.3.1.

In the end, we would like to discuss the robustness of the trained policy under random noise and its generalisability to other initial conditions. Appendix B demonstrates the effectiveness of the policies in noisy environments by introducing a thermal noise term into the NS equation. Additionally, Appendix C explores the potential for a global policy by applying the policy trained for the specific initial condition

![]() $\boldsymbol{x}_0 = (-0.03, 0.02)$

to other points in a nearby region. The results reveal that while droplets released from initial positions close to that used for training the policy can sometimes be successfully controlled, the policy remains sensitive to initial conditions otherwise. Several factors may contribute to this sensitivity. First, in regions of extensional flow with steep flow gradients, small perturbations in the initial conditions can lead to significant trajectory deviations, complicating the control task. Second, since the DRL training is tailored to a specific initial position, the trained policy may not generalise well to regions with distinct flow characteristics, increasing the likelihood of control failure for significantly different initial conditions. Nonetheless, our findings indicate that the policy can effectively manage certain nearby initial positions, as shown by the green dots in figure 12 in Appendix C.

$\boldsymbol{x}_0 = (-0.03, 0.02)$

to other points in a nearby region. The results reveal that while droplets released from initial positions close to that used for training the policy can sometimes be successfully controlled, the policy remains sensitive to initial conditions otherwise. Several factors may contribute to this sensitivity. First, in regions of extensional flow with steep flow gradients, small perturbations in the initial conditions can lead to significant trajectory deviations, complicating the control task. Second, since the DRL training is tailored to a specific initial position, the trained policy may not generalise well to regions with distinct flow characteristics, increasing the likelihood of control failure for significantly different initial conditions. Nonetheless, our findings indicate that the policy can effectively manage certain nearby initial positions, as shown by the green dots in figure 12 in Appendix C.

3.2. DRL policy leveraging geometric symmetry of FRM

In § 2.2, we have briefly mentioned that the geometric symmetry of FRM enables the control policies trained in one sub-quadrant to be applied to the entire flow domain. This section elaborates on the utilisation of this symmetry using policies trained in § 3.1. To begin, considering a droplet initially positioned in the sub-quadrant iii, represented by the blue dot in figure 5, we denote the state of the droplet at time

![]() $t$

as

$t$

as

![]() $s^{iii}(t)=[x(t),\ y(t),\ u(t),\ v(t),\ k_x(t),\ k_y(t)]$

, and the actions determined by the agent at time

$s^{iii}(t)=[x(t),\ y(t),\ u(t),\ v(t),\ k_x(t),\ k_y(t)]$

, and the actions determined by the agent at time

![]() $t$

as

$t$

as

![]() $a(t)=[a_1(t),\ a_2(t)]$

as in

$a(t)=[a_1(t),\ a_2(t)]$

as in

![]() ${\omega }^{iii}(t)=[+a_2(t)\Omega,\,-a_1(t)\Omega,\,+\Omega,\,-\Omega ]$

. The optimal policy is thus denoted as

${\omega }^{iii}(t)=[+a_2(t)\Omega,\,-a_1(t)\Omega,\,+\Omega,\,-\Omega ]$

. The optimal policy is thus denoted as

![]() $\pi _\theta (a(t) | s^{iii}(t) )$

. By using the proper transformations based on the geometric symmetry, this policy

$\pi _\theta (a(t) | s^{iii}(t) )$

. By using the proper transformations based on the geometric symmetry, this policy

![]() $\pi _\theta$

can be applied to the entire domain, as explained below.

$\pi _\theta$

can be applied to the entire domain, as explained below.

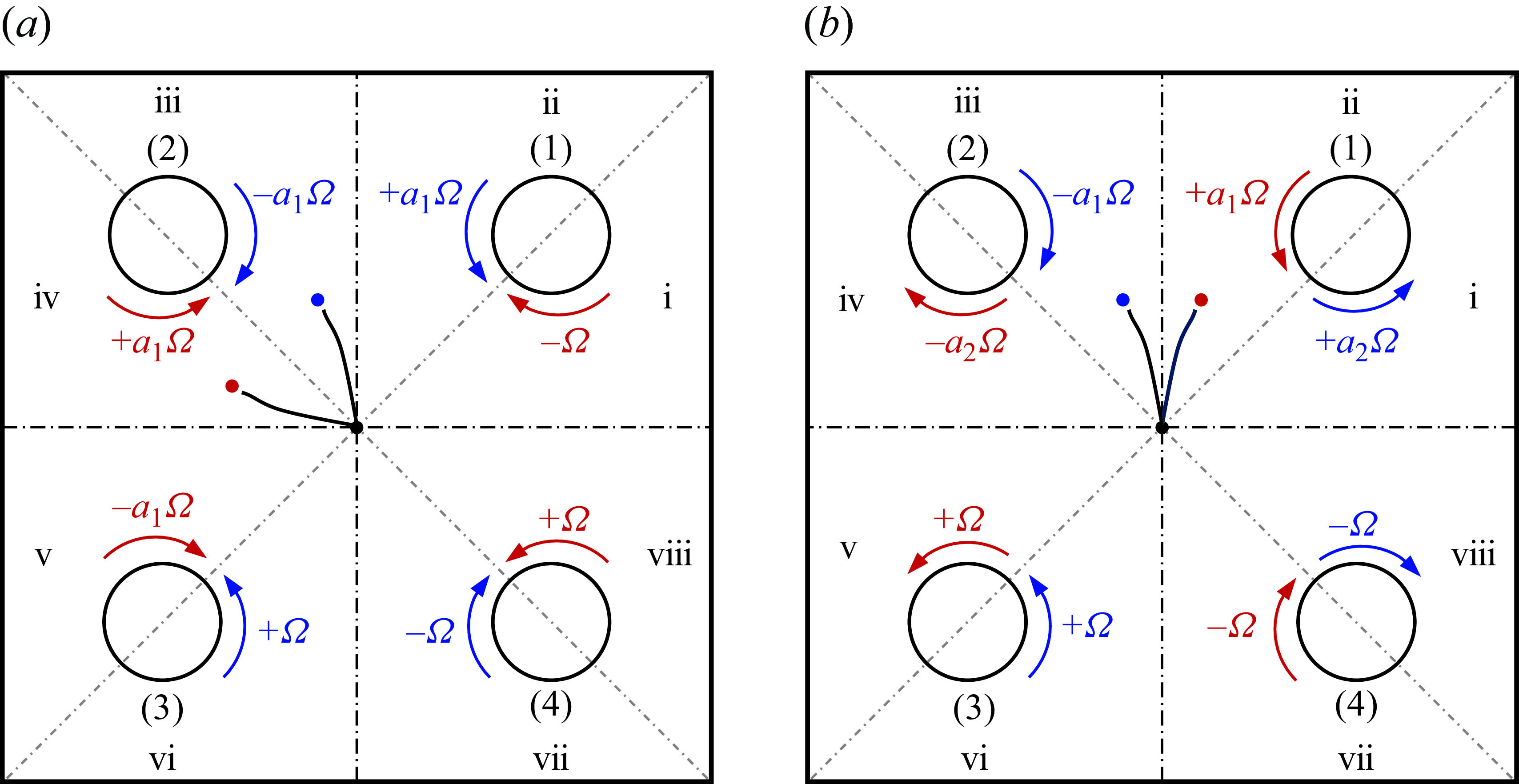

Figure 5. The domain of FRM is evenly divided into eight sub-quadrants. By the geometric symmetry, the control policy trained in one of the sub-quadrants can be applied to the entire domain. For example, panel (

![]() $a$

) shows that the dynamics of the blue droplet in sub-quadrant iii and that of the red droplet in the sub-quadrant iv is symmetric with respect to the antidiagonal line (

$a$

) shows that the dynamics of the blue droplet in sub-quadrant iii and that of the red droplet in the sub-quadrant iv is symmetric with respect to the antidiagonal line (

![]() ${-}45^\circ$

). Panel (

${-}45^\circ$

). Panel (

![]() $b$

) shows that symmetry with respect to the vertical axis for the droplets in sub-quadrants ii and iii.

$b$

) shows that symmetry with respect to the vertical axis for the droplets in sub-quadrants ii and iii.

For instance, in figure 5

![]() $(a)$

, the red droplet in sub-quadrant iv is symmetric to the blue droplet in sub-quadrant iii about the antidiagonal line (

$(a)$

, the red droplet in sub-quadrant iv is symmetric to the blue droplet in sub-quadrant iii about the antidiagonal line (

![]() $-45^\circ$

). In this case, the state of the red droplet can be expressed in terms of that of the blue droplet by

$-45^\circ$

). In this case, the state of the red droplet can be expressed in terms of that of the blue droplet by

![]() $s^{iv}(t)=[-y(t),\,-x(t),\,-v(t),\,-u(t),\,-k_y(t),\,-k_x(t)]$

. The relation can also be written in a matrix form (with

$s^{iv}(t)=[-y(t),\,-x(t),\,-v(t),\,-u(t),\,-k_y(t),\,-k_x(t)]$

. The relation can also be written in a matrix form (with

![]() $s^{iv}(t), s^{iii}(t)$

interpreted as columns)

$s^{iv}(t), s^{iii}(t)$

interpreted as columns)

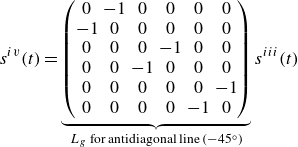

\begin{align} s^{iv}(t)=\underbrace {\begin{pmatrix} 0 & -1 & 0 & 0 & 0 & 0 \\ -1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & -1 & 0 & 0 \\ 0 & 0 & -1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & -1 \\ 0 & 0 & 0 & 0 & -1 & 0 \\ \end{pmatrix}}_{L_g\text{ for antidiagonal line } (-45^\circ )} s^{iii}(t) \end{align}

\begin{align} s^{iv}(t)=\underbrace {\begin{pmatrix} 0 & -1 & 0 & 0 & 0 & 0 \\ -1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & -1 & 0 & 0 \\ 0 & 0 & -1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & -1 \\ 0 & 0 & 0 & 0 & -1 & 0 \\ \end{pmatrix}}_{L_g\text{ for antidiagonal line } (-45^\circ )} s^{iii}(t) \end{align}

The

![]() $L_g$

’s using the symmetry with respect to the horizontal axis, vertical axis and diagonal line read respectively

$L_g$

’s using the symmetry with respect to the horizontal axis, vertical axis and diagonal line read respectively

\begin{align} \underbrace {\begin{pmatrix} 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & -1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & -1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 & -1 \\ \end{pmatrix}}_{L_g \text{ for horizontal line}}, \!\!\!\!\!\! \quad \underbrace {\begin{pmatrix} -1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & -1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & -1 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \\ \end{pmatrix}}_{L_g \text{ for vertical line}}\!\!\!\quad \text{and} \!\!\!\quad \underbrace {\begin{pmatrix} 0 & 1 & 0 & 0 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 & 1 & 0 \\ \end{pmatrix}}_{L_g \text{ for diagonal line}\ (45^\circ )}\! . \\[-24pt] \nonumber \end{align}

\begin{align} \underbrace {\begin{pmatrix} 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & -1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & -1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 0 & -1 \\ \end{pmatrix}}_{L_g \text{ for horizontal line}}, \!\!\!\!\!\! \quad \underbrace {\begin{pmatrix} -1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & -1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & -1 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \\ \end{pmatrix}}_{L_g \text{ for vertical line}}\!\!\!\quad \text{and} \!\!\!\quad \underbrace {\begin{pmatrix} 0 & 1 & 0 & 0 & 0 & 0 \\ 1 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 & 1 & 0 \\ \end{pmatrix}}_{L_g \text{ for diagonal line}\ (45^\circ )}\! . \\[-24pt] \nonumber \end{align}

Note that the diagonal line in this work is defined as that with an angle of

![]() $45^\circ$

.

$45^\circ$

.

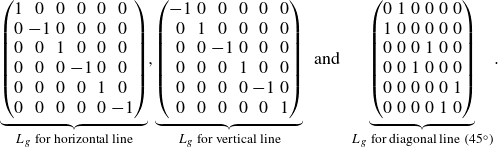

To apply the policy

![]() $\pi _\theta$

already trained in sub-quadrant iii to iv, we also need to consider the action in sub-quadrant iii to be transformed to

$\pi _\theta$

already trained in sub-quadrant iii to iv, we also need to consider the action in sub-quadrant iii to be transformed to

![]() ${\omega }^{iv}(t)=[-\Omega,\,+a_1(t)\Omega, -a_2(t)\Omega,\,+\Omega ]$

according to the symmetry with respect to the antidiagonal line, or

${\omega }^{iv}(t)=[-\Omega,\,+a_1(t)\Omega, -a_2(t)\Omega,\,+\Omega ]$

according to the symmetry with respect to the antidiagonal line, or

![]() ${\omega }^{iv}(t)=K_g^s{\omega }^{iii}(t)$

,

${\omega }^{iv}(t)=K_g^s{\omega }^{iii}(t)$

,

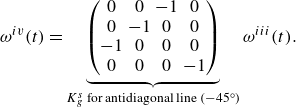

\begin{align} {\omega }^{iv}(t)=\underbrace {\begin{pmatrix} 0 & 0 & -1 & 0 \\ 0 & -1 & 0 & 0 \\ -1 & 0 & 0 & 0 \\ 0 & 0 & 0 & -1 \\ \end{pmatrix}}_{K_g^s \text{ for antidiagonal line } (-45^\circ )} {\omega }^{iii}(t). \end{align}

\begin{align} {\omega }^{iv}(t)=\underbrace {\begin{pmatrix} 0 & 0 & -1 & 0 \\ 0 & -1 & 0 & 0 \\ -1 & 0 & 0 & 0 \\ 0 & 0 & 0 & -1 \\ \end{pmatrix}}_{K_g^s \text{ for antidiagonal line } (-45^\circ )} {\omega }^{iii}(t). \end{align}

In this case, the rotation directions of all rollers are reversed as indicated by the red arrows in panel (

![]() $a$

). Similarly, figure 5(

$a$

). Similarly, figure 5(

![]() $b$

) shows that the symmetry with respect to the vertical axis can be leveraged to control the droplet initially positioned in sub-quadrant ii based on the control policy trained in sub-quadrant iii. The

$b$

) shows that the symmetry with respect to the vertical axis can be leveraged to control the droplet initially positioned in sub-quadrant ii based on the control policy trained in sub-quadrant iii. The

![]() $K_g^s$

’s using the symmetry with respect to the horizontal axis, vertical axis and diagonal line read respectively

$K_g^s$

’s using the symmetry with respect to the horizontal axis, vertical axis and diagonal line read respectively

\begin{align} \underbrace {\begin{pmatrix} 0 & 0 & 0 & -1 \\ 0 & 0 & -1 & 0 \\ 0 & -1 & 0 & 0 \\ -1 & 0 & 0 & 0 \\ \end{pmatrix}}_{K_g^s \text{ for horizontal line}}, \quad \underbrace {\begin{pmatrix} 0 & -1 & 0 & 0 \\ -1 & 0 & 0 & 0 \\ 0 & 0 & 0 & -1 \\ 0 & 0 & -1 & 0 \\ \end{pmatrix}}_{K_g^s \text{ for vertical line}} \quad \text{ and } \quad \underbrace {\begin{pmatrix} -1 & 0 & 0 & 0 \\ 0 & 0 & 0 & -1 \\ 0 & 0 & -1 & 0 \\ 0 & -1 & 0 & 0 \\ \end{pmatrix}}_{K_g^s \text{ for diagonal line } (45^\circ )}. \end{align}

\begin{align} \underbrace {\begin{pmatrix} 0 & 0 & 0 & -1 \\ 0 & 0 & -1 & 0 \\ 0 & -1 & 0 & 0 \\ -1 & 0 & 0 & 0 \\ \end{pmatrix}}_{K_g^s \text{ for horizontal line}}, \quad \underbrace {\begin{pmatrix} 0 & -1 & 0 & 0 \\ -1 & 0 & 0 & 0 \\ 0 & 0 & 0 & -1 \\ 0 & 0 & -1 & 0 \\ \end{pmatrix}}_{K_g^s \text{ for vertical line}} \quad \text{ and } \quad \underbrace {\begin{pmatrix} -1 & 0 & 0 & 0 \\ 0 & 0 & 0 & -1 \\ 0 & 0 & -1 & 0 \\ 0 & -1 & 0 & 0 \\ \end{pmatrix}}_{K_g^s \text{ for diagonal line } (45^\circ )}. \end{align}

The idea can be further extended to the remaining sub-quadrants. To sum up, the corresponding states and actions can be summarised as

-

(i) i:

$s^{i}(t)=[y(t),\ -x(t),\ v(t),\ -u(t),\ k_{y}(t),\ -k_{x}(t)]$

;

$s^{i}(t)=[y(t),\ -x(t),\ v(t),\ -u(t),\ k_{y}(t),\ -k_{x}(t)]$

;

${\omega }^{i}(t)=[-a_1(t)\Omega,\,+\Omega,\,-\Omega,\,+a_2(t)\Omega ]$

;

${\omega }^{i}(t)=[-a_1(t)\Omega,\,+\Omega,\,-\Omega,\,+a_2(t)\Omega ]$

; -

(ii) ii:

$s^{ii}(t)=[-x(t),\ y(t),\ -u(t),\ v(t),\ -k_{x}(t),\ k_{y}(t)]$

;

$s^{ii}(t)=[-x(t),\ y(t),\ -u(t),\ v(t),\ -k_{x}(t),\ k_{y}(t)]$

;

${\omega }^{ii}(t)=[+a_1(t)\Omega , -a_2(t)\Omega,\,+\Omega,\,-\Omega ]$

;

${\omega }^{ii}(t)=[+a_1(t)\Omega , -a_2(t)\Omega,\,+\Omega,\,-\Omega ]$

; -

(iii) v:

$s^{v}(t)=[-y(t),\ x(t),\ -v(t),\ u(t),\ -k_{y}(t),\ k_{x}(t)]$

;

$s^{v}(t)=[-y(t),\ x(t),\ -v(t),\ u(t),\ -k_{y}(t),\ k_{x}(t)]$

;

${\omega }^{v}(t)=[-\Omega,\,+a_2(t)\Omega,\,-a_1(t)\Omega,\,+\Omega ]$

;

${\omega }^{v}(t)=[-\Omega,\,+a_2(t)\Omega,\,-a_1(t)\Omega,\,+\Omega ]$

; -

(iv) vi:

$s^{vi}(t)=[x(t),\ -y(t),\ u(t),\ -v(t),\ k_{x}(t),\ -k_{y}(t)]$

;

$s^{vi}(t)=[x(t),\ -y(t),\ u(t),\ -v(t),\ k_{x}(t),\ -k_{y}(t)]$

;

${\omega }^{vi}(t)=[+\Omega,\,-\Omega,\,+a_1(t)\Omega,\,-a_2(t)\Omega ]$

;

${\omega }^{vi}(t)=[+\Omega,\,-\Omega,\,+a_1(t)\Omega,\,-a_2(t)\Omega ]$

; -

(v) vii:

$s^{vii}(t)=[-x(t),\ -y(t),\ -u(t),\ -v(t),\ -k_{x}(t),\ -k_{y}(t)]$

;

$s^{vii}(t)=[-x(t),\ -y(t),\ -u(t),\ -v(t),\ -k_{x}(t),\ -k_{y}(t)]$

;

${\omega }^{vii}(t)=[+\Omega,\,-\Omega , +a_2(t)\Omega,\,-a_1(t)\Omega ]$

;

${\omega }^{vii}(t)=[+\Omega,\,-\Omega , +a_2(t)\Omega,\,-a_1(t)\Omega ]$

; -

(vi) viii:

$s^{viii}(t)=[y(t),\ x(t),\ v(t),\ u(t),\ k_{y}(t),\ k_{x}(t)]$

;

$s^{viii}(t)=[y(t),\ x(t),\ v(t),\ u(t),\ k_{y}(t),\ k_{x}(t)]$

;

${\omega }^{viii}(t)=[-a_2(t)\Omega,\,+\Omega,\,-\Omega,\,+a_1(t)\Omega ]$

.

${\omega }^{viii}(t)=[-a_2(t)\Omega,\,+\Omega,\,-\Omega,\,+a_1(t)\Omega ]$

.

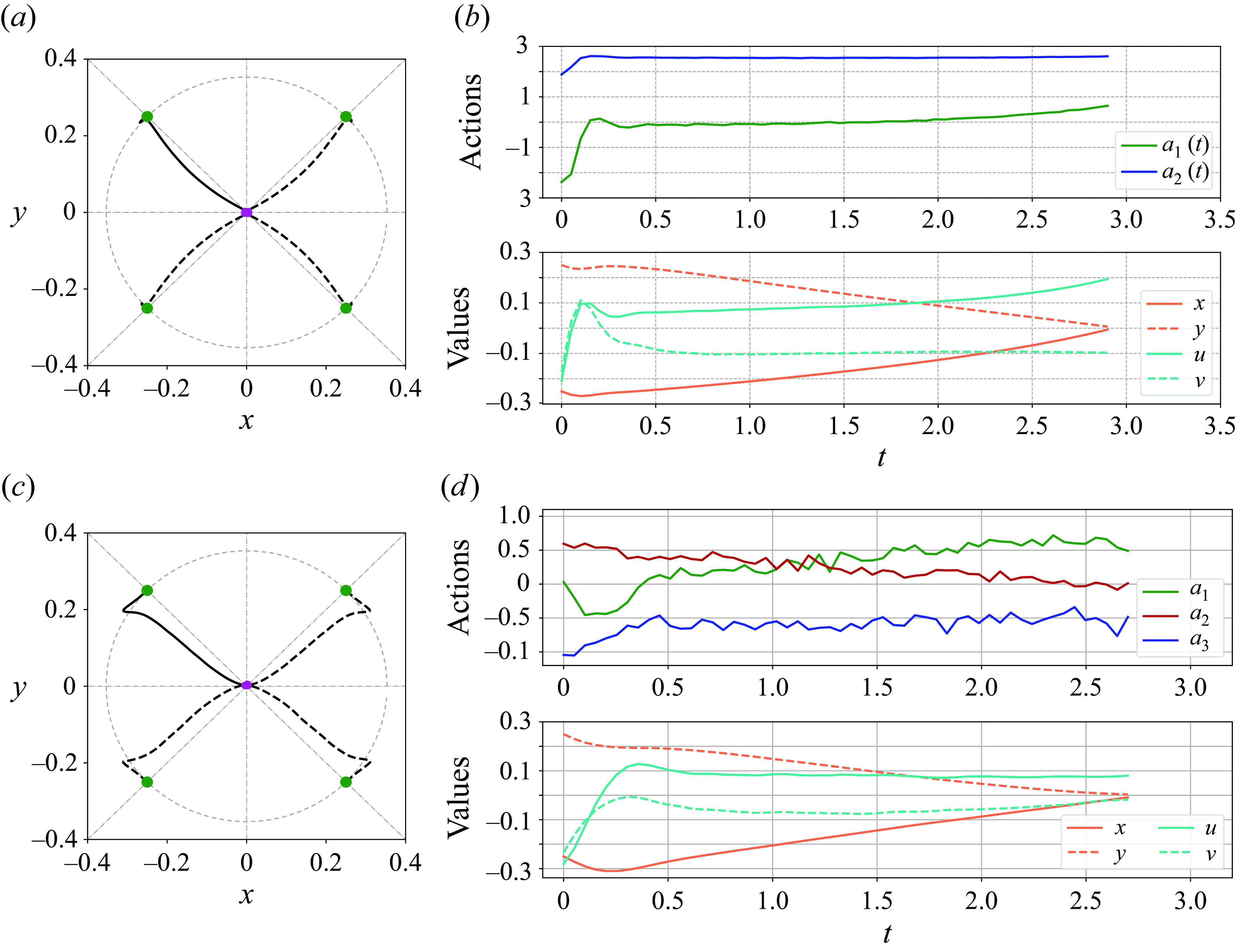

To validate the idea based on the geometric symmetry, we apply the policies obtained in § 3.1 for sub-quadrant iii to the entire flow domain directly without new training. As illustrated in figure 6, the dashed lines represent the direct application of the trained policies described in § 3.1. It is evident that all the droplets are successfully guided to the origin.

Figure 6. Trajectories by applying the policies trained in § 3.1 based on geometric symmetry in FRM. Note that the polices trained in sub-quadrant iii (see the solid lines) are applied directly to other sub-quadrants (the dashed lines) without new training. (

![]() $a$

)

$a$

)

![]() $h_0=0.05\sqrt {2}$

and

$h_0=0.05\sqrt {2}$

and

![]() $\alpha _0=60^{\circ },80^{\circ }$

. (

$\alpha _0=60^{\circ },80^{\circ }$

. (

![]() $b$

)

$b$

)

![]() $h_0=0.1\sqrt {2}$

and

$h_0=0.1\sqrt {2}$

and

![]() $\alpha _0=60^{\circ },80^{\circ }$

. (

$\alpha _0=60^{\circ },80^{\circ }$

. (

![]() $c$

)

$c$

)

![]() $h_0=0.25\sqrt {2}$

and

$h_0=0.25\sqrt {2}$

and

![]() $\alpha _0=45^{\circ }$

.

$\alpha _0=45^{\circ }$

.

3.3. Effect of inertia on the DRL control of FRM

In this study, inertia is numerically modelled and its effects on the DRL control will be discussed in this section. The

![]() $Re$

values investigated are

$Re$

values investigated are

![]() $Re = 10^{-9}, 0.4, 2$

and

$Re = 10^{-9}, 0.4, 2$

and

![]() $3$

. One may wonder why we limit the study to relatively small values of

$3$

. One may wonder why we limit the study to relatively small values of

![]() $Re$

. The reason is that, as

$Re$

. The reason is that, as

![]() $Re$

increases, control becomes progressively more challenging. At higher

$Re$

increases, control becomes progressively more challenging. At higher

![]() $Re$

, the delay in flow response caused by the increased inertia disrupts the action–reward relationship in DRL, ultimately leading to failed control. This will be explained next.

$Re$

, the delay in flow response caused by the increased inertia disrupts the action–reward relationship in DRL, ultimately leading to failed control. This will be explained next.

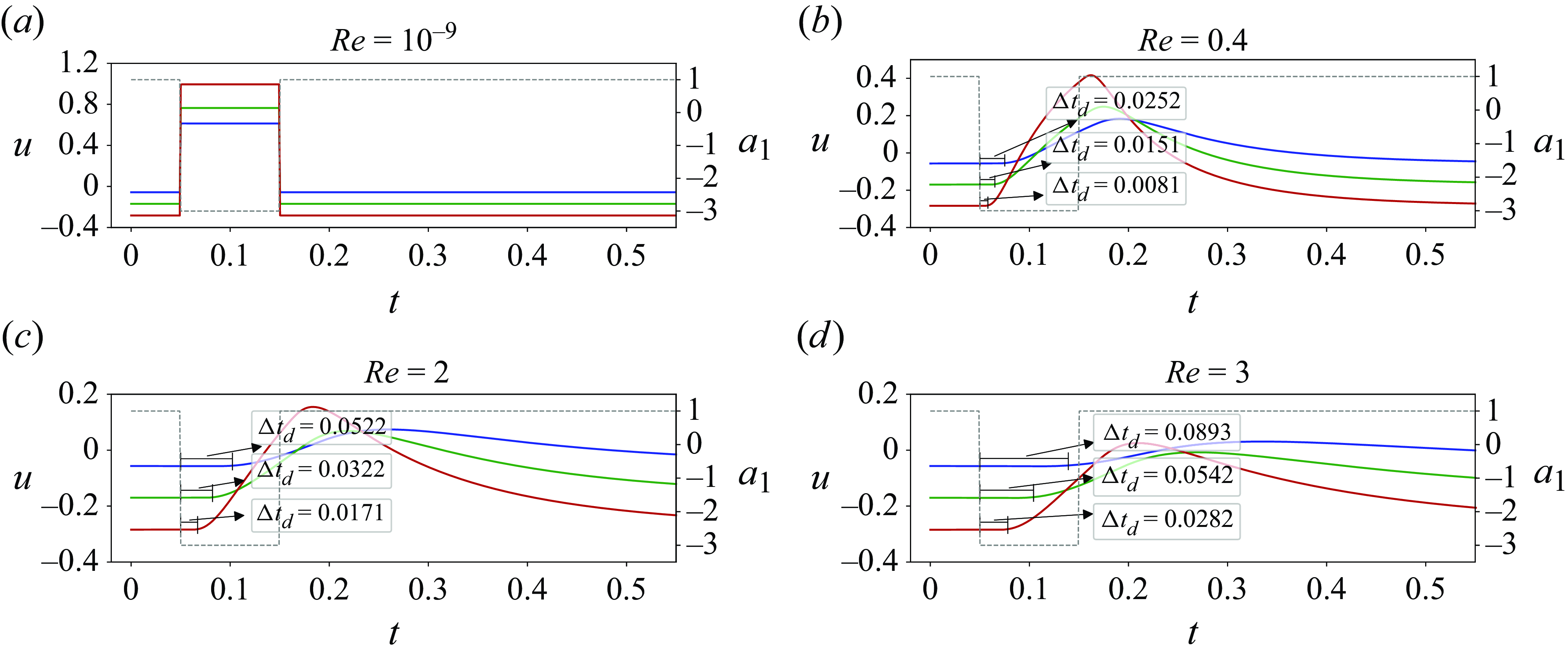

Figure 7. Effect of inertia on the droplet trajectory subject to ad hoc action variation. The blue dot marks the initial position of the droplet at

![]() $[-0.25, 0.25]$

. Panel (

$[-0.25, 0.25]$

. Panel (

![]() $a$

) illustrates the action history of the roller (labeled as roller 2), where different colours represent the variations in the applied control action over time. In other panels, the black dashed lines show the trajectories without control, corresponding to the case where

$a$

) illustrates the action history of the roller (labeled as roller 2), where different colours represent the variations in the applied control action over time. In other panels, the black dashed lines show the trajectories without control, corresponding to the case where

![]() $a_1(t) = 1$

for

$a_1(t) = 1$

for

![]() $t \in [0, 2]$

. The curve segments in multiple colours show the trajectories of the droplet under the corresponding actions.

$t \in [0, 2]$

. The curve segments in multiple colours show the trajectories of the droplet under the corresponding actions.

Figure 7 illustrates the effect of inertia on the delay in flow response by examining the droplet trajectory as the applied action is varied. This demonstration is an ad hoc test and does not correspond to the control cases in table 1. In panels (

![]() $b$

)–(

$b$

)–(

![]() $f$

), the black dashed line represents the trajectory of a droplet started at the initial position

$f$

), the black dashed line represents the trajectory of a droplet started at the initial position

![]() $[-0.25, 0.25]$

without control, following the baseline roller action

$[-0.25, 0.25]$

without control, following the baseline roller action

![]() $[+\Omega , -\Omega , +\Omega , -\Omega ]$

. Since the

$[+\Omega , -\Omega , +\Omega , -\Omega ]$

. Since the

![]() $Re$

values are small, all these black dashed trajectories appear similar, although minor differences exist that are difficult to discern. However, when the roller action is varied to

$Re$

values are small, all these black dashed trajectories appear similar, although minor differences exist that are difficult to discern. However, when the roller action is varied to

![]() $[+\Omega , -a_1(t)\Omega , +\Omega , -\Omega ]$

, with the profile of

$[+\Omega , -a_1(t)\Omega , +\Omega , -\Omega ]$

, with the profile of

![]() $a_1(t)$

shown in panel

$a_1(t)$

shown in panel

![]() $(a)$

, the variation in the trajectories across the considered

$(a)$

, the variation in the trajectories across the considered

![]() $Re$

values becomes significant, even though the values of

$Re$

values becomes significant, even though the values of

![]() $Re$

are generally small. This is depicted by the curves in multiple colours. Notably, for

$Re$

are generally small. This is depicted by the curves in multiple colours. Notably, for

![]() $Re = 10^{-9}$

, where inertia is negligible, the droplet instantly adjusts its motion in response to changes in the roller action. As

$Re = 10^{-9}$

, where inertia is negligible, the droplet instantly adjusts its motion in response to changes in the roller action. As

![]() $Re$

increases, the effects of inertia become more pronounced. The droplet exhibits greater resistance to changes in direction, despite the roller action being reversed with a large amplitude. This is particularly evident in high-

$Re$

increases, the effects of inertia become more pronounced. The droplet exhibits greater resistance to changes in direction, despite the roller action being reversed with a large amplitude. This is particularly evident in high-

![]() $Re$

cases, and leads to significant delay in flow response, potentially disrupting the action–reward relationship in the DRL control. For example, at

$Re$

cases, and leads to significant delay in flow response, potentially disrupting the action–reward relationship in the DRL control. For example, at

![]() $Re=3$

and

$Re=3$

and

![]() $Re=5$

, the red segments of the trajectories appear to follow the continuation of the blue segments, even though the red signal represents a reversed rotation with relatively large amplitude. As the DRL agent interprets the outcome of each control action and refines the policy based on the perceived flow state, it may mistakenly infer that the red action has no influence on the droplet’s position. This misinterpretation can ultimately lead to a failed control attempt if the state space is defined solely based on position. In our DRL set-up, the state includes position, velocity and acceleration. However, even with this comprehensive state representation, the current approach fails to achieve successful control for

$Re=5$

, the red segments of the trajectories appear to follow the continuation of the blue segments, even though the red signal represents a reversed rotation with relatively large amplitude. As the DRL agent interprets the outcome of each control action and refines the policy based on the perceived flow state, it may mistakenly infer that the red action has no influence on the droplet’s position. This misinterpretation can ultimately lead to a failed control attempt if the state space is defined solely based on position. In our DRL set-up, the state includes position, velocity and acceleration. However, even with this comprehensive state representation, the current approach fails to achieve successful control for

![]() $Re=5$

in our FRM flow. Consequently, results for

$Re=5$

in our FRM flow. Consequently, results for

![]() $Re=5$

are not included in this manuscript.

$Re=5$

are not included in this manuscript.

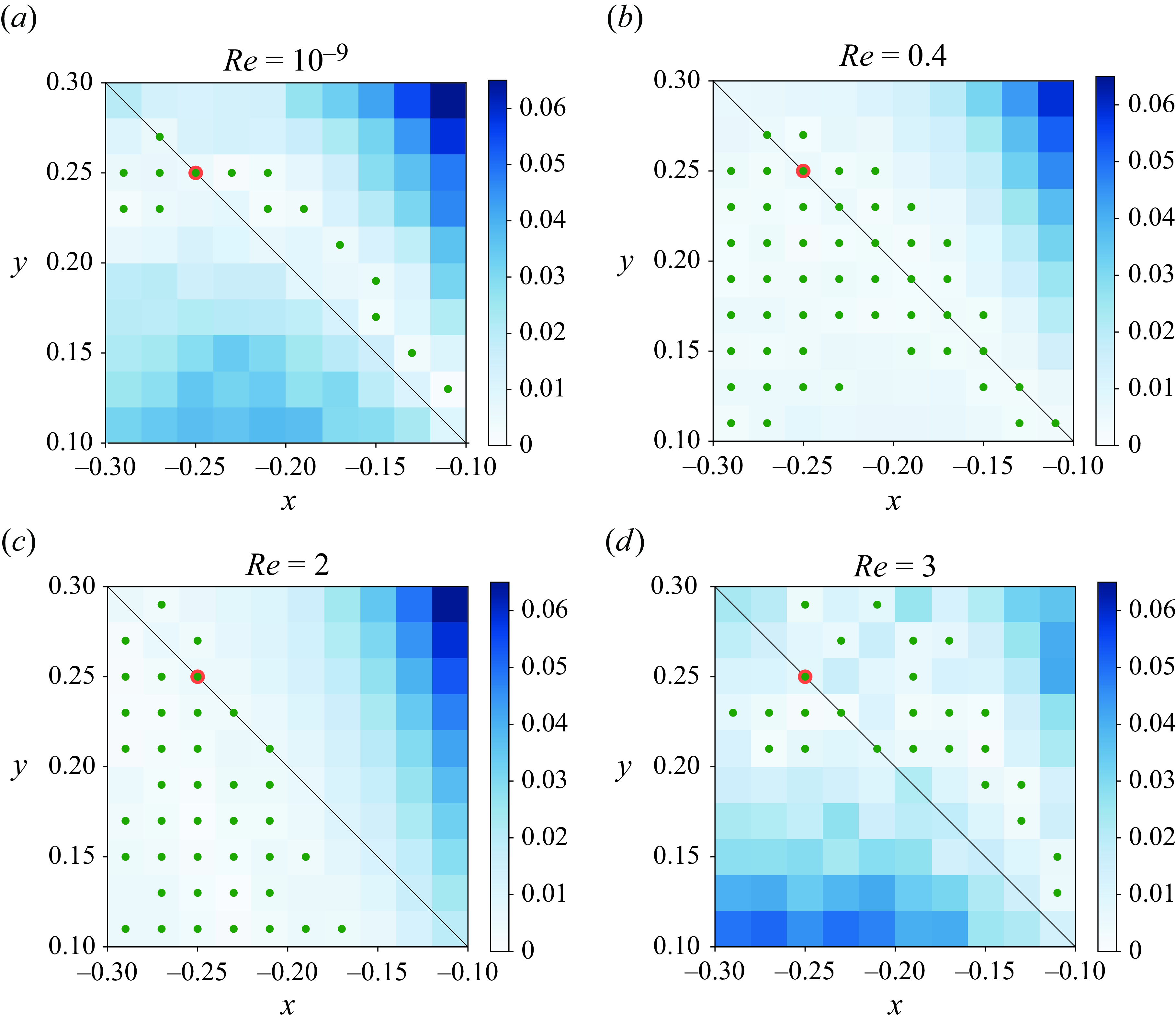

In the following, we will provide the control performance of the DRL agent for

![]() $Re=10^{-9}$

and

$Re=10^{-9}$

and

![]() $Re=2,3$

, to be compared with the results in § 3.1 for

$Re=2,3$

, to be compared with the results in § 3.1 for

![]() $Re=0.4$

.

$Re=0.4$

.

3.3.1. Vanishingly small

$Re$

$Re$

For completeness, we report control results for a vanishingly small-

![]() $Re$

(

$Re$

(

![]() $=10^{-9}$

) flow in FRM to elucidate the differences in the numerical settings and results between the vanishingly small-

$=10^{-9}$