12.1 Research Narratives and Narratives of Nature

In 1945, George Beadle, who was to receive the Nobel Prize in Physiology or Medicine in 1958 together with Edward Tatum, published a long review article on the state of biochemical genetics. In one section, entitled ‘Eye pigments in insects’, he summarized results stemming to a large extent from his own work, which he had initiated with Boris Ephrussi in 1935, preceding his collaboration with Tatum. Beadle and Ephrussi used the fruit fly Drosophila melanogaster, which at the time was already a well-established experimental organism. Their experiments, however, introduced a novel approach based on tissue transplants between flies carrying different combinations of mutations. The results of these and similar experiments, and further biochemical efforts to characterize the substances involved, led to the following account of the physiological process of the formation of brown eye pigment and the roles of genes therein:

Dietary tryptophan is the fly’s initial precursor of the two postulated hormones. This is converted to alpha-oxytryptophan through a reaction controlled by the vermilion gene. A further oxidation to kynurenine occurs. […] This is the so-called v+ substance of Ephrussi and Beadle. This is still further oxidized to the cn+ substance, which Kikkawa believes to be the chromogen of the brown pigment. The transformation of kynurenine to cn+ substance is subject to the action of the normal allele of the cinnabar gene.

This text constitutes a small narrative. It relates several events which occur in temporal order and are causally connected. The sequence has a beginning (the precursor is ingested), a middle (it is transformed in several reactions controlled by genes) and an end (the implied formation of brown pigment). Yet, this narrative does not recount particular events, but rather a type of event happening countless times in fruit flies (and similarly in many other insects); it is a generic narrative.Footnote 1

In the natural sciences, such narratives are often found in review articles and textbooks, but also in summaries of the state of knowledge on a given subject in the introduction to research articles; they state what is taken as fact. Addressing scientific facts as narratives acknowledges that they are typically presented as complex and ordered accounts of a subject rather than single propositions. It is striking that no human agents, observers or cognizers are present in such narratives. They are accounts of events that are taken to happen ‘in nature’ when no researcher is intervening or even watching. Such narratives can thus be called ‘narratives of nature’.Footnote 2

Historians and philosophers of science no longer see the question of epistemology as concerned with the truth of such knowledge claims alone, but also with the practices from which they emerge, and which enable, shape and delimit these claims. The references in Beadle’s text make it clear that each proposition can be traced back to an episode of research. Narratives of nature emerge gradually from the research literature as facts accepted in a community. Accounts of the methods by which the knowledge was achieved are abandoned like ladders once the new state of knowledge is reached. The facts are turned into ‘black boxes’, which can, however, be reopened any time; methods are called into question when facts are challenged (Latour 1987).

To account for how a hypothesis derived from research eventually enters a ‘narrative of nature’, it is necessary to show how a hypothesis comes to be known and understood by members of a community in the first place. I will argue here that this requires peers to understand the research approach – which aligns a method and a problem, and in the context of which the hypothesis was formulated. Familiarization with the approach is achieved by using another type of narrative, realized primarily in research articles. The function of research articles is thus not only and primarily to convince readers that a hypothesis is supported by evidence, so that they will accept it.Footnote 3 Instead, by making readers familiar with the approach, the article enables them to understand how one gets in the position to formulate and support a hypothesis of this kind in the first place, its relevance regarding a problem recognized in the community, and the meaning of the terms used (i.e., to grasp the epistemic objects in question).Footnote 4

An approach is a movement: it involves positioning oneself towards a phenomenon and accessing it from a particular direction and in a particular way.Footnote 5 The phenomenon, the experimental system employed for accessing it, the activities of intervention and observation afforded by the system, and the ways to make inferences from observations, including the recognition of invisible entities, make up what I call the ‘practice-world’ of researchers. The research article introduces the reader to this world and to the way that researchers position themselves by interpreting a problem pertaining to a phenomenon, to access the phenomenon, materially and cognitively, generate data and draw inferences – in other words it makes the reader familiar with an approach. Only then can the hypothesis be understood; but it does not need to be accepted. Any criticism, refinement or amendment of the hypothesis is articulated in terms that are meaningful in the context of the approach and often involves the recreation of the approach by members of the community, introducing more or less substantial variation.Footnote 6

In this chapter, I will show how research articles employ narrative to familiarize readers with an approach. Reporting the material (intervention and observation) and cognitive (inference) activities of researchers, research articles on the whole are narratives (even if they often contain non-narrative passages) and might be referred to as ‘research narratives’. Like narratives of nature, research narratives are factual narratives, but in contrast to the former, they recount particular events, which happened at a specific site (e.g., a given laboratory) and a specific time; they are not generic. And yet, as will become clear, they do not present these events as unique either, but rather as exemplary.

In section 12.2, I will introduce several narratological concepts pertinent to the analysis. In 12.3, I will then trace back some of the elements of the narrative of nature above to an original research article by Beadle and Ephrussi, which I take to be representative of this genre in twentieth-century experimental life sciences. Section 12.4 will then return to the ways in which the particular implementation of an approach is rendered exemplary.Footnote 7

12.2 Research Articles as Factual Narratives

Subsection 12.2.1 will argue that modern research articles are indeed narrative texts. As they are generally taken to be factual narratives, I will briefly address the question of how they relate to real-world events. Subsection 12.2.2 will clarify the relation of researchers in their double role as agents and authors, and as narrator and character. It will then relate these roles to the narratee and the implied and actual reader. I will also introduce two metaphors: narrative as path and narrating as guidance, to further characterize the relation of narrator and narratee.

12.2.1 Research Articles are Factual Narratives

When talking about narrative, one often thinks of fictional texts or accounts of personal experience.Footnote 8 Research articles might not meet common expectations about what a narrative is. Nonetheless, research articles should be seen as narratives. Before showing why, I will address some ways in which they depart from more typical narrative texts.

First, research articles have a unique structure in that they typically separate the accounts of various aspects of the same events. This partitioning of information is often realized in the canonical ‘introduction, methods, results and discussion’ (IMRaD) structure.Footnote 9 In the Introduction, researchers state where they see themselves standing in relation to various disciplines and theoretical commitments, thereby positioning themselves towards a problem recognized in the community they address and motivating the activities to be narrated. The detailed description of the activities, including preparation, intervention and observation, is presented in the Material and Methods section collectively for all experimental events. The structured performance of these activities is reported in the Results section, albeit not necessarily according to their actual temporal order. Finally, the Discussion section recounts cognitive operations in which the material activities are revisited, often as involving entities which are inferred from patterns in the data.

Second, research articles tend to exhibit a characteristic style. As is often noted, they use impersonal language, i.e., various devices such as passive voice, adjectival participles, nominalization, abstract rhetors and impersonal pronouns to conceal the agent in an event (e.g., Harré 1990; Myers 1990). Furthermore, events are often reported in the present tense. These strategies give the impression of a generic narrative, even though (unlike a narrative of nature) the statements in fact refer to particular events. Such narratives are thus pseudo-generic, but in this way represent events as exemplary.

Taken together, these organizational and stylistic features mean that research articles do not resemble other text types that are more often addressed in terms of narrative. And yet they should indeed be seen as narratives.

Most definitions of narrative or criteria for narrativity of a text include the notion that narratives relate connected events. The verb ‘to relate’ can be read in a double sense here: narratives recount the events and they also establish relationships between them. There is some dispute about the nature of the connections among events that lend themselves to being narrated – for example, whether connections need to be temporal or causal (Morgan and Wise 2017). It is, however, almost universally agreed that a mere assortment of event descriptions or a mere chronological list of events does not constitute a narrative. Another central criterion is the involvement of human-like or intelligent agents in the events. Again, further aspects of agency might be required, such as the representation of the mental life of the agents or the purposefulness of actions (Ryan 2007).

Depending on whether the latter condition is taken to be necessary, or how one interprets ‘human-like’, one might doubt the status of narratives of nature discussed above. Research articles, however, are clearly narratives in the light of these core criteria. They report connected events, and they report them as connected. Indeed, many of the events are temporally ordered, with previous determining subsequent events. Furthermore, the events involve the researchers as agents, and their actions are purposeful and accompanied by cognitive operations.

Although it has been observed that research articles often do not provide a faithful representation of the research process which they appear to report, research articles are not typically perceived as works of fiction either (Schickore 2008).Footnote 10 Instead, they are generally presented and perceived as factual narratives (Fludernik 2020). Accordingly, an account of factual narrative is required.

One of the most robust theoretical tenets of narratology is the distinction between story and discourse.Footnote 11 Seymour Chatman, for instance, states that

each narrative has two parts: a story (histoire), the content or chain of events (actions, happenings), plus what may be called the existents (characters, items of setting); and a discourse (discours), that is, the expression, the means by which the content is communicated. In simple terms, the story is the what in a narrative that is depicted, discourse the how.

It cannot be assumed, however, that in the case of factual narratives the real-world chain of events constitutes the story. If the observation of common mismatch between research process and report is accurate, then for research articles, at least, it is clear that the chain of events reconstructed from the discourse, the story, is not necessarily equivalent to the chain of events that make up the research process. The story as the sequence of events reconstructed from the discourse by the reader is a mental representation, as cognitive narratologists maintain (Ryan 2007). I will thus assume a semiotic model of factual narrative according to which the discourse invokes a story in the mind of the reader, and the narrative (discourse + story) represents real-world events, whether or not the events of the story fully match the represented events.Footnote 12 I will speak of the represented events as being part of a ‘practice-world’, however, to avoid false contrasts, as discourses and minds are, of course, part of reality, and to point out that these narratives represent only a fragment of the world which is inhabited by the actual researchers.

12.2.2 Communicating and Narrating

By putting their names in the title section, researchers as authors of scientific articles clearly assume responsibility for what they write, and they will be held accountable by others. Yet even if the narrator is identified with the author of these and other factual narratives, it cannot be equated with the author.Footnote 13 Authors will carefully craft the narrator and adorn it with properties which they need not necessarily ascribe to themselves. In fact, as many articles – including Beadle and Ephrussi’s – are co-authored, it would be challenging to construct a narrative voice that is faithful to the ways each of the authors perceives themselves or the group. In research articles, narrators are homodiegetic, i.e., they are also characters in the story (Genette 1980). Hence, by crafting the narrator, authors also craft the character of the researcher on the level of the story (for instance, as an able, attentive and accurate experimenter).

On the recipient side, the reader of a research article can be anyone, of course, even a philosopher of science looking at the text 80 years later to make it an example for narrative in science. There is also an implied reader, which can be inferred from paratextual as well as textual features (Iser 1978). Regarding the former, the journal in which an article is published is a key indicator. Textual features include the knowledge the authors take for granted: the kind of claims that do not need further justification, or terminology used without definition. The actual reader who matches the features of the implied reader is the addressee of the communicative act of the author.

Genette (1980) distinguishes the act of narrating from the discourse and the story. This act is performed by the narrator and is not part of the story; the addressee of this act can be called the ‘narratee’. By creating the discourse, the author creates the voice of a narrator as if it (the narrator) had produced this discourse, and a narratee as the addressee implied in the discourse.Footnote 14 Thus the narratee cannot be equated with the reader addressed by the author. Furthermore, while the way the narratee is construed is informative of the way the implied reader is construed, these two categories need not necessarily overlap.

Based on the above model of factual narrative, I propose the following account of narrating. The narrator in the act of narrating represents the researchers in their role as authors in the precise sense that it is construed as having the same knowledge as the latter. The researcher-character, who is identified with the narrator, represents the researchers in their role as experimenters and reasoners in the practice-world. The narrator addresses the narratee to recount events in which it was involved as a character and which thus represent events in the practice-world of the researchers. A reader can cognitively and epistemically adopt the position of the narratee and thereby learn about these events. A reader who matches the implied reader will be more willing and able to do so. In this way, researchers as authors communicate information about the practice-world they inhabit as agents to a reader who might inhabit similar practice-worlds.

Narratologists routinely analyse differences regarding time (order, duration and frequency of events) between discourse and story.Footnote 15 Note, however, that if the story is distinguished from the practice-world events in factual narratives, the difference between these two regarding time is an entirely different issue. Take the order of events. The discourse might introduce events in the order B, A, C, while it can be inferred from the textual cues that the order in the story is A, B, C. The discourse then does not misrepresent the order – in fact, by means of the cues it does represent the events in the order A, B, C, and as the story is an effect of the discourse, the two levels cannot be compared independently. If the narrative (discourse + story) presents events in a given order A, B, C, while the practice-world order of events was in fact C, A, B, then this, instead, constitutes a mismatch (e.g., between research process and report). The above semiotic model maintains that the narrative still represents the practice-world events. By manipulating order, duration and frequency in the discourse, authors can create certain effects in the perception of the story. In the case of factual narrative, developing a story that misrepresents practice-world events in one aspect can help to highlight other important aspects of these events such that the overall representation might become even more adequate with respect to a given purpose.

The purpose of the research narrative, or so I argue, is to represent the practice-world events as an approach to a given problem. Seen from the perspective of the act of narrating, the discourse not only presents events which are reconstructed on the story level, but it consists of events of narrating. If the discourse introduces narrated events in the order B, A, C (including cues that indicate the order on the story level is A, B, C), then there will be three sequential events: narrating B, then A, and then C. The temporal order on the level of narrating might be employed to highlight an order of elements in the story world other than temporal (e.g., a conceptual order).Footnote 16

On the level of narrating, the narrative might be described as a path through scenes in the story world which are considered in turn. By laying out a path, the narrator guides the narratee through the story-world. If this metaphor has a somewhat didactic ring, it is important to remember that it does not describe the relation of author and reader. The narratee in the research narrative is construed not so much as a learner who knows less about a subject but more as an apprentice who knows less about how to approach the subject. The narrator (who is also the researcher-character) will create a path connecting several diegetic scenes in which the character has certain beliefs, performs activities and observations, and reasons on their basis. The narratee qua guidee is thus introduced to the epistemic possibilities of the approach. A reader willing and able to adopt the position of the narratee can thereby learn about the approach.

12.3 Familiarizing a Community with an Approach through Research Narratives

12.3.1 The Case: A Research Article on Physiological Genetics from the 1930s

I now turn to the work of George Beadle (1903–89) and Boris Ephrussi (1901–79) and in particular to one article, which can be analysed based on the considerations in 12.2. The article in question was published in the journal Genetics in 1936.Footnote 17 It was entitled ‘The Differentiation of Eye Pigments in Drosophila as Studied by Transplantation’ and reported research the authors had performed mainly in 1935, when Beadle, who was at Caltech at the time, visited Ephrussi in his lab at the Institut de Biologie Physico-Chimique, Paris.Footnote 18

Leading up to Beadle’s Nobel Prize-winning work with Tatum, which is usually associated with the ‘one gene – one enzyme hypothesis’ and thus considered an important step in the history of genetics, the article is relatively well known, at least to historians of genetics, as well as philosophers of biology. While firmly embedded in the genetic discourse and practice of its time, it presents enough novelty to display clearly the work it takes to familiarize peers with a novel approach and the novel epistemic objects emerging from it. Finally, in employing the IMRaD structure and an impersonal style, it conforms to salient conventions of much scientific writing in twentieth-century life sciences. It is thus well suited for such an analysis.

Many geneticists at the time aimed to understand the physiological role of genes, an enterprise that was often referred to as ‘physiological genetics’.Footnote 19 This was the kind of problem Beadle and Ephrussi set out to engage with. Their starting point was an observation made by Alfred Sturtevant. Sturtevant had studied genetic mosaics naturally occurring in Drosophila flies, that is, organisms which are composed of tissues with different genotypes.Footnote 20 In some flies it appeared that the eyes did not exhibit the eye colour that would be expected given their mutant genotypic constitution (indicated by other phenotypic markers), but rather the colour-phenotype associated with the normal (wild type) genotype present in other parts of the body. From this Sturtevant concluded that a substance might circulate in the body of the fly, affecting the development of the eye, and that the gene, which was mutated in the eye, but was functioning in other parts of the body, was involved in the production of this substance (Sturtevant 1932).

Beadle and Ephrussi developed an experimental system based on implanting larval structures that would give rise to the adult eye (imaginal eye discs) into host larvae. The procedure resulted in adult host flies which harboured an additional eye in their abdominal cavity. This allowed them to create mosaics artificially and thus in larger numbers, and to produce adequate experimental controls. They clearly began with Sturtevant’s hypothesis regarding the existence of a circulating, gene-related substance. Furthermore, hypotheses about the nature of gene action, in particular, the idea that genes affected biochemical reactions (either because they were enzymes or because they played a role in their production) were common (Ravin 1977). Nonetheless, Beadle and Ephrussi’s article did not frame the work as testing any specific hypothesis about the relation of these entities, but rather as exploratory. Their project aimed at producing evidence for the existence and interactions of further elements in the biochemical system.

The epistemic objects they dealt with were thus on the one hand a well-established one, the gene, of which, however, little was yet known regarding its physiological function in somatic contexts, and on the other hand the assumed circulating substances, which were presumably involved in physiological reactions and in some way connected to the action of genes. The article reported the approach through which they achieved material and cognitive access to these epistemic objects and thereby established novel concepts referring to them. The approach enabled the formulation of hypotheses pertaining to these objects.

12.3.2 The Analysis: The Research Narrative as Path through Epistemic Scenes

In the following, I will reconstruct the research article by Beadle and Ephrussi as a narrative. The narrative draws a path through several scenes in which the researcher-character performs material or cognitive activities in a story-world which in turn represents the practice-world of the researchers as experimenters. The researchers as authors construct the narrator to guide the narratee through these scenes in a way that enables an understanding of the epistemic possibilities of the approach they have developed and thus an understanding of the hypothesis put forward. I will identify four types of epistemic scenes (concerning what is known and what can be known through the approach), which roughly coincide with the canonical IMRaD sections.

I The Positioning Scene: Interpreting a Problem Shared by a Disciplinary Community

Both the journal in which Beadle and Ephrussi published their article (Genetics) and the things they take for granted clearly indicate that their text implies geneticists as readers, as opposed to, say, embryologists.

The article does not begin with a hypothesis to be tested, but with a question or research problem to be explored, which pertains to the discipline of genetics, and more specifically to the subfield of physiological genetics.Footnote 21

Prominent among the problems confronting present day geneticists are those concerning the nature of the action of specific genes – when, where and by what mechanisms are they active in developmental processes?

With respect to this question, an assessment is made of the state of research at the time, which has a theoretical aspect (what is known or assumed about gene action) and a methodological aspect (how the problem has been approached). Regarding the former, it is asserted that ‘relatively little has been done toward answering [these questions]’ (Beadle and Ephrussi 1936: 225). Regarding the latter, advances that have been made are acknowledged:

Even so, promising beginnings are being made; from the gene end by the methods of genetics, and from the character end by bio-chemical methods.

However, a methodological obstacle to theoretical progress is identified in the fact that those organisms, which are well-characterized genetically, are not studied from a developmental perspective, and vice versa. It is suggested that this impasse be confronted by studying developmental processes in a genetically well-characterized organism (Drosophila), and in particular regarding the formation of pigment in the eye, because many eye-colour mutants were known in this species (and because of Sturtevant’s previous findings).

As these considerations are written in an impersonal style, one could see them as considerations of the authors in the moment of writing. And yet they are narrated as considerations of the researchers at the time of setting up the project, as indicated by formulations such as this: ‘Several facts have led us to begin such a study’ (Beadle and Ephrussi 1936: 225). As such, they are events in the story-world (whatever was in fact considered in the practice-world). They constitute the beginning of the story, the initial epistemic scene in which the researcher-character (‘we’) – as a member of a discipline – finds itself.Footnote 22 The narrator guides the narratee through the scene to let it understand how one can position oneself in the field characterized by certain problems and available methods, and to realize the advantages of the chosen approach.Footnote 23 This will resonate in particular with readers who are members of the community the authors belong to.

II The Methodology Scene: Having and Mastering an Approach

The introduction of a new approach changes the situation in the field. It results in new possibilities for these researchers, and with them for everyone in their community. The new situation is characterized by the availability of the new experimental method, the new interpretation of the problem such that it can be addressed by the method, and the evidence and conclusions it affords. The narrator has already led the narratee to consider this new approach by setting it off against previous work in the Introduction section.

In the Material and Methods section, then, experimental events, consisting in applying a technique, are presented as generic, repeatable activities:

In brief, the desired organ or imaginal disc, removed from one larva, the donor, is drawn into a micro-pipette and injected into the body cavity of the host. As a rule, operations were made on larvae cultured at 25°C for three days after hatching from the eggs.

In general, one function of this section can be to enable other researchers to reproduce the techniques in their own lab. In that sense, the text functions like a recipe (or ‘protocol’, in the language of experimental sciences). In this case, however, the detailed description of the technique has been relegated to an extra method article (Ephrussi and Beadle 1936). The information given in the Material and Methods section of the present article is possibly too sparse to allow for reproducing the experiments. This points to the fact that there must be another function: this section is similar to the exposition in a fictional text.Footnote 24 It introduces the reader to various elements (‘existents’) of the story, such as flies, fly larvae, donors, hosts, imaginal discs, various mutant lines and other things, and, furthermore, to the ‘habitual’ activities involving these elements performed by the researcher-character.

In the quotation, the first sentence uses the present tense. It is prescriptive in the sense of a protocol, but more importantly expresses the fact that the experiments can be performed by anyone who has the skills and access to the material. The second sentence is in the past tense, making it clear that the narrative nonetheless represents particular events when the researchers have performed these actions and indeed varied the conditions and found one that worked best. Following the contrastive presentation of the approach in the Introduction, the narrative in the Material and Methods section presents the character in a scene where it equips itself with a reliable method with which to approach the problem identified in the positioning scene.

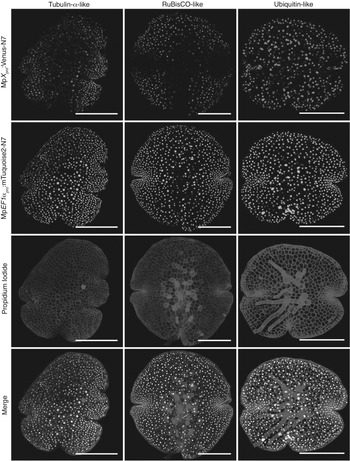

III The Experimentation Scene: Addressing Questions Pertaining to the Problem through the Approach

In the Experimental Results section, the narrative proceeds through questions which generate several epistemic scenes within the context of the broader disciplinary situation. These scenes are characterized by specific instances of ignorance (e.g., regarding the action of specific genes known through mutations) relative to the overarching research problem (gene action in general). These questions in turn have to be expressed in terms of the behaviours of the material in the context of the experimental interventions possible in the framework of the novel approach.

The path along which the narrator guides the narratee through these scenes is not fully determined by the temporal order in which the experiments were performed. Some questions can only be formulated once the data of previous experiments have been obtained (or indeed only after conclusions have been drawn from them, which are only presented in the Discussion section). But for many experiments the order in which they are performed is not relevant and hence also not represented in the text. The ordering created by the path is thus not always that of a sequence of events, but, instead, the intervention events are also ordered into series according to the logic of the experiments, in this case the combinatoric logic regarding donor and host genotype. The subsections have titles such as Mutant eye discs in wild type hosts, Wild type discs in mutant hosts, Vermilion discs in mutant hosts, etc.

For instance, the first subsection shows how the approach provides an assay to answer the question of which mutants are autonomous (i.e., when serving as donor, are not affected by the host tissue). The result that v and cn are the only exceptions, in that they are not autonomous, leads to a new epistemic scene. In the subsection Vermilion discs in mutant hosts, then, the narrative moves forward by means of a new question the researcher-character asks itself, and which can be addressed through the approach:

data should be considered which bear on the question of whether other eye color mutants have anything to do with this ‘body-to-eye’ phase of the v reaction [i.e., the influence of the host]. This question can be answered by implanting v eye discs into hosts which differ from wild type by various eye color mutants. Such data are given in table 3.

[Table 3]

These data show that, when implanted in certain mutant hosts ([list of mutants]), a v optic disc gives rise to a wild type eye; in others ([list of mutants]), it gives an eye with v pigmentation.

Most of the researcher-character’s activities of intervention (implanting) and observation (dissecting and comparing eye colours) are compressed into one sentence and relegated to the table. Again, the formulation in the present tense and the impersonal style suggest that for any researcher, at any time, these interventions would result in these observations. And yet these sentences clearly refer to particular events in the story. The table, for instance, lists the number of individuals tested: we learn that a v disk has been implanted in a bo host only a single time, while it has been implanted in 18 ca hosts. By having the researcher-character note the regularities and notable exceptions in the data, the narrator enables the narratee to grasp what can be done with the experimental method within the approach.

IV The Interpretation Scene: Formulating Hypotheses in the Context of the Problem and Approach

Already in the Experimental Results section, cognitive operations of the researcher-character are narrated:

From the data presented above, it is seen that, in the cases of cn in wild type, v in wild type, and wild type in ca, the developing eye implant is influenced in its pigmentation by something that either comes or fails to come from some part or parts of the host. Just what this is, whether or not, for example, it is of the nature of a hormone, we cannot yet say. We shall therefore refer to it by the noncommittal term ‘substance’.

In this scene, the narratee is shown how the approach enables cognitive access to new epistemic objects through interpreting data resulting from past activities. It is in the context of the approach that the term ‘substance’ refers to new objects. It can then be used to formulate a new set of questions, which no longer concern the visible effects of the interventions in the materials, but the assumed entities which are not directly observable: ‘[I]s there only one substance? If not, are the different substances related and in what way? What is their relation to the genes concerned in their production?’ (Beadle and Ephrussi 1936: 233).

These epistemic objects are thus introduced as objects of interaction, appearing when acting in the framework of the approach. For this purpose, in the Discussion section, events reported in the Experimental Results section are revisited:

Since the pigmentation of a genetically v eye can be modified to v+ by transplanting it to a host which supplies it with what may be called the v+ substance, it follows that v differs from wild type by the absence of this substance. Evidently there is no change in the v eye itself which prevents its pigmentation from assuming wild type characteristics. It follows that the mutation v+ → v has resulted in a change such that v+ substance is no longer formed.

The events of experimental intervention (implanting a v disk) are retold, but this time the unobservable events on the molecular level that are thought to link the intervention and observation made by the researcher are added. Yet the scene inhabited by the researcher-character is not one of experimentation but of reconsidering past experimental action. Together, the experimental scene, in which the narrator recounts what has been observed upon intervention, and the interpretation scene, which narrates the reconstruction by the character of what was actually happening on a hidden level, are akin to an ‘epistemic plot’.Footnote 25 The narratee is led to understand the way activities in the context of the approach can be interpreted in terms of interactions with the epistemic objects.

12.4 Conclusion: Exemplification of an Approach, between the Particular and the Generic

If the hypothesized entities and relations in the research article are compared with the narrative of nature in the review article quoted above, then it is clear that some – for instance, regarding the roles of the v and cn genes – achieved the status of accepted facts. Other propositions never went beyond the status of ‘preliminary hypothesis’. Regarding the relation of substances, Beadle and Ephrussi provide the following hypothesis:

Such an hypothesis assumes that the ca+, v+, and cn+ substances are successive products in a chain reaction. The relations of these substances can be indicated in a simple diagrammatic way as follows:

→ ca+ substance → v+ substance → cn+ substance

The entities and relations after the second arrow are conserved in the narrative of nature. For sure, Beadle and Ephrussi can claim to have discovered these substances and the relations holding among them and between the substances and genes.Footnote 26 But the details of the hypothesis do not matter much, nor which elements are conserved. When it turned out that the existence of an entity that would match their hypothesized ca+ substance could not be established, this by no means diminished the value of the work. To criticize the hypothesis on its own terms required understanding the approach from which it emerged. Further results of that sort would come from the application of a more or less substantially modified version of the approach. Indeed, the research (Clancy 1942) which led to the abandonment of the ca+ substance was ‘undertaken in order to repeat and supplement the experiments of Beadle and Ephrussi’ and ‘[t]ransplantation operations were performed by the method of Ephrussi and Beadle [1936]’. The author also added a novel technique for the ‘extraction and measurement of the eye-color pigments’ to the approach (Clancy 1942: 417, 419). Hence, amending Beadle and Ephrussi’s hypothesis depended on understanding, applying and modifying their approach.

The approach to the problem faced by the discipline, rather than the hypothesis, was thus the main achievement of Beadle and Ephrussi’s work. As stated right at the beginning of their article:

In this paper we shall present the detailed results of preliminary investigations […] which we hope will serve to point out the lines along which further studies will be profitable.

The actual process, the contingencies and detours are not the subject of the narrative. The activities are reported as they would have been performed if the researchers had known better from the beginning. This explains the common mismatch between research process and report. The result is an approach that works and that enables researchers to make certain kinds of claims. Understanding the approach is a condition for understanding the terms and the significance of the hypothesis, no matter how well supported it is by the evidence. Furthermore, it is this kind of knowledge researchers can employ to design new research projects (Meunier 2019). It is anticipated that further research ‘along these lines’ will lead to modifications of the theoretical claims. The purpose of the narrative is to make readers as members of the relevant community (geneticists) familiar with the approach, such that they understand ‘some of the possibilities in the application of the method of transplantation’ with regard to the shared problem of gene action (Beadle and Ephrussi 1936: 245). Accordingly, the hypotheses about these epistemic objects which might or might not enter the narrative of nature are not the only or even primary result.

In order to present the approach as universally applicable to the problem faced by the community, the narrative takes on the character of a generic narrative, even though it is in fact about particular events. It is thus pseudo-generic. More positively, the particular events are presented as exemplary; the research article constitutes an exemplifying narrative.

A significant stylistic difference between research narratives and many other accounts of personal experience is the use of an impersonal style and the present tense. These literary devices remove ‘indexicality’ (Harré 1990). In sentences of the type ‘when implanted into an x host, a y disk gives rise to a z eye’, the researcher-character is hidden by omitting the pronoun, while the present tense detaches the activities from time and site. On the level of narrating, this has the effect that the narratee, guided through the experimental scene as an apprentice, can occupy the vacant position of the agent and perceive the event from the character’s point of view (or rather point of action). A reader can then adopt the narratee’s and thereby the character’s position.

Grammatically, the character is only referentially absent but performatively present as the agent of implantation. Hiding the character thus renders the narrated events universal experiences of an unspecified agent. However, the occasional use of ‘we’, reference to individual instances (flies), and the use of the past tense anchor the narrative in particular events experienced by the character. Semiotically, the character as a complex sign denotes Beadle and Ephrussi. In so far as their experience is represented by the narrative, they are construed as exemplars of researchers in their community, who could all have similar experiences when performing the approach exemplified in the activities in which Beadle and Ephrussi engaged.Footnote 27

Members of the community can read the text as narrating what Beadle and Ephrussi did or as stating what can be done regarding the problem. This ambiguity is indeed necessary. An approach is seen as universally applicable to a type of problem, just like a hypothesis is seen as universally answering to a problem. But, an approach, unlike a hypothesis, is not justified; it is not shown to be true, but it is shown to work. This is achieved by guiding the narratee along a path through various epistemic scenes, to see that one can do these things because they have been done.

In conclusion, while understanding the terms and the significance of a hypothesis (and not least the degree to which it is supported by the evidence) through understanding the approach is the condition for members of the community to accept the hypothesis as fact and incorporate it into emerging narratives of nature, the primary result communicated through the research narrative is the approach itself, as exemplified in the particular activities reported. Rendering the events generic, by stylistic means, helps members of the community to familiarize themselves with the approach as generally applicable to a shared problem.Footnote 28

13.1 Introduction

This chapter is about the role of narratives in chemistry. Recent studies by historians and philosophers of science have argued that narratives play an important part in shaping scientific explanations; narratives are not, according to this view, only concerned with rhetoric or communication, and not an added extra, but integral to the work of social and natural sciences. In Mary Morgan’s concise definition, ‘what narratives do above all else is create a productive order amongst materials with the purpose to answer why and how questions’ (Morgan 2017: 86).

Notions of narrative are not alien to existing discussions of chemistry: most notably, the Nobel Prize-winning organic chemist Roald Hoffmann has argued that chemical findings should be given narrative form, and similar arguments are present (or at least implicit) in some chemical publications, process ontologies of chemistry and historians’ and social scientists’ critical accounts of chemistry. Despite their differences, these claims are based on a shared understanding of the purpose of narrative which goes beyond attention to productive order: they suggest that narratives should be used to challenge the conventional demarcations of chemical accounts and ‘let the world back in’ by incorporating contingencies, aspects of decision-making, social dynamics and the interactions between humans and chemical substances which are not usually included within the chemical literature. All continue to bring materials together, to answer questions – they are thus still narratives in Morgan’s sense – but they also proceed contrastively, by trying to offer something beyond the conventions of writing in chemistry. These more capacious narratives contrast with the extremely terse form usually adopted by chemical publications. I will call the conventional presentation of chemical findings, ‘thin narratives’, and the more capacious ones recommended by some chemists, philosophers and historians, ‘thick narratives’.

My distinction between the thick and the thin is modelled on the anthropologist Clifford Geertz’s (1973) celebrated discussion of ‘thick description’. Geertz gave the example of describing someone who was winking, first developed by the philosopher Gilbert Ryle. We could describe a wink in physiological terms – through a very specific sequence of muscle contractions, or more simply in terms of what we observe directly. Or we could say something like, the man winked conspiratorially, according to a cue we had agreed beforehand, and I was delighted. The former confines its description to a single plane: that of observable physiological phenomena – Ryle (1947) called it a ‘thin’ description. The latter incorporates context and intentionality, which cannot just be read directly, but require additional elucidation and the incorporation of considerations behind the immediately observable. It is a ‘thick’ description. By extension, a thin narrative is a sequence or productive order, all of whose materials are presented as closely interrelated and conducing to the same purpose, and which can readily be transferred from one situation to another.Footnote 1 The thin narrative may also be presented in a formal language, which encodes relations and interactions between the entities involved in the narrative. A thick narrative, by contrast, is one which incorporates more context and considerations which may not be directly related to the explanatory task at hand, and which may be more difficult to move around.

The distinction between thin and thick descriptions carries normative implications. Geertz thought that anthropology needed thick descriptions; that its accounts would be incomplete and misleading without them. Similarly, the chemists and writers in chemistry who have called for the use of narrative form argue that understanding of chemical processes and chemists’ decision-making will be impoverished without the incorporation of elements which are usually not found in works of chemistry. But the difference between the thick and the thin has been understood in a much wider sense as well. The historian Ted Porter (2012) argues that the institutional and bureaucratic structures of modernity tend to privilege thin descriptions and to denigrate thick ones, and that natural sciences have been justified through an appeal to thinness, sometimes even changing their own thickets of practices and overlooking the persistence of skilled judgement in response to the pressure to offer thin descriptions.

I think that Porter is right to claim that thin descriptions (and thin narratives) are characteristic products of modernity, and that it has often been a chief aim of historical and sociological analysis to restore a measure of thickness. The views of chemistry discussed in this chapter are examples of arguments which have exactly this goal in mind. Nevertheless, Porter’s view requires two qualifications. First, we should not give the impression that thin descriptions and narratives are impoverished, because this risks overlooking the functions which they serve, such as providing a condensed, unitary record of chemical reactions, or a shared format for planning out new chemical syntheses. Those functions may come with considerable problems, but that does not imply they are unimportant, and indeed they are of considerable utility to working chemists.

Second, thickening can be seen as an end in its own right, an obvious good. But, as the examples discussed in this chapter indicate, different attempts to thicken a thin narrative can have rather divergent aspirations, incorporate details of different kinds, and also make significant omissions. As a result, even thick narratives can look somewhat thin if the goal is to provide a completely comprehensive account. This can be a strength, as long as thickening in itself is not seen as a way to escape the troubles of thinness, or a way to offer the ‘whole story’ which lurks behind the thin surface.

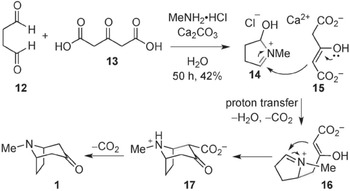

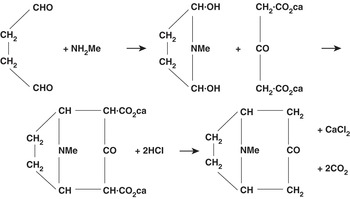

In this chapter, I describe and analyse thin and thick chemical narratives, using the example of synthetic reaction schemes linked to a ‘classic’ synthesis from the history of chemistry: Robert Robinson’s ‘one pot’ production of tropinone, which was accomplished in 1917. In section 13.2, I present a twenty-first-century rendering of the tropinone reaction scheme, as well as its 1917 counterpart, and use work by the chemist-historian Pierre Laszlo to indicate some of the reasons that chemists may prefer to present their findings in such a thin form. Sections 13.3 and 13.4 contrast two kinds of arguments that conventional presentations of chemical results are deficient on the grounds of their thinness – those employed by chemists and those advocated by analysts of the science, respectively – and explore how such attempts played out in repeated retellings of Robinson’s tropinone synthesis. This leads me, finally, to consider some implications of thinking in terms of thick and thin narratives for historical and philosophical writing about chemistry.

Before I do so, however, I want to introduce my historical case study of a thin chemical narrative which has repeatedly been thickened. The example is drawn from the career of celebrated organic chemist Robert Robinson. Born in Derbyshire in 1886, Robinson (d. 1975) would acquire a reputation as one of the foremost organic chemists of the first half of the twentieth century and become President of the Royal Society and an advisor to the British government on a range of chemical topics, including colonial development. In 1917, Robinson achieved a synthesis of the alkaloid tropinone that significantly simplified the previous multi-stage, and therefore highly inefficient, scheme. Robinson’s scientific paper on the synthesis was published in the same year and detailed how he used counter-intuitive chemical starting products to produce tropinone at room temperature, and without any extremes of alkalinity or acidity. Furthermore, the process involved several reactions which led on from one another without requiring further intervention on the part of the chemist. These features of the synthesis led to its becoming one of the foundational works for Robinson’s reputation as a significant synthetic chemist, and to its elevation to the status of a synthetic ‘classic’ – discussed in textbooks and cited as an inspiration by chemists even now (Medley and Movassaghi 2013). As we will see in section 13.3, Robinson’s tropinone synthesis has been repeatedly retold by chemists, and was the subject of a sustained historical investigation by Robinson’s one-time student, the Australian biochemist Arthur Birch.

13.2 Synthetic Reaction Schemes as Thin Narratives

Reaction schemes are one of the characteristic ways in which organic chemists plan and record their activities; it is therefore not surprising that Robinson’s landmark publication on the one-pot synthesis of tropinone included such a scheme. Drawing on discussions by Robert Meunier (Chapter 12), Line Andersen (Chapter 19), Norton Wise (Chapter 22) and Andrew Hopkins (Chapter 4) from elsewhere in this volume, this section will discuss some of the features which make reaction schemes distinctive as thin narratives, as well as ways in which they are similar to scientific narratives found in other domains.

Figure 13.1 is taken from a 2013 reconsideration of Robinson’s ‘landmark’ synthesis of tropinone and records a reaction scheme for the synthesis according to twenty-first-century conventions. Read in a clockwise direction, starting in the top left, the scheme shows the ways in which two starting products are subjected to various operations – diluted, reacted with other chemical substances, and so on – which change them into a series of intermediate forms, which gradually become more and more similar to the desired final product (tropinone – see molecule labelled 1 in Figure 13.1). The synthesis of complex natural products can involve many hundreds of separate stages, although this version of the tropinone synthesis only involves three intermediate stages. Indeed, from a chemist’s point of view, what is striking about this reaction is that a considerable amount of change happens in only a few stages. Each stage consists of one or several structural formulae: diagrams through which chemists represent both the composition of chemical substances and their spatial arrangement; knowledge of composition and structure helps chemists to construct explanations about how chemical substances will react with one another. Stages in the scheme occur in a particular sequence of reactions, where structural formulae indicate both the protagonists of the synthesis (the chemical substances which play a part in it) and the functions which these chemical substances can play. The transition between the different steps of the synthetic sequence is indicated by straight arrows, while the intermediary reactions are animated, so to speak, by the curved arrows that join together different chemical structures and show the movement of electrons. These curly arrows, which came into widespread use in the second and third decades of the twentieth century, allow the reaction sequence to offer an indication of what is happening at a molecular level to form the desired final chemical substance.

Figure 13.1 Modern representation of Robinson’s ‘landmark’ synthesis of tropinone

If the reaction scheme provides an ordered sequence of chemical events leading to a single goal (the end product), it is also important to note what the scheme does not show. It does not give an indication of what happens to any chemical substances which do not play a role in subsequent stages of the synthesis, and which are treated as waste products. Similarly, the scheme does not give any indication of the process by which the sequence was arrived at. It also presents a series of operations and reactions which may occur within an organism, or in a laboratory, as though they followed on naturally from each other – the role of the human chemist in performing the synthesis does not appear as distinct from the reactions of chemical substances.

Considered in this way, chemical reaction schemes can sensibly be treated as thin narratives: ordered sequences of chemical events conducing to a single, unified end, in which human intervention is flattened onto the same plane as chemical interactions. Moreover, the reaction scheme resembles a ‘narrative of nature’, in Robert Meunier’s sense. As Meunier describes such a narrative (Chapter 12), it ‘relates several events which occur in temporal order and are causally connected’ and is structured into a beginning, middle and end; like the narratives which Meunier discusses, the reaction scheme ‘does not recount particular events, but rather a type of event happening countless times’. And the sequence appears to be self-evident: it does not foreground the role of a human experimenter or observer. In other ways, however, the sequence is rather unlike the examples which Meunier gives. It is told in a formal visual language (the structural formulae), which requires a chemical training to understand, rather than providing a neat compact set of events that are (potentially) intelligible to non-scientists. It is not that the reaction sequence cannot be paraphrased, or its events presented verbally; instead, a verbal paraphrase of the sequence of chemical events presented in the reaction scheme would be just as terse and technical as the reaction scheme, just as thin a narrative.

Here, for example, is one such verbal description (of a different synthetic reaction), presented by the chemist, historian and philosopher Pierre Laszlo:

L-Proline was esterified (12) by treating it with MeOH and thionyl chloride at 0°C, followed by Boc protection of secondary amine in dry tetrahydrofuran (THF) using triethyl amine as base at rt, furnishing (13), which on LAH reduction at 0°C in dry THF provided alcohol (14).

Unpacking the meaning of this extremely terse sentence, Laszlo argues, relies on the implicit knowledge of the chemist. He attempts two glosses of this piece of ‘chemese’. The first seeks to define the provenance of the chemical substances mentioned in the paper – indicating how they would be obtained – and a description of the verbs, suggesting what is turning into what.

The chemical recipient of this treatment is the amino acid proline, as the (natural) L-enantiomer. It can be bought from suppliers of laboratory chemicals. Its esterification means formation of an ester between its carboxylic COOH group and the simplest of alcohols, methanol (here written as MeOH), another commercial chemical, in the presence of thionyl chloride (SOCl2), also commercial. The reaction scheme bears the instruction ‘0°C-rt, 4 h’, in other words, ‘dissolve proline and thionyl chloride in methanol, held in a cooling bath, made of water with floating ice cubes, at 0°C and let this mixture return to room temperature (rt) over four hours, before extracting the desired product’.

Laszlo goes on to unpack the sentence’s other implicit meanings, in a manner which draws them out towards the laboratory routines of the chemist:

[T]he stated ‘room temperature’ in fact has a meaning more elaborate than ‘the temperature in the laboratory’. It means ‘about 20°C’, hence if the actual room temperature is markedly different, one ought to switch on either heating or air-conditioning.

Laszlo’s commentaries give one perspective from which to unpack the sentence, which works outward from the various materials employed in the experimental process to the routines of the laboratory and the chemist’s view of her workflow and the conditions in which she is working. Different explications could be given. Laszlo’s larger point is that the cognition of chemists involves associative processes, ‘molecular polysemy’, characterized by continually shifting horizons: new chemical discoveries add extra layers of association to the sentence’s existing stock of substances by positing new relations between them. Sentences such as those Laszlo analyses lack, even as an aspiration, any attempt to fix the meanings of their key terms.

The use of structural formulae and of the terse language of ‘chemese’ are the reasons that I think we should consider chemical syntheses, as typically presented, as thin narratives, even in their verbal form. The powerful and polysemous formal languages of organic chemistry provide a rich but also restrictive vocabulary for describing what has happened or can happen, in chemical terms – for keeping track of how chemical substances change and the reasons for thinking that they may be used to serve chemists’ purposes. Chemists’ use of diagrammatic sequences and of language brings accounts of chemical syntheses into a single plane, with all relevant chemical actions and events describable in the same terms. And structural formulae can be used not only to explain what has happened, to record synthetic achievements or to investigate synthetic pathways in living organisms; the formulae can also be used to plan novel syntheses, with the information encoded in the formulae giving a good idea of what approaches might or might not be workable within the laboratory.Footnote 3 On their own terms, such ‘narratives of nature’ are meant to be self-sufficient, a robust and portable sequence of events which can be unpacked by a skilled chemist.

My attempt to consider such terse and formalized sequences as narratives in their own right, however, also indicates their potential instability – reasons that others might call for them to be ‘thickened’. Other chapters in this book have aligned narrative with the experiential dimension of interpreting a formalized sequence; this is the gist of Line Andersen’s discussion of mathematical proofs (Chapter 19), which Norton Wise (Chapter 22) describes as follows: ‘Reading a proof in experiential terms changes what looks to an outsider like a purely formal structure into a natural narrative for the reader; so too the experiential reading enriches the formal language of rigorous proof with the natural language of narrative, for it calls up meanings that the unaided formal language, lacking background and context, cannot convey’. If the opposition is drawn between formal language, on the one hand, and natural language, on the other, then thin narratives only become narrative when they are interpreted by a skilled reader, who is able to supply context and detail that may be absent from the plane of the formal representation itself. In the absence of a reader who possesses such ‘scripts’, reaction sequences cannot function as narratives. Even so – and I wish to insist on this – organic chemists do not simply animate the dry bones of their thin narratives with their competence, background knowledge and experience; chemists have also argued, explicitly, that the formal languages in which chemical research is presented provide an inadequate account of chemists’ reasoning and the character of the interactions between chemical substances which they employ. I will discuss chemists’ calls for thickening in the next section of this chapter.

For now, I wish to follow Meunier’s lead and ask to what extent these thin chemical narratives might encode their origins in experimental research practices. Meunier (Chapter 12) emphasizes that each part of a narrative of nature ‘can be traced back to an episode of research’, with narratives of nature ‘emerg[ing] gradually from the research literature as facts accepted in a community’, with the experimental aspects of ‘the methods by which the knowledge was achieved […] abandoned like ladders once the new state of knowledge is reached’. Is something similar happening with the narrative of nature provided by the reaction scheme? The answer to this question is a qualified yes: the narrative of nature is indeed related to past experimental work, but the experimental narrative retains a stronger presence in an organic chemical reaction scheme than it does in the biological narratives which Meunier examines.

We can see this with reference to Figure 13.2, which shows the tropinone reaction scheme as presented by Robinson in his Reference Robinson1917 publication. As before, the scheme bears features of a thin narrative: a sequence of chemical events leading to a single outcome (the tropinone molecule – see bottom right), presented in the formal language of structural formulae, with no explicit indication of the researcher’s interventions or the laboratory context. But, if we compare Figure 13.2 with Figure 13.1, we note an important difference in the way the structural formulae are presented. As Reference Laszlo and KleinLaszlo (2001) remarks, to the eye of the present-day chemist, the structural formulae found in Figure 13.2 and similar publications look like primitive attempts to capture the spatial arrangement of chemical substances. But this is a historical mirage. The way in which late nineteenth- and early twentieth-century chemists used structural formulae, Laszlo argues, was primarily to relate their experimental investigations to the edifice of organic chemistry, to situate new findings in relation to existing work, and to draw the map of relations between chemical substances. The formula ‘spelled out to its proponent a historical account of how it came to be, of how it had been slowly and carefully wrought. A formula was the sum total of the work, of the practical operations, of the inter-relating to already known compounds, which had gone into its elucidation’ (Reference Laszlo and KleinLaszlo 2001: 55). 
As such, the formula amounted to a kind of ‘condense[d] […] narrative’ (Laszlo 2001: xx), whose history would need to be unpacked by a skilled chemist familiar with the relevant literature.Footnote 4 In other words, the structural formulae of the late nineteenth and early twentieth centuries encoded what Meunier terms ‘research narratives’ – although once again their narrative qualities were not obvious to the non-specialist, and had to be unpacked. It is only on the basis of hindsight, Laszlo says, that present-day chemists might see the structural formulae of the late nineteenth and early twentieth centuries as continuous with those of present-day chemistry. We might even say that these historical research narratives are so thin, bound so tightly into a single plane, that their practically and epistemically significant details cannot easily be recovered by today’s skilled practitioner in synthesis.

Figure 13.2 Robinson’s original representation of ‘A Synthesis of Tropinone’

Before examining the different styles of thickening, I want to note some of the distinctive roles which a thin narrative could play in the hands of a chemist like Robinson. The same year that Robinson published his laboratory synthesis, he wrote and published a second paper proposing that he might have found a plausible pathway for the formation of alkaloids in living plants. This claim was absent from his first paper, which instead positioned tropinone as a precursor to a number of products of commercial and medical significance. Robinson’s new claim relied on the reaction’s status as a thin narrative. That is, it was a scheme that could be picked up from one context and inserted into another, without changing significantly. Contemporary textbooks show Robinson’s speculations being reported respectfully, and alongside the proposals of other chemists; in the 1910s and 1920s, experimental methods were not available to trace the formation of chemical substances directly. This changed in the early 1930s, with the development of carbon tracing techniques; initially, Robinson’s proposal appeared to have been borne out in practice, although subsequent experimental findings cast doubt on its correctness.

Robinson maintained his distance from experimental attempts to confirm his speculation and was even a little scornful of them. The Australian natural products chemist Arthur Birch, who was at one time Robinson’s student, recalled that Robinson was reluctant to take ‘pedestrian, even if obviously necessary steps beyond initial inspiration’, and would even claim to be disappointed if his findings were confirmed. As a result, ‘if Robinson correctly “conceived and envisaged” a reaction mechanism […] he thought he had “proved” it’ (Birch 1993: 282). For Robinson, the venturesome daring of the thin narratives of organic chemistry was all-important: a way to avoid becoming bogged down in the minutiae of subsequent development.

13.3 The Pot Thickens: Chemists’ Claims

In this section, I will discuss some of the ways in which chemists have sought to thicken the thin narratives described in the previous section, beginning with arguments by the Nobel laureate, poet and playwright Roald Hoffmann. Then I will look at two other sorts of narrative thickening which chemists have employed, which proceed by emphasizing contrastive and contingent aspects of the chemical story.

Roald Hoffmann (2012: 88) argues that narrative gives a way to ‘construct with ease an aesthetic of the complicated, by adumbrating reasons and causes […] structuring a narrative to make up for the lack of simplicity’. In other words, the interactions between chemical substances which characterize chemical explanations and the decisions of human chemists which impact on chemical research programmes are highly particular, involving contingencies and speculations and evaluations in terms of human interest in order to make sense. Hoffmann aligns scientific narratives with literary ones on three grounds – a shared approach to temporality, causation and human interest – and he particularly emphasizes the greater narrative satisfactions which are often found in oral seminar presentations than in published scientific papers. In drawing his distinction between narrative and information, Hoffmann quotes from the philosopher Walter Benjamin: information can only communicate novelty, whereas a story ‘does not expend itself. It preserves and concentrates its strength and is capable of releasing it even after a long time’ (Benjamin 1968: 81). In the terms which I am using in this chapter, Hoffmann offers a call for narrative thickening – for getting behind the surface of the conventional chemical article to explain the human dynamics and non-human particularities that have shaped chemical research. In Hoffmann’s view, the role of narratives in chemistry should be taken seriously as a way for chemists to be clearer about how they actually think and work (as opposed to idealizations which would present chemistry as an affair of discovering universally applicable laws). Hoffmann’s position has both descriptive and normative implications.
He suggests that if we scratch the surface we will see that chemists do use narratives as a matter of course; but also that if chemists reflect on how they use narratives this will contribute to a better understanding of their work.

‘Classic’ syntheses, like Robinson’s production of tropinone, come to take on the attributes of narratives in Hoffmann’s sense. They are retold for their ingenuity and human interest, to motivate further inquiry, to suggest imitable problem-solving strategies and as part of chemists’ professional memory; some chemists also argue that they are worth revisiting repeatedly to allow new lessons to be drawn. In this sense, they are more like stories than like information, in Hoffmann’s terms. So, for example, the chemists Jonathan Medley and Mohammad Movassaghi (2013: 10775) wrote almost a hundred years after Robinson’s initial synthesis that it had ‘continue[d] to serve as an inspiration for the development of new and efficient strategies for complex molecule synthesis’. The tropinone synthesis has been retold by chemists on a number of different occasions over the past century, and these retellings have drawn out a variety of meanings from the synthesis and related it to subsequent chemical work in a number of different ways. In the process, chemists have used contrasts to emphasize different aspects of the synthesis, or tried to restore contingent historical details or aspects of context which would not be apparent from the elegant, but notably thin, reaction schemes discussed in the previous section.

A similar discontent to the one which Hoffmann expresses with the terseness of conventional chemical publications can be detected in some twentieth-century publications on organic chemical synthesis. Complex, multi-stage syntheses can take many researchers many years to achieve, but the final publication may ignore possible routes which were not taken, or which were successful but proved to be less efficient or in other ways less desirable than the final synthetic pathway. In an article from 1976, the chemist Ivan Ernest explicitly tries to challenge this tendency by reconstructing in some detail the plans which the research group made and the obstacles which led them to give up the approaches which had initially appeared promising. Rather than presenting the final synthesis as an edifice which could only adopt one form, this method of presentation emphasizes the chemists’ decision-making, and the interaction between their plans and what they found in the laboratory. And rather than presenting the structural formulae of the reaction sequence simply as stepping stones towards a pre-determined end, Ernest’s article (1976) emphasizes that each stage of the synthesis should be considered as a node, a moment when several different decisions may be possible. Like Hoffmann’s view of narrative in chemistry, Ernest’s article emphasizes contingency and the human interest of chemical decision-making in the laboratory, giving a more complex and nuanced human story about what this kind of experimentation involves. In other respects, though, it does not diverge significantly from the conventional presentation of thin chemical narratives – it is still presented chiefly in the form of structural formulae, and its presentation is based chiefly on laying different routes alongside each other, giving additional clarity to the decisions made in the final synthesis by comparing it with paths not taken – what could have happened but did not.
I call this contrastive thickening because it contributes to the scientific explanation by allowing for a contrast between the final decisions which were made and other paths which could have been taken. Every event in the narrative thus exists in the shadow of some other possibility; what did happen can be compared with what did not.

Beyond telling different ways in which things can happen, chemically, to allow the desired outcome to be reached, contrastive thickening also introduces a different way of thinking about the shape of the whole synthesis and what motivates the relations between its different stages. For example, when Robinson’s reaction scheme for synthesizing tropinone is contrasted with that proposed by German organic chemist Richard Willstätter in 1901, contemporary chemists evoke notions of ‘brute force’ and an ‘old style’ of synthesis to describe Willstätter’s approach. Robinson’s scheme, by contrast, appears as a far more efficient experimental methodology, and as the first glimpse of a more rational approach towards synthetic planning, which is based on starting with the final form of a molecule and then dividing it up.

Contrastive thickening tries to show that the final form of a chemical synthesis could have turned out differently, but does not make significant changes to the terse manner in which chemical syntheses are presented – Ernest’s article is still narrated primarily in ‘chemese’. Contingent thickening, in contrast, proceeds by fuller narration. For instance, Ernest’s sense that conventional publications on synthesis failed to give the whole story was also cited as inspiration in the first volume of the book series Strategies and Tactics in Organic Synthesis, a collection of papers in which chemists were invited to reflect on the contingencies, human factors and tangled paths of their experimental work. The chapters adopt an avowedly narrative style, and emphasize the prolonged difficulty of synthetic work as well as its eventual achievements. Details include serendipitous discoveries in the chemical literature, and discussions of sequencing syntheses so that their trickier or untested parts are not attempted at the end, putting previous work into jeopardy. These narrative approaches are intended to stir reflection on problem-solving, and on how chemists do not rely primarily on the formal language of structural formulae and planning in their synthetic work. They also share with Hoffmann the goal of keeping chemists motivated and the less codifiable aspects of synthetic knowledge in clear view. The Harvard chemist E. J. Corey writes in his preface to the third volume of the series that

the book conveys much more of the history, trials, tribulations, surprise events (both negative and positive), and excitement of synthesis than can be found in the original publications of the chemical literature. One can even appreciate the personalities and the human elements that have shaped the realities of each story. But, above all, each of these chapters tells a tale of what is required for success when challenging problems are attacked at the frontiers of synthetic science.

In Corey’s view, it is easy to think of synthetic chemistry as ‘mature’ because it has grown more ‘sophisticated and powerful’ over the past two centuries. But the impression of maturity belies the fact ‘that there is still much to be done’ and that the ‘chemistry of today will be viewed as primitive a century from now’. As such, it is important that ‘accurate and clear accounts of the events and ideas of synthetic chemistry’ should be available to the chemists of the future, lest they be misled into thinking that chemistry has become routine. Thickening, in this contingent form, reintroduces research narratives alongside the thin narratives of nature for the benefit of the discipline of chemistry: to motivate junior researchers and to induct them into the culture of synthetic research.

13.4 Analysts’ Narratives: Processual and Contextual Thickening