1. Introduction

A Hamilton cycle in a graph is a cycle that passes through all the vertices of the graph exactly once, and a graph containing a Hamilton cycle is called Hamiltonian. Even though a Hamilton cycle is a relatively simple structure, determining whether a certain graph is Hamiltonian was included in the list of $21$ NP-hard problems by Karp [Reference Karp21]. Thus, there is significant interest in deriving conditions that ensure Hamiltonicity in a given graph. For instance, the celebrated Dirac’s theorem [Reference Dirac9] states that every graph on $n \geq 3$ vertices with a minimum degree of at least $n/2$ is Hamiltonian. For more results on Hamiltonicity, readers can refer to the surveys [Reference Frieze12, Reference Kühn and Osthus27, Reference Kühn and Osthus28].

Most classical sufficient conditions for a graph to be Hamiltonian are only applicable to relatively dense graphs, such as those considered in Dirac’s Theorem. Establishing sufficient conditions for Hamiltonicity in sparse graphs is known to be much more challenging. Sparse random graphs are natural objects to consider as starting points, and they have attracted a lot of attention in the past few decades. In 1976, Pósa [Reference Pósa34] proved that for some large constant $C$, the binomial random graph model $G(n, p)$ with $p \geq C\log n/n$ is typically Hamiltonian. In the following few years, Korshunov [Reference Korshunov24] refined Pósa’s result, and in 1983, Bollobás [Reference Bollobás5], and independently Komlós and Szemerédi [Reference Komlós and Szemerédi23] showed a more precise threshold for Hamiltonicity. Their results demonstrate that if $p = (\log n + \log \log n + \omega (1))/n$, then the probability of the random graph $G(n, p)$ being Hamiltonian tends to 1 (we say such an event happens with high probability, or whp for brevity).

Following the fruitful study of random graphs, it is natural to explore families of deterministic graphs that behave in some ways like random graphs; these are sometimes called pseudorandom graphs. A natural candidate to begin with is the following: suppose that we sample a random graph $G\sim G(n,p)$, and then allow an adversary to delete a constant fraction of the edges incident to each vertex. The resulting subgraph $H\subseteq G$ loses all its randomness. Thus, we cannot use, for example, a multiple exposure trick and concentration inequalities, which were heavily used in the proof of Hamiltonicity of a typical $G\sim G(n,p)$. Under such a model, one of the central problems to consider is quantifying the local resilience of the random graph $G$ with respect to Hamiltonicity. In [Reference Sudakov and Vu36], Sudakov and Vu initiated the study of local resilience of random graphs, and they showed that for any ${\varepsilon } \gt 0$, if $p$ is somewhat greater than $\log ^4n/n$, then $G(n, p)$ typically has the property that every spanning subgraph with a minimum degree of at least $(1 + {\varepsilon })np/2$ contains a Hamilton cycle. They also conjectured that this remains true as long as $p=(\log n+\omega (1))/n$, which was solved by Lee and Sudakov [Reference Lee and Sudakov29]. Later, an even stronger result, the so-called “hitting-time” statement, was shown by Nenadov, Steger and Trujić [Reference Nenadov, Steger and Trujić32], and Montgomery [Reference Montgomery30], independently.

Exploring the properties of pseudorandom graphs, which has attracted many researchers in the area, is much more challenging than studying random graphs. The first quantitative notion of pseudorandom graphs was introduced by Thomason [Reference Thomason37, Reference Thomason38]. He initiated the study of pseudorandom graphs by introducing the so-called $(p,\lambda )$-jumbled graphs, which satisfy $|e(U)-p\binom {|U|}{2}|\leq \lambda |U|$ for every vertex subset $U\subseteq V$. Since then, there has been a great deal of investigation into different types and various properties of pseudorandom graphs, for example, [Reference Allen, Böttcher, Hàn, Kohayakawa and Person1, Reference Conlon, Fox and Zhao8, Reference Hàn, Han and Morris15, Reference Han, Kohayakawa, Morris and Person16, Reference Kohayakawa, Rödl, Schacht, Sissokho and Skokan22, Reference Nenadov31]. This remains a very active area of research in graph theory.
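As a small illustration of the jumbledness condition, the following sketch computes by brute force the smallest $\lambda$ for which a given graph is $(p,\lambda )$-jumbled. The function name `min_jumbledness` and the two toy graphs are our own choices for illustration, and the exhaustive search over all vertex subsets is only feasible for very small graphs.

```python
from itertools import combinations
from math import comb

import numpy as np


def min_jumbledness(A: np.ndarray, p: float) -> float:
    """Smallest lam with |e(U) - p*C(|U|,2)| <= lam*|U| for every nonempty U."""
    n = A.shape[0]
    worst = 0.0
    for size in range(1, n + 1):
        for U in combinations(range(n), size):
            idx = np.array(U)
            # Each edge inside U appears twice in the symmetric submatrix.
            e_U = A[np.ix_(idx, idx)].sum() / 2
            worst = max(worst, abs(e_U - p * comb(size, 2)) / size)
    return worst


# Hypothetical toy inputs: the complete graph K_5 and the 5-cycle C_5.
K5 = np.ones((5, 5), dtype=int) - np.eye(5, dtype=int)
C5 = np.array([[int(abs(i - j) in (1, 4)) for j in range(5)] for i in range(5)])

lam_K5 = min_jumbledness(K5, p=1.0)  # every U spans exactly C(|U|,2) edges
lam_C5 = min_jumbledness(C5, p=0.5)  # C_5 has 5 of the 10 possible edges
print(lam_K5, lam_C5)
```

For $K_5$ with $p=1$ the discrepancy vanishes on every subset, so the smallest admissible $\lambda$ is $0$; for $C_5$ with $p=1/2$ the worst case is a pair of vertices.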

One special class of pseudorandom graphs which has been studied extensively is the class of spectral expander graphs, also known as $(n,d,\lambda )$-graphs. Given a graph $G$ on vertex set $V=\{v_1,\ldots, v_n\}$, its adjacency matrix $A\,:\!=\,A(G)$ is an $n\times n$, $0/1$ matrix, defined by $A_{ij}=1$ if and only if $v_iv_j\in E(G)$. Let $s_1(A)\geq s_2(A)\geq \cdots \geq s_n(A)$ be the singular values of $A$ (see Definition 3.3). Observe that for a $d$-regular graph $G$, we always have $s_1(G)\,:\!=\,s_1(A(G))=d$, so the largest singular value is not a very interesting quantity. We say that $G$ is an $(n,d,\lambda )$-graph if it is a $d$-regular graph on $n$ vertices with $s_2(G) \leq \lambda$.
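To make the definition concrete, here is a minimal numerical sketch that computes $s_1$ and $s_2$ for one small regular graph. The Petersen graph is our own choice of example (it does not appear in the text), and the helper name `petersen_adjacency` is hypothetical.

```python
import numpy as np


def petersen_adjacency() -> np.ndarray:
    """Adjacency matrix of the Petersen graph (10 vertices, 3-regular)."""
    A = np.zeros((10, 10), dtype=int)
    for i in range(5):
        edges = [
            (i, (i + 1) % 5),          # outer 5-cycle
            (i, i + 5),                # spoke to the inner vertex
            (i + 5, (i + 2) % 5 + 5),  # inner pentagram
        ]
        for u, v in edges:
            A[u, v] = A[v, u] = 1
    return A


A = petersen_adjacency()
degrees = A.sum(axis=1)
# np.linalg.svd returns the singular values in nonincreasing order.
s = np.linalg.svd(A, compute_uv=False)
print("degrees:", set(degrees.tolist()))
print("s_1 =", round(s[0], 6), " s_2 =", round(s[1], 6))
```

The eigenvalues of the Petersen graph are $3$, $1$ and $-2$, so its singular values are $3$, $2$ and $1$; in the terminology above it is a $(10,3,2)$-graph.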

The celebrated Expander Mixing Lemma (see, e.g. Chapter 9 in [Reference Alon and Spencer3]) provides a powerful formula to estimate the edge distribution of an $(n, d, \lambda )$-graph, which suggests that $(n,d,\lambda )$-graphs are indeed special cases of jumbled graphs, and that $G$ has stronger expansion properties for smaller values of $\lambda$. Thus, it is natural to seek the best possible condition on the spectral gap (defined as the ratio $\lambda /d$) which guarantees certain properties. Examples of such results can be found e.g. in [Reference Alon, Krivelevich and Sudakov2, Reference Balogh, Csaba, Pei and Samotij4, Reference Han and Yang17, Reference Pavez-Signé33]. For more on $(n,d,\lambda )$-graphs and their many applications, we refer the reader to the surveys of Hoory, Linial and Wigderson [Reference Hoory, Linial and Wigderson19], Krivelevich and Sudakov [Reference Krivelevich and Sudakov26], the book of Brouwer and Haemers [Reference Brouwer and Haemers6], and the references therein.

Hamiltonicity of $(n,d,\lambda )$-graphs was first studied by Krivelevich and Sudakov [Reference Krivelevich and Sudakov25], who proved a sufficient condition on the spectral gap forcing Hamiltonicity. More precisely, they showed that for sufficiently large $n$, any $(n,d,\lambda )$-graph with

has a Hamilton cycle. In the same paper, Krivelevich and Sudakov made the following conjecture.

Conjecture 1.1.

There exists an absolute constant $c \gt 0$ such that for any sufficiently large integer $n$, any $(n,d,\lambda )$-graph with $\lambda /d\leq c$ contains a Hamilton cycle.

Although there are numerous related results in this direction, there had been no improvement on the original bound until the recent result given by Glock, Correia, and Sudakov [Reference Glock, Correia and Sudakov14]. In their paper, they improved the above result in two different ways: $(i)$ they demonstrated that the spectral gap $\lambda /d\leq c/(\log n)^{1/3}$ already guarantees Hamiltonicity; $(ii)$ they confirmed Conjecture 1.1 in the case where $d\geq n^{\alpha }$ for every fixed constant $\alpha \gt 0$.

In this paper, we improve the second result in [Reference Glock, Correia and Sudakov14].

Theorem 1.2.

There exists an absolute constant $c \gt 0$ such that for any sufficiently large integer $n$, any $(n,d,\lambda )$-graph with $\lambda /d\leq c$ and $d \geq \log ^{6}n$ contains a Hamilton cycle.

Our proof works for $c=\frac {1}{70000}$, although we made no attempt to optimize this constant.

It is worth mentioning that Draganić, Montgomery, Correia, Pokrovskiy, and Sudakov independently verified Conjecture 1.1 in [Reference Draganić, Montgomery, Correia, Pokrovskiy and Sudakov10], and in particular, they proved a stronger statement than our main result. Their approach relies on extensions of the Pósa rotation-extension technique and sorting networks, and utilizes a result in [Reference Hyde, Morrison, Müyesser and Pavez-Signé20] to obtain a linking structure. In contrast, while our work utilizes a previous result on closing vertex-disjoint paths into a cycle, it is primarily based on new machinery introduced in this paper, as summarized in Theorem 6.2. Specifically, we show that the spectral gap of a random induced subgraph of an $(n,d,\lambda )$-graph is typically bounded above by the spectral gap of the original graph, up to a constant factor.

To achieve this, we utilize results on norms of principal submatrices, such as the Rudelson–Vershynin Theorem [Reference Rudelson and Vershynin35] (see Section 6), and demonstrate that, with probability $1 - n^{-\Theta (1)}$, the spectral gap of the induced subgraph remains $O(\lambda /d)$ for a sufficiently large random vertex subset. We believe that this result will have further applications.

The paper is organized as follows. In Section 2, we provide an outline of the proof. Section 3 contains the proof of the expander mixing lemma for matrices, followed by an analysis of the special case for almost $(n,d,\lambda )$-graphs in Section 4. In Section 5, we introduce the extendability property and reference a useful result from [Reference Hyde, Morrison, Müyesser and Pavez-Signé20], which ensures that vertex-disjoint paths can be used to connect designated pairs of vertices in $(n,d,\lambda )$-graphs. Our key lemma, which concerns the second singular value of a random induced subgraph of an $(n,d,\lambda )$-graph, is presented in Section 6. Finally, in Section 7, we prove our main result, Theorem 1.2, along with a generalized version, Theorem 7.1, for “almost” $(n,d,\lambda )$-graphs. For the reader’s convenience, we also include some standard tools from linear algebra and several technical proofs in the Appendix.

1.1 Notation

For a graph $G=(V,E)$, let $e(G)\,:\!=\,|E(G)|$. We mostly assume that $V=[n]$ for simplicity. We call a subset $A\subseteq V$ of size $m$ an $m$-set, and we denote the family of all $m$-sets of $V$ by $\binom {V}{m}$. For two vertex sets $A, B \subseteq V (G)$, we define $E_G(A,B)$ to be the set of all edges $xy\in E(G)$ with $x\in A$ and $y\in B$, and set $e_G(A,B)\,:\!=\,|E_G(A,B)|$. For two disjoint subsets $X,Y\subseteq V$, we write $G[X,Y]$ to denote the induced bipartite subgraph of $G$ with parts $X$ and $Y$. Moreover, we define $N_G(v)$ to be the neighbourhood of a vertex $v$, and define $N_G(A) \,:\!=\, \bigcup _{v\in A} N_G(v) \setminus A$ for a subset $A\subseteq V$. We write $N_G(A, B) = N_G(A) \cap B$ and for a vertex $v$, let $N_G(v, B) = N_G(v) \cap B$. We also write $\deg _G(v)\,:\!=\,|N_G(v)|$ and $\deg _G(v,B)\,:\!=\,|N_G(v,B)|$. Finally, let $\delta (G)$ be the minimum degree of $G$ and let $\Delta (G)$ be the maximum degree of $G$.

The adjacency matrix of

![]() $G$

, denoted by

$G$

, denoted by

![]() $A\,:\!=\,A(G)$

, is a

$A\,:\!=\,A(G)$

, is a

![]() $0/1$

,

$0/1$

,

![]() $n\times n$

matrix such that

$n\times n$

matrix such that

![]() $A_{i,j}=1$

if and only if

$A_{i,j}=1$

if and only if

![]() $ij\in E(G)$

. Moreover, given any subset

$ij\in E(G)$

. Moreover, given any subset

![]() $X\subseteq V$

, its characteristic vector

$X\subseteq V$

, its characteristic vector

![]() $\unicode {x1D7D9}_X\in \mathbb {R}^n$

is defined by

$\unicode {x1D7D9}_X\in \mathbb {R}^n$

is defined by

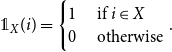

\begin{equation*}\unicode {x1D7D9}_X(i)=\begin{cases} 1 &\textrm { if } i\in X\\ 0 &\textrm { otherwise} \end{cases}.\end{equation*}

\begin{equation*}\unicode {x1D7D9}_X(i)=\begin{cases} 1 &\textrm { if } i\in X\\ 0 &\textrm { otherwise} \end{cases}.\end{equation*}

We will often omit the subscript to ease the notation, unless otherwise stated. Since all of our calculations are asymptotic, we will often omit floor and ceiling functions whenever they are not crucial.

2. Proof outline

Our strategy for finding a Hamilton cycle in an $(n,d,\lambda )$-graph $G$ consists of two main phases. First, taking two disjoint vertex subsets $X,Y\subseteq V(G)$ of the same size $\Theta (n/\log ^4n)$, we find a subgraph $S_{res}\subseteq G$ with $|V(S_{res})|=\Theta (n/\log n)$ covering $X$ and $Y$, using a recent result (see Lemma 5.3 later) of Hyde, Morrison, Müyesser and Pavez-Signé [Reference Hyde, Morrison, Müyesser and Pavez-Signé20]. This subgraph includes various path factors for later use, where each path has one endpoint in $X$ and the other in $Y$. Then, we cover $V(G)\setminus (V(S_{res})\setminus (X\cup Y))$ by vertex-disjoint paths, with one endpoint in $X$ and the other in $Y$. Now, we can close the paths into a cycle by using one path factor in the prepared subgraph $S_{res}$. Since all the vertices are used and passed through exactly once, the cycle is indeed a Hamilton cycle.

We now explain our method in more detail. First, we take two random disjoint subsets $X,Y\subseteq V(G)$ of equal size $\Theta (n/\log ^4 n)$. Using Proposition 5.2, we can deduce that whp, the empty graph $I(X\cup Y)$ is “extendable” (see Definition 5.1), which further produces a crucial subgraph $S_{res}\subseteq G$ on $\Theta (n/\log n)$ vertices such that $X\cup Y\subseteq V(S_{res})$ (see Lemma 5.3). The powerful property of $S_{res}$ that we will use is the following: for any ordering of the pairs in $(X,Y)$, there exists a path factor in $S_{res}$ connecting such pairs. This property will be used to connect the paths with endpoints in $X$ and $Y$ obtained in the second phase.

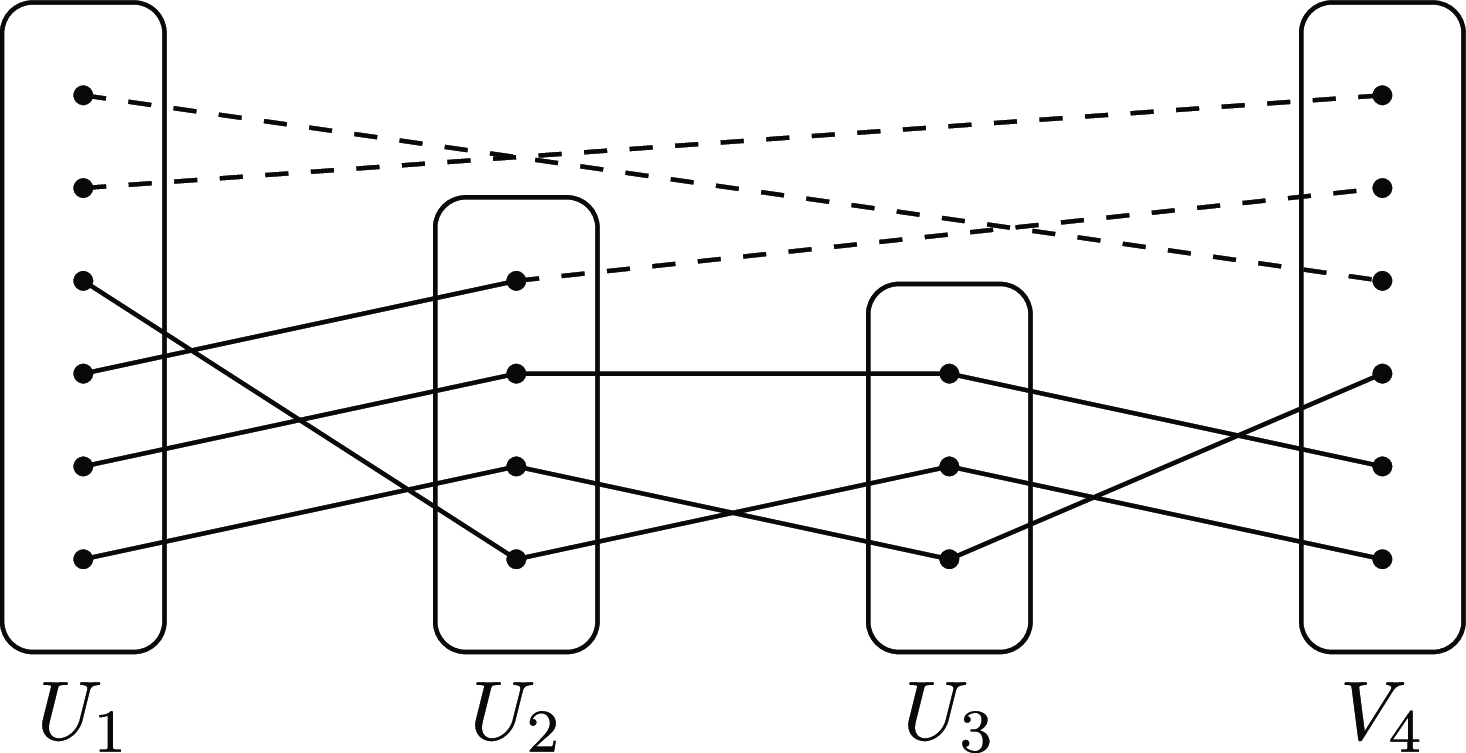

Next, since $|V(S_{res})|=\Theta (n/\log n)$, we can utilize its randomness in a way so that after removing it, the graph is still pseudorandom. Thus, we randomly partition $V(G)\setminus V(S_{res})$ into $|X|$-sets; if we can find a perfect matching between every two consecutive parts, we will obtain the desired vertex-disjoint paths $P_i$ connecting $x_i\in X$ and $y_i\in Y$. Now, using the path factor in $S_{res}$ connecting the pairs $(x_i,y_i)$, we can concatenate all the paths $P_i$ into a cycle.
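The matching step of the second phase can be sketched on a toy instance as follows. This is only an illustration under our own assumptions, not the construction used in the proofs: we take a Paley graph as a stand-in pseudorandom graph, ignore $S_{res}$ entirely, let the first and last parts play the roles of $X$ and $Y$, and simply retry with a fresh random partition if some matching fails. All function names are hypothetical.

```python
import numpy as np


def paley_adjacency(q: int) -> np.ndarray:
    """Paley graph on Z_q, q prime with q = 1 (mod 4): i ~ j iff i - j is a nonzero square."""
    squares = {(x * x) % q for x in range(1, q)}
    return np.array([[int((i - j) % q in squares) for j in range(q)] for i in range(q)])


def perfect_matching(A, left, right):
    """Kuhn's augmenting-path algorithm; returns {left: right} or None if no perfect matching."""
    match_of_right = {}

    def try_augment(u, seen):
        for v in right:
            if A[u, v] and v not in seen:
                seen.add(v)
                if v not in match_of_right or try_augment(match_of_right[v], seen):
                    match_of_right[v] = u
                    return True
        return False

    for u in left:
        if not try_augment(u, set()):
            return None
    return {u: v for v, u in match_of_right.items()}


def paths_through_parts(A, vertices, num_parts, rng, max_tries=20):
    """Randomly split `vertices` into equal parts and chain perfect matchings
    between consecutive parts into vertex-disjoint paths."""
    size = len(vertices) // num_parts
    for _ in range(max_tries):
        shuffled = [int(v) for v in rng.permutation(vertices)]
        parts = [shuffled[k * size:(k + 1) * size] for k in range(num_parts)]
        matchings = [perfect_matching(A, parts[k], parts[k + 1])
                     for k in range(num_parts - 1)]
        if all(m is not None for m in matchings):
            paths = []
            for start in parts[0]:
                path = [start]
                for m in matchings:
                    path.append(m[path[-1]])  # follow the matching edge into the next part
                paths.append(path)
            return paths
    return None


rng = np.random.default_rng(0)
A = paley_adjacency(101)
# Work on 100 of the 101 vertices so that the parts have equal size.
paths = paths_through_parts(A, list(range(1, 101)), num_parts=4, rng=rng)
print(len(paths), "paths, first one:", paths[0])
```

Each returned path visits one vertex per part, so together the paths cover all 100 chosen vertices exactly once; closing them into a single cycle is the job of the path factor in $S_{res}$, which this sketch does not model.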

It remains to show that, whp, there is a perfect matching between two random disjoint subsets of equal size in an expander graph. To prove this, we demonstrate that the bipartite subgraph induced by every two consecutive parts is a good expander. Equivalently, it suffices to study the spectral properties of random induced subgraphs of $G$, and this is the main contribution of this paper. It is crucial to remark that although there are some previous results on randomly selecting edges, e.g. [Reference Chung and Horn7], we randomly pick vertex subsets instead of picking edges. Using results on norms of principal submatrices, e.g. the Rudelson–Vershynin theorem in [Reference Rudelson and Vershynin35], we show that with probability at least $1-n^{-\Theta (1)}$, the spectral gap of a random induced subgraph of an $(n,d,\lambda )$-graph is still $O(\lambda /d)$ (see Theorem 6.2).
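The phenomenon can be explored numerically with a short experiment. The sketch below is our own and makes several assumptions: it uses a Paley graph as the host graph, a uniformly random vertex subset of fixed size, and the ratio $s_2/s_1$ of the unnormalized adjacency matrix as a crude proxy for the spectral gap. The precise normalization used in Theorem 6.2 is not stated in this section, so this is not a verification of that theorem.

```python
import numpy as np


def paley_adjacency(q: int) -> np.ndarray:
    """Paley graph on Z_q, q prime with q = 1 (mod 4): i ~ j iff i - j is a nonzero square."""
    squares = {(x * x) % q for x in range(1, q)}
    return np.array([[int((i - j) % q in squares) for j in range(q)] for i in range(q)])


def singular_ratio(A: np.ndarray) -> float:
    """Ratio s_2 / s_1 of the two largest singular values."""
    s = np.linalg.svd(A, compute_uv=False)
    return s[1] / s[0]


q = 101
A = paley_adjacency(q)
rng = np.random.default_rng(0)
X = rng.choice(q, size=50, replace=False)  # random vertex subset

ratio_full = singular_ratio(A)                # equals lambda/d for the host graph
ratio_sub = singular_ratio(A[np.ix_(X, X)])   # proxy for the induced subgraph
print("host graph:", ratio_full)
print("induced subgraph:", ratio_sub)
```

For the Paley graph of order $q$ one has $d=(q-1)/2$ and $s_2=(1+\sqrt {q})/2$, so the host ratio is $1/(\sqrt {q}-1)$; the induced ratio depends on the sampled subset.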

3. Expander mixing lemma for matrices

One of the most useful tools in spectral graph theory is the expander mixing lemma, which asserts that an $(n,d,\lambda )$-graph is an expander (see, e.g., [Reference Hoory, Linial and Wigderson19]).

Theorem 3.1 (Expander mixing lemma). Let

![]() $G=(V,E)$

be an

$G=(V,E)$

be an

![]() $(n,d,\lambda )$

-graph. Then, for any two subsets

$(n,d,\lambda )$

-graph. Then, for any two subsets

![]() $S,T\subseteq V$

, we have

$S,T\subseteq V$

, we have

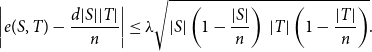

\begin{equation*} \left |e(S,T)-\frac {d|S||T|}{n}\right | \leq \lambda \sqrt {|S| \left ( 1-\frac {|S|}{n} \right ) \, |T| \left ( 1-\frac {|T|}{n} \right )}. \end{equation*}

\begin{equation*} \left |e(S,T)-\frac {d|S||T|}{n}\right | \leq \lambda \sqrt {|S| \left ( 1-\frac {|S|}{n} \right ) \, |T| \left ( 1-\frac {|T|}{n} \right )}. \end{equation*}
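The inequality is easy to test numerically. The following sketch samples random pairs $(S,T)$ in a Paley graph on 13 vertices and counts violations, taking $\lambda = s_2$. Two assumptions are ours: the example graph, and the convention that $e(S,T)$ counts ordered pairs $(s,t)\in S\times T$ with $st\in E$, i.e. $e(S,T)=\unicode {x1D7D9}_S^{\mathsf {T}} A \unicode {x1D7D9}_T$, so that edges inside $S\cap T$ are counted twice.

```python
import numpy as np


def paley_adjacency(q: int) -> np.ndarray:
    """Paley graph on Z_q, q prime with q = 1 (mod 4): i ~ j iff i - j is a nonzero square."""
    squares = {(x * x) % q for x in range(1, q)}
    return np.array([[int((i - j) % q in squares) for j in range(q)] for i in range(q)])


def mixing_sides(A, S, T, lam):
    """Return (lhs, rhs) of the expander mixing inequality for boolean masks S and T."""
    n = A.shape[0]
    d = A[0].sum()
    e_ST = S.astype(float) @ A @ T.astype(float)  # ordered pairs (s, t) with st an edge
    s, t = S.sum(), T.sum()
    lhs = abs(e_ST - d * s * t / n)
    rhs = lam * np.sqrt(s * (1 - s / n) * t * (1 - t / n))
    return lhs, rhs


n = 13
A = paley_adjacency(n)
lam = np.linalg.svd(A, compute_uv=False)[1]  # second largest singular value

rng = np.random.default_rng(0)
violations = 0
for _ in range(2000):
    S = rng.random(n) < 0.5
    T = rng.random(n) < 0.5
    lhs, rhs = mixing_sides(A, S, T, lam)
    violations += int(lhs > rhs + 1e-9)  # small tolerance for rounding

print("lambda =", lam, " violations:", violations)
```

Sampling does not prove the lemma, of course; it only confirms that no sampled pair violates the bound.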

We will need a more general version of the expander mixing lemma which can be applied to non-regular graphs, digraphs, and even to general $m \times n$ matrices $A$. To state such a general result, it is convenient to normalize $A$ in the following way:

Definition 3.2 (Normalized matrix). Let $A$ be an $m\times n$ matrix. Let $L=L(A)$ be the $m\times m$ diagonal matrix with $L_{i,i}=\sum _{j}A_{i,j}$ for all $i$ (that is, the sum of entries in the $i$th row), and $R=R(A)$ be the $n\times n$ diagonal matrix with $R_{j,j}=\sum _{i}A_{i,j}$ (that is, the sum of entries in the $j$th column). The normalized matrix of the matrix $A$ is defined as

\begin{equation*}\bar {A}\,:\!=\,L^{-1/2}AR^{-1/2}.\end{equation*}

In particular, if $A$ is a symmetric $n \times n$ matrix, then the diagonal matrix $L(A)=R(A)=: D(A)$ is called the degree matrix of $A$.
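A minimal sketch of the normalization follows. It assumes the form $\bar {A}=L^{-1/2}AR^{-1/2}$, which is the one consistent with the sum $\sum _{i,j}A_{i,j}x_iy_j/\sqrt {L_{i,i}R_{j,j}}$ appearing in the proof of Observation 3.5, and it assumes that all row and column sums are positive. The function name `normalize` and both example matrices are ours.

```python
import numpy as np


def normalize(A) -> np.ndarray:
    """Return L^{-1/2} A R^{-1/2}, assuming every row sum and column sum of A is positive."""
    A = np.asarray(A, dtype=float)
    row_sums = A.sum(axis=1)  # diagonal of L
    col_sums = A.sum(axis=0)  # diagonal of R
    # Entry (i, j) becomes A[i, j] / sqrt(L[i, i] * R[j, j]).
    return A / np.sqrt(np.outer(row_sums, col_sums))


# A rectangular example: row sums (3, 4), column sums (1, 3, 3).
B = np.array([[1.0, 2.0, 0.0],
              [0.0, 1.0, 3.0]])
B_bar = normalize(B)

# A 2-regular example: the 4-cycle, for which the normalization is just A / d.
C4 = np.array([[0, 1, 0, 1],
               [1, 0, 1, 0],
               [0, 1, 0, 1],
               [1, 0, 1, 0]])
C4_bar = normalize(C4)

print(B_bar)
print(C4_bar)
```

For the adjacency matrix of a $d$-regular graph, $L=R=dI$, so the normalized matrix is $A/d$, as the second example shows.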

Since the notion of eigenvalues is undefined for non-square matrices, it is convenient for us to work with singular values, which are defined for all matrices as follows.

Definition 3.3 (Singular values). Let $A$ be a real $m\times n$ matrix. The singular values of $A$ are the nonnegative square roots of the eigenvalues of the symmetric positive semidefinite matrix $A^{\mathsf {T}} A$. We will always assume that $s_k(A)$ is the $k$th singular value of $A$ in nonincreasing order. In particular, the singular values and the eigenvalues of a symmetric positive semidefinite matrix $A$ coincide.
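The definition can be checked against a standard numerical routine. In the sketch below (our own illustration, with an arbitrary random test matrix), the singular values returned by NumPy are compared with the square roots of the eigenvalues of $A^{\mathsf {T}}A$, and the final remark of the definition is checked on the positive semidefinite matrix $AA^{\mathsf {T}}$.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 5))  # arbitrary real 3 x 5 test matrix

# NumPy returns min(m, n) singular values in nonincreasing order.
sv = np.linalg.svd(A, compute_uv=False)

# Eigenvalues of the 5 x 5 matrix A^T A, sorted in nonincreasing order.
# Two of them are zero up to rounding; clip tiny negatives before the square root.
gram_eigs = np.linalg.eigvalsh(A.T @ A)[::-1]
from_gram = np.sqrt(np.clip(gram_eigs, 0.0, None))[: len(sv)]

# For a symmetric positive semidefinite matrix, singular values and eigenvalues agree.
M = A @ A.T
sv_M = np.linalg.svd(M, compute_uv=False)
eig_M = np.linalg.eigvalsh(M)[::-1]

print(sv, from_gram)
print(sv_M, eig_M)
```

Note that under Definition 3.3 an $m\times n$ matrix has $n$ singular values, whereas the numerical routine reports only the $\min (m,n)$ largest; the remaining ones are zero.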

We are now ready to state a more general version of the expander mixing lemma.

Theorem 3.4 (Expander mixing lemma for matrices). Let

![]() $A$

be an

$A$

be an

![]() $m\times n$

matrix with nonnegative entries, and let

$m\times n$

matrix with nonnegative entries, and let

![]() $\bar {A}$

be the normalized matrix of

$\bar {A}$

be the normalized matrix of

![]() $A$

. Then, for any two subsets

$A$

. Then, for any two subsets

![]() $S\subseteq [m]$

and

$S\subseteq [m]$

and

![]() $T\subseteq [n]$

, we have

$T\subseteq [n]$

, we have

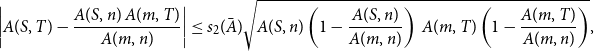

\begin{equation*}\left |A(S,T)-\frac {A(S,n) \, A(m,T)}{A(m,n)}\right | \leq s_2(\bar {A})\sqrt {A(S,n)\left (1-\frac {A(S,n)}{A(m,n)}\right ) \, A(m,T) \left (1-\frac {A(m,T)}{A(m,n)}\right )},\end{equation*}

\begin{equation*}\left |A(S,T)-\frac {A(S,n) \, A(m,T)}{A(m,n)}\right | \leq s_2(\bar {A})\sqrt {A(S,n)\left (1-\frac {A(S,n)}{A(m,n)}\right ) \, A(m,T) \left (1-\frac {A(m,T)}{A(m,n)}\right )},\end{equation*}

where we adopt the notation

![]() $A(S,T) \,:\!=\, \sum _{i \in S, j \in T} A_{i,j}$

, and we abbreviate

$A(S,T) \,:\!=\, \sum _{i \in S, j \in T} A_{i,j}$

, and we abbreviate

![]() $A(S,n) \,:\!=\, A(S,[n])$

,

$A(S,n) \,:\!=\, A(S,[n])$

,

![]() $A(m,T) \,:\!=\, A([m],T)$

, and

$A(m,T) \,:\!=\, A([m],T)$

, and

![]() $A(m,n) \,:\!=\, A([m],[n])$

.

$A(m,n) \,:\!=\, A([m],[n])$

.
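As a sanity check of the matrix version, the sketch below samples random index sets for a random positive $6\times 8$ matrix and counts violations of the inequality. The test matrix, the helper names, and the normalization $\bar {A}=L^{-1/2}AR^{-1/2}$ (with all row and column sums positive) are assumptions made for this illustration.

```python
import numpy as np


def normalize(A: np.ndarray) -> np.ndarray:
    """Return L^{-1/2} A R^{-1/2}, assuming all row and column sums are positive."""
    return A / np.sqrt(np.outer(A.sum(axis=1), A.sum(axis=0)))


def matrix_mixing_sides(A, S, T, s2):
    """Return (lhs, rhs) of the matrix mixing inequality for boolean masks S (rows), T (columns)."""
    a = A.sum()                    # A(m, n)
    a_S = A[S, :].sum()            # A(S, n)
    a_T = A[:, T].sum()            # A(m, T)
    a_ST = A[np.ix_(S, T)].sum()   # A(S, T)
    lhs = abs(a_ST - a_S * a_T / a)
    # Clip at zero to guard against tiny negative values caused by rounding.
    radicand = max(a_S * (1 - a_S / a) * a_T * (1 - a_T / a), 0.0)
    return lhs, s2 * np.sqrt(radicand)


rng = np.random.default_rng(1)
m, n = 6, 8
A = rng.random((m, n)) + 0.1  # strictly positive entries
s2 = np.linalg.svd(normalize(A), compute_uv=False)[1]

violations = 0
for _ in range(2000):
    S = rng.random(m) < 0.5
    T = rng.random(n) < 0.5
    lhs, rhs = matrix_mixing_sides(A, S, T, s2)
    violations += int(lhs > rhs + 1e-9)

print("s_2 of normalized matrix:", s2, " violations:", violations)
```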

Observe that Theorem 3.4 trivially implies Theorem 3.1, since the adjacency matrix of a $d$-regular graph satisfies

\begin{equation*}\bar {A}=\frac {1}{d}A,\quad A(S,T)=e(S,T),\quad A(S,n)=d|S|,\quad A(m,T)=d|T|,\quad A(m,n)=dn.\end{equation*}

The proof of Theorem 3.4 is almost identical to the standard proof of Theorem 3.1 that can be found e.g. as Proposition 4.3.2 in [Reference Brouwer and Haemers6]. Since we could not find a reference for this specific statement and its proof, we include the proof of Theorem 3.4 for the convenience of the reader, without claiming any originality. It is based on the following crucial observation.

Observation 3.5.

Let $A$ be an $m \times n$ matrix with nonnegative entries. Let $a \,:\!=\, A(m,n)$ and let $\unicode {x1D7D9}_n$ denote the vector in ${\mathbb {R}}^n$ all of whose coordinates are equal to $1$. Consider the vectors $\mathbf {u}_1 \,:\!=\, a^{-1/2} L^{1/2} \unicode {x1D7D9}_m$ and ${\mathbf {v}}_1 \,:\!=\, a^{-1/2} R^{1/2} \unicode {x1D7D9}_n$. Then:

1. both $\mathbf {u}_1$ and ${\mathbf {v}}_1$ are unit vectors;

2. $\bar {A} {\mathbf {v}}_1=\mathbf {u}_1$;

3. $s_1(\bar {A}) = \|{\bar {A}}\| = \mathbf {u}_1^{\mathsf {T}} \bar {A} {\mathbf {v}}_1 = 1$.

Proof.

The first two parts readily follow from the definitions of

![]() $a$

,

$a$

,

![]() $L$

,

$L$

,

![]() $R$

, and

$R$

, and

![]() $\bar {A}$

. As for the third part, the equation

$\bar {A}$

. As for the third part, the equation

![]() $s_1(\bar {A}) = \|{\bar {A}}\|$

holds for any matrix. Let us show that

$s_1(\bar {A}) = \|{\bar {A}}\|$

holds for any matrix. Let us show that

![]() $\|\bar {A}\|\leq 1$

. For every

$\|\bar {A}\|\leq 1$

. For every

![]() $\|\mathbf {x}\|_2=\|\mathbf {y}\|_2=1$

, we have

$\|\mathbf {x}\|_2=\|\mathbf {y}\|_2=1$

, we have

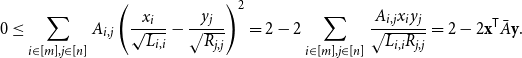

\begin{equation*}0\leq \sum _{i\in [m], j \in [n]}A_{i,j}\left (\frac {x_i}{\sqrt {L_{i,i}}}-\frac {y_j}{\sqrt {R_{j,j}}}\right )^2=2-2\sum _{i\in [m], j \in [n]}\frac {A_{i,j}x_iy_j}{\sqrt {L_{i,i}R_{j,j}}}=2-2\mathbf {x}^{\mathsf {T}} \bar {A} \mathbf {y}.\end{equation*}

\begin{equation*}0\leq \sum _{i\in [m], j \in [n]}A_{i,j}\left (\frac {x_i}{\sqrt {L_{i,i}}}-\frac {y_j}{\sqrt {R_{j,j}}}\right )^2=2-2\sum _{i\in [m], j \in [n]}\frac {A_{i,j}x_iy_j}{\sqrt {L_{i,i}R_{j,j}}}=2-2\mathbf {x}^{\mathsf {T}} \bar {A} \mathbf {y}.\end{equation*}

This implies that

![]() $\mathbf {x}^{\mathsf {T}} \bar {A} \mathbf {y}\leq 1$

for all unit vectors

$\mathbf {x}^{\mathsf {T}} \bar {A} \mathbf {y}\leq 1$

for all unit vectors

![]() $\mathbf {x}$

and

$\mathbf {x}$

and

![]() $\mathbf {y}$

, which yields

$\mathbf {y}$

, which yields

![]() $\|\bar {A}\|\leq 1$

.

$\|\bar {A}\|\leq 1$

.
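The middle equality in the display can be unpacked as follows. This is a sketch under the assumption, suggested by the formula for $\mathbf{x}^{\mathsf{T}} \bar{A} \mathbf{y}$ and by the definitions of $\mathbf{u}_1$ and ${\mathbf{v}}_1$, that $L$ and $R$ are the diagonal matrices of row sums $L_{i,i}=\sum_{j} A_{i,j}$ and column sums $R_{j,j}=\sum_{i} A_{i,j}$, all assumed positive. Expanding the square produces three sums:

\begin{equation*} \sum_{i,j} A_{i,j}\frac{x_i^2}{L_{i,i}} = \sum_{i} x_i^2 = 1, \qquad \sum_{i,j} A_{i,j}\frac{y_j^2}{R_{j,j}} = \sum_{j} y_j^2 = 1, \qquad \sum_{i,j} \frac{A_{i,j}x_iy_j}{\sqrt{L_{i,i}R_{j,j}}} = \mathbf{x}^{\mathsf{T}} \bar{A} \mathbf{y}. \end{equation*}

Combining them with coefficients $1$, $1$ and $-2$ gives $2-2\mathbf{x}^{\mathsf{T}} \bar{A} \mathbf{y}$, and nonnegativity of the left-hand side uses only $A_{i,j}\geq 0$.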

Moreover, by definition of $\bar{A}$, we have $\mathbf{u}_1^{\mathsf{T}} \bar{A} {\mathbf{v}}_1=1$. Therefore, by definition of the operator norm, it follows that $\|{\bar{A}}\| \ge 1$. The observation is proved.
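The first two parts of Observation 3.5 are easy to check numerically. The following is a minimal sketch, assuming that $\bar{A}_{i,j} = A_{i,j}/\sqrt{L_{i,i}R_{j,j}}$ with $L_{i,i}$ and $R_{j,j}$ the row and column sums of $A$ (all positive); the function names and the example matrix are hypothetical and chosen only for illustration.

```python
import math

def normalize(A):
    """Return (A_bar, L, R): A_bar[i][j] = A[i][j] / sqrt(L[i] * R[j]),
    where L and R hold the row and column sums (assumed positive)."""
    m, n = len(A), len(A[0])
    L = [sum(A[i]) for i in range(m)]
    R = [sum(A[i][j] for i in range(m)) for j in range(n)]
    A_bar = [[A[i][j] / math.sqrt(L[i] * R[j]) for j in range(n)]
             for i in range(m)]
    return A_bar, L, R

def top_singular_pair(A):
    """Vectors u_1 = a^{-1/2} L^{1/2} 1_m and v_1 = a^{-1/2} R^{1/2} 1_n."""
    _, L, R = normalize(A)
    a = sum(L)  # total mass A(m, n)
    u1 = [math.sqrt(Li / a) for Li in L]
    v1 = [math.sqrt(Rj / a) for Rj in R]
    return u1, v1

def matvec(M, x):
    """Plain matrix-vector product."""
    return [sum(Mij * xj for Mij, xj in zip(row, x)) for row in M]

# Hypothetical 2 x 3 example with nonnegative entries.
A = [[1.0, 2.0, 0.0],
     [0.0, 3.0, 1.0]]
A_bar, _, _ = normalize(A)
u1, v1 = top_singular_pair(A)
image = matvec(A_bar, v1)  # part 2 predicts image == u1 up to rounding
bilinear = sum(u * w for u, w in zip(u1, image))  # part 3 predicts 1
```

The check covers only the identities, not the bound $\|\bar{A}\|\leq 1$, which requires the argument given in the proof.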

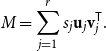

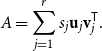

Now we are ready to prove Theorem 3.4.

Proof of Theorem 3.4. Let

![]() $r={rank}(\bar {A})$

, and let

$r={rank}(\bar {A})$

, and let

![]() $1=s_1 \geq s_2 \geq \ldots \geq s_r\gt 0$

be all the positive singular values of

$1=s_1 \geq s_2 \geq \ldots \geq s_r\gt 0$

be all the positive singular values of

![]() $\bar {A}$

in nonincreasing order. Applying the singular value decomposition theorem (TheoremA.3) combined with Observation 3.5, we can find orthonormal bases

$\bar {A}$

in nonincreasing order. Applying the singular value decomposition theorem (TheoremA.3) combined with Observation 3.5, we can find orthonormal bases

![]() $\{\mathbf {u}_1,\ldots, \mathbf {u}_m\}$

of

$\{\mathbf {u}_1,\ldots, \mathbf {u}_m\}$

of

![]() $\mathbb {R}^m$

and

$\mathbb {R}^m$

and

![]() $\{{\mathbf {v}}_1,\ldots, {\mathbf {v}}_n\}$

of

$\{{\mathbf {v}}_1,\ldots, {\mathbf {v}}_n\}$

of

![]() $\mathbb {R}^n$

with vectors

$\mathbb {R}^n$

with vectors

![]() ${\mathbf {v}}_1$

and

${\mathbf {v}}_1$

and

![]() $\mathbf {u}_1$

defined in Observation 3.5, and such that

$\mathbf {u}_1$

defined in Observation 3.5, and such that

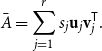

\begin{equation*} \bar {A}=\sum _{j=1}^r s_j \mathbf {u}_j {\mathbf {v}}_j^{\mathsf {T}}. \end{equation*}

\begin{equation*} \bar {A}=\sum _{j=1}^r s_j \mathbf {u}_j {\mathbf {v}}_j^{\mathsf {T}}. \end{equation*}

In particular,

![]() $\bar {A}{\mathbf {v}}_j=s_j\mathbf {u}_j$

for

$\bar {A}{\mathbf {v}}_j=s_j\mathbf {u}_j$

for

![]() $j=1,\ldots, r$

and

$j=1,\ldots, r$

and

![]() $\bar {A}{\mathbf {v}}_j=\mathbf {0}$

for

$\bar {A}{\mathbf {v}}_j=\mathbf {0}$

for

![]() $j\gt r$

. Now, let

$j\gt r$

. Now, let

![]() $S\subseteq [m]$

and

$S\subseteq [m]$

and

![]() $T\subseteq [n]$

be two arbitrary subsets. Then

$T\subseteq [n]$

be two arbitrary subsets. Then

\begin{equation*} A(S,T) = \chi_S^{\mathsf{T}} \bar{A} \chi_T, \qquad \text{where } \chi_S \,:\!=\, L^{1/2}\unicode{x1D7D9}_S,\ \ \chi_T \,:\!=\, R^{1/2}\unicode{x1D7D9}_T, \text{ and } \unicode{x1D7D9}_S,\ \unicode{x1D7D9}_T \text{ are the indicator vectors of } S \text{ and } T. \end{equation*}

Expanding both vectors as

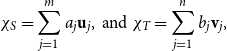

\begin{equation*}\chi _S=\sum _{j=1}^m a_j\mathbf {u}_j, \textrm { and } \chi _T=\sum _{j=1}^n b_j{\mathbf {v}}_j,\end{equation*}

\begin{equation*}\chi _S=\sum _{j=1}^m a_j\mathbf {u}_j, \textrm { and } \chi _T=\sum _{j=1}^n b_j{\mathbf {v}}_j,\end{equation*}

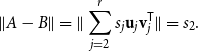

we obtain

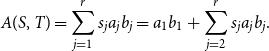

\begin{equation*} A(S,T) = \sum _{j=1}^r s_j a_j b_j = a_1 b_1 + \sum _{j=2}^r s_j a_j b_j. \end{equation*}

\begin{equation*} A(S,T) = \sum _{j=1}^r s_j a_j b_j = a_1 b_1 + \sum _{j=2}^r s_j a_j b_j. \end{equation*}

Recall from Observation 3.5 that all singular values of

![]() $\bar {A}$

are bounded by

$\bar {A}$

are bounded by

![]() $1$

, and

$1$

, and

![]() $r = {rank}(\bar {A}) \le \min \{m,n\}$

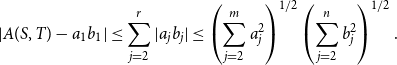

. Thus, by Cauchy–Schwarz inequality, we have

$r = {rank}(\bar {A}) \le \min \{m,n\}$

. Thus, by Cauchy–Schwarz inequality, we have

\begin{equation} |A(S,T) - a_1 b_1 | \le \sum _{j=2}^r|a_j b_j| \le \left ( \sum _{j=2}^m a_j^2 \right )^{1/2} \left ( \sum _{j=2}^n b_j^2 \right )^{1/2}. \end{equation}

\begin{equation} |A(S,T) - a_1 b_1 | \le \sum _{j=2}^r|a_j b_j| \le \left ( \sum _{j=2}^m a_j^2 \right )^{1/2} \left ( \sum _{j=2}^n b_j^2 \right )^{1/2}. \end{equation}

Now observe that $a_1=\left\langle \chi_S,\mathbf{u}_1\right\rangle = a^{-1/2} A(S,n)$ and $b_1=\left\langle \chi_T,{\mathbf{v}}_1\right\rangle = a^{-1/2} A(m,T)$, so

\begin{equation*} a_1 b_1 = \frac{A(S,n)\,A(m,T)}{a}. \end{equation*}

Moreover,

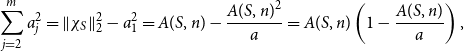

\begin{equation*} \sum _{j=2}^m a_j^2 = \|{\chi _S}\|_2^2 - a_1^2 = A(S,n) - \frac {A(S,n)^2}{a} = A(S,n)\left (1-\frac {A(S,n)}{a}\right ), \end{equation*}

\begin{equation*} \sum _{j=2}^m a_j^2 = \|{\chi _S}\|_2^2 - a_1^2 = A(S,n) - \frac {A(S,n)^2}{a} = A(S,n)\left (1-\frac {A(S,n)}{a}\right ), \end{equation*}

and similarly

\begin{equation*} \sum _{j=2}^n b_j^2 = A(m,T) \left (1-\frac {A(m,T)}{a}\right ). \end{equation*}

\begin{equation*} \sum _{j=2}^n b_j^2 = A(m,T) \left (1-\frac {A(m,T)}{a}\right ). \end{equation*}

Substitute the last three identities into (1) to complete the proof.

4. Almost regular expanders

Our argument relies on some spectral properties of random subgraphs of $(n,d,\lambda)$-graphs. Since random subgraphs are not expected to be exactly regular, we extend the definition of $(n,d,\lambda)$-graphs as follows:

Definition 4.1 (Almost $(n,d,\lambda)$-graphs). Let $d,\lambda \gt 0$ and $\gamma \in [0,1)$. We say that a graph $G$ is an $(n, (1\pm \gamma)d, \lambda)$-graph if [Footnote 1] $G$ is a graph on $n$ vertices all of whose degrees are $(1\pm \gamma)d$ and the second singular value of the adjacency matrix $A$ of $G$ satisfies $s_2(A) \le \lambda$.

Almost $(n,d,\lambda)$-graphs behave similarly to exact $(n,d,\lambda)$-graphs in many ways. If $G$ is an (exactly) $d$-regular graph with adjacency matrix $A$, its normalized adjacency matrix is obviously

\begin{equation*} \bar{A} = \frac{1}{d} A \end{equation*}

according to Definition 3.2. If $G$ is an almost $d$-regular graph, its degree matrix $D=\operatorname{diag}(d_1,\ldots, d_n)$ is close to $dI$, and we can expect that

\begin{equation*} \bar{A} \approx \frac{1}{d} A \end{equation*}

in some sense. Below we show that such an approximation indeed holds in the sense of the closeness of all singular values.

Corollary 4.2 (Singular values of almost regular graphs). Let $\gamma \in [0,1)$ and $d\gt 0$. Let $G$ be a graph all of whose vertices have degrees $(1\pm \gamma)d$. Then the adjacency matrix $A$ and the normalized adjacency matrix $\bar{A}$ of the graph $G$ satisfy

Proof. Using the chain rule for singular values (Lemma A.5), we obtain

Since $\|{D^{1/2}}\|^2 = \|{D}\| = \max_i d_i \le (1+\gamma)d$, the lower bound in Corollary 4.2 follows. The upper bound can be proved similarly.

4.1 Expander mixing lemma for almost regular expanders

Let us specialize Theorem 3.4 for almost $(n,d,\lambda)$-graphs.

Corollary 4.3 (Expander mixing lemma for almost $(n,d,\lambda)$-graphs). Let $G$ be an $(n,(1\pm \gamma)d,\lambda)$-graph. Then, for any two subsets $S,T\subseteq V(G)$, we have

where

Proof.

Let $A$ and $\bar{A}$ be the adjacency and the normalized adjacency matrices of $G$, respectively. Theorem 3.4 yields

By Corollary 4.2 and assumption, we have

Moreover, since $A$ is an adjacency matrix, we have $A(S,T)=e(S,T)$, $A(S,n) = \sum_{v\in S}\deg(v) = (1\pm \gamma)d |S|$, $A(n,T) = \sum_{v\in T}\deg(v) = (1\pm \gamma)d |T|$, $A(n,n) = \sum_{v\in V(G)}\deg(v) = (1\pm \gamma)d |V(G)| = (1\pm \gamma)dn$. Substitute all this into (3) and use the triangle inequality to complete the proof.

Sometimes all we need is at least one edge between disjoint sets of vertices $S$ and $T$. Corollary 4.3 provides a convenient sufficient condition for this:

Corollary 4.4 (At least one edge). Let $G$ be an $(n,(1\pm \gamma)d,\lambda)$-graph. Let $S,T\subseteq V(G)$ be two disjoint subsets with

Then $e(S,T)\gt 0$.

Proof. Under our assumptions, the lower bound in (2) is strictly positive.

The following statement, which is another simple corollary of the expander mixing lemma, allows us to translate minimum degree conditions into an expansion property for small sets.

Lemma 4.5.

Let $\gamma \in [0,1/20]$ be a constant, and let $\lambda \leq d/700$. Let $G$ be an $(n,(1\pm \gamma)d,\lambda)$-graph which contains subsets $S,T\subset V(G)$ such that for every $v\in S$, $d(v,T)\geq d/6$. Then, every subset $X\subset S$ of size $|X|\le \frac{4\lambda n}{d}$ satisfies $|N(X, T)|\geq \frac{d}{700\lambda}|X|$.

Proof.

Let $D=\frac{d}{700\lambda}\geq 1$. Suppose that there exists a subset $X\subset S$ of size $1\le |X|\le \frac{4\lambda n}{d}$ such that $|N(X,T)|\lt D|X|$. Let $Y=N(X,T)$. Corollary 4.3 implies that

\begin{equation*} \begin{aligned} \frac{d|X|}{6}\leq e(X,Y) &\leq \frac{(1+\gamma)^2d|X||Y|}{(1-\gamma)n}+\frac{1+\gamma}{1-\gamma}\cdot \lambda \sqrt{|X||Y|}\\ &\le 5\lambda D|X|+2\lambda \sqrt{D}|X|\\ &\leq 7\lambda D|X|\\ &=\frac{d|X|}{100}, \end{aligned}\end{equation*}

which is a contradiction. Therefore, every subset $X\subset S$ of size $|X|\le \frac{4\lambda n}{d}$ satisfies $|N(X,T)|\geq \frac{d}{700\lambda}|X|$. The proof is completed.
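For readers who want the constants in the chain above spelled out, here is one way to verify each step; this is a sketch of the arithmetic using only the hypotheses of Lemma 4.5. The first inequality $\frac{d|X|}{6}\le e(X,Y)$ holds because every $v\in X$ has at least $d/6$ neighbours in $T$, all of which lie in $Y=N(X,T)$. For the second line, substitute $|Y|\lt D|X|$, $|X|\le \frac{4\lambda n}{d}$ and $\gamma\le 1/20$:

\begin{equation*} \begin{aligned} \frac{(1+\gamma)^2 d|X||Y|}{(1-\gamma)n} &\le \frac{(1+\gamma)^2}{1-\gamma}\cdot \frac{d}{n}\cdot \frac{4\lambda n}{d}\cdot D|X| \le \frac{4\cdot (1.05)^2}{0.95}\,\lambda D|X| \lt 4.65\,\lambda D|X| \le 5\lambda D|X|,\\ \frac{1+\gamma}{1-\gamma}\cdot \lambda\sqrt{|X||Y|} &\le \frac{1.05}{0.95}\,\lambda\sqrt{D}\,|X| \lt 1.11\,\lambda\sqrt{D}\,|X| \le 2\lambda\sqrt{D}\,|X|. \end{aligned} \end{equation*}

The third line uses $\sqrt{D}\le D$, valid since $D\ge 1$, and the last line is $7\lambda D = 7\lambda\cdot \frac{d}{700\lambda} = \frac{d}{100}$. The contradiction is then $\frac{d|X|}{6}\le \frac{d|X|}{100}$ with $|X|\ge 1$ and $d\gt 0$.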

4.2 Matchings in almost regular expanders

In this section, we use the expander mixing lemma to get some corollaries for matchings in almost $(n,d,\lambda)$-graphs. For convenience, we define bipartite spectral expanders as follows.

Definition 4.6.

We say that a bipartite graph $H = (V_1 \cup V_2, E)$ is an $(n, (1 \pm \gamma)d, \lambda)$-bipartite expander if $H$ is an induced bipartite subgraph of an $(n, (1 \pm \gamma)d, \lambda)$-graph $G$ with $V(G) = V_1 \cup V_2$, and for each $i = 1, 2$ and every $v \in V_i$, the degree of $v$ in $H$ satisfies $\deg_H(v) = (1 \pm \gamma) \frac{d |V_{3-i}|}{n}$.

First, we prove the existence of perfect matchings in a bipartite spectral expander with a balanced bipartition.

Lemma 4.7.

Let $\gamma \in [0,1/6]$ be a constant, let $d\gt 0$ and let $\lambda \leq d/200$. Let $G=(V,E)$ be an $(n,(1\pm \gamma)d,\lambda)$-bipartite expander with parts $V=V_1\cup V_2$ such that $|V_1|=|V_2|$. Then $G$ contains a perfect matching.

Proof.

It is enough to verify the following condition, which is equivalent to Hall’s condition (see Theorem 3.1.11 in [Reference West42]): for all $i\in [2]$ and $S\subseteq V_i$ of size $|S|\leq |V_i|/2$, we have $|N(S)|\geq |S|$.

Suppose to the contrary that there exist $i\in [2]$ and a set $S\subseteq V_i$ of size $|S|\leq |V_i|/2$ such that the set $T\,:\!=\, N(S)$ is of size less than $|S|$. Since $G$ is an $(n,(1\pm \gamma)d,\lambda)$-bipartite expander and since $|V_1|=|V_2|=n/2$, we have that

On the other hand, using the assumption that $\gamma \leq 1/6$ and the expander mixing lemma for almost regular expanders (Corollary 4.3), we obtain that

where we also used $|T|\lt |S|\le n/4$ and $\lambda \le d/200$.

Combining these two estimates we obtain a contradiction. This completes the proof.

If a perfect matching is not required, then Corollary 4.4 allows us to use a greedy algorithm to find a matching that avoids a not-too-large subset in each part of a bipartite expander.

Lemma 4.8.

Let $G$ be an $(n,(1\pm \gamma)d,\lambda)$-graph, and let $V(G)=V_1\cup V_2$ be a partition. For each $i=1,2$, let $S_i\subseteq V_i$ be a subset of size $0\leq k_i\leq |V_i|-\frac{(1+\gamma)^2}{(1-\gamma)^3} \cdot \frac{\lambda n}{d}$. Then there exists a matching of size

\begin{equation*} \min\left\{|V_1|-k_1-\frac{(1+\gamma)^2}{(1-\gamma)^3} \cdot \frac{\lambda n}{d},\ |V_2|-k_2-\frac{(1+\gamma)^2}{(1-\gamma)^3} \cdot \frac{\lambda n}{d}\right\} \end{equation*}

in $G$ between $V_1\setminus S_1$ and $V_2\setminus S_2$.

Proof.

We find the matching between $V_1\setminus S_1$ and $V_2\setminus S_2$ greedily. Initially, let $M\,:\!=\, \emptyset$, and let $U_1\,:\!=\, V_1\setminus S_1$ and $U_2\,:\!=\, V_2\setminus S_2$. If $|U_1|\leq \frac{(1+\gamma)^2}{(1-\gamma)^3} \cdot \frac{\lambda n}{d}$ or $|U_2|\leq \frac{(1+\gamma)^2}{(1-\gamma)^3} \cdot \frac{\lambda n}{d}$, then we stop. Otherwise, by Corollary 4.4, there is an edge $e\in E(G)$ between $U_1$ and $U_2$. Let $M\,:\!=\, M\cup \{e\}$, and let $U_1\,:\!=\, U_1\setminus V(e)$ and $U_2\,:\!=\, U_2\setminus V(e)$. Continuing in this fashion, we obtain a matching $M$ of size $\min \left\{|V_1|-k_1-\frac{(1+\gamma)^2}{(1-\gamma)^3} \cdot \frac{\lambda n}{d},|V_2|-k_2-\frac{(1+\gamma)^2}{(1-\gamma)^3} \cdot \frac{\lambda n}{d}\right\}$ in $G$ between $V_1\setminus S_1$ and $V_2\setminus S_2$. The proof is completed.
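The greedy procedure in this proof can be sketched in a few lines. The code below is only an illustration under stated assumptions: the graph is given as a hypothetical adjacency dictionary `adj`, the quantity $\frac{(1+\gamma)^2}{(1-\gamma)^3} \cdot \frac{\lambda n}{d}$ is passed in as a plain number `threshold`, and the function name `greedy_matching` is ours. On a general graph the loop may stop early if no edge is left; under the hypotheses of Corollary 4.4 that case does not occur.

```python
def greedy_matching(adj, V1, V2, S1, S2, threshold):
    """Greedily match V1 \\ S1 to V2 \\ S2, stopping once either side
    has at most `threshold` unmatched vertices left."""
    U1 = set(V1) - set(S1)
    U2 = set(V2) - set(S2)
    M = []
    while len(U1) > threshold and len(U2) > threshold:
        # Look for any edge between the current sets U1 and U2.
        edge = next(
            ((u, v) for u in sorted(U1) for v in sorted(adj[u]) if v in U2),
            None,
        )
        if edge is None:
            break  # excluded by Corollary 4.4 in the setting of Lemma 4.8
        M.append(edge)
        U1.remove(edge[0])
        U2.remove(edge[1])
    return M

# Hypothetical example: the complete bipartite graph K_{3,3}.
left, right = [0, 1, 2], [3, 4, 5]
adj = {u: list(right) for u in left}
adj.update({v: list(left) for v in right})
full = greedy_matching(adj, left, right, [], [], 0)
partial = greedy_matching(adj, left, right, [0], [], 1)
```

With an integer threshold $t$, the loop removes one vertex from each side per step, so it produces $\min\{|V_1|-k_1-t,\ |V_2|-k_2-t\}$ edges whenever an edge is always available, matching the count in the proof.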

5. Extendability

In [Reference Hyde, Morrison, Müyesser and Pavez-Signé20], the classical tree embedding technique, introduced by Friedman and Pippenger in [Reference Friedman and Pippenger11], was used as one of the tools to prove a useful result on connecting designated pairs of vertices in expander graphs. The result shows that given two “nice” disjoint small subsets of an $(n,d,\lambda)$-graph, one can find a small subgraph, disjoint from these subsets, such that for any designated ordering of vertex pairs from the subsets, there exists a path factor in the subgraph that connects the pairs.

Definition 5.1.

Let $D,m\in \mathbb{N}$ with $D\ge 3$. Let $G$ be a graph and let $S\subset G$ be a subgraph with $\Delta(S) \leq D$. We say that $S$ is $(D,m)$-extendable if for all $U\subset V(G)$ with $1\le |U|\le 2m$ we have

The following result says that it is enough to control the external neighbourhood of small sets in order to verify extendability.

Proposition 5.2.

[Reference Hyde, Morrison, Müyesser and Pavez-Signé20] Let $D,m\in \mathbb{N}$ with $D\ge 3$. Let $G$ be a graph and let $S\subset G$ be a subgraph with $\Delta(S)\le D$. If for all $U\subset V(G)$ with $1\leq |U|\leq 2m$ we have

then $S$ is $(D,m)$-extendable in $G$.

Before stating the lemma, we introduce some necessary definitions. We denote by $I(S)$ the edgeless subgraph with vertex set $S$. A graph $G$ is said to be $m$-joined if for any disjoint sets $A, B \subseteq V(G)$ with $|A|, |B| \geq m$, there is at least one edge between $A$ and $B$, i.e., $e(A, B) \geq 1$.
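For very small graphs, the $m$-joined property can be tested by brute force, which may help when experimenting with the definition. The sketch below is illustrative only: the function name `is_m_joined` and the edge-list input format are assumptions, and the running time is exponential in $m$. It relies on the observation that it suffices to test disjoint pairs of size exactly $m$, since any larger pair contains such a pair.

```python
from itertools import combinations

def is_m_joined(vertices, edges, m):
    """Return True if every two disjoint m-subsets of `vertices`
    have at least one edge between them (m >= 1 assumed)."""
    edge_set = {frozenset(e) for e in edges}
    vertices = list(vertices)
    for A in combinations(vertices, m):
        rest = [v for v in vertices if v not in A]
        for B in combinations(rest, m):
            joined = any(frozenset((a, b)) in edge_set for a in A for b in B)
            if not joined:
                return False
    return True

# Hypothetical example: the path 0 - 1 - 2 - 3.
path_edges = [(0, 1), (1, 2), (2, 3)]
path_is_1_joined = is_m_joined(range(4), path_edges, 1)
path_is_2_joined = is_m_joined(range(4), path_edges, 2)
```

On the path, the singletons $\{0\}$ and $\{2\}$ have no edge between them, whereas every two disjoint pairs of vertices do.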

Now we are ready to state the result from [Reference Hyde, Morrison, Müyesser and Pavez-Signé20].

Lemma 5.3.

There is an absolute constant $C\gt 0$ with the following property. Let $n$ be a sufficiently large integer, and let $20C\leq K\leq n/\log^3 n$. Let $D,m\in \mathbb{N}$ satisfy $m\leq n/100D$ and $D\ge 100$. Let $G$ be an $m$-joined graph on $n$ vertices which contains disjoint subsets $V_1, V_2\subseteq V(G)$ with $|V_1|=|V_2|\leq n/K\log^{3}n$, and set $\ell \,:\!=\,\lfloor C \log^3 n \rfloor$. Suppose that $I(V_1\cup V_2)$ is $(D,m)$-extendable in $G$.

Then, there exists a $(D,m)$-extendable subgraph $S_{res}\subseteq G$ such that for any bijection $\phi \colon V_1\to V_2$, there exists a $P_\ell$-factor of $S_{res}$ where each copy of $P_\ell$ has as its endpoints some $v\in V_1$ and $\phi(v)\in V_2$.

6. Random subgraphs of almost regular expanders

In this section, we show that a random induced subgraph of an almost $(n,d,\lambda)$-graph or a bipartite spectral expander is typically a spectral expander by itself. This serves as our main tool in the proof of our main result.

6.1 Chernoff’s bounds

Throughout the paper, we make extensive use of the following well-known Chernoff bounds for the upper and lower tails of the hypergeometric distribution. The following lemma was proved by Hoeffding [Reference Hoeffding18] (see also Section 23.5 in [Reference Frieze and Karoński13]).

Lemma 6.1 (Chernoff’s inequality for hypergeometric distribution). Let $X\sim \mathrm{Hypergeometric}(N,K,n)$ and let $\mathbb{E}[X]=\mu$. Then

- $\mathbb{P}\left[ X\lt (1-a)\mu \right]\lt e^{-a^2\mu /2}$ for every $a\gt 0$;

- $\mathbb{P}\left[ X\gt (1+a)\mu \right]\lt e^{-a^2\mu /3}$ for every $a\in (0,\frac{3}{2})$.
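For small parameters, the two tail bounds can be compared with exact hypergeometric probabilities. The following sketch is an illustration only: the function names are hypothetical, and $\mathrm{Hypergeometric}(N,K,n)$ is read as the number of marked elements among $n$ draws without replacement from a population of size $N$ containing $K$ marked elements, so that $\mu = nK/N$.

```python
from math import comb, exp

def hypergeom_pmf(N, K, n, k):
    """P[X = k] for X ~ Hypergeometric(N, K, n)."""
    if k < 0 or k > n or k > K or n - k > N - K:
        return 0.0
    return comb(K, k) * comb(N - K, n - k) / comb(N, n)

def lower_tail(N, K, n, a):
    """Exact value of P[X < (1 - a) * mu]."""
    mu = n * K / N
    return sum(hypergeom_pmf(N, K, n, k) for k in range(n + 1)
               if k < (1 - a) * mu)

def upper_tail(N, K, n, a):
    """Exact value of P[X > (1 + a) * mu]."""
    mu = n * K / N
    return sum(hypergeom_pmf(N, K, n, k) for k in range(n + 1)
               if k > (1 + a) * mu)

# Hypothetical parameters: N = 50, K = 20, n = 10, so mu = 4.
N, K, n, a = 50, 20, 10, 0.5
mu = n * K / N
lower_ok = lower_tail(N, K, n, a) < exp(-a * a * mu / 2)
upper_ok = upper_tail(N, K, n, a) < exp(-a * a * mu / 3)
```

At this scale the exact tails are far below the bounds. The bounds are needed in the regime where $\mu$ grows with $n$ and a union bound over many events is required.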

6.2 Random-induced subgraphs

The following theorem is the main result of this section. It asserts that with probability at least $1-n^{-\Theta(1)}$, random (induced) subgraphs of spectral expanders are also spectral expanders.

Theorem 6.2 (Random subgraphs of spectral expanders). Let $\gamma \in (0,1/200]$ be a constant. There exists an absolute constant $C$ such that the following holds for sufficiently large $n$. Let $d,\lambda \gt 0$, let $\sigma \in [1/n,1)$, and let $G$ be an $(n,(1\pm \gamma)d,\lambda)$-graph. Let $X\subseteq V(G)$ with $|X|=\sigma n$ be a subset chosen uniformly at random, and let $H \,:\!=\, G[X]$ be the subgraph of $G$ induced by $X$. Assume that

Then with probability at least $1-n^{-1/6}$, $H$ is a $\left(\sigma n, (1\pm 2\gamma)\sigma d, 6\sigma \lambda \right)$-graph.

Let us briefly discuss the two conditions in Theorem 6.2. The first condition permits the random subgraph to be quite sparse, with degrees on the order of $\log n$, but not sparser than that. Below this threshold, the degrees of the random subgraph become unstable, and it will no longer be approximately regular. The second condition is essentially the Alon–Boppana bound, up to a logarithmic factor, which dictates that the second singular value of an approximately $\sigma d$-regular graph must be at least $\Omega(\sqrt{\sigma d})$. In other words, the conditions in Theorem 6.2 are nearly necessary for a subgraph $H$ to be an almost regular expander. Additionally, we did not optimise the constant factor in $s_2(H)$, though we believe it should be $1 + o(1)$.
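The role of the $\log n$ threshold for the degrees can be made plausible with a short heuristic computation; this is a sketch added for illustration and is not claimed to be the argument used in the proof. Fix a vertex $v$ and condition on $v\in X$. The remaining $\sigma n-1$ vertices of $X$ form a uniformly random subset of $V(G)\setminus \{v\}$, so in the notation of Lemma 6.1,

\begin{equation*} \deg_H(v) \sim \mathrm{Hypergeometric}\left(n-1,\ \deg_G(v),\ \sigma n-1\right), \qquad \mathbb{E}[\deg_H(v)] = \frac{(\sigma n-1)\deg_G(v)}{n-1} \approx \sigma \deg_G(v), \end{equation*}

where the approximation is accurate once $\sigma n$ is large. Lemma 6.1 with a constant $a$ then bounds the probability that $\deg_H(v)$ deviates from its mean by a factor $1\pm a$ by $e^{-\Omega(\sigma d)}$. A union bound over at most $n$ vertices is affordable only when $\sigma d$ is at least a sufficiently large multiple of $\log n$.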

The proof of Theorem 6.2 is based on bounds of the spectral norm of a random submatrix, which is obtained from a given $n \times n$ matrix $B$ by choosing a uniformly random subset of rows and a uniformly random subset of columns of $B$.

There are two natural ways to choose a random subset of the set $[n]$. We can make a random subset $I$ by selecting every element of $[n]$ independently at random with probability $\sigma \in (0,1)$. In this case, we write

\begin{equation*} I \sim \textrm{Subset}(n,\sigma). \end{equation*}

Alternatively, we can choose any $m$-set $J$ of $[n]$ with the same probability $1/\binom{n}{m}$. In this case, we write

\begin{equation*} J \sim \textrm{Subset}(n,m). \end{equation*}

Note that if $m = \sigma n$, the models $\textrm{Subset}(n,\sigma)$ and $\textrm{Subset}(n,m)$ are closely related but not identical. It should be clear from the context which one we consider.
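The difference between the two models is easy to see by sampling from them. The sketch below uses only Python's standard `random` module; the function names `subset_binomial` and `subset_uniform` are hypothetical labels for $\textrm{Subset}(n,\sigma)$ and $\textrm{Subset}(n,m)$, respectively.

```python
import random

def subset_binomial(n, sigma, rng):
    """Subset(n, sigma): keep each element of [n] independently
    with probability sigma; the size of the result is random."""
    return {i for i in range(1, n + 1) if rng.random() < sigma}

def subset_uniform(n, m, rng):
    """Subset(n, m): a uniformly random m-element subset of [n];
    the size of the result is always exactly m."""
    return set(rng.sample(range(1, n + 1), m))

rng = random.Random(0)  # fixed seed so that runs are reproducible
I = subset_binomial(20, 0.25, rng)
J = subset_uniform(20, 5, rng)
```

In the first model the size $|I|$ is binomially distributed with mean $\sigma n$, while in the second model $|J| = m$ deterministically, which is the sense in which the models are related but not identical.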

For a given subset $I \subset [n]$, we denote by $P_I$ the orthogonal projection in ${\mathbb{R}}^n$ onto ${\mathbb{R}}^I$. In other words, $P_I$ is the diagonal matrix with $P_{ii}=1$ if $i \in I$ and $P_{ii}=0$ if $i \not\in I$.
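As a small illustration (added here, with $n=3$ and $I=\{1,3\}$ chosen only as an example), the projection and its action on a vector are

\begin{equation*} P_I = \begin{pmatrix} 1 & 0 & 0\\ 0 & 0 & 0\\ 0 & 0 & 1 \end{pmatrix}, \qquad P_I \begin{pmatrix} x_1\\ x_2\\ x_3 \end{pmatrix} = \begin{pmatrix} x_1\\ 0\\ x_3 \end{pmatrix}. \end{equation*}

More generally, for an $n\times n$ matrix $B$ and subsets $I, I'\subset [n]$, the product $P_I B P_{I'}$ keeps the entries $B_{i,j}$ with $i\in I$ and $j\in I'$ and replaces all other entries by zero. Hence its operator norm equals that of the submatrix of $B$ with rows indexed by $I$ and columns indexed by $I'$. This is presumably how random submatrices are encoded in the bounds of this section.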

The main tool of this section is the following bound. It is worth mentioning that several similar results have been proved before, for example, in [Reference Rudelson and Vershynin35] and [Reference Tropp41].

Theorem 6.3 (Norms of random submatrices). Let $B$ be an $n\times n$ matrix. Let $I,I' \sim \textrm {Subset}(n,\sigma )$ be two independent subsets, where $\sigma \in (0,1)$. Let $p\geq 2$ and let $q=\max \{p,2\log n\}$. Then
\begin{equation*} \mathbb {E}_p\|{P_I B P_{I'}}\| \le \sigma \|{B}\| + 3\sqrt {q\sigma }\big (\|{B}\|_{1\rightarrow 2} + \|{B^{\mathsf {T}}}\|_{1\rightarrow 2}\big ) + 8q\|{B}\|_\infty . \end{equation*}

Here $\mathbb {E}_p[X]=(\mathbb {E}|X|^p)^{1/p}$ is the $L_p$ norm of the random variable $X$; the norm $\|{\mkern 2mu\cdot \mkern 2mu}\|_{1\to 2}$ denotes the norm of a matrix as an $\ell _1 \to \ell _2$ linear operator, which equals the maximum $\ell _2$ norm of its columns; and $\|{\cdot }\|_\infty$ denotes the maximum absolute entry of a matrix.
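The two matrix norms just described are simple to compute, and a minimal sketch may help fix the notation. The function names `norm_1_to_2`, `norm_max` and `restrict_rows` are hypothetical; matrices are plain lists of rows, and rows are indexed from 1 as in the text.

```python
import math

def norm_1_to_2(B):
    """l_1 -> l_2 operator norm: the largest Euclidean norm of a column."""
    cols = range(len(B[0]))
    return max(math.sqrt(sum(row[j] ** 2 for row in B)) for j in cols)

def norm_max(B):
    """Maximum absolute entry of the matrix."""
    return max(abs(x) for row in B for x in row)

def restrict_rows(B, I):
    """P_I B: zero out every row whose (1-based) index is not in I."""
    return [row[:] if i in I else [0.0] * len(row)
            for i, row in enumerate(B, start=1)]

B = [[3.0, 0.0],
     [4.0, -1.0]]

print(norm_1_to_2(B))                        # columns (3, 4) and (0, -1)
print(norm_max(B))
print(norm_1_to_2(restrict_rows(B, {1})))    # restricting rows cannot increase the norm
```

Zeroing out rows or columns can only shrink column norms and entries, so both norms are monotone under passing to a submatrix.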

We use the following results to derive Theorem 6.3.

Lemma 6.4 (Rudelson-Vershynin [Reference Rudelson and Vershynin35]). Let $A$ be an $m\times n$ matrix with rank $r$. Let $I \sim \textrm {Subset}(n,\sigma )$, where $\sigma \in (0,1)$. Let $p \ge 2$ and let $q=\max \{p,2\log r\}$. Then
\begin{equation*} \mathbb {E}_p\|{A P_I}\| \le \sqrt {\sigma }\|{A}\| + 3\sqrt {q}\mathbb {E}_p\|{A P_I}\|_{1\rightarrow 2}. \end{equation*}

Theorem 6.5 (Tropp [Reference Tropp41]). Let $A$ be an $m\times n$ matrix with rank $r$. Let $I \sim \textrm {Subset}(n,\sigma )$, where $\sigma \in (0,1)$. Let $p \ge 2$ and let $q=\max \{p,2\log r\}$. Then

Proof of Theorem 6.3. First, we apply Lemma 6.4 twice (in the same manner as in [Reference Tropp41]), where we first take $P_IB,P_{I'}$ in place of $A,P_I$, and then take $B^{\mathsf {T}},P_I$ in place of $A,P_I$. So we obtain

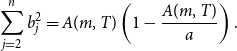

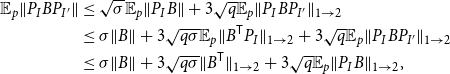

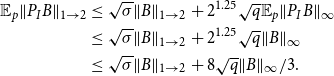

\begin{equation*} \begin{aligned} \mathbb {E}_p\|{P_I B P_{I'}}\| &\le \sqrt {\sigma }\mathbb {E}_p\|{P_I B}\| + 3\sqrt {q}\mathbb {E}_p\|{P_I B P_{I'}}\|_{1\rightarrow 2}\\ &\le \sigma \|{B}\| + 3\sqrt {q\sigma }\mathbb {E}_p\|{B^{\mathsf {T}} P_I}\|_{1\rightarrow 2} + 3\sqrt {q}\mathbb {E}_p\|{P_I B P_{I'}}\|_{1\rightarrow 2} \\ &\le \sigma \|{B}\| + 3\sqrt {q\sigma }\|{B^{\mathsf {T}}}\|_{1\rightarrow 2} + 3\sqrt {q}\mathbb {E}_p\|{P_I B}\|_{1\rightarrow 2}, \end{aligned} \end{equation*}

\begin{equation*} \begin{aligned} \mathbb {E}_p\|{P_I B P_{I'}}\| &\le \sqrt {\sigma }\mathbb {E}_p\|{P_I B}\| + 3\sqrt {q}\mathbb {E}_p\|{P_I B P_{I'}}\|_{1\rightarrow 2}\\ &\le \sigma \|{B}\| + 3\sqrt {q\sigma }\mathbb {E}_p\|{B^{\mathsf {T}} P_I}\|_{1\rightarrow 2} + 3\sqrt {q}\mathbb {E}_p\|{P_I B P_{I'}}\|_{1\rightarrow 2} \\ &\le \sigma \|{B}\| + 3\sqrt {q\sigma }\|{B^{\mathsf {T}}}\|_{1\rightarrow 2} + 3\sqrt {q}\mathbb {E}_p\|{P_I B}\|_{1\rightarrow 2}, \end{aligned} \end{equation*}

where the last inequality follows since the $1 \to 2$ norm of a submatrix is bounded by the $1 \to 2$ norm of the full matrix. We then use Theorem 6.5 to complete the proof:

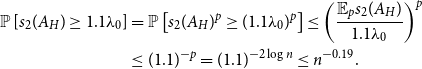

\begin{align*} \mathbb {E}_p\|P_I B\|_{1\rightarrow 2} &\le \sqrt {\sigma }\|{B}\|_{1\rightarrow 2} + 2^{1.25}\sqrt {q}\mathbb {E}_p\|{P_IB}\|_\infty \\ &\leq \sqrt {\sigma }\|{B}\|_{1\rightarrow 2} + 2^{1.25}\sqrt {q}\|{B}\|_\infty \\ &\leq \sqrt {\sigma }\|{B}\|_{1\rightarrow 2} + 8\sqrt {q}\|{B}\|_\infty /3. \end{align*}
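For completeness, the two displays can be combined explicitly. The following is a sketch of the remaining arithmetic; it uses only the bounds displayed above, together with $2^{1.25} \le 8/3$ and $3\sqrt {q}\cdot 8\sqrt {q}/3 = 8q$:

\begin{equation*} \begin{aligned} \mathbb {E}_p\|{P_I B P_{I'}}\| &\le \sigma \|{B}\| + 3\sqrt {q\sigma }\|{B^{\mathsf {T}}}\|_{1\rightarrow 2} + 3\sqrt {q}\Big (\sqrt {\sigma }\|{B}\|_{1\rightarrow 2} + 8\sqrt {q}\|{B}\|_\infty /3\Big ) \\ &= \sigma \|{B}\| + 3\sqrt {q\sigma }\big (\|{B}\|_{1\rightarrow 2} + \|{B^{\mathsf {T}}}\|_{1\rightarrow 2}\big ) + 8q\|{B}\|_\infty . \end{aligned} \end{equation*}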