1 Introduction

A tree is, informally, an infinite graph in which each node but one (the root of the tree) has exactly

![]() $m+1$

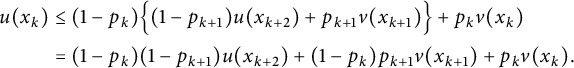

connected nodes, m successors and one predecessor (see below for a precise description of a regular tree). Regular trees and mean value averaging operators on them play the role of being a discrete model analogous to the unit ball and continuous partial differential equations (PDEs) in it. In this sense, linear and nonlinear mean value properties on trees are models that are close (and related to) to linear and nonlinear PDEs. The literature dealing with models and equations given by mean value formulas on trees is quite large but mainly focused on single equations. We quote [Reference Alvarez, Rodríguez and Yakubovich1–Reference Bjorn, Bjorn, Gill and Shanmugalingam3, Reference Del Pezzo, Mosquera and Rossi5–Reference Del Pezzo, Mosquera and Rossi7, Reference Kaufman, Llorente and Wu10, Reference Kaufman and Wu11, Reference Manfredi, Oberman and Sviridov14, Reference Sviridov20, Reference Sviridov21] and the references therein for references that are closely related to our results, but the list is far from being complete.

$m+1$

connected nodes, m successors and one predecessor (see below for a precise description of a regular tree). Regular trees and mean value averaging operators on them play the role of being a discrete model analogous to the unit ball and continuous partial differential equations (PDEs) in it. In this sense, linear and nonlinear mean value properties on trees are models that are close (and related to) to linear and nonlinear PDEs. The literature dealing with models and equations given by mean value formulas on trees is quite large but mainly focused on single equations. We quote [Reference Alvarez, Rodríguez and Yakubovich1–Reference Bjorn, Bjorn, Gill and Shanmugalingam3, Reference Del Pezzo, Mosquera and Rossi5–Reference Del Pezzo, Mosquera and Rossi7, Reference Kaufman, Llorente and Wu10, Reference Kaufman and Wu11, Reference Manfredi, Oberman and Sviridov14, Reference Sviridov20, Reference Sviridov21] and the references therein for references that are closely related to our results, but the list is far from being complete.

Our main goal here is to look for existence and uniqueness of solutions to systems of mean value formulas on regular trees. When dealing with systems two main difficulties arise: the first one comes from the operators used to obtain the equations that govern the components of the system and the second one comes from the coupling between the components. Here, we deal with linear couplings with coefficients in each equation that may change from one point to another and with linear or nonlinear mean value properties given in terms of averaging operators involving the successors together with a possible linear dependence on the predecessor.

Our main result can be summarized as follows: for a general system of averaging operators with linear coupling on a regular tree, we find the sharp conditions (necessary and sufficient conditions) on the coefficients of the coupling and the contribution of the predecessor/successors in such a way that the Dirichlet problem for the system with continuous boundary data has existence and uniqueness of solutions.

Now, let us introduce briefly some definitions and notations needed to make precise the statements of our main results.

The ambient space, a regular tree. Given $m\in \mathbb {N}_{\ge 2},$ a tree $\mathbb {T}$ with regular m-branching is an infinite graph that consists of a root, denoted as the empty set $\emptyset $, and an infinite number of nodes, labeled as all finite sequences $(a_1,a_2,\dots ,a_k)$ with $k\in \mathbb {N},$ whose coordinates $a_i$ are chosen from $\{0,1,\dots ,m-1\}.$

The elements in $\mathbb {T}$ are called vertices. Each vertex x has m successors, obtained by adding another coordinate. We will denote by $S(x)$ the set of successors of the vertex $x.$ If x is not the root then x has exactly one immediate predecessor, which we will denote $\hat {x}.$ The segment connecting a vertex x with $\hat {x}$ is called an edge and denoted by $(\hat {x},x).$

A vertex $x\in \mathbb {T}$ has level $k\in \mathbb {N}$ if $x=(a_1,a_2,\dots ,a_k)$. The level of x is denoted by $|x|$ and the set of all k-level vertices is denoted by $\mathbb {T}^k.$ We say that the edge $e=(\hat {x},x)$ has k-level if $x\in \mathbb {T}^k.$

A branch of $\mathbb {T}$ is an infinite sequence of vertices starting at the root, where each of the vertices in the sequence is followed by one of its immediate successors. The collection of all branches forms the boundary of $\mathbb {T}$, denoted by $\partial \mathbb {T}$. Observe that the mapping $\psi :\partial \mathbb {T}\to [0,1]$ defined as

$$ \begin{align*}\psi(\pi):=\sum_{k=1}^{+\infty} \frac{a_k}{m^{k}} \end{align*} $$

is surjective, where $\pi =(a_1,\dots , a_k,\dots )\in \partial \mathbb {T}$ and $a_k\in \{0,1,\dots ,m-1\}$ for all $k\in \mathbb {N}.$ Whenever $x=(a_1,\dots ,a_k)$ is a vertex, we set

$$ \begin{align*}\psi(x):=\psi(a_1,\dots,a_k,0,\dots,0,\dots). \end{align*} $$
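The labeling of the tree described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: vertices are stored as tuples of integers (the root is the empty tuple), the helper names `successors`, `predecessor`, `level` and `psi` are hypothetical, and `psi` assumes the base-m expansion $\sum_k a_k m^{-k}$ as the map to $[0,1]$, extended to vertices by padding with zeros.

```python
from fractions import Fraction
from typing import List, Tuple

Vertex = Tuple[int, ...]  # the root is the empty tuple ()


def successors(x: Vertex, m: int) -> List[Vertex]:
    """The m successors of x, obtained by appending one more coordinate."""
    return [x + (a,) for a in range(m)]


def predecessor(x: Vertex) -> Vertex:
    """The unique immediate predecessor of a non-root vertex."""
    if not x:
        raise ValueError("the root has no predecessor")
    return x[:-1]


def level(x: Vertex) -> int:
    """The level |x| of a vertex is the length of its label."""
    return len(x)


def psi(x: Vertex, m: int) -> Fraction:
    """Assumed boundary coordinate: sum of a_k / m**k over the label of x."""
    return sum((Fraction(a, m ** k) for k, a in enumerate(x, start=1)), Fraction(0))
```

For instance, with m = 2 the vertex (1, 1) has level 2, predecessor (1,), and is mapped by this `psi` to 3/4.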

Averaging operators. Let $F\colon \mathbb {R}^m\to \mathbb {R}$ be a continuous function. We call F an averaging operator if it satisfies the following:

$$ \begin{align*}\begin{array}{l} \displaystyle F(0,\dots,0)=0 \mbox{ and } F(1,\dots,1)=1; \\[5pt] \displaystyle F(tx_1,\dots,tx_m)=t F(x_1,\dots,x_m); \\[5pt] \displaystyle F(t+x_1,\dots,t+x_m)=t+ F(x_1,\dots,x_m),\qquad \mbox{for all } t\in\mathbb{R}; \\[5pt] \displaystyle F(x_1,\dots,x_{m})<\max\{x_1,\dots,x_{m}\},\qquad \mbox{if not all } x_j\text{'s are equal;} \\[5pt] \displaystyle F \mbox{ is nondecreasing with respect to each variable.} \end{array} \end{align*} $$


In addition, we will assume that F is permutation invariant, that is,

$$ \begin{align*}F(x_1,\dots,x_m)=F(x_{\tau(1)},\dots,x_{\tau(m)}) \end{align*} $$

for each permutation $\tau $ of $\{1,\dots ,m\}$ and that there exists $0<\kappa <1$ such that

$$ \begin{align*}F(x_1+c,x_2,\dots,x_m)\le F(x_1,\dots,x_m)+c\kappa \end{align*} $$

for all $(x_1,\dots ,x_m)\in \mathbb {R}^m$ and for all $c>0.$

As examples of averaging operators we mention the following ones. The first example is taken from [10]. For $1<p<+\infty ,$ the operator $F^p(x_1,\dots ,x_m)=t$ from $\mathbb {R}^m$ to $\mathbb {R}$ defined implicitly by

$$\begin{align*}\sum_{j=1}^m (x_j-t)|x_j-t|^{p-2}=0 \end{align*}$$


is a permutation invariant averaging operator. Next, we consider, for

![]() $0\le \alpha \le 1$

and

$0\le \alpha \le 1$

and

![]() $0<\beta \leq 1$

with

$0<\beta \leq 1$

with

![]() $\alpha +\beta =1$

$\alpha +\beta =1$

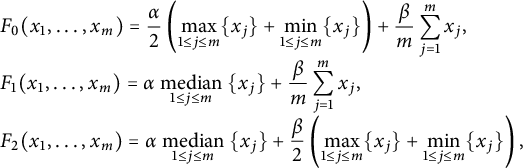

$$ \begin{align*}\begin{array}{ll} \displaystyle F_0(x_1,\dots,x_m)=\frac{\alpha}2 \left(\max_{1\le j\le m} \{x_j\}+\min_{1\le j\le m}\{x_j\} \right) + \frac{\beta}m\sum_{j=1}^m x_j,\\ \displaystyle F_1(x_1,\dots,x_m)=\alpha \underset{{1\le j\le m}}{\operatorname{\mbox{ median }}}\{x_j\}+ \frac{\beta}m\sum_{j=1}^m x_j,\\ \displaystyle F_2(x_1,\dots,x_m)=\alpha \underset{{1\le j\le m}}{\operatorname{\mbox{ median }}}\{x_j\}+ \frac{\beta}2 \left(\max_{1\le j\le m} \{x_j\}+\min_{1\le j\le m}\{x_j\} \right), \end{array} \end{align*} $$


where

$$\begin{align*}\underset{{1\le j\le m}}{\operatorname{\mbox{ median }}}\{x_j\}\, := \begin{cases} y_{\frac{m+1}2}, & \text{ if }m \text{ is odd}, \\ \displaystyle\frac{y_{\frac{m}2}+ y_{(\frac{m}2 +1)}}2, & \text{ if }m \text{ is even}, \end{cases} \end{align*}$$

where $\{y_1,\dots , y_m\}$ is a nondecreasing rearrangement of $\{x_1,\dots , x_m\}.$ $F_0, F_1$, and $F_2$ are permutation invariant averaging operators. For mean value formulas on trees and for PDEs in the Euclidean space we refer to [8, 9, 12, 15–17].
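To make these examples concrete, the following Python sketch evaluates $F_0$, $F_1$, $F_2$ and $F^p$ numerically. It is an illustration only: the function names `f0`, `f1`, `f2`, `fp` are hypothetical, $\beta$ is taken as $1-\alpha$, the median is the usual one (middle value for odd m, average of the two middle values for even m), and $F^p$ is approximated by bisection on the implicit equation, which is one possible numerical choice and not something prescribed in the text.

```python
import math
import statistics
from typing import Sequence


def f0(xs: Sequence[float], alpha: float) -> float:
    """alpha/2 * (max + min) + beta * arithmetic mean, with beta = 1 - alpha."""
    beta = 1.0 - alpha
    return alpha / 2 * (max(xs) + min(xs)) + beta * sum(xs) / len(xs)


def f1(xs: Sequence[float], alpha: float) -> float:
    """alpha * median + beta * arithmetic mean, with beta = 1 - alpha."""
    beta = 1.0 - alpha
    return alpha * statistics.median(xs) + beta * sum(xs) / len(xs)


def f2(xs: Sequence[float], alpha: float) -> float:
    """alpha * median + beta/2 * (max + min), with beta = 1 - alpha."""
    beta = 1.0 - alpha
    return alpha * statistics.median(xs) + beta / 2 * (max(xs) + min(xs))


def fp(xs: Sequence[float], p: float, iterations: int = 200) -> float:
    """Approximate the root t of sum_j (x_j - t)|x_j - t|^(p-2) = 0, 1 < p < inf."""

    def phi(t: float) -> float:
        # (x - t)|x - t|^(p-2) written as sign(x - t) * |x - t|^(p-1)
        return sum(math.copysign(abs(x - t) ** (p - 1), x - t) for x in xs)

    lo, hi = min(xs), max(xs)  # phi is nonincreasing, phi(lo) >= 0 >= phi(hi)
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if phi(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2
```

For p = 2 the implicit equation is linear and `fp` returns the arithmetic mean, which gives a quick sanity check of the bisection.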

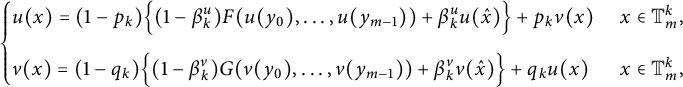

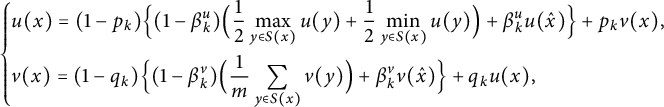

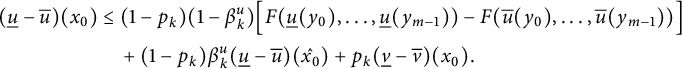

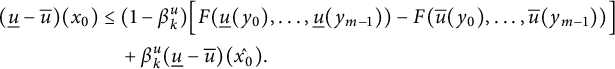

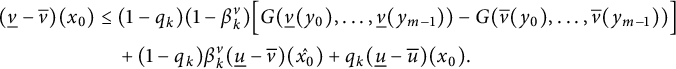

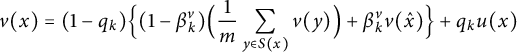

Given two averaging operators F and G, we deal with the system

$$ \begin{align} \left\lbrace \begin{array}{@{}ll} \displaystyle u(x)=(1-p_k)\Big\{ \displaystyle (1-\beta_k^u ) F(u(y_0),\dots,u(y_{m-1})) + \beta_k^u u (\hat{x}) \Big\}+p_k v(x) & \ x\in\mathbb{T}_m^k , \\[10pt] \displaystyle v(x)=(1-q_k)\Big\{ (1-\beta_k^v ) G(v(y_0),\dots,v(y_{m-1})) + \beta_k^v v (\hat{x}) \Big\}+q_k u(x) & \ x \in\mathbb{T}_m^k , \end{array} \right. \end{align} $$


for

![]() $k\ge 1$

, here

$k\ge 1$

, here

![]() $(y_i)_{i=0,\dots ,m-1} $

are the successors of x. We assume that

$(y_i)_{i=0,\dots ,m-1} $

are the successors of x. We assume that

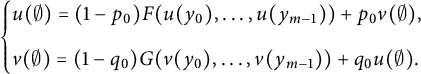

![]() $\beta _0^u=\beta _0^v=0$

, then at the root of the tree the equations are

$\beta _0^u=\beta _0^v=0$

, then at the root of the tree the equations are

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle u(\emptyset)=(1-p_0) F(u(y_0),\dots,u(y_{m-1})) +p_0 v(\emptyset) , \\[10pt] \displaystyle v(\emptyset)=(1-q_0)G(v(y_0),\dots,v(y_{m-1}))+q_0 u(\emptyset). \end{array} \right. \end{align*} $$


In order to have a probabilistic interpretation of the equations in this system (see below), we will assume that

![]() $\beta _k^u $

,

$\beta _k^u $

,

![]() $\beta _k^v$

,

$\beta _k^v$

,

![]() $p_k$

,

$p_k$

,

![]() $q_k$

are all in

$q_k$

are all in

![]() $[0,1]$

and moreover, we will also assume that

$[0,1]$

and moreover, we will also assume that

![]() $\beta _k^u$

and

$\beta _k^u$

and

![]() $\beta _k^v$

are bounded away from 1, that is,

$\beta _k^v$

are bounded away from 1, that is,

![]() $\beta _k^u, \beta _k^v \leq c < 1$

and that there is no k such that

$\beta _k^u, \beta _k^v \leq c < 1$

and that there is no k such that

![]() $p_k=q_k=1$

.

$p_k=q_k=1$

.

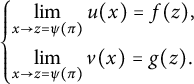

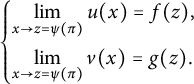

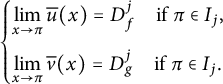

We supplement (1.1) with boundary data. We take two continuous functions $f,g:[0,1] \to \mathbb {R}$ and impose that along any branch of the tree we have that

$$ \begin{align} \left\lbrace \begin{array}{@{}ll} \displaystyle \lim_{{x}\rightarrow z = \psi (\pi)}u(x) = f(z) , \\[10pt] \displaystyle \lim_{{x}\rightarrow z = \psi(\pi)}v(x)=g(z). \end{array} \right. \end{align} $$


Here, the limits are understood along the nodes in the branch as the level goes to infinity. That is, if the branch is given by the sequence $\pi =\{x_n\} \subset \mathbb {T}_m$, $x_{n+1} \in S(x_{n})$, then we ask for $u(x_n) \to f(\psi (\pi ))$ as $n\to \infty $.

Our main result is to obtain necessary and sufficient conditions on the coefficients

![]() $\beta _k^u$

,

$\beta _k^u$

,

![]() $\beta _k^v$

,

$\beta _k^v$

,

![]() $p_k$

and

$p_k$

and

![]() $q_k$

in order to have solvability of the Dirichlet problem, (1.1)–(1.2).

$q_k$

in order to have solvability of the Dirichlet problem, (1.1)–(1.2).

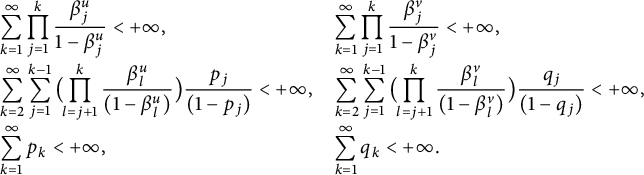

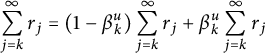

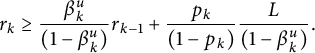

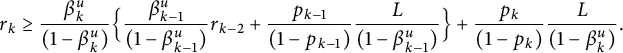

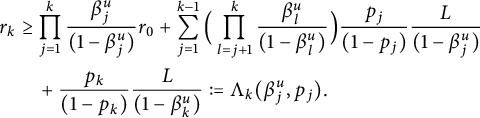

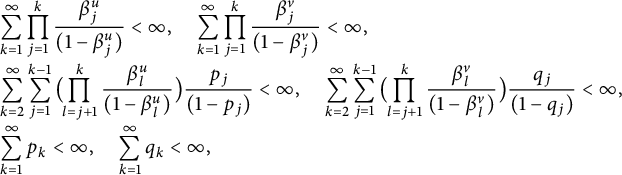

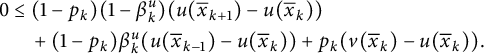

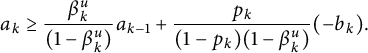

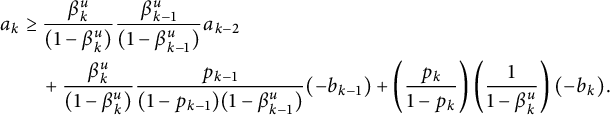

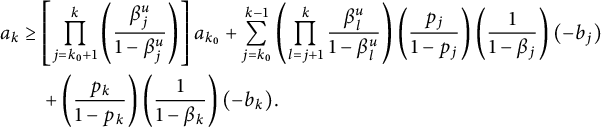

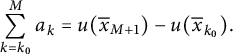

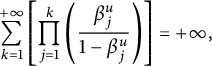

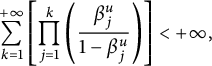

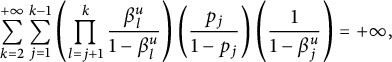

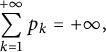

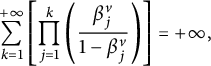

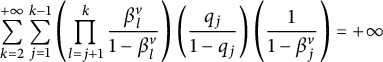

Theorem 1.1 For every pair of continuous functions $f,g:[0,1] \to \mathbb {R}$, the system (1.1) has a unique solution satisfying (1.2) if and only if the coefficients $\beta _k^u$, $\beta _k^v$, $p_k$ and $q_k$ satisfy the following conditions:

$$ \begin{align} \begin{array}{ll} \displaystyle \sum_{k=1}^\infty \prod_{j=1}^k \frac{\beta_j^u}{1- \beta_j^u} < + \infty, & \displaystyle \sum_{k=1}^\infty \prod_{j=1}^k \frac{\beta_j^v}{1- \beta_j^v} < + \infty, \\[10pt] \displaystyle \sum_{k=2}^{\infty}\sum_{j=1}^{k-1}\big(\!\!\prod_{l=j+1}^k \frac{\beta_l^u}{(1-\beta_l^u)}\big)\frac{p_j}{(1-p_j)}<+\infty, & \displaystyle \sum_{k=2}^{\infty}\sum_{j=1}^{k-1}\big(\!\!\prod_{l=j+1}^k \frac{\beta_l^v}{(1-\beta_l^v)}\big)\frac{q_j}{(1-q_j)}<+\infty,\\[10pt] \displaystyle \sum_{k=1}^\infty p_k < + \infty,& \displaystyle \sum_{k=1}^\infty q_k < + \infty. \end{array}\nonumber\\ \end{align} $$

$$ \begin{align} \begin{array}{ll} \displaystyle \sum_{k=1}^\infty \prod_{j=1}^k \frac{\beta_j^u}{1- \beta_j^u} < + \infty, & \displaystyle \sum_{k=1}^\infty \prod_{j=1}^k \frac{\beta_j^v}{1- \beta_j^v} < + \infty, \\[10pt] \displaystyle \sum_{k=2}^{\infty}\sum_{j=1}^{k-1}\big(\!\!\prod_{l=j+1}^k \frac{\beta_l^u}{(1-\beta_l^u)}\big)\frac{p_j}{(1-p_j)}<+\infty, & \displaystyle \sum_{k=2}^{\infty}\sum_{j=1}^{k-1}\big(\!\!\prod_{l=j+1}^k \frac{\beta_l^v}{(1-\beta_l^v)}\big)\frac{q_j}{(1-q_j)}<+\infty,\\[10pt] \displaystyle \sum_{k=1}^\infty p_k < + \infty,& \displaystyle \sum_{k=1}^\infty q_k < + \infty. \end{array}\nonumber\\ \end{align} $$

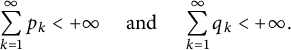

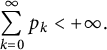

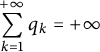

Remark 1.2 Notice that when $\beta _k^u = \beta _k^v \equiv 0$ the conditions (1.3) reduce to

$$ \begin{align*} \displaystyle \sum_{k=1}^\infty p_k < + \infty \quad \mbox{ and } \quad \displaystyle \sum_{k=1}^\infty q_k < + \infty. \end{align*} $$


When $\beta _k^u$ is a constant, $\beta _k^u \equiv \beta $, the first condition in (1.3) reads as

$$ \begin{align*}\sum_{k=1}^{\infty} \prod_{j=1}^k \frac{\beta_j^u}{1-\beta_j^u} = \sum_{k=1}^{\infty} \Big(\frac{\beta}{1-\beta} \Big)^k <+\infty, \end{align*} $$


and hence, since this geometric series converges exactly when $\beta /(1-\beta )<1$, we get

$$ \begin{align*}\beta < \frac{1}{2} \end{align*} $$

as the right condition for existence of solutions when $\beta _k^u$ is constant, $\beta _k^u \equiv \beta $. Analogously, $\beta ^v_j < 1/2$ is the right condition when $\beta ^v_j$ is constant.

Also in this case ($\beta _k^u$ constant), the second condition in (1.3) can be obtained from the first and the third ones, since in this case we have that $p_j \to 0$ (this follows from the third condition) and then, for the second condition, we get

$$ \begin{align*}\displaystyle \sum_{k=2}^{\infty} \sum_{j=1}^{k-1} \Big(\frac{\beta}{1-\beta} \Big)^{k-j} \frac{p_j}{(1-p_j)} = \sum_{j=1}^{\infty}\frac{p_j}{(1-p_j)} \sum_{k=j+1}^{\infty} \Big(\frac{\beta}{1-\beta} \Big)^{k-j} = \Big( \sum_{i=1}^{\infty} \Big(\frac{\beta}{1-\beta} \Big)^i \Big) \Big(\sum_{j=1}^{\infty}\frac{p_j}{(1-p_j)} \Big) <+\infty. \end{align*} $$

The first series converges since $\beta < 1/2 $ (this follows from the first condition) and the second series converges since $p_j \to 0$ and the third condition holds.

In general, the second condition does not follow from the first and the third. As an example of a set of coefficients that satisfy the first and third conditions but not the second one in (1.3), let us mention

Let us briefly comment on the meaning of the conditions in (1.3). The first condition implies that when x is a node with k large the influence of the predecessor in the value of the components at x is small (hence, there is more influence of the successors). The third condition says that when we are at a point x with k large, then the influence of the other component is small. The second condition couples the set of coefficients in each equation of the system. With these conditions one guarantees that for x with large k the values of the components of (1.1), $u(x)$ and $v(x)$, depend mostly on the values of u and v at successors of x, respectively, and this is exactly what is needed to make it possible to fulfill the boundary conditions (1.2).
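The three series in (1.3) for one component can be explored numerically through their partial sums. The sketch below is an illustration under assumptions: the function name `partial_sums` is hypothetical, the inputs are finite lists holding $\beta_j$ and $p_j$ for $j=1,\dots,K$ (all strictly less than 1), and the inner sum of the second condition is updated with the recurrence $I_k = \frac{\beta_k}{1-\beta_k}\big(I_{k-1} + \frac{p_{k-1}}{1-p_{k-1}}\big)$, $I_1=0$, which follows directly from the definition. Bounded partial sums only suggest convergence; they do not prove it.

```python
from typing import Sequence, Tuple


def partial_sums(beta: Sequence[float], p: Sequence[float]) -> Tuple[float, float, float]:
    """Partial sums up to K = len(beta) of the three series in (1.3).

    beta[j - 1] and p[j - 1] hold beta_j and p_j for j = 1, ..., K.
    """
    K = len(beta)
    ratios = [b / (1.0 - b) for b in beta]  # beta_j / (1 - beta_j)

    # First series: sum over k of prod_{j=1}^k ratios_j.
    first, prod = 0.0, 1.0
    for r in ratios:
        prod *= r
        first += prod

    # Second series: sum over k >= 2 of I_k, where
    # I_k = sum_{j=1}^{k-1} (prod_{l=j+1}^k ratios_l) * p_j / (1 - p_j).
    second, inner = 0.0, 0.0
    for k in range(2, K + 1):
        weight = p[k - 2] / (1.0 - p[k - 2])  # p_{k-1} / (1 - p_{k-1})
        inner = ratios[k - 1] * (inner + weight)
        second += inner

    # Third series: sum over k of p_k.
    third = float(sum(p))
    return first, second, third
```

With constant $\beta_j = 1/4$ the first partial sum approaches $1/2$, while with $\beta_j = 1/2$ it grows like K, in line with the threshold $\beta < 1/2$ discussed in Remark 1.2.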

Remark 1.3 Our results can be used to obtain necessary and sufficient conditions for existence and uniqueness of a solution to a single equation,

$$ \begin{align*}u(x)=(1-\beta_k) F(u(y_0),\dots,u(y_{m-1})) + \beta_k u (\hat{x}), \qquad x\in\mathbb{T}_m^k, \end{align*} $$

with

$$ \begin{align*}\lim_{{x}\rightarrow z = \psi (\pi)}u(x) = f(z). \end{align*} $$

In fact, take as coefficients $p_k=q_k=0$ and $\beta _k^u=\beta _k^v=\beta _k$ for every k, the same averaging operator in both equations, and as boundary data $f=g$ in (1.1) to obtain that this problem has a unique solution for every continuous f if and only if

$$ \begin{align*}\sum_{k=1}^\infty \prod_{j=1}^k \frac{\beta_j}{1- \beta_j} < + \infty. \end{align*} $$

$$ \begin{align*}\sum_{k=1}^\infty \prod_{j=1}^k \frac{\beta_j}{1- \beta_j} < + \infty. \end{align*} $$

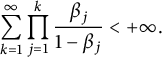

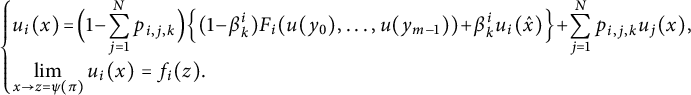

Remark 1.4 Our results can be extended to $N\times N$ systems with unknowns $(u_1,\dots ,u_N)$, $u_i :\mathbb {T} \to \mathbb {R}$, of the form

$$ \begin{align} \left\lbrace \begin{array}{@{}l} \displaystyle u_i (x)\! = \! \Big(1 \! - \! \sum_{j=1}^N p_{i,j,k} \Big)\Big\{ \displaystyle (1 \! - \! \beta_k^i ) F_i(u_i(y_0),\dots,u_i(y_{m-1})) \! + \! \beta_k^i u_i (\hat{x}) \Big\} \! +\! \sum_{j=1}^N p_{i,j,k} u_j (x) , \\[10pt] \displaystyle \lim_{{x}\rightarrow z=\psi(\pi)}u_i (x) = f_i (z). \end{array} \right.\nonumber\\ \end{align} $$

Here, $F_i$ is an averaging operator; the coefficients $0\leq p_{i,j,k}\leq 1$ depend on the indexes of the components $i,j$ and on the level k of the node x in the tree, and are assumed to satisfy $p_{i,i,k} =0$ and $0\leq \sum _{j=1}^N p_{i,j,k} < 1$. The coefficients $0 \leq \beta _k^i <1$ depend on the level of x and on the component i. For such general systems, our result says that the system (1.4) has a unique solution if and only if

$$ \begin{align*} \begin{array}{l} \displaystyle \sum_{k=1}^\infty \prod_{l=1}^k \frac{\beta_l^i}{1- \beta_l^i} < + \infty, \qquad \\[10pt] \displaystyle \sum_{k=2}^{\infty}\sum_{j=1}^{k-1}\big(\!\!\prod_{l=j+1}^k \frac{\beta_l^i}{(1-\beta_l^i)}\big)\frac{\sum_{n=1}^N p_{i,n,j} }{(1- \sum_{n=1}^N p_{i,n,j} )}<+\infty, \\[10pt] \displaystyle \sum_{k=1}^\infty \sum_{j=1}^N p_{i,j,k} < + \infty, \end{array} \end{align*} $$

hold for every

![]() $i=1,\dots ,N$

and every k.

$i=1,\dots ,N$

and every k.

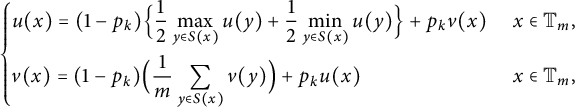

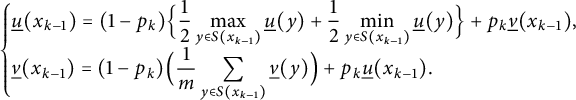

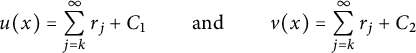

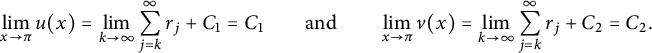

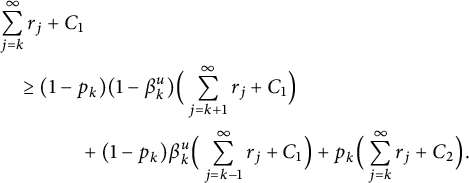

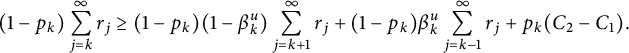

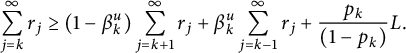

To simplify the presentation, we first prove the main result, Theorem 1.1, in the special case in which

![]() $\beta _k^u = \beta _k^v \equiv 0$

,

$\beta _k^u = \beta _k^v \equiv 0$

,

![]() $p_k=q_k$

, for all k, and the averaging operators are given by

$p_k=q_k$

, for all k, and the averaging operators are given by

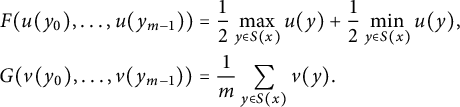

$$ \begin{align} \begin{array}{l} \displaystyle F (u(y_0),\dots,u(y_{m-1})) = \displaystyle\frac12\max_{y\in S(x)}u(y)+\frac12\min_{y\in S(x)}u(y), \\[10pt] \displaystyle G (v(y_0),\dots,v(y_{m-1})) = \frac{1}{m}\sum_{y\in S(x)}v(y). \end{array} \end{align} $$


The fact that

![]() $\beta _k^u = \beta _k^v \equiv 0$

simplifies the computations and allows us to find an explicit solution for the special case in which the boundary data f and g are two constants,

$\beta _k^u = \beta _k^v \equiv 0$

simplifies the computations and allows us to find an explicit solution for the special case in which the boundary data f and g are two constants,

![]() $f\equiv C_1$

and

$f\equiv C_1$

and

![]() $g \equiv C_2$

. The choice of the averaging operators in (1.5) has no special relevance but allows us to give a game theoretical interpretation of our equations (see below).

$g \equiv C_2$

. The choice of the averaging operators in (1.5) has no special relevance but allows us to give a game theoretical interpretation of our equations (see below).

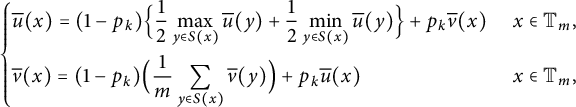

After dealing with this simpler case, we deal with the general case and prove Theorem 1.1 in full generality. Here, we have general averaging operators, F and G,

![]() $\beta _k^u$

and

$\beta _k^u$

and

![]() $\beta _k^v$

can be different from zero (and are allowed to vary depending on the level of the point x) and

$\beta _k^v$

can be different from zero (and are allowed to vary depending on the level of the point x) and

![]() $p_k$

and

$p_k$

and

![]() $q_k$

need not be equal. This case is more involved and now the solution with constant boundary data

$q_k$

need not be equal. This case is more involved and now the solution with constant boundary data

![]() $f\equiv C_1$

and

$f\equiv C_1$

and

![]() $g \equiv C_2$

is not explicit (but in this case, under our conditions for existence, we will construct explicit sub and super solutions that take the boundary values).

$g \equiv C_2$

is not explicit (but in this case, under our conditions for existence, we will construct explicit sub and super solutions that take the boundary values).

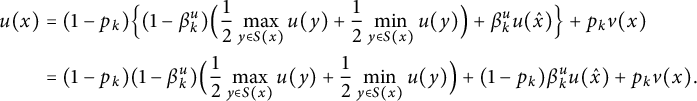

Our system (1.1) with F and G given by (1.5) reads as

$$ \begin{align} \left\lbrace \begin{array}{@{}l} \displaystyle u(x)=(1-p_k)\Big\{ \displaystyle (1-\beta_k^u ) \Big(\frac12\max_{y\in S(x)}u(y)+\frac12\min_{y\in S(x)}u(y)\Big) + \beta_k^u u (\hat{x}) \Big\}+p_k v(x), \\[10pt] \displaystyle v(x)=(1-q_k)\Big\{ (1-\beta_k^v )\Big( \frac{1}{m}\sum_{y\in S(x)}v(y) \Big) + \beta_k^v v (\hat{x}) \Big\}+q_k u(x), \ \end{array} \right.\nonumber\\ \end{align} $$


for $x \in \mathbb {T}_m$. This system has a probabilistic interpretation that we briefly describe (see Section 4 for more details). First, assume that $\beta _k^u=\beta _k^v\equiv 0$. The game is a two-player zero-sum game played on two boards (each board is a copy of the m-regular tree) with the following rules: the game starts at some node in one of the two trees, $(x_0,i)$ with $x_0\in \mathbb {T}$ and $i=1,2$ (we add an index to denote the board on which the position of the game lies). If $x_0$ is in the first board, then with probability $p_k$, the position jumps to the other board, and with probability $(1-p_k),$ the two players play a round of a Tug-of-War game (a fair coin is tossed and the winner chooses the next position of the game at any point among the successors of $x_0$; we refer to [4, 13, 18, 19] for more details concerning Tug-of-War games); in the second board, with probability $q_k$, the position changes to the first board, and with probability $(1-q_k),$ the position goes to one of the successors of $x_0$ with uniform probability. We take a finite level L and we end the game when the position arrives at a node at that level, which we call $x_\tau $. We also fix two final payoffs f and g. This means that in the first board Player I pays to Player II the amount encoded by $f(\psi (x_\tau ))$, while in the second board the final payoff is given by $g(\psi (x_\tau ))$. Then the value function for this game is defined as

Here, the $\inf $ and $\sup $ are taken over all possible strategies of the players (a strategy is the choice that a player makes, at every node, of the next position in the event that a round is played (probability $(1-p_k)$) and that player wins the coin toss (probability $1/2$)). The final payoff is given by f or g according to $i_\tau =1$ or $i_\tau =2$ (the final position of the game is in the first or in the second board).

When $\beta _k^u$ and/or $\beta _k^v$ are not zero, we add, at each turn of the game, a probability of passing to the predecessor of the node.

The pair of functions $(u_L,v_L)$ given by $u_L(x) = w_L (x,1)$ and $v_L (x) = w_L (x,2)$ is a solution to the system (1.6) in the finite subgraph of the tree composed of the nodes of level less than L. Now, we can take the limit as $L \to \infty $ in the value functions for this game, and we obtain that the limit is the unique solution to our system (1.6) that verifies the boundary conditions (1.2) in the infinite tree (see Section 4).
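The finite-level value functions can be computed by backward induction from level L to the root. The following is a minimal sketch under stated assumptions, not the construction used in the paper: it takes $\beta _k^u=\beta _k^v\equiv 0$, assumes that at a node of level k the equations read $u=(1-p_k)A+p_k v$ and $v=(1-q_k)B+q_k u$ (with A the Tug-of-War average and B the uniform average over the successors), and evaluates the terminal payoffs at the left endpoint $j/m^L$ of the interval attached to the j-th node of level L. The function name `solve_finite_tree` and the list-based indexing of nodes are hypothetical.

```python
def solve_finite_tree(m, L, p, q, f, g):
    """Backward induction for (u_L, v_L) at the root of the m-ary tree.

    Node j of level k has successors m*j, ..., m*j + m - 1 at level k + 1.
    p[k], q[k] are the jump probabilities at level k (need p[k] * q[k] < 1).
    Assumption: the payoff at node j of level L is f (or g) at j / m**L.
    """
    n = m ** L
    u = [f(j / n) for j in range(n)]  # first board, terminal level
    v = [g(j / n) for j in range(n)]  # second board, terminal level
    for k in range(L - 1, -1, -1):
        new_u, new_v = [], []
        for j in range(m ** k):
            kids_u = u[m * j: m * j + m]
            kids_v = v[m * j: m * j + m]
            A = 0.5 * max(kids_u) + 0.5 * min(kids_u)  # Tug-of-War average
            B = sum(kids_v) / m                        # uniform average
            # Solve the 2x2 linear system u = (1-p)A + p v, v = (1-q)B + q u.
            det = 1.0 - p[k] * q[k]
            new_u.append(((1 - p[k]) * A + p[k] * (1 - q[k]) * B) / det)
            new_v.append(((1 - q[k]) * B + q[k] * (1 - p[k]) * A) / det)
        u, v = new_u, new_v
    return u[0], v[0]
```

Since each update is a convex combination of A and B, constant payoffs $f=g\equiv c$ return the value c at the root, which gives a quick sanity check of the sketch.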

Organization of the paper: In the next section, Section 2, we deal with our system in the special case of the directed tree,

![]() $\beta _k^u = \beta _k^v \equiv 0$

with

$\beta _k^u = \beta _k^v \equiv 0$

with

![]() $p_k=q_k$

, and F and G given by (1.5); in Section 3, we deal with the general case of two averaging operators F and G and with general

$p_k=q_k$

, and F and G given by (1.5); in Section 3, we deal with the general case of two averaging operators F and G and with general

![]() $\beta _k^u$

,

$\beta _k^u$

,

![]() $\beta _k^v$

; finally, in Section 4, we include the game theoretical interpretation of our results.

$\beta _k^v$

; finally, in Section 4, we include the game theoretical interpretation of our results.

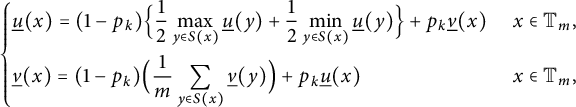

2 A particular system on the directed tree

Our main goal in this section is to find necessary and sufficient conditions on the sequence of coefficients $\{p_k\}$ in order to have a solution to the system

$$ \begin{align} \left\lbrace \begin{array}{@{}ll} \displaystyle u(x)=(1-p_k)\Big\{ \displaystyle\frac12\max_{y\in S(x)}u(y)+\frac12\min_{y\in S(x)}u(y) \Big\}+p_k v(x) \qquad & \ x\in\mathbb{T}_m , \\[10pt] \displaystyle v(x)=(1-p_k)\Big(\frac{1}{m}\sum_{y\in S(x)}v(y) \Big)+p_k u(x) \qquad & \ x \in\mathbb{T}_m , \end{array} \right. \tag{2.1} \end{align} $$

with

$$ \begin{align} \left\lbrace \begin{array}{@{}ll} \displaystyle \lim_{{x}\rightarrow z=\psi(\pi)}u(x) = f(z) , \\[10pt] \displaystyle \lim_{{x}\rightarrow z=\psi(\pi)}v(x)=g(z). \end{array} \right. \tag{2.2} \end{align} $$

Here, $f,g:[0,1]\rightarrow \mathbb {R}$ are continuous functions.

First, let us prove a lemma where we obtain a solution to our system when the functions f and g are just two constants.

Lemma 2.1 Given

![]() $C_1, C_2 \in \mathbb {R}$

, suppose that

$C_1, C_2 \in \mathbb {R}$

, suppose that

$$ \begin{align*}\sum_{k=0}^{\infty}p_k <+\infty,\end{align*} $$

$$ \begin{align*}\sum_{k=0}^{\infty}p_k <+\infty,\end{align*} $$

then there exists

![]() $(u,v)$

a solution of (2.1) and (2.2) with

$(u,v)$

a solution of (2.1) and (2.2) with

![]() $f\equiv C_1$

and

$f\equiv C_1$

and

![]() $g\equiv C_2.$

$g\equiv C_2.$

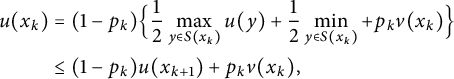

Proof The solution that we are going to obtain will be constant at each level. That is,

(here

![]() $x_k$

is any vertex at level k) for all

$x_k$

is any vertex at level k) for all

![]() $k\geq 0$

. With this simplification, the system (2.1) can be expressed as

$k\geq 0$

. With this simplification, the system (2.1) can be expressed as

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle a_k=(1-p_k)a_{k+1}+p_k b_k, \\[5pt] \displaystyle b_k=(1-p_k)b_{k+1}+p_k a_k, \end{array} \right. \end{align*} $$

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle a_k=(1-p_k)a_{k+1}+p_k b_k, \\[5pt] \displaystyle b_k=(1-p_k)b_{k+1}+p_k a_k, \end{array} \right. \end{align*} $$

for each

![]() $k\geq 0$

. Then, we obtain the following system of linear equations:

$k\geq 0$

. Then, we obtain the following system of linear equations:

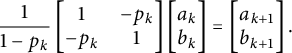

$$ \begin{align*}\frac{1}{1-p_k}\begin{bmatrix} 1 & -p_k \\ -p_k & 1 \end{bmatrix}\begin{bmatrix} a_k \\ b_k \end{bmatrix}=\begin{bmatrix} a_{k+1} \\ b_{k+1} \end{bmatrix}. \end{align*} $$

$$ \begin{align*}\frac{1}{1-p_k}\begin{bmatrix} 1 & -p_k \\ -p_k & 1 \end{bmatrix}\begin{bmatrix} a_k \\ b_k \end{bmatrix}=\begin{bmatrix} a_{k+1} \\ b_{k+1} \end{bmatrix}. \end{align*} $$

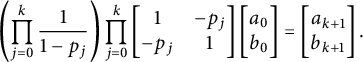

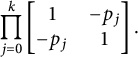

Iterating, we obtain

$$ \begin{align} \left( \prod_{j=0}^{k}\frac{1}{1-p_j} \right) \prod_{j=0}^{k}\begin{bmatrix} 1 & -p_j \\ -p_j & 1 \end{bmatrix}\begin{bmatrix} a_0 \\ b_0 \end{bmatrix}=\begin{bmatrix} a_{k+1} \\ b_{k+1} \end{bmatrix}. \end{align} $$

$$ \begin{align} \left( \prod_{j=0}^{k}\frac{1}{1-p_j} \right) \prod_{j=0}^{k}\begin{bmatrix} 1 & -p_j \\ -p_j & 1 \end{bmatrix}\begin{bmatrix} a_0 \\ b_0 \end{bmatrix}=\begin{bmatrix} a_{k+1} \\ b_{k+1} \end{bmatrix}. \end{align} $$

Hence, our next goal is to analyze the convergence of the involved products as

![]() $k\to \infty $

. First, we deal with

$k\to \infty $

. First, we deal with

$$ \begin{align*}\prod_{j=0}^{k}\frac{1}{1-p_j}. \end{align*} $$

$$ \begin{align*}\prod_{j=0}^{k}\frac{1}{1-p_j}. \end{align*} $$

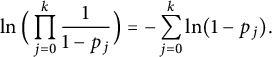

Taking the logarithm, we get

$$ \begin{align*}\ln\Big(\prod_{j=0}^{k}\frac{1}{1-p_j}\Big)=-\sum_{j=0}^{k}\ln(1-p_j). \end{align*} $$

$$ \begin{align*}\ln\Big(\prod_{j=0}^{k}\frac{1}{1-p_j}\Big)=-\sum_{j=0}^{k}\ln(1-p_j). \end{align*} $$

Now, using that

![]() $\lim _{x\rightarrow 0}\frac {-\ln (1-x)}{x}=1,$

as we have

$\lim _{x\rightarrow 0}\frac {-\ln (1-x)}{x}=1,$

as we have

![]() $\sum _{j=0}^{\infty }p_j<\infty $

by hypothesis, we can deduce that the previous series converges,

$\sum _{j=0}^{\infty }p_j<\infty $

by hypothesis, we can deduce that the previous series converges,

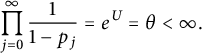

$$ \begin{align*}-\sum_{j=0}^{\infty}\ln(1-p_j)=U<\infty.\end{align*} $$

$$ \begin{align*}-\sum_{j=0}^{\infty}\ln(1-p_j)=U<\infty.\end{align*} $$

Therefore, we have that the product also converges

$$ \begin{align*}\prod_{j=0}^{\infty}\frac{1}{1-p_j}=e^{U}=\theta<\infty. \end{align*} $$

$$ \begin{align*}\prod_{j=0}^{\infty}\frac{1}{1-p_j}=e^{U}=\theta<\infty. \end{align*} $$

Remark that

![]() $U>0$

and hence

$U>0$

and hence

![]() $1<\theta <\infty $

.

$1<\theta <\infty $

.
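The role of the summability hypothesis can be seen numerically. The sketch below is only an illustration: the two sequences $p_j=1/(j+2)^2$ (summable) and $p_j=1/(j+2)$ (not summable) are our own choices, not taken from the text, and the helper name `partial_product` is hypothetical. Both partial products telescope, so they can be compared with exact values: $2(K+2)/(K+3)$, which tends to 2, in the first case, and $K+2$, which diverges, in the second.

```python
def partial_product(p, K):
    """Return prod_{j=0}^{K} 1 / (1 - p(j)); requires p(j) < 1 for all j."""
    prod = 1.0
    for j in range(K + 1):
        prod *= 1.0 / (1.0 - p(j))
    return prod


def summable(j):
    # Illustrative choice with sum_j p_j < infinity.
    return 1.0 / (j + 2) ** 2


def harmonic(j):
    # Illustrative choice with sum_j p_j = infinity.
    return 1.0 / (j + 2)


if __name__ == "__main__":
    for K in (10, 100, 1000):
        print(K, partial_product(summable, K), partial_product(harmonic, K))
```

In the summable case, the partial products stay bounded and increase to the limit $\theta$ (here $\theta =2$), while in the non-summable case they grow without bound.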

Next, let us deal with the matrices and study the convergence of

$$ \begin{align*}\prod_{j=0}^{k}\begin{bmatrix} 1 & -p_j \\ -p_j & 1 \end{bmatrix}. \end{align*} $$

Given $j\geq 0$, let us find the eigenvalues of

$$ \begin{align*}M_j=\begin{bmatrix} 1 & -p_j \\ -p_j & 1 \end{bmatrix}. \end{align*} $$

It is easy to verify that the eigenvalues are

![]() $\{1+p_j,1-p_j\}$

, and the associated eigenvectors are

$\{1+p_j,1-p_j\}$

, and the associated eigenvectors are

![]() $[1 \ 1]$

and

$[1 \ 1]$

and

![]() $[-1 \ 1]$

, respectively. Is important to remark that these vectors are independent of

$[-1 \ 1]$

, respectively. Is important to remark that these vectors are independent of

![]() $p_j$

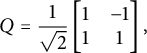

. Then, we introduce the orthogonal matrix (

$p_j$

. Then, we introduce the orthogonal matrix (

![]() $Q^{-1}=Q^T$

)

$Q^{-1}=Q^T$

)

$$ \begin{align*}Q=\frac{1}{\sqrt{2}}\begin{bmatrix} 1 & -1 \\ 1 & 1 \end{bmatrix}, \end{align*} $$

$$ \begin{align*}Q=\frac{1}{\sqrt{2}}\begin{bmatrix} 1 & -1 \\ 1 & 1 \end{bmatrix}, \end{align*} $$

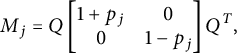

and we have diagonalized

![]() $M_j$

,

$M_j$

,

$$ \begin{align*}M_j=Q\begin{bmatrix} 1+p_j & 0 \\ 0 & 1-p_j \end{bmatrix}Q^T, \end{align*} $$

$$ \begin{align*}M_j=Q\begin{bmatrix} 1+p_j & 0 \\ 0 & 1-p_j \end{bmatrix}Q^T, \end{align*} $$

for all

![]() $j\geq 0$

. Then

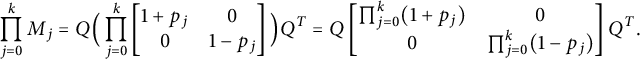

$j\geq 0$

. Then

$$ \begin{align*}\prod_{j=0}^{k}M_j=Q\Big(\prod_{j=0}^{k}\begin{bmatrix} 1+p_j & 0 \\ 0 & 1-p_j \end{bmatrix}\Big)Q^T=Q\begin{bmatrix} \prod_{j=0}^{k}(1+p_j) & 0 \\ 0 & \prod_{j=0}^{k}(1-p_j) \end{bmatrix}Q^T. \end{align*} $$

$$ \begin{align*}\prod_{j=0}^{k}M_j=Q\Big(\prod_{j=0}^{k}\begin{bmatrix} 1+p_j & 0 \\ 0 & 1-p_j \end{bmatrix}\Big)Q^T=Q\begin{bmatrix} \prod_{j=0}^{k}(1+p_j) & 0 \\ 0 & \prod_{j=0}^{k}(1-p_j) \end{bmatrix}Q^T. \end{align*} $$

Using similar arguments as before, we obtain that

$$ \begin{align*}\prod_{j=0}^{\infty}(1+p_j)=\alpha<\infty \qquad \mbox{ and } \qquad \prod_{j=0}^{\infty}(1-p_j)=\frac{1}{\theta}=\beta.\end{align*} $$

$$ \begin{align*}\prod_{j=0}^{\infty}(1+p_j)=\alpha<\infty \qquad \mbox{ and } \qquad \prod_{j=0}^{\infty}(1-p_j)=\frac{1}{\theta}=\beta.\end{align*} $$

Notice that

![]() $0<\beta <1$

and

$0<\beta <1$

and

![]() $1<\alpha <\infty $

. Therefore, taking the limit as

$1<\alpha <\infty $

. Therefore, taking the limit as

![]() $k\rightarrow \infty $

in (2.3), we obtain

$k\rightarrow \infty $

in (2.3), we obtain

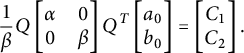

$$ \begin{align*}\frac{1}{\beta}Q\begin{bmatrix} \alpha & 0 \\ 0 & \beta \end{bmatrix}Q^T\begin{bmatrix} a_0 \\ b_0 \end{bmatrix}=\begin{bmatrix} C_1 \\ C_2 \end{bmatrix}. \end{align*} $$

$$ \begin{align*}\frac{1}{\beta}Q\begin{bmatrix} \alpha & 0 \\ 0 & \beta \end{bmatrix}Q^T\begin{bmatrix} a_0 \\ b_0 \end{bmatrix}=\begin{bmatrix} C_1 \\ C_2 \end{bmatrix}. \end{align*} $$

Then, given two constants $C_1,C_2$, this linear system has a unique solution $[a_0 \ b_0]$ (because the involved matrices are nonsingular). Once we have the value of $[a_0 \ b_0]$, we can obtain the values $[a_k \ b_k]$ at all levels using (2.3). The limits (2.2) are satisfied by this construction.
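The construction in this proof can be checked numerically on a truncated tree. The sketch below is an illustration under stated assumptions rather than part of the argument: it truncates at level K (so that $a_K=C_1$ and $b_K=C_2$ hold exactly instead of in the limit), and it uses two elementary consequences of the level recursion, obtained by adding and subtracting its two equations: the sum $a_k+b_k$ is the same at every level, and the difference satisfies $a_{k+1}-b_{k+1}=\frac{1+p_k}{1-p_k}(a_k-b_k)$. The function name `level_constants` and the sample sequence in the usage note are hypothetical.

```python
def level_constants(p, c1, c2):
    """Return lists (a, b) with a[k], b[k] for k = 0, ..., K, where K = len(p).

    The values satisfy a[k] = (1 - p[k]) a[k+1] + p[k] b[k] and
    b[k] = (1 - p[k]) b[k+1] + p[k] a[k], with a[K] = c1 and b[K] = c2.
    Requires 0 <= p[k] < 1.
    """
    # Total growth of the difference a_k - b_k from level 0 to level K.
    growth = 1.0
    for pk in p:
        growth *= (1.0 + pk) / (1.0 - pk)
    s = c1 + c2                  # a_k + b_k is the same at every level
    d0 = (c1 - c2) / growth      # a_0 - b_0
    a = [(s + d0) / 2.0]
    b = [(s - d0) / 2.0]
    # Forward recursion from the 2x2 linear system of the proof.
    for pk in p:
        ak, bk = a[-1], b[-1]
        a.append((ak - pk * bk) / (1.0 - pk))
        b.append((bk - pk * ak) / (1.0 - pk))
    return a, b
```

For instance, with `p = [1.0 / (k + 2) ** 2 for k in range(200)]`, the call `level_constants(p, 1.0, -2.0)` returns level values whose last entries are $C_1=1$ and $C_2=-2$ up to rounding.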

Now, we need to introduce the following definition.

Definition 2.1 Given $f,g: [0,1] \to \mathbb {R}$ and a sequence $(p_k)_{k\geq 0}$,

• The pair $(z,w)$ is a subsolution of (2.1) and (2.2) if

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle z(x)\leq (1-p_k)\Big\{ \displaystyle \frac{1}{2}\max_{y\in S(x)}z(y)+\frac{1}{2}\min_{y\in S(x)}z(y) \Big\}+p_k w(x), & x\in\mathbb{T}_m , \\[10pt] \displaystyle w(x)\leq (1-p_k)\Big(\frac{1}{m}\sum_{y\in S(x)}w(y) \Big)+p_k z(x), & x \in\mathbb{T}_m , \end{array} \right. \end{align*} $$

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle \limsup_{{x}\rightarrow \psi(\pi)}z(x) \leq f(\psi(\pi)) , \\[10pt] \displaystyle \limsup_{{x}\rightarrow \psi(\pi)}w(x)\leq g(\psi(\pi)). \end{array} \right. \end{align*} $$

• The pair $(z,w)$ is a supersolution of (2.1) and (2.2) if

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle z(x)\geq (1-p_k)\Big\{ \displaystyle\frac{1}{2}\max_{y\in S(x)}z(y)+\frac{1}{2}\min_{y\in S(x)}z(y) \Big\}+p_k w(x), & x\in\mathbb{T}_m , \\[10pt] \displaystyle w(x)\geq (1-p_k)\Big(\frac{1}{m}\sum_{y\in S(x)}w(y) \Big)+p_k z(x), & x \in\mathbb{T}_m , \end{array} \right. \end{align*} $$

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle \liminf_{{x}\rightarrow \psi(\pi)}z(x) \geq f(\psi(\pi)) , \\[10pt] \displaystyle \liminf_{{x}\rightarrow \psi(\pi)}w(x)\geq g(\psi(\pi)). \end{array} \right. \end{align*} $$

With these definitions, we are ready to prove a comparison principle.

Lemma 2.2 (Comparison Principle)

Suppose that $(u_{\ast },v_{\ast })$ is a subsolution of (2.1) and (2.2), and $(u^{\ast },v^{\ast })$ is a supersolution of (2.1) and (2.2). Then it holds that

$$ \begin{align*} u_{\ast}(x)\leq u^{\ast}(x) \qquad \mbox{ and } \qquad v_{\ast}(x)\leq v^{\ast}(x) \qquad \mbox{ for all } x\in\mathbb{T}_m. \end{align*} $$

Proof Suppose, arguing by contradiction, that

Let

Claim # 1 If $x\in Q$, there exists $y\in S(x)$ such that $y\in Q$.

Proof of the claim

Suppose that

then

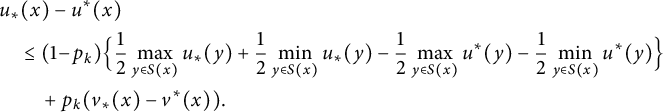

$$ \begin{align*} & u_{\ast}(x)-u^{\ast}(x) \\& \quad \leq \displaystyle (1\! - \! p_k)\Big\{ \frac{1}{2}\max_{y\in S(x)}u_{\ast}(y)+\frac{1}{2}\min_{y\in S(x)}u_{\ast}(y)- \frac{1}{2}\max_{y\in S(x)}u^{\ast}(y)-\frac{1}{2}\min_{y\in S(x)}u^{\ast}(y) \Big\}\\& \qquad + p_k (v_{\ast}(x)-v^{\ast}(x)). \end{align*} $$

$$ \begin{align*} & u_{\ast}(x)-u^{\ast}(x) \\& \quad \leq \displaystyle (1\! - \! p_k)\Big\{ \frac{1}{2}\max_{y\in S(x)}u_{\ast}(y)+\frac{1}{2}\min_{y\in S(x)}u_{\ast}(y)- \frac{1}{2}\max_{y\in S(x)}u^{\ast}(y)-\frac{1}{2}\min_{y\in S(x)}u^{\ast}(y) \Big\}\\& \qquad + p_k (v_{\ast}(x)-v^{\ast}(x)). \end{align*} $$

Using (2.4) in the last term, we obtain

$$ \begin{align*} \displaystyle &(1-p_k)\big(u_{\ast}(x)-u^{\ast}(x)\big) \\\displaystyle & \quad \leq \displaystyle (1-p_k)\Big\{ \frac{1}{2}\max_{y\in S(x)}u_{\ast}(y)+\frac{1}{2}\min_{y\in S(x)}u_{\ast}(y)- \frac{1}{2}\max_{y\in S(x)}u^{\ast}(y)-\frac{1}{2}\min_{y\in S(x)}u^{\ast}(y) \Big\}. \end{align*} $$

Since $(1-p_k)$ is different from zero, using again (2.4), we arrive at

$$ \begin{align*} &\displaystyle \eta\leq u_{\ast}(x)-u^{\ast}(x)\leq \displaystyle \Big(\frac{1}{2}\max_{y\in S(x)}u_{\ast}(y)- \frac{1}{2}\max_{y\in S(x)}u^{\ast}(y)\Big) \\&\quad \displaystyle +\Big(\frac{1}{2}\min_{y\in S(x)}u_{\ast}(y)-\frac{1}{2}\min_{y\in S(x)}u^{\ast}(y)\Big). \end{align*} $$

Let $y_1\in S(x)$ be such that $u_{\ast }(y_1)=\max _{y\in S(x)}u_{\ast }(y)$; it is clear that $u^{\ast }(y_1)\leq \max _{y\in S(x)}u^{\ast }(y)$. On the other hand, let $y_2\in S(x)$ be such that $u^{\ast }(y_2)=\min _{y\in S(x)}u^{\ast }(y)$; now, we have $u_{\ast }(y_2)\geq \min _{y\in S(x)}u_{\ast }(y)$. Hence

This implies that exists

![]() $y\in S(x)$

such that

$y\in S(x)$

such that

![]() $u_{\ast }(y)-u^{\ast }(y)\geq \eta $

. Thus

$u_{\ast }(y)-u^{\ast }(y)\geq \eta $

. Thus

![]() $y\in Q$

.

$y\in Q$

.

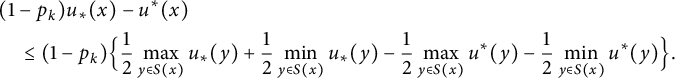

Now suppose the other case, that is,

Now, we use the second equation. We have

$$ \begin{align*} v_{\ast}(x)-v^{\ast}(x)\leq (1-p_k) \Big(\frac{1}{m}\sum_{y\in S(x)}(v_{\ast}(y)-v^{\ast}(y)) \Big) +p_k (u_{\ast}(x)-u^{\ast}(x)). \end{align*} $$

Using (2.5) again, we obtain

$$ \begin{align*} \eta\leq v_{\ast}(x)-v^{\ast}(x)\leq \frac{1}{m}\sum_{y\in S(x)}(v_{\ast}(y)-v^{\ast}(y)). \end{align*} $$

Hence, there exists

![]() $y\in S(x)$

such that

$y\in S(x)$

such that

![]() $v_{\ast }(y)-v^{\ast }(y)\geq \eta $

. Thus

$v_{\ast }(y)-v^{\ast }(y)\geq \eta $

. Thus

![]() $y\in Q$

. This ends the proof of the claim.

$y\in Q$

. This ends the proof of the claim.

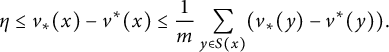

Now, given $y_0\in Q,$ we have a sequence $(y_k)_{k\geq 1}$ included in a branch, $y_{k+1} \in S(y_k)$, with $(y_k)_{k\geq 1}\subset Q$. Hence, we have

Then, there exists a subsequence $(y_{k_j})_{j\geq 1}$ such that

Let us call

![]() $\lim _{k\rightarrow \infty }y_k =\pi $

the branch to which the

$\lim _{k\rightarrow \infty }y_k =\pi $

the branch to which the

![]() $y_k$

belong. Suppose that the first case in (2.6) holds, then

$y_k$

belong. Suppose that the first case in (2.6) holds, then

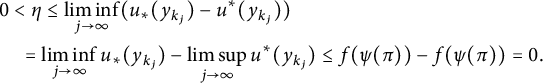

Thus, we have

$$ \begin{align*} &\displaystyle 0<\eta\leq \liminf_{j\rightarrow\infty}(u_{\ast}(y_{k_j})-u^{\ast}(y_{k_j}))\\& \quad \displaystyle \leq\limsup_{j\rightarrow\infty}u_{\ast}(y_{k_j})-\liminf_{j\rightarrow\infty}u^{\ast}(y_{k_j})\leq f(\psi(\pi))-f(\psi(\pi))=0. \end{align*} $$

Thus, we arrive at a contradiction. The other case is similar. This ends the proof.

To obtain the existence of a solution to our system in the general case (f and g continuous functions), we will use Perron’s method. Hence, let us introduce the following set:

First, we observe that $\mathscr {A}$ is not empty when f and g are bounded below.

Lemma 2.3 Given $f,g\in C([0,1])$, the set $\mathscr {A}$ verifies $\mathscr {A}\neq \emptyset $.

Proof Taking $z(x_k)=w(x_k)=\min \{\min f,\min g \}$ for all $k\geq 0$, this pair is such that $(z,w)\in \mathscr {A}$.

Now, we prove that these functions are uniformly bounded.

Lemma 2.4 Let $M=\max \{\max f, \max g \}$. If $(z,w)\in \mathscr {A}$, then

$$ \begin{align*} z(x)\leq M \qquad \mbox{ and } \qquad w(x)\leq M \qquad \mbox{ for all } x\in\mathbb{T}_m. \end{align*} $$

Proof Suppose that the statement is false. Then there exists a vertex $x_0\in \mathbb {T}_m$ (at some level k) such that $z(x_0)>M$ or $w(x_0)>M$. Suppose, in the first case, that $z(x_0)\geq w(x_0)$. Then $z(x_0)>M$.

Claim # 1 There exists

![]() $y_0\in S(x_0)$

such that

$y_0\in S(x_0)$

such that

![]() $z(y_0)>M$

. Otherwise,

$z(y_0)>M$

. Otherwise,

$$ \begin{align*} \displaystyle z(x_0)&\leq (1-p_k)\Big\{\frac{1}{2}\max_{y\in S(x_0)}z(y)+\frac{1}{2}\min_{y\in S(x_0)}z(y)\Big\}+p_k w(x_0) \\[10pt] \displaystyle &\leq(1-p_k)\Big\{\frac{1}{2}\max_{y\in S(x_0)}z(y)+\frac{1}{2}\min_{y\in S(x_0)}z(y)\Big\}+p_k z(x_0). \end{align*} $$

$$ \begin{align*} \displaystyle z(x_0)&\leq (1-p_k)\Big\{\frac{1}{2}\max_{y\in S(x_0)}z(y)+\frac{1}{2}\min_{y\in S(x_0)}z(y)\Big\}+p_k w(x_0) \\[10pt] \displaystyle &\leq(1-p_k)\Big\{\frac{1}{2}\max_{y\in S(x_0)}z(y)+\frac{1}{2}\min_{y\in S(x_0)}z(y)\Big\}+p_k z(x_0). \end{align*} $$

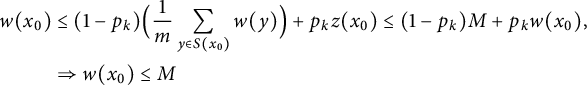

Then

Here, we obtain the contradiction

Suppose the other case: $w(x_0)\ge z(x_0)$; then $w(x_0)>M$.

Claim # 2 There exists $y_0\in S(x_0)$ such that $w(y_0)>M$. Otherwise,

$$ \begin{align*} \displaystyle w(x_0)&\leq (1-p_k)\Big(\frac{1}{m}\sum_{y\in S(x_0)}w(y)\Big)+p_k z(x_0)\le (1-p_k)M+p_kw(x_0),\\ \displaystyle &\Rightarrow w(x_0)\le M \end{align*} $$

which is again a contradiction.

With these two claims, we can ensure that there exists an (infinite) sequence $x=(x_0,x_0^1, x_0^2, \dots )$ that belongs to a branch such that $z(x_0^j)>M$ (or $w(x_0^j)>M$). Then, taking the limit along this branch, we obtain

and we arrive at a contradiction.

Now, let us define

The next result shows that this pair of functions is in fact the desired solution to the system (2.1).

Theorem 2.5 Suppose that

$$ \begin{align*} \sum_{k=0}^{\infty}p_k <+\infty. \end{align*} $$

The pair $(u,v)$ given by (2.7) is the unique solution to (2.1) and (2.2).

Proof Given $\varepsilon>0$, there exists $\delta>0$ such that $|f(\psi (\pi _1))-f(\psi (\pi _2))|<\varepsilon $ and $|g(\psi (\pi _1))-g(\psi (\pi _2))|<\varepsilon $ if $|\psi (\pi _1) - \psi (\pi _2)|<\delta $. Let $k\in \mathbb {N}$ be such that $\frac {1}{m^k}<\delta $. We divide the interval $[0,1]$ into the $m^k$ subintervals $I_j=[\frac {j-1}{m^k},\frac {j}{m^k}]$ for $1\leq j\leq m^k$. Let us consider the constants

for $1\leq j\leq m^k$.

Now, we observe that, if we consider $\mathbb {T}_m^k=\{x\in \mathbb {T}_m : |x|=k \},$ we have $\# \mathbb {T}_m^k=m^k$, and given $x\in \mathbb {T}_m^k$, any branch that has this vertex at the kth level has a limit that belongs to only one segment $I_j$. Then, we have a one-to-one correspondence between the set $\mathbb {T}_m^k$ and the segments $(I_j)_{j=1}^{m^k}$. Let us call $x^j$ the vertex associated with $I_j$.

Fix now $1\leq j\leq m^k$. If we consider $x^j$ as a first vertex, we obtain a tree whose boundary (via $\psi $) is $I_j$. Using Lemma 2.1 in this tree, we can obtain a solution of (2.1) and (2.2) with the constants $C_f^j$ and $C_g^j$.

Thus, doing this in all the vertices of

![]() $T_m^k$

, we have the value of some functions so called

$T_m^k$

, we have the value of some functions so called

![]() $(\underline {u},\underline {v})$

, in all vertex

$(\underline {u},\underline {v})$

, in all vertex

![]() $x\in \mathbb {T}_m$

with

$x\in \mathbb {T}_m$

with

![]() $|x|\geq k$

. Then, using the equation (2.1), we can obtain the values of the

$|x|\geq k$

. Then, using the equation (2.1), we can obtain the values of the

![]() $(k-1)$

-level. In fact, we have

$(k-1)$

-level. In fact, we have

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle \underline{u}(x_{k-1})=(1-p_k)\Big\{ \frac{1}{2}\max_{y\in S(x_{k-1})}\underline{u}(y)+\frac{1}{2}\min_{y\in S(x_{k-1})}\underline{u}(y) \Big\}+p_k \underline{v}(x_{k-1}), \\ \displaystyle \underline{v}(x_{k-1})=(1-p_k)\Big(\frac{1}{m}\sum_{y\in S(x_{k-1})}\underline{v}(y) \Big)+p_k \underline{u}(x_{k-1}). \end{array} \right. \end{align*} $$

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle \underline{u}(x_{k-1})=(1-p_k)\Big\{ \frac{1}{2}\max_{y\in S(x_{k-1})}\underline{u}(y)+\frac{1}{2}\min_{y\in S(x_{k-1})}\underline{u}(y) \Big\}+p_k \underline{v}(x_{k-1}), \\ \displaystyle \underline{v}(x_{k-1})=(1-p_k)\Big(\frac{1}{m}\sum_{y\in S(x_{k-1})}\underline{v}(y) \Big)+p_k \underline{u}(x_{k-1}). \end{array} \right. \end{align*} $$

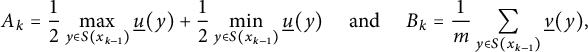

Then, if we call

$$ \begin{align*}A_k=\frac{1}{2}\max_{y\in S(x_{k-1})}\underline{u}(y)+\frac{1}{2}\min_{y\in S(x_{k-1})}\underline{u}(y) \quad \mbox{ and } \quad B_k=\frac{1}{m}\sum_{y\in S(x_{k-1})}\underline{v}(y),\end{align*} $$

we obtain the system

$$ \begin{align*} \frac{1}{1-p_k}\begin{bmatrix} 1 & -p_k \\ -p_k & 1 \end{bmatrix}\begin{bmatrix} \underline{u}(x_{k-1}) \\ \underline{v}(x_{k-1}) \end{bmatrix}=\begin{bmatrix} A_k \\ B_k \end{bmatrix}. \end{align*} $$

Solving this system, we obtain all the values at

![]() $(k-1)$

-level. And so, we continue until obtain values for all the tree

$(k-1)$

-level. And so, we continue until obtain values for all the tree

![]() $\mathbb {T}_m$

. Let us observe that the pair of functions

$\mathbb {T}_m$

. Let us observe that the pair of functions

![]() $(\underline {u},\underline {v})$

verifies

$(\underline {u},\underline {v})$

verifies

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle \underline{u}(x)=(1-p_k)\Big\{ \frac{1}{2}\max_{y\in S(x)}\underline{u}(y)+\frac{1}{2}\min_{y\in S(x)}\underline{u}(y) \Big\}+p_k \underline{v}(x) \qquad & \ x\in\mathbb{T}_m , \\[10pt] \displaystyle \underline{v}(x)=(1-p_k)\Big(\frac{1}{m}\sum_{y\in S(x)}\underline{v}(y) \Big)+p_k \underline{u}(x) \qquad & \ x \in\mathbb{T}_m , \\[10pt] \end{array} \right. \end{align*} $$

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle \underline{u}(x)=(1-p_k)\Big\{ \frac{1}{2}\max_{y\in S(x)}\underline{u}(y)+\frac{1}{2}\min_{y\in S(x)}\underline{u}(y) \Big\}+p_k \underline{v}(x) \qquad & \ x\in\mathbb{T}_m , \\[10pt] \displaystyle \underline{v}(x)=(1-p_k)\Big(\frac{1}{m}\sum_{y\in S(x)}\underline{v}(y) \Big)+p_k \underline{u}(x) \qquad & \ x \in\mathbb{T}_m , \\[10pt] \end{array} \right. \end{align*} $$

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle \lim_{{x}\rightarrow \pi}\underline{u}(x) = C_f^j \quad \mbox{if} \ \pi\in I_j, \\[10pt] \displaystyle \lim_{{x}\rightarrow \pi}\underline{v}(x)=C_g^j \quad \mbox{if} \ \pi\in I_j. \end{array} \right. \end{align*} $$


We can carry out the same construction using the constants $D_f^j$ and $D_g^j$ to obtain the pair of functions $(\overline{u},\overline{v})$ that verifies

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle \overline{u}(x)=(1-p_k)\Big\{ \frac{1}{2}\max_{y\in S(x)}\overline{u}(y)+\frac{1}{2}\min_{y\in S(x)}\overline{u}(y) \Big\}+p_k \overline{v}(x) \qquad & \ x\in\mathbb{T}_m , \\[10pt] \displaystyle \overline{v}(x)=(1-p_k)\Big(\frac{1}{m}\sum_{y\in S(x)}\overline{v}(y) \Big)+p_k \overline{u}(x) \qquad & \ x \in\mathbb{T}_m , \\[10pt] \end{array} \right. \end{align*} $$


and

$$ \begin{align*} \left\lbrace \begin{array}{@{}ll} \displaystyle \lim_{{x}\rightarrow \pi}\overline{u}(x) = D_f^j \quad \mbox{if} \ \pi\in I_j, \\[10pt] \displaystyle \lim_{{x}\rightarrow \pi}\overline{v}(x)=D_g^j \quad \mbox{if} \ \pi\in I_j. \\ \end{array} \right. \end{align*} $$


Now, we observe that the pair $(\underline{u},\underline{v})$ is a subsolution and $(\overline{u},\overline{v})$ is a supersolution. We only need to observe that

$$ \begin{align*} \begin{array}{ll} \displaystyle \lim_{{x}\rightarrow \pi}\underline{u}(x) =\limsup_{{x}\rightarrow \pi}\underline{u}(x)= C_f^j\leq f(\psi(\pi)) \quad \mbox{if} \ \psi(\pi)\in I_j, \\[10pt] \displaystyle \lim_{{x}\rightarrow \pi}\underline{v}(x)=\limsup_{{x}\rightarrow \pi}\underline{v}(x)=C_g^j\leq g(\psi(\pi)) \quad \mbox{if} \ \psi(\pi)\in I_j, \\ \end{array} \end{align*} $$


and

$$ \begin{align*} \begin{array}{ll} \displaystyle \lim_{{x}\rightarrow \pi}\overline{u}(x) =\liminf_{{x}\rightarrow \pi}\overline{u}(x)= D_f^j\geq f(\psi(\pi)) \quad \mbox{if} \ \psi(\pi)\in I_j, \\[10pt] \displaystyle \lim_{{x}\rightarrow \pi}\overline{v}(x)=\liminf_{{x}\rightarrow \pi}\overline{v}(x)=D_g^j\geq g(\psi(\pi)) \quad \mbox{if} \ \psi(\pi)\in I_j. \end{array} \end{align*} $$

Now, let us see that $(u,v)\in \mathscr{A}$. Take $(z,w)\in \mathscr{A}$ and fix $x\in \mathbb{T}_m$, then

As $z\leq u$ and $w\leq v$, we obtain

Taking supremum in the left-hand side, we obtain

Analogously, we obtain the corresponding inequality for v.
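
Written out, and assuming that $\mathscr{A}$ denotes the set of subsolutions of the system and that $u$ and $v$ are the pointwise suprema of the first and second components over $\mathscr{A}$, the chain of inequalities in this step can be sketched as follows, in the notation of the system above with $k$ the level of $x$:

$$ \begin{align*} z(x)&\leq (1-p_k)\Big\{ \frac{1}{2}\max_{y\in S(x)}z(y)+\frac{1}{2}\min_{y\in S(x)}z(y) \Big\}+p_k w(x) \\ &\leq (1-p_k)\Big\{ \frac{1}{2}\max_{y\in S(x)}u(y)+\frac{1}{2}\min_{y\in S(x)}u(y) \Big\}+p_k v(x). \end{align*} $$

The second inequality uses only that the maximum and the minimum are monotone and that $1-p_k$ and $p_k$ are nonnegative. Since the right-hand side does not depend on $(z,w)$, taking the supremum over $(z,w)\in \mathscr{A}$ on the left yields the same bound for $u(x)$.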

On the other hand, using the comparison principle, we have $z\le \overline{u}$, and then we conclude that $u\le \overline{u}$. Thus,

Using that $\varepsilon>0$ is arbitrary, we get

and the same for v. Hence, we conclude that $(u,v)\in \mathscr{A}$.

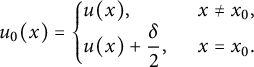

Now, we want to see that $(u,v)$ verifies the equalities in the equations. We argue by contradiction. First, assume that there is a point $x_0\in \mathbb{T}_m$ where an inequality is strict, that is,

Let

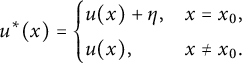

and consider the function

$$\begin{align*}u_{0} (x) =\left\lbrace \begin{array}{@{}ll} u(x), & \ \ x \neq x_{0}, \\ \displaystyle u(x)+\frac{\delta}{2}, & \ \ x =x_{0}. \\ \end{array} \right. \end{align*}$$


Observe that

and hence

Then we have that $(u_{0},v)\in \mathscr{A}$ but $u_{0}(x_{0})>u(x_{0})$, reaching a contradiction. A similar argument shows that $(u,v)$ also solves the second equation in the system.

By construction, $\underline{u}\leq u$ and $\underline{v}\leq v$. On the other hand, given $(z,w)\in \mathscr{A}$, by the comparison principle, we obtain $z\leq \overline{u}$ and $w\leq \overline{v}$; taking suprema over $\mathscr{A}$ gives $u\leq \overline{u}$ and $v\leq \overline{v}$.

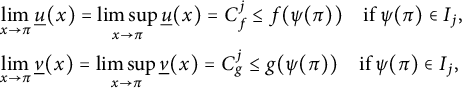

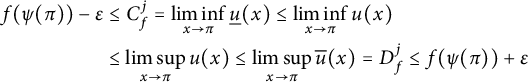

Now observe that for $\psi(\pi)\in I_j$, it holds that $C_f^j\geq f(\psi(\pi))-\varepsilon$ and $D_f^j\leq f(\psi(\pi))+\varepsilon$, and $C_g^j\geq g(\psi(\pi))-\varepsilon$ and $D_g^j\leq g(\psi(\pi))+\varepsilon$. Thus, we have

$$ \begin{align*} \displaystyle f(\psi(\pi))-\varepsilon&\leq C_f^j=\liminf_{{x}\rightarrow \pi}\underline{u}(x)\leq\liminf_{{x}\rightarrow \pi}u(x) \\ \displaystyle &\leq\limsup_{{x}\rightarrow \pi}u(x)\leq\limsup_{{x}\rightarrow \pi}\overline{u}(x)=D_f^j\leq f(\psi(\pi))+\varepsilon \end{align*} $$


and

$$ \begin{align*} \displaystyle g(\psi(\pi))-\varepsilon&\leq C_g^j=\liminf_{{x}\rightarrow \pi}\underline{v}(x)\leq\liminf_{{x}\rightarrow \pi}v(x)\\ \displaystyle &\leq\limsup_{{x}\rightarrow \pi}v(x)\leq\limsup_{{x}\rightarrow \pi}\overline{v}(x)=D_g^j\leq g(\psi(\pi))+\varepsilon. \end{align*} $$


Hence, since $\varepsilon$ is arbitrary, we obtain

and then conclude that $(u,v)$ is a solution to (2.1) and (2.2).

The uniqueness of solutions is a direct consequence of the comparison principle. Suppose that $(u_1,v_1)$ and $(u_2,v_2)$ are two solutions of (2.1) and (2.2). Then, $(u_1,v_1)$ is a subsolution and $(u_2,v_2)$ is a supersolution. From the comparison principle, we get $u_1\leq u_2$ and $v_1\leq v_2$ in $\mathbb{T}_m$. The reverse inequalities are obtained by reversing the roles of $(u_1,v_1)$ and $(u_2,v_2)$.

Next, we show that the condition $\sum_{k=0}^{\infty}p_k<\infty$ is also necessary for the existence of a solution for all continuous boundary data.

Theorem 2.6 Let $(p_k)_{k\geq 0}$ be a sequence of positive numbers such that

$$ \begin{align*}\sum_{k=1}^{\infty}p_k=+\infty.\end{align*} $$


Then, for any two constants $C_1$ and $C_2$ such that $C_1 \neq C_2$, the systems (2.1) and (2.2) with $f\equiv C_1$ and $g\equiv C_2$