Introduction

With increasing evidence for the effectiveness of psychological interventions implemented by non-specialists (Singla et al., 2017; Cuijpers et al., 2018; Barbui et al., 2020), there is a need to demonstrate how task-shifted evidence-based treatments can be scaled up to reduce the gap between the need for and availability of mental health care (i.e. the treatment gap), especially in low- and middle-income countries (LMICs) (Patel et al., 2018). Efforts to reduce the treatment gap typically rely on a non-specialist workforce to increase the availability of care. In addition to the availability of services, the consistency and quality of service provision are essential to achieve the desired outcomes (Jordans and Kohrt, 2020).

An essential feature of quality of care is provider competence, defined as ‘the observable ability of a person, integrating knowledge, skills, and attitudes in their performance of tasks. Competencies are durable, trainable and, through the expression of behaviors, measurable’ (Mills et al., 2020). Especially for non-specialist providers in LMICs, there are no mechanisms in place to systematically monitor whether providers achieve the minimally adequate competency needed to deliver interventions effectively and safely (Kohrt et al., 2020). A recent systematic review of quality assessments in adolescent mental health services concluded that most studies did not have a clear framework for defining or measuring quality (Quinlan-Davidson et al., 2021). To respond to this challenge, the World Health Organization (WHO) has developed the Ensuring Quality in Psychological Support (EQUIP) platform (https://equipcompetency.org/en-gb) to facilitate competency assessment and competency-driven training (CDT) approaches (Kohrt et al., 2020). The platform includes measures that have been developed and evaluated in LMICs, such as the ENhancing Assessment of Common Therapeutic factors (ENACT) in Nepal (Kohrt et al., 2015a) and the Working with children-Assessment of Competencies Tool (WeACT) in Palestine (Jordans et al., 2021).
The platform and its measures can be used by governments and non-governmental organisations to assess whether providers have attained sufficient competencies to provide care, and by trainers and supervisors to tailor training and supervision content to the needs of individual providers or groups of providers so as to address gaps in competencies. The use of common competency assessment tools through a platform such as EQUIP may also lead to greater consistency of training quality across organisations and contexts.

CDT includes assessment of, and feedback on, the skills and competencies of participants before, during and after a training programme. This guides the trainer in tailoring the training (and subsequent supervision) to what is most needed by the participants, both collectively and as individuals. CDT approaches have already demonstrated positive outcomes in mental health, for example in suicide prevention training (La Guardia et al., 2019), and in other areas of health in low-resource settings (cf. Ameh et al., 2016; La Guardia et al., 2019). The aim of this study was to evaluate the outcomes of CDT, compared with training-as-usual (TAU), for improving the quality of facilitators of a psychological treatment for young adolescents with emotional distress. We hypothesised that CDT would outperform TAU in terms of competency levels obtained post-training.

Methods

Context

This proof-of-concept study was conducted in Lebanon. The Syrian crisis has had a profound impact on Lebanon: according to UNHCR Global Trends for 2021, it is the second-largest refugee-hosting country relative to its national population, and it hosts the largest number of refugees from Syria relative to its population (UNHCR, 2021). In an already struggling economy, the war in Syria has added to mounting economic, social, demographic, political and security challenges.

Design

The current analysis is based on the EQUIP study in Lebanon, a controlled before-and-after study (registration no. NCT04704362). Its objective was to evaluate the outcomes of CDT, compared with TAU, in improving the quality of facilitators of a psychological treatment for young adolescents with emotional distress (Early Adolescents Skills for Emotions – EASE) (Dawson et al., 2019). The subject of study was therefore the delivery of training, rather than the psychological intervention itself. Training participants (n = 24) were allocated equally (1 : 1) to the two study arms (CDT and TAU) using simple random sampling. The randomisation sequence was computer-generated by an off-site statistician (GKG). Training and competency assessments were originally designed for in-person implementation. However, because of COVID-19, all training and competency assessments were conducted remotely, and the intervention itself could not be implemented.
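The 1 : 1 allocation can be sketched as drawing exactly half of the participants at random, without replacement, for CDT and assigning the rest to TAU. This is a minimal Python illustration under stated assumptions, not the statistician's actual procedure; the participant IDs and the seed are hypothetical.

```python
import random

def allocate_one_to_one(participant_ids, seed):
    """Draw half of the participants at random (without replacement) for CDT;
    the remainder form the TAU arm. Assumes an even number of participants."""
    if len(participant_ids) % 2 != 0:
        raise ValueError("1:1 allocation here assumes an even number of participants")
    rng = random.Random(seed)  # a fixed seed makes the sequence reproducible
    cdt = set(rng.sample(participant_ids, k=len(participant_ids) // 2))
    tau = [pid for pid in participant_ids if pid not in cdt]
    return sorted(cdt), tau

# Hypothetical IDs P01..P24 and an arbitrary seed.
ids = [f"P{i:02d}" for i in range(1, 25)]
cdt_arm, tau_arm = allocate_one_to_one(ids, seed=2020)
```

Sampling exactly half guarantees two arms of 12, which independent coin flips per participant would not.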

Instruments

Facilitator competencies were assessed with the EQUIP tools, based on observations by a trained competency rater who was selected from a pool of five. Scoring used three standardised competency tools: the WeACT for working with children, the primary target group of EASE; the ENACT for working with adults, because EASE also works with the caregivers of enrolled children; and the GroupACT for working with groups, because EASE is a group intervention. For each tool, scoring was based on: (i) four levels of competency and (ii) attributes, which are distinct observable behaviours that operationalise each of the four levels for each competency item. All of the tools are available through the online EQUIP platform (https://equipcompetency.org/en-gb).

WeACT

The WeACT aims to assess common competencies of service providers working with children and adolescents; it was developed for, and can be applied to, interventions from different sectors (mental health and psychosocial support, child protection and education) (Jordans et al., 2021). The WeACT consists of 13 competency items, each scored on a four-tiered system: level 1 (potential harm), level 2 (absence of some basic competencies), level 3 (all basic competencies) and level 4 (all basic + advanced competencies). We excluded one item from the original WeACT (i.e. collaboration with caregivers and other actors) because of its overlap with the ENACT. A previous study of the WeACT in Palestine demonstrated positive results on reliability and utility (Jordans et al., 2021).
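The four-tiered scoring can be illustrated by mapping the attributes a rater ticks for one item onto a level. This is a hypothetical sketch: the data structure and attribute names are invented, and it assumes that any observed potentially harmful attribute places the item at level 1 regardless of other attributes; the EQUIP platform's own scoring logic may differ.

```python
from dataclasses import dataclass, field

# Level labels as defined for the WeACT (the ENACT and GroupACT share the structure).
LEVEL_LABELS = {
    1: "potential harm",
    2: "absence of some basic competencies",
    3: "all basic competencies",
    4: "all basic + advanced competencies",
}

@dataclass
class ItemObservation:
    """Attributes a rater ticked for one competency item (hypothetical structure)."""
    harmful_observed: bool
    basic_observed: set = field(default_factory=set)
    advanced_observed: set = field(default_factory=set)

def derive_level(obs, basic_required, advanced_required):
    """Map ticked attributes to a level 1-4, ASSUMING that any potentially
    harmful attribute places the item at level 1."""
    if obs.harmful_observed:
        return 1
    if not basic_required <= obs.basic_observed:
        return 2  # at least one basic attribute is missing
    if advanced_required <= obs.advanced_observed:
        return 4  # all basic and all advanced attributes observed
    return 3  # all basic, but not all advanced, attributes observed

# Hypothetical attribute names for a single item.
basic = {"b1", "b2"}
advanced = {"a1"}
level = derive_level(ItemObservation(False, {"b1", "b2"}, set()), basic, advanced)
print(level, LEVEL_LABELS[level])
```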

ENACT

For the assessment of competencies of facilitators delivering psychosocial and mental health care to adults, the ENACT was used (Kohrt et al., 2015a). The version used in this study consists of 15 competency items with the same four levels described above. The ENACT has been extensively tested and used (Kohrt et al., 2015b, 2018).

GroupACT

For the assessment of group facilitation competencies, the GroupACT was used. The GroupACT consists of eight items to assess facilitators' competencies in group-based mental health and psychosocial support interventions (Pedersen et al., 2021). For the purpose of this study, we used a six-item version, which follows the same structure and scoring system as the WeACT and ENACT.

Sample

For this study, 24 participants were selected for the training (CDT or TAU). Participants were recruited based on: (i) prior experience working with children, but no prior specialist mental health experience or education and (ii) age 18 years or above. The group consisted of 15 females (62.5%) and 9 males (37.5%), with a mean age of 26.4 years (s.d. = 6.5) and a mean of 6.0 years (s.d. = 3.7) of work experience. Recruitment was done through advertisement, focusing on Beirut and Mount Lebanon. No power analysis was conducted, as this was a proof-of-concept study.

Study conditions

Participants received either CDT or TAU in the EASE intervention. EASE is a WHO-developed transdiagnostic intervention for children (10–14 years) with severe emotional distress (Dawson et al., 2019). The intervention is designed to be delivered by trained non-specialist facilitators in a group format. EASE comprises seven group sessions for children incorporating evidence-based techniques such as psychoeducation, stress management, behavioural activation and problem solving. In addition, there are three group sessions for caregivers of enrolled children, covering strategies such as psychoeducation, active listening, stress management, praise and caregiver self-care.

Participants in both arms received an 8-day training covering basic counselling skills, delivery of EASE (child and caregiver sessions), group facilitation skills and facilitator self-care. The training was based on the course previously developed for a randomised controlled trial (RCT) evaluating the effectiveness of EASE in Lebanon (Brown et al., 2019). Training content was the same in the two study arms. The difference between the arms was that in the CDT arm the trainer: (i) used the group's pre-training competency assessment scores to inform the delivery and dosage of training content and as feedback to participants and (ii) used in-training competency assessment scores to determine which competencies required additional attention in the remaining time and to provide tailor-made feedback. See Table 1 for the differences between the two trainings.
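How the trainer translated pre- and in-training scores into training emphasis is not specified beyond the description above. As a purely hypothetical illustration (not the procedure used in the study), group scores could be summarised per item to flag where training time might go first. The input format, the item names and the prioritisation rule are all assumptions.

```python
def items_needing_attention(pre_scores, threshold=3):
    """Rank competency items for extra training time (hypothetical rule).

    pre_scores maps participant ID -> {item name: level 1-4}.
    Returns (item, share_below_threshold, any_level_1) tuples, highest priority
    first: items with any potentially harmful (level 1) rating come first, then
    items with the largest share of participants not yet at a competent level.
    """
    items = sorted({item for ratings in pre_scores.values() for item in ratings})
    summary = []
    for item in items:
        levels = [ratings[item] for ratings in pre_scores.values() if item in ratings]
        share_below = sum(lv < threshold for lv in levels) / len(levels)
        any_harm = any(lv == 1 for lv in levels)
        summary.append((item, share_below, any_harm))
    summary.sort(key=lambda row: (not row[2], -row[1], row[0]))
    return summary

# Hypothetical pre-training (T0) ratings; the item names are invented.
pre = {
    "P01": {"empathy": 2, "safety": 1, "rapport": 3},
    "P02": {"empathy": 2, "safety": 3, "rapport": 4},
    "P03": {"empathy": 3, "safety": 3, "rapport": 3},
}
for item, share, harm in items_needing_attention(pre):
    print(f"{item}: {share:.0%} below competent, level 1 seen: {harm}")
```

Putting any level 1 rating first reflects the emphasis on ruling out potentially harmful behaviour; other orderings are equally defensible.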

Table 1. Differences in training between study arms

Due to COVID-19 restrictions, the training was adapted for online delivery using live conference calls. The training in each study arm was provided by a different trainer; both were WHO-trained EASE master-trainers (MG, SC), and one of them (SC) received an additional briefing on how to run CDT.

Procedures

All 24 training participants (CDT or TAU) were assessed for demonstrated competencies with the WeACT, ENACT and GroupACT, using standardised role-plays before (T0) and after (T1) the training (see Table 1). Assessments at T0 and T1 were conducted by a trained rater observing all role-plays (n = 48 in total). Multiple role-play scripts were developed to ensure that all competencies being assessed (for children, groups and adults) were prompted and could be demonstrated by the participants, and to ensure variation between the T0 and T1 role-plays. The use of role-plays for ENACT and WeACT assessments has been extensively tested in Nepal, Uganda, Liberia, Pakistan, Ethiopia, South Africa and Palestine, where the approach was shown to be feasible and acceptable (Kohrt et al., 2015a; Jordans et al., 2021), in part because role-plays are commonly used as a component of training in psychological interventions (Ottman et al., 2020).

In this study, we trained actors from a local theatre group to perform the live role-plays with the trainees. Competency assessments were adapted to an online format, in which participants delivered components of the intervention to the trained actors via a live video conference call that the trained rater joined to rate the role-play. The rater was selected through a competency rater training in which five raters (all with >2 years of experience in facilitating mental health interventions for children) received a 3-day training in rating competencies using the EQUIP platform. The rater whose scores agreed most closely with the intended scores specified for the structured video-taped role-plays was selected for the current study. Raters and actors were kept blind to participants' group membership.
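The text does not specify how agreement (‘compatibility’) with the intended scores was quantified. One simple possibility, assumed here purely for illustration, is the proportion of exact level matches across the structured role-play items; weighted kappa or an intraclass correlation would be common alternatives. Rater names and scores are hypothetical.

```python
def exact_agreement(rater_scores, intended_scores):
    """Proportion of items where the rater's level equals the intended level."""
    if len(rater_scores) != len(intended_scores):
        raise ValueError("score lists must be the same length")
    matches = sum(r == i for r, i in zip(rater_scores, intended_scores))
    return matches / len(intended_scores)

def select_rater(candidates, intended_scores):
    """Return the candidate with the highest exact agreement (ties: first listed)."""
    return max(candidates, key=lambda name: exact_agreement(candidates[name], intended_scores))

# Hypothetical intended levels for five scripted items and three trainee raters.
intended = [3, 2, 4, 1, 3]
raters = {
    "A": [3, 2, 3, 1, 3],
    "B": [3, 2, 4, 1, 2],
    "C": [3, 2, 4, 1, 3],
}
chosen = select_rater(raters, intended)
```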

Analyses

The competency assessment scores were analysed at different levels: (i) all the competency levels combined; (ii) only the harmful levels; (iii) only the competent levels and (iv) only the potentially harmful attributes, as well as only the helpful attributes.

For analysis (i), the scores 1 to 4 were summed across all competency items into a total score for each tool (WeACT, ENACT, GroupACT), and the mean total scores were compared between TAU and CDT (between groups) and between T0 and T1 (within groups). For analysis (ii), we did the same for the total count of scores of 1 (harmful level). Likewise, for analysis (iii), we used the total count of scores of 3 (all basic competencies) and 4 (all basic + advanced competencies) combined. Finally, we examined the scores at the attribute level (iv) by dividing them into potentially harmful attributes (level 1) and helpful attributes (levels 3 and 4). For each tool, the number of harmful attributes was summed across all competency items into a total ‘harmful attributes’ score, and the number of helpful attributes was summed across all competency items into a total ‘helpful attributes’ score, and we ran the same comparisons. We considered analyses (ii) and (iii) to be the primary outcomes, and (i) and (iv) to be exploratory outcomes.
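The four outcome definitions can be expressed compactly for one participant on one tool. The input format (a list of item levels plus per-item counts of harmful and helpful attributes) is an assumption for illustration, and the example values are invented.

```python
def outcome_scores(levels, harmful_attrs, helpful_attrs):
    """Compute outcomes (i)-(iv) for one participant on one tool.

    levels: level (1-4) for each competency item
    harmful_attrs / helpful_attrs: number of potentially harmful and helpful
    attributes ticked per item (hypothetical input format)
    """
    return {
        "total_score": sum(levels),                        # (i) all levels combined
        "harmful_count": sum(lv == 1 for lv in levels),    # (ii) items at level 1
        "competent_count": sum(lv >= 3 for lv in levels),  # (iii) items at level 3 or 4
        "harmful_attributes": sum(harmful_attrs),          # (iv) harmful attributes
        "helpful_attributes": sum(helpful_attrs),          # (iv) helpful attributes
    }

# Invented ratings for a six-item tool such as the six-item GroupACT.
example = outcome_scores([1, 2, 3, 4, 3, 2], [1, 0, 0, 0, 0, 0], [0, 1, 2, 3, 2, 1])
```

With n items, the total score in (i) ranges from n to 4n, which is why counts at specific levels ((ii) and (iii)) are more informative about movement between levels.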

All scores at the different analysis levels were checked for the assumptions of normality and homogeneity of variance. When the assumptions were met, repeated measures analyses of variance were conducted with the mean scores as the dependent variable, time (T0 v. T1) as the within-group factor, group (TAU v. CDT) as the between-group factor and gender as a covariate; the time × group interaction was the effect of interest. Where the assumptions were not met, separate non-parametric tests were conducted for differences between groups (Mann–Whitney U test) and within groups (Wilcoxon signed rank test). Throughout the paper, we adopt a significance threshold of p < 0.05.
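For the non-parametric branch of the analysis plan, both test statistics can be computed from first principles. This is a minimal standard-library sketch using the large-sample normal approximation without a tie correction. The software the authors used is not named, and tie handling (which matters for ordinal counts like these) shifts z and p, so the sketch will not necessarily reproduce the values reported in the Results. In practice, `scipy.stats.mannwhitneyu` and `scipy.stats.wilcoxon` provide exact and tie-corrected variants. The parametric branch (repeated measures ANOVA with a covariate) is not shown.

```python
import math

def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks

def two_sided_p(z):
    """Two-sided p-value for a standard normal z."""
    return math.erfc(abs(z) / math.sqrt(2))

def mann_whitney_u(x, y):
    """Between-group test: (U, z, p), normal approximation, no tie correction."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    z = (u - n1 * n2 / 2) / math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return u, z, two_sided_p(z)

def wilcoxon_signed_rank(before, after):
    """Within-group test on paired scores: (T, z, p). Zero differences are
    dropped; normal approximation without a tie correction."""
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 1.0
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    t = min(w_plus, w_minus)
    z = (t - n * (n + 1) / 4) / math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return t, z, two_sided_p(z)
```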

Ethics

The study was approved by the Ethics Review Committee from Saint Joseph Université (SJU), Beirut, Lebanon and by the World Health Organization ethics review process, approved on 1 October 2019 (#CEHDF_1490). All study participants provided informed written consent to participate in the study.

Results

All competency levels combined

Assumption checks indicated that the GroupACT total scores were positively skewed and did not have equal variances across groups; these scores were therefore analysed with separate non-parametric tests. Table 2 shows the mean total scores of all levels combined for each of the tools. The WeACT and ENACT showed an increase in total scores from T0 to T1, F (1, 21) = 27.1, p < 0.001, ηp² = 0.56, and overall the CDT group showed higher total scores than the TAU group, F (1, 21) = 8.6, p < 0.01, ηp² = 0.29. However, the time × group interaction was not significant, F (1, 22) = 2.99, p = 0.10. The GroupACT results are consistent with an interaction effect: there was a significant T0–T1 difference for the CDT group, Wilcoxon signed rank test Z = −2.14, p < 0.05, but not for the TAU group, Z = −0.36, p = 0.72, and a significant difference between the groups after the training, Mann–Whitney U = 17.5, p = 0.001, but not before the training, U = 72, p = 0.99.
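The partial eta squared values reported with each F test follow from the F statistic and its degrees of freedom via the standard identity ηp² = F·df_effect / (F·df_effect + df_error). The check below applies it to two of the F values reported above.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic:
    eta_p^2 = F * df_effect / (F * df_effect + df_error)."""
    return f_value * df_effect / (f_value * df_effect + df_error)

# F values as reported for the WeACT/ENACT total scores.
time_effect = partial_eta_squared(27.1, 1, 21)   # about 0.563, reported as 0.56
group_effect = partial_eta_squared(8.6, 1, 21)   # about 0.291, reported as 0.29
```

This identity offers a quick consistency check between reported F statistics, degrees of freedom and effect sizes.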

Table 2. Means and s.d.s of the total scores across competency levels for the three tools

CDT, competency-driven training; TAU, training-as-usual; WeACT, Working with children-Assessment of Competencies Tool; ENACT, ENhancing Assessment of Common Therapeutic factors; GroupACT, Group facilitation: Assessment of Competencies Tool; M diff, mean difference at endline; s.e., standard error.

*p < 0.05, **p < 0.01, ***p < 0.001.

Only competent levels

The assumptions were not met for the GroupACT scores, which were analysed with separate non-parametric tests. Table 3 shows the mean total counts of only the competent levels (levels 3 and 4) of each tool. As expected, the WeACT showed a significant time × group interaction for competent scores, with CDT showing a greater increase after the training, F (1, 22) = 6.49, p = 0.018, ηp² = 0.23. The ENACT showed only main effects of time and group, both in the expected direction: scores were higher after the training than before, F (1, 22) = 13.5, p = 0.001, ηp² = 0.38, and the CDT group scored higher than the TAU group, F (1, 22) = 9.1, p = 0.006, ηp² = 0.29. The GroupACT showed only a significant difference between the groups after the training (Mann–Whitney U = 22, p = 0.003).

Table 3. Means and s.d.s of the total counts of only the competent levels (3, 4) for the three tools

CDT, competency-driven training; TAU, training-as-usual; WeACT, Working with children-Assessment of Competencies Tool; ENACT, ENhancing Assessment of Common Therapeutic factors; GroupACT, Group facilitation: Assessment of Competencies Tool; M diff, mean difference at endline; s.e., standard error.

*p < 0.05, **p < 0.01, ***p < 0.001.

Between-group differences in the pre- to post-training change in the proportion of items rated at adequate competency favoured CDT by 17% for competencies for working with children (WeACT) and by 18% for competencies for working with groups (GroupACT), but favoured TAU by 6% for competencies for working with adults (ENACT) (see Fig. 1).

Fig. 1. Change in proportion of items with adequate competency, and the between-group difference. WeACT, Working with children-Assessment of Competencies Tool; ENACT, ENhancing Assessment of Common Therapeutic factors; GroupACT, Group facilitation: Assessment of Competencies Tool; CDT, competency-driven training (n = 12); TAU, training-as-usual (n = 12). A, between-group difference in pre- to post-training change in the percentage of skills at competency; *, competency decreased from 13 to 4% pre- to post-training.
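The between-group difference plotted in Fig. 1 can be sketched as a difference in pre- to post-training change in the share of items rated at level 3 or 4. This is a minimal illustration that assumes item ratings are pooled across participants within each arm (equivalent to averaging participant-level proportions when every participant is rated on the same number of items); the input lists are hypothetical.

```python
def proportion_competent(levels):
    """Share of item ratings at level 3 or 4 (adequate competency)."""
    return sum(lv >= 3 for lv in levels) / len(levels)

def between_group_difference(cdt_pre, cdt_post, tau_pre, tau_post):
    """CDT change minus TAU change in the share of competent ratings, expressed
    in percentage points. Each argument is a flat list of item levels pooled
    over one arm's participants at one time point (an assumption)."""
    cdt_change = proportion_competent(cdt_post) - proportion_competent(cdt_pre)
    tau_change = proportion_competent(tau_post) - proportion_competent(tau_pre)
    return 100 * (cdt_change - tau_change)

# Hypothetical pooled ratings: CDT goes from 50% to 100% competent, TAU from 25% to 50%.
diff = between_group_difference([2, 2, 3, 3], [3, 3, 3, 4], [2, 2, 2, 3], [2, 3, 3, 2])
```

A positive value favours CDT and a negative value favours TAU.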

Only harmful levels

Because harmful scores were infrequent, the total counts for all tools at both time points were positively skewed and were analysed with non-parametric tests. The WeACT showed a non-significant decrease in harmful scores from T0 to T1 for both the CDT and TAU groups, which did not differ from each other (all p-values between 0.059 and 0.362). For the ENACT, both the CDT and TAU groups showed a significant decrease in harmful scores from T0 to T1 (CDT: Wilcoxon signed rank test Z = −2.26, p = 0.024; TAU: Z = −2.83, p = 0.005). The groups did not differ significantly in the total number of harmful scores, either before (Mann–Whitney U = 53, p = 0.253) or after the training (U = 64.5, p = 0.633). For the GroupACT, only the CDT group showed a significant decrease in harmful scores, Wilcoxon signed rank test Z = −2.66, p = 0.008; however, its number of harmful scores did not differ significantly from that of the TAU group, either before (Mann–Whitney U = 58, p = 0.351) or after the training (U = 47, p = 0.080).

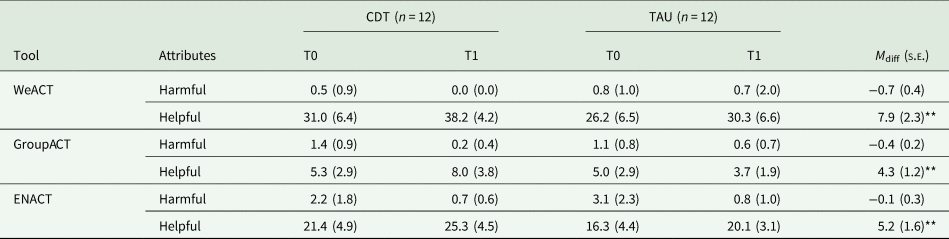

Potentially harmful and helpful attributes

The mean total scores at the attribute level are shown in Table 4. The harmful attributes did not meet the assumptions and were analysed with non-parametric tests. The TAU group showed a significant decrease in harmful attributes after the training only on the ENACT (Wilcoxon signed rank test Z = −2.69, p = 0.007), while the CDT group showed a significant decrease in harmful attributes on both the ENACT (Wilcoxon signed rank test Z = −2.26, p = 0.02) and the GroupACT (Wilcoxon signed rank test Z = −2.66, p = 0.008). None of the between-group differences were significant (all p-values between 0.160 and 0.932). For the helpful attributes, there was a significant increase over time for both the CDT and TAU groups on the WeACT, F (1, 22) = 19.06, p < 0.001, ηp² = 0.46, and the ENACT, F (1, 22) = 10.54, p = 0.004, ηp² = 0.32, but this effect did not differ between the groups on either tool. However, for the GroupACT, there was a significant interaction effect, with an increase in helpful attributes in the CDT group and a slight decrease in the TAU group, F (1, 22) = 5.78, p = 0.025, ηp² = 0.21.

Table 4. Means and s.d.s of the total scores for the harmful and helpful attributes for the three tools

CDT, competency-driven training; TAU, training-as-usual; WeACT, Working with children-Assessment of Competencies Tool; ENACT, ENhancing Assessment of Common Therapeutic factors; GroupACT, Group facilitation: Assessment of Competencies Tool; M diff, mean difference at endline; s.e., standard error.

*p < 0.05, **p < 0.01, ***p < 0.001.

Discussion

The main objective of this proof-of-concept study was the between-group comparison of CDT and TAU (both delivered remotely) for a psychological intervention for children with severe emotional distress. The primary indicators of success were an increase in the mean number of items scored at adequate competence (levels 3 and 4 on the assessment tools) and a reduction in the mean number of items scored as potentially harmful (level 1 on the assessment tools) on completion of training. This study shows the potential added value of adopting a competency-driven approach to training non-specialist facilitators of mental health interventions, with up to 18% more improvement in demonstrated competencies post-training. These results should be considered a proof of concept that requires further investigation to confirm effectiveness.

CDT outperformed TAU on child-specific competencies (WeACT) and group-specific competencies (GroupACT), though not on adult-specific competencies (ENACT). These findings are in line with expectations, given that the training focused primarily on a group intervention for children, with only a small component focusing on adults (i.e. sessions with caregivers of the included children). The absence of a CDT advantage on the ENACT may also be explained by lower pre-training competency in the control arm, which left more room for improvement. The results on harmful behaviours show that both groups reduced them to near absence post-training. This suggests that both versions of the training were equally successful in changing potentially harmful behaviours (most pronounced on the ENACT), which explains the non-significant between-group comparisons of harmful level scores. Regardless of between-group differences, it is ethically important that the competency assessment tools allow us to check, before participants start working with distressed children, whether any of them still exhibits potentially harmful behaviours.

While competencies improved following training, it is also important to note that a substantial proportion of items were still not rated at adequate competency post-training. Although hardly any items were rated as potentially harmful, one could question whether the competency levels achieved in this training are sufficient to deliver an intervention of high enough quality to help adolescents with emotional problems. This issue plagues the field, because so few studies systematically evaluate competency to ensure that psychological interventions can be safely and effectively delivered by non-specialists (van Ginneken et al., 2013; Singla et al., 2017). Because of this gap, it is vital that tools which capture helpful treatment skills and potentially harmful behaviours are used consistently in the field of global mental health (Jordans and Kohrt, 2020). The current study aims to contribute to more systematic reporting of the competency levels achieved, with the ultimate goal that routine measurement of competency will improve the quality and safety of care in global mental health (Kohrt et al., 2020).

The remaining results (i.e. analyses of mean total scores and of the mean number of attributes scored) serve mainly descriptive purposes. The competency tools are not intended to be used with total scores. Mean frequency scores are not meant to be used as the primary outcome indicator, because they can obscure the movement participants make between competency levels, which is important information for demonstrating minimal adequacy on each competency. Similarly, the attributes that form part of the competency assessment tools help raters determine the level of competency and help trainers provide feedback during CDT, but mean total attribute scores are not meant to be used as the primary outcome indicator. Still, the finding that the CDT group outperformed TAU in improving helpful attributes on the GroupACT provides further support for the potential benefits of CDT.

These are promising findings for the added value of CDT for mental health and psychosocial support interventions in LMICs. The limited number of mental health professionals and the limited budget available for mental health services in LMICs have resulted in a push for brief trainings of non-specialists who will deliver psychological interventions. Notably, with the same limited resources, the impact of training can be optimised by following a competency-driven approach that uses standardised assessments before and during the training. Such an approach therefore has the potential to contribute substantially to better-quality, more consistent and cost-effective mental health services in low-resource settings. The approach can be extended to competency-based supervision (Falender and Shafranske, 2007) during the intervention implementation phase. Supervisee competencies can be determined through in-session observation or self-assessment using the standardised competency assessment tools and used as input for supervision sessions, much like the assessments and subsequent feedback used in the CDT described in this study.

So what is the added value of a competency-driven approach? A competencies perspective argues that we cannot assume competencies are attained following training; rather, trainees need to be able to explicitly demonstrate competence (Falender and Shafranske, 2012). Including the results of standardised assessments of trainee competencies before and during training allows for: (i) tailor-made feedback, one of the key instruments of teaching, by identifying areas that require improvement (Falender and Shafranske, 2012); (ii) greater attunement between trainer and trainee on concrete and observable expectations; (iii) more clarity on a benchmark for ‘readiness to start implementing’ and (iv) potentially greater consistency in the quality of intervention services provided within and across organisations and contexts.

The current study is compatible with other initiatives that aim to augment the quality and impact of training for, and provision of, mental health services. For example, a recent pilot cluster RCT in Nepal showed that collaborating with people with lived experience as part of training primary health workers to integrate mental health services has the potential to reduce stigma and improve competence in diagnostic accuracy (Kohrt et al., 2021). These initiatives are in line with the need to shift the global mental health research agenda towards evaluating how evidence-based interventions can best be implemented (Proctor et al., 2009) and how quality of care can be maintained when services are scaled up (Jordans and Kohrt, 2020).

This focus on quality of care is important, because quality of care is an essential predictor of adolescents seeking, receiving and continuing mental health care (Quinlan-Davidson et al., 2021). Initiatives to improve the quality of health services have identified standards such as health literacy, community support, adolescent participation, facility characteristics, non-discrimination and provider competencies (WHO, 2015). Provider competence is highlighted as a key standard (Quinlan-Davidson et al., 2021), with evidence demonstrating that competencies such as establishing a provider–client relationship and alliance result in more positive outcomes (Gondek et al., 2017). While a recent meta-analysis failed to demonstrate a relationship between intervention-specific competencies and outcomes of psychotherapy for children and adolescents, it does suggest that non-specific competencies may be a more important factor (Collyer et al., 2020). This is in line with the focus of the measures used in the current study, as the WeACT, GroupACT and ENACT all aim to assess common-factors competencies. Moreover, regarding the hypothesised association between quality of care and improvements in clients' mental health outcomes, it should be noted that numerous studies have failed to show a consistent association (Barber et al., 2007; Rakovshik and McManus, 2010; Collyer et al., 2020). In a prior review, we showed that gaps in methodological rigour on this topic have limited the ability to explore this relationship (Ottman et al., 2020).
One of the main gaps has been the failure to systematically measure competency outcomes of trainings using a standardised tool across programmes. This study is therefore a step forward in rigorously evaluating observable treatment competencies, and it provides initial findings that the approach to training affects the competency level achieved.

This study is not without limitations. First, the study was implemented in the midst of the COVID-19 pandemic, which meant that all activities had to be done remotely. Although this was challenging, we still managed to implement the study, and it demonstrated that remote implementation of the competency framework appears feasible. At the same time, remote delivery may have affected the delivery of training or the assessment of competencies, albeit equally in both study arms. Second, as mentioned above, given that this was not an RCT and that the study was underpowered due to the small sample size, we cannot draw definitive conclusions about the effectiveness of a competency-driven approach to training compared with TAU. For that reason, we are now planning a fully powered RCT. Third, although unavoidable, having different trainers in the two study arms may have influenced the results.

As this study was part of the EQUIP initiative, it also yielded multiple recommendations to further enhance the promotion of CDT. For example, the EQUIP digital platform now includes visualisations that allow trainers to easily identify harmful behaviours at the individual trainee level and the competencies rated at level 1 (‘potentially harmful’). Based on lessons learned, the EQUIP platform also added training modules on how to conduct CDT and how to adapt manualised trainings into competency-driven approaches. A module on how to give and receive feedback using the EQUIP platform competency results was also developed. In addition, a Foundational Helping Skills curriculum covering common-factor skills, such as those in the WeACT and ENACT, was developed to support remediation of competencies that require additional training to reach safe and effective levels (Watts et al., 2021). With the expansion of these tools and resources, competency-driven strategies may offer even greater benefits compared with standard training approaches.

Conclusion

With this study we demonstrate the potential of CDT, using standardised assessment of trainee competencies, to contribute to better training outcomes, without extending the duration of training. It holds promise to contribute to greater quality and accountability of psychological treatments implemented by non-specialists in LMICs. Further research is needed to test the effectiveness of such approaches in an RCT, as well as the application of a competency-driven approach to other aspects of the service delivery pathways, such as during supervision.

Acknowledgements

We thank the War Child Lebanon colleagues for their support. We also thank Heba Ghalayini for the Training of Trainers of the EASE intervention.

Financial support

Funding for the WHO EQUIP initiative is provided by USAID. We are grateful to the individuals who participated in the studies in each of the EQUIP sites and to all members of the EQUIP team for their dedication, hard work and insights. The authors alone are responsible for the views expressed in this article and they do not necessarily represent the views, decisions or policies of the institutions with which they are affiliated. BAK and MJDJ receive funding from the U.S. National Institute of Mental Health (R01MH120649).

Conflict of interest

No conflict of interest to be reported.

Ethical standards

The authors assert that all procedures contributing to this work comply with the ethical standards of the relevant national and institutional committees on human experimentation and with the Helsinki Declaration of 1975, as revised in 2000.

Availability of data and materials

The data supporting the findings of this study can be obtained from the corresponding author.