Introduction

Since neoliberalism took root, market mechanisms have encroached on the organization of policy fields for the provision of public goods such as transportation, healthcare, education, or social security. But marketization often fails to deliver satisfactory outcomes, inviting experts and regulators to assess market failures and rewrite the rules that organize these fields. An emerging scholarship within the social studies of markets tradition now theorizes “the organization of markets for collective concerns and their failure” [see e.g., the special issue of Economy and Society edited by Frankel, Ossandón and Pallesen 2019]. Instead of focusing on political junctures in which market-minded policymakers invoke the general principles of privatization, choice, and competition to oppose central planning within malfunctioning public bureaucracies, these authors look at processes whereby already marketized policy fields become problematized, evaluated, and fixed to correct socially undesirable market failures. A remarkable conclusion drawn from multiple case studies is that little room remains for a politics that includes “the possibility of problematizing existing policies in ways other than as poorly functioning markets” [Frankel, Ossandón and Pallesen 2019, 166]. Market-minded policies and devices have not become immune to evaluation and critique; but after neoliberalism, the knowledge generally mobilized to problematize market-enhancing policy instruments is much more narrowly confined to the actual functioning of these devices, and much less inimical to the market organization of policy fields themselves. Neoliberal doxa [Mudge 2011, 2008; Amable 2011] now dictates technocratic common sense.

This paper contributes to the vital question posed by Ossandón and Ureta [2019, 176] with respect to neoliberal resilience, namely, “How does a critical evaluation of market based policies, rather than triggering a movement, for instance, toward fundamentally different modes of organizing a given area, end up consolidating markets as policy instruments?” Like these authors, I focus empirically on experts’ work in evaluating and repairing market devices that failed to deliver optimal policy outcomes. However, I give special attention to the way in which external demands for decommodification shape this technical work. Bringing politics back into the social studies of markets, I argue that, contrary to the idea that neoliberal doxa is fully entrenched, the technical process of market evaluation and repair remains enmeshed in ideological conflict over the moral virtue of marketization. To make this argument, I lay out a reflexive approach to the politicized uses of social scientific expertise (especially, but not only, economic expertise) for regulatory decision-making within education, a policy domain that the sociological literature has largely overlooked despite its increasing subjection to top-down rationalization by technocratic and market forces worldwide.

My case study focuses on the institutionalization of test-based, high-stakes accountability in Chilean education and, specifically, the design and implementation of the so-called School Ordinalization Methodology (SOM)Footnote 1, an official categorization of school quality intended to perform socially optimal market outcomes by fairly holding providers (schools) responsible to consumers (students). In response to mass student protests against the educational inequities produced by the so-called “market model” of education, the Chilean Congress began instituting new regulations aimed at holding both municipal and voucher-funded private schools accountable to quality standards. Thus, the Education Quality Assurance Law of 2011 transformed education governance into a system of specialized agencies in charge of setting, measuring, overseeing, and enforcing these standards. Importantly, lawmakers also mandated the “fair responsibilization” of schools to address mounting criticism that school quality metrics were oblivious to the severe socioeconomic segregation of the school system and therefore stigmatized public schools with high concentrations of low-socioeconomic status (SES) students, fostering exit to the voucher-funded private sectorFootnote 2. Yet ambiguity about what precisely “fair responsibilization” meant confronted the education experts in charge of devising an objective, socially sensitive ordinal metric of school quality with a moral conundrum, one that pervades the use of benchmarking rules for high-stakes accountability purposes. On the one hand, is it fair (to schools) to assess school effectiveness on the basis of factors for which schools are not responsible, e.g., SES? On the other hand, is it fair (to students) to set multiple proficiency benchmarks, that is, different standards depending on their socioeconomic characteristics?

I take the “valuation problem” (the definition of criteria concerning “the assignment of values to heterogeneous products within the same market” [Beckert 2009, 254]) that education experts faced in settling the fair responsibilization controversy as an opportunity to integrate and expand existing sociological knowledge on the politics of markets for collective concerns. Specifically, I seek to make sense of a twofold paradox emerging from this controversy that the existing literature is ill-equipped to understand: on the one hand, why, despite appeals to objectivity and impartiality, did the experts weaponize their calculations to suit their subjective conceptions of market education; on the other, how, notwithstanding politicization, did they still manage to ground their disagreements in shared technical procedures and successfully implement the SOM, transforming education quality into an objective, quantifiable, and politically consequential feature of market governability?

To address this twofold paradox, I draw upon the sociology of markets and morality, conceptualizing the SOM as a technology of economic valuation that, as such, embodies an intensely moralized technopolitical project. The quest for fairness in measuring school quality in Chile speaks directly to scholarship that examines the morally controversial valuation of goods such as health, nature, love, or human life. Education fits squarely into the category of “enrichment” [Boltanski and Esquerre 2016] or “peculiar” [Fourcade 2011] goods, the valuation of which typically implies moral quandaries. Because the economic status of education as a market good may itself be widely contested, its commensuration immediately yields claims on incommensurables [Espeland and Stevens 1998] and invites public invocations of irreducible orders of worth [Boltanski and Thévenot 2006]. The appraisal of so unique an object as school quality may easily overlap with aesthetic judgements [Karpik 2010], or simply instantiate preexisting status hierarchies of both producers and consumers [Podolny 1993].

I therefore see the technical construction of valuation devices as embedded in a broader conflict over the moral virtue or evil of markets (in this case, education markets). But this conflict, I show, concerns not only the value of the goods at stake (e.g., private vs. public education), but also the value of the valuation devices themselves, i.e., how far these devices should serve the political purpose of organizing the provision of these goods in a market-like fashion. The fair responsibilization controversy led to a clash between conservative and progressive experts. Although the conservative government staffed the commission that drafted the first ordinalization proposal with market-friendly experts, and its appointees held the majority of seats in the new regulatory agency tasked with implementing the SOM, an ideological battle over the policy instrument’s inherent virtue as a market-enhancing device nonetheless took place. Skillfully handling this disagreement, the experts reduced it to a technical decision between econometric models, ordinary least squares (OLS) vs. hierarchical linear models (HLM), that empirically identify school quality as a statistical parameter. Experts’ conflicting moral judgments on the intrinsic value of the SOM as a tool for consumer choice and market competition nevertheless underpinned their adjudication between regression equations. While conservatives advocated for the model (OLS) that would make the SOM more intuitive for parental choice and better at “nudging” schools (whether private or public), progressives showed little regard for the behavioral properties of the SOM and instead pushed for the model (HLM) that would better compensate public schools for the “quality handicap” associated with educating larger numbers of disadvantaged students.
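The paragraph above reduces the controversy to a choice between regression specifications without giving their equations. As a hedged illustration only, not a reconstruction of either proposal, the sketch below contrasts a student-level OLS adjustment for individual SES with one that also includes each school’s mean SES. Such a compositional term is commonly entered at the school level in hierarchical linear models, but the sketch estimates it by plain OLS and is not an HLM estimator; whether the Chilean HLM proposal contained this term is an assumption, and the data and function names (`ols`, `school_index`, `ranking`) are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Invented toy data: (school id, student SES, test score).
STUDENTS = [
    ("A", 1.0, 230.0), ("A", 3.0, 240.0),
    ("B", 2.0, 230.0), ("B", 4.0, 240.0),
    ("C", 6.0, 275.0), ("C", 8.0, 285.0),
]


def ols(x1, x2, y):
    """OLS for y = c + b1*x1 + b2*x2 + e via centered normal equations.

    Pass x2=None to fit the single-regressor model y = c + b1*x1 + e.
    """
    m1, my = mean(x1), mean(y)
    d1 = [v - m1 for v in x1]
    dy = [v - my for v in y]
    s11 = sum(a * a for a in d1)
    s1y = sum(a * b for a, b in zip(d1, dy))
    if x2 is None:
        b1 = s1y / s11
        return my - b1 * m1, b1, 0.0
    m2 = mean(x2)
    d2 = [v - m2 for v in x2]
    s22 = sum(a * a for a in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s2y = sum(a * b for a, b in zip(d2, dy))
    det = s11 * s22 - s12 * s12  # zero if the regressors are collinear
    b1 = (s1y * s22 - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return my - b1 * m1 - b2 * m2, b1, b2


def school_index(students, compositional):
    """Mean residual per school, with or without a school-mean SES regressor."""
    schools = [s for s, _, _ in students]
    ses = [x for _, x, _ in students]
    scores = [y for _, _, y in students]
    ses_by_school = defaultdict(list)
    for school, x in zip(schools, ses):
        ses_by_school[school].append(x)
    comp = [mean(ses_by_school[s]) for s in schools]  # school-mean SES per student
    c, b1, b2 = ols(ses, comp if compositional else None, scores)
    residuals = defaultdict(list)
    for school, x, m, y in zip(schools, ses, comp, scores):
        residuals[school].append(y - (c + b1 * x + b2 * m))
    return {school: mean(r) for school, r in residuals.items()}


def ranking(index):
    """School ids ordered from highest to lowest adjusted value."""
    return sorted(index, key=index.get, reverse=True)


if __name__ == "__main__":
    print("individual SES only:", ranking(school_index(STUDENTS, False)))
    print("plus school-mean SES:", ranking(school_index(STUDENTS, True)))
```

On these invented data, adjusting for individual SES alone ranks the high-SES school C above the low-SES school A (about 3.53 vs. 2.65), while adding the school-mean term reverses their order (about 1.07 vs. 4.29). The point is only that the specification, not the data, decides which school counts as better.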

Still, despite this moral divide, my analysis shows that the experts eventually succeeded in transforming the chosen metric into an impartial, presumably fair, and politically consequential tool for ordinalizing the quality of all Chilean schools. To account for this second half of the paradox, the paper elaborates on Abend’s [2014] concept of “moral background”, shedding light on the practical uses of social-scientific expertise in the reorganization of markets for collective concerns. Instead of asking why first-order agreements can be possible even under very different moral backgrounds, however, I turn Abend’s framework upside down, seeking to establish how second-order moral conventions may facilitate political consensus even in contexts of overt ideological dissensus. In this sense, I treat experts’ agreements and disagreements over the “fairness controversy” as nested in distinct orders of morality that are relatively independent and yet inextricably connected. While disagreements over the role of market devices in the organization of the education policy field belong to a first-order morality, I contend that agreements of a second order have more consequential implications. Chilean technocrats shared styles of reasoning, i.e., “collections of orienting concepts, ways of thinking about problems, causal assumptions and approaches to methodology” [Hirschman and Berman 2014, 794; see also Hacking 2002], that enabled the commensuration and adjudication of a priori irreducible moral choices. Experts’ common subscription to scientific and technical conventions therefore provided the second-order cognitive tools necessary to effectively settle first-order moral disagreements. Constitutive of technocratic forms of policymaking, this “econometric” moral background proves a pivotal mechanism of neoliberal resilience.

I proceed as follows. The next two sections briefly situate the SOM in the international context, summarize its characteristics, and justify it as a case worth analyzing through the sociology of markets perspective. A methodological note follows. The fifth section contains the bulk of my empirical analysis: a brief historical account of the SOM’s origin, followed by an in-depth examination of how regulators in charge of the new accountability system handled the moral dilemma that arose when operationalizing the legal mandate that school quality scores not be confounded with students’ SES. I conclude by discussing my main theoretical contributions in light of my findings.

The School Ordinalization Methodology in Context

The steady increase in standardized testing for the external evaluation of teachers and schools is perhaps the most salient international trend in education policy of the last four decades. Standards-based evaluation policies in education were first institutionalized in the 1980s in liberal market economies such as the US and the UK, but have since spread across education systems worldwide as part of a global shift towards quasi-market forms of social service delivery and the diffusion of new public management paradigms emphasizing autonomy, competition, and outcomes-based (instead of process-based) accountability. Institutional reforms have underpinned this process, with governments creating specialized regulatory agencies tasked with developing both evaluation expertise and enforcement capacities [OECD 2016, 2013].Footnote 3

The quest for high-stakes evaluation regimes, scholars have argued, enables technocratic logic to penetrate the field of education, rationalizing schools from the top down so as to make them governable at a distance [Mehta 2013; Gorur 2016; Grek 2009]. Two intertwined and mutually reinforcing developments critically contributed to this overall trend. First, the consolidation of a specialized field of education knowledge, with education economics taking a leading role, transformed the conceptualization of human capital from an input of economic growth or earnings functions (e.g., skilled labor) to an output of education production functions (e.g., academic achievement) in which both teacher and school quality can be factored in as measurable inputs. Second, the growing recourse to economic experts and devices by international organizations, public bureaucracies, and regulatory agencies produced a paradigmatic shift from equity to efficiency in education policymaking [Griffen 2020; Teixeira 2000; Mangez and Hilgers 2012].

Increasing emphasis on outcomes and efficiency has in turn brought to the fore demands for equity in test-based assessments of school performance. Testing organizations behind popular metrics are now reconsidering their methods to accommodate mounting demands for fairness. To encourage context-sensitive assessments of student preparedness by selective college admissions offices, the College Board, a flagship educational testing organization in the US, recently began reporting socioeconomic information on students’ neighborhoods and high schools along with their test results. Likewise, to better reflect schools’ efforts at improving the learning outcomes of underserved students, the organization that produces the neighborhood school quality metric used by the most popular online real estate portals in the US has recently boosted the weight of growth and equity measures, in light of evidence that the unadjusted scores used in housing transactions increased segregation [Hasan and Kumar 2019]. This quest for metrological fairness is now pervasive, cutting across institutional landscapes and national assessment cultures. A commitment to equity is almost unanimous among experts, to the point that even enthusiastic academic exponents of high-stakes testing recommend the use of adjusted measures that are sensitive to both prior achievement and other contextual factors [Hanushek 2009; Harris 2011], though the statistical complexity of these adjustments reduces model transparency and thereby invites further equity concerns.

In Chile, the institutionalization of high-stakes testing occasioned the adoption of standards-based accountability rules and the subsequent search for a way to equitably mete out the punishments and rewards these rules prescribed. Under the mandates of the Law of Education Quality Assurance (LEQA), in March 2014 the Chilean government issued an executive order enacting the “Ordinalization Methodology of all Educational Establishments Recognized by the State.”Footnote 4 The decree details all the indicators, data sources, decision rules, equations, and estimation methods the Agency of Education Quality (AEQ) shall use to classify schools into four “performance categories” (high, medium, medium-low, and insufficient), obtained by partitioning an interval scale of education quality. Furthermore, the regulation stipulates that the ranking position of a particular school be computed by subtraction: students’ unadjusted test scores minus the test scores predicted by a set of student-level variables of SES and other characteristics of the school context, both aggregated at the school level (see equation 16 in the decree). In turn, the two terms of this subtraction, a routine post-estimation adjustment, can be obtained through an ordinary least squares (OLS) regression at the student level that predicts the “unadjusted index” as a function of a set of socioeconomic variables, plus an error term that captures unobserved heterogeneity in student performance (see equation 15).
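The subtraction and partition described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the decree’s implementation: it uses a single hypothetical SES variable (the decree uses a set of them), aggregates by simple averaging of student residuals, and uses placeholder cut points for the four categories; the data and names are invented.

```python
from bisect import bisect_right
from collections import defaultdict
from statistics import mean

# Invented toy data: (school id, student SES, unadjusted test score).
STUDENTS = [
    ("A", 1.0, 240.0), ("A", 2.0, 250.0),
    ("B", 3.0, 250.0), ("B", 4.0, 270.0),
    ("C", 5.0, 270.0), ("C", 6.0, 280.0),
]

# Placeholder cut points on the adjusted scale (hypothetical, not the decree's).
CUTS = (-10.0, 0.0, 10.0)
LABELS = ("insufficient", "medium-low", "medium", "high")


def fit_ols(x, y):
    """Closed-form OLS for y = c + beta * x + e with one regressor."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    beta = sxy / sxx
    return y_bar - beta * x_bar, beta


def adjusted_index(students):
    """School mean of (observed score - score predicted from SES)."""
    c, beta = fit_ols([s for _, s, _ in students], [y for _, _, y in students])
    residuals = defaultdict(list)
    for school, ses, score in students:
        predicted = c + beta * ses  # part attributed to the student's context
        residuals[school].append(score - predicted)  # part attributed to the school
    return {school: mean(r) for school, r in residuals.items()}


def category(value, cuts=CUTS):
    """Map a point on the interval scale to one of four ordered categories."""
    return LABELS[bisect_right(cuts, value)]


if __name__ == "__main__":
    for school, value in adjusted_index(STUDENTS).items():
        print(school, round(value, 2), category(value))
```

On these invented data the fitted line is score = 232 + 8 × SES, so school C, with the highest raw scores, receives the lowest adjusted value (-1.0), while school A receives +1.0: the residual rewards performance above what students’ SES predicts, not performance as such.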

Strikingly, the decree does not invoke this error term to state the usual assumptions of normality, homoscedasticity, exogeneity, and no autocorrelation, that is, the statistical properties that help analysts gauge whether the model’s estimated coefficients are unbiased and its standard errors reliable. Rather, the decree rules, and thus performatively states, that this error term expresses the true value of schools.

In equation N° 15 the individual student score consists of two parts. The first is an estimate of the student score that this methodology attributes to her characteristics and context, $c + \sum \beta_{CA} \times CA_{NI}$, which corresponds to the average score of those students that share the same level of student [socioeconomic] characteristics at the individual level. The other part of equation N° 15, $\varepsilon_{NI}$, corresponds to the fraction of the student score that is above or below that of an average student with the same level of student [socioeconomic] characteristics at the individual level. This fraction of the student’s score will be considered not attributable to her context, and it will therefore be considered the school’s responsibility. [author’s translation and italics]
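The decomposition in the quoted passage can be written out explicitly. The sketch below keeps the decree’s symbols as quoted, reading CA as student characteristics and NI as the individual level (our interpretation of the notation). The score symbol $y_{NI}$, the school index $Q_s$, its aggregation by simple averaging over the $n_s$ students of school $s$, and the numbers in the last line are illustrative assumptions, not taken from the decree.

```latex
% Equation 15 as quoted: the score splits into a context part and a school part
y_{NI} = \underbrace{c + \sum \beta_{CA} \times CA_{NI}}_{\text{attributed to the student's context}}
       + \underbrace{\varepsilon_{NI}}_{\text{attributed to the school}}

% Estimated residual: observed score minus the score predicted from student characteristics
\hat{\varepsilon}_{NI} = y_{NI} - \Big( \hat{c} + \sum \hat{\beta}_{CA} \times CA_{NI} \Big)

% Hypothetical school-level aggregation by simple averaging
Q_s = \frac{1}{n_s} \sum_{\text{students in } s} \hat{\varepsilon}_{NI}

% Invented example: a student scoring 260 where comparable students average 245
\hat{\varepsilon}_{NI} = 260 - 245 = +15
```

Under this reading, the +15 points are not explained by anything the model observes about the student and are therefore credited, by rule, to her school.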

This regulation epitomizes the rationale reigning within the governance bodies in charge of enforcing high-stakes accountability policy. The decree assumes a sharp distinction between providers and external regulators. Schools, all publicly funded but run either by municipalities or by private organizations, provide education services. Rating agencies assess the “value” of the work these schools do, keep families informed about differences in service quality, and allocate positive or negative sanctions and/or support to the schools they rank. Confident enough in their capacity to represent differences in service quality through a single metric, and seeing the latter as a function of a transparent combination of independent measurable inputs (“the education production function”, to use the language of education economics [Todd and Wolpin 2003; Hanushek 1986]), the regulators declare by fiat that schools be liable for the variance in test scores not accounted for by the “class effects” part of the model. Hence the assertion that the error term of the estimated OLS regression is but a non-observable measure of school effects on achievement.Footnote 5

Moreover, what the LEQA stipulates the state must observe and manipulate—statistically, albeit not in practice—is the multiplicity of social conditionings that class inequality exerts on student performance:

[The ordinalization] of schools will consider the student’s characteristics, including, among others, her vulnerability, and, when appropriate, indicators of progress or value added. All in all, gradually, the ordinalization of schools will tend to be performed independently of the socioeconomic characteristics of the students, to the extent that the [quality assurance] system corrects the achievement gaps attributable to such characteristics. [author’s translation and italics]

Policymakers’ most elemental aspiration is to discount SES effects from assessments of school quality, measured as the degree of compliance with proficiency standards. In a second stage, the expectation is that adjusted quality assessments also include student growth measures to better approximate statistical estimates of effectiveness, understood as the school’s contribution to students’ achievementFootnote 6. In the long term, however, the goal is to progressively deindex school quality valuation from socioeconomic factors, insofar as the incentives set in motion by accountability regulation succeed in reducing the SES achievement gap to a minimum. Meanwhile, the method of holding schools accountable for the service quality they offer must account for “class handicaps.” Not accounting for SES might be unfair; it might unjustly punish schools and families in “vulnerable contexts.” And yet the paradox is that the state will nonetheless “value” schools for whatever they do that remains, precisely, unobserved by the valuation device the state is putting in place to bring order and stability to the school market.

Towards a Sociology of School Quality Valuation Devices

What motives lay behind the implementation of this peculiar device, which explicitly discounts SES from measures of school performance and then assigns quality values to all that goes unobserved? As my analysis will make clear, the SOM resembles other cases of post-marketization policy tools arranged to address a collective concern. Yet the fact that quality assurance regulation in Chile stemmed from student protests against market education after decades of far-reaching privatization and decentralization makes the enactment of the SOM a somewhat exceptional case as far as education policy is concerned. In the United States, for instance, high-stakes accountability progressed faster than other pro-market policies. While bipartisan consensus made testing and ranking the leitmotif of education reform, advocates of large-scale privatization never achieved a legislative majority [Mehta 2013]. Indeed, since the enactment of No Child Left Behind, accountability has worked as a Trojan horse for pro-market reformers to advance vouchers, charter schools, and choice. Similarly, in England, another early adopter of market-based approaches to education, league tables of school quality became widespread as early as the 1990s. Only recently did the government begin using test-based performance metrics to promote the conversion of underperforming public schools into privately managed academy trusts. In contrast, policymakers in Chile predicated accountability rules, with the SOM taking center stage, on the need to fix an already deregulated, largely privatized school market (by the year the LEQA was enacted, private voucher schools accounted for more than half of national student enrollment).

The SOM is therefore a case of what sociologists [Ossandón and Ureta 2019] aptly describe as a second (post-neoliberalization) moment in the way experts conceive of and practically use market devices. This second moment entails a shift from the standardized description of the market mechanism as an abstract, quasi-natural entity opposed to planning and rational organization, to the technical engineering of problem-solving market devices aimed at delivering specific policy and organizational outcomes. Rather than a panacea for politicized and opaque bureaucracies, markets are now used as organizational tools that “could be skewed in the outcomes favored” [Nik-Khah and Mirowski 2019, 282]. Hence the cleavage that structured 20th-century political struggles, and that the neoliberal movement so actively enhanced, has given way to a situation in which the formal distinction between market-like and bureaucratic forms of organization is increasingly blurred. Conceptually, the institutionalization of ordinal categories of school quality in Chile can thus be seen both as an attempt at bureaucratic rationalization (holding schools accountable to government standards) and as an effort at market steering (creating the right incentives to empower parents and make providers compete on the basis of service improvement).

Behind the scenes of this overall transformation in the political use of markets for organizational advancement, the literature identifies the growing influence of specific kinds of experts (e.g., information, behavioral, or experimental economists), who, laying claim to jurisdiction over noneconomic policy fields, mobilize the tools of market engineering (e.g., accountability metrics, matching algorithms, centralized auctions, behavioral nudges) as optimal technological solutions [Ossandón and Ureta 2019]. Importantly, this represents a form of expertise that naturalizes the organizational form of the market as the only game in town. Everything proceeds as though the experts’ decisions were only about different alternatives for market reorganization [Frankel, Ossandón and Pallesen 2019, 166]. In detailing how markets are evaluated and transformed with the specific tools of economic expertise, the social studies of markets scholarship showcases technocracy’s decisive contribution to neoliberal resilience. Institutionalized as “a policy regime where market-based policy instruments are actively constructed by governments to act upon collective problems” [Ossandón and Ureta 2019, 176], neoliberalism proves resistant to demands for decommodification inasmuch as the “market engineers” behind these instruments problematize them in a way that precludes market reversal or even discussion of non-market solutions. Implicit in this theorizing is that neoliberalism’s entrenchment hinges on the persistent vigilance of market-minded experts whose scientific judgement, pace Polanyian moral critiques of market society, dictates that surgically designed market fixes remain technically superior, hence morally preferable, for delivering socially desired outcomes. Acting as enlightened gatekeepers, technocrats come to embody Davies’ [2016, 6] definition of neoliberalism as “the pursuit of the disenchantment of politics by economics.”

Focusing on the experts’ task of making the market work as a “good” market, social scientists have revealed how technocratic critique of market-based policies paradoxically leads to market reinforcement instead of reversal. Yet the literature fails to explain why the value judgements supposedly occluded or even erased by marketization, including moral disagreements over the market organization of policy fields, sometimes (as in the case of the SOM) reemerge in technical form, let alone how, despite the politicization of what is not supposed to be political (i.e., the “technical”), the prevailing form of market expertise manages to preserve its performative efficacy (as it did after the SOM experts settled the fair responsibilization controversy). My empirical puzzle, therefore, comprises two apparently contradictory aspects in need of simultaneous elucidation. First, experts’ failure to depoliticize demands for fair responsibilization in market-oriented education, which points to the moral and ideological embeddedness of their scientific judgement. Second, experts’ success in imbuing their market device with the technical capacity to perform presumably objective valuations (as the SOM eventually did) even in highly politicized contexts, which points to the conventions and procedures that make expertise efficacious in the first place.

Elaborating on Abend’s [2014] concept of “moral background”, this paper addresses both of these aspects holistically. I contend that Abend’s distinction between nested yet intertwined orders of morality makes it possible to discern the conditions for subsuming ideological dissent into consensual forms of technocratic decision-making. Scholars have criticized the “moral background” framework for its limited generalizability beyond the two ideal types for which it was originally developed: the “Christian Merchant” and “Standards of Practice” types of business ethics. Others have posited that because the defining features of the moral background sustaining the “Standards of Practice” type (e.g., scientism, naturalism, utilitarianism) have become virtually indisputable in contemporary economic discourse, Abend’s guiding thesis (that first-order moral agreements may nevertheless be grounded in irreducible regimes of moral justification) lacks sociological currency. Specifically, the concept has little to say about opposing ways of rationalizing business conduct (e.g., shareholder vs. stakeholder value) that, albeit rooted in a similar moral background, reflect radically different views of how capitalism should be organized.

Taking heed of these critiques [for an overview, see e.g., Livne et al. 2018], this research extends Abend’s framework to contemporary instances of technocratic decision-making, yet reverses its analytical logic. Instead of asking how incommensurable moral backgrounds may lead to concurring moral stances, I demonstrate how technocrats’ shared ways of translating moral disputes into technical decisions can make consensus possible even under conditions of moral dissent. I thus draw upon Abend’s analytical distinction between first and second orders of morality to theorize the conditions of commensurability of competing moral judgments among the experts in charge of devising the SOM. A first-order morality concerns questions such as what different moral views exist about something and what kinds of behavior are associated with different moral standpoints. A second-order morality, by contrast, concerns more fundamental issues such as what types of objects can be morally evaluated, what kinds of reasons can be legitimately invoked to support these evaluations, what repertoires of concepts and ontologies ground such reasons, or whether moral arguments themselves can be arbitrated by recourse to objective procedures. Together, these second-order issues take the form of a shared set of tacit conventions, constituting a common moral background.

As the empirical section makes clear, when devising the SOM, conservative and progressive technocrats clashed over the selection of the statistical method (ordinary least squares vs. hierarchical linear models), each side favoring the method whose ordinalizations better fit its partisan definition of fairness. A close reading of experts’ reason-giving shows that what divided their modelling choices was the intrinsic value they attributed to the SOM as a tool for consumer choice and market competition. However sharp, experts’ discrepancy in the fairness controversy was but a first-order disagreement, one that reflected morally charged evaluations of the effectiveness of market mechanisms in the provision of public goods. Still, a common second-order morality created the conditions for the market device’s performative efficacy. Beyond the surreptitious ideological dispute among experts, the divide was not deep enough to splinter a more fundamental consensus, one which allowed them to settle the controversy and get on with creating the SOM. Although their moral judgments on the distributive consequences associated with alternative ordinalizations diverged, they ultimately shared an economic style of reasoning [Hirschman and Berman 2014]: an array of tacit conventions, econometric conventions in particular, that helped reduce the moral conundrum to a tradeoff between proficiency and effectiveness standards. It was the epistemological assumptions, routine measurements, administrative data, and calculation methods assembled in the process that instituted education quality as an objective, quantifiable trait present in all schools to different degrees and, crucially, independent of social context.

Research Methods and Data

To open the “black box” of the SOM as a valuation device, I drew on Latour’s [1987] perspective for studying “science in action.” My method, however, is not ethnographic. Though my goal was to analyze “the SOM in the making,” in practice I did not “follow education specialists around” and “back into their research lab.” Still, my inquiry did not simply focus on the SOM’s enactment as a “ready-made” market device. Neither did it take post-hoc justifications of the SOM as evidence of expert consensus. Rather, I treated the executive order enacting a particular ordinalization method, as well as the official justifications that supported it, as culminating statements in a sequence of expert performances. Precisely because these statements closed (and black-boxed) the fair responsibilization controversy, or at least settled most of its technical aspects, I looked back into the sequence to reconstruct the process through which an issue, i.e., market failure in the provision of quality education, was first made into a problem, then transformed into a quest for measuring school quality fairly, and finally reduced to a technical debate on how this fairness requirement could be satisfied through different modeling techniques. In this reconstruction, another important statement stood out: the public record of the session in which the AEQ board members argued and voted for the statistical model used to factor student socioeconomic status into estimates of school quality. Exposing the Janus-faced character of the ready-made SOM vis-à-vis the SOM in the making, this piece of evidence allowed politics to re-emerge in full force.

Hence the bulk of my analysis consisted of a post-hoc, second-order problematization of experts’ real-world application of quantitative methods, as well as of experts’ interpretations of these methods’ performative consequences. I paid particular attention to when, how, and why these experts resorted to these methods to settle the fair responsibilization controversy, examining the decision-making process through which they deemed some facts and criteria about school assessment useful or useless and rendered some valuation criteria effective and not others. I also looked at how the people with an authoritative say in matters of school assessment were enlisted and convinced. In short, I sought to understand how, once all these people, facts, and methods held together, the quality assessments performed by the SOM came to be accepted as objective reality.

To further contextualize this analysis, I delved into an archive of legislative acts, policy documents, official minutes, public statements, and reports I collected and sorted chronologically. This archive includes, of course, the executive order enacting the SOM as well as the publicly available records of the AEQ board meetings. Additionally, I examined the Law of Education Quality Assurance (LEQA), the report of the Presidential Advisory Commission of 2006, the reports issued by the Education Commission in Congress to inform plenary sessions about reform bills, the minutes of the plenary sessions when these bills were addressed, the session minutes of the National Council of Education (NCE), and two key policy documents: the report by the “SOM Commission” submitted to the AEQ and NCE for consideration, and the report by the Taskforce created in 2014 for the revision of the test-based accountability regime. These texts explicitly discuss accountability policy and address the techniques and procedures that were eventually adopted. I examined all these materials for the use of moral judgments (claims of fairness or unfairness) to justify policy positions regarding school assessment methods.

Likewise, to make sense of the positions of the experts directly implicated in the SOM’s design and implementation, I researched who these experts were, documenting their institutional affiliations, professional backgrounds, and, importantly, their political leanings. I inferred the latter from publicly available information (e.g., blogs, newspapers, websites) about their ties to the private or public sector of education provision; their professional involvement in academic institutions and think tanks publicly known for their conservative or progressive tendencies; and their prior participation in government bodies appointed by conservative or progressive presidents.

I supplemented this archival work with 57 in-depth interviews I conducted in Chile in the summer of 2014 and the fall of 2015. Interviewees were selected through strategic and snowball sampling techniques [Maxwell 2012], and included different stakeholders involved in the controversies over the regulation of education markets in Chile. Many of the interviewees were experts who hold (or have held) key positions in the governance structures of education, in think tanks involved in education policy networks, or in leading educational research institutes. Among other key informants, I interviewed the former CEO of the AEQ, the president of the AEQ’s board, the president of the NCE, and the experts in charge of writing some of the hallmark reports and policy documents leading to the implementation and subsequent revision of the quality assurance system. Although the empirical material cited in this paper comes almost exclusively from my archival analysis, many of the conceptual issues, questions, and information that helped me reconstruct the SOM’s genealogy came out of conversations with my informants and interview respondents.

The SOM in the Making

A stylized genealogy of a school accountability device

The education authorities of Chile set out to develop high-stakes accountability regulation in the context of broader transformations in the political economy of school reform. The decisive shift began in 2006, when hundreds of thousands of high-school students took to the streets and occupied school buildings across the country [Donoso 2013]. Declaring quality education a universal right rather than an exclusive market good, the student movement condemned profit-making and discriminatory enrollment in the voucher-funded private sector, and demanded that the state reassume direct control and funding of public schools. The students’ manifestoFootnote 7 also claimed that education privatization was furthered by the public use of school rankings based on average proficiency in the SIMCE (Sistema de Medición de la Calidad Educativa), a standardized test explicitly created by the Chicago Boys during Pinochet’s dictatorship to inform parental choice in the context of privatization and decentralization [Benveniste 2002].

The protests provoked intense intra-elite controversy. While progressive reformers sought to give preferential treatment to public schools and set restrictive barriers for private voucher schools to operate in the market, conservatives contended that the state ought to remain impartial regarding the type of provision and focus merely on overseeing and enforcing quality standards [Falabella 2015; Larroulet and Montt 2010]. After two years of political stalemate, and confronted with the powerful opposition of an alliance of private providers, mainstream media, and influential think tanks against state interference [Corbalán Pössel and Corbalán Carrera 2012], the progressive government of Michelle Bachelet crafted a bipartisan compromise that paved the way for reform but alienated the social movement that had demanded it.Footnote 8 The consensus was that market failures were to be “repaired” to assure quality education for all.

Political conflict over the regulation of the K-12 education market gave rise not only to the LEQA, but also to the SOM itself, with its mandate for fair responsibilization. The urgency of writing explicit safeguards into the law became apparent in the wake of the victory of a right-wing coalition in the 2009 presidential election, the first such victory since the democratic transition. Even as the new government reaffirmed its support for the LEQA (still making its way through Congress) to honor bipartisan accords, it implacably insisted on holding failing schools accountable to sovereign consumers. In June 2010, the Ministry of Education (Mineduc), directed by Chicago-trained economist Joaquín Lavín, released the latest results of the SIMCE (which measures proficiency) and mailed them to every family in Chile along with a map on which all the schools of the district were colored red, yellow, or green according to average test scores, like a stoplight. “Lavín’s stoplights” (los semáforos de Lavín, as they became popularly known) further validated the market-fundamentalist claim that voucher-funded private schools generally outperform municipal schools. For anti-market advocates, the maps showed quite the contrary: the extreme overlap of educational opportunity gaps with spatial patterns of class-based segregationFootnote 9. So powerfully did SES predict test scores that some of the poorest districts in dense metropolitan areas did not have a single school in yellow or greenFootnote 10. Anti-market critics pointed to the tautology of performance measures that merely reified pro-market reformers’ trashing of public schools serving mostly poor students.

Public controversy over the implacable application of market discipline to drive school improvement resonated within the still-unfinished legislative debate. This final round of deliberation in Congress remained plagued by skirmishes over three issues: the use of “raw” test scores (that is, percentages of students attaining proficiency) as the yardstick of school quality; petitions to compute adjustments by SES or even to incorporate value-added measures to capture effectiveness, the specific effect of instruction on student growth; and suspicion of the distortions these statistical manipulations might introduce into the universal enforcement of common core standards. Nevertheless, lawmakers passed the LEQA in early 2011, shortly before a new wave of student protests broke out, further radicalizing the conflict over the market model of education and monopolizing the public agenda for the rest of the year.

Simulating commensuration

It was in this polarized atmosphere that the Mineduc and the newly created AEQ undertook the tricky task of developing a “fair” and “socially sensitive” methodology to render schools commensurable on a single metric of quality. Already in late 2010, anticipating the imminent enactment of the LEQA, the government set up an ad hoc commission of specialists and tasked it with proposing a tentative SOM to the soon-to-be-created AEQ. This “Ordinalization Commission” was headed by conservative economists in charge of the SIMCE administration and other divisions of the Mineduc, and worked in consultation with another economist hired by the United Nations Development Program but institutionally affiliated with a private university well known for its conservative leanings (see Figure 1). Over the next three years, the commission reviewed the international literature and surveyed national systems of high-stakes accountability. It also gathered and consolidated all the necessary data sources, revised and calculated all the necessary indicators, and, more crucially, conducted simulations based on alternative model specifications and estimation methods. To validate and review drafts, the commission convened three rounds of meetings with school administrators and principals and organized twelve workshops with independent experts (mainly economists, psychometricians, educational psychologists, and sociologists). In total, the commission consulted with more than 140 people.

Figure 1 Experts’ backgrounds, affiliations, and partisan leanings

Source: Author’s elaboration based on Fundamentos Metodología de Ordenación de Establecimientos, prepared by the Ordinalization Commission and the Research Division of the Agency of Education Quality 2013.

The work of the Ordinalization Commission was documented in detail in the report Fundamentos Metodología de Ordenación de Establecimientos (Foundations of the School Ordinalization Methodology). Most of the language and formulae of the 2014 Executive Order came out of this report.Footnote 11 After recognizing quality and equity in education as a political mandate that stemmed from the student movement of 2006 and was then made official by the Presidential Council, as well as the subsequent bipartisan consensus on preserving the voucher-funded public-private scheme of service delivery, the report made it clear that the proposed design of the SOM sought to fulfill two different requirements simultaneously: the “fair responsibilization” of schools, and the achievement of “learning standards” by students [39].

This attempt at reconciling in a single instrument competing claims for valuing school effectiveness and enforcing proficiency benchmarks severely constrained the commission’s decisions and justifications. But it also facilitated the translation of the moral conundrum (proficiency vs. effectiveness) into a technical matter concerning the identification of the “correct” econometric equation.

The statistical aspects of this controversy are puzzling, if not downright confusing. Yet they are central to the school valuation problem at stake. There was technical consensus among experts that the school performance categories stipulated in the LEQA should be drawn from a composite index. Experts similarly agreed that index scores overwhelmingly reflected schools’ average proficiency in common core standards, measured by routine standardized tests.Footnote 12 Experts further concurred that, through statistical modeling and adjustment, absolute proficiency levels could be made relative to individual- and school-level data that roughly approximated student SES, thereby honoring the principle of fair responsibilization. Finally, the alleged requirement of balancing presumably incompatible quality standards within a single ordinal measurement justified a second correction: the introduction of proficiency “thresholds” that, once the SES adjustment had been computed, automatically reassigned very high or very low performing schools (in terms of absolute test scores) to the highest and lowest categories of the ordinalization, a sort of automatic exemption from the effectiveness standard.
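The two-step logic just described, an SES-adjusted index followed by proficiency thresholds that override it at the extremes, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the four category labels, the cut points, and the floor and ceiling values are hypothetical placeholders, not the values in the Executive Order or the commission’s report.

```python
# Minimal sketch of "adjust first, then apply proficiency thresholds".
# All labels, cut points, and threshold values are hypothetical placeholders.

CATEGORIES = ["Insufficient", "Medium-Low", "Medium", "High"]  # assumed four tiers, lowest first


def classify_adjusted(adjusted_index: float, cutpoints: list[float]) -> int:
    """Return a category position (0 = lowest) by counting the ascending cut points the index reaches."""
    return sum(1 for c in cutpoints if adjusted_index >= c)


def classify_with_thresholds(
    raw_score: float,
    adjusted_index: float,
    cutpoints: list[float],
    floor: float,
    ceiling: float,
) -> str:
    """Classify on the SES-adjusted index, but let absolute proficiency override it at the extremes."""
    if raw_score >= ceiling:
        return CATEGORIES[-1]  # very high raw score: exempt from the effectiveness standard
    if raw_score <= floor:
        return CATEGORIES[0]   # very low raw score: bottom category regardless of adjustment
    return CATEGORIES[classify_adjusted(adjusted_index, cutpoints)]


cuts = [-1.0, 0.0, 1.0]  # three hypothetical cut points separate four categories
print(classify_with_thresholds(310, -0.4, cuts, floor=220, ceiling=300))  # ceiling overrides the adjustment
print(classify_with_thresholds(250, -0.4, cuts, floor=220, ceiling=300))  # adjusted index decides
```

In this sketch a school whose raw score clears the ceiling lands in the top category even though its adjusted index alone would place it second from the bottom, which is the “automatic exemption” the paragraph describes.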

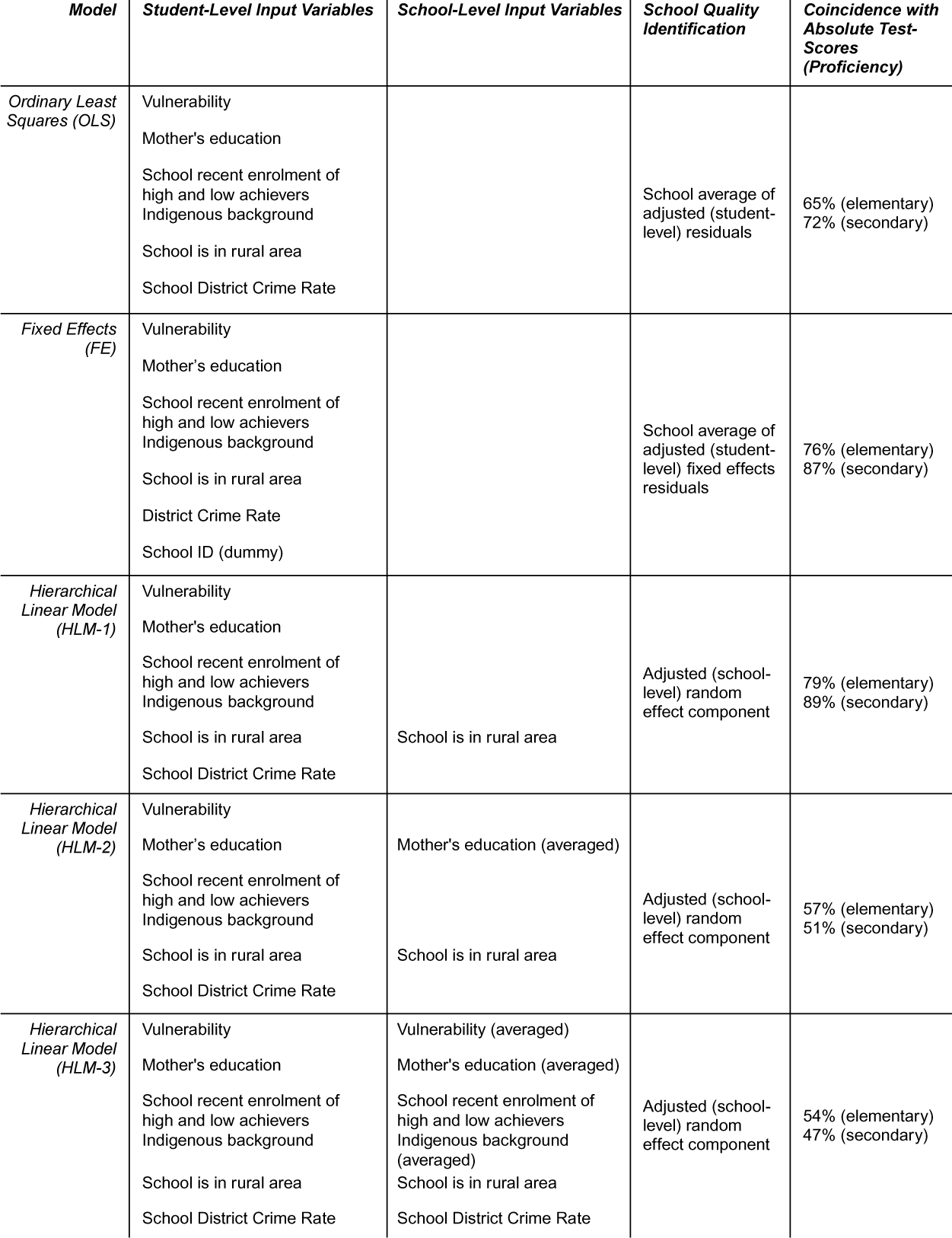

The most crucial decision was which regression equation should be used to estimate the index and then perform balanced post-estimation recalibrations. To aid decision-making, the commission simulated and compared the predicted distributions of five alternative SOMs, each corresponding to a different modeling strategy: Ordinary Least Squares (OLS), a Fixed Effects model (FE), and three different specifications of Hierarchical Linear Models (HLM). As Figures 2 and 3 show, the weight of SES adjustments varied significantly according to the estimation method and model specification. While FE and HLM-1 brought the SOM closer to the unadjusted index of absolute test scores, HLM-2 and HLM-3 returned adjusted rankings that deviated greatly from proficiency scores. OLS fell in the middle, allowing for moderate, Solomonic adjustments.

Figure 2 Characteristics of the models considered to compute SES adjustments

Comment: The main difference between HLM-2 and OLS (the two models put to a vote in the final round) is the inclusion in HLM-2 of SES indicators averaged at the school level. In OLS, school quality scores are computed by averaging adjusted residuals for each school, while in HLM school quality scores derive from adjusted random effects at the school level.

Source: Author’s elaboration based on Fundamentos Metodología de Ordenación de Establecimientos, prepared by the Ordinalization Commission and the Research Division of the Agency of Education Quality 2013.

Figure 3 Simulations of weights of the SES adjustment produced by the Ordinalization Commission

Comment: This can be interpreted as a measure of the degree to which each model modifies the original distribution of average test scores (proficiency) in elementary schools (left) and secondary schools (right). Note that FE and HLM-1 yield the smallest adjustments, HLM-2 and HLM-3 the largest, and OLS falls somewhere in the middle.

Source: Fundamentos Metodología de Ordenación de Establecimientos, prepared by the Ordinalization Commission and the Research Division of the Agency of Education Quality 2013.

Although the report never made it explicit, this gap in levels of SES adjustment depended on the degree to which the contextual effects of SES (that is, effects at the school level) were explicitly modeled and estimated separately from the individual effects of SES (at the student level). This contextual effect, namely the impact of the overall context of poverty or wealth in which a school operates, was better captured by HLM-2 and HLM-3, in which key student socioeconomic characteristics such as mother’s education or vulnerability were averaged and added back in at the organizational level. In class-segregated education systems (i.e., systems with large between-school SES variation) such as Chile’s, the contextual effect of SES is usually large, so its parametrization and subsequent inclusion in post-estimation adjustments significantly reduces the remaining between-school variance, in this case the variability of school quality, thus defined. Hence the contrast with the other models was sharp. Although HLM-1 and the FE model did consider the clustering of students into schools, their (mis)specification of school effects simply ignored the contextual dimension of SES. The OLS regression, by contrast, assumed no nesting of students within schools, so the model only partly captured the between-school SES effect and confounded it with the within-school SES effect.
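A small simulation can make this mechanism concrete. The sketch below generates synthetic data for a highly segregated system in which test scores depend on student SES, on school-average SES (a contextual effect), and on a true school effect; every parameter is invented for illustration and nothing is estimated from Chilean data. It then computes school “quality” as the average student residual under two specifications: a pooled regression on student SES only, analogous to the OLS option, and a regression that also includes school-mean SES. The second is a plain OLS stand-in for the contextual component of HLM-2 and HLM-3 and omits the random-effects machinery of a true hierarchical model. Only the Python standard library is used, so the least-squares solver is hand-rolled.

```python
import random
from statistics import pvariance


def ols(X, y):
    """Least squares: solve the normal equations (X'X) b = X'y by Gauss-Jordan elimination."""
    k = len(X[0])
    M = [
        [sum(r[i] * r[j] for r in X) for j in range(k)] + [sum(r[i] * yi for r, yi in zip(X, y))]
        for i in range(k)
    ]
    for col in range(k):
        piv = max(range(col, k), key=lambda r: abs(M[r][col]))  # partial pivoting
        M[col], M[piv] = M[piv], M[col]
        for r in range(k):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [M[i][k] / M[i][i] for i in range(k)]


def simulate(n_schools=200, n_students=30, seed=1):
    """Synthetic segregated system (invented parameters): rows of (school_id, student_ses, score)."""
    rng = random.Random(seed)
    rows = []
    for j in range(n_schools):
        school_ses = rng.gauss(0, 1)  # large between-school SES variation
        quality = rng.gauss(0, 0.3)   # true school effect
        for _ in range(n_students):
            ses = school_ses + rng.gauss(0, 0.5)  # smaller within-school SES variation
            # individual SES effect (1.0) plus contextual SES effect (2.0) plus school effect plus noise
            score = 1.0 * ses + 2.0 * school_ses + quality + rng.gauss(0, 1)
            rows.append((j, ses, score))
    return rows


def school_quality(rows, with_context):
    """Average each school's student residuals, optionally controlling for school-mean SES."""
    n = max(j for j, _, _ in rows) + 1
    ses_sum, count = [0.0] * n, [0] * n
    for j, ses, _ in rows:
        ses_sum[j] += ses
        count[j] += 1
    ses_mean = [s / c for s, c in zip(ses_sum, count)]
    X = [[1.0, ses, ses_mean[j]] if with_context else [1.0, ses] for j, ses, _ in rows]
    beta = ols(X, [score for _, _, score in rows])
    resid = [0.0] * n
    for x, (j, _, score) in zip(X, rows):
        resid[j] += score - sum(b * v for b, v in zip(beta, x))
    return [r / c for r, c in zip(resid, count)]


rows = simulate()
pooled = school_quality(rows, with_context=False)     # OLS-like: student SES only
contextual = school_quality(rows, with_context=True)  # adds school-mean SES
print(f"between-school variance, pooled:     {pvariance(pooled):.3f}")
print(f"between-school variance, contextual: {pvariance(contextual):.3f}")
```

With these invented parameters, the pooled specification leaves noticeably more between-school variance labeled as “quality,” because part of the contextual SES advantage ends up in the averaged residuals of wealthier schools; adding school-mean SES removes most of it.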

Model choice, and the weight of SES adjustment the decision carries with it, therefore appeared highly consequential for determining the ultimate prevalence of proficiency vis-à-vis effectiveness in the predicted ordinalization, something the report made crystal clear:

The higher β, the higher the adjustment (SES index) and less will be required from the most vulnerable vis-à-vis non-vulnerable schools in the Unadjusted Index [that is, absolute proficiency] in order to move up in the Ordinalization categories. Then, if the adjustment level is high, vulnerable schools may achieve high values in the Final Index with low scores in the Unadjusted Index [486].
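The quoted passage can be restated as a stylized equation. This is an illustrative simplification, not the report’s actual formula: assume that the Final Index $FI_j$ adds to the Unadjusted Index $UI_j$ a term proportional to a hypothetical school vulnerability index $V_j$, centered on its national mean $\bar V$, with $\beta$ the weight of the SES adjustment, and let $c$ be the Final Index cut point for a given category.

```latex
\begin{aligned}
FI_j &= UI_j + \beta\,(V_j - \bar V),\\
FI_j \ge c \;&\Longleftrightarrow\; UI_j \ge c - \beta\,(V_j - \bar V),\\
UI^{\min}_a - UI^{\min}_b &= -\beta\,(V_a - V_b) \quad \text{for any two schools } a \text{ and } b.
\end{aligned}
```

Under this simplification, the minimum unadjusted score a more vulnerable school $a$ needs to reach a category falls below that of a less vulnerable school $b$ by an amount that grows linearly with $\beta$; a gap of the size the report mentions for HLM-2 and HLM-3 (up to 60 points) would correspond to $\beta\,(V_a - V_b) = 60$.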

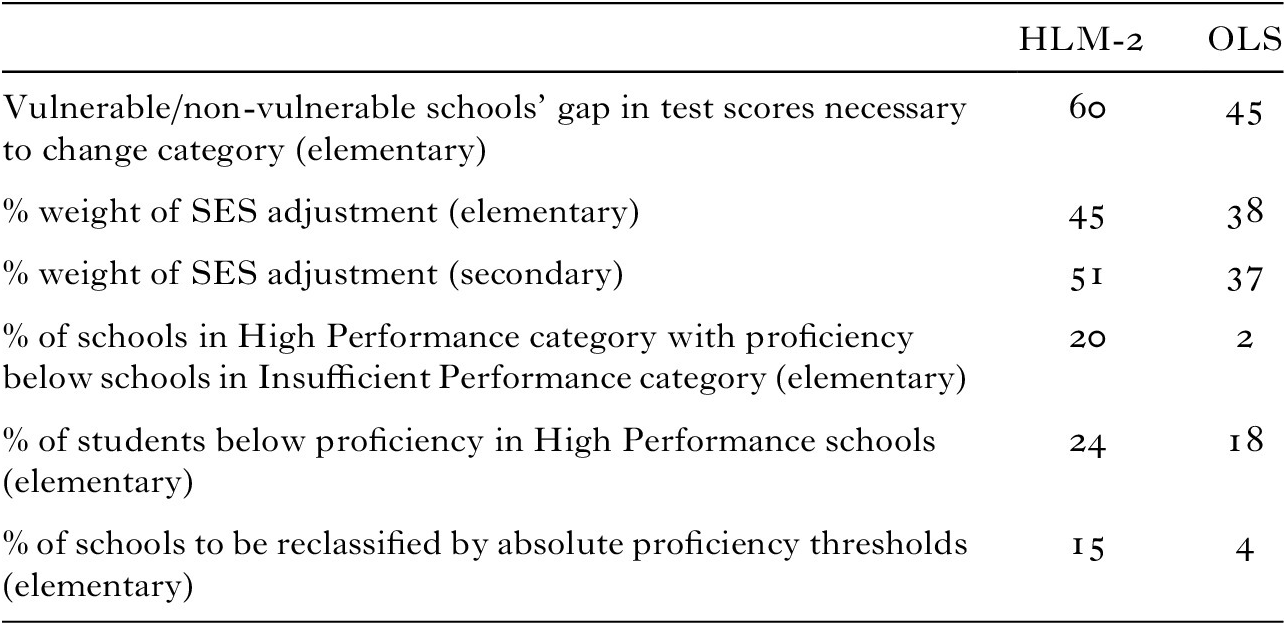

The interpretation of the distributional consequences associated with alternative calibrations of the instrument indicates that fear of effectiveness outweighing proficiency standards prevailed over any other consideration. After sorting the five models by SES effect size and discarding HLM-1 and FE for yielding meager adjustments, the report noted that in HLM-2 and HLM-3 “there are schools that are required up to 60 points less in terms of the Unadjusted Index [a measure of proficiency scores] to move from one Ordinalization category to another” [487]. In these models, “the adjustment level is such that a considerable number of schools with similar test scores will be classified in three different categories” [487].

Most unacceptable to the Ordinalization Commission was the downgrading of prestigious elite schools, known from media rankings for their high levels of proficiency. In this respect, the report warned that choosing HLM-2 or HLM-3 would mean that “nearly all the least vulnerable schools with the best test scores of the country classify into the Medium Performance”, which “explains why these models require the disproportionate use of [post-adjustment proficiency] thresholds” [490]. Finally, the report contended that analyses of model stability showed HLM-2 and HLM-3 to be the most sensitive to cross-time variation in the number of schools classified at the bottom of the ordinalization. For the commission, adopting such a time-sensitive model meant compromising the high-stakes purposes built into the design of the policy device since, in the report’s words, “it will be more unlikely for a school to be systematically classified into the Insufficient Performance category for five years” [515], the legal requirement for making a school subject to intervention and closure.

Thus construed, the distributional consequences associated with the alternative models made the Ordinalization Commission’s choice obvious: OLS, deemed the model that best struck a balance between too much and too little adjustment, was preferable. In this model “the weight of SES adjustment is more similar to that of the models that correct the most, and it does not yield counterintuitive results”; for the Commission, counterintuitive results were those that downgraded high-proficiency schools and boosted low-proficiency ones. Therefore, OLS “achieves a better balance between the two objectives of the SOM: to hold schools responsible with fairness and create equal expectations for all the students in the country” [517].

Arbitrating between models’ moral values

In October 2012, the boardFootnote 13 of the AEQ began holding official sessions. The first order of business was to deliberate and decide on the proposal drafted by the Ordinalization Commission. To make a final judgment on the suggested statistical model, the board solicited the opinions of two independent consultantsFootnote 14. The first of these experts was generally sympathetic to the commission’s suggestions. He acknowledged OLS’s weaknesses in terms of endogeneity and misspecification, problems that value-added models could in principle address. However, value-added models would require panel data, which were not available at the time. And in any case, he went on, value-added models would not resolve all these problems. Likewise, for this specialist, hierarchical linear models could offer a reasonable alternative for handling selection biases, but these models “do not necessarily dominate over OLS” [550]. All in all, he fundamentally agreed with the two criteria followed by the commission: classifying schools according to whether their predicted residuals fall below or above the line produced by regressing test scores on SES, but also reclassifying them when they reach minimum and maximum levels of absolute proficiency.

The second expert, however, was less sympathetic. He expressed support for adjusting the index by factors beyond school control, which “acknowledges the well-known fact that achieving a certain level of quality education is harder or easier depending on conditions such as the ones this proposal considers” [534]. In this regard, he welcomed the fact that the SOM “seeks to represent in fairer fashion what is possible to achieve in different educational context” [534]. And yet, the choice of statistical model concerned him greatly. For him, OLS’s assumptions about the distribution of the error term were simply untenable, which raised serious validity issues concerning what in applied economics is known as “identification strategy”: the procedures adopted to establish that the estimated parameters approximate the true parameters, as they would be estimated under experimental conditions. Using HLM instead would at least have allowed for a better treatment of residuals to identify the parameter of interest, namely, school quality:

To hold schools responsible for their outcomes, it is necessary to make sure that the utilized analysis model clearly identifies that portion of the examined variability that actually depends on the school and not on other factors. In this sense, the use of residuals of a conventional regression equation (with student-level data), as baseline to establish school effects, in practice confounds the part attributable to the school with another that depends on the student-level error. The use of HLM allows for separating these components […] These models identify a school-level residual that is different from the student-level residual, which provides better statistical support to assert that a particular residual represents the school effect once all the control variables are included (the proposed method averages the individual residuals of all students in the school as expression of the school effect, after having run a regression at the individual level). [535–536, 540–541]
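The distinction the second consultant draws can be illustrated with the textbook random-intercept model, $y_{ij} = x_{ij}'\beta + u_j + e_{ij}$, where $u_j$ is the school-level residual (variance $\tau^2$) and $e_{ij}$ the student-level residual (variance $\sigma^2$). Averaging a school’s student residuals, as the OLS proposal does, treats the raw mean $\bar r_j$ as the school effect even though it still contains the averaged student-level error. With known variance components, the empirical Bayes (BLUP) estimate of $u_j$ instead shrinks $\bar r_j$ toward zero by the reliability factor $\lambda_j = \tau^2 / (\tau^2 + \sigma^2 / n_j)$. The sketch below assumes the variance components are known (in practice they are estimated), and all numbers are illustrative only.

```python
def reliability(tau2: float, sigma2: float, n_students: int) -> float:
    """Share of the variance of a school's mean residual that is true school-level variance."""
    return tau2 / (tau2 + sigma2 / n_students)


def eb_school_effect(mean_residual: float, tau2: float, sigma2: float, n_students: int) -> float:
    """Empirical Bayes (BLUP) estimate of u_j: the averaged residual shrunk toward zero."""
    return reliability(tau2, sigma2, n_students) * mean_residual


# Illustrative values only: the same averaged residual for a small and a large school.
for n in (10, 100):
    print(n, round(eb_school_effect(0.5, tau2=0.09, sigma2=1.0, n_students=n), 3))
```

With these hypothetical variances, the larger school keeps most of its averaged residual while the smaller one keeps roughly half, because a small school’s mean residual is dominated by student-level noise rather than by anything the school does.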

The question at stake, again, was how much of the SES contextual effect was being disguised as school quality, supposedly captured by the residual part of the model. Underlying the apparently esoteric technical quandary about statistical artifacts was a serious first-order disagreement [Abend 2014]: one over the extent to which the four-tiered ranking of quality values performed by the soon-to-be ordinalization device might potentially deviate from the status hierarchies already built into the highly segmented Chilean school market [see e.g., Podolny 1993]. Simply put, should absolute proficiency, better reflected by OLS post-estimation adjustments, prevail as the valuation criterion, the resulting hierarchy of school quality would tacitly validate the class-based tiers in which the market is organized (see Table 1). Should the valuation device be more sensitive to the specific school effect on proficiency—as in HLM-2 and HLM-3 post-estimation adjustments—the performed ordinalization might potentially invert, or at least counter, the market’s status orderFootnote 15.

Table 1 Mean and percentile distribution of within-school % of socioeconomically vulnerable students by school sector

Comment: Municipal public schools have a higher % of vulnerability than voucher private schools, which in turn have a higher % of vulnerability than non-voucher private schools. The higher the SES adjustment, the higher the boost in quality scores for municipal public schools.

Source: Author’s elaboration based on statistics reported in the document Fundamentos Metodología de Ordenación de Establecimientos, prepared by the Ordinalization Commission and the Research Division of the Agency of Education Quality 2013.

In light of this discrepancy, opinions split between board members sympathetic to the conservative government and those gravitating towards the progressive opposition (Figure 1). They did not, however, openly and explicitly confront this ideological disagreement. Instead, comparing the statistical simulations based on HLM-2 and OLS, that is, the orders of worth performed by each calibration strategy, board members resorted to moral judgments to justify their technical preference for one econometric equation or the other.

What did technocrats make of the comparison of pre- and post-adjustment simulations based on both estimation methods? (See Table 2 for a small sample of this comparison.) A minority of board members voiced their preference for HLM-2, arguing explicitly that greater adjustments by SES better captured school effectivenessFootnote 16. School quality, they contended, could only be gauged relative to school practices. A “fair responsibilization” of schools for the service they provide must value schools for effects they can reasonably be assumed to cause. And by no reasonable standard, they claimed, should the responsibilization of schools in disadvantaged communities depend on their students’ social class background.

Table 2 Some characteristics of the simulated ordinalizations based on OLS (model choice) and HLM-2 (alternative)

Source: Author’s elaboration based on statistics reported in the document Fundamentos Metodología de Ordenación de Establecimientos, prepared by the Ordinalization Commission and the Research Division of the Agency of Education Quality 2013.

The board’s majority, however, opted for OLS. Echoing the market-fundamentalist perversity thesis [Hirschman 1991; Somers and Block 2005] that providing unconditional support to the poor disincentivizes work and exacerbates dependency, one board member warned that larger adjustments by SES would significantly alter low-SES public secondary schools’ rank in the final ordinalization (i.e., move them up two positions, from “insufficient” to “satisfactory”), which might constitute a disincentive for failing schools (in terms of absolute levels of proficiency) to seek improvement.

Correcting [by SES] does justice [to these schools] because it takes into account the hardships these schools face, but on the other hand, this does not necessarily provide the proper signal for the system to improve and take charge of students’ [learning] difficulties. For that reason I choose the model that adjusts to a least extent, knowing that only value added models really allow for a fair classification of schools.

This perspective was seconded by the president of the board, who argued that smaller adjustments by SES prudently prevented too much distortion of gross results, thereby taking account of “vulnerable schools’ greater learning hardships.” Endorsing the president’s position, a third member deemed HLM-2 adjustments “unfair” to the poor, since the same (absolute) score would categorize well-off schools as “unsatisfactory” quality and vulnerable ones as “high” quality; a situation that would “crystalize too-low expectations [for the poor] and result in inadequate targeting of state resources”—again, the perversity thesis.

The argument for adopting a conservative approach to SES adjustment was then made explicit in a policy briefFootnote 17 detailing the School Classification Methodology (note the adoption of the new terminology) released by the AEQ:

Some HLM specifications generate very different achievement expectations between students from different SES contexts, which would go against the principle of quality and equity in education provided by art. 3 of the General Education Law. In practice, using a method that generates very different expectations implies giving a signal that would consider low-SES students’ results as excellent, when those same results would be considered unacceptable for students in more favorable contexts. In other words, the classification generated by some… methods risks masking the inequities in the educational opportunities that schools provide in our country [7-8].

Beyond the performative effects that higher SES adjustments might have on expectations, the AEQ also invoked pragmatic reasons concerning the correct targeting of resources to those who actually need them. As the law mandated that schools classified at the bottom automatically qualify for government supervision and guidance, assigning low-quality status to a larger number of poor schools would, by this reasoning, yield a “fairer” pattern of resource allocation. Adjusting by HLM-2, however, “would fail in the correct identification of schools that need support”, which would “deprive underperforming low-SES schools from the chance of receiving Orientation and Evaluation Visits and, then, Technical Pedagogical Support, benefiting those schools from less vulnerable contexts” [8].

To ground these arguments in evidence, the AEQ presented simulation results of pre- and post-adjustment ordinalizations for different model specifications, indicating that adjusted HLMs “with averaged variables” yielded “classifications in which more than 20% of schools in the category High Performance (schools with students relatively more vulnerable), would have worse learning results [in terms of absolute SIMCE scores] than schools with less vulnerable students in the category Insufficient Performance” [14].
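The AEQ’s summary statistic amounts to a rank-reversal check that can be run on any simulated classification. Because the quoted passage does not spell out the exact comparison, the sketch below adopts one possible operationalization, which is an assumption: the share of schools classified High whose raw (unadjusted) score is below that of at least one less vulnerable school classified Insufficient. The `School` record, its fields, and the example values are hypothetical.

```python
from typing import NamedTuple


class School(NamedTuple):
    category: str         # hypothetical labels, e.g. "High" or "Insufficient"
    raw_score: float      # unadjusted average test score
    vulnerability: float  # hypothetical share of vulnerable students


def rank_reversal_share(schools: list[School]) -> float:
    """Share of High schools outscored (in raw terms) by some less vulnerable Insufficient school."""
    high = [s for s in schools if s.category == "High"]
    insufficient = [s for s in schools if s.category == "Insufficient"]
    if not high:
        return 0.0
    reversed_count = sum(
        1
        for h in high
        if any(i.vulnerability < h.vulnerability and i.raw_score > h.raw_score for i in insufficient)
    )
    return reversed_count / len(high)


example = [
    School("High", 240, 0.8),          # vulnerable school ranked High on the adjusted index
    School("High", 300, 0.2),
    School("Insufficient", 260, 0.3),  # less vulnerable, higher raw score, ranked Insufficient
    School("Insufficient", 200, 0.9),
]
print(rank_reversal_share(example))
```

In the toy example, one of the two High schools is outscored by a less vulnerable Insufficient school, so the share is one half. Under a different reading of the AEQ’s wording (for instance, comparing against the Insufficient category’s average), the same data could yield a different share.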

Sanctifying common sense

In December 2013, a second regulatory body, the National Council of Education (NCE), released, as required by the LEQA, a technical reportFootnote 18 on the SOM design proposed by the Ordinalization Commission and approved by the AEQ. The report was based on a study conducted by eight researchers (five national, three international), who evaluated whether the valuation device followed the criterion that “the resulting categories be fair according to the diverse (socioeconomic) contexts, and that they favor mobility [of poorly performing schools] to superior levels” [1]. Overall, the NCE praised the careful and responsible work done by the AEQ, and suggested a smooth, cautious implementation of the new classification system in order to protect its legitimacy. The NCE further acknowledged the technical challenges that constructing these measurements posed in terms of internationally validated scientific standards, which required “a deep knowledge of psychometric and econometric topics” [6]. Finally, the NCE stated that it was acutely aware that the SOM’s legitimacy depended not only on technical aspects but also on its appropriation and implementation by users, and on its consequences.

In terms of how the SOM understood quality, the NCE applauded the inclusion of a conception of equity as a key component of quality. It also praised the methodological use of definitions of quality common in the empirical literature on effective schools. More or less explicitly, however, the NCE validated a notion of quality reduced to schools’ ability to “produce” students who “overcome Insufficient Learning Levels” [7]. In other words, quality amounted to schools meeting cognitive learning benchmarks in specific areas of knowledge, that is, proficiency. Although presented as an inherent component of quality, equity meant nothing more than the achievement of such basic proficiency standards by all.

In light of this conception of quality, it is no coincidence that the report approved of the econometric model finally adopted to compute the scores used in the classification. After acknowledging the lack of academic consensus on the best method to separate school and class effects on student learning, the NCE noted that the AEQ’s decisions were empirically grounded in “an analysis of the obtained classification,” that is, in simulations that considered “different educational realities to an important degree” [8]. Hence the NCE endorsed the AEQ’s intent to produce estimates guaranteeing that “schools be held responsible [after] controlling for those associated factors that are out of their intervention possibilities” [8]. At the same time, the NCE’s report agreed with the AEQ’s criterion of preempting “different learning expectations for students from different SES backgrounds” [8]. These provisions, ultimately, were deemed necessary “to guarantee a fair classification” [8].

Afterlife: adjusting the adjustment

Controversy subsided, but only briefly. The enactment of the SOM not only meant that public categorizations of school quality would now be official and legally binding; it also entailed the intensification and expansion of mandatory testing to more grades and curricular areas. This was made explicit in the Testing Plan of 2012Footnote 19, in which the Mineduc made the increase in national tests from nine to seventeen in 2015 conditional upon the adequate implementation of the SOM. For the conservative government, making testing ubiquitous was necessary “to carry out a valid and reliable ordinalization of elementary and secondary schools” [7]. Achieving robust measures of school effectiveness amounted to a moral imperative, the fulfillment of which was “fundamental to contributing to a fairer and more equitable ordinalization of schools” [10].

This intensification and expansion of high-stakes testing was the context for Alto al SIMCE (Stop the SIMCE), a public campaign “in rejection of the standardization rationality and the effects of the measurement and quality assurance system.”Footnote 20 Launched in mid-2013, right before the presidential election, by a small group of young educational researchers, Alto al SIMCE advocated for “a form of evaluation in concord with an education understood as a social right and not as a commodity.” The campaign soon won the endorsement of hundreds of renowned educational researchers, artists, and intellectuals. It also enlisted the support of the teachers’ union and the main student federations, as well as of their popularly acclaimed former leaders, who were then running for Congress.

In March 2014, a new center-left coalition rose to power with the explicit programmatic commitment of rolling back market-oriented policies in education, including a revision of the testing regime. The new authorities of Mineduc commissioned a group of education specialists of diverse political backgrounds to produce a reportFootnote 21 on possible fixes to accountability policy: the so-called “taskforce for SIMCE revision.” Alto al SIMCE was among the myriad stakeholders invited to the taskforce’s hearings. There the campaign proposed to suspend national testing for three years, stop the implementation of the SOM, and smoothly transition to a new evaluation system based exclusively on survey tests, complemented with formative assessments at the district and school level [28].

Although this proposal received the endorsement of a group of seventeen lawmakers, most of them from the government coalitionFootnote 22, only a minority of the taskforce, linked to the student movement and the teachers’ union and more sympathetic to Alto al SIMCE, pushed firmly for a significant rollback. This group of experts recommended a two-thirds reduction in annual testing [86-88]. They also argued outright for abolishing the SOM and replacing it with a mechanism of targeted assistance for schools falling below a proficiency threshold measured by unadjusted test scores.

The majority report adopted much of the revisionist language, but it endorsed moving forward with the pilot implementation of the SOM, highlighting that at least the new ordinalization “takes students’ socioeconomic characteristics into account” [43]. The SOM, therefore, represented “progress relative to communicating only average scores in SIMCE, which induces erroneous interpretations of school effectiveness” [43]. Still, the majority report recognized multiple validity issues in the design of the SOM. The taskforce questioned the application of proficiency “thresholds” at the two bounds of the post-adjustment index distribution, which assign “schools with high SIMCE in[to] the highest category, even if they have not been effective when considering their SES.” Thresholds also “leave in the lowest category those schools with very low absolute results, even if they achieve better scores than other schools in the same SES level” [62]. Thus, in what appeared to be a reenactment of the moral conundrum pervading the design of the SOM, the report warned of the danger of schools being classified into the extreme categories according to two different criteria: their “absolute results” (proficiency) or “their effectiveness” (value added).
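The two-criteria problem the taskforce identified can be made concrete with a minimal sketch. It assumes a simplified three-category scheme, and the cut points (`lower_cut`, `upper_cut`, `raw_floor`, `raw_ceiling`) and the four schools are hypothetical illustrations, not the SOM's actual parameters or data. An SES-adjusted index assigns a provisional category; proficiency thresholds on absolute scores then override it at the two extremes.

```python
def classify(adjusted, raw, lower_cut, upper_cut, raw_floor=None, raw_ceiling=None):
    """Assign categories from an SES-adjusted index, optionally overridden by
    proficiency thresholds on absolute scores at both extremes.

    All cut points are hypothetical; they are not the SOM's actual values.
    """
    labels = []
    for a, r in zip(adjusted, raw):
        # Step 1: effectiveness criterion (SES-adjusted index).
        if a <= lower_cut:
            label = "Insufficient"
        elif a >= upper_cut:
            label = "High"
        else:
            label = "Medium"
        # Step 2: proficiency criterion (absolute score) at the two bounds.
        if raw_ceiling is not None and r >= raw_ceiling:
            label = "High"
        elif raw_floor is not None and r < raw_floor:
            label = "Insufficient"
        labels.append(label)
    return labels


# Invented schools: adjusted index and absolute score for each.
adjusted = [-6.0, 7.0, 7.0, 0.0]
raw = [300, 200, 250, 250]

print(classify(adjusted, raw, lower_cut=-5, upper_cut=5))
# ['Insufficient', 'High', 'High', 'Medium']
print(classify(adjusted, raw, lower_cut=-5, upper_cut=5, raw_floor=210, raw_ceiling=290))
# ['High', 'Insufficient', 'High', 'Medium']
```

Once the thresholds are applied, the first school, ineffective relative to its SES, is promoted on its absolute score, while the second, effective relative to its SES, is demoted on its absolute score. These are the two outcomes the taskforce objected to.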

As I noted in the previous section, the ambiguity of criteria stemmed from the intricate coexistence of apparently incommensurable perspectives on school accountability. This time, however, the experts’ adjudication was crystal clear: using proficiency thresholds in the SOM constrained, if not directly contradicted, the SES adjustment mandate that “seeks to give weight to factors attributable to schools and approximate to an evaluation of their effectiveness as to hold them responsible in a fair way and reflect their performance” [62]. On the closely related but more “technical” matter of whether the estimation method chosen to compute adjustments honored such a mandate fairly, the taskforce made no comment at all.

In October 2019, amid the largest social outbreak in Chilean history, and upon the imminent release of the fourth legally binding ordinalization, the Senate Education Committee began discussing a bill, introduced by progressive lawmakers, that repeals the LEQA’s mandate to close every school classified in the Insufficient category for four consecutive years. In their preambleFootnote 23, the bill’s proponents review the international evidence on the negative consequences of school closures and warn about the pernicious logic of standardized testing, noting that public schools, unsurprisingly, account for three out of four schools at risk of closure. Opponents of the bill, in turn, hotly defended the punishments and rewards associated with the SOM, arguing that adjustment by SES prevents socioeconomically different schools from being judged by the same metric. The indictment or defense of high-stakes accountability notwithstanding, lawmakers offered no comment on the technical validity of the statistical method used to determine which schools should be deemed underperforming. The issue would remain absent throughout the committee’s hearings and the plenary session that, in August 2020, approved the bill by a slim majority and sent it to the House. The experts, it seems, had already adjudicated.

Discussion

The long road from the politicization of market-based inequality in education to the adoption of accountability mechanisms that intentionally factored between-school SES variation into assessments of school quality reveals that a moral economy was constantly at play in the design of the SOM. Policymakers’ quest for fairness in putatively objective, impersonal assessments of school quality required subjective, personal judgments about the potential performative effects of different adjustments to the new commensuration instrument. The justificatory rationales at play in this controversy reflected an intricate moral economy, intelligible only in light of the anti-market versus pro-market cleavage that cuts across the Chilean polity regarding the administration and provision of social services. The clash between two notions of “quality,” operationalizing two distinct conceptions of “fairness,” became inevitable. And yet, experts helped bridge the ideological gap by translating a priori antithetical conceptions of market regulation and accountability into competing claims for fairness that were nevertheless rooted in shared ways of settling moral disputes. Reducing this disagreement to a tradeoff between proficiency and effectiveness that could be handled technically through econometric adjustments and post-estimation thresholds blurred the ideological divide at stake. Perhaps the SOM’s most consequential “performativity effect” was to render commensurable the alternative moral ways of making the SOM “performative.”

Eventually, despite warnings and appeals to fair responsibilization by progressive experts, the conservative method of SES adjustment prevailed, as it better suited the standpoint of market-minded policymakers. A minority of progressive experts equated quality with measures of school effectiveness. Invoking fairness toward providers (especially poor schools) for the sake of a just distribution of responsibility for educating disadvantaged students, who are primarily served by public institutions, they advocated large adjustments by SES. Yet the conservative majority of experts, untroubled by proficiency rankings, particularly protective of the SOM’s efficacy as an incentive for organizational improvement, and primarily concerned with giving families (poor families in particular) the correct market signals to exercise choice, advocated moderate adjustments by SES. Because they conceived of fairness on behalf of consumers, they understood quality in terms of the achievement of proficiency benchmarks, irrespective of the school’s relative contribution to that outcome. The official narrative justifying this decision was that, notwithstanding the scientific convention that evaluations of school quality should rely on unbiased estimates of school effects on student progress, ordinalizations based on greater adjustments by SES might lead to inefficient, if not politically illegitimate, policy outcomes. Models that (at least for some) produced less biased estimates of school effects might have “valued” two schools with very different SES composition as equally effective when in fact there was a wide proficiency gap between them. To put it bluntly, even if proven more robust, classifying according to models that privilege school effects might have conveyed the (false) impression that students in poor but effective schools acquire as many reading, math, and science skills as those in rich but ineffective schools, when in reality the opposite held true.

Thus, what the majority of experts in charge of quality assurance tacitly established was that the optimal calibration of the SOM was one that struck a fine-tuned balance between scientific conventions about the technical aspects of quality valuation and the socially accepted convention that the education that really “qualifies” as “valuable” is, ultimately, the kind of education privileged kids get in the schools they attend. From the prevailing standpoint, what seemed to make the ordinalization “legitimate” was not (only) that it conformed to the best standards of scientific expertise, but also that it better approximated commonsense expectations about the actually existing social distribution of learning, sanctioning schools’ academic prestige accordingly. In the end, the quest for “fairness” concerned this moral commitment to matching each school’s statistically predicted value with its socially expected value much more than it concerned honoring the professional maxim of delivering the most scientifically valid measure of school quality.

Still unresolved is the puzzle of why the minority of progressive experts acquiesced to a version of school accountability that fell short of their expectations for fair responsibilization. Analyzing experts’ reason-giving through the lens of Abend’s [2014] theory of nested orders of morality, I have shown that a common moral background facilitated accommodation. Whatever moral qualms experts had about choosing a school valuation method that might not fit a particular conception of fair responsibilization, policymakers’ recourse to the quasi-experimental methods of applied microeconomics (the empirical estimation of an unbiased parameter thought to statistically identify school quality) proved critical to framing both the terms of and the solutions to this controversy [Breslau 2013; Panhans and Singleton 2017]. This moral background, I contend, provided “the criteria for morality’s or moral considerations’ being relevant in a situation in the first place” [Abend 2014, 30]; in this case, it settled whether morality was applicable at all in evaluating the SOM’s fairness. In this sense, experts shared a faith in scientific procedures (e.g., econometrics) as appropriate tools for evaluating fairness, a moral category. They differed in their preferred empirical method of performing an index of school quality. Whether an empirical method was necessary to ground judgments of fairness was never in question.