1 Introduction

Proof assistants based on dependent type theory rely on the termination of recursive functions and the productivity of corecursive functions to ensure two important properties: logical consistency, so that it is not possible to prove false propositions; and decidability of type checking, so that checking that a program proves a given proposition is decidable.

In proof assistants such as Coq, termination and productivity are enforced by a guard predicate on fixpoints and cofixpoints respectively. For fixpoints, recursive calls must be guarded by destructors; that is, they must be performed on structurally smaller arguments. For cofixpoints, corecursive calls must be guarded by constructors; that is, they must be the structural arguments of a constructor. The following examples illustrate these structural conditions.

In the recursive call to plus, the first argument p is structurally smaller than S p, which is the shape of the original first argument n. Similarly, in const, the constructor Cons is applied to the corecursive call.
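As a rough illustration of the guarded-by-destructors condition only, the following Python sketch checks a toy term language. Every recursive call must pass, in the recursive position, a variable obtained by pattern matching on the recursive parameter, or on a variable already known to be smaller. The term encoding, the `check_fix` helper, and the `div`-like body are hypothetical. This is far simpler than Coq's actual guard checker, which also unfolds definitions and performs reduction.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class App:
    fn: "Term"
    args: Tuple["Term", ...]


@dataclass(frozen=True)
class Match:
    scrutinee: str  # toy restriction: only variables are matched on
    # Each branch is (constructor name, pattern variables, right-hand side).
    branches: Tuple[Tuple[str, Tuple[str, ...], "Term"], ...]


Term = Union[Var, App, Match]


def check_fix(fix_name: str, params: Tuple[str, ...], rec_index: int, body: Term) -> bool:
    """Toy guarded-by-destructors check (not Coq's actual algorithm).

    Every call to fix_name must pass, at position rec_index, a variable bound
    by matching on the recursive parameter or on an already-smaller variable."""
    rec_param = params[rec_index]

    def go(t: Term, smaller: frozenset) -> bool:
        if isinstance(t, Var):
            return t.name != fix_name  # an unapplied reference is rejected
        if isinstance(t, App):
            if t.fn == Var(fix_name):
                if rec_index >= len(t.args):
                    return False  # partial application: reject
                arg = t.args[rec_index]
                if not (isinstance(arg, Var) and arg.name in smaller):
                    return False
            elif not go(t.fn, smaller):
                return False
            return all(go(a, smaller) for a in t.args)
        if isinstance(t, Match):
            destructs = t.scrutinee in (smaller | {rec_param})
            for _ctor, pvars, rhs in t.branches:
                # Pattern variables of a destructed argument are structurally smaller.
                inner = (smaller | frozenset(pvars)) if destructs else smaller
                if not go(rhs, inner):
                    return False
            return True
        raise TypeError(f"unknown term: {t!r}")

    return go(body, frozenset())


# plus n m := match n with O => m | S p => S (plus p m)
plus_body = Match("n", (
    ("O", (), Var("m")),
    ("S", ("p",), App(Var("S"), (App(Var("plus"), (Var("p"), Var("m"))),))),
))

# Hypothetical div n m := match n with O => O | S k => S (div (minus k m) m)
div_body = Match("n", (
    ("O", (), Var("O")),
    ("S", ("k",), App(Var("S"), (
        App(Var("div"), (App(Var("minus"), (Var("k"), Var("m"))), Var("m"))),))),
))

print(check_fix("plus", ("n", "m"), 0, plus_body))  # True
print(check_fix("div", ("n", "m"), 0, div_body))    # False
```

The toy checker rejects the `div`-like function because `minus k m` is not syntactically a pattern variable: without unfolding `minus`, a purely syntactic check cannot tell that `minus` never increases its first argument.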

The actual implementation of the guard predicate extends beyond the guarded-by-destructors and guarded-by-constructors conditions to accept a larger set of terminating and productive functions. In particular, function calls will be unfolded (i.e. inlined) in the bodies of (co)fixpoints and reduction will be performed if needed before checking the guard predicate. This has a few disadvantages: firstly, the bodies of these functions are required, which hinders modular design; and secondly, as aptly summarized by The Coq Development Team (2018),

… unfold[ing] all the definitions used in the body of the function, do[ing] reductions, etc…. makes typechecking extremely slow at times. Also, the unfoldings can cause the code to bloat by orders of magnitude and become impossible to debug.

Furthermore, changes in the structural form of functions used in (co)fixpoints can cause the guard predicate to reject the program even if the functions still behave the same. The following simple example, while artificial, illustrates this structural fragility.

If we replace


with in minus, the behaviour doesn’t change, but O is not a structurally smaller term of n in the recursive call to div, so div no longer satisfies the guard predicate. The acceptance of div then depends on a separate definition independent of div. While the difference is easy to spot here, for larger programs or programs that use many imported library definitions, this behaviour can make debugging much more difficult. Furthermore, the guard predicate is unaware of the obvious fact that minus never returns a nat larger than its first argument, which the user would have to prove in order for div to be accepted with our alternate definition of minus.

In short, the extended syntactic guard condition long used by Coq is anti-modular, anti-compositional, has poor performance characteristics, and requires the programmer to either avoid certain algorithms or pay a large cost in proof burden.

This situation is particularly unfortunate, as there exists a non-syntactic termination- and productivity-checking method that overcomes these issues, whose theory is nearly as old as the guard condition itself: sized types.

In essence, the (co)inductive type of a construction is annotated with a size, which provides some information about the size of the construction. In this paper, we consider a simple size algebra:

$s := \upsilon \mid \hat{s} \mid \infty$,

where $\upsilon$ ranges over size variables. If the argument to a constructor has size $s$, then the fully-applied constructor has the successor size $\hat{s}$. For instance, the constructors for the naturals follow the rules below:

Termination and productivity checking then becomes a typing rule that uses size information. For termination, the recursive call must be made on a construction of smaller size, so when typing the body of the fixpoint, the reference to itself in the typing context must have a smaller size. For productivity, the returned construction must have a larger size than that of the corecursive call, so the type of the body of the cofixpoint must be larger than the type of the reference to itself in the typing context. In short, both follow the following (simplified) typing rule, where $\upsilon$ is an arbitrary fresh size variable annotated on the (co)inductive types, and $s$ is an arbitrary size expression as needed.
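The size algebra and the size check behind the fixpoint rule can be sketched in Python. This is a simplified model, assuming the usual size order generated by reflexivity, transitivity, $s \sqsubseteq \hat{s}$ and $s \sqsubseteq \infty$, and identifying $\hat{\infty}$ with $\infty$. The names `Size`, `hat`, `leq`, and `recursive_call_ok` are hypothetical, and only the size of the recursive argument is checked, not the rest of the type.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Size:
    """Size expression: a variable under some number of hats, or infinity.

    var=None encodes infinity; hats counts applications of the successor."""
    var: Optional[str]
    hats: int = 0


INF = Size(None)


def hat(s: Size) -> Size:
    # The successor of infinity is identified with infinity itself.
    return INF if s.var is None else Size(s.var, s.hats + 1)


def leq(s: Size, t: Size) -> bool:
    """Order generated by s <= hat(s), s <= INF, reflexivity and transitivity."""
    if t.var is None:
        return True   # every size is below infinity
    if s.var is None:
        return False  # infinity is only below infinity
    return s.var == t.var and s.hats <= t.hats  # same variable: compare hats


def recursive_call_ok(arg_size: Size, v: str) -> bool:
    # While typing the fixpoint body, f's own type uses Nat^v, whereas the
    # recursive parameter has type Nat^hat(v); an argument is accepted only
    # if its size fits below v.
    return leq(arg_size, Size(v))


n_size = hat(Size("v"))  # n : Nat^hat(v)
p_size = Size("v")       # matching n against S p gives p : Nat^v
print(recursive_call_ok(p_size, "v"))  # True: plus p m is accepted
print(recursive_call_ok(n_size, "v"))  # False: plus n m is rejected
```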

We can then assign minus the type $\text{Nat}^\upsilon \to \text{Nat} \to \text{Nat}^\upsilon$. The fact that we can assign it a type indicates that it terminates, and the $\upsilon$ annotations indicate that the function preserves the size of its first argument. Then div type checks using only the type of minus, without requiring its body. Furthermore, because this approach is type-based rather than syntax-based, replacing with doesn’t affect the type of minus or the typeability of div. Similarly, some other (co)fixpoints that preserve the size of their arguments in ways that aren’t syntactically obvious may be typed as size preserving, expanding the set of terminating and productive functions that can be accepted. Finally, if additional expressivity is needed, rather than using syntactic hacks like inlining, we could take the semantic approach of enriching the size algebra.
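To make the modularity point concrete, the sketch below decides whether the recursive call in a div-like function is accepted using only the size annotations on minus's type, never its body. It assumes that div recurses on its first argument and that the call has the shape `div (minus n' m) m`; both are illustrative assumptions, as are the helper names and the tuple encoding of sizes.

```python
from typing import Optional, Tuple

# Hypothetical encoding: a size is (variable, number of hats); variable None is infinity.
Size = Tuple[Optional[str], int]
INF: Size = (None, 0)


def leq(s: Size, t: Size) -> bool:
    if t[0] is None:
        return True
    if s[0] is None:
        return False
    return s[0] == t[0] and s[1] <= t[1]


def minus_result_size(first_arg: Size, size_preserving: bool) -> Size:
    """Size of (minus x y), read off minus's *type* only.

    With minus : Nat^u -> Nat -> Nat^u we instantiate u := size of x, so the
    result is no larger than x. Without that annotation we only know Nat^inf."""
    return first_arg if size_preserving else INF


def div_recursive_call_ok(size_preserving: bool) -> bool:
    # In div's body, n : Nat^hat(v), so the branch for S n' gives n' : Nat^v.
    n_prime: Size = ("v", 0)
    arg = minus_result_size(n_prime, size_preserving)  # size of (minus n' m)
    # div's own type in its body uses size v, so the argument must fit below v.
    return leq(arg, ("v", 0))


print(div_recursive_call_ok(True))   # True
print(div_recursive_call_ok(False))  # False
```

Whatever the body of minus looks like, only the boolean standing in for its type signature matters here, which is the sense in which sized typing is compositional.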

It seems perfect; so why doesn’t Coq just use sized types?

That is the question we seek to answer in this paper.

Unfortunately, past work on sized types (Barthe et al. 2006; Sacchini, 2011, 2013) for the Calculus of (Co)Inductive Constructions (CIC), Coq’s underlying calculus, has some practical issues:

- They require nontrivial backward-incompatible additions to the surface language, such as size annotations on (co)fixpoint types and polarity annotations on (co)inductive definitions.
- They are missing important features found in Coq such as global and local definitions, and universe cumulativity.
- They restrict size variables from appearing in terms, which precludes, for instance, defining type aliases for sized types.

To resolve these issues, we extend CIC$\widehat{~}$ (Barthe et al. 2006), CIC$\widehat{_-}$ (Grégoire and Sacchini, 2010; Sacchini, 2011), and CC$\widehat{\omega}$ (Sacchini, 2013) in our calculus CIC$\widehat{\ast}$ (“CIC-star-hat”), and design a size inference algorithm from CIC to CIC$\widehat{\ast}$, borrowing from the algorithms in past work (Barthe et al., 2005, 2006; Sacchini, 2013). Table 1 summarizes the differences between CIC$\widehat{\ast}$ and these past works; we give a detailed comparison in Subsection 6.1.

Table 1. Comparison of the features in CIC$\widehat{~}$, CIC$\widehat{_-}$, Coq, and CIC$\widehat{\ast}$

For CIC$\widehat{\ast}$ we prove confluence and subject reduction. However, new difficulties arise when attempting to prove strong normalization and consistency. Proof techniques from past work, especially from Sacchini (2011), don’t readily adapt to our modifications, in particular to universe cumulativity and unrestricted size variables. On the other hand, set-theoretic semantics of type theories that do have these features don’t readily adapt to the interpretation of sizes either, with additional difficulties due to untyped conversion. We detail a proof attempt on a variant of CIC$\widehat{\ast}$ and discuss its shortcomings.

Even supposing that the metatheoretical problems can be solved and strong normalization and consistency proven, is an implementation of this system practical? Seeking to answer this question, we have forked Coq (The Coq Development Team and Chan, 2021), implemented the size inference algorithm within its kernel, and opened a draft pull request to the Coq repository (Footnote 1). To maximize backward compatibility, the surface language is completely unchanged, and sized typing can be enabled by a flag that is off by default. This flag can be used with or without the existing guard-checking flag enabled.

While sized typing enables many of our goals, namely increased expressivity with modular and compositional typing for (co)fixpoints, the performance cost is unacceptable. We measure at least a $5.5 \times$ increase in compilation time in some standard libraries. Much of the performance cost is intrinsic to the size inference algorithm, and thus intrinsic to attempting to maintain backward compatibility. We analyze the performance of our size inference algorithm and our implementation in detail.

So why doesn’t Coq just use sized types? Because it seems that it must sacrifice either backward compatibility or compile-time performance, and the lack of a proof of consistency may be a threat to Coq’s trusted core. While nothing yet leads us to believe that CIC$\widehat{\ast}$ is inconsistent, the performance sacrifice required for compatibility makes our approach seem wildly impractical.

The remainder of this paper is organized as follows. We formalize CIC$\widehat{\ast}$ in Section 2, and discuss the desired metatheoretical properties in Section 3. In Section 4, we present the size inference algorithm from unsized terms to sized CIC$\widehat{\ast}$ terms, and in Section 5 we evaluate an implementation in our fork. While the prior sections handle the formalization and metatheory of CIC$\widehat{\ast}$, Section 5 contains the main analysis and results on performance. Finally, we survey the past work on sized types leading up to CIC$\widehat{\ast}$ in Section 6, and conclude in Section 7.

2 CIC$\widehat{\ast}$

In this section, we introduce the syntax and judgements of CIC$\widehat{\ast}$, culminating in the typing and well-formedness judgements. Note that this is the core calculus, whose terms are produced from plain CIC by the inference algorithm introduced in Section 4.

2.1 Syntax

The syntax of CIC$\widehat{\ast}$ terms, environments, and signatures is described in Figure 1. It is a standard CIC with expressions (or terms) consisting of cumulative universes, dependent functions, definitions, (co)inductives, case expressions, and mutual (co)fixpoints. Additions relevant to sized types are highlighted in grey, which we explain in detail shortly. Notation such as syntactic sugar or metafunctions and metarelations will also be highlighted in grey where they are first introduced in the prose.

Figure 1. Syntax of CIC$\widehat{\ast}$ terms, environments, and signatures.

The overline denotes a sequence of syntactic constructions. We use 1-based indexing for sequences using subscripts; sequences only range over a single index unless otherwise specified. Ellipses may be used in place of the overline where it is clearer; for instance, the branches of a case expression are written as $\langle \overline{c_j \Rightarrow e_j} \rangle$ or $\langle c_1 \Rightarrow e_1, \dots, c_j \Rightarrow e_j, \dots \rangle$, and $e_j$ is the $j$th branch expression in the sequence. Additionally, is syntactic sugar for application of $e$ to the terms in .

2.1.1 Size Annotations and Substitutions

As we have seen, (co)inductive types are annotated with a size expression representing their size. A (co)inductive with an infinite size annotation $\infty$ is said to be a full type, representing (co)inductives of all sizes. Otherwise, an inductive with a noninfinite size annotation $s$ represents inductives of size $s$ or smaller, while a coinductive with annotation $s$ represents coinductives of size $s$ or larger. This captures the idea that a construction of an inductive type has some amount of content to be consumed, while one of a coinductive type must produce some amount of content.

As a concrete example, a list with $s$ elements has type , because it has at most $s$ elements, but it also has type , necessarily having at most $\hat{s}$ elements as well. On the other hand, a stream producing at least $\hat{s}$ elements has type , and also has type since it necessarily produces at least $s$ elements as well. These ideas are formalized in the subtyping rules in an upcoming subsection.
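The direction of subtyping for inductive versus coinductive size annotations can be sketched as follows. This is a simplified model that ignores type parameters and assumes the size order $s \sqsubseteq \hat{s} \sqsubseteq \infty$; `SizedType`, `is_subtype`, and the illustrative names "List" and "Stream" are hypothetical, not the paper's formal subtyping rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Size:
    var: Optional[str]  # None encodes infinity
    hats: int = 0


INF = Size(None)


def hat(s: Size) -> Size:
    return INF if s.var is None else Size(s.var, s.hats + 1)


def leq(s: Size, t: Size) -> bool:
    if t.var is None:
        return True
    if s.var is None:
        return False
    return s.var == t.var and s.hats <= t.hats


@dataclass(frozen=True)
class SizedType:
    name: str          # illustrative type names such as "List" or "Stream"
    coinductive: bool
    size: Size


def is_subtype(a: SizedType, b: SizedType) -> bool:
    """Subtyping between two size annotations of the same (co)inductive type.

    Inductive sizes are upper bounds, so subtyping follows the size order;
    coinductive sizes are lower bounds, so it runs the other way."""
    if (a.name, a.coinductive) != (b.name, b.coinductive):
        return False
    if a.coinductive:
        return leq(b.size, a.size)
    return leq(a.size, b.size)


def list_t(sz: Size) -> SizedType:
    return SizedType("List", False, sz)


def stream_t(sz: Size) -> SizedType:
    return SizedType("Stream", True, sz)


s = Size("s")
print(is_subtype(list_t(s), list_t(hat(s))))      # True: at most s, hence at most hat(s)
print(is_subtype(stream_t(hat(s)), stream_t(s)))  # True: at least hat(s), hence at least s
print(is_subtype(stream_t(s), stream_t(hat(s))))  # False
```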

Variables bound by local definitions (introduced by let expressions) and constants bound by global definitions (introduced in global environments) are annotated with a size substitution that maps size variables to size expressions. The substitutions are performed during their reduction. As mentioned in the previous section, this makes definitions size polymorphic.

In the type annotations of functions and let expressions, as well as the motive of case expressions, rather than ordinary sized terms, we instead have bare terms $t^\circ$, that is, terms whose size annotations have been removed. These terms are required to be bare in order to preserve subject reduction without requiring explicit size applications or typed reduction, both of which would violate backward compatibility with Coq. We give an example of the loss of subject reduction when type annotations aren’t bare in Subsubsection 3.2.2.

In the syntax of signatures, we use the metanotation $t^\infty$ to denote full terms, which are sized terms with only infinite sizes and no size variables. Note that where bare terms occur within sized terms, they remain bare in full terms. Similarly, we use the metanotation $t^*$ to denote position terms, whose usage is explained in the next subsubsection.
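The difference between bare terms $t^\circ$ and full terms $t^\infty$ can be sketched on a tiny term language. The constructors `Var`, `Ind`, `Pi`, and `Lam` and the spelling `"inf"` are hypothetical; the only points illustrated are that erasure drops every size, and that making a term full sets every size to infinity while leaving bare subterms (here, the type annotation of a function) bare.

```python
from dataclasses import dataclass
from typing import Optional, Union

INF = "inf"  # spelling of the infinite size in this sketch


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Ind:
    name: str
    size: Optional[str]  # None: no size annotation (bare)


@dataclass(frozen=True)
class Pi:
    x: str
    dom: "Term"
    cod: "Term"


@dataclass(frozen=True)
class Lam:
    x: str
    ann: "Term"  # a type annotation, which the syntax requires to be bare
    body: "Term"


Term = Union[Var, Ind, Pi, Lam]


def erase(t: Term) -> Term:
    """Bare term t°: drop every size annotation."""
    if isinstance(t, Ind):
        return Ind(t.name, None)
    if isinstance(t, Pi):
        return Pi(t.x, erase(t.dom), erase(t.cod))
    if isinstance(t, Lam):
        return Lam(t.x, erase(t.ann), erase(t.body))
    return t


def full(t: Term) -> Term:
    """Full term t^inf: every size becomes infinite, bare subterms stay bare."""
    if isinstance(t, Ind):
        return Ind(t.name, INF if t.size is not None else None)
    if isinstance(t, Pi):
        return Pi(t.x, full(t.dom), full(t.cod))
    if isinstance(t, Lam):
        return Lam(t.x, t.ann, full(t.body))  # the bare annotation is left alone
    return t


minus_ty = Pi("n", Ind("Nat", "v"), Pi("m", Ind("Nat", "w"), Ind("Nat", "v")))
print(erase(minus_ty))
print(full(minus_ty))
```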

2.1.2 Fixpoints and Cofixpoints

In contrast to CIC$\widehat{~}$ and CIC$\widehat{_-}$, CIC$\widehat{\ast}$ has mutual (co)fixpoints. In a mutual fixpoint, each ${f_k^{n_k} : t_k^* \mathbin{:=} e_k}$ is one fixpoint definition. $n_k$ is the index of the recursive argument of $f_k$, and means that the $m$th fixpoint definition is selected. Instead of bare terms, fixpoint type annotations are position terms $t^*$, where size annotations are either removed or replaced by a position annotation $\ast$. Position annotations occur on the inductive type of the recursive argument, as well as on the return type if it is an inductive with the same or smaller size. For instance (using ${t \rightarrow u}$ as syntactic sugar for ), the recursive function has a position-annotated return type since the return value won’t be any larger than the first argument.

Mutual cofixpoints are similar, except cofixpoint definitions don’t need $n_k$, as cofixpoints corecursively produce a coinductive rather than recursively consuming an inductive. Position annotations occur on the coinductive return type as well as on any coinductive argument types with the same size or smaller. As an example, a corecursive function that duplicates each element of a stream has a position-annotated argument type, since it returns a larger stream.

Position annotations mark the size annotation locations in the type of the (co)fixpoint where we are allowed to assign the same size expression. This is why we can give the $\mathit{minus}$ fixpoint the type , for instance. In general, if a (co)fixpoint has a position annotation on an argument type and on the return type, we say that it is size preserving in that argument. Intuitively, $f$ is size preserving over an argument $e$ if using $f\ e$ in place of $e$ should be allowed, size-wise.
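The role of position annotations can be sketched by treating a (co)fixpoint type as a simple arrow type. This sketch assumes a non-dependent arrow and leaves locations without a position annotation untouched; `STAR`, `Arrow`, `instantiate`, and `size_preserving_in` are hypothetical names, and the annotated types for minus and for a stream-duplicating function are illustrations consistent with the prose, not the paper's listings.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

STAR = "*"  # the position annotation


@dataclass(frozen=True)
class Ind:
    name: str
    ann: Optional[str]  # STAR, None (annotation removed), or a size variable


@dataclass(frozen=True)
class Arrow:
    """Simplified non-dependent type t1 -> ... -> tn -> ret."""
    args: Tuple[Ind, ...]
    ret: Ind


def instantiate(ty: Arrow, size: str) -> Arrow:
    """Assign one and the same size to every position-annotated location;
    locations without a position annotation are left untouched here."""
    def fill(t: Ind) -> Ind:
        return Ind(t.name, size) if t.ann == STAR else t
    return Arrow(tuple(fill(a) for a in ty.args), fill(ty.ret))


def size_preserving_in(ty: Arrow, k: int) -> bool:
    """Size preserving in argument k: positions on that argument and the return type."""
    return ty.args[k].ann == STAR and ty.ret.ann == STAR


# minus : Nat^* -> Nat -> Nat^*
minus_ty = Arrow((Ind("Nat", STAR), Ind("Nat", None)), Ind("Nat", STAR))
# a stream-duplicating cofixpoint : Stream^* -> Stream^*
dup_ty = Arrow((Ind("Stream", STAR),), Ind("Stream", STAR))

print(size_preserving_in(minus_ty, 0))  # True
print(size_preserving_in(minus_ty, 1))  # False
print(instantiate(minus_ty, "v"))       # the Arrow for Nat^v -> Nat -> Nat^v
```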

2.1.3 Environments and Signatures

We divide environments into local and global ones. They consist of declarations, which can be either assumptions or definitions. While local environments represent bindings introduced by functions and let expressions, global environments represent top-level declarations corresponding to Coq vernacular. We may also refer to global environments alone as programs. Telescopes (that is, environments consisting only of local assumptions) are used in syntactic sugar: given a telescope $\Delta$ of assumptions $x_i : t_i$, ${{\Pi {\Delta} \mathpunct{.} {t}}}$ is sugar for $\Pi x_1 \mathbin{:} t_1 \mathpunct{.} \dots \Pi x_i \mathbin{:} t_i \mathpunct{.} \dots t$, while is the sequence $\overline{x_i}$. Additionally, $\Delta^\infty$ denotes telescopes containing only full terms.
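The telescope sugar can be illustrated by expanding $\Pi \Delta \mathpunct{.} t$ into nested products. This is a string-level sketch, assuming types are plain strings; `pi_telescope`, `telescope_vars`, and the body `P A n` are hypothetical.

```python
from typing import List, Tuple

# A telescope x1 : t1, ..., xn : tn; types are plain strings in this sketch.
Telescope = List[Tuple[str, str]]


def pi_telescope(delta: Telescope, body: str) -> str:
    """Expand the sugar  Π Δ. t  into  Π x1 : t1. ... Π xn : tn. t."""
    result = body
    for x, t in reversed(delta):  # build from the innermost binder outwards
        result = f"Π {x} : {t}. {result}"
    return result


def telescope_vars(delta: Telescope) -> List[str]:
    """The sequence of variables x1 ... xn bound by the telescope."""
    return [x for x, _ in delta]


delta = [("A", "Type"), ("n", "Nat")]
print(pi_telescope(delta, "P A n"))  # Π A : Type. Π n : Nat. P A n
print(telescope_vars(delta))         # ['A', 'n']
```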

We use , , and to represent the presence of some declaration binding $x$, the given assumption, and the given definition in $\Gamma$, respectively, and similarly for $\Gamma_G$ and $\Delta$.

Signatures consist of mutual (co)inductive definitions. For simplicity, throughout the judgements in this paper, we assume some fixed, implicit signature $\Sigma$ separate from the global environment, so that well-formedness of environments can be defined separately from that of (co)inductive definitions. Global environments and signatures should be easily extensible to an interleaving of declarations and (co)inductive definitions, which would be more representative of a real program. A mutual (co)inductive definition consists of the following:

- $I_i$, the names of the defined (co)inductive types;
- $\Delta_p$, the parameters common to all $I_i$;
- $\Delta^\infty_i$, the indices of each $I_i$;
- $U_i$, the universe to which $I_i$ belongs;
- $c_j$, the names of the defined constructors;
- $\Delta^\infty_j$, the arguments of each $c_j$;
- $I_j$, the (co)inductive type to which $c_j$ belongs; and
- $\overline{t^\infty}_j$, the indices to $I_j$.

We require that the index and argument types be full types and terms. Note also that $I_j$ is the (co)inductive type of the $j$th constructor, not the $j$th (co)inductive in the sequence $\overline{I_i}$. We forgo the more precise notation $I_{i_j}$ for brevity.
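The components of a mutual (co)inductive definition listed above map naturally onto a record. The sketch below is illustrative only: the class and field names are hypothetical, types are kept as strings, and `index_arities_match` is a small sanity check of our own choosing, not the well-formedness judgement (which also involves typing and positivity).

```python
from dataclasses import dataclass
from typing import List, Tuple

Telescope = List[Tuple[str, str]]  # (name, type) pairs; types kept as strings


@dataclass
class IndType:
    name: str            # I_i
    indices: Telescope   # Delta^inf_i
    universe: str        # U_i


@dataclass
class Constructor:
    name: str                  # c_j
    args: Telescope            # Delta^inf_j
    target: str                # I_j, the type that c_j belongs to
    target_indices: List[str]  # the indices given to I_j


@dataclass
class MutualInductive:
    params: Telescope          # Delta_p, shared by every I_i
    types: List[IndType]
    constructors: List[Constructor]
    coinductive: bool = False

    def index_arities_match(self) -> bool:
        """Hypothetical sanity check: each constructor targets a defined type
        and supplies as many indices as that type declares."""
        arity = {ty.name: len(ty.indices) for ty in self.types}
        return all(c.target in arity and len(c.target_indices) == arity[c.target]
                   for c in self.constructors)


nat = MutualInductive(
    params=[],
    types=[IndType("Nat", [], "Set")],
    constructors=[Constructor("O", [], "Nat", []),
                  Constructor("S", [("n", "Nat")], "Nat", [])],
)
print(nat.index_arities_match())  # True
```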

As a concrete example, the usual type (using as syntactic sugar for ${\Pi x \mathbin{:} t \mathpunct{.} u}$) would be defined as:

As mentioned in the previous section, unlike CIC$\widehat{~}$ and CIC$\widehat{_-}$, our (co)inductive definitions don’t have parameter polarity annotations. In those languages, for parameter for instance, they might write , giving it positive polarity, so that is a subtype of .

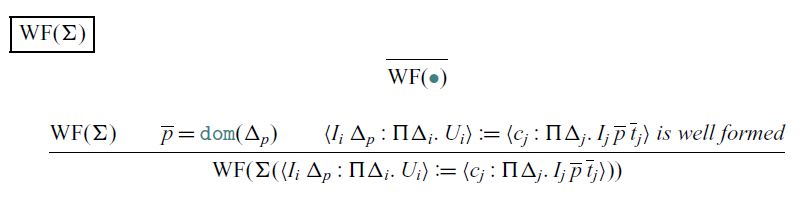

As is standard, the well-formedness of (co)inductive definitions depends not only on the well-typedness of their types but also on syntactic positivity conditions. We reproduce the strict positivity conditions in Appendix 1, and refer the reader to clauses I1–I9 in Sacchini (2011), clauses 1–7 in Barthe et al. (2006), and the Coq Manual (The Coq Development Team, 2021) for further details. As CIC$\widehat{\ast}$ doesn’t support nested (co)inductives, we don’t need the corresponding notion of nested positivity. Furthermore, we assume that our fixed, implicit signature is well-formed, that is, that each definition in the signature is well-formed. The definitions of well-formedness of (co)inductives and signatures are also given in Appendix 1.

2.2 Reduction and Convertibility

The reduction rules listed in Figure 2 are the usual ones for CIC with definitions: $\beta$-reduction (function application), $\zeta$-reduction (let expression evaluation), $\iota$-reduction (case expressions), $\mu$-reduction (fixpoint expressions), $\nu$-reduction (cofixpoint expressions), $\delta$-reduction (local definitions), and $\Delta$-reduction (global definitions).

Figure 2. Reduction rules

In the case of $\delta$-/$\Delta$-reduction, where the variable or constant has a size substitution annotation, we modify the usual rules. These reduction rules are important for supporting size inference with definitions. If the definition body contains (co)inductive types (or other defined variables and constants), we can assign them fresh size variables for each distinct usage of the defined variable. Further details are discussed in Section 4.
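The idea that each use of a definition can receive its own sizes can be sketched with a constant carrying a size-substitution annotation: unfolding it applies that substitution to the definition body. This is a simplified model that omits hats and infinity and only handles arrow types; the constructors, `subst_sizes`, `delta_reduce`, and the type alias `NatFun` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple, Union


@dataclass(frozen=True)
class Ind:
    name: str
    size: str  # a size variable (hats and infinity omitted for brevity)


@dataclass(frozen=True)
class Arrow:
    dom: "Term"
    cod: "Term"


@dataclass(frozen=True)
class Const:
    """A global constant annotated with a size substitution {u1 -> s1, ...}."""
    name: str
    subst: Tuple[Tuple[str, str], ...]


Term = Union[Ind, Arrow, Const]


def subst_sizes(t: Term, rho: Dict[str, str]) -> Term:
    """Apply a size substitution to every size occurring in t."""
    if isinstance(t, Ind):
        return Ind(t.name, rho.get(t.size, t.size))
    if isinstance(t, Arrow):
        return Arrow(subst_sizes(t.dom, rho), subst_sizes(t.cod, rho))
    # On an annotated constant, rho only acts on the right-hand sides,
    # since the definition's own size variables are treated as bound.
    return Const(t.name, tuple((u, rho.get(s, s)) for u, s in t.subst))


def delta_reduce(c: Const, env: Dict[str, Term]) -> Term:
    """Unfold a constant, applying its size-substitution annotation to the body."""
    return subst_sizes(env[c.name], dict(c.subst))


# A hypothetical global type alias:  NatFun := Nat^a -> Nat^a
env = {"NatFun": Arrow(Ind("Nat", "a"), Ind("Nat", "a"))}

# Two uses of the alias, each given its own fresh size variable for a.
use1 = Const("NatFun", (("a", "v1"),))
use2 = Const("NatFun", (("a", "v2"),))
print(delta_reduce(use1, env) == Arrow(Ind("Nat", "v1"), Ind("Nat", "v1")))  # True
print(delta_reduce(use2, env) == Arrow(Ind("Nat", "v2"), Ind("Nat", "v2")))  # True
```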

Much of the reduction behaviour is expressed in terms of term and size substitution. Capture-avoiding substitution is denoted with $e[x := e']$, and simultaneous substitution with $e[\overline{x := e'}]$. $\rho e$ denotes applying the substitutions $e[\overline{\upsilon_i := s_i}]$ for every $\upsilon_i \mapsto s_i$ in $\rho$, and similarly for .

This leaves applications of size substitutions to environments, and to size substitutions themselves when they appear as annotations on variables and constants. A variable $x^{\{\overline{\upsilon \mapsto s}\}}$ bound to $x \mathbin{:} t \mathbin{:=} e$ in the environment, for instance, can be thought of as a delayed application of the sizes $\overline{s}$, with the definition implicitly abstracting over all size variables $\overline{\upsilon}$. Therefore, the “free size variables” of the annotated variable are those in $\overline{s}$, and given some size substitution $\rho$, we have that $\rho x^{\{\overline{\upsilon \mapsto s}\}} = x^{\{\overline{\upsilon \mapsto \rho s}\}}$. Meanwhile, we treat all $\overline{\upsilon}$ in the definition as bound, so that $\rho(\Gamma_1 (x \mathbin{:} t \mathbin{:=} e) \Gamma_2) = (\rho\Gamma_1)(x \mathbin{:} t \mathbin{:=} e)(\rho\Gamma_2)$, skipping over all definitions, and similarly for global environments.
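The following minimal Python sketch makes this bookkeeping concrete under several assumptions. A size expression is modelled as a base (a size-variable name, or `None` for $\infty$) plus a count of successor hats. Types are reduced to a single sized (co)inductive name, and definition bodies are left opaque. All names (`Size`, `AnnVar`, `subst_size`, `subst_annvar`, `subst_env`) are hypothetical and do not come from any implementation. The sketch applies $\rho$ only to the right-hand sides of an annotation, leaves the bound $\overline{\upsilon}$ alone, and skips definitions in an environment.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Union


@dataclass(frozen=True)
class Size:
    """A size expression: a base plus a number of successor hats.
    `base` is a size-variable name, or None for the infinite size."""
    base: Optional[str]
    hats: int = 0


SizeSubst = Dict[str, Size]  # rho: size variable -> size expression


def subst_size(rho: SizeSubst, s: Size) -> Size:
    """Apply rho to a size expression, keeping its successor hats."""
    if s.base is None or s.base not in rho:
        return s
    target = rho[s.base]
    return Size(target.base, target.hats + s.hats)


@dataclass
class AnnVar:
    """An annotated variable x^{ups_i -> s_i}: the keys are bound by the
    definition; the values are the delayed size arguments."""
    name: str
    annot: Dict[str, Size]


def subst_annvar(rho: SizeSubst, x: AnnVar) -> AnnVar:
    # rho x^{ups -> s} = x^{ups -> rho s}: only the right-hand sides change.
    return AnnVar(x.name, {u: subst_size(rho, s) for u, s in x.annot.items()})


@dataclass
class SizedInd:
    """A (co)inductive type name annotated with a size, e.g. Nat^s."""
    name: str
    size: Size


@dataclass
class Assum:
    var: str
    ty: SizedInd


@dataclass
class Defn:
    var: str
    ty: SizedInd
    body: str  # bodies are opaque in this sketch


Entry = Union[Assum, Defn]


def subst_env(rho: SizeSubst, env: List[Entry]) -> List[Entry]:
    """Apply rho to an environment. Definitions abstract over their own
    size variables, so they are copied unchanged."""
    out: List[Entry] = []
    for entry in env:
        if isinstance(entry, Assum):
            t = entry.ty
            out.append(Assum(entry.var, SizedInd(t.name, subst_size(rho, t.size))))
        else:
            out.append(entry)
    return out
```

For example, with `rho = {"a": Size("b", 1)}`, `subst_annvar` turns an annotation `{"u": Size("a", 1)}` into `{"u": Size("b", 2)}`. A `Defn` entry passes through `subst_env` untouched.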

Finally, is syntactic equality up to $\alpha$-equivalence (renaming), the erasure metafunction removes all size annotations from a sized term, and yields the cardinality of its argument (e.g. sequence length, set size, etc.).

We define reduction ($\rhd$) as the congruent closure of the reductions, multi-step reduction ($\rhd^*$) in Figure 3 as the reflexive–transitive closure of $\rhd$, and convertibility ($\approx*$) in Figure 4. The latter also includes $\eta$-convertibility. This is presented informally in the Coq manual (The Coq Development Team, 2021) and formally, though as part of a typed conversion relation, by Abel et al. (2017). Note that there are no explicit rules for symmetry and transitivity of convertibility because these properties are derivable, as proven by Abel et al. (2017).

Figure 3. Multi-step reduction rules.

Figure 4. Convertibility rules.

2.3 Subtyping and Positivity

First, we define the subsizing relation in Figure 5. Subsizing is straightforward since our size algebra is simple. Notice that both and hold.

Figure 5. Subsizing rules.
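Figure 5 itself is not reproduced here. As a sketch, assume the subsizing rules take the form usual in the CIC$\widehat{~}$ family: reflexivity, transitivity, $s \sqsubseteq \hat{s}$, $s \sqsubseteq \infty$ and $\hat{\infty} \sqsubseteq \infty$. Under that assumption, subsizing on sizes written as a base plus a count of successor hats can be decided directly. The Python names below are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Size:
    """A size expression: a base followed by `hats` successor applications.
    `base` is a size-variable name, or None for the infinite size."""
    base: Optional[str]
    hats: int = 0


INF = Size(None)


def subsize(s: Size, r: Size) -> bool:
    """Decide s ⊑ r under the assumed rules (reflexivity, transitivity,
    s ⊑ ŝ, s ⊑ ∞, and ∞̂ ⊑ ∞)."""
    if r.base is None:
        # Every size is below ∞, and ∞ is below each of its successors.
        return True
    if s.base is None:
        # No size built on ∞ lies below a size built on a variable.
        return False
    # Same variable, and no more hats on the left than on the right.
    return s.base == r.base and s.hats <= r.hats


# Under these rules, ∞ and its successor are related in both directions.
print(subsize(INF, Size(None, 1)), subsize(Size(None, 1), INF))
# prints: True True
```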

The subtyping rules in Figure 6 extend those of cumulative CIC with rules for sized (co)inductive types. In other words, they extend those of CIC$\widehat{~}$, CIC$\widehat{_-}$, and CC$\widehat{\omega}$ with universe cumulativity. The name is retained merely for uniformity with Coq’s kernel; it could also have been named .

Figure 6. Subtyping rules.

Figure 7. Positivity/negativity of size variables in terms.

Inductive types are covariant in their size annotations with respect to subsizing (Rule st-ind), while coinductive types are contravariant (Rule st-coind). Coming back to the examples in the previous section, this means that $\text{List}^{s}\ A \leq \text{List}^{\hat{s}}\ A$ holds as we expect, since a list with s elements has no more than $\hat{s}$ elements. Dually, $\text{Stream}^{\hat{s}}\ A \leq \text{Stream}^{s}\ A$ holds as well, since a stream producing $\hat{s}$ elements also produces no fewer than s elements.

Rules st-prod and st-app differ from past work in their variance, but correspond to those in Coq. As (co)inductive definitions have no polarity annotations, we treat all parameters as ordinary, invariant function arguments. The remaining rules are otherwise standard.
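The variance rules can be sketched in Python for a small fragment of types, under several assumptions:

- subsizing takes the usual form described for Figure 5;
- only sized (co)inductive types and non-dependent products are modelled;
- the product rule follows Coq's cumulativity convention of convertible domains and covariant codomains;
- convertibility is approximated by syntactic equality.

All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple, Union


@dataclass(frozen=True)
class Size:
    base: Optional[str]  # size variable, or None for ∞
    hats: int = 0        # number of successor applications


def subsize(s: Size, r: Size) -> bool:
    # Assumed usual rules: s ⊑ ŝ, s ⊑ ∞, ∞̂ ⊑ ∞, reflexivity, transitivity.
    if r.base is None:
        return True
    if s.base is None:
        return False
    return s.base == r.base and s.hats <= r.hats


@dataclass(frozen=True)
class Sized:
    """A sized (co)inductive type I^s applied to its parameters."""
    name: str
    size: Size
    params: Tuple["Ty", ...] = ()
    coinductive: bool = False


@dataclass(frozen=True)
class Pi:
    dom: "Ty"
    cod: "Ty"


Ty = Union[Sized, Pi]


def subtype(t: Ty, u: Ty) -> bool:
    """t ≤ u on this fragment; convertibility is syntactic equality here."""
    if isinstance(t, Pi) and isinstance(u, Pi):
        # Coq-style products: convertible domains, covariant codomains.
        return t.dom == u.dom and subtype(t.cod, u.cod)
    if isinstance(t, Sized) and isinstance(u, Sized):
        if (t.name, t.coinductive, t.params) != (u.name, u.coinductive, u.params):
            return False  # parameters are invariant: they must be convertible
        if t.coinductive:
            return subsize(u.size, t.size)  # st-coind: contravariant in size
        return subsize(t.size, u.size)      # st-ind: covariant in size
    return False
```

Because parameters are compared for equality, `List^∞ (Nat^s)` is not a subtype of `List^∞ (Nat^ŝ)` in this sketch, even though `Nat^s ≤ Nat^ŝ`. That is the situation discussed for nested inductives in Section 3.2.1.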

In addition to subtyping, we define positivity and negativity judgements, as in past work. They are syntactic approximations of monotonicity properties of subtyping with respect to size variables; we have that $\upsilon\ \text{pos}\ t \Leftrightarrow t \leq t[\upsilon:=\hat{\upsilon}]$ and $\upsilon\ \text{neg}\ t \Leftrightarrow t[\upsilon:=\hat{\upsilon}] \leq t$. Positivity and negativity are then used to indicate where position annotations are allowed to appear in the types of (co)fixpoints, as we will see in the typing rules.
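Figure 7 is not reproduced here, so the following Python sketch assumes the usual syntactic rules for a small fragment:

- inductive types are covariant in their size;
- coinductive types are contravariant in their size;
- parameters are invariant, so they may not mention $\upsilon$ at all;
- products flip polarity in their domain.

The sketch also includes a hypothetical `valid_fix_type` check. It assumes that $\upsilon$ must not occur before the recursive argument and may occur only positively after it. All names and example types are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple, Union


@dataclass(frozen=True)
class Size:
    base: Optional[str]  # size variable, or None for ∞
    hats: int = 0


@dataclass(frozen=True)
class Sized:
    name: str
    size: Size
    params: Tuple["Ty", ...] = ()
    coinductive: bool = False


@dataclass(frozen=True)
class Pi:
    dom: "Ty"
    cod: "Ty"


Ty = Union[Sized, Pi]


def occurs(v: str, t: Ty) -> bool:
    if isinstance(t, Pi):
        return occurs(v, t.dom) or occurs(v, t.cod)
    return t.size.base == v or any(occurs(v, p) for p in t.params)


def pos(v: str, t: Ty) -> bool:
    """Syntactic approximation of t ≤ t[v := v̂]."""
    if isinstance(t, Pi):
        return neg(v, t.dom) and pos(v, t.cod)
    if any(occurs(v, p) for p in t.params):
        return False  # invariant parameters may not mention v
    return not t.coinductive or t.size.base != v


def neg(v: str, t: Ty) -> bool:
    """Syntactic approximation of t[v := v̂] ≤ t."""
    if isinstance(t, Pi):
        return pos(v, t.dom) and neg(v, t.cod)
    if any(occurs(v, p) for p in t.params):
        return False
    return t.coinductive or t.size.base != v


def valid_fix_type(args: List[Ty], ret: Ty, n: int, v: str) -> bool:
    """Check args[0] -> ... -> ret, whose n-th (1-based) argument is recursive."""
    rec = args[n - 1]
    if not isinstance(rec, Sized) or rec.coinductive or rec.size != Size(v):
        return False  # the recursive argument must be an inductive I^v
    if any(occurs(v, p) for p in rec.params):
        return False
    if any(occurs(v, a) for a in args[: n - 1]):
        return False  # v may not appear before the recursive argument
    rest = ret
    for a in reversed(args[n:]):
        rest = Pi(a, rest)
    return pos(v, rest)  # elsewhere, v may only occur positively
```

With $n = 1$, this sketch accepts $\text{Nat}^{\upsilon} \to \text{Nat}^{\upsilon}$. It rejects a type whose second argument is $\text{Nat}^{\upsilon}$, because that is a negative occurrence. It also rejects a type whose second argument has $\text{Nat}^{\upsilon}$ as a parameter.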

2.4 Typing and Well-Formedness

We begin with the rules for well-formedness of local and global environments, presented in Figure 8. As mentioned, this and the typing judgements implicitly contain a signature $\Sigma$, whose well-formedness is assumed. Additionally, we use $\_$ to omit irrelevant constructions for readability.

Figure 8. Well-formedness of environments.

The typing rules for sized terms are given in Figure 11. As in CIC, we define three sets in Figure 9: Axioms, Rules (Barendregt, 1993), and Elims. These describe how universes are typed, how products are typed, and what eliminations are allowed in case expressions, respectively. Metafunctions that construct important function types for inductive types, constructors, and case expressions are listed in Figure 10; they are also used by the inference algorithm in Section 4.

Figure 9. Universe relations: Axioms, Rules, and Eliminations.

Figure 10. Metafunctions for typing rules.

Figure 11. Typing rules.

Rules var-assum, const-assum, univ, cumul, pi, and app are essentially unchanged from CIC. Rules lam and let differ only in that type annotations need to be bare to preserve subject reduction.

The first significant uses of size annotations are in Rules var-def and const-def. If a variable or a constant is bound to a term in the local or global environment, it is annotated with a size substitution such that the term is well typed after performing the substitution. This allows for proper $\delta$-/$\Delta$-reduction of variables and constants. Notably, different uses of the same variable or constant need not carry the same size annotations.
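The freedom to annotate each use differently can be sketched as fresh instantiation. Each occurrence of a defined variable gets its own size substitution, mapping the definition's size variables to brand-new ones. This Python sketch is hypothetical: the names `Definition`, `FreshSizes` and `instantiate` are illustrative, the naming scheme `a0`, `a1` is an assumption, and the solving of the resulting size constraints is not modelled.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, Iterator, List


@dataclass
class Definition:
    """A definition x := e whose body mentions the size variables in
    `size_vars`; the body itself is left opaque in this sketch."""
    name: str
    size_vars: List[str]


class FreshSizes:
    """Supply of fresh size-variable names a0, a1, a2, and so on."""

    def __init__(self) -> None:
        self._counter: Iterator[int] = itertools.count()

    def fresh(self) -> str:
        return f"a{next(self._counter)}"


def instantiate(defn: Definition, supply: FreshSizes) -> Dict[str, str]:
    """Annotate one use of `defn` with a size substitution that maps each of
    its size variables to a new variable, independently of other uses."""
    return {v: supply.fresh() for v in defn.size_vars}


supply = FreshSizes()
pred = Definition("pred", ["u"])
print(instantiate(pred, supply), instantiate(pred, supply))
# prints: {'u': 'a0'} {'u': 'a1'}
```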

Inductive types and constructors are typed mostly as in CIC, with their types specified by indType and constrtype. In Rule ind, the (co)inductive type itself holds a single size annotation. In Rule constr, size annotations appear in two places:

- In the argument types of the constructor. We annotate each occurrence of $I_j$ in the arguments $\Delta^\infty_j$ with a size expression s.
- On the (co)inductive type of the fully applied constructor, which is annotated with the size expression $\hat{s}$. Using the successor guarantees that the constructor always builds a value larger than any of its arguments of the same type.

As an example, consider a possible typing of VCons:

It has a single parameter A and corresponds to the index $\overline{t^\infty}_j$ of the constructor’s inductive type. The input has size s, while the output has size $\hat{s}$.

In Rule case, a case expression has three parts:

- The target e that is being destructed. It must have a (co)inductive type I with a successor size annotation $\hat{s}_k$, so that recursive constructor arguments have the predecessor size annotation.

- The motive P, which yields the return type of the case expression. While it ranges over the (co)inductive’s indices, the parameter variables $\text{dom}(\Delta_p)$ in the indices’ types are bound to the parameters $\overline{p}$ of the target type. As usual, the universe U of the motive is restricted by the universe U’ of the (co)inductive according to Elims.

  (This presentation follows that of Coq 8.12. It differs from those of Coq 8.13 and of Sacchini (2011, 2013, 2014), where the case expression contains a return type in which the index and target variables are free and explicitly stated, in the syntactic form $\overline{y}.x.P$.)

- The branches $e_j$, one for each constructor $c_j$. Again, the parameters in each branch’s type are fixed to $\overline{p}$, while the branch ranges over the constructor arguments. Note that, as in the types of constructors, we annotate each occurrence of $c_j$’s (co)inductive type I in $\Delta_j$ with the size expression s.

Finally, we have the typing of mutual (co)fixpoints in Rules fix and cofix. We take the annotated type $t_k$ of the kth (co)fixpoint definition to be convertible to a function type containing a (co)inductive type, as usual. However, instead of the guard condition, we ensure termination/productivity using size expressions.

The main complexity in these rules is supporting size-preserving (co)fixpoints. We must restrict how the size variable $\upsilon_k$ appears in the type of the (co)fixpoints using the positivity and negativity judgements. For fixpoints, the type of the $n_k$th argument, the recursive argument, is an inductive type annotated with the size variable $\upsilon_k$. For cofixpoints, the return type is a coinductive type annotated with $\upsilon_k$. The positivity or negativity of $\upsilon_k$ in the rest of $t_k$ indicates where $\upsilon_k$ may occur other than in the (co)recursive position. For instance, supposing that $n = 1$, is a valid fixpoint type with respect to $\upsilon$, while is not, since $\upsilon$ illegally appears negatively in and must not appear at all in the parameter of the second argument type. This restriction ensures the aforementioned monotonicity property of subtyping for the (co)fixpoints’ types. For fixpoints, $u_k \leq u_k[\upsilon_k := \hat{\upsilon}_k]$ holds. For cofixpoints, $u[\upsilon_k := \hat{\upsilon}_k] \leq u$ holds for each type u in $\Delta_k$.

As in Rule lam, to maintain subject reduction, we cannot keep the size annotations, and instead replace them with position annotations. The metafunction replaces $\upsilon$ annotations with the position annotation $\ast$ and erases all other size annotations.
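Here is a minimal Python sketch of erasure and of the metafunction just described, on a fragment with only sized (co)inductive types and products. It makes two assumptions. First, an annotation is a `Size`, the position marker `*`, or `None` for a bare type. Second, only annotations that are exactly $\upsilon$ become positions; every other annotation, including $\hat{\upsilon}$, is erased. The names `erase` and `to_position` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class Size:
    base: Optional[str]  # size-variable name, or None for ∞
    hats: int = 0


POSITION = "*"                    # the position annotation
Annot = Union[Size, str, None]    # a size, the position marker, or bare


@dataclass(frozen=True)
class Ind:
    name: str
    annot: Annot
    params: tuple = ()


@dataclass(frozen=True)
class Pi:
    dom: "Ty"
    cod: "Ty"


Ty = Union[Ind, Pi]


def erase(t: Ty) -> Ty:
    """Remove every size annotation, producing a bare type."""
    if isinstance(t, Pi):
        return Pi(erase(t.dom), erase(t.cod))
    return Ind(t.name, None, tuple(erase(p) for p in t.params))


def to_position(t: Ty, v: str) -> Ty:
    """Replace annotations that are exactly the size variable v with the
    position annotation, and erase all other annotations."""
    if isinstance(t, Pi):
        return Pi(to_position(t.dom, v), to_position(t.cod, v))
    keep = t.annot == Size(v)
    return Ind(t.name, POSITION if keep else None,
               tuple(to_position(p, v) for p in t.params))
```

For example, `to_position` maps $\text{Nat}^{\upsilon} \to \text{Nat}^{w} \to \text{Nat}^{\upsilon}$ to $\text{Nat}^{\ast} \to \text{Nat} \to \text{Nat}^{\ast}$.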

Checking termination and productivity is then relatively straightforward. If the types $t_k$ are well typed, then the (co)fixpoint bodies should have type $t_k$ with the successor size $\hat{\upsilon}_k$ in place of $\upsilon_k$, when $(f_k \mathbin{:} t_k)$ are in the local environment. This tells us two things. Recursive calls to $f_k$ in fixpoint bodies are on smaller-sized arguments. Corecursive bodies produce constructions with size larger than those from the corecursive call to $f_k$. The type of the mth (co)fixpoint is then the mth type $t_m$ with some size expression s substituted for the size variable $\upsilon_m$.
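As an illustration, consider a `plus`-style fixpoint whose recursive argument has type $\text{Nat}^{\upsilon}$. The following Python sketch, with hypothetical names and the usual subsizing rules assumed, models only the size bookkeeping:

- inside the body, the argument has size $\hat{\upsilon}$;
- matching on it yields a predecessor of size $\upsilon$ (Rule case);
- a recursive call is accepted only if its argument's size is below $\upsilon$.

Full type checking, indices and mutual fixpoints are not modelled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Size:
    base: Optional[str]  # size variable, or None for ∞
    hats: int = 0

    def succ(self) -> "Size":
        return Size(self.base, self.hats + 1)


def subsize(s: Size, r: Size) -> bool:
    # Assumed usual rules: s ⊑ ŝ, s ⊑ ∞, ∞̂ ⊑ ∞, reflexivity, transitivity.
    if r.base is None:
        return True
    if s.base is None:
        return False
    return s.base == r.base and s.hats <= r.hats


def case_predecessor(target: Size) -> Size:
    """Rule case, sketched: a target of size ŝ gives recursive constructor
    arguments of size s. ∞ is treated as its own predecessor (an assumption,
    since ∞ and ∞̂ are interconvertible under the assumed rules)."""
    if target.base is None:
        return target
    if target.hats == 0:
        raise ValueError("target size has no successor to peel off")
    return Size(target.base, target.hats - 1)


def recursive_call_ok(arg_size: Size, v: str) -> bool:
    """In the body, f has the type t_k mentioning v, so a call is accepted
    only on arguments whose size is ⊑ v."""
    return subsize(arg_size, Size(v))


n_size = Size("v").succ()          # the parameter n : Nat^v̂ in the body
p_size = case_predecessor(n_size)  # p in the branch for S p : Nat^v
print(recursive_call_ok(p_size, "v"), recursive_call_ok(n_size, "v"))
# prints: True False
```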

In Coq, the indices of the recursive elements are often elided, and there are no user-provided position annotations at all. We show how indices and position annotations can be computed during size inference in Section 4.

3 Metatheoretical Results

In this section, we describe the metatheory of CIC$\widehat{\ast}$. Some of the metatheory is inherited from, or essentially similar to, past work (Sacchini, 2011, 2013; Barthe et al., 2006), although we must adapt key proofs to account for differences in subtyping and definitions. Complete proofs for a language like CIC$\widehat{\ast}$ are too involved to present in full, so we provide only key lemmas and proof sketches.

In short, CIC$\widehat{\ast}$ satisfies confluence and subject reduction, with the same caveats as in CIC for cofixpoints. While strong normalization and logical consistency have been proven for a variant of CIC$\widehat{\ast}$ with features that violate backward compatibility, proofs for CIC$\widehat{\ast}$ itself remain future work.

3.1 Confluence

Recall that we define $\rhd$ as the congruent closure of $\beta\zeta\delta\Delta\iota\mu\nu$-reduction and $\rhd^*$ as the reflexive–transitive closure of $\rhd$.

Theorem 3.1 (Confluence) If $e \rhd^* e_1$ and $e \rhd^* e_2$, then there is some term $e'$ such that $e_1 \rhd^* e'$ and $e_2 \rhd^* e'$.

Proof [sketch]. The proof is relatively standard. We use the Takahashi translation technique due to Komori et al. (2014), which is a simplification of the standard parallel reduction technique. It uses the Takahashi translation $e^\dagger$ of terms e, defined as the simultaneous single-step reduction of all $\beta\zeta\delta\Delta\iota\mu\nu$-redexes of e in left-most inner-most order. One notable aspect of this proof is that, to handle let expressions that introduce local definitions, we need to extend the Takahashi method to support local definitions. This is essentially the same as the presentation in Section 2.3.2 of Sozeau et al. (2019). In particular, we require Theorem 2.1 (Parallel Substitution) of Sozeau et al. (2019) to ensure that $\delta$-reduction (i.e. reducing a let-expression) is confluent. The exact statement of parallel substitution adapted to our setting is given in Lemma 3.2 below.

Lemma 3.2 (Parallel Substitution) Fix contexts . For all terms e, t and x free in e, we have $(e[x := t])^{\dagger} = e^{\dagger}[x := t^{\dagger}]$, where $-^\dagger$ denotes the Takahashi translation (simultaneous single-step reduction of all redexes in left-most inner-most order) in .
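The Takahashi translation is easiest to see for the pure $\lambda$-calculus. The Python sketch below covers only $\beta$-redexes, not the $\zeta\delta\Delta\iota\mu\nu$ cases the proof needs. It uses de Bruijn indices so that substitution is capture-avoiding, and all names are hypothetical. It follows the standard definition in which $((\lambda x. e_1)\ e_2)^\dagger = e_1^\dagger[x := e_2^\dagger]$ and every other form is translated congruently.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Var:
    idx: int  # de Bruijn index


@dataclass(frozen=True)
class Lam:
    body: "Term"


@dataclass(frozen=True)
class App:
    fn: "Term"
    arg: "Term"


Term = Union[Var, Lam, App]


def shift(t: Term, d: int, cutoff: int = 0) -> Term:
    """Add d to every free index >= cutoff."""
    if isinstance(t, Var):
        return Var(t.idx + d) if t.idx >= cutoff else t
    if isinstance(t, Lam):
        return Lam(shift(t.body, d, cutoff + 1))
    return App(shift(t.fn, d, cutoff), shift(t.arg, d, cutoff))


def subst(t: Term, j: int, s: Term) -> Term:
    """Capture-avoiding substitution t[j := s]."""
    if isinstance(t, Var):
        return s if t.idx == j else t
    if isinstance(t, Lam):
        return Lam(subst(t.body, j + 1, shift(s, 1)))
    return App(subst(t.fn, j, s), subst(t.arg, j, s))


def beta(body: Term, arg: Term) -> Term:
    """Contract (λ. body) arg: substitute for index 0, then drop the binder."""
    return shift(subst(body, 0, shift(arg, 1)), -1)


def takahashi(t: Term) -> Term:
    """t†: contract every β-redex already present in t, all at once."""
    if isinstance(t, Var):
        return t
    if isinstance(t, Lam):
        return Lam(takahashi(t.body))
    if isinstance(t.fn, Lam):
        # A redex: translate both parts first, then contract it.
        return beta(takahashi(t.fn.body), takahashi(t.arg))
    return App(takahashi(t.fn), takahashi(t.arg))
```

Redexes created by a contraction are left for the next round. For example, $(\lambda x.\, x\ y)\ (\lambda z. z)$ translates to $(\lambda z. z)\ y$, not to $y$.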

3.2 Subject Reduction

Subject reduction does not hold in CIC$\widehat{\ast}$ or in Coq due to the way coinductives are presented. This is a well-known problem. It has been discussed previously in a sized-types setting by Sacchini (2013), on whose work our presentation of coinductives is based, as well as by the Coq developers (Footnote 2).

In brief, the current presentation of coinductives requires that cofixpoint reduction be restricted, i.e. that it occur only when the cofixpoint is the target of a case expression. This allows for strong normalization of cofixpoints. It works in much the same way as restricting fixpoint reduction to cases where the recursive argument is syntactically a fully applied constructor. One way this can break subject reduction is that the type of a case expression before the cofixpoint reduction need not be convertible to its type afterwards. As a concrete example, consider the following coinductive definition for conaturals.

For some motive P and branch e, we have the following $\nu$-reduction.

Assuming both terms are well typed, the former has type while the latter has type . For an arbitrary P, these are not convertible unless cofixpoints are allowed to reduce arbitrarily.

On the other hand, if we do allow unrestricted $\nu'$-reduction as in Figure 12, subject reduction does hold. This comes at the expense of normalization, as a cofixpoint on its own could reduce indefinitely.

Theorem 3.3 (Subject Reduction) Let $\Sigma$ be a well-formed signature. Suppose $\rhd$ includes unrestricted $\nu'$-reduction of cofixpoints. If e has type t in some environment and $e \rhd e'$, then e' also has type t in that environment.

Proof [sketch]. By induction on the typing derivation. Most cases are straightforward, making use of confluence when necessary. For instance, confluence is used in a lemma of $\Pi$-injectivity that handles $\beta$-reduction in Rule app. The case for Rule case where $e \rhd e'$ by $\iota$-reduction relies on the following fact: if x is the name of a (co)inductive type and appears strictly positively in t, then x appears covariantly in t. (This is only true without nested (co)inductive types, which CIC$\widehat{\ast}$ disallows in well-formed signatures.)

Figure 12. Reduction rules with unrestricted cofixpoint reduction.

The case for Rule case where e (guardedly) $\nu$-reduces to e’ requires an unrestricted $\nu'$-reduction. After guarded $\nu$-reduction, the target (a cofixpoint) appears in the motive unguarded by a case expression. It must therefore be unfolded to re-establish that the term has type t.

3.2.1 The Problem with Nested Inductives

Recall from Section 2 that we disallow nested (co)inductive types. This means that when defining a (co)inductive type, it cannot recursively appear as the parameter of another type. For instance, the following definition , while equivalent to , is disallowed due to the appearance of as a parameter of .

Notice that we have the subtyping relation . However, as all parameters are invariant for backward compatibility and need to be convertible, we do not have . Case expressions on some target force recursive arguments to have size s exactly, and the target also has type by cumulativity. The argument of S could therefore have both type and , violating convertibility. We exploit this fact and break subject reduction explicitly with the following counterexample term.

By cumulativity, the target can be typed as (that is, with size $\hat{\hat{\hat{\upsilon}}}$). By Rule case, the second branch must then have type (and so it does). Then the case expression is well typed with type . However, once we reduce the case expression, we end up with a term that is no longer well typed.

By Rule app, the second argument should have type (or a subtype thereof), but it cannot: the only type the second argument can have is .

There are several possible solutions, all of which threaten backward compatibility. CIC$\widehat{~}$’s solution is to require that constructors be fully applied and that their parameters be bare terms, so that we are forced to write . The problem with this is that Coq treats constructors essentially like functions. Ensuring that they are fully applied with bare parameters would require either reworking how they are represented internally, or adding an intermediate step that elaborates partially applied constructors into functions whose bodies are fully applied constructors. The other solution, as mentioned, is to add polarities back in, so that with positive polarity in its parameter yields the subtyping relation .

Interestingly, the implementation infers all size annotations from a completely bare program. As a result, our counterexample and similar ones exploiting explicit size annotations aren’t directly expressible, and don’t appear to be generated by the algorithm, which would solve for the smallest size annotations. For the counterexample, the size annotation in the second branch would be (a size constrained to be equal to) $\hat{\upsilon}$. We conjecture that the terms synthesized by the inference algorithm do satisfy subject reduction even in the presence of nested (co)inductives. Our reasoning is that they form a strict subset of the well-typed terms that excludes counterexamples like the one above.

3.2.2 Bareness of Type Annotations

As mentioned in Figure 1, some annotations need to be bare terms to maintain subject reduction: type annotations on functions and let expressions, case expression motives, and (co)fixpoint types (which may instead be position terms). To see why, suppose they were not bare, and consider the term . Under empty environments, the fixpoint argument is well typed with type for any size expression s, while the fixpoint itself is well typed with type for any size expression r. For the application to be well typed, it must be that r is $\hat{\hat{s}}$, and the entire term has type .

By the $\mu$-reduction rule, this steps to the term . Unfortunately, this term is no longer well typed, as cannot be typed with type as is required. If we erase the type annotation of the function, there is no longer a restriction on what size the function argument must have, and subject reduction is no longer broken. An alternate solution is to substitute $\tau$ for $\hat{s}$ during $\mu$-reduction. However, this requires typed reduction to know which size to substitute, violating backward compatibility with Coq, whose reduction and convertibility rules are untyped.

3.3 Strong Normalization and Logical Consistency

Following the results on strong normalization and logical consistency for CIC$\widehat{_-}$ and CC$\widehat{\omega}$, we conjecture that both properties hold for CIC$\widehat{\ast}$ as well. We present some details of a model constructed in our proof attempt. Unfortunately, the model requires changes to CIC$\widehat{\ast}$ that are backward incompatible with Coq, so we don’t pursue it further. For posterity, we discuss where these backward-incompatible changes arise.

Conjecture 3.4 (Strong Normalization). If e is well typed, then there are no infinite reduction sequences starting from e.

Conjecture 3.5 (Logical Consistency). There is no closed term e that proves a false proposition.

3.3.1 Proof Attempt and Apparent Requirements for Set-Theoretic Model

In attempting to prove normalization and consistency, we developed a variant of CIC$\widehat{\ast}$, called CIC$\widehat{\ast}$-Another, that makes a series of simplifying assumptions suggested by the proof attempt:

- Reduction, subtyping, and convertibility are typed, as is the case for most set-theoretic models. That is, each judgement requires the types of the terms involved, and the derivation rules may have typing judgements as premises.

- A new size-irrelevant typing judgement is needed, similar to that introduced by Barras (2012). While CIC$\widehat{\ast}$ is probably size irrelevant, this is not clear in the model without an explicit judgement.

- Fixpoint type annotations require explicit size annotations (i.e. they are no longer merely position terms) and explicitly abstract over a size variable, and fixpoints are explicitly applied to a size expression. The typing rule no longer erases the type, and the size in the fixpoint type is fixed.

  The fixpoint above binds the size variable $\upsilon$ in t and in e. The reduction rule adds an additional substitution of the predecessor of the size expression, in line with how f may only be called in e with a smaller size.

- Rather than general inductive definitions, only predicative W types are considered. W types can be defined as an inductive type with a single constructor:

  $$\mathsf{W} : \Pi A : U.\ (A \to U) \to U \qquad \mathsf{sup} : \Pi A : U.\ \Pi B : A \to U.\ \Pi a : A.\ (B\ a \to \mathsf{W}\ A\ B) \to \mathsf{W}\ A\ B$$

  Predicative W types only allow U to be Set or Type, while impredicative W types also allow it to be Prop. Including impredicative W types as well poses several technical challenges.

Because some of these changes violate backward compatibility, they cannot be adopted in CIC$\widehat{\ast}$.

The literature suggests that future work could prove CIC$\widehat{\ast}$-Another and CIC$\widehat{\ast}$ equivalent, so that strong normalization (and therefore logical consistency) of CIC$\widehat{\ast}$-Another would carry over to CIC$\widehat{\ast}$. More specifically, Abel et al. (2017) show that typed and untyped convertibility in a Martin–Löf type theory (MLTT) imply each other; and Hugunin (2021) and Abbott et al. (2004) show that W types in MLTT with propositional equality can encode well-formed inductive types, including nested inductive types.
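The W-type encoding of inductive types can be illustrated with a small Coq sketch (our own, not taken from Hugunin (2021) or Abbott et al. (2004)). It defines a W type and encodes the natural numbers as trees labelled by bool: the label false marks zero, whose child type is empty, and the label true marks successor, whose child type is unit. All names here (W, sup, NatB, WNat, wzero, wsucc, to_nat, of_nat) are hypothetical.

```coq
(* W types: well-founded trees in which each node carries a label a : A
   and has one subtree for each element of B a. *)
Inductive W (A : Type) (B : A -> Type) : Type :=
| sup : forall a : A, (B a -> W A B) -> W A B.

Arguments sup {A B} a f.

(* Child types for the natural numbers: a successor node (label true)
   has exactly one child, a zero node (label false) has none. *)
Definition NatB (b : bool) : Type := if b then unit else Empty_set.

Definition WNat : Type := W bool NatB.

(* Zero: no children, so the child function is the empty function. *)
Definition wzero : WNat :=
  @sup bool NatB false (fun e : Empty_set => match e with end).

(* Successor: the single child (indexed by tt) is the predecessor. *)
Definition wsucc (n : WNat) : WNat :=
  @sup bool NatB true (fun _ : unit => n).

(* Recursion over the encoding. The type of the child function [f]
   depends on the label [a], so [f] is passed through the inner match
   (the "convoy pattern") to refine its type in each branch. The
   recursive call on [g tt] is accepted because applying a subtree
   function yields a structurally smaller tree. *)
Fixpoint to_nat (w : WNat) : nat :=
  match w with
  | sup a f =>
      match a return (NatB a -> WNat) -> nat with
      | false => fun _ => O
      | true => fun g => S (to_nat (g tt))
      end f
  end.

(* Inverse direction: build the tree for a Peano numeral. *)
Fixpoint of_nat (n : nat) : WNat :=
  match n with
  | O => wzero
  | S m => wsucc (of_nat m)
  end.
```

The sketch only shows that the encoding computes as expected. Proving of_nat (to_nat w) = w for every w would additionally require function extensionality, since distinct functions out of Empty_set are equal only extensionally, and Coq does not provide function extensionality by default.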

We leave this line of inquiry as future work (Footnote 3), since we have other reasons to believe that backward-incompatible changes are necessary in CIC$\widehat{\ast}$ to make sized typing practical. Nevertheless, we next explain where each of these changes originates and why it seems necessary for the model.

3.3.2 Typed Reduction

Recall from Section 6.2.1 that we add universe cumulativity to the existing universe hierarchy in CIC$\widehat{_-}$. We follow the set-theoretic model presented by Miquel and Werner (2002), where Prop is treated proof-irrelevantly: its set-theoretic interpretation is the set $\{\emptyset, \{\emptyset\}\}$, and a type in Prop is either $\{\emptyset\}$ (representing true, inhabited propositions) or $\emptyset$ (representing false, uninhabited propositions).

Impredicativity of function types is encoded using a trace encoding (Aczel, 1998). First, the trace of a (set-theoretic) function $f : A \to B$ is defined as

$$\text{trace}(f) = \{(a, b) \mid a \in A,\ b \in f(a)\}.$$

Then the interpretation of a function type $\prod{x}{t}{u}$ is defined as

$$\{\text{trace}(f) \mid f \in \Pi_{a \in A} B_a\},$$

where A is the interpretation of t, $B_a$ is the interpretation of u when $x = a$, and $\Pi_{a \in A} B_a$ is the set of functions mapping each $a \in A$ to an element of $B_a$. A function ${\lambda {x} : {t} \mathpunct{.} {e}}$ is interpreted as $\{(a, b) \mid a \in A, b \in y_a\}$ (i.e. as the trace of $a \mapsto y_a$), where $y_a$ is the interpretation of e when $x = a$.
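As a small worked instance (our own, computed directly from the definitions above), consider the identity on propositions, ${\lambda {p} : {\mathsf{Prop}} \mathpunct{.} {p}}$. Here $A = \{\emptyset, \{\emptyset\}\}$ and $y_a = a$, since the body is the bound variable itself:

$$
[\![{\lambda {p} : {\mathsf{Prop}} \mathpunct{.} {p}}]\!]
  = \{(a, b) \mid a \in \{\emptyset, \{\emptyset\}\},\ b \in a\}
  = \{(\{\emptyset\}, \emptyset)\}
$$

No pair with first component $\emptyset$ appears, because $\emptyset$ has no elements: a trace records only the inputs at which the function's value is nonempty, which is what makes the traces of proof-valued functions collapse to $\emptyset$.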

To see that this definition satisfies impredicativity, suppose that u is in Prop. Then for each $a \in A$, $B_a$ is either $\emptyset$ or $\{\emptyset\}$. If $B_a = \emptyset$ for some $a \in A$, then there is no possible value for f(a), so no f exists and the interpretation of the function type itself is $\emptyset$. Otherwise, $B_a = \{\emptyset\}$ for every $a \in A$, so the only f is the one with $f(a) = \emptyset$ for all a, and $\text{trace}(f) = \emptyset$ since there is no $b \in f(a)$, making the interpretation of the function type itself $\{\emptyset\}$. In both cases the function type is interpreted as an element of $\{\emptyset, \{\emptyset\}\}$, i.e. as a type in Prop, regardless of how large A is.
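Both cases can be checked on two closed propositions (our own instances, computed directly from the definitions above). Take t to be Prop, so that $A = \{\emptyset, \{\emptyset\}\}$. For $\Pi p : \mathsf{Prop}.\, p$ we have $B_a = a$, and in particular $B_\emptyset = \emptyset$. For $\Pi p : \mathsf{Prop}.\, p \to p$, $B_a$ is the interpretation of $p \to p$ when $p = a$; it is $\{\emptyset\}$ both for $a = \emptyset$ (the only function is the empty one, whose trace is $\emptyset$) and for $a = \{\emptyset\}$ (the only function maps $\emptyset$ to $\emptyset$, and its trace is also $\emptyset$):

$$
\begin{aligned}
[\![\Pi p : \mathsf{Prop}.\, p]\!]
  &= \{\text{trace}(f) \mid f \in \Pi_{a \in \{\emptyset, \{\emptyset\}\}}\, a\}
   = \emptyset
  &&\text{(no value for } f(\emptyset)\text{)} \\
[\![\Pi p : \mathsf{Prop}.\, p \to p]\!]
  &= \{\text{trace}(a \mapsto \emptyset)\}
   = \{\emptyset\}
  &&\text{(}B_a = \{\emptyset\}\text{ for both } a\text{)}
\end{aligned}
$$

The first type, the usual impredicative encoding of falsity, is interpreted as the uninhabited proposition; the second, inhabited by the polymorphic identity, is interpreted as the inhabited one.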

Since reduction is untyped, it is perfectly fine for ill-typed terms to reduce. For instance, we can have the derivation  even though the left-hand side is not well typed. However, to justify a convertibility (such as a reduction) in the model, we need to show that the set-theoretic interpretations of both sides are equal. For the example above, since the function's domain type is in Prop and is inhabited, its interpretation must be $\{\emptyset\}$. Then the interpretation of the function on the left-hand side must be $\{(\emptyset, \emptyset)\}$. By the definition of the interpretation of application, since the interpretation of the argument is not in the domain of the function, the left-hand side is interpreted as $\emptyset$. Meanwhile, the right-hand side is interpreted as $\{\emptyset, \{\emptyset\}\}$, so the interpretations of the two sides are not equal.

Ultimately, the set-theoretic interpretations of terms only make sense for well-typed terms, despite being definable for ill-typed ones as well. Therefore, to ensure a sensible interpretation, reduction (and therefore subtyping and convertibility) needs to be typed.

3.3.3 Size Irrelevance

In the model, we need to know that functions cannot make computational decisions based on the value of a size variable, i.e. that computation is size irrelevant. This is necessary to model functions as set-theoretic functions, since sizes are ordinals and (set-theoretic) functions quantifying over ordinals may be too large to be sets.

In short, while we conjecture that size irrelevance holds in CIC