1. Introduction

The minimum spanning tree (MST) problem ranks among the simplest combinatorial optimisation problems, with many applications well beyond its historical introduction for network design [Reference Graham and Hell17], including approximation algorithms for more complex problems [Reference Christofides13, Reference Kou, Markowsky and Berman20] and cluster analysis [Reference Asano, Bhattacharya, Keil and Yao4].

Its formulation is straightforward: given a weighted undirected graph $G=(V,E,w)$ with $w \,:\, E \to (0, \infty )$, find a subgraph $T\subseteq E$ that connects all nodes $V$ and has a minimal total edge cost

\begin{equation*} \sum _{e \in T} w(e), \end{equation*}

thus defining the MST cost functional $\mathcal{C}_{\text{MST}}(G)$. Minimality yields that redundant connections can be discarded, so that the resulting subgraph $T$ turns out to be a tree, i.e., connected and without cycles. Several algorithms have been proposed for its solution, from classical greedy to more efficient ones [Reference Chazelle12], possibly randomised [Reference Karger, Klein and Tarjan18].
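As an illustration of the classical greedy approach mentioned above, the following is a minimal sketch of Kruskal's algorithm with a union-find structure. The function name `kruskal`, the edge encoding `(weight, u, v)` and the small example graph are choices made here for illustration and do not come from the paper.

```python
def kruskal(num_nodes, edges):
    """Greedy MST on a connected graph.

    edges: list of (weight, u, v) with nodes labelled 0..num_nodes-1.
    Returns (total_cost, tree_edges).
    """
    parent = list(range(num_nodes))

    def find(x):
        # Find the representative of x, compressing the path on the way.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    total, tree = 0.0, []
    for w, u, v in sorted(edges):  # scan edges by increasing weight
        ru, rv = find(u), find(v)
        if ru != rv:               # the edge joins two components: keep it
            parent[ru] = rv
            total += w
            tree.append((u, v))
    return total, tree


# Hypothetical example graph on 4 nodes.
edges = [(1.0, 0, 1), (4.0, 0, 2), (2.0, 1, 2), (5.0, 1, 3), (3.0, 2, 3)]
cost, tree = kruskal(4, edges)
print(cost, tree)
```

The edges that would close a cycle are skipped, which is why the output is a tree with $|V|-1$ edges.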

Despite its apparent simplicity, a probabilistic analysis of the problem, i.e., assuming that weights are random variables with a given joint law and studying the resulting random costs and MSTs, yields interesting results. Moreover, it may suggest mathematical tools to deal with more complex problems, such as the Steiner tree problem or the travelling salesperson problem, where one searches instead for a cycle connecting all points having minimum total edge weight.

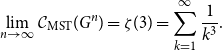

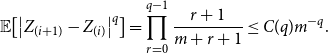

The most investigated random model is surely that of i.i.d. weights with a regular density, as first studied by Frieze [Reference Frieze16], who showed in particular the following law of large numbers: if $G^n= (V^n, E^n, w^n)$, with $(V^n,E^n)= K_n$ the complete graph over $n$ nodes and $w^n = \left ( w_{ij} \right )_{i,j =1}^n$ are independent and uniformly distributed on $[0,1]$, then almost surely

\begin{equation} \lim _{n\to \infty } \mathcal{C}_{\text{MST}}(G^n) = \zeta (3) = \sum _{k=1}^\infty \frac{1}{k^3}. \end{equation}
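The law of large numbers above can be explored numerically. The sketch below samples one complete graph with i.i.d. uniform weights and computes its MST cost with Prim's algorithm. The function name, the seed and the size $n=300$ are arbitrary choices made here, and a single sample only gives a rough indication of the limit.

```python
import random


def random_complete_mst_cost(n, rng):
    """MST cost of K_n with i.i.d. Uniform[0,1] edge weights (Prim, O(n^2))."""
    # Symmetric weight matrix: w[i][j] == w[j][i].
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            w[i][j] = w[j][i] = rng.random()

    in_tree = [False] * n
    best = [float("inf")] * n  # cheapest known edge from the tree to each node
    best[0] = 0.0
    total = 0.0
    for _ in range(n):
        u = min((v for v in range(n) if not in_tree[v]), key=best.__getitem__)
        in_tree[u] = True
        total += best[u]
        for v in range(n):
            if not in_tree[v] and w[u][v] < best[v]:
                best[v] = w[u][v]
    return total


rng = random.Random(0)
zeta3 = sum(1.0 / k**3 for k in range(1, 100000))  # partial sum of zeta(3)
cost = random_complete_mst_cost(300, rng)
print(cost, zeta3)
```

Averaging over several independent samples, or increasing $n$, should bring the simulated cost closer to $\zeta(3)$.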

Another well studied setting is provided by Euclidean models, where nodes are i.i.d. sampled points in a region (say uniformly on a cube

![]() $[0,1]^d \subseteq{\mathbb{R}}^d$

, for simplicity) and edge weights are functions of their distance, e.g.

$[0,1]^d \subseteq{\mathbb{R}}^d$

, for simplicity) and edge weights are functions of their distance, e.g.

![]() $w(x,y) = |x-y|^p$

for some parameter

$w(x,y) = |x-y|^p$

for some parameter

![]() $p\gt 0$

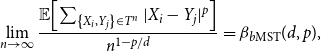

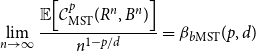

. This setting dates back at least to the seminal paper by Beardwood, Halton and Hammersley [Reference Beardwood, Halton and Hammersley8] where they focused on the travelling salesperson problem, but stated that other problems may be as well considered, including the MST one. A full analysis was later performed by Steele [Reference Steele28] who proved that, if the Euclidean graph consists of

$p\gt 0$

. This setting dates back at least to the seminal paper by Beardwood, Halton and Hammersley [Reference Beardwood, Halton and Hammersley8] where they focused on the travelling salesperson problem, but stated that other problems may be as well considered, including the MST one. A full analysis was later performed by Steele [Reference Steele28] who proved that, if the Euclidean graph consists of

![]() $n$

nodes, then for every

$n$

nodes, then for every

![]() $0\lt p\lt d$

, almost sure convergence holds

$0\lt p\lt d$

, almost sure convergence holds

where $\beta _{\text{MST}}(p,d) \in (0,\infty )$ is a constant. The rate $n^{1-p/d}$ is intuitively clear due to the fact that there are $n-1$ edges in a tree over $n$ points and the typical distance between two adjacent points is expected to be of order $n^{-1/d}$. The constraint $p\lt d$ was removed by Aldous and Steele [Reference Aldous and Steele3] and Yukich [Reference Yukich31], so that convergence holds in fact for any $p\gt 0$. This result can be seen as an application of a general Euclidean additive functional theory [Reference Steele27, Reference Yukich32]. However, such general methods, which work for other combinatorial optimisation problems, give little insight into the precise value of the limit constant $\beta _{\text{MST}}(p,d)$. The MST problem is known to be exceptional, for a (sort of) explicit series representation, analogous to (1.1), was obtained by Avram and Bertsimas [Reference Avram and Bertsimas1], although only in the range $0\lt p\lt d$. The latter was used by Penrose [Reference Penrose24], in connection with continuum percolation, to study, among other things, the MST in the high dimensional regime $d\to \infty$. An alternative approach towards explicit formulas, based on Tutte polynomials, was proposed by Steele [Reference Steele, Chauvin, Flajolet, Gardy and Mokkadem26], but limited to the case of i.i.d. weights.
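The heuristic for the rate $n^{1-p/d}$ (about $n$ edges, each of length of order $n^{-1/d}$) can be checked with a small simulation. The sketch below, with names and parameters chosen here for illustration, samples uniform points in $[0,1]^d$, builds the Euclidean MST with Prim's algorithm and prints the cost rescaled by $n^{1-p/d}$. It is not the method of the cited works, only a numerical sanity check.

```python
import math
import random


def euclidean_mst_cost(points, p=1.0):
    """Sum of |x-y|^p over the edges of the Euclidean MST (Prim, O(n^2)).

    The tree itself does not depend on p, so Prim is run on plain distances
    and each selected edge length is raised to the power p.
    """
    n = len(points)
    in_tree = [False] * n
    best = [math.inf] * n  # distance from each node to the current tree
    best[0] = 0.0
    total = 0.0
    for _ in range(n):
        u = min((v for v in range(n) if not in_tree[v]), key=best.__getitem__)
        in_tree[u] = True
        total += best[u] ** p
        for v in range(n):
            if not in_tree[v]:
                dist = math.dist(points[u], points[v])
                if dist < best[v]:
                    best[v] = dist
    return total


rng = random.Random(1)
d, p = 2, 1.0
ratios = {}
for n in (100, 400):
    pts = [tuple(rng.random() for _ in range(d)) for _ in range(n)]
    ratios[n] = euclidean_mst_cost(pts, p) / n ** (1 - p / d)
print(ratios)
```

If the scaling is correct, the printed ratios should stay of the same order as $n$ grows, rather than drift to zero or infinity.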

The aim of this paper is to investigate analogous results for bipartite Euclidean random models, i.e., when nodes correspond to two distinct families of sampled points (e.g., visually rendered by red/blue colourings) and weights, still given by a power of the distance, are only defined between points with different colours. Formally, we replace the underlying complete graph $K_n$ with a complete bipartite graph $K_{n_R, n_B}$ with $n_R+n_B=n$.

A similar question was formulated and essentially solved in the model with independent weights by Frieze and McDiarmid [Reference Frieze and McDiarmid15]. In Euclidean models, however, it is known that such an innocent-looking variant may in fact cause quantitative differences in the corresponding asymptotic results. For example, in the Euclidean bipartite travelling salesperson problem with $d=1$ and $d=2$, the correct asymptotic rates (for $p=1$) are known to be respectively $\sqrt{n}$ [Reference Caracciolo, Di Gioacchino, Gherardi and Malatesta11] and $\sqrt{n \log n }$ [Reference Capelli, Caracciolo, Di Gioacchino and Malatesta9], larger than the natural $n^{1-1/d}$ for the non-bipartite problem. Similar results are known for other problems, such as the minimum matching problem [Reference Steele27] and its bipartite counterpart, also related to the optimal transport problem [Reference Ajtai, Komlós and Tusnády2, Reference Ambrosio, Stra and Trevisan5, Reference Caracciolo, Lucibello, Parisi and Sicuro10, Reference Talagrand29, Reference Talagrand30]. Barthe and Bordenave proposed a bipartite extension of the Euclidean additive functional theory [Reference Barthe, Bordenave, Donati-Martin, Lejay and Rouault6] that allows one to recover an analogue of (1.2) for many relevant combinatorial optimisation problems on bipartite Euclidean random models, although its range of applicability is restricted to $0\lt p\lt d/2$ (the cases $p=1$, $d\in \left \{ 1,2 \right \}$ are indeed outside this range) and in any case the MST problem does not fit in the theory. The main reason for the latter limitation is that there is no uniform bound on the maximum degree of an MST on a bipartite Euclidean random graph; their theory instead applies to a variant of the problem where a uniform bound on the maximum degree is imposed, which is in fact algorithmically more complex (if the bound is two it recovers essentially the travelling salesperson problem).

1.1. Main results

Our first main result describes precisely the asymptotic maximum degree of an MST on a bipartite Euclidean random graph, showing that it grows logarithmically in the total number of nodes, in the asymptotic regime where a fraction of points $\alpha _R \in (0,1)$ is red and the remaining $\alpha _B = 1-\alpha _R$ is blue.

Theorem 1.1.

Let $d\ge 1$, let $n \ge 1$ and $R^n = \left ( X_i \right )_{i=1}^{n_R}$, $B^n= \left ( Y_i \right )_{i=1}^{n_B}$ be (jointly) i.i.d. uniformly distributed on $[0,1]^d$ with $n_R+n_B = n$ and

Let $T^n$ denote the MST over the complete bipartite graph with independent sets $R^n$, $B^n$ and weights $w(X_i,Y_j) = |X_i-Y_j|$, and let $\Delta (T^n)$ denote its maximum vertex degree. Then, there exists a constant $C= C(d,\alpha _R)\gt 0$ such that

(Indeed, the structure of the MST does not depend on the specific choice of the exponent $p\gt 0$, so we simply let $p=1$ above). The proof is detailed in Section 3.
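To get a feeling for the quantity $\Delta (T^n)$, one can build a bipartite Euclidean MST on simulated points and inspect its vertex degrees. The sketch below is an illustration under assumptions made here (function name, seed, sample sizes, $d=2$) and is not the construction used in the proof. Edges between points of the same colour are simply never considered in Prim's algorithm.

```python
import math
import random
from collections import Counter


def bipartite_mst(red, blue):
    """Edges of the MST of the complete bipartite Euclidean graph (Prim)."""
    pts = red + blue
    colour = [0] * len(red) + [1] * len(blue)  # 0 = red, 1 = blue
    n = len(pts)
    in_tree = [False] * n
    best = [math.inf] * n   # cheapest admissible edge from the tree to a node
    parent = [-1] * n
    best[0] = 0.0
    edges = []
    for _ in range(n):
        u = min((v for v in range(n) if not in_tree[v]), key=best.__getitem__)
        in_tree[u] = True
        if parent[u] >= 0:
            edges.append((parent[u], u))
        for v in range(n):
            # Only red-blue pairs carry an edge.
            if not in_tree[v] and colour[v] != colour[u]:
                dist = math.dist(pts[u], pts[v])
                if dist < best[v]:
                    best[v] = dist
                    parent[v] = u
    return edges, colour


rng = random.Random(3)
n_red, n_blue, dim = 150, 150, 2
red = [tuple(rng.random() for _ in range(dim)) for _ in range(n_red)]
blue = [tuple(rng.random() for _ in range(dim)) for _ in range(n_blue)]
edges, colour = bipartite_mst(red, blue)

degree = Counter()
for u, v in edges:
    degree[u] += 1
    degree[v] += 1
max_degree = max(degree.values())
print(max_degree)
```

Repeating the experiment for increasing $n$ gives an empirical view of how slowly the maximum degree grows, although simulations at these sizes cannot distinguish between different logarithmic-type rates.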

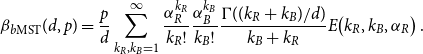

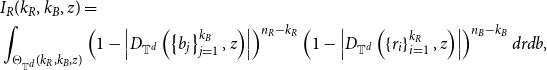

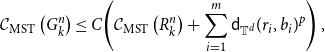

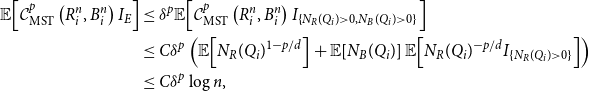

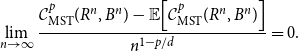

Our second main result shows that, although the general theory of Barthe and Bordenave does not apply and the maximum degree indeed grows, the total weight cost for the bipartite Euclidean MST problem turns out to be much closer to the non-bipartite one, since no exceptional rates appear in low dimensions. Before we give the complete statement, let us introduce the following quantity, for $d \ge 1$, $k_R$, $k_B \ge 1$, $\alpha _R \in (0,1)$,

\begin{equation*} \begin{split} E\!\left(k_R, k_B, \alpha _R\right) = & \int _{\Theta \!\left(k_R, k_B\right)} \left ( \alpha _R \left|D\!\left(\left \{ b_j \right \}_{j=1}^{k_B}\right)\right| + \alpha _B \left| D\!\left(\left \{ r_i \right \}_{i=1}^{k_R}\right)\right| \right )^{-(k_R+k_B)/d}\cdot \\ & \quad \cdot \left ( \frac{k_R}{\alpha _R} \delta _0(r_1)d b_1 + \frac{k_B}{\alpha _B}d r_1 \delta _0(b_1) \right ) dr_2 \ldots d r_{k_R} db_2 \ldots d b_{k_B},\end{split} \end{equation*}


where $\alpha _B = 1-\alpha _R$ and we write

for the set of (ordered) points $\left( \left ( r_i \right )_{i=1}^{k_R}, \left ( b_j \right )_{j=1}^{k_B}\right)$ such that, in the associated Euclidean bipartite graph with weights $\left ( |r_i-b_j| \right )_{i,j}$, the subgraph with all edges having weight less than $1$ is connected (or equivalently, there exists a bipartite Euclidean spanning tree having all edges with weight less than $1$), and for a set $A \subseteq{\mathbb{R}}^d$, we write

and $|D(A)|$ for its Lebesgue measure. Notice also that the overall integration is performed with respect to the $d$-dimensional Lebesgue measure over $k_R+k_B-1$ variables and one (either $r_1$ or $b_1$) is instead with respect to a Dirac measure at $0$.

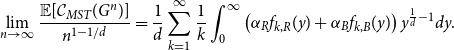

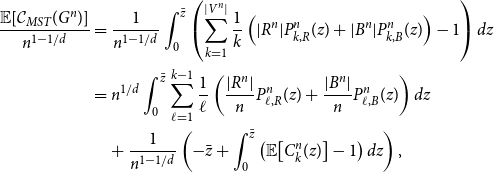

These quantities enter in the explicit formula for the limit constant in the bipartite analogue of (1.2), as our second main result shows.

Theorem 1.2.

Let $d\ge 1$, let $n \ge 1$ and $R^n = \left ( X_i \right )_{i=1}^{n_R}$, $B^n= \left ( Y_i \right )_{i=1}^{n_B}$ be (jointly) i.i.d. uniformly distributed on $[0,1]^d$ with $n_R+n_B = n$ and

Let $T^n$ denote the MST over the complete bipartite graph with independent sets $R^n$, $B^n$ and weights $w(X_i,Y_j) = |X_i-Y_j|$. Then, for every $p \in (0, d)$, the following convergence holds

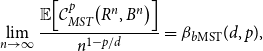

\begin{equation} \lim _{n \to \infty } \frac{ \mathbb{E}\!\left [ \sum _{\left \{ X_i,Y_j \right \} \in T^n} |X_i - Y_j|^p \right ]}{n^{1-p/d}} = \beta _{b\text{MST}}(d,p), \end{equation}


and the constant $\beta _{b\text{MST}}(d,p) \in (0, \infty )$ is given by the series

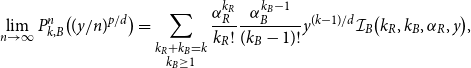

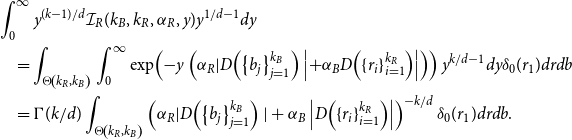

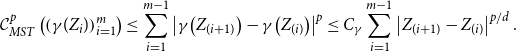

\begin{equation} \beta _{b\text{MST}}(d,p) = \frac{p}{d}\sum _{k_R,k_B=1}^{\infty } \frac{\alpha _R^{k_R}}{k_R! }\frac{ \alpha _B^{k_B}}{k_B!} \frac{\Gamma ((k_R+k_B)/d)}{k_B+k_R} E\!\left(k_R, k_B, \alpha _R\right). \end{equation}


Moreover, if $p\lt d/2$ for $d \in \left \{ 1,2 \right \}$ or $p\lt d$ for $d \ge 3$, convergence is almost sure:

The proof is detailed in Section 4. The one-dimensional random bipartite Euclidean MST has recently been investigated theoretically in the statistical physics literature by Riva, Caracciolo and Malatesta [Reference Riva, Caracciolo and Malatesta25], together with extensive numerical simulations also in higher dimensions, hinting at the possibility of a non-exceptional rate $n^{1-p/d}$ also for $d=2$. In particular, our result confirms this asymptotic rate in the two dimensional case, with a.s. convergence if $p\lt 1$ and just convergence of the expected costs if $1\le p \lt 2$; in fact we also have a general upper bound if $p\ge 2$ (Lemma 4.5).

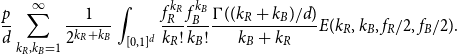

1.2. Further questions and conjectures

Several extensions of the results contained in this work may be devised, for example by generalising to $k$-partite models or more general block models, allowing for weights between the same coloured points but possibly with a different function, e.g. the same power of the distance function, but multiplied by a different pre-factor according to the pair of blocks. An interesting question, also open for the non-bipartite case, is to extend the series representation for the limiting constant to the case $p\ge d$. On the other hand, we suspect that additivity techniques may yield convergence in (1.5) also in the range $p \ge d$, without an explicit series, but we leave it for future explorations. A further question, that has no counterpart in the non-bipartite case, is what happens if the laws of different coloured points are different, say with densities $f_R$ and $f_B$ that are regular, uniformly positive and bounded. Assuming that $n_R/n \to 1/2$, a natural conjecture is that the limit holds with (1.6) obtained by replacing $\alpha _R$ and $\alpha _B$ with the ‘local’ fraction of points $f_R(x)/2$, $f_B(x)/2$ and then integrating with respect to $x \in [0,1]^d$, i.e.,

\begin{equation} \frac{p}{d}\sum _{k_R,k_B=1}^{\infty }\frac{1}{2^{k_R+k_B}}\int _{[0,1]^d} \frac{f_R^{k_R}}{k_R! }\frac{ f_B^{k_B}}{k_B!} \frac{\Gamma ((k_R+k_B)/d)}{k_B+k_R} E(k_R, k_B, f_R/2, f_B/2). \end{equation}


Furthermore, a central limit theorem is known for the MST problem [Reference Chatterjee and Sen14, Reference Kesten and Lee19] and it may be interesting to understand the possible role played by the additional fluctuations introduced in the bipartite setting for analogous results.

Finally, it may be of interest to strengthen Theorem 1.1, by establishing the limit (e.g. in probability)

for some constant $\gamma (d) \in (0, \infty )$, and further investigating the vertex degree distribution of $T^n$.

1.3. Structure of the paper

In Section 2 we collect useful notation and properties of general MSTs, together with crucial observations in the metric setting (including the Euclidean one) and some useful probabilistic estimates. We try here to keep probabilistic and deterministic results as separate as possible, to simplify the exposition. In Section 3 we prove Theorem 1.1 and in Section 4 we first extend [Reference Avram and Bertsimas1, Theorem 1] to the bipartite case and then apply it in the Euclidean setting. An intermediate step requires arguing on the flat torus $\mathbb{T}$ to exploit further homogeneity. We finally use a concentration result to obtain almost sure convergence: since the vertex degree is not uniformly bounded, the standard inequalities were not sufficient to directly cover the case $p\lt 1$, so we prove a simple variant of McDiarmid's inequality in Appendix A that we did not find in the literature and may be of independent interest.

2. Notation and preliminary results

We always consider the space ${\mathbb{R}}^d$, $d \ge 1$, endowed with the Euclidean distance, which we denote by $|x-y|$ for $x$, $y \in{\mathbb{R}}^d$. The volume of the unit ball is denoted by $\omega _d$. We also use throughout the letter $C$ to denote constants that may depend upon parameters (such as the dimension $d$, exponents $p$, etc.), warning the reader that, to simplify the notation in some proofs, the same letter may be used in different locations to denote possibly different constants.

2.1. Minimum spanning trees

Although our focus is on weighted graphs induced by points in the Euclidean space ${\mathbb{R}}^d$, the following general definition of minimum spanning trees will be useful.

Definition 2.1.

Given a weighted undirected finite graph $G = (V,E, w)$, with $w\,:\, E \to [0, \infty ]$, the MST cost functional is defined as

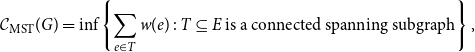

\begin{equation} \mathcal{C}_{\text{MST}}(G) = \inf \left \{ \sum _{e \in T} w(e)\, : \, \text{$T \subseteq E$ is a connected spanning subgraph} \right \}, \end{equation}


Here and below, connected is in the sense that only edges with finite weight must be considered. We consider only minimisers $T$ in (2.1) that are trees, i.e., connected and acyclic, otherwise removing the most expensive edge in a cycle would give a competitor with smaller cost (since we assume possibly null weights, there may be other minimisers). The following lemma is a special case of the cut property of minimum spanning trees, but will play a crucial role on several occasions, so we state it here.

Lemma 2.2.

Let $G = (V, E, w)$, $v \in V$ and assume that $e \in \operatorname{arg min}\left \{ w(f)\, : \, v \in f \right \}$ is unique. Then, $e$ belongs to every minimum spanning tree of $G$.

Proof. Assume that $e$ does not belong to a minimum spanning tree $T$. Addition of $e$ to $T$ induces a cycle that necessarily includes another edge $f$, with $v \in f$ and by assumption $w(f)\gt w(e)$. By removing $f$, the cost of the resulting connected graph is strictly smaller than the cost of $T$, a contradiction.
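Lemma 2.2 can be checked numerically on a random instance: with distinct weights, the cheapest edge at every node must appear in the MST. The sketch below uses a complete graph with i.i.d. uniform weights. The helper names (`kruskal_edges`, `cheapest_edge`) and the instance size are hypothetical choices made here for illustration.

```python
import random


def kruskal_edges(n, weights):
    """weights: dict {(u, v): w} with u < v. Returns the set of MST edges."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    tree = set()
    for (u, v), _w in sorted(weights.items(), key=lambda kv: kv[1]):
        ru, rv = find(u), find(v)
        if ru != rv:
            parent[ru] = rv
            tree.add((u, v))
    return tree


rng = random.Random(2)
n = 30
# Random weights are distinct with probability one, so each argmin is unique.
weights = {(u, v): rng.random() for u in range(n) for v in range(u + 1, n)}
tree = kruskal_edges(n, weights)


def cheapest_edge(v):
    """The edge of minimum weight among those incident to v."""
    incident = [e for e in weights if v in e]
    return min(incident, key=weights.get)


nearest_edges = {cheapest_edge(v) for v in range(n)}
all_in_tree = nearest_edges <= tree  # subset test
print(all_in_tree)
```

The subset test is the statement of the lemma applied to every node at once.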

We write $\mathsf{n}_G(v) \in V$, or simply $\mathsf{n}(v)$ if there are no ambiguities, for the closest node to $v$ in $G$, i.e.,

assuming that such a node is unique.

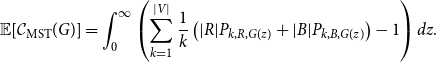

The subgraphs

are strongly related to the minimum spanning tree on $G$, since the execution of Kruskal’s algorithm yields the identity, already observed in [Reference Avram and Bertsimas1],

where $C_G$ denotes the number of connected components of a graph $G$. Indeed, the function $z \mapsto C_{G(z)}$ is piecewise constant and decreasing from $|V|$ towards $1$ (assuming that all weights are strictly positive and $G$ is connected). Assume for simplicity that all weights $\left \{ w_e \right \}_{e \in E}$ are different, so that $z \mapsto C_{G(z)}$ has only unit jumps, on a set $J_{-}$. An integration by parts gives the identity

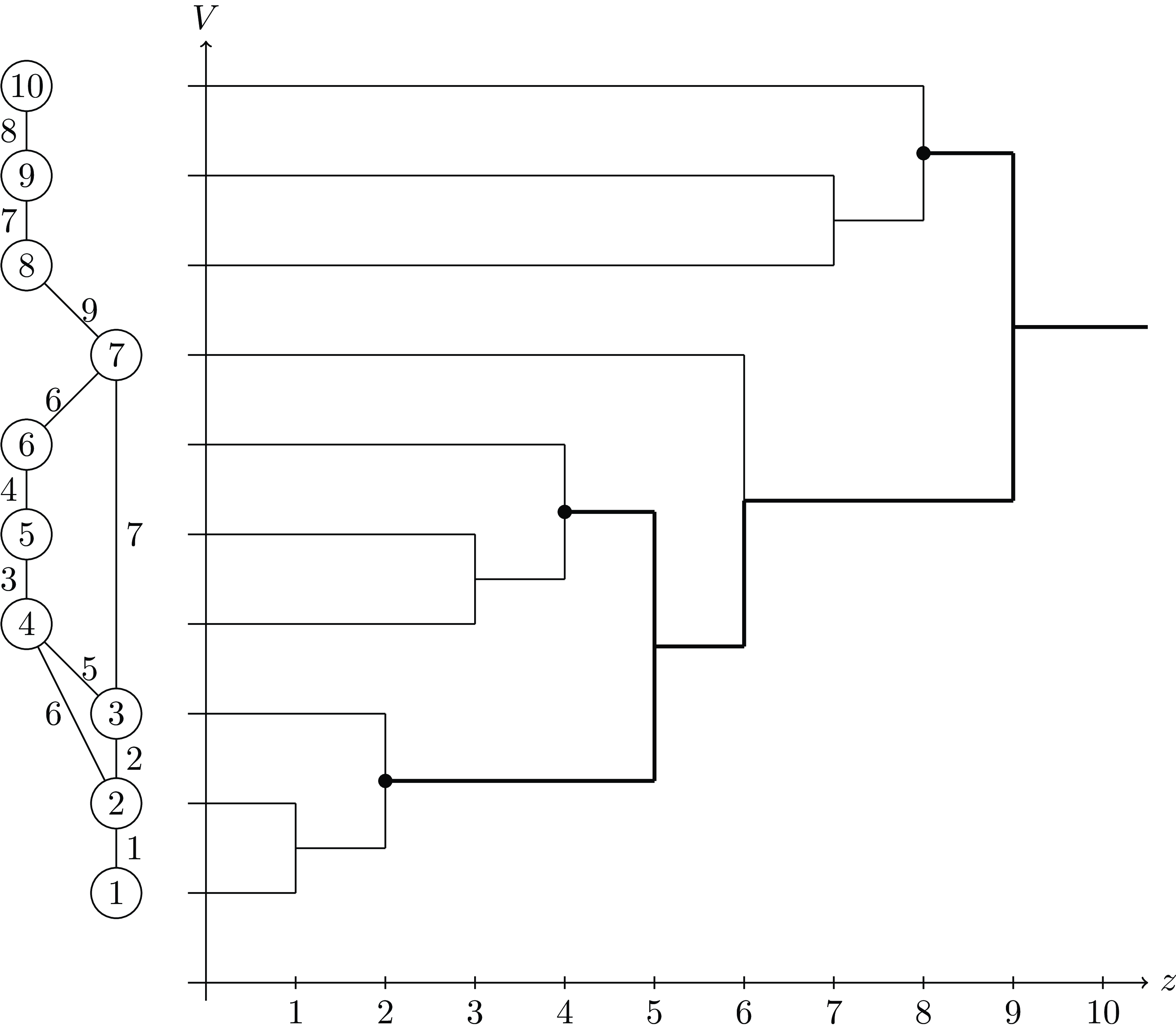

To argue that the right hand side is the cost of an MST, e.g., obtained by Kruskal’s algorithm, we may represent the connected components of $G(z)$ as a function of $z$ in a tree-like graph (see Fig. 1): starting with components consisting of single nodes at $z=0$, whenever two components merge (i.e., at values $z \in J_{-}$) we connect the corresponding segments. This yields a (continuous) tree with leaves given by the nodes and a root at $z =\infty$. Since Kruskal’s algorithm returns exactly the tree consisting of the edges corresponding to such $z \in J_{-}$, we obtain (2.2).
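The relation between the MST cost and the number of connected components can be verified numerically. The sketch below assumes that $G(z)$ denotes the subgraph of $G$ keeping the edges of weight at most $z$ and that the identity takes the form $\mathcal{C}_{\text{MST}}(G) = \int _0^\infty (C_{G(z)} - 1)\, dz$. Both are assumptions made here, since the displayed formulas are not reproduced in this text. The integrand is piecewise constant, so the integral is accumulated exactly between consecutive edge weights during a Kruskal scan.

```python
import random


def mst_cost_and_component_integral(n, edges):
    """Return (MST cost, integral of C_{G(z)} - 1 over z >= 0).

    edges: list of (weight, u, v) for a connected graph on nodes 0..n-1.
    """
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    components = n   # C_{G(z)} for z below the smallest weight
    mst_cost = 0.0
    integral = 0.0
    prev_z = 0.0
    for w, u, v in sorted(edges):
        # On [prev_z, w) the number of components is constant.
        integral += (components - 1) * (w - prev_z)
        prev_z = w
        ru, rv = find(u), find(v)
        if ru != rv:  # a jump of z -> C_{G(z)}: Kruskal keeps this edge
            parent[ru] = rv
            components -= 1
            mst_cost += w
    # Past the last merge there is one component, so the integrand vanishes.
    return mst_cost, integral


rng = random.Random(4)
n = 40
edges = [(rng.random(), u, v) for u in range(n) for v in range(u + 1, n)]
mst_cost, integral = mst_cost_and_component_integral(n, edges)
print(mst_cost, integral)
```

The two returned numbers agree up to floating-point rounding, which mirrors the integration-by-parts argument: each unit jump of $z \mapsto C_{G(z)}$ contributes the weight of exactly one tree edge.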

Figure 1. A weighted graph $G$ and its tree-like representation of the connected components of $G(z)$. Black dots correspond to seeds for the construction of $C_i$’s with $k=3$. Notice that regardless of whether the node $7$ is added to $\{ 1,2,3 \}$ or $\{ 4,5,6\}$, the resulting (different) trees always have total weight $5+9=14$.

Remark 2.3. The construction above also yields that the minimum spanning trees of $G = (V, E, w)$ are minimum spanning trees of the graph $G^\psi = (V,E, \psi \circ w)$ as well, i.e., the graph with weights $\psi (w(e))$, where $\psi$ is an increasing function. In particular, assuming that $\psi \,:\,[0, \infty ) \to [0, \infty )$ is strictly increasing with $\psi (0) = 0$, then $G^\psi (z) = G(\psi ^{-1}(z))$, hence

In particular, choosing $\psi (x) = x^p$ and letting $p \to \infty$, we obtain that any minimum spanning tree $T$ is also a minimum bottleneck spanning tree, i.e., $T$ minimises the functional

A similar argument [Reference Avram and Bertsimas1, Lemma 4] yields an upper bound for a similar quantity where $C_{G}$ is replaced with $C_{k,G}$, the number of connected components having at least $k$ nodes.
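The invariance stated in Remark 2.3 can be illustrated numerically. The sketch below runs a (deliberately naive) greedy Kruskal procedure with the weights $w$ and with $\psi(w) = w^3$, and compares the largest edge of the resulting tree with the smallest level $z$ at which $G(z)$ is connected, which we take as the minimum bottleneck value. The example graph and all function names are assumptions made for illustration.

```python
def components(n, edges):
    """Number of connected components of the graph on nodes 0..n-1."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    count = n
    for _, u, v in edges:
        ru, rv = find(u), find(v)
        if ru != rv:
            parent[ru] = rv
            count -= 1
    return count


def kruskal_tree(n, edges, psi=lambda w: w):
    """MST edges for the weights psi(w): scan edges by increasing psi(w) and
    keep an edge whenever it reduces the number of components."""
    tree = []
    for edge in sorted(edges, key=lambda e: psi(e[0])):
        if components(n, tree + [edge]) < components(n, tree):
            tree.append(edge)
    return tree


def min_bottleneck(n, edges):
    """Smallest z such that the edges with weight <= z connect all nodes."""
    for z in sorted(w for w, _, _ in edges):
        if components(n, [e for e in edges if e[0] <= z]) == 1:
            return z
    return None  # the graph is not connected


# Hypothetical example with distinct weights, edges given as (weight, u, v).
edges = [(1.0, 0, 1), (2.0, 1, 2), (3.0, 0, 2), (4.0, 2, 3), (5.0, 1, 3)]
tree = kruskal_tree(4, edges)
tree_cubed = kruskal_tree(4, edges, psi=lambda w: w ** 3)
print(tree == tree_cubed)
print(max(w for w, _, _ in tree), min_bottleneck(4, edges))
```

Since a strictly increasing $\psi$ does not change the order in which edges are scanned, both runs select the same edges.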

Lemma 2.4.

Let $G = (V, E, w)$ be connected with all distinct weights $(w(e))_{e \in E}$ (if finite) and $2 \le k \le |V|$. Then, there exists a partition $V = \bigcup _{i=1}^{m} C_i$ such that letting $G_k$ be the graph over the node set $\left \{ C_1,\ldots, C_m \right \}$ with weights

then

Moreover, for every $i=1, \ldots, m$, $|C_i| \ge k$, hence $m \le |V|/k$, and there exists $v \in C_i$ such that $\mathsf{n}_G(v) \in C_i$ and $\mathsf{n}_G(\mathsf{n}_G(v)) = v$.

Proof. The function $z \mapsto C_{k, G(z)}$ is piecewise constant, with jumps of absolute size $1$, with positive sign on a set $J_+$ and negative sign on a set $J_-$. An integration by parts gives

We interpret the right hand side above as $\mathcal{C}_{\text{MST}}(G_k)$ for a suitable graph $G_k$. To define the sets $C_i$, we let $J_+ = \left \{ z_1, \ldots, z_{m} \right \}$ and define, for every $z_i$, the ‘seed’ of $C_i$ as the set of nodes that gives an additional component with at least $k$ nodes, i.e., obtained by merging two components in $G(z_i^-)$, both having fewer than $k$ nodes. Notice that, since $C_i$ will then be completed by adding nodes to such seeds, the last statement is already fulfilled. Indeed, since any seed contains at least $k \ge 2$ nodes, we can always choose $v_1$, $v_2$ in a seed such that the paths from $v_1$, $v_2$ merge first (among those from other nodes in the same seed). This gives that $v_2= \mathsf{n}_G(v_1)$ and $v_1 = \mathsf{n}_G(v_2)$.

To completely determine every $C_i$, it is simpler to argue graphically on the tree-like representation (Fig. 1), where we highlight the ‘birth’ of $C_i$ at $z_i \in J_{+}$ by thickening the shortest path from the seed towards the root at $z =\infty$. At every $z$ such that two thick paths merge, the corresponding two connected components with at least $k$ elements become one, hence $C_{k,G(z)}$ jumps downwards, i.e., $z \in J_{-}$. Given $v \in V$, we introduce the following rule in order to determine the set $C_i$ to which $v$ belongs. Consider the shortest path from the trivial component containing only $v$ at $z=0$ towards the root at $z = \infty$ in the tree-like representation. We focus on the first point where such a path merges with a thick line and write $z_v$ for the corresponding value on the $z$-axis and let $\mathcal{S}_v$ denote the subset of ‘seeds’ among the $C_i$’s that are located further from the root (i.e., with smaller $z$ values) along the thick path, starting from such a point (for example, in Fig. 1 we report the construction for $k=3$, where we have for $v=7$, $z_7 = 6$ and $\mathcal{S}_7 = \left \{ \left \{ 1,2,3 \right \}, \left \{ 4,5,6 \right \} \right \}$). If $z_v \in J_+$, then $v$ already becomes part of a seed of some $C_i$, hence the situation is trivial, and we let $v \in C_i$. Otherwise the node $v$ will be assigned to a chosen cluster $C_i$ among those in $\mathcal{S}_v$. We choose to add the vertex $v$ to the $C_i \in \mathcal{S}_v$ with smallest label index $i$. Our choice ensures that the whole connected component of $v$ in the graph $G(z_v^-)$ is added to the same $C_i$. We highlight that, as long as it is consistent, other choices of $C_i$’s would be possible, as such nodes play a role only later in the construction of the MST, when the clusters are already merged. In any case, the resulting $C_i$’s are well defined and moreover the induced weight between them defined via (2.4) actually coincides with the weight between the original seeds. Using this fact, to prove (2.5) it is then elementary to check that the graphical representation of Kruskal’s algorithm on the graph $G_k$ gives exactly the thickened tree.
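The bookkeeping of the jump sets in this proof can be sketched in Python. Following the description above, a merge at level $z$ is counted in $J_+$ when two components with fewer than $k$ nodes produce one with at least $k$ nodes (the merged node set is then a seed), and in $J_-$ when both merging components already have at least $k$ nodes. The example graph (nodes $0,\ldots,6$, $k=3$) and the function name are hypothetical and are not meant to reproduce Fig. 1; the completion of seeds into the full sets $C_i$ is not implemented.

```python
def k_component_jumps(n, edges, k):
    """Run Kruskal on nodes 0..n-1 and classify each merge by its effect on
    C_{k,G(z)}, the number of components with at least k nodes.

    Returns (J_plus, J_minus, seeds)."""
    parent = list(range(n))
    members = {v: [v] for v in range(n)}  # root -> nodes of its component

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    j_plus, j_minus, seeds = [], [], []
    for w, u, v in sorted(edges):
        ru, rv = find(u), find(v)
        if ru == rv:
            continue  # the edge closes a cycle: no merge at this level
        a, b = len(members[ru]), len(members[rv])
        merged = members.pop(ru) + members.pop(rv)
        parent[ru] = rv
        members[rv] = merged
        if a < k and b < k and a + b >= k:
            j_plus.append(w)              # a new component of size >= k is born
            seeds.append(sorted(merged))  # its node set is the seed
        elif a >= k and b >= k:
            j_minus.append(w)             # two large components become one
        # otherwise the number of large components is unchanged

    return j_plus, j_minus, seeds


# Hypothetical example, edges given as (weight, u, v) with distinct weights.
edges = [(1.0, 0, 1), (2.0, 1, 2), (3.0, 3, 4), (4.0, 4, 5),
         (5.0, 2, 6), (6.0, 6, 3), (7.0, 0, 5)]
j_plus, j_minus, seeds = k_component_jumps(7, edges, k=3)
print(j_plus, j_minus, seeds)
```

Here two seeds are born (at levels $2$ and $4$), node $6$ joins an existing large component at level $5$ without any jump, and the two large components merge at level $6$, so $m = 2 \le |V|/k$.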

2.2. Metric MST problem

If $(X, \mathsf{d})$ is a metric space and $V \subseteq X$, then a natural choice for a weight is $w(\{ x,y\}) = \mathsf{d}(x,y)^p$, where $p\gt 0$ is fixed. If $V$, $R$, $B \subseteq X$ are finite sets and $p\gt 0$, we write

for the MST cost functional on the complete graph on $V$ (and respectively, on the complete bipartite graph with independent sets $R$, $B$) and edge weights $w(\{ x,y\}) = \mathsf{d}(x,y)^p$, for $\{ x,y\} \in E$. Notice that, by Remark 2.3, the MST does not in fact depend on the choice of $p$, and moreover we may let $p\to \infty$ and obtain

where $\mathcal{C}_{\text{MST}}^\infty$ is the minimum bottleneck spanning tree cost defined in (2.3) with edge weight given by the distance.

We denote by

and

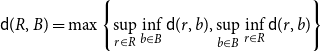

\begin{equation*} \mathsf {d}(R, B) = \max \left \{ \sup _{r \in R} \inf _{b \in B} \mathsf {d}(r,b), \sup _{b \in B} \inf _{r \in R} \mathsf {d}(r,b) \right \}\end{equation*}

respectively the distance function from $V$ and the Hausdorff distance between $R$ and $B$. Clearly, $\mathcal{C}_{\text{MST}}^p(R \cup B) \le \mathcal{C}_{\text{MST}}^p(R, B)$. The following lemma provides a sort of converse inequality.
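To make these objects concrete, here is a small Python sketch computing the distance function, the Hausdorff distance and the two MST costs for a toy configuration in the plane. It assumes that the distance function from $V$ is $\mathsf{d}(V, x) = \inf_{v \in V} \mathsf{d}(v, x)$ (its display is not reproduced above), uses the Euclidean distance, and relies on Prim's algorithm; the points and the function names are illustrative only.

```python
import math


def dist_to_set(points, x):
    """Assumed distance function: d(V, x) = min over v in V of d(v, x)."""
    return min(math.dist(v, x) for v in points)


def hausdorff(R, B):
    """Hausdorff distance between the finite sets R and B."""
    return max(max(dist_to_set(B, r) for r in R),
               max(dist_to_set(R, b) for b in B))


def mst_cost(points, colours, p, bipartite):
    """Prim's algorithm with edge weights d(x, y)^p.

    If `bipartite` is True only edges between different colours are allowed."""
    n = len(points)
    best = [math.inf] * n   # cheapest allowed edge from the current tree to j
    best[0] = 0.0
    in_tree = [False] * n
    total = 0.0
    for _ in range(n):
        i = min((j for j in range(n) if not in_tree[j]), key=best.__getitem__)
        in_tree[i] = True
        total += best[i]
        for j in range(n):
            if not in_tree[j] and (not bipartite or colours[i] != colours[j]):
                best[j] = min(best[j], math.dist(points[i], points[j]) ** p)
    return total


# Hypothetical example: two red and two blue points in the plane, p = 1.
R = [(0.0, 0.0), (1.0, 0.0)]
B = [(0.0, 1.0), (3.0, 0.0)]
points = R + B
colours = ["R"] * len(R) + ["B"] * len(B)

haus = hausdorff(R, B)
cost_union = mst_cost(points, colours, p=1, bipartite=False)     # C^p_MST(R u B)
cost_bipartite = mst_cost(points, colours, p=1, bipartite=True)  # C^p_MST(R, B)
print(haus, cost_union, cost_bipartite)
```

In this example the unconstrained tree uses the red-red edge of length $1$, which the bipartite tree must replace by a longer red-blue edge, so the inequality is strict.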

Lemma 2.5.

Let

![]() $p\gt 0$

. There exists a constant

$p\gt 0$

. There exists a constant

![]() $C = C(p) \in (0, \infty )$

such that, for finite sets

$C = C(p) \in (0, \infty )$

such that, for finite sets

![]() $R$

,

$R$

,

![]() $B \subseteq X$

,

$B \subseteq X$

,

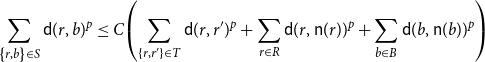

\begin{equation*} \mathcal {C}_{\text{MST}}^p(R, B) \le C \!\left ( \mathcal {C}_{\text{MST}}^p(R) + \sum _{r \in R} \mathsf {d}(B,r)^p + \sum _{b \in B} \mathsf {d}(R,b)^p \right ),\end{equation*}

\begin{equation*} \mathcal {C}_{\text{MST}}^p(R, B) \le C \!\left ( \mathcal {C}_{\text{MST}}^p(R) + \sum _{r \in R} \mathsf {d}(B,r)^p + \sum _{b \in B} \mathsf {d}(R,b)^p \right ),\end{equation*}

and, for some constant

![]() $C\gt 0$

,

$C\gt 0$

,

Proof. For simplicity, we assume that all edge weights are different (otherwise a small perturbation of the weights and a suitable limit gives the claim). Let $T_R$ denote the MST for the vertex set $R$ and fix $\bar{r} \in R$. For every $r \in R$, there exists a unique path in $T_R$ with minimal length connecting $r$ to $\bar{r}$. We associate to every $r \in R\setminus \left \{ \bar{r} \right \}$ the first edge $e(r)$ of such a path (so that $r \in e(r)$). The correspondence $r \mapsto e(r)$ is a bijection between $R \setminus \left \{ \bar{r} \right \}$ and the edges of $T_R$.

We use this correspondence to define a connected spanning graph $S$ (not necessarily a tree) on the bipartite graph with independent sets $R$, $B$. For every $r \in R \setminus \left \{ \bar{r} \right \}$, if $e(r) = \left \{ r, r^{\prime} \right \}$, we add the edge $\left \{ \mathsf{n}(r), r^{\prime} \right \}$ to $S$, where $\mathsf{n}(r) = \mathsf{n}_G(r) \in B$ and $G$ is the complete bipartite graph with independent sets $R$, $B$. Moreover, for every $r \in R$, $b \in B$ we also add the edges $\left \{ r, \mathsf{n}(r) \right \}$, $\left \{ b, \mathsf{n}(b) \right \}$.

$S$ is connected because every $b \in B$ is connected to $R$ and the vertex set $R$ is connected: any path on $T_R$ naturally corresponds to a path on $S$ using the pair of edges $\left \{ r, \mathsf{n}(r) \right \}$, $\left \{ \mathsf{n}(r), r^{\prime} \right \}$ instead of an edge $e(r) = \big\{ r,r^{\prime} \big\}$. The triangle inequality gives

for some constant $C = C(p)\ge 1$, hence

\begin{equation*} \sum _{ \left \{ r,b \right \} \in S} \mathsf {d}(r,b)^p \le C \!\left ( \sum _{\{ r,r^{\prime}\} \in T_R} \mathsf {d}(r, r^{\prime})^p + \sum _{r \in R} \mathsf {d}(r,\mathsf {n}(r))^p + \sum _{b \in B} \mathsf {d}(b,\mathsf {n}(b))^p \right )\end{equation*}

and the first claim follows. Taking the $p$-th root of both sides and letting $p \to \infty$ yields the second inequality.
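The construction of $S$ in this proof can be sketched as follows. The Python code builds $T_R$ with Prim's algorithm rooted at $\bar r$ (taken to be the first point of $R$), so that the first edge $e(r)$ of the path from $r$ to $\bar r$ is $\{r, \mathrm{parent}(r)\}$, and then collects the three families of edges. The check at the end uses the elementary constant $C_0 = \max\{1, 2^{p-1}\}$ in $(s+t)^p \le C_0 (s^p + t^p)$, which yields the bound with $C = C_0 + 1$; this explicit constant, the Euclidean setting and all names are assumptions of the sketch, not statements from the paper.

```python
import math


def prim_parents(points, p):
    """Prim's algorithm on the complete graph with weights d(x, y)^p.

    Returns (cost, parent), where parent[i] is the neighbour of i on the
    tree path towards the root (index 0); parent[0] is None."""
    n = len(points)
    best = [math.inf] * n
    best[0] = 0.0
    parent = [None] * n
    in_tree = [False] * n
    cost = 0.0
    for _ in range(n):
        i = min((j for j in range(n) if not in_tree[j]), key=best.__getitem__)
        in_tree[i] = True
        cost += best[i]
        for j in range(n):
            w = math.dist(points[i], points[j]) ** p
            if not in_tree[j] and w < best[j]:
                best[j], parent[j] = w, i
    return cost, parent


def nearest(x, candidates):
    """Index of the point of `candidates` closest to x."""
    return min(range(len(candidates)), key=lambda j: math.dist(x, candidates[j]))


def build_S(R, B, p):
    """Edge set S of the proof, as pairs (index in R, index in B)."""
    _, parent = prim_parents(R, p)
    n_R = [nearest(r, B) for r in R]  # n(r), a point of B
    n_B = [nearest(b, R) for b in B]  # n(b), a point of R
    S = set()
    for i in range(len(R)):
        S.add((i, n_R[i]))                 # edge {r, n(r)}
        if parent[i] is not None:
            S.add((parent[i], n_R[i]))     # edge {n(r), r'} replacing e(r) = {r, r'}
    for j in range(len(B)):
        S.add((n_B[j], j))                 # edge {b, n(b)}
    return S


def is_connected(S, size_R, size_B):
    """Check that S connects all of R and B (blue node j is numbered size_R + j)."""
    parent = list(range(size_R + size_B))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for i, j in S:
        parent[find(i)] = find(size_R + j)
    return len({find(x) for x in range(size_R + size_B)}) == 1


# Hypothetical example in the plane with p = 2.
R = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
B = [(0.0, 1.0), (2.0, 1.0)]
p = 2
S = build_S(R, B, p)
cost_S = sum(math.dist(R[i], B[j]) ** p for i, j in S)
mst_R, _ = prim_parents(R, p)
sum_R = sum(math.dist(r, B[nearest(r, B)]) ** p for r in R)  # sum of d(B, r)^p
sum_B = sum(math.dist(b, R[nearest(b, R)]) ** p for b in B)  # sum of d(R, b)^p
bound = (max(1.0, 2.0 ** (p - 1)) + 1.0) * (mst_R + sum_R + sum_B)
print(sorted(S), is_connected(S, len(R), len(B)), cost_S, bound)
```

Since $S$ is a connected spanning subgraph of the complete bipartite graph, its cost is an upper bound for $\mathcal{C}_{\text{MST}}^p(R, B)$, which is how the first inequality of the lemma follows.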

Remark 2.6. In the Euclidean setting $X={\mathbb{R}}^d$, it is known (see [Reference Steele28]) that, if $p\lt d$, there exists a constant $C =C(d,p)\gt 0$ such that

for any $V \subseteq [0,1]^d$. A similar uniform bound cannot be true in the bipartite case, as simple examples show.

A second fundamental difference between the usual Euclidean MST and its bipartite variant is that, for the latter, the maximum vertex degree need not be uniformly bounded by a constant $C = C(d)\gt 0$ (again, examples are straightforward). The following result will be crucial to provide an upper bound in the random case. We say that $Q \subseteq{\mathbb{R}}^d$ is a cube if $Q = \prod _{i=1}^d (x_i, x_i+a)$ with $x = (x_i)_{i=1}^d \in{\mathbb{R}}^d$, where $a\gt 0$ is its side length. The diameter of $Q$ is then $\sqrt{d}a$ and its volume $|Q| = a^d$.
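One possible example for both differences noted in Remark 2.6 (our own choice, not necessarily the one the authors have in mind) takes a single red point at a corner of $[0,1]^d$ and $n$ blue points packed near the opposite corner. With $|R| = 1$ the only spanning tree of the complete bipartite graph is the star centred at the red point, so its cost grows linearly in $n$ and the degree of the red point equals $n$. The Python sketch below evaluates this configuration; the function name and the specific coordinates are hypothetical.

```python
import math


def star_example(n, d=2, p=1.0):
    """Bipartite MST cost and maximum degree for R = {origin} and n blue
    points close to the opposite corner (1, ..., 1) of [0,1]^d."""
    r = (0.0,) * d
    # Distinct blue points: only the first coordinate varies, within [0.99, 1).
    B = [tuple(1.0 - 0.01 * (j + 1) / n if i == 0 else 1.0 for i in range(d))
         for j in range(n)]
    # The star centred at r is the unique spanning tree when |R| = 1.
    cost = sum(math.dist(r, b) ** p for b in B)
    degree_of_r = len(B)
    return cost, degree_of_r


for n in (10, 100, 1000):
    print(n, star_example(n))
```

By contrast, in the non-bipartite problem the blue points would be joined to each other by very short edges, and only one long edge towards the red point would be needed.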

Lemma 2.7.

Let $R$, $B \subseteq X$, let $T$ be an MST on the bipartite graph with independent sets $R$, $B$ and edge weight $w(x,y) = \mathsf{d}(x,y)$ and let $\left \{ r,b \right \} \in T$ with $\delta \,:\!=\, \mathsf{d}(r,b) \gt \mathsf{d}(R,B)$. Then $S \cap R = \emptyset$, where

In particular, when $X = [0,1]^d$, $S$ contains a cube $Q\subseteq [0,1]^d$ with volume

Proof. Assume by contradiction that there exists

![]() $r^{\prime} \in S \cap R$

, and consider the two connected components of the disconnected graph

$r^{\prime} \in S \cap R$

, and consider the two connected components of the disconnected graph

![]() $T \setminus \left \{ r,b \right \}$

. If

$T \setminus \left \{ r,b \right \}$

. If

![]() $r^{\prime}$

is in the same component as

$r^{\prime}$

is in the same component as

![]() $r$

, then adding

$r$

, then adding

![]() $\left \{ r^{\prime}, b \right \}$

to

$\left \{ r^{\prime}, b \right \}$

to

![]() $T \setminus \left \{ r,b \right \}$

yields a tree (hence, connected) with strictly smaller cost, since

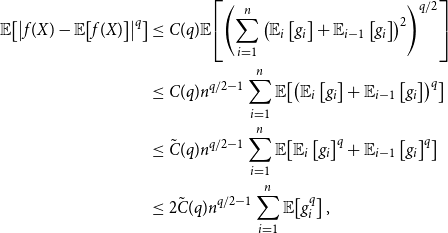

$T \setminus \left \{ r,b \right \}$

yields a tree (hence, connected) with strictly smaller cost, since

![]() $\mathsf{d}(r^{\prime},b) \lt \delta$

, hence a contradiction. If

$\mathsf{d}(r^{\prime},b) \lt \delta$

, hence a contradiction. If

![]() $r^{\prime}$

is in the same component as

$r^{\prime}$

is in the same component as

![]() $b$

, then adding

$b$

, then adding

![]() $\left \{ \mathsf{n}(r), r^{\prime} \right \}$

to

$\left \{ \mathsf{n}(r), r^{\prime} \right \}$

to

![]() $T\setminus \left \{ r,b \right \}$

again yields a tree with strictly smaller cost, since the triangle inequality gives

$T\setminus \left \{ r,b \right \}$

again yields a tree with strictly smaller cost, since the triangle inequality gives

To prove the last statement, notice first that by convexity of $[0,1]^d$, the point $x$ on the segment connecting $r$ and $b$ at distance $(\delta - \mathsf{d}(R,B))/2$ from $r$ belongs to $[0,1]^d$. Moreover, the open ball centred at $x$ with radius $(\delta - \mathsf{d}(R,B))/2$ is entirely contained in $S$. Finally, the intersection of $[0,1]^d$ with any ball of radius $u\ge 0$ and centre $x \in [0,1]^d$ contains at least a cube of side length $\min \{ u/\sqrt{d}, 1\}$ (the worst case is in general when $x$ is a vertex of $[0,1]^d$).
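The last step can be made explicit. The following Python sketch (an illustration under our own choice of cube, not the authors' construction) slides, in each coordinate, an interval of length $s = \min\{u/\sqrt{d}, 1\}$ containing $x_i$ so that it stays inside $[0,1]$. Every point of the resulting cube differs from $x$ by at most $s$ in each coordinate, hence lies within distance $s\sqrt{d} \le u$ of $x$.

```python
import math
from itertools import product


def cube_in_ball(x, u):
    """Lower corner and side length of a cube contained in [0,1]^d whose
    points all lie within distance u of x (x is assumed to be in [0,1]^d)."""
    d = len(x)
    side = min(u / math.sqrt(d), 1.0)
    # Centre an interval of length `side` at x_i, then clamp it into [0, 1];
    # after clamping it still contains x_i.
    lower = tuple(min(max(xi - side / 2, 0.0), 1.0 - side) for xi in x)
    return lower, side


# Hypothetical example in dimension 3, with x on the boundary of the unit cube.
x, u = (0.0, 1.0, 0.5), 0.9
lower, side = cube_in_ball(x, u)
corners = [tuple(l + bit * side for l, bit in zip(lower, bits))
           for bits in product((0, 1), repeat=len(x))]
print(lower, side, max(math.dist(x, c) for c in corners))
```

When $x$ is a vertex of $[0,1]^d$, the far corner of the cube is at distance exactly $s\sqrt{d}$ from $x$, which is why the vertex is the worst case.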

2.3. Probabilistic estimates

In this section we collect some basic probabilistic bounds on distances between i.i.d. uniformly distributed random variables $(X_i)_{i=1}^n$ on a cube $Q \subseteq{\mathbb{R}}^d$. Some of these facts are well known, especially for $d=1$, since they are related to order statistics, but we provide here short proofs for completeness. The basic observation is that, for every $x\in{\mathbb{R}}^d$, $0 \le \lambda \le \big( |Q|/\omega _d \big)^{1/d}$, we have

hence, by independence,

If $x \in Q$, we also have the upper bound (the worst case being $x$ a vertex of $Q$)

hence,

Assume $Q = [0,1]^d$. The standard layer-cake formula $\mathbb{E}\!\left [ Z^p \right ] = \int _0^\infty \mathbb{P}(Z\gt \lambda ) p \lambda ^{p-1} d \lambda$ yields, for every $p\gt 0$, the existence of a constant $C =C(d,p)\gt 0$ such that, for every $n\ge 1$,

For $A \subseteq [0,1]^d$, write $N(A) = \sum _{i=1}^n I_{A}(X_i)$. Then $N(A)$ has binomial law with parameters $(n, |A|)$. In particular, for every $t\gt 0$,

An application of Markov’s inequality yields that, letting

then, for every $t\gt 1$, it holds

and, for $t\lt 1$,

We need some uniform bounds on $N(Q)$ for every cube $Q \subseteq [0,1]^d$ with sufficiently large or small volume. We write for brevity, for $v \ge 0$,

and

Lemma 2.8.

Let $(X_i)_{i=1}^n$ be i.i.d. uniformly distributed on $[0,1]^d$. For every $v\in (0,1)$, there exists $C = C(v,d) \ge 1$ such that, for every $n \ge C$, if $t\gt 2^{2d}$, then

while, if $t\lt 2^{-2d}$,

with $F$ as in (2.10).

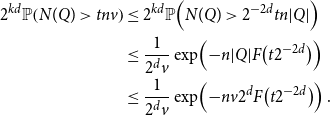

Proof. We prove (2.13) first. Let $k \in \mathbb{Z}$ be such that

so that every cube $Q$ with $|Q| =v$ is contained in a dyadic cube $\prod _{\ell =1}^d ( n_\ell 2^{-k}, (n_\ell +1)2^{-k})$, with $(n_\ell )_{\ell =1}^d \in \big\{ 0,\ldots, 2^{k}-1 \big\}^d$. It is then sufficient to consider the event $N(Q) \gt t nv$ for at least one such dyadic cube, i.e., using the union bound (2.11), we bound from above

Using that, for a dyadic cube with $|Q| = 2^{-dk}$,

it follows that (2.11) applies with $2^{-2d}t$ instead of $t$, yielding

\begin{equation*} \begin{split} 2^{kd} \mathbb{P}\!\left ( N(Q) \gt t nv \right ) & \le 2^{kd} \mathbb{P}\!\left ( N(Q) \gt 2^{-2d} t n| Q| \right ) \\ & \le \frac{1}{2^d v} \exp \!\left ( - n|Q| F\big( t 2^{-2d}\big) \right )\\ & \le \frac{1}{2^d v} \exp \!\left ( - n v 2^{d} F\big( t 2^{-2d}\big) \right ). \end{split} \end{equation*}

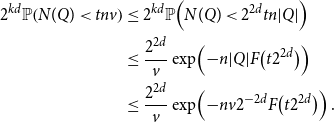

The argument for (2.14) is analogous. Let $k \in \mathbb{Z}$ be such that

hence every cube $Q$ with $|Q| \ge v$ contains at least one dyadic cube with volume $2^{-kd}$, and we are reduced to consider the event that for such a cube $N(Q) \lt t nv$, i.e.,

Using that

it follows that (2.12) applies with $2^{2d}t$ instead of $t$, yielding

\begin{equation*} \begin{split} 2^{kd} \mathbb{P}\!\left ( N(Q) \lt t nv \right ) & \le 2^{kd} \mathbb{P}\!\left ( N(Q) \lt 2^{2d} t n |Q| \right ) \\ & \le \frac{ 2^{2d}}{v} \exp \!\left ( - n |Q| F\big( t 2^{2d}\big) \right )\\ & \le \frac{ 2^{2d}}{v} \exp \!\left ( - n v 2^{-2d} F\big( t 2^{2d}\big) \right ). \end{split} \end{equation*}
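The single-set bounds (2.11) and (2.12) invoked in the proof above are not reproduced in the text here. Under the assumption that they are the usual Chernoff bounds for a binomial variable, namely $\mathbb{P}(N(A) \gt t n|A|) \le \exp(-n|A| F(t))$ for $t \gt 1$ and $\mathbb{P}(N(A) \lt t n|A|) \le \exp(-n|A| F(t))$ for $t \lt 1$, with $F(t) = t \log t - t + 1$ in (2.10), they can be compared with exact binomial tails. The Python sketch below does this for one choice of parameters; the form of $F$, the function names and the numbers are assumptions.

```python
import math


def F(t):
    """Assumed rate function for (2.10): t log t - t + 1, extended by F(0) = 1."""
    return 1.0 if t == 0 else t * math.log(t) - t + 1.0


def binom_pmf(n, q, j):
    """P(N = j) for N binomial with parameters (n, q)."""
    return math.comb(n, j) * q ** j * (1.0 - q) ** (n - j)


def upper_tail(n, q, threshold):
    """Exact P(N > threshold)."""
    return sum(binom_pmf(n, q, j) for j in range(n + 1) if j > threshold)


def lower_tail(n, q, threshold):
    """Exact P(N < threshold)."""
    return sum(binom_pmf(n, q, j) for j in range(n + 1) if j < threshold)


# Hypothetical parameters: n points, a set A of volume q, so that E[N(A)] = n q.
n, q = 200, 0.1
for t in (2.0, 3.0):
    print(t, upper_tail(n, q, t * n * q), math.exp(-n * q * F(t)))
for t in (0.5, 0.25):
    print(t, lower_tail(n, q, t * n * q), math.exp(-n * q * F(t)))
```

With this choice of $F$ one has $F(t) \to 1$ as $t \to 0$ and $F(t) \to \infty$ as $t \to \infty$, which matches how $F$ is used in the proof of Proposition 2.9.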

In the following result we investigate the random variable (for $n \ge 2$)

where $\mathsf{n}(X_i)$ denotes the closest point to $X_i$ among $\left \{ X_j \right \}_{j \neq i}$, so that

Heuristically, since $|X_i-\mathsf{n}(X_i)| \sim n^{-1/d}$, and the random variables are almost independent, we still expect that $M_n \sim n^{-1/d}$, up to logarithmic factors. This is indeed the case.
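The heuristic can be explored with a quick simulation. The Python sketch below assumes that $M_n = \max_{i} |X_i - \mathsf{n}(X_i)|$ (the display defining $M_n$ is not reproduced above), samples uniform points in $[0,1]^d$ and compares $M_n$ with the scale $(\log n / n)^{1/d}$. The seed, the sample sizes and the function name are arbitrary choices, and a single run per $n$ is of course only indicative.

```python
import math
import random


def max_nearest_neighbour_distance(points):
    """Assumed M_n: the largest distance from a point to its nearest
    neighbour among the other points (brute force, O(n^2))."""
    return max(
        min(math.dist(x, y) for j, y in enumerate(points) if j != i)
        for i, x in enumerate(points)
    )


rng = random.Random(0)  # fixed seed, for reproducibility only
d = 2
for n in (50, 200, 800):
    points = [tuple(rng.random() for _ in range(d)) for _ in range(n)]
    m_n = max_nearest_neighbour_distance(points)
    scale = (math.log(n) / n) ** (1 / d)
    print(n, m_n, m_n / scale)
```

If the heuristic is right, the ratio printed in the last column should stay of order one as $n$ grows, rather than drift to zero or infinity.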

Proposition 2.9.

Let $n \ge 2$, $(X_i)_{i=1}^n$ be i.i.d. uniformly distributed on $[0,1]^d$. Then, for every $a\gt 0$, there exists a constant $C = C(a,d) \ge 1$ such that

In particular, for every $q\gt 0$, there exists $C =C(d,q)\gt 0$ such that, for every $n \ge 2$,

Proof. It is convenient to replace the Euclidean distance in the definition of $M_n$ with the $\ell ^{\infty }$ distance $|x-y|_\infty = \max _{i=1, \ldots, d} |x_i-y_i|$, i.e., we consider

Using that $|x-y|_\infty \le |x-y| \le \sqrt{d} | x-y|_\infty$, we have $M_n^\infty \le M_n \le \sqrt{d} M_n^\infty$, hence the claim for the Euclidean case follows once it is established for $M_n^\infty$, since we do not aim for a sharp constant $C$.

For every $n$ sufficiently large, we choose $\eta = \eta (d,n) \in ((a+1)2^{2d+1}, (a+1)2^{2d+2})$ such that, defining $\delta = \left ( \eta \log ( n)/{n} \right )^{1/d}$, we have that $1/(3\delta )^d$ is an integer.

Consider a partition of $[0,1]^d$ into cubes $\left \{ Q_j \right \}_{j\in J}$ of volume

with $J = \big\{ 1, \ldots, \delta ^{-d} \big\}$. Fix $\bar{t}$ large enough, in particular such that $\bar{t}\gt 2^{2d}\eta$ and $\eta 2^d F(\bar{t}2^{-2d}/\eta ) \gt a+1$. Lemma 2.8 entails that the event

has probability larger than $1- C/n^a$ for some constant $C = C(d,a)$. Indeed, since $v = \delta ^d = \eta \log (n)/n$, inequality (2.13) yields

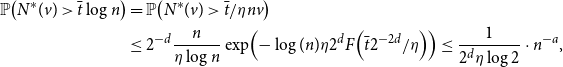

\begin{equation*} \begin{split} \mathbb{P}\!\left( N^*(v) \gt \bar{t} \log n \right) & = \mathbb{P}\!\left ( N^*(v) \gt \bar{t}/\eta nv \right ) \\ & \le 2^{-d} \frac{n}{\eta \log n} \exp \!\left ( - \log (n) \eta 2^d F\!\left(\bar{t}2^{-2d}/\eta \right) \right ) \le \frac{1}{2^{d} \eta \log 2} \cdot n^{-a},\end{split} \end{equation*}

\begin{equation*} \begin{split} \mathbb{P}\!\left( N^*(v) \gt \bar{t} \log n \right) & = \mathbb{P}\!\left ( N^*(v) \gt \bar{t}/\eta nv \right ) \\ & \le 2^{-d} \frac{n}{\eta \log n} \exp \!\left ( - \log (n) \eta 2^d F\!\left(\bar{t}2^{-2d}/\eta \right) \right ) \le \frac{1}{2^{d} \eta \log 2} \cdot n^{-a},\end{split} \end{equation*}

where we used that $\log (n)\gt \log (2)$.
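As a worked check of the last inequality (a sketch that uses only the condition $\eta 2^d F(\bar{t}2^{-2d}/\eta ) \gt a+1$ imposed on $\bar{t}$ and the assumption $n \gt 2$), one can write

\begin{equation*} \begin{split} 2^{-d} \frac{n}{\eta \log n} \exp \!\left ( - \log (n) \eta 2^d F\!\left(\bar{t}2^{-2d}/\eta \right) \right ) & \le 2^{-d} \frac{n}{\eta \log n} \, n^{-(a+1)} \\ & = \frac{n^{-a}}{2^{d} \eta \log n} \le \frac{1}{2^{d} \eta \log 2} \cdot n^{-a}. \end{split} \end{equation*}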

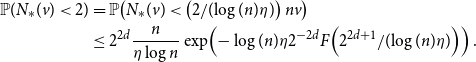

Similarly, by (2.14), with $t = 2/({\log}\,(n) \eta )$ (which is smaller than $2^{-2d}$ for $n$ large enough), it holds

\begin{equation*} \begin{split} \mathbb{P}( N_*(v) \lt 2 ) & = \mathbb{P}\!\left ( N_*(v) \lt \left ( 2/({\log}\,(n) \eta ) \right ) nv \right ) \\ & \le 2^{2d} \frac{n}{\eta \log n} \exp \!\left ( - \log (n) \eta 2^{-2d} F\!\left(2^{2d+1}/({\log}\,(n) \eta )\right) \right ).\end{split} \end{equation*}

For $n$ sufficiently large, it holds $F\!\left ( 2^{2d+1}/({\log}\,(n) \eta ) \right )\gt 1/2$, hence

\begin{equation*} \begin{split} 2^{2d} \frac{n}{\eta \log n} \exp \!\left ( - \log (n) \eta 2^{-2d} F\!\left(2^{2d+1}/({\log}\,(n) \eta )\right) \right ) & \le 2^{2d} \frac{n}{\eta \log n } \exp \!\left ( - \log (n) \eta 2^{-2d-1} \right )\\ & \le \frac{2^{2d}}{\eta n^a}, \end{split} \end{equation*}

using that $\eta \gt (a+1)2^{2d+1}$.
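Spelled out, and under the additional assumption $n \ge 3$ (so that $\log n \ge 1$), the second inequality follows from $\eta 2^{-2d-1} \gt a+1$:

\begin{equation*} \begin{split} 2^{2d} \frac{n}{\eta \log n } \exp \!\left ( - \log (n) \eta 2^{-2d-1} \right ) & = 2^{2d} \frac{n}{\eta \log n } \, n^{-\eta 2^{-2d-1}} \\ & \le 2^{2d} \frac{n}{\eta \log n } \, n^{-(a+1)} = \frac{2^{2d}}{\eta \log n} \, n^{-a} \le \frac{2^{2d}}{\eta n^a}. \end{split} \end{equation*}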

Hence, to prove the thesis, we can assume that $E$ holds. In such a case, it follows immediately that $M_n^\infty \le \delta$, since the $\ell ^\infty$-diameter of a cube $Q_j$ for $j \in J$ is $v^{1/d} = \delta$ and each point belongs to one such cube, where at least one other point can be found. Hence, we deduce

Next, for each $j \in J$, we introduce the random variables

i.e., we maximise the minimum $\ell ^\infty$ distances between points in $Q_j$ and those that are at $\ell ^\infty$ distance at most $\delta$. These are not necessarily in $Q_j$ but must belong to the union of all cubes $Q_\ell$ covering the set

and is easily seen to be a cube of side length $3 \delta$. Notice also that, since we argue in the event $E$, each cube $Q_j$ contains at least two elements, hence

We now use the following fact: for every $f\,:\, \left \{ 1, \ldots, n \right \} \to J$, conditioning upon the event

the random variables $(X_i)_{i=1}^n$ are independent, each $X_{i}$ uniform on $Q_{f(i)}$. Thus, we further disintegrate upon the events $A_f$, and since $E$ holds we consider only $f$’s such that, for every $j\in J$,

We introduce then a subfamily

![]() $K \subseteq J$

consisting of

$K \subseteq J$

consisting of

![]() $(3 \delta )^{-d}$

cubes such that, for

$(3 \delta )^{-d}$

cubes such that, for

![]() $j,k \in K$

, with

$j,k \in K$

, with

![]() $j \neq k$

,

$j \neq k$

,

![]() $Q_{j,\delta } \cap Q_{k,\delta } = \emptyset$

, so that the random variables

$Q_{j,\delta } \cap Q_{k,\delta } = \emptyset$

, so that the random variables

![]() $(M_{n,k})_{k \in K}$

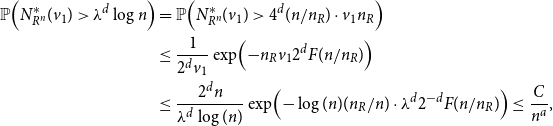

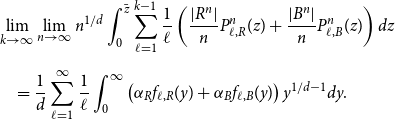

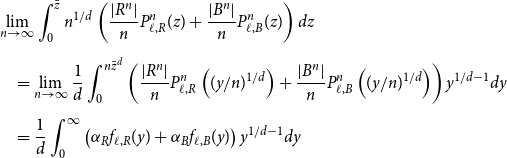

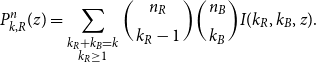

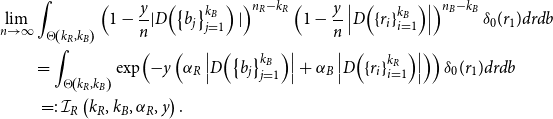

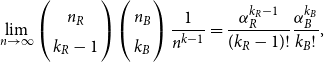

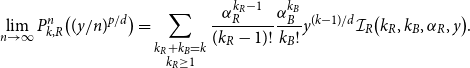

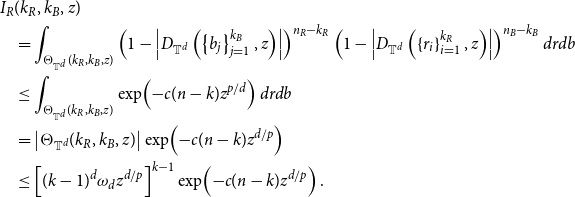

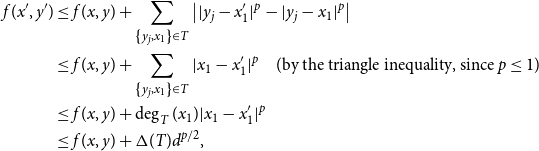

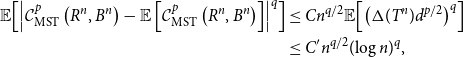

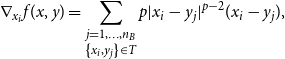

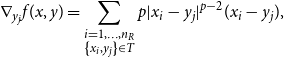

are independent (after conditioning upon