- ASF: animal-sourced food
- GHG: greenhouse gas
- LRNI: lower reference nutrient intake
- NDNS: National Diet and Nutrition Survey
- P:E: protein:energy expressed as % energy as protein
- RNI: reference nutrient intake
- SACN: Scientific Advisory Committee on Nutrition
- tHcy: homocysteine

The problem

Reductions in greenhouse gas (GHG) emissions by 2050 of ≥50% globally and ≥80% in the developed world are required to avoid dangerous climate change(Reference Metz, Davidson, Bosch, Dave and Meyer1). The 2008 UK Climate Change Act put in place legally-binding legislation to ensure such reductions through action in the UK and abroad to reduce CO2 emissions by ≥26% by 2020 and 80% by 2050 against a 1990 baseline(2).

The food system produces emissions at all stages in its life cycle, from the farming process itself to manufacture, distribution and cold storage through to food preparation and consumption in the home and the disposal of waste. Up to the farm gate the dominant GHG are N2O from soil and livestock processes (i.e. from manure, urine and fertiliser, which contribute soil N) and CH4 from ruminant digestion, rice cultivation (albeit not in the UK) and anaerobic soils. CO2 emissions arising from fossil fuel combustion to power machinery and for the manufacture of synthetic fertilisers also contribute, albeit to a lesser extent. However, CO2 resulting from agriculturally-induced land-use change can also add considerably to farm-stage impacts(Reference Bellarby, Foereid and Hastings3).

The supply of food to UK consumers has been estimated to contribute approximately 160×10^6 t CO2 equivalents, or approximately 19% of the UK's total GHG emissions, with agriculture accounting for approximately half this level (8·5%)(Reference Garnett4). This estimate is relatively conservative, since another EU study has found that food accounts for >30% of the EU's emissions(Reference Garnett4).

While the production and consumption of all foods contributes to GHG emissions, it is increasingly recognised that livestock's contribution to the total food burden is dominant(Reference Garnett5). FAO estimates that globally the livestock system accounts for 18% of GHG emissions(6). An EU-commissioned report puts the contribution of meat and dairy products at approximately 13% of all EU GHG, or half the total impact of food(Reference Tukker, Huppes and Guinée7). For the UK it has been estimated (T Garnett, unpublished results) that currently meat and dairy consumption (the supply to the UK) gives rise to approximately 60×10^6 t CO2 equivalents, equivalent to approximately 40% of the UK's food-related attributable GHG emissions. The disproportionately high emissions from meat and dairy foods have recently been exemplified by calculations of the GHG emissions in relation to food protein yield within the Swedish economy(Reference Carlsson-Kanyama and Gonzalez8); 1 kg GHG emissions is associated with yields of 162 g protein from wheat, 32 g from milk and only 10 g from beef.
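The Swedish yield figures quoted above can be inverted to give emissions per unit of protein, which may be easier to compare across foods. The following is a minimal sketch using only the three yields cited in the text; the function name and the choice of 100 g protein as the reference unit are illustrative assumptions.

```python
# Protein yield (g) associated with 1 kg GHG emissions, as quoted in the text.
PROTEIN_YIELD_G_PER_KG_GHG = {"wheat": 162, "milk": 32, "beef": 10}


def ghg_per_100g_protein(yield_g_per_kg_ghg):
    """Return kg GHG emissions associated with 100 g protein (illustrative helper)."""
    return 100 / yield_g_per_kg_ghg


for food, protein_yield in PROTEIN_YIELD_G_PER_KG_GHG.items():
    print(f"{food}: {ghg_per_100g_protein(protein_yield):.2f} kg GHG per 100 g protein")
```

On these figures beef is associated with roughly sixteen times the emissions of wheat per unit of protein (162/10).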

Clearly, it might be argued that food is a special case and that GHG reductions in this sector of the economy should be less than in others. However, special pleading is expected from all sectors, so that agriculture will be required to play its part in the reductions. Indeed, global consumption of livestock products is growing, with demand for meat and milk set to double by 2050(6). On this basis, in order that the overall targets of GHG emission reductions are met, overall consumption needs to be reduced by ≥19% by 2020 and by 67% by 2050, i.e. consumption emissions will need to be virtually halved (T Garnett, unpublished results).

Meeting these GHG reduction targets will require changes for the meat and dairy industry and for the UK diet. In fact, total food consumption in the UK is likely to rise, in part because of expected population growth (8% by 2020 and 20% by 2050) and in part because of the obesity epidemic. At an average BMI of 25 kg/m2, each unit increase in BMI represents an approximately 4% increase in individual demand for food energy. Thus, the transition from normal weight (BMI <25 kg/m2) to obesity (BMI 30 kg/m2) contributes to a >20% per capita increase in food consumption and food-related GHG emissions.
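The BMI arithmetic above can be reproduced as follows. This is a sketch only: it assumes, as the text implies, that food energy demand scales in proportion to BMI at constant height, and the function name is hypothetical.

```python
def relative_energy_demand_increase(bmi_from, bmi_to):
    """Fractional increase in food energy demand between two BMI values.

    ASSUMPTION: demand is proportional to BMI (i.e. to body weight at
    constant height). This is a simplification, not a measured relation.
    """
    return (bmi_to - bmi_from) / bmi_from


# One BMI unit at an average BMI of 25 kg/m^2: 1/25
print(f"{relative_energy_demand_increase(25, 26):.0%}")
# Transition from BMI 25 to BMI 30 kg/m^2: 5/25
print(f"{relative_energy_demand_increase(25, 30):.0%}")
```

Starting from any BMI below 25 kg/m2 gives a figure above 20%, consistent with the '>20%' quoted.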

As GHG emissions associated with pork and poultry are less per g protein than those for beef it might be thought that a change from red to white meat consumption is all that is required. However, striking the balance is complicated. Grazing livestock can make use of land unsuited to other purposes and in so doing can help sequester C, i.e. keep C locked up in the soil and even increase the rate of C accumulation. In addition, pigs and poultry are more heavily implicated in soyabean production than ruminants and account for approximately 90% of the soyabean-meal consumption in the EU(9). If CO2 emissions arising from land-use change associated with soyabean production are included in the overall attributable GHG production then the differences between red and white meat production become less.

Nutritional implications of reductions in intakes of meat and dairy foods in the UK diet

Reductions in animal-sourced food (ASF) in general, and red meat and dairy foods in particular, are inevitable, even if the scale is difficult to predict. The purpose of the present paper is to conduct, as far as is possible, an assessment of the risk to nutritional status of the UK population of reductions in meat and dairy foods consumption. The intention is to begin by very briefly reviewing the general dietary influences of meat and dairy foods on health, well-being, morbidity and mortality and then identify the main nutrients at risk from a reduction in intakes of meat and dairy foods and the potential health problems likely to flow from their reduced intakes for which solutions would need to be found.

Current intakes and general advice on meat and dairy foods

According to the UK National Diet and Nutrition Survey (NDNS) of adults(Reference Henderson, Gregory and Swan10, Reference Henderson, Gregory and Irving11) 95% eat meat and 99% consume milk and dairy foods, with <1% pursuing vegan lifestyles avoiding all ASF. ASF account for 30% of energy intake, of which meat at 15% and dairy foods at 10% together account for about 25% (with liquid milk accounting for half of all dairy products), and fish at 3% and eggs at 2% account for the remainder. Current daily intakes of meat and meat products at 204 g/d for men and 135 g/d for women are an overestimate of actual carcass meat intakes. Recent estimates of red and processed meat intakes, as indicated by the NDNS, are approximately 88 g/d for men and 52 g/d for women, i.e. 70 g/d on average(12).

There are no specific recommendations for intakes of meat or dairy foods within the UK. The ‘eatwell plate’ shows milk and dairy foods and meat, fish and alternatives comprising two of the five main food groups, representing about 13 and 14% of the plate area respectively(13). For meat ‘the balance of good health’ states: ‘Eat moderate amounts, choose lower fat versions and use smaller quantities of meat in dishes’(13). Also, high consumers of red meat are asked to consider a reduction to reduce colo-rectal cancer risk(14). As will be discussed later, this advice has recently been strengthened(12). Milk is not recommended for infants <1 year of age but is recommended for young children aged 1–5 years, in terms of full-fat milk at approximately 300 ml/d(13). Low-fat milk and dairy foods are also recommended for pregnant women on the basis of Ca provision(13).

Meat in the diet and overall health

The benefit of meat as a rich source of bioavailable macro- and micronutrients is clear but it needs to be balanced against its potential adverse influences on health. Many meat products are a major source of saturated fat in the diet and would be expected to be linked to the circulatory diseases associated with increasing BMI. Red and processed meat consumption has been associated with an increased risk of colo-rectal cancer(12, 14, Reference Marmot, Atinmo and Byers15).

Risk assessment of meat consumption by the adult population has proved to be difficult, in that avoidance of meat is usually associated with lifestyle and socio-economic factors that generally have strong positive influences on health and well-being. As a result, in surveys examining vegetarians the meat-eating controls are usually selected from similar population groups that also have generally healthy lifestyles with only moderate average meat intakes and with relatively high intakes of fruit and vegetables. Thus, the standardised all-cause mortality rate is often much lower than that of the general UK population; e.g. 52% in the EPIC-Oxford Study(Reference Key, Appleby and Spencer16). For such populations no influence of meat consumption on mortality is observed apart from a slightly but non-significantly higher mortality from IHD consistent with a slightly higher BMI and associated higher systolic blood pressure and serum LDL-cholesterol(Reference Key, Appleby and Spencer16, Reference Key, Fraser and Thorogood17).

As indicated earlier several major reports have concluded that the evidence is convincing for a causal influence of high intakes of red and processed meat on colo-rectal cancer(12, 14, Reference Marmot, Atinmo and Byers15). However, this relationship is not observed in the ‘healthy’ meat eaters within the EPIC-Oxford cohort of vegetarians and meat eaters(Reference Key, Appleby and Spencer18). For this cohort the incidence of colo-rectal cancer is lower in meat eaters than vegetarians, a finding that the authors have not been able to explain. The Scientific Advisory Committee on Nutrition (SACN) has reviewed the relationship and concludes that while the available evidence does suggest that red and processed meat intake is probably associated with increased colo-rectal cancer risk, some uncertainty remains and it is not possible to identify any dose response or threshold level because of limitations in the data(12). Thus, SACN derives pragmatic recommendations that red and processed meat intakes should be reduced for high consumers to a maximum of 70 g/d, the current average intake. The extent to which this recommendation would reduce current overall intakes is not clear in the report.

For children the literature on the influence of meat intakes is surprisingly limited. In the developed countries the growth of children with minimal amounts of dietary meat or with meat-free lacto-ovo vegetarian diets is virtually indistinguishable from that of the omnivore healthy population(Reference Sanders19). For vegan children the lack of meat and all other ASF can be problematic in terms of dietary energy density in the preschool years, with slower growth observed(Reference O'Connell, Dibley and Sierra20), but after this age their growth in the USA(Reference O'Connell, Dibley and Sierra20) and UK(Reference Sanders and Manning21) differs only very slightly from that of reference populations (e.g. 0·1 sd for height at 10 years(Reference O'Connell, Dibley and Sierra20)).

Considerable publicity was recently given to an intervention with meat as one of three school snacks (the others being vegetable stew and milk) provided to Kenyan schoolchildren, of which the meat was reported to boost growth(Reference Grillenberger, Neumann and Murphy22) and cognitive development(Reference Whaley, Sigman and Neumann23). One of the principal investigators was quoted as saying that ‘Bringing up children as vegans is unethical’(Reference Hopkin24). However, the results of this intervention, which have yet to be published in full, are problematic since it would appear that because of two periods of severe food shortage during the study(Reference Grillenberger, Neumann and Murphy22) food intake at home declined for the children given snacks but not in the no-snack control group, and only for the meat group was there a significant increase in energy intakes(Reference Murphy, Gewa and Liang25). This factor may explain the failure to observe any significant impact on height growth in any of the children and why the impact of the three snacks on growth was mainly limited to an increase in muscle mass in the meat groups(Reference Grillenberger, Neumann and Murphy22).

Dairy products in the diet and overall health

With the exceptions of the adverse impact of unmodified cow's milk on Fe status in infants <1 year and the general acceptance of the adverse influences of the saturated fat in full-fat dairy products for older children and adults, dairy products are generally judged to be important beneficial components of the diet, with an important social history in relation to free school milk and the improvement of child growth in the UK and India(Reference Waterlow26). Height growth is one outcome of the insulin-like growth factor-1-mediated anabolic drive of dietary amino acids on the organism, well established in animal studies(Reference Millward and Rivers27–Reference Yahya and Millward29), and intakes of milk but not meat or vegetable protein in children have been shown to be associated with height and serum insulin-like growth factor-1 concentration(Reference Hoppe, Mølgaard and Michaelsen30, Reference Hoppe, Udam and Lauritzen31).

However, a central plank of the anti-dairy argument is that because lactose tolerance tends to occur mainly in Caucasian populations of northern European descent(Reference Vesa, Marteau and Korpela32), with most of the rest of the world lactose intolerant, milk and dairy foods are not necessary for human subjects after the age of weaning(Reference Lanou33). Indeed, when this argument is put together with the evidence of a link of milk with prostate cancer and autoimmune diseases (type 1 diabetes and multiple sclerosis), with the childhood ailments of cow's milk allergy and otitis media and the cross-cultural direct relationship between dairy food intakes and osteoporosis and fracture rates, it has been concluded that ‘Vegetarians will most likely have healthier outcomes for a host of chronic diseases by limiting or avoiding milk and other dairy products’ and that ‘All nutrients found in milk are readily available in healthier foods from plant sources’(Reference Lanou33). However, this position has been robustly challenged(Reference Weaver34). In fact there are possible benefits of milk for colo-rectal cancer that may balance any risk for prostate cancer(Reference Marmot, Atinmo and Byers15). Also, the general issue of lactose intolerance is quite complex, with milk of traditional importance in the diets of several African and Indian population groups(Reference Waterlow26) and fermented dairy foods serving as traditional staples of the diets of many lactose-intolerant populations who appear to be able to adapt colonic microflora enabling induction of tolerance(Reference Jackson and Savaiano35, Reference Hertzler and Savaiano36). Thus, risk assessment for overall influences of milk and dairy on health is a difficult task given the polarity of many of the reviewers in the area.

Nutrient intakes from meat and dairy foods

In terms of nutrient provision the key characteristic of ASF is that they are rich sources of both macro- and micronutrients. Table 1 shows nutrients from meat and meat products and all dairy foods with a nutrient index (food nutrient (% total nutrient intake)/food energy (% total energy intake)) value of ≥1·5, i.e. those that are disproportionately provided by the food. The listings in Table 1 relate to food groups as analysed in the NDNS(Reference Henderson, Gregory and Swan10, Reference Henderson, Gregory and Irving11, Reference Henderson, Irving and Gregory37) (i.e. meat and meat products, milk and milk products), which could underestimate potential nutrient availability from carcass meat of several of the nutrients shown and overestimate the provision of Na and SFA, which derive to some extent from additions made in meat processing and manufacture of meat products. Of the nutrients listed the analysis here will be limited to those judged to be at potential risk, i.e. haem-Fe, protein and Zn in relation to reduced intake of meat and Ca, iodine, vitamin B12 and riboflavin in relation to reduced intakes of dairy foods.
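The nutrient index defined above is a simple ratio of two percentage shares. A minimal sketch follows, with wholly hypothetical input values (the Table 1 values are not reproduced here) and an illustrative function name.

```python
def nutrient_index(pct_total_nutrient_intake, pct_total_energy_intake):
    """Nutrient index = the food's share of total nutrient intake (%)
    divided by the food's share of total energy intake (%)."""
    if pct_total_energy_intake <= 0:
        raise ValueError("energy share must be positive")
    return pct_total_nutrient_intake / pct_total_energy_intake


# Hypothetical example: a food group supplying 10% of energy and 30% of a nutrient.
index = nutrient_index(30.0, 10.0)
print(index, index >= 1.5)  # the second value tests the >=1.5 threshold used for Table 1
```

An index of 1·0 would mean that the food supplies the nutrient exactly in proportion to the energy it supplies; the threshold of 1·5 selects nutrients supplied at least 50% above that proportion.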

Table 1. Major nutrients provided by meat and dairy foods in the UK diet

* Food nutrient (% total nutrient intake)/food energy (% total energy intake). Values from the UK adult National Diet and Nutrition Survey(Reference Henderson, Gregory and Swan10, Reference Henderson, Gregory and Irving11, Reference Henderson, Irving and Gregory37).

Iron

Although there is a large literature on Fe nutrition and the importance of meat, the likely impact of changes in meat intake on Fe supplies, status, prevalence of deficiency and overall health is nevertheless difficult to judge. However, Fe and health in the UK and the role of red meat is the subject of a recent comprehensive review(12), which was conducted to examine the implications of a previous recommendation(14) that ‘higher consumers should consider a reduction in red and processed meat consumption’ because of evidence that intakes of red and processed meat are associated with colo-rectal cancer. Here the intention is to highlight the main issues, all of which are discussed in more detail in the SACN report(12).

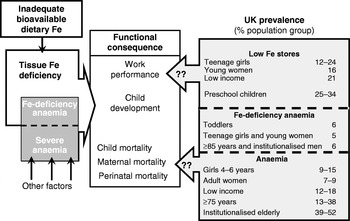

The key issues are illustrated in Fig. 1. Inadequate intakes of sufficiently bioavailable Fe can result in tissue Fe deficiency. Lack of tissue Fe is one cause of anaemia (Fe-deficiency anaemia), with anaemia also reflecting vitamin B12 and folate deficiency, genetic disorders and the inflammatory response. The functional consequences of severe Fe deficiency and anaemia can be impaired work performance in adults, poor motor and possibly cognitive development in children and ultimately, if the deficiency and anaemia are severe enough, increased perinatal, maternal and child mortality(12, Reference Stoltzfus38). According to the NDNS(Reference Henderson, Gregory and Swan10, Reference Henderson, Gregory and Irving11, Reference Henderson, Irving and Gregory37), there is an obvious problem with both low Fe intakes and poor Fe status and anaemia for several population groups in the UK. Thus, very low Fe intakes (intakes lower than the lower reference nutrient intake (LRNI)) are observed in 12–24% of children aged 1·5–3·5 years, 44–48% of girls aged 11–18 years and 25–40% of women aged 19–49 years(39). For low-income families 50% of premenopausal women aged 19–49 years have intakes below the LRNI(39). As shown in Fig. 1, the prevalence of low Fe stores and anaemia is high for several population groups, with Fe-deficiency anaemia being observed in ≥5% of toddlers, teenage girls and young women and some elderly groups. The problem lies in estimating whether the low Fe intakes and high prevalence of poor Fe status do impact on health in the ways shown in Fig. 1(Reference Stoltzfus38). Since there is very little evidence of actual Fe deficiency in the UK in terms of functional outcomes or poor health, if either the cut-off points used to judge status (plasma ferritin) or the dietary reference values for Fe are set too high then the problem can be judged to be more apparent than real.

Fig. 1. Is iron deficiency a concern in the UK? (Modified from Stoltzfus(Reference Stoltzfus38), with UK statistics from Scientific Advisory Committee on Nutrition(12).)

In reviewing the problem of assessing Fe status SACN has concluded that while Hb (functional Fe) and ferritin (Fe stores) are considered to be the most useful indicators of Fe status(12), ‘Fe status is essentially a qualitative concept. It cannot be precisely quantified because of difficulties in determining accurate thresholds for adaptive responses and for adverse events associated with Fe deficiency or excess. The thresholds selected for use are not based on functional outcomes’. As for dietary reference intakes for Fe, the UK values derive from a single 1968 study of Fe losses(Reference Green, Charlton and Seftel40) with additions for growth and blood losses in women, adjusted for a single value for efficiency of utilisation (15%), and with the RNI and LRNI values calculated assuming a CV of 15%. Thus, the efficiency of utilisation or bioavailability of Fe is a key determinant of the RNI and LRNI used to judge the prevalence of dietary Fe deficiency. It is also the key determinant of the importance of meat as an Fe source. However, as will be discussed later, there is now considerable scientific uncertainty about the extent of the superiority of Fe from meat as a dietary source.
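The dependence of the reference values on the assumed efficiency of utilisation can be made concrete with a short sketch. It assumes the usual UK convention that the RNI and LRNI lie 2 sd above and below the mean dietary requirement; this convention, the function name and the absorbed requirement used in the example are assumptions for illustration and are not taken from the text.

```python
def dietary_reference_values(absorbed_requirement_mg, absorption=0.15, cv=0.15):
    """Sketch of how an RNI and LRNI follow from a mean absorbed requirement.

    absorption: single efficiency of utilisation (15% in the text).
    cv: coefficient of variation of the requirement (15% in the text).
    ASSUMPTION: RNI = mean + 2 SD and LRNI = mean - 2 SD.
    """
    ear = absorbed_requirement_mg / absorption  # mean dietary requirement
    sd = cv * ear
    return {"LRNI": ear - 2 * sd, "EAR": ear, "RNI": ear + 2 * sd}


# Hypothetical absorbed requirement of 1.0 mg Fe/d (illustrative value only).
for name, value in dietary_reference_values(1.0).items():
    print(f"{name}: {value:.1f} mg/d")

# Doubling the assumed absorption efficiency halves every reference value.
print(dietary_reference_values(1.0, absorption=0.30)["RNI"])
```

Because the absorbed requirement is divided by the absorption efficiency, any uncertainty in that single value passes directly into the RNI and LRNI, and hence into the estimated prevalence of intakes below the LRNI.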

Iron bioavailability

As no specific excretory pathways exist for Fe in the body, the regulation of Fe homeostasis occurs only through regulation of its absorption and very little net absorption occurs if Fe stores are replete (e.g. <1%(Reference Hunt and Roughead41)). Regulation occurs both at the apical surface of the enterocyte, allowing entry into the mucosal Fe pool (mainly ferritin), and at the basolateral surface, allowing export into the systemic circulation.

Entry of haem- and non-haem-Fe into the mucosal cell involves independent mechanisms and the better bioavailability of haem-Fe reflects its specific (but poorly understood) absorption, which is generally unaffected by other dietary constituents except Ca(Reference Roughead, Zito and Hunt42). In contrast, the divalent Fe transporter DMT1 responsible for free non-haem-Fe2+ uptake into the enterocyte is easily inhibited by accompanying dietary factors in plant food such as phytic acid in cereals, rice, legumes and lentils, polyphenols such as tannic and chlorogenic acids in tea and coffee and other polyphenols in red wine, vegetables and herbs, as well as fibre, possibly Ca(Reference Roughead, Zito and Hunt42, Reference Singh, Sanderson and Hurrell43) and some soyabean proteins(Reference Lonnerdal44). Non-haem-Fe absorption can be optimised, however, by ascorbate, citrate and some spices, by using food preparation methods that reduce food phytate content and importantly by meat(12).

The main locus of overall regulation of Fe absorption appears to be basolateral Fe export from the enterocyte(12). This process involves the circulating peptide hepcidin, which is synthesised in the liver when Fe stores are adequate or high (and during inflammation) and acts to prevent the transfer of Fe from the enterocyte to plasma transferrin. As a result any Fe absorbed into the enterocyte when hepcidin levels are substantial, both haem- and non-haem-Fe, stays within the enterocyte until it is shed into the gut lumen after 1–2 d and lost in the faeces as ferritin(12).

The implication of such a regulatory system is that when the metabolic demand for Fe is high and hepcidin levels fall, Fe within the enterocyte, from whatever dietary source, is exported into the circulating plasma transferrin pool by the Fe exporter ferroportin. Furthermore, if conditions for uptake of non-haem-Fe from the intestinal lumen into the enterocyte are optimised, the efficiency of overall non-haem-Fe absorption can increase 10-fold to approach that of haem-Fe (for example, see Hunt & Roughead(Reference Hunt and Roughead41)). Consequently, Fe stores (serum ferritin) appear to be of much greater importance than dietary composition as the determinant of overall Fe absorption, and in premenopausal women the major determinant of body Fe stores is menstrual blood loss. SACN conclude that the overall effect of enhancers and inhibitors on Fe absorption from habitual diets is considerably less than that predicted from single-meal studies, with the systemic need for Fe, mainly from blood loss, being the main determinant of the amount of dietary Fe absorbed by the body(12).

Meat intake and iron status

Meat intake is a determinant of Fe stores, with lower serum ferritin concentrations in vegetarians(Reference Worthington-Roberts, Breskin and Monsen45–Reference Hunt48). However, Fe stores can fall considerably before there is any measurable anaemia. Furthermore, although low Fe stores are often said to increase the risk of anaemia, vegetarians in developed countries do not have a greater incidence of Fe-deficiency anaemia(Reference Hunt48, Reference Nathan, Hackett and Kirby49). Indeed, in population studies lower serum ferritin concentrations have been associated with a lower risk of heart disease(Reference Salonen, Nyyssonen and Korpela50), a potential benefit of vegetarian and vegan diets. The up-regulation of non-haem-Fe absorption with lower Fe stores may mean that an equilibrium in terms of body Fe can be achieved in subjects with low-meat diets at lower levels of stores than those observed in subjects with high-meat diets without any Fe-related functional consequence. On this basis meat in the diet may be less important in relation to Fe nutrition than is commonly supposed, and reductions in meat intake could occur without substantial risk if attention is paid to optimising non-meat Fe bioavailability.

Indeed, it has proved to be extraordinarily difficult to identify lack of meat intakes specifically as a cause of poor Fe status. In infants the weaning period at 6 months represents a critical period and Fe intakes vary markedly with feeding practices. It is not clear how much meat is consumed at this time in the UK, although meat consumption is recommended by the Department of Health from 6 months to 8 months of age together with fruit, vegetables and/or vitamin C at every meal to increase Fe absorption. Many infant foods and follow-on formulas are fortified with Fe and vitamin C. Thus, low Fe intakes result from inappropriate feeding practices, especially an excessive reliance on unmodified cow's milk, which cannot provide sufficient Fe at this stage. However, 27% of infants are reported as drinking unmodified cow's milk by the age of 9 months(Reference Gregory, Collins and Davies51). A study of children <4 years of age has not identified a lack of meat intake (or any other specific dietary component) as a determinant of anaemia in this age-group, but has shown that a combined low intake (less than the median intake) of cereals, vitamin C and meat is associated with a higher prevalence of anaemia (13%)(Reference Gibson52). Analyses of meat intakes and Fe status in other population groups have generally failed to identify meat intakes per se as primary determinants, but have shown meat to be one of several foods with positive influences(Reference Doyle, Crawley and Robert53). The SACN review of Fe and health(12) concludes that because so few consistent associations could be identified within the NDNS between markers of Fe status and intakes of total Fe, non-haem-Fe, haem-Fe or vitamin C, ‘this would be expected in a population with an adequate supply of dietary Fe’.

In modelling the likely impact on Fe intakes of capping meat intakes at various levels from 180 g/d down to zero, SACN has found very little impact even at zero meat intake (unsurprising given that red meat Fe represents only approximately 10% of total Fe intake)(12). Implicit in this model is the premise that Fe from meat is no more important than non-meat Fe sources.

In the conclusion of the review of Fe and health SACN comment that although most population groups in the UK are Fe replete, health professionals need to be vigilant for poor Fe status in those at risk of Fe deficiency (toddlers, girls and women of reproductive age and adults aged >65 years) and provide medical and dietary advice on how to increase Fe intakes(12). The report argues for a public health approach to increasing Fe intake in terms of a healthy balanced diet with a variety of foods containing Fe, rather than focusing on particular inhibitors or enhancers of the bioavailability of Fe or the use of Fe-fortified foods. In relation to the risk of colo-rectal cancer, SACN argue that lower consumption of red and processed meat would probably reduce this risk, so that as a precaution it may be advisable for intakes of red and processed meat not to increase above the current average (70 g/d) and for high consumers of red and processed meat (≥100 g/d) to reduce their intakes.

Protein

The key feature of dietary meat in relation to protein intakes is that meat intake determines quantity and quality of dietary protein intakes. Lean meat is almost entirely protein so that dietary meat will have a marked influence on overall dietary protein content. Examples of the influence of meat intakes on dietary protein content are shown in Table 2.

Table 2. Protein intakes as a function of the animal-sourced food (ASF) content of the diet

NDNS, National Diet and Nutrition Survey; Health ABC Study, Health, Aging, and Body Composition Study; P:E, protein:energy expressed as % energy as protein; VP, vegetable protein; ASFP, ASF protein; F, females; M, males.

* Weight (g) of meat+fish+eggs+cheese; milk not included (milk represented by far the largest component of ASF by weight and was inversely correlated with meat, fish and eggs, so was not included).

† Top quartile of VP intake, bottom quartile of ASFP intake.

‡ Bottom quartile of VP intake and top quartile of ASFP intake.

§ Cohort from China, Japan, UK and USA.

Within the NDNS of the elderly, trimmed for under-reporters (energy intake <1·35×BMR) and stratified into quartiles of non-milk ASF intakes (meat, fish, cheese and eggs), the mean protein:energy expressed as % energy as protein (P:E) increases significantly (P<0·0001) from 12·3 for quartile 1 to 14·9 for quartile 4, equivalent to absolute protein intakes of 1·03 g/kg per d and 1·25 g/kg per d respectively(Reference Finch, Doyle and Lowe54). (Milk represents by far the largest component of ASF by weight and is inversely correlated with meat, fish and eggs, so is not included.) In a large US study of the elderly stratified into quintiles of protein intakes ASF protein increases from 47·5% of the total for quintile 1 (P:E 10·9) to 66·7% of the total for quintile 5 (P:E 18·6)(Reference Houston, Nicklas and Ding55). In the INTERMAP study of blood pressure in relation to macronutrient intake in an international cohort the P:E for those subjects who mainly consume ASF is 17·4 compared with 13·4 for those with mainly plant-based diets(Reference Elliott, Stamler and Dyer56). Finally, in a comparison of meat eaters with vegans(Reference Haddad, Berk and Kettering57) the P:E is 15–16 for meat eaters compared with 12–13 for vegans.

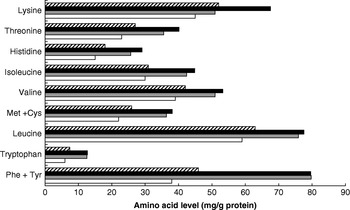

Dietary meat intake will also improve overall dietary protein quality in terms of digestibility. This variable is higher for meat than for many plant protein sources, especially in the case of diets with whole grains and many legumes that may contain anti-nutritional factors that influence digestibility adversely(Reference Millward58, Reference Millward and Jackson59). Thus, for vegetable-based diets in developed countries digestibility is likely to be approximately 80% compared with >95% for ASF and some plant-food-protein isolates. Dietary meat may also improve the amino acid score (a measure of dietary quality in terms of its biological value, the extent to which the amino acid profile of the food protein matches the requirement amino acid profile); however, contrary to popular belief, for many mixed plant-food-based diets the amino acid score is already maximal. A comparison of the amino acid content of the high- and low-ASF diets identified in the INTERMAP study(Reference Elliott, Stamler and Dyer56) with the requirement patterns for adults or 1–2-year-old children(60) (Fig. 2) shows that amounts of the indispensable amino acids exceed the adult requirement pattern for all amino acids including lysine (the limiting amino acid in cereal proteins) and almost meet the requirement of young children. The biological value is likely to be low only in developing societies with poor diets based on cereals, or especially on starchy roots, and little else. On this basis increasing the proportion of ASF in the diet is likely to increase overall quality (digestibility×biological value(Reference Millward58)) from 0·8 (no ASF) to 0·95 (all ASF).
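The quality calculation can be sketched as follows. The two end-points (0·8 and 0·95) are those given above; the treatment of intermediate diets as a protein-weighted average of plant and ASF digestibility is an assumption made for illustration, as are the function and constant names.

```python
PLANT_DIGESTIBILITY = 0.80  # approximate value for vegetable-based diets (from the text)
ASF_DIGESTIBILITY = 0.95    # the text gives >95% for ASF; 0.95 is used as a round figure


def protein_quality(asf_protein_fraction, biological_value=1.0):
    """Overall quality = digestibility x biological value.

    ASSUMPTION: digestibility of a mixed diet is the protein-weighted average
    of the plant and ASF values. Biological value defaults to 1.0 (amino acid
    score already maximal), following the argument in the text.
    """
    if not 0.0 <= asf_protein_fraction <= 1.0:
        raise ValueError("asf_protein_fraction must lie between 0 and 1")
    digestibility = (asf_protein_fraction * ASF_DIGESTIBILITY
                     + (1.0 - asf_protein_fraction) * PLANT_DIGESTIBILITY)
    return digestibility * biological_value


print(protein_quality(0.0))  # no ASF
print(protein_quality(1.0))  # all ASF
print(protein_quality(0.5))  # half of protein from ASF
```

With the biological value fixed at 1·0 the whole difference in quality between diets arises from digestibility, which is the point made in the paragraph above.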

Fig. 2. Amino acid pattern (mg/g protein) of high (▪)- and low-animal-sourced-food diets (data from Elliott et al.(Reference Elliott, Stamler and Dyer56)) and the requirement patterns for adults (□) and children (1–2 years old; data from World Health Organization/Food and Agriculture Organization/United Nations University(60)).

Risk of protein deficiency from low-animal-sourced-food diets

The identification of population groups most likely to be vulnerable to inadequate protein intakes is best achieved by expressing protein requirements relative to energy requirements, the P:E requirement(Reference Millward and Jackson59, 60), which can then be directly compared with the protein density of diets in terms of % protein energy. The mean P:E requirement increases from quite low values in childhood (3·5–5) to approximately 10 in sedentary elderly women because energy requirements fall to a greater extent than protein requirements from childhood into old age. Thus, older adults are the group most vulnerable to low-protein diets. On this basis the prevalence of protein deficiency has been calculated for low- and high-ASF consumers in the UK elderly(Reference Finch, Doyle and Lowe54) identified in Table 2; digestibility-adjusted protein concentrations (as P:E) are 10·4 (0·87 g/kg per d) rising to 13·5 (1·12 g/kg per d).
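The digestibility adjustment behind these figures can be illustrated with a short calculation. The sketch below assumes the values stated in the footnote to Table 3 (digestibility of 0·80 for plant protein and 0·95 for animal protein; vegetable protein:animal protein of 2·12 and 0·45 in the lowest and highest quartiles) and a simple protein-weighted mean; the function names are hypothetical and are not taken from the source reports.

```python
# Digestibility values assumed in the footnote to Table 3.
PLANT_DIGESTIBILITY = 0.80
ANIMAL_DIGESTIBILITY = 0.95


def mixed_diet_digestibility(plant_to_animal_ratio):
    """Protein-weighted mean digestibility for a plant:animal protein ratio."""
    plant_share = plant_to_animal_ratio / (1.0 + plant_to_animal_ratio)
    animal_share = 1.0 - plant_share
    return plant_share * PLANT_DIGESTIBILITY + animal_share * ANIMAL_DIGESTIBILITY


def digestibility_adjusted(value, plant_to_animal_ratio):
    """Scale a P:E value or a protein intake by the diet's mean digestibility."""
    return value * mixed_diet_digestibility(plant_to_animal_ratio)


# Lowest and highest quartiles of non-milk ASF intake (values from the text).
quartiles = {
    "quartile 1 (low ASF)": {"ratio": 2.12, "pe": 12.3, "protein": 1.03},
    "quartile 4 (high ASF)": {"ratio": 0.45, "pe": 14.9, "protein": 1.25},
}

for label, q in quartiles.items():
    d = mixed_diet_digestibility(q["ratio"])
    pe = digestibility_adjusted(q["pe"], q["ratio"])
    protein = digestibility_adjusted(q["protein"], q["ratio"])
    print(f"{label}: digestibility {d:.3f}, P:E {pe:.1f}, protein {protein:.2f} g/kg per d")
```

Under these assumptions the weighted digestibilities come out close to the 0·848 and 0·903 quoted for the two quartiles, and the adjusted P:E values close to 10·4 and 13·5.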

The prevalence of protein deficiency (intakes<requirement) calculated with the algorithm reported in the WHO/FAO protein report(60) is substantial in the lowest quartile at 11%, with <3% deficiency in the highest quartile. However, the algorithm includes the coefficient of correlation between protein intakes and the requirement, and the standard model for protein requirements assumes the value to be vanishingly small (protein intakes and requirements not correlated). As discussed elsewhere(Reference Millward61), from the perspective of a protein requirement (defined as metabolic demand adjusted for the efficiency of utilisation) the current protein requirement of 0·66 g good-quality protein/kg per d is not biologically sensible. The reason is that when the metabolic demand is defined in terms of the obligatory N losses equivalent to approximately 0·3 g protein/kg per d the current requirement implies an efficiency of utilisation of good-quality protein of <50%, and there is no reason why it should be this low. This anomaly has been explained on the basis of an adaptive metabolic demand model for the protein requirement with incomplete adaptation to the different protein intakes used in the multilevel N balance studies from which the protein requirement was derived. For this protein requirements model intakes and requirements are highly correlated at intakes down to quite low levels, which could approach the obligatory demand of 0·3 g/kg per d(Reference Millward61). Accordingly, the risk of deficiency calculation should include a large positive correlation coefficient which, as shown in Table 3, reduces the prevalence of deficiency to <1% on the basis of the current requirement value. 
Furthermore, since the adaptive metabolic demand model identifies the minimum requirement as much lower than 0·66 g/kg per d, then it is likely that for the elderly population, in which the lowest reported intake after trimming (see Table 3) is 0·43 g/kg per d, no elderly subject is deficient.

Table 3. Prevalence of protein deficiency for the UK elderly population (from the National Diet and Nutrition Survey(Reference Finch, Doyle and Lowe54); trimmed of energy intakes <1·35×BMR)

ASF, animal-sourced food; P:E, protein:energy expressed as % energy as protein.

* Adjusted for digestibilities of 0·848 for quartile 1 and 0·903 for quartile 4 assuming: (a) plant and animal digestibility are 0·80 and 0·95; (b) vegetable protein:animal protein decreased from 2·12 in the lowest quartile to 0·45 in the highest quartile as observed in the lowest and highest quartiles of animal protein reported in the INTERMAP study(Reference Elliott, Stamler and Dyer56).

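† Deficiency is assessed from the difference intake − requirement (mean requirement 0·654 g/kg per d); prevalence is the probability that this difference is negative, $\Phi(-(M_I - M_R)/\mathrm{sd})$, where $\Phi$ is the unit normal distribution function, $M_R$ is mean requirement and $M_I$ is mean intake, with $\mathrm{sd}=\sqrt{S_I^2 + S_R^2 - 2 R S_I S_R}$(60), where $S_I$ and $S_R$ are the sd of intake and of requirement respectively and $R$ is the correlation between intake and requirement.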

‡ Intake and requirement not correlated (R 0·1).

§ Intake and requirement correlated (R 0·99).
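The effect of the correlation coefficient on the calculated prevalence can be illustrated with a minimal sketch of the calculation described in the footnotes, in which prevalence is the probability that intake minus requirement is negative. The mean intake (0·87 g/kg per d) and mean requirement (0·654 g/kg per d) are taken from the text; the two sd values are illustrative assumptions chosen only to show the behaviour of the formula, and the function names are hypothetical.

```python
import math


def normal_cdf(z):
    """Cumulative distribution function of the unit normal distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def deficiency_prevalence(mean_intake, sd_intake, mean_req, sd_req, r):
    """Probability that intake is below requirement.

    The difference (intake - requirement) is treated as normal, with
    variance S_I^2 + S_R^2 - 2 * R * S_I * S_R.
    """
    variance = sd_intake ** 2 + sd_req ** 2 - 2.0 * r * sd_intake * sd_req
    sd_difference = math.sqrt(variance)
    return normal_cdf(-(mean_intake - mean_req) / sd_difference)


# Means from the text (low-ASF quartile, digestibility adjusted).
MEAN_INTAKE = 0.87   # g/kg per d
MEAN_REQ = 0.654     # g/kg per d
# Assumed sd values, for illustration only (not taken from Table 3).
SD_INTAKE = 0.17
SD_REQ = 0.08

for r in (0.1, 0.99):
    p = deficiency_prevalence(MEAN_INTAKE, SD_INTAKE, MEAN_REQ, SD_REQ, r)
    print(f"R = {r}: prevalence of intake < requirement = {100 * p:.1f}%")
```

With these assumed sd values the prevalence is of the order of 11–12% when intake and requirement are almost uncorrelated and falls below 1% when they are highly correlated, because a large positive correlation shrinks the sd of the difference.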

Practical importance of protein deficiency with diets low in animal-sourced food

As there is no objective measure of protein status, ‘deficiency’ can only be defined as a statistical construct, i.e. a measure of prevalence of intakes <requirement. On this basis prevalence is model dependent in terms of the inclusion of adaptation, as discussed earlier. It will also change as the requirement changes. In fact, if the calculations in Table 3 are based on the mean adult requirement of 0·6 g/kg per d as in the 1985 FAO/WHO/United Nations University report(62), rather than 0·66 g/kg per d in the recent protein report(60), the deficiency prevalence for the low-ASF-diet group in Table 3 would be almost halved, at 6·4% compared with 11·3%.

Furthermore, the protein requirement is defined only in terms of achievement of N balance, the measurement and interpretation of which is fraught with difficulty(60). It is not an intake defined in relation to other indicators of health and well-being. Consequently, the requirement is controversial, with many authorities arguing it is too low, especially for the elderly(Reference Millward63). One specific example of this debate is the suggestion that higher protein intakes will protect against sarcopenia(Reference Paddon-Jones, Short and Campbell64). In fact, the evidence for this premise has been hard to identify, with several cross-sectional studies failing to show any association between protein intakes and sarcopenia(Reference Baumgartner, Koehler and Gallagher65–Reference Mitchell, Haan and Steinberg67). However, a recent large community-based longitudinal study of the loss of appendicular lean mass over 3 years in men and women aged 75 years (n 2066) has shown that the appendicular lean mass loss is greater in the lowest, compared with the highest, quintile of protein intake(Reference Houston, Nicklas and Ding55), suggesting that dietary protein may be a modifiable risk factor for sarcopenia in older adults and that higher intakes afford some protection. However, some caution is needed in the interpretation of this study. First, there is no relationship between appendicular lean mass and protein intake at baseline when the dietary data were collected, which means that, as others have found(Reference Baumgartner, Koehler and Gallagher65–Reference Mitchell, Haan and Steinberg67), the cross-sectional data show no influence of protein intake on sarcopenia. Second, the positive protein intake–appendicular lean mass relationship is only observed for those who either lost or gained weight during the 3-year study. 
The loss of appendicular lean mass in the otherwise-weight-stable subjects, half the entire cohort, is not related to protein intake, which means that the relationship observed for the whole cohort is unlikely to be a simple consequence of higher dietary protein intakes reducing sarcopenia.

In fact, the unambiguous identification of disease risk in relation to protein intakes or an optimal protein requirement is exceedingly difficult(60, Reference Millward68, Reference Rodriguez and Garlick69). There is certainly little evidence to suggest that the lower protein intakes associated with low-meat diets are a cause for concern in terms of morbidity and mortality associated with vegetarian diets, as reviewed earlier. Dietary protein can exert both beneficial anabolic insulin-like growth factor-1-mediated influences on bone health and detrimental influences as a source of acid through the oxidation of its S-containing amino acids, which induces a calciuria(60). However, the balance between these effects is a longstanding debate. High animal-protein intakes have been associated with high bone-fracture rates in cross-cultural studies(Reference Abelow, Holford and Insogna70), but within populations there is little evidence for this relationship. In fact, a recent systematic review and meta-analysis of dietary protein and bone health has found only benefit or no influence(Reference Darling, Millward and Torgerson71).

One of the few specific relationships that has been identified is the inverse relationship between vegetable-protein intake (but not animal-protein intake) and blood pressure indicated by the INTERMAP study(Reference Elliott, Stamler and Dyer56). However, as with all such studies, whether the relationship is causal is difficult to judge. There are high correlations between vegetable-protein intake and fibre and Mg intake and negative associations with dietary cholesterol and total SFA, all factors likely to influence blood pressure, and although the regression models adjusted for these and other covariates, the possibility of residual confounding remains.

Overall, it might be concluded that there is little cause for concern about the lower protein intakes that accompany reduced meat consumption, but there is sufficient uncertainty to allow dissent from this view.

Zinc

In the UK diet meat and meat products are the main source of Zn(Reference Henderson, Irving and Gregory37) (providing about one-third) followed by cereals and cereal products (25%) and milk and milk products (17%). Thus, red meat makes a greater contribution to total Zn intake than to total Fe intake(12), so that moving towards a plant-based diet could be more critical for Zn than for Fe or protein, but it is difficult to evaluate.

The Zn content of the human body is similar to that of Fe, but in contrast to Fe the approximately fifty Zn metalloenzymes are more widely distributed throughout all classes of enzymes(Reference Cousins, Bowman and Russell72). As these proteins include some involved in the regulation of gene expression (such as ‘Zn finger’ transcription factors), Zn is absolutely required for protein synthesis, normal cell growth and differentiation. Severe Zn deficiency is part of the aetiology of stunting of growth, hypogonadism, impaired immune function, skin disorders, cognitive dysfunction and anorexia(73). In developing countries Zn deficiency has recently been estimated to account for 4·4% of childhood deaths in Latin America, Africa and Asia(Reference Fischer Walker, Ezzati and Black74), and randomised controlled Zn-supplementation trials have unequivocally identified Zn deficiency as part of the aetiology of stunted growth(Reference Brown, Peerson and Rivera75). Nevertheless, the importance of Zn for maintaining good health in adults and normal growth and development in children in the developed countries is poorly understood because of lack of sensitive measures of marginal Zn status. None of the Zn proteins act as Zn-storage molecules that could be monitored as an index of status comparable with the Fe-storage molecule ferritin. Plasma or serum Zn is the only currently feasible index of Zn status suitable for surveys such as the NDNS(Reference Brown, Peerson and Rivera75).

Plasma Zn is not reported in the NDNS of adults but according to an analysis of the NDNS of children and adolescents, despite Zn intakes being below the RNI, Zn intakes and status appear to be generally adequate in young people aged 4–18 years(Reference Thane, Bates and Prentice76). The analysis shows that plasma Zn concentrations are ‘low’ in only 2·6% overall, with substantial numbers only for boys aged 4–6 years (9%) and girls aged 15–18 years (5%), and are not consistently or significantly correlated with Zn intake. The conclusion of adequate Zn intakes for UK children and adolescents has been challenged(Reference Amirabdollahian and Ash77), but the authors have defended their findings on the basis that the prevalence of low serum Zn concentration (2·6% overall) is below the >10% level thought to signal that Zn deficiency in a population is a public health problem requiring action(Reference Thane, Bates and Prentice78). Nevertheless, because Zn intakes for boys and girls, after excluding low reporters, are marginal for the 4–6 year olds (with low intakes (below the LRNI) for 6% of boys and 17% of girls) and also below the LRNI for 12% of 11–14-year-old girls, a more cautious interpretation of the NDNS data is needed; especially if it is the case that the homeostatic control of Zn in plasma means that in marginal Zn deficiency plasma Zn may remain within the normal range(Reference Amirabdollahian and Ash77).

Zn bioavailability from plant food sources is considerably less well understood than that for Fe, so that predicting the effect of reduced meat intakes is difficult. Cross-sectional plasma Zn measurements have not usually differed between vegetarians and meat eaters(Reference Hunt79), but most of these studies involve lacto-ovo vegetarians so that bioavailable Zn from ASF is still present in the diet. Without ASF the main plant sources of Zn are legumes, whole grains, seeds and nuts, but these sources also contain phytic acid, the main inhibitor of Zn absorption(Reference Cousins, Bowman and Russell72, 73). Thus, the bioavailability may fall from 50–55% for diets based on ASF to 30–35% absorption for mixed lacto and/or ovo vegetarian and vegan diets not primarily based on unrefined cereal grains or to only 15% absorption when unrefined cereal grains predominate(73), although there is evidence of up-regulation of Zn absorption at low intakes(Reference Wada, Turnland and King80). Indeed, a small but detailed study of twenty-five vegans and twenty meat eaters has reported similar dietary Zn intakes for the two groups and a slightly lower but not significantly different plasma Zn for the vegans, suggesting that any lower absorption of Zn from plant foods is not enough to impair Zn status(Reference Haddad, Berk and Kettering81). Comprehensive measures of immune function in the two groups reveal that vegans have lower leucocyte, lymphocyte and platelet counts and lower concentrations of complement factor 3 but no reductions in functional measures such as mitogen stimulation and natural killer cell activity. It was not possible to identify whether the immune status of vegans is compromised or enhanced compared with other groups(Reference Haddad, Berk and Kettering81).

There is strong evidence for the contribution of Zn deficiency to growth faltering among children; even mild to moderate Zn deficiency may affect growth(Reference Rivera, Hotz and Gonzalez-Cossío82) and in Zn-supplementation studies boys have generally responded more than girls in terms of increased height growth(Reference Gibson83). Among UK children who are vegan, slightly lower than average height growth rates are mainly seen in boys(Reference Sanders and Manning21, Reference Sanders84).

The modelling of the influences of reducing meat intake in the recent SACN review of Fe and health concludes that the percentage of the population with Zn intakes below the LRNI would slightly increase to 4–5 if red meat intakes were to be capped at a maximum of 70 g/d and to 20–30 if red meat were to be removed entirely without replacement by Zn-containing foods(12). However, in reality Zn could be provided by other ASF and, as indicated earlier for adults, entirely plant-based Zn dietary sources appear to be able to provide adequate intakes and maintain near-normal levels of plasma Zn. So, replacement by plant-based Zn sources would reduce the prevalence of very low intakes, although whether it would be the case for children remains an open question.

Calcium

Ca intakes in population groups in the UK reflect the consumption of milk and dairy foods, which provide >40% of total intake(Reference Henderson, Irving and Gregory37). Thus, low intakes of these foods, as observed for some UK teenagers, result in low Ca intakes (20% of teenage girls and 10% of boys have intakes below the LRNI (450–480 mg/d))(39). The implication is certain deficiency according to dietary reference intakes, i.e. a serious problem for this age-group. However, for Ca, like protein, there are difficult and unresolved issues about the metabolic demand and efficiency of utilisation that set the Ca requirement, and this factor makes risk assessment for this population group difficult.

As it is important to maintain plasma Ca levels, Ca homeostasis is finely regulated at the levels of absorption, renal excretion and deposition and mobilisation from bone by the calcitropic hormones parathyroid hormone, 1,25-dihydroxycholecalciferol and calcitonin(Reference Weaver, Bowman and Russell85). Ca losses fall as intakes fall and the active component of Ca absorption is up-regulated. The skeleton comprises 99% of the body Ca (in the form of hydroxyapatite) and the RNI for teenagers is based on a factorial model in which assumed peak bone retention rates of 250–300 mg/d are adjusted for a net absorption of 40% to give an estimated average requirement of 625–750 mg/d and an RNI (+2 sd, 30%) of 800–1000 mg/d(86). Up-regulation of absorption to an efficiency of 60% would mean that the desired accretion could theoretically be achieved at intakes of 420–500 mg/d, i.e. close to the LRNI.
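The arithmetic of this factorial model can be sketched as follows. The retention rates, absorption efficiencies and the 30% margin are those quoted in the text; the function name is hypothetical, and treating the RNI as the estimated average requirement multiplied by 1·30 is a simplifying assumption about how the margin is applied.

```python
def intake_needed(retention_mg_per_d, net_absorption):
    """Dietary Ca intake (mg/d) needed to retain a given amount of Ca."""
    return retention_mg_per_d / net_absorption


PEAK_RETENTION = (250.0, 300.0)   # mg/d, assumed peak bone retention range
RNI_MARGIN = 1.30                 # +2 sd, taken as +30%

# Estimated average requirement at a net absorption of 40%.
ear = [intake_needed(r, 0.40) for r in PEAK_RETENTION]
# RNI obtained by adding the 30% margin to the estimated average requirement.
rni = [e * RNI_MARGIN for e in ear]
# Intake needed if absorption were up-regulated to 60%.
adapted = [intake_needed(r, 0.60) for r in PEAK_RETENTION]

print("EAR (mg/d):", [round(x) for x in ear])
print("RNI before rounding (mg/d):", [round(x) for x in rni])
print("Intake at 60% absorption (mg/d):", [round(x) for x in adapted])
```

The unrounded RNI from this sketch is about 812–975 mg/d, close to the 800–1000 mg/d quoted, and the intake at 60% absorption is about 417–500 mg/d.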

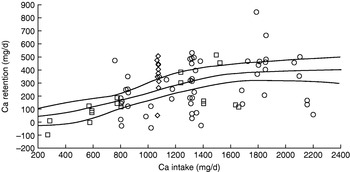

Is achievement of this level of efficiency of Ca utilisation likely? On the basis of the Ca balance studies used to derive the US adequate intake for Ca(87) the answer is definitely no. As shown in Fig. 3, none of the subjects with intakes of ⩽600 mg/d retain >100 mg/d. The US adequate intake for Ca is 1300 mg/d for adolescents, twice the UK estimated average requirement, and includes a Ca demand for peak velocity of bone mineral content deposition, which when 55 mg/d for sweat losses is included is 332 mg/d for boys and 262 mg/d for girls. Appropriate intakes predicted from the non-linear analysis of Ca balance data shown in Fig. 3 are 1070 mg/d (females) and 1310 mg/d (males), equivalent to an efficiency of utilisation of 24–25%.
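The efficiency of utilisation implied by these figures is simply the Ca demand divided by the predicted appropriate intake. A minimal check, using only the values quoted above (the function name is hypothetical):

```python
def utilisation_efficiency(demand_mg_per_d, intake_mg_per_d):
    """Fraction of dietary Ca intake that is needed to meet the Ca demand."""
    return demand_mg_per_d / intake_mg_per_d


# Demand (including 55 mg/d sweat losses) and predicted intakes from the text.
girls = utilisation_efficiency(262.0, 1070.0)
boys = utilisation_efficiency(332.0, 1310.0)

print(f"girls: {100 * girls:.1f}%, boys: {100 * boys:.1f}%")
```

Both values fall at roughly one-quarter, well below the 40% net absorption assumed in the UK factorial model.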

Fig. 3. Calcium balance studies of adolescents used to derive the US adequate intake for calcium(87) of 1300 mg/d. The regression line (with 95% CI) derives from a non-linear fit to the data points.

The reason that the question of an increased efficiency of Ca utilisation and decreased requirements can be posed at all is a result of both early work (see Prentice(Reference Prentice88)) and the recent studies on Gambians(Reference Prentice, Schoenmakers and Laskey89), which have clearly demonstrated an adaptive metabolic demand for Ca. It is also consistent with the ‘Ca paradox’, i.e. that many countries do appear to achieve bone health in terms of very low fracture rates (<10% of those in the UK, US and Nordic countries) with Ca intakes as low as those of some UK adolescents if not lower (e.g. in Gambia 200–400 mg/d(Reference Prentice88)). However, there is no clear understanding as to why fracture rates are so low in these countries, only that they seem to have successfully adapted to a low Ca intake. Bone health is a complex function of genetics, exercise, vitamins D and K and alkaline foods (mainly fruit and vegetables) as well as Ca(Reference Prentice88). It is the case that intervention studies with Ca do not provide an unequivocal answer, enabling reviewers to reach different conclusions about the benefits, or not, of high Ca or milk intakes(Reference Lanou, Berkow and Barnard90). A recent meta-analysis of randomised controlled trials of Ca supplementation in healthy children has identified only a small effect of Ca supplementation on bone mineral density in the upper limb that is unrelated to gender, baseline Ca intake, pubertal stage, ethnicity or level of physical activity(Reference Winzenberg, Shaw and Fryer91). The authors conclude that the small effect is unlikely to be sufficient to reduce the risk of fracture, either in childhood or later life, to an extent of major public health importance. However, there are technical issues about the importance of measures of bone mineral density or bone mineral content(Reference Prentice, Schoenmakers and Laskey89). 
Thus, if there is an accompanying increase in skeletal size including stature (bone length), size-adjusted changes in bone mineral content or bone mineral density will not be observed. These authors conclude from their review of nutrition and bone growth and development that it is not possible to define dietary reference values using bone health as a criterion(Reference Prentice, Schoenmakers and Laskey89), and the question of what type of diet constitutes the best support for optimal bone growth and development remains open. Thus, prudent recommendations are to consume a Ca intake close to the RNI, optimise vitamin D status through adequate summer sunshine exposure (and diet supplementation where appropriate), be physically active, have a body weight in the healthy range, restrict salt intake and consume plenty of fruit and vegetables(92, 93).

Finally, vegans are a population with low Ca intakes, and their fracture risk compared with dairy-consuming vegetarians and meat eaters is an important clue to the Ca intake problem. According to the EPIC-Oxford study, which included 1126 UK vegans(Reference Appleby, Roddam and Allen94), their fracture rates are 30% higher than those of meat eaters, fish eaters or vegetarians (fracture incidence rate ratio (fracture incidence rate compared with that of meat eaters) 1·30 (95% CI 1·02, 1·66)). Importantly, after adjustment for Ca intakes the average fracture incidence rate ratio falls to a non-significant 1·15 (95% CI 0·89, 1·49). This finding shows that the fracture rate does reflect Ca intakes. For the vegans consuming at least the adult estimated average requirement for Ca of 525 mg/d(86) (56% of the population) fracture incidence rate ratios are the same as those for fish eaters and vegetarians. What this finding shows is that for those teenagers with Ca intakes below the LRNI (450–480 mg/d; 20% of girls and 10% of boys) there should certainly be considerable concern. It also shows that it is difficult with plant-based diets to achieve sufficient Ca intakes to minimise fracture risk, especially to achieve the RNI of 700 mg/d, which is only achieved by <25% of the vegans studied. However, it is clearly possible.

Iodine

The UK situation in relation to iodine is reviewed in another paper from this symposium(Reference Zimmerman95). Iodine deficiency is a serious issue influencing, like Zn deficiency, up to one-third of the world's population through irreversible impairment of child development during both fetal and postnatal development phases(Reference Walker, Wachs and Meeks Gardner96). It used to be widespread in the UK and, although iodine-deficient soils are common in England and Wales with low levels of iodine in plant foods and goitre relatively common in the past, since the early 1960s visible goitre has largely disappeared. This change has taken place even though Britain has never had mandatory iodisation of salt and other foods. The reason is the use of iodine in the dairy industry; iodinated casein is given to cows to promote lactation and iodophor disinfectants are used for cleaning teats and milk tankers(Reference Zimmerman95). This practice has resulted in iodine contamination in milk and dairy produce and increased iodine intake in those consuming milk and dairy foods. According to the NDNS dairy foods account for 38% of the iodine intake from food(Reference Henderson, Irving and Gregory37). However, it means that population groups with low intakes of milk will have low iodine intakes, and NDNS reports a poor status in young women, with 12% reporting intakes below the LRNI(Reference Henderson, Irving and Gregory37). Furthermore, a small survey of urinary iodine excretion in young Surrey women shows 30% with mild to moderate iodine deficiency(Reference Rayman, Sleeth and Walter97). This situation raises serious concern for pregnancy outcomes within this group; indeed, it may get worse if the use of iodinated feeds and iodophor disinfectants is reduced, as has happened in Australia(Reference Zimmerman95). 
As a consequence there may be a marked fall in iodine status in the UK comparable with that in Se status associated with the changes in bread making(Reference Rayman98). In the US iodised salt is widely used and some other foods are fortified with iodine(Reference Zimmerman95). In Canada all table salt is iodised. The UK has no iodine fortification strategy for plant foods or salt. Perhaps the time has come to reconsider this position.

Vitamin B12

Vitamin B12 is a key nutrient in the present discussion because about 70% of vitamin B12 in the UK diet derives from meat and dairy foods, although eggs and especially fish are very rich sources(Reference Henderson, Irving and Gregory37). The UK vitamin B12 RNI of 1·5 μg/d can be met in theory by relatively low intakes of ASF, i.e. 75 g fish or meat, 60 g eggs and approximately 375 g semi-skimmed milk(86). Also, many non-ASF foods are fortified with vitamin B12. According to the NDNS mean intakes of most populations in the UK are three to four times the current UK RNI, with little evidence of any vitamin B12 deficiency apart from 7–8% of the elderly who exhibit low plasma vitamin B12(39). This finding is consistent with a long-held view that poor vitamin B12 status is only likely to occur in the elderly, because of malabsorption of food-bound vitamin B12 associated with chronic or atrophic gastritis(Reference Cannel99) in relation to pernicious anaemia, and in the complete absence of any dietary ASF or supplemental vitamin B12, as in some groups of vegans. However, it has become apparent that this view is likely to be incorrect, with vitamin B12 deficiency more common in several population groups with low intakes of ASF(Reference Allen100).

As vitamin B12 has the central role in C1 metabolism associated with nucleic acid synthesis and methylation, vitamin B12 deficiency may be associated with megaloblastic anaemia and various neurological disorders including dementia(Reference Elmadfa and Singer101). Vitamin B12 deficiency in pregnancy can increase the risk of birth defects(Reference Ray, Wyatt and Thompson102) and infants born to vitamin B12-deficient mothers have low vitamin B12 stores, receive low levels of vitamin B12 in breast milk and are likely to develop symptoms of macrocytic anaemia and impaired growth and development(Reference Dror and Allen103). This outcome is a recognised serious concern in populations with low ASF intakes in the developing world in which poor vitamin B12 status is widespread in pregnant women(Reference Guerra-Shinohara, Morita and Peres104) and children(Reference Rogers, Boy and Miller105). The important question here is the likely impact of reduced intakes of meat and dairy foods on vitamin B12 status within the UK population.

There are two important issues that need to be considered in relation to vitamin B12 status in the population and the likely risks associated with reduced dietary intakes as ASF intakes fall. First, it is widely recognised that plasma vitamin B12 levels are a poor indication of functional vitamin B12 status, so the currently-assumed UK lower cut-off point for vitamin B12 deficiency (<118 pmol/l(39)) may be too low and subjects at the lower end of the normal range may be functionally deficient. In the USA the cut-off for deficiency is 148 pmol/l, with marginal status defined as 148–221 pmol/l(Reference Allen100). Furthermore, functional measures of status such as plasma or urinary methylmalonic acid, holotranscobalamin and homocysteine (tHcy) indicate a more widespread vitamin B12 deficiency. Thus, a more detailed analysis of UK elderly subjects within NDNS has indicated that 46% of institutionalised elderly have elevated methylmalonic acid(Reference Bates, Schneede and Mishra106). Importantly, several UK population studies of the elderly in the community have identified similar frequencies of elevated methylmalonic acid and have shown that low vitamin B12 status, as indicated by elevated methylmalonic acid, low holotranscobalamin or elevated tHcy, is associated with cognitive decline(Reference McCracken, Hudson and Ellis107–Reference Clarke, Birks and Nexo109).
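The practical consequence of the different plasma cut-offs can be shown with a small sketch. The thresholds are those quoted above; the category labels, the function names and the treatment of values lying exactly on a boundary are assumptions made for illustration only.

```python
UK_DEFICIENCY_CUTOFF = 118.0   # pmol/l, UK lower cut-off quoted in the text
US_DEFICIENCY_CUTOFF = 148.0   # pmol/l, US cut-off for deficiency
US_MARGINAL_UPPER = 221.0      # pmol/l, upper limit of US marginal status


def classify_uk(plasma_b12_pmol_per_l):
    """Classify plasma vitamin B12 against the UK cut-off."""
    if plasma_b12_pmol_per_l < UK_DEFICIENCY_CUTOFF:
        return "deficient"
    return "not deficient"


def classify_us(plasma_b12_pmol_per_l):
    """Classify plasma vitamin B12 against the US cut-offs.

    Boundary handling is an assumption: 148 and 221 pmol/l count as marginal.
    """
    if plasma_b12_pmol_per_l < US_DEFICIENCY_CUTOFF:
        return "deficient"
    if plasma_b12_pmol_per_l <= US_MARGINAL_UPPER:
        return "marginal"
    return "above marginal range"


# A hypothetical subject at the lower end of the UK normal range.
example = 130.0
print(classify_uk(example), "/", classify_us(example))
```

A subject with a plasma concentration of 130 pmol/l would not be classed as deficient on the UK cut-off but would be on the US cut-off, which is the sense in which the UK value may be too low.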

The second issue relates to vitamin B12 bioavailability. Vitamin B12 status varies not only between plant-eating and meat-eating groups, as might be expected(Reference Elmadfa and Singer101), but also within the general adult population in relation to the dietary source of vitamin B12. Within the Framingham Offspring Study(Reference Tucker, Rich and Rosenberg110), in which higher plasma vitamin B12 cut-off levels identify 39% of adults of all ages at risk of deficiency, plasma vitamin B12 reflects mainly intakes of supplements, fortified cereal and milk, which appear to protect against lower concentrations. In contrast, intakes of meat, poultry and fish sources have less of an influence on plasma vitamin B12, suggesting that vitamin B12 from these sources is less efficiently absorbed. A recent large cross-sectional study of Norwegian adults aged 47–49 and 71–74 years(Reference Vogiatzoglou, Smith and Nurk111) also shows that plasma vitamin B12 levels vary with intake of fish (by far the largest dietary source in this population) and dairy products (specifically milk) but not with intake of meat, which provides similar dietary amounts of vitamin B12 to those of milk. Possible explanations for this finding are the loss of vitamin B12 during cooking (especially at high temperatures), the binding of vitamin B12 to collagen in meat (in contrast to milk in which it appears to be bound to transcobalamin, the vitamin B12-carrier protein) or impaired digestion of meat collagen by pepsin if gastric secretion is inadequate, as in some elderly subjects. In the Global Livestock-CRSP Kenyan schoolchildren intervention, responses in terms of plasma vitamin B12 are generally greater in the children given milk supplements than in those given meat(Reference Siekmann, Allen and Bwibo112).

Do these data indicate that for vitamin B12 the key issue is obtaining sufficient intake from dairy-food sources? The problem is that, compared with dairy foods, the vitamin B12 content (on an energy (/kJ) basis) is much greater for meat (2-fold), eggs (2·4-fold) and fish (5·4-fold)(Reference Henderson, Irving and Gregory37), which means that even with a higher dietary vitamin B12 bioavailability lower vitamin B12 status may occur with meat-free lacto-ovo vegetarian diets. Thus, pregnant German women on long-term ovo-lacto vegetarian diets have half the vitamin B12 intakes of omnivores and lower vitamin B12 status in terms of serum vitamin B12, serum holo-haptocorrin and elevated tHcy(Reference Koebnick, Hoffmann and Dagnelie113). Furthermore, only at the lower intakes observed in the vegetarians does dietary vitamin B12 predict serum vitamin B12 and tHcy. Thus, for this population the lower dietary amounts from these sources result in lower serum vitamin B12 and higher plasma tHcy concentrations during pregnancy and an increased risk of vitamin B12 deficiency. Also, in a group of adult lacto-ovo vegetarians although holotranscobalamin, methylmalonic acid and tHcy predict better vitamin B12 status than for adult vegans, it is worse compared with meat eaters(Reference Herrmann, Schorr and Obeid114). These results point to any higher bioavailability of vitamin B12 from dairy products not being able to compensate for the overall lower intakes in the absence of fish or meat.

Measurements of dietary intakes in UK children who are vegan indicate that their diets are generally adequate, with most parents aware of the need to supplement with vitamin B12(Reference Sanders84), but measures of vitamin B12 status are not reported in the study. However, it is clear that infants of mothers who are vegan can be at risk of serious vitamin B12 deficiency, in that of forty-eight case studies of infantile vitamin B12 deficiency identified in the literature thirty involve mothers who are vegan(Reference Dror and Allen103). While none of these cases involve lacto-ovo vegetarians, the poor vitamin B12 status of mothers who are lacto-ovo vegetarians reviewed earlier(Reference Koebnick, Hoffmann and Dagnelie113) raises important concerns for infants and children of such mothers who are not supplementing with vitamin B12.

Taken together it would appear that vitamin B12 status in the UK population may be worse than thought previously and that there is little room for complacency in relation to lacto-ovo vegetarians. This situation raises important public health policy questions for vitamin B12. A key finding of the Framingham study(Reference Tucker, Rich and Rosenberg110) is the importance of intakes of vitamin B12 supplements and foods fortified with vitamin B12, such as breakfast cereals, for improving plasma vitamin B12 concentrations. It was suggested that this outcome supports advice that adults aged >50 years obtain most of the recommended intake of vitamin B12 from supplements or fortified foods, as well as raising questions about whether younger adults should also consider the addition of these sources to their diet. In contrast, it has been suggested that the promotion of a high intake of dairy products would be a good strategy, avoiding potential pitfalls of fortification(Reference Vogiatzoglou, Smith and Nurk111). For this approach to be effective it would appear that dairy food intakes would have to be high, as they are on average in Norway(Reference Vogiatzoglou, Smith and Nurk111). Clearly, in the present context of examining nutritional risks of reducing intakes of meat and dairy foods, it would appear that the risk is associated not only with low intakes of meat or fish but also, for vegetarians, with low intakes of milk and dairy foods. For these populations increased availability of vitamin B12 in supplements or fortified foods would be the most sensible risk-management option.

Riboflavin

Milk and dairy foods are the main source of riboflavin in the UK diet, accounting for 33% of intakes, and according to the NDNS riboflavin status does appear to reflect milk intake(Reference Henderson, Irving and Gregory37). Thus, there is a steady increase in the erythrocyte glutathione reductase activity coefficient (a marker of poor riboflavin status) with age from toddlers through school-age into young adulthood followed by an improvement in older adults and the elderly(39). This pattern parallels in general terms the milk intake in these groups.

There is no obvious deficiency disease in the UK, raising questions about the functional importance of poor riboflavin status, as reported in the NDNS(Reference Henderson, Irving and Gregory37). One important issue relates to the role of riboflavin as a coenzyme for the methylenetetrahydrofolate reductase enzyme that is of key importance in folate metabolism and especially in the regulation of plasma tHcy levels. Raised erythrocyte glutathione reductase activity coefficient is accompanied by raised tHcy levels in the NDNS adult survey(Reference Henderson, Irving and Gregory37). Indeed, poor riboflavin status will be particularly important for the approximately 10% of the population with defective folate metabolism (i.e. the methylenetetrahydrofolate reductase C677T variant) who exhibit increased tHcy with poor riboflavin status(Reference McNulty, McKinley and Wilson115). For the age-group at risk (school-age into young adulthood), of the various adverse influences of increased tHcy a poor pregnancy outcome(Reference Ronnenberg, Goldman and Chen116, Reference Refsum, Nurk and Smith117) is probably the most worrying potential problem. However, in the context of reducing intakes of milk and dairy foods and increasing risk of riboflavin insufficiency, the risk of elevated tHcy would also extend to older age-groups in terms of dementia, cognitive impairment and osteoporosis and fracture risk, for which evidence for an elevated tHcy-linked mechanism exists(Reference Elmadfa and Singer101). In terms of risk management, in the USA flour is fortified with riboflavin (as well as folic acid), which may explain in part the very much smaller difference in tHcy in the population with the methylenetetrahydrofolate reductase C677T variant compared with other regions of the world and the lack of any increased risk of CHD for this population group(Reference Lewis, Ebrahim and Davey Smith118). This outcome suggests that this strategy is effective for managing poor riboflavin status at the population level. Fortification of flour does not occur in the UK.

Summary of risk assessment and potential strategies for risk management

Although it might be thought that the NDNS programme of monitoring nutrient intakes and status within the UK population provides sufficient information to allow a detailed nutritional risk assessment of reductions in meat and dairy intakes, in practice such a task is only possible in general qualitative terms, given the current level of nutritional science and knowledge. Current dietary reference values for most nutrients are based on surprisingly limited information, may have a poorly-defined relationship, if any, with specific nutrient deficiency diseases and an even poorer relationship with the main multifactorial chronic diseases. As a result of this scientific uncertainty there are very marked differences of opinion over many of the key nutritional issues that are relevant to such an analysis. Of the nutrients considered here, modelling of the consequences of intake reductions can only be attempted in the case of haem-Fe in red and processed meat, and the outcome has been reported by SACN(12). Even then assumptions have been made that differences in bioavailability of haem- and non-haem-Fe are minimal. For protein and Ca there are complex issues relating to adaptation that need to be resolved before the implications of apparent current deficiency in particular population groups can be properly understood, or even any modelling of the impact of reducing intakes undertaken. Entrenched views on either side of these particular debates will make a scientific consensus quite difficult to achieve. For Zn, and particularly iodine, there may be cause for concern in relation to growth and development, which in the case of iodine may require action irrespective of any changes in milk and dairy food intake. For the functionally-linked vitamin B12 and riboflavin, the improvement of status assessment of vitamin B12 and the growing evidence base for the potential involvement of their deficiency in poor pregnancy outcomes, dementia, osteoporosis and possibly occlusive vascular disease indicate an existing need for risk management of those with low intakes of milk and dairy foods. It may also be the case that reductions in meat consumption will be easier in terms of public acceptance of dietary change; certainly public campaigns for meat-free days in Europe have recently spread to the UK(119). Reductions in dairy foods may well pose the greatest challenge. Diets with low levels of ASF, especially low levels of dairy foods, do require more care in their formulation to ensure nutritional adequacy, so fortified foods are likely to assume increasing importance. Many countries have embraced fortification as a cheap and effective strategy for increasing the supply of limiting nutrients(Reference Allen, de Benoist, Dary and Hurrell120). Clearly, as with the current UK debate about potential risks associated with folic acid fortification, food fortification can itself be associated with risk, but such risks will have to be balanced against the increasing risk posed by climate change. Policy makers will be faced with difficult choices.

Acknowledgements

The authors declare no conflict of interest. The contribution of T. G. to the work was not supported by any specific grant from any funding agency in the public, commercial or not-for-profit sectors. The post held by T. G. is funded by the Engineering and Physical Sciences Research Council and Department for Environment, Food and Rural Affairs. T. G. provided information and analysis of the environmental impact of the food system in general and of meat and dairy foods in particular.